Disclaimer: This is a translated work of Qinxia's 漫谈分布式系统. All rights reserved to the original author.

In the previous article, we discussed how the large number of I/O operations makes MapReduce perform poorly. We then introduced Spark, a memory-based distributed computing framework, and Alluxio, a memory-based distributed storage middleware, both of which reduce I/O operations.

These two frameworks significantly reduce I/O pressure and improve performance. However, the previous article looked at the problem at a relatively coarse granularity. We can dig deeper into the execution process to see whether there is room for further optimization.

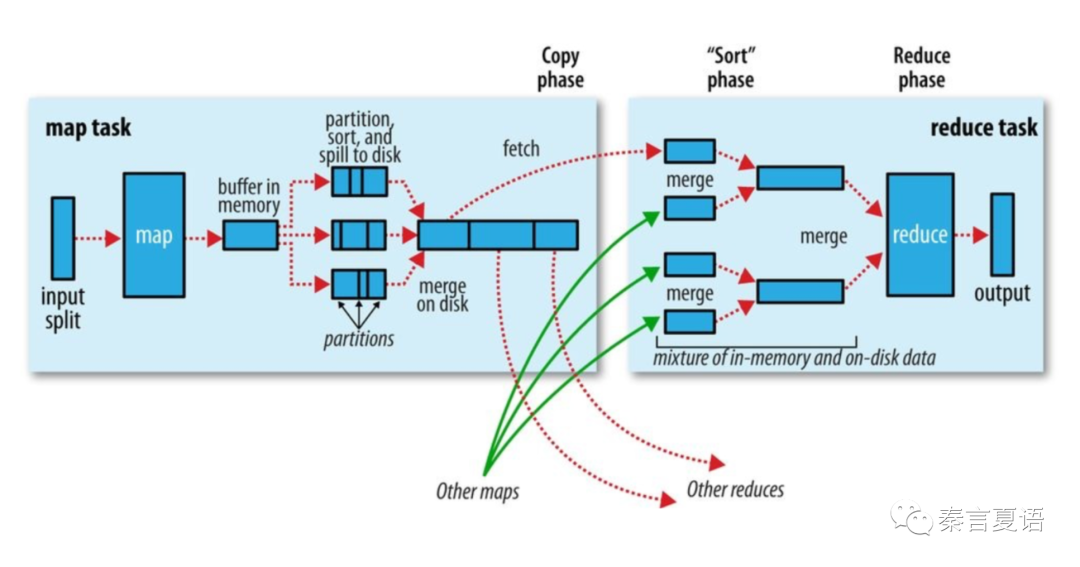

The previous article also showed this diagram of the MapReduce execution process. Much of the I/O is concentrated in the shuffle process.

Today, let's look at the shuffle process in two typical distributed computing frameworks: MapReduce and Spark.

To make things easier to follow, the description below uses Spark terminology for a typical map → reduce process.

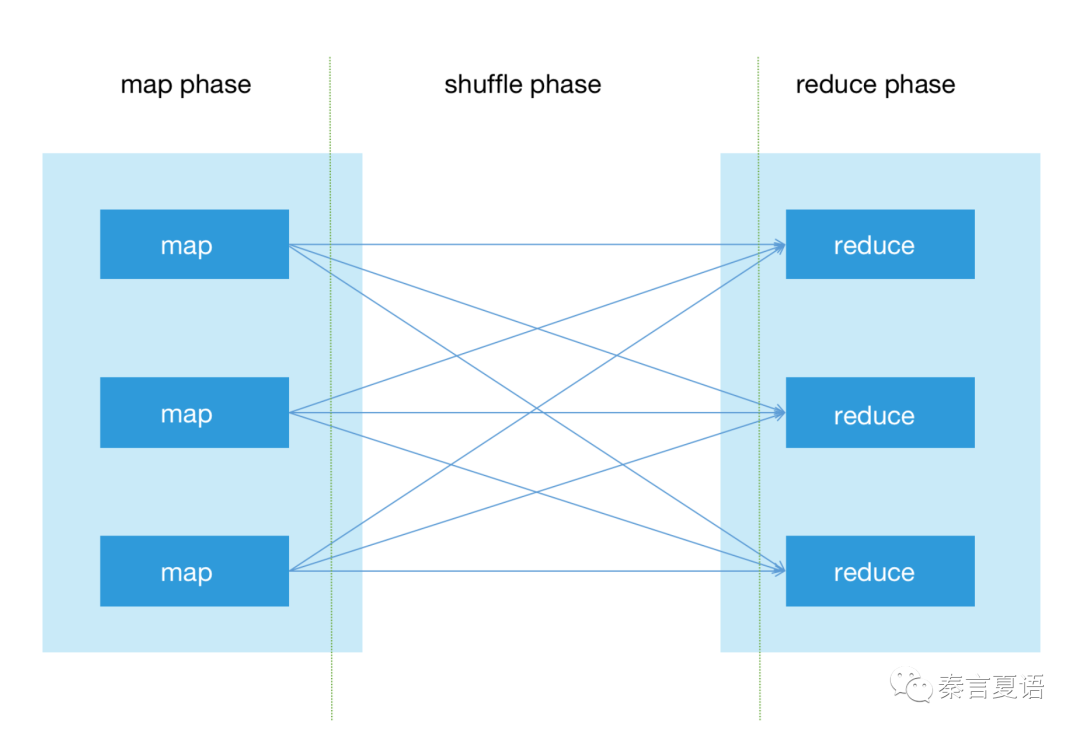

We can roughly divide the execution process of the program into the following three phases:

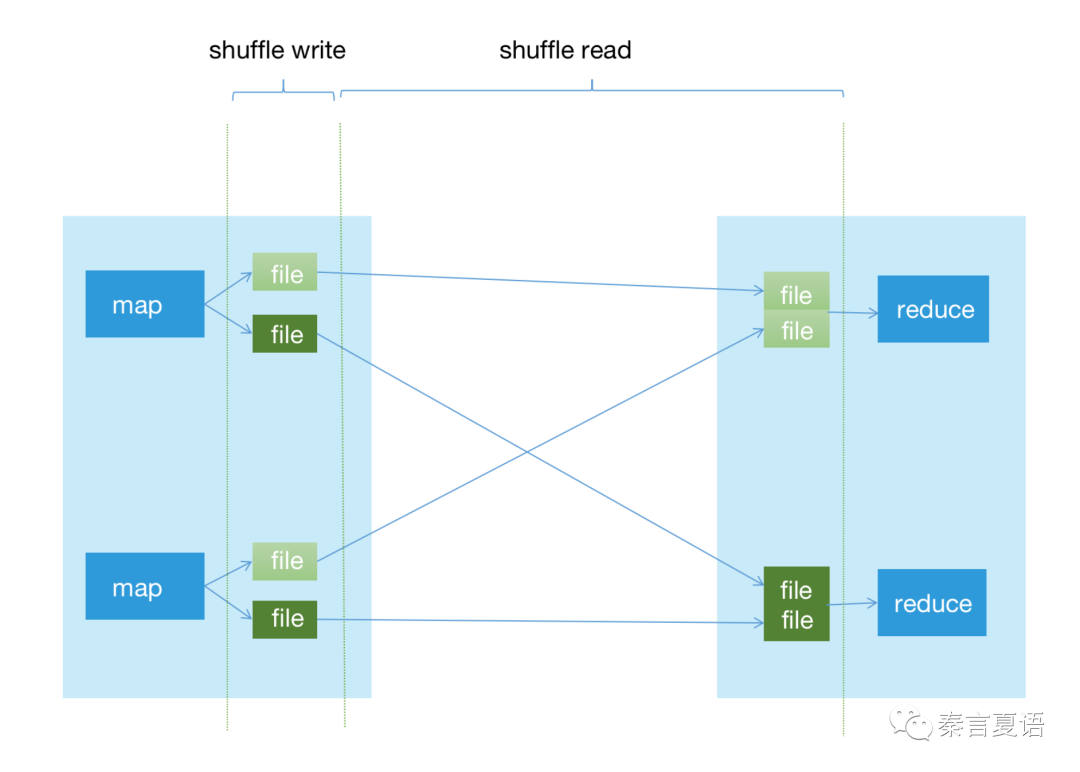

Since we want to optimize I/O, we need to pay attention to files, which means focusing on the shuffle phase. We can draw it like this:

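The entire shuffle phase is split into two steps: shuffle write, in which map tasks write their output to files, and shuffle read, in which downstream tasks pull that output.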

Since shuffle read comes after shuffle write, it is relatively passive: no matter how many files are written upstream or how they are written, the downstream has to handle them accordingly. So let's focus on shuffle write.

As the name implies, shuffle scatters data and redistributes it to the corresponding downstream tasks.

Therefore, the key is to prepare data separately for each downstream task, which means grouping together all the data destined for the same reducer.

The most direct approach is for each mapper to create one file per reducer and write the corresponding data into it. This is known as Hash Shuffle.
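The write side of Hash Shuffle can be sketched in Java as follows. This is a minimal illustration, not Spark's implementation: in-memory lists stand in for the per-reducer files, and the partitioning rule (a non-negative hashCode modulo the number of reducers) is the usual hash-partitioning choice, assumed here for concreteness.

```java
import java.util.ArrayList;
import java.util.List;

// Minimal Hash Shuffle write-side sketch: each mapper keeps one "file" per reducer.
// In-memory lists stand in for the files a real mapper would write to local disk.
class HashShuffleSketch {
    // Hash partitioning: a non-negative hashCode modulo the number of reducers.
    static int partitionOf(Object key, int numReducers) {
        int mod = key.hashCode() % numReducers;
        return mod < 0 ? mod + numReducers : mod;
    }

    // One mapper appends each record to the "file" of the reducer that owns its key.
    static List<List<String>> mapperWrite(List<String> keys, int numReducers) {
        List<List<String>> files = new ArrayList<>();
        for (int r = 0; r < numReducers; r++) {
            files.add(new ArrayList<>());
        }
        for (String key : keys) {
            files.get(partitionOf(key, numReducers)).add(key);
        }
        return files;
    }

    public static void main(String[] args) {
        List<List<String>> files = mapperWrite(List.of("apple", "banana", "cherry", "apple"), 3);
        for (int r = 0; r < files.size(); r++) {
            System.out.println("file for reducer " + r + ": " + files.get(r));
        }
    }
}
```

Every mapper does this independently, which is exactly why the file count grows as (number of mappers) * (number of reducers).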

Spark 0.8 introduced Hash Shuffle and used it as the default shuffle method until version 1.2.

Since shuffle read pulls data by partition, a more radical option would be for each mapper to create a file for each partition, but that would produce far too many files. So, all partitions processed by the same reducer can be written to one file.

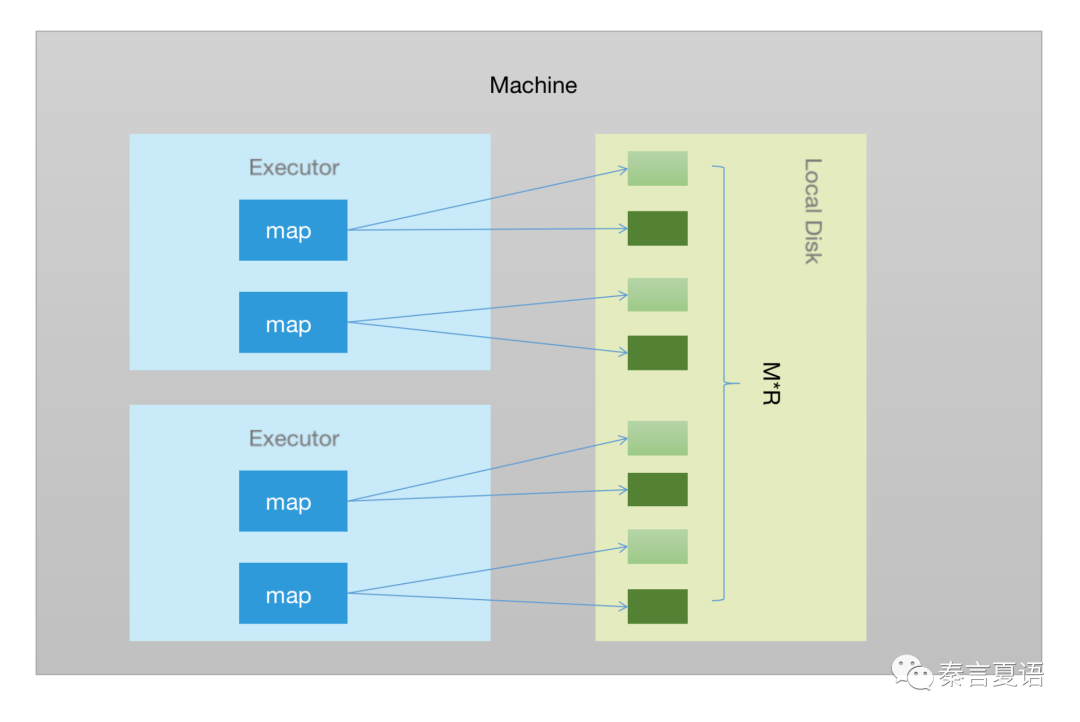

Since we are focusing on the shuffle write step, let's zoom in (as you would on a map) to see how the mappers on one machine write their output to files.

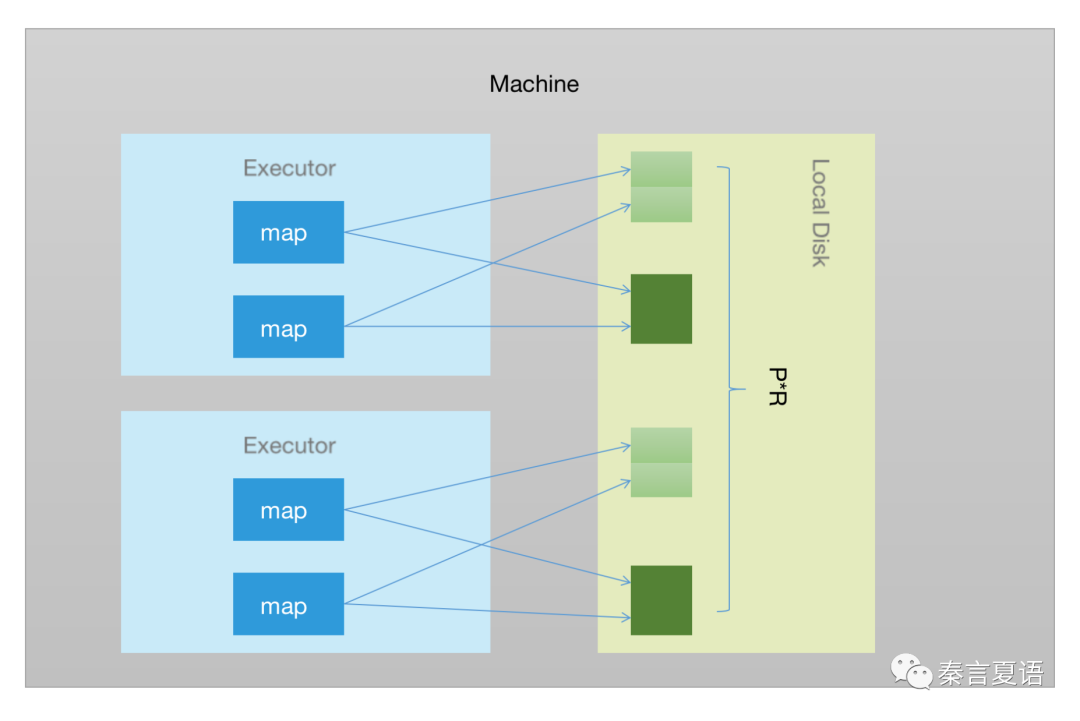

As shown in the preceding figure, two Executors are allocated to a machine, and each Executor executes two map tasks successively. All maps write the processing results to the local hard disk.

This way, at the end of the map phase, there will be M*R files on the local hard disk, where M is the number of Mappers and R is the number of Reducers.

Across the whole job, if a large task requires 40K mappers and 20K reducers, 40,000 * 20,000 = 800 million files will be generated.

From the perspective of the cluster, running many such tasks at the same time puts unbearable pressure on the disks and network of every machine.

Therefore, optimization is required.

As mentioned in the fifth article of the series, one of the two purposes of building distributed systems is to improve the parallelism of computing for faster calculation.

However, computing resources are limited, so it is usually impossible to run all mappers and reducers at once. Instead, we run one batch at a time with limited concurrency, then the next batch, until the whole job is finished.

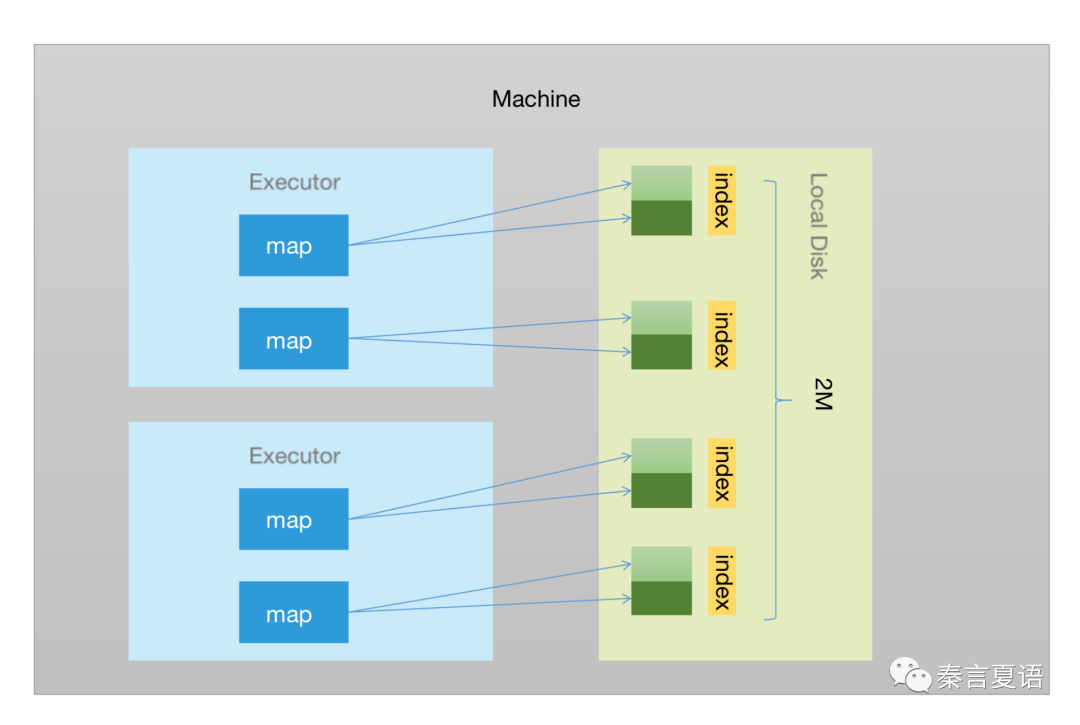

As shown in the preceding figure, each Executor runs two maps, but only one of them is running at any given moment. Therefore, these maps can share files. However, because the downstream pulls and processes data by partition, the files must still be shared per partition.

We can call this approach Consolidated Hash Shuffle.

The Executor maintains a file pool in which one file is opened for each reducer. When a map task runs, it takes these files from the pool and writes its data to them; when it finishes, it returns the files to the pool. The next map task then repeats the same steps.
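The file pool can be sketched as below, assuming in-memory lists in place of real files; the class and method names (ConsolidatedFilePool, acquire, release) are hypothetical and do not come from Spark. A new group of R files is opened only when no free group is available, so the file count follows the number of concurrently running tasks rather than the number of map tasks.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Minimal sketch of a per-Executor file pool for Consolidated Hash Shuffle.
// A "file group" is one file per reducer; in-memory lists stand in for files.
// All names here are hypothetical, not Spark's API.
class ConsolidatedFilePool {
    private final int numReducers;
    private final Deque<List<List<String>>> freeGroups = new ArrayDeque<>();
    private int groupsCreated = 0;

    ConsolidatedFilePool(int numReducers) {
        this.numReducers = numReducers;
    }

    // A map task borrows a file group; a new group is opened only if none is free.
    synchronized List<List<String>> acquire() {
        if (!freeGroups.isEmpty()) {
            return freeGroups.pop();
        }
        List<List<String>> group = new ArrayList<>();
        for (int r = 0; r < numReducers; r++) {
            group.add(new ArrayList<>());
        }
        groupsCreated++;
        return group;
    }

    // When the task finishes, the group goes back so the next task appends to the same files.
    synchronized void release(List<List<String>> group) {
        freeGroups.push(group);
    }

    synchronized int filesOnDisk() {
        return groupsCreated * numReducers;
    }

    public static void main(String[] args) {
        ConsolidatedFilePool pool = new ConsolidatedFilePool(3);
        // Four map tasks run one after another (concurrency 1 in this Executor).
        for (int task = 0; task < 4; task++) {
            List<List<String>> group = pool.acquire();
            group.get(task % 3).add("output of map " + task);
            pool.release(group);
        }
        System.out.println("files: " + pool.filesOnDisk());
    }
}
```

Running four map tasks one after another reuses a single group, so only 3 files (one per reducer) are created; two tasks holding groups at the same time would force a second group, giving 6.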

In this case, the number of files on the local hard disk drops to P*R, where P is the number of concurrent tasks. For a single machine, P is the machine's number of CPU cores (C) divided by the number of cores each map requires (T), that is, P = C/T.

Spark introduced this consolidation mechanism for Hash Shuffle in version 0.8.1 so that Hash Shuffle would not be abandoned over its performance problems.

Consolidated Hash Shuffle does improve performance: it replaces the M in M*R with the number of concurrent tasks, so the number of files no longer depends on the number of maps.

However, when the number of reducers is large, the number of files is still large. Is it possible to decouple the number of files from the number of reducers?

As the figure above shows, the number of files depends on the number of reducers because we create one file per reducer. To break this one-to-one relationship, we can write the data of several reducers, or even of all reducers, to the same file.

However, the data then becomes mixed together, so a reducer can no longer read its data directly during shuffle read the way it can with hash shuffle.

Scanning all the files on every shuffle read would be far too inefficient.

The natural solution, borrowed from databases, is to build an index that records where each piece of data is located.

However, shuffle read does not query data by value conditions the way a database does; it pulls data by partition, and each reducer may pull many partitions. Therefore, the index is best organized by partition rather than by reducer, which hash shuffle used as its unit only to reduce the number of files.

Since the index is organized by partition, the data must be laid out consistently with it: records of the same partition must be stored together.

Therefore, a sort keyed on partition is required: all data is sorted by partitionId and written to a single file.
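The sort-then-index idea can be sketched like this. It is a simplification under stated assumptions: the data "file" is an in-memory list, positions in that list stand in for byte offsets, and Rec, writeSorted, and readPartition are hypothetical names. The index holds the start offset of each partition, so a reducer can read its own range without scanning the rest.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Minimal sketch of Sort Shuffle map output: records sorted by partitionId,
// written to ONE data "file" plus an index of partition start offsets.
// List positions stand in for byte offsets; names are illustrative only.
class SortShuffleIndexSketch {
    record Rec(int partitionId, String value) {}

    // Returns offsets such that partition p occupies [offsets[p], offsets[p + 1]).
    static long[] writeSorted(List<Rec> records, int numPartitions, List<Rec> dataFile) {
        List<Rec> sorted = new ArrayList<>(records);
        sorted.sort(Comparator.comparingInt(Rec::partitionId)); // stable sort by partitionId
        long[] offsets = new long[numPartitions + 1];
        for (Rec r : sorted) {
            dataFile.add(r);
            offsets[r.partitionId() + 1]++;   // count records per partition
        }
        for (int p = 0; p < numPartitions; p++) {
            offsets[p + 1] += offsets[p];     // prefix sums turn counts into offsets
        }
        return offsets;
    }

    // A reducer reads only its partition's range, located through the index.
    static List<Rec> readPartition(List<Rec> dataFile, long[] offsets, int p) {
        return dataFile.subList((int) offsets[p], (int) offsets[p + 1]);
    }

    public static void main(String[] args) {
        List<Rec> data = new ArrayList<>();
        long[] index = writeSorted(
                List.of(new Rec(2, "c"), new Rec(0, "a"), new Rec(2, "d"), new Rec(1, "b")), 3, data);
        System.out.println(readPartition(data, index, 2));
    }
}
```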

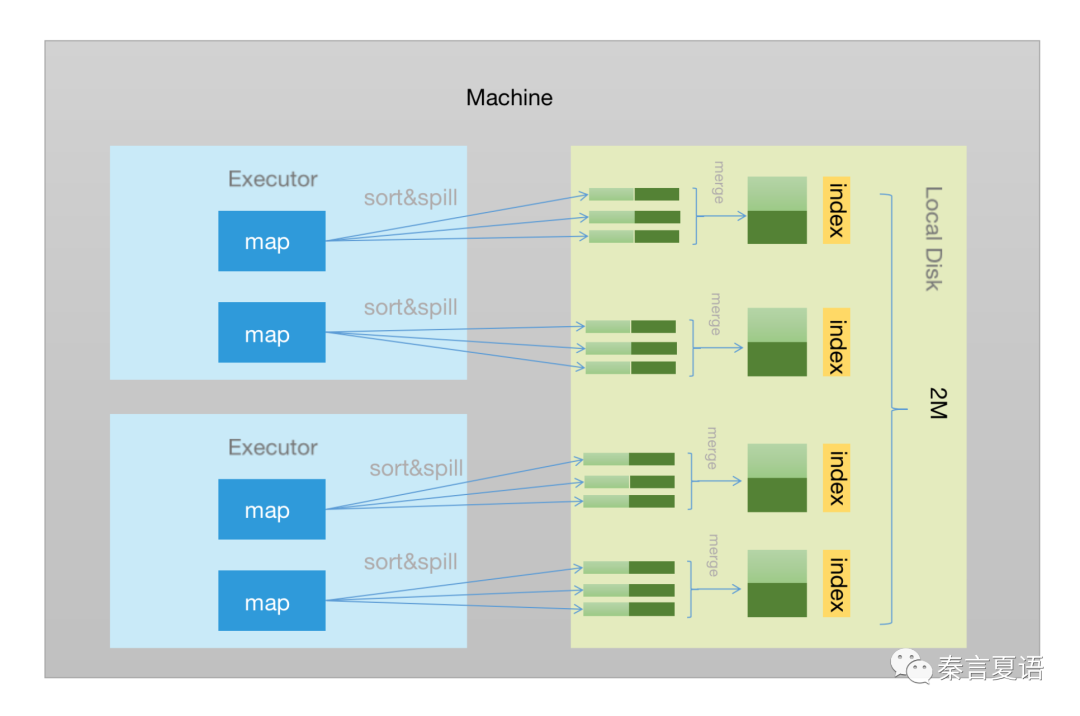

The figure below shows Sort Shuffle:

At this point, the map phase outputs 2M files: M data files and M index files.

Because sorting is involved, data can no longer be appended directly to a file, and memory is usually not large enough to hold all of it. So the data has to be sorted in batches, with each sorted batch spilled to the hard disk. This produces many spill files, which then have to be merge-sorted into one file.
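The batch-sort-and-spill step can be sketched as below. bufferLimit is a hypothetical stand-in for the memory available to the task, integers stand in for the (partitionId, key) sort keys, and each spill "file" is an in-memory list.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Minimal sketch of "sort in batches, then spill": a bounded buffer is sorted
// and spilled as a sorted run whenever it fills up. Spill files are in-memory
// lists; bufferLimit is a hypothetical stand-in for available memory.
class SpillSketch {
    static List<List<Integer>> sortAndSpill(List<Integer> input, int bufferLimit) {
        List<List<Integer>> spills = new ArrayList<>();
        List<Integer> buffer = new ArrayList<>();
        for (int x : input) {
            buffer.add(x);
            if (buffer.size() == bufferLimit) {   // memory full: sort and spill this batch
                Collections.sort(buffer);
                spills.add(buffer);
                buffer = new ArrayList<>();
            }
        }
        if (!buffer.isEmpty()) {                 // spill whatever remains at the end
            Collections.sort(buffer);
            spills.add(buffer);
        }
        return spills;
    }

    public static void main(String[] args) {
        System.out.println(sortAndSpill(List.of(5, 1, 4, 2, 3, 0, 6), 3));
    }
}
```

Each run is sorted on its own, but the runs still overlap, which is why a final merge step is needed.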

In addition, consider the processing in the reduce phase: records with the same key need to be aggregated together. If records with the same key are scattered everywhere, they must be cached in memory until all of them have been collected and the calculation can run, especially for operations such as avg(). Even with incremental aggregation (avg can be aggregated incrementally by keeping (sum, count)), the intermediate results of all keys quickly occupy a large amount of memory, and OOM errors become likely.

However, if records with the same key are put together in advance, each key can be computed in one pass, its result appended to the file in a batch, and the cache cleared. To determine whether a key has ended, we only need to check whether the current record's key equals the key being processed. And, as mentioned above, putting them together requires sorting.
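Here is a minimal sketch of that single-pass aggregation over key-sorted input, using avg() as in the text. KV and averages are hypothetical names; only the (sum, count) of the current key is held in memory at any time.

```java
import java.util.ArrayList;
import java.util.List;

// Minimal sketch: avg() over records already sorted by key. Only one key's
// (sum, count) is kept; a key ends when the next record's key differs.
class SortedAggregateSketch {
    record KV(String key, double value) {}

    static List<String> averages(List<KV> sortedByKey) {
        List<String> out = new ArrayList<>();
        String current = null;
        double sum = 0;
        long count = 0;
        for (KV kv : sortedByKey) {
            if (current != null && !kv.key().equals(current)) {
                out.add(current + "=" + (sum / count)); // previous key is complete: emit it
                sum = 0;                                // and clear the buffer
                count = 0;
            }
            current = kv.key();
            sum += kv.value();
            count++;
        }
        if (current != null) {
            out.add(current + "=" + (sum / count));     // emit the last key
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(averages(List.of(new KV("a", 1), new KV("a", 3), new KV("b", 10))));
    }
}
```

Memory use stays constant no matter how many distinct keys there are, which is the point of sorting first.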

In addition, many application scenarios genuinely require sorted data. Therefore, sorting by both partitionId and key becomes the default behavior.

However, in the shuffle read phase, a reducer has to read data from many maps and cannot sort all of it in memory, so it can only perform external sorting (such as a multi-way merge sort). This is why local sorting is done in the shuffle write phase: it reduces the sorting pressure in the shuffle read phase.
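A multi-way merge over already sorted runs can be sketched with a priority queue, which is one common way to implement it (assumed here, not taken from Spark's or MapReduce's code). The same idea applies to merging spill files on the map side and to merging many maps' sorted outputs on the reduce side.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.PriorityQueue;

// Minimal multi-way merge sketch: each input is an already sorted run, and only
// the current head of each run is kept in the heap at any time.
class MultiWayMergeSketch {
    static List<Integer> merge(List<List<Integer>> sortedRuns) {
        // Heap entries are {value, runIndex, positionInRun}, ordered by value.
        PriorityQueue<int[]> heap = new PriorityQueue<>((a, b) -> Integer.compare(a[0], b[0]));
        for (int i = 0; i < sortedRuns.size(); i++) {
            if (!sortedRuns.get(i).isEmpty()) {
                heap.add(new int[] {sortedRuns.get(i).get(0), i, 0});
            }
        }
        List<Integer> out = new ArrayList<>();
        while (!heap.isEmpty()) {
            int[] top = heap.poll();                  // smallest head across all runs
            out.add(top[0]);
            int run = top[1];
            int next = top[2] + 1;
            if (next < sortedRuns.get(run).size()) {  // advance within that run
                heap.add(new int[] {sortedRuns.get(run).get(next), run, next});
            }
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(merge(List.of(List.of(1, 4, 5), List.of(0, 2, 3), List.of(6))));
    }
}
```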

The Sort Shuffle diagram below includes the sort, spill, and merge processes:

Sort Shuffle is the default shuffle in MapReduce. After trying Hash Shuffle, Spark implemented Sort Shuffle modeled on MapReduce in version 1.1 and made it the default in version 1.2. Hash Shuffle was later removed entirely in version 2.0.

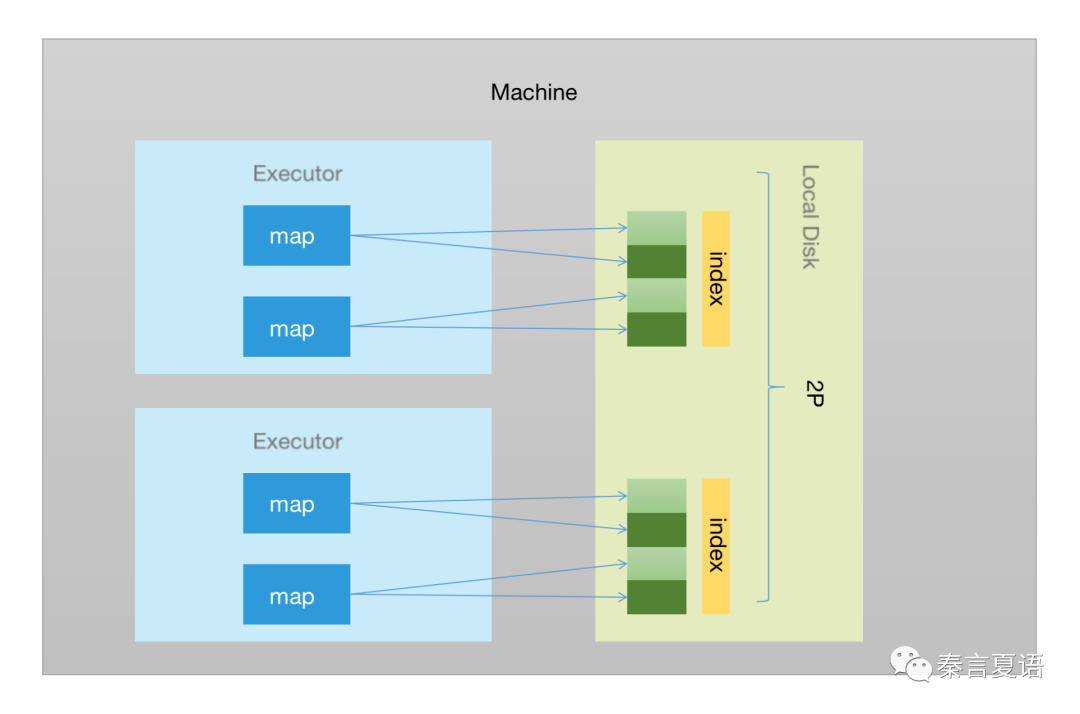

Readers who have followed closely may already see a further optimization: combine the consolidated hash shuffle's decoupling from the number of maps with Sort Shuffle's decoupling from the number of reducers.

The number of files then depends only on parallelism and drops further to 2P, no longer constrained by the number of maps or reducers. We can call this Consolidated Sort Shuffle.
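The file counts of the four schemes can be compared with a few lines of arithmetic. The class and method names are hypothetical, and the single-machine numbers in main (1,000 map tasks, 20,000 reducers, 32 cores, 1 core per task) are made up for illustration.

```java
// Back-of-the-envelope file counts for the shuffle-write schemes discussed above.
// M = map tasks, R = reducers, P = concurrent tasks (P = C / T on one machine).
class ShuffleFileCounts {
    static long hash(long maps, long reducers) { return maps * reducers; }                       // M * R
    static long consolidatedHash(long concurrency, long reducers) { return concurrency * reducers; } // P * R
    static long sort(long maps) { return 2 * maps; }                 // one data + one index file per map
    static long consolidatedSort(long concurrency) { return 2 * concurrency; }                   // 2P
    static long concurrency(long cores, long coresPerTask) { return cores / coresPerTask; }      // C / T

    public static void main(String[] args) {
        long maps = 1_000;
        long reducers = 20_000;
        long p = concurrency(32, 1); // hypothetical machine: 32 cores, 1 core per task
        System.out.println("Hash Shuffle:              " + hash(maps, reducers));
        System.out.println("Consolidated Hash Shuffle: " + consolidatedHash(p, reducers));
        System.out.println("Sort Shuffle:              " + sort(maps));
        System.out.println("Consolidated Sort Shuffle: " + consolidatedSort(p));
    }
}
```

With these numbers the count falls from 20,000,000 files to 640,000, 2,000, and 64 respectively.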

The cost is that, because several map tasks share the same files, data from the same partition may appear in several places in a data file and its index file, and shuffle read has to handle this.

Since the 2M files of Sort Shuffle are acceptable in most scenarios, the extra complexity of Consolidated Sort Shuffle may not pay off, so it has not been widely adopted. Still, it follows naturally from the line of reasoning above and is worth understanding.

We also have to admit that the multiple rounds of sorting in Sort Shuffle slow things down, especially when the number of maps and reducers is small and sorting takes up a large share of the execution time. Moreover, many application scenarios do not need sorting at all.

Therefore, a decision step can be added to Sort Shuffle. When the number of partitions exceeds a certain threshold, sorting is used as usual. Otherwise, hash shuffle is adopted, and a non-sorting merge is performed at the end so that only one data file and its index file are output.
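The bypass path can be sketched as follows, again with in-memory lists in place of files and record positions in place of byte offsets (Rec and write are hypothetical names). Records are bucketed by partition exactly as in hash shuffle, then the buckets are concatenated in partition order, which yields the same one-data-file-plus-index layout as Sort Shuffle without any sorting.

```java
import java.util.ArrayList;
import java.util.List;

// Minimal sketch of the bypass (non-sorting merge) path: bucket records per
// partition hash-shuffle style, then concatenate buckets in partition order.
// In-memory lists stand in for files; names are illustrative only.
class BypassMergeSketch {
    record Rec(int partitionId, String value) {}

    // Returns offsets such that partition p occupies [offsets[p], offsets[p + 1]).
    static long[] write(List<Rec> records, int numPartitions, List<Rec> dataFile) {
        // Step 1: one temporary bucket per partition; records keep arrival order.
        List<List<Rec>> buckets = new ArrayList<>();
        for (int p = 0; p < numPartitions; p++) {
            buckets.add(new ArrayList<>());
        }
        for (Rec r : records) {
            buckets.get(r.partitionId()).add(r);
        }
        // Step 2: concatenate the buckets without sorting and record where each starts.
        long[] offsets = new long[numPartitions + 1];
        for (int p = 0; p < numPartitions; p++) {
            offsets[p] = dataFile.size();
            dataFile.addAll(buckets.get(p));
        }
        offsets[numPartitions] = dataFile.size();
        return offsets;
    }

    public static void main(String[] args) {
        List<Rec> data = new ArrayList<>();
        long[] index = write(List.of(new Rec(1, "x"), new Rec(0, "y"), new Rec(1, "z")), 2, data);
        System.out.println(data + " " + java.util.Arrays.toString(index));
    }
}
```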

In Spark, the two paths are implemented by different ShuffleWriters: SortShuffleWriter and BypassMergeSortShuffleWriter.

Sort Shuffle has been working well, but some things we have long taken for granted have gradually changed:

As a result, the CPU has gradually become the bottleneck for some big data applications.

Spark started Project Tungsten to solve this problem.

Tungsten is a very important milestone for Spark. However, I will only cover the parts related to this topic here. Interested readers can look into it further.

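Tungsten implements a new memory management method that stores data in off-heap memory. This brings two general benefits: data outside the JVM heap is not tracked by the garbage collector, which reduces GC overhead, and data is stored in a compact binary format rather than as Java objects, which avoids the memory overhead of object headers and references.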

Shuffle involves caching, sorting, and merging large amounts of data, so it can also benefit from Tungsten. Because it is implemented with Java's Unsafe API (sun.misc.Unsafe), it is also called Unsafe Sort Shuffle.

Beyond these two general benefits, Tungsten brings the following advantages to the shuffle process (the overall flow of Tungsten Sort Shuffle is similar to that of Sort Shuffle):

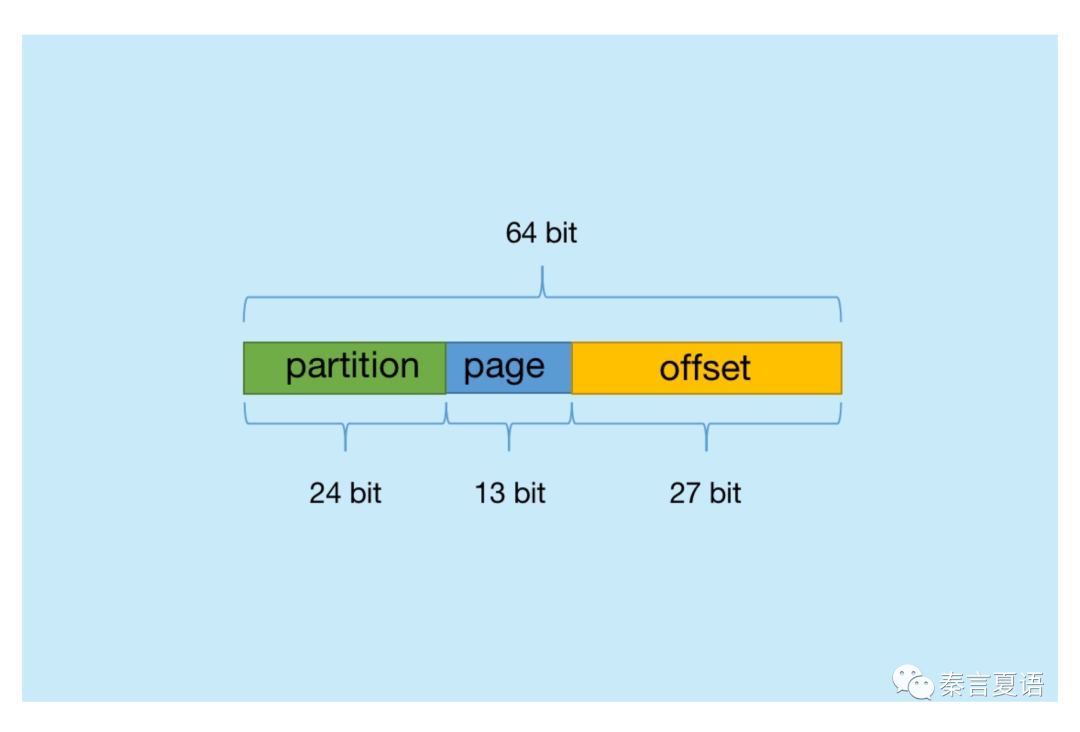

Tungsten manages memory with a two-level scheme similar to an operating system's paging: it first locates the page and then finds the specific data by its offset within that page.
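The two-level addressing can be sketched by packing a page number and an offset into one long. This assumes the layout used by Spark's TaskMemoryManager (13 bits of page number above 51 bits of offset); the class and helper names are hypothetical, and a byte array per page stands in for real memory pages.

```java
// Minimal sketch of two-level (page + offset) addressing. Assumed layout:
// upper 13 bits = page number, lower 51 bits = offset within the page.
class PageAddressSketch {
    static final int PAGE_NUMBER_BITS = 13;
    static final int OFFSET_BITS = 64 - PAGE_NUMBER_BITS; // 51
    static final long OFFSET_MASK = (1L << OFFSET_BITS) - 1;

    static long encode(int pageNumber, long offsetInPage) {
        return (((long) pageNumber) << OFFSET_BITS) | (offsetInPage & OFFSET_MASK);
    }

    static int decodePage(long address) {
        return (int) (address >>> OFFSET_BITS); // unsigned shift: page numbers never go negative
    }

    static long decodeOffset(long address) {
        return address & OFFSET_MASK;
    }

    public static void main(String[] args) {
        byte[][] pageTable = {new byte[16], new byte[16]}; // two tiny "pages"
        long addr = encode(1, 5);
        // First locate the page, then the byte inside it.
        pageTable[decodePage(addr)][(int) decodeOffset(addr)] = 42;
        System.out.println(pageTable[1][5]);
    }
}
```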

As mentioned earlier, shuffle data is written through ShuffleWriter classes.

Tracing the call chain from UnsafeShuffleWriter to ShuffleExternalSorter to ShuffleInMemorySorter and finally to PackedRecordPointer, we find the code that packs the pointer:

```java
public static long packPointer(long recordPointer, int partitionId) {
  assert (partitionId <= MAXIMUM_PARTITION_ID);
  // Note that without word alignment we can address 2^27 bytes = 128 megabytes per page.
  // Also note that this relies on some internals of how TaskMemoryManager encodes its addresses.
  final long pageNumber = (recordPointer & MASK_LONG_UPPER_13_BITS) >>> 24;
  final long compressedAddress = pageNumber | (recordPointer & MASK_LONG_LOWER_27_BITS);
  return (((long) partitionId) << 40) | compressedAddress;
}
```

This code is not difficult to understand. Because a lot of information must be saved, Tungsten compresses the pointer: the resulting 64-bit value holds a 24-bit partition ID, a 13-bit page number, and a 27-bit offset within the page. The following figure shows this more intuitively:

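This structure also reveals some limitations of Tungsten Sort Shuffle: since the partition ID has 24 bits, a shuffle can have at most 2^24 (16,777,216) output partitions, and since the offset has 27 bits, each page can address at most 2^27 bytes (128 MB).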
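To check the packed layout, here is a self-contained round trip. packPointer repeats the logic of the excerpt, with the assert replaced by an explicit range check; the mask constants are defined the way their names in the excerpt imply; the input address is assumed to use the 13-bit page / 51-bit offset encoding; and unpackPartitionId and unpackRecordPointer are illustrative helpers rather than quoted Spark code.

```java
// Round-trip sketch of the packed layout:
// [24-bit partition ID][13-bit page number][27-bit offset within page].
class PackedPointerSketch {
    static final int MAXIMUM_PARTITION_ID = (1 << 24) - 1;               // 24 bits for the partition ID
    static final long MASK_LONG_LOWER_27_BITS = (1L << 27) - 1;           // offset within a page
    static final long MASK_LONG_LOWER_51_BITS = (1L << 51) - 1;
    static final long MASK_LONG_UPPER_13_BITS = ~MASK_LONG_LOWER_51_BITS; // page number in the input address

    static long packPointer(long recordPointer, int partitionId) {
        if (partitionId < 0 || partitionId > MAXIMUM_PARTITION_ID) {
            throw new IllegalArgumentException("partitionId out of range: " + partitionId);
        }
        // Move the page number from bits 51..63 down to bits 27..39.
        final long pageNumber = (recordPointer & MASK_LONG_UPPER_13_BITS) >>> 24;
        // Keep only 27 offset bits, hence the 128 MB per-page limit.
        final long compressedAddress = pageNumber | (recordPointer & MASK_LONG_LOWER_27_BITS);
        // Place the partition ID in bits 40..63.
        return (((long) partitionId) << 40) | compressedAddress;
    }

    static int unpackPartitionId(long packed) {
        return (int) (packed >>> 40);
    }

    // Rebuild the page/offset address: page bits 27..39 go back up to 51..63.
    static long unpackRecordPointer(long packed) {
        final long pageNumber = (packed << 24) & MASK_LONG_UPPER_13_BITS;
        return pageNumber | (packed & MASK_LONG_LOWER_27_BITS);
    }

    public static void main(String[] args) {
        long address = (5L << 51) | 1_000; // page 5, offset 1000
        long packed = packPointer(address, 12_345);
        System.out.println(unpackPartitionId(packed) + " " + (unpackRecordPointer(packed) == address));
    }
}
```

Because the whole pointer fits in one long, sorting records by partition only means sorting an array of longs, which is far more cache-friendly than sorting Java objects.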

Spark introduced Tungsten Sort Shuffle in version 1.4 and merged it into Sort Shuffle in version 1.6.

After several stages of evolution, Spark's shuffle has gradually stabilized. From the ShuffleWriter perspective, the selection order is BypassMergeSortShuffleWriter > UnsafeShuffleWriter > SortShuffleWriter. Each suits different scenarios, and the first one whose conditions are met is selected automatically.
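The selection order can be summarized in a simplified Java sketch. The conditions (no map-side combine and at most spark.shuffle.sort.bypassMergeThreshold partitions, default 200, for the bypass writer; a serializer that supports relocating serialized objects, no map-side combine, and at most 2^24 partitions for the unsafe writer) reflect Spark's SortShuffleManager, but the enum, method, and parameter names are hypothetical, and details may differ between Spark versions.

```java
// Simplified sketch of how a shuffle writer is chosen; names are hypothetical.
class ShuffleWriterChoice {
    enum Writer { BYPASS_MERGE_SORT, UNSAFE, SORT }

    static final int BYPASS_MERGE_THRESHOLD = 200;            // default of spark.shuffle.sort.bypassMergeThreshold
    static final int MAX_PARTITIONS_FOR_SERIALIZED = 1 << 24; // limited by the 24-bit partition ID

    static Writer choose(int numPartitions, boolean mapSideCombine, boolean serializerSupportsRelocation) {
        if (!mapSideCombine && numPartitions <= BYPASS_MERGE_THRESHOLD) {
            return Writer.BYPASS_MERGE_SORT; // few partitions, no aggregation: skip sorting
        }
        if (serializerSupportsRelocation && !mapSideCombine
                && numPartitions <= MAX_PARTITIONS_FOR_SERIALIZED) {
            return Writer.UNSAFE;            // sort packed pointers over serialized bytes
        }
        return Writer.SORT;                  // general fallback
    }

    public static void main(String[] args) {
        System.out.println(choose(100, false, true));
        System.out.println(choose(1_000, false, true));
        System.out.println(choose(1_000, true, true));
    }
}
```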

Another point worth mentioning is that Spark supports dynamic allocation to improve resource utilization, which means idle executors can be released. When that happens, the files an executor wrote in the shuffle write phase would be lost with it.

To solve this problem, Spark can run an independent, long-running external shuffle service on each machine, so that downstream tasks can still perform shuffle read after the executor has been released.

In terms of configuration, after setting spark.dynamicAllocation.enabled=true, we also need to set spark.shuffle.service.enabled=true to enable this external shuffle service.
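For example, both settings can be placed in spark-defaults.conf (they can equally be passed with --conf on spark-submit). This is a minimal fragment; other dynamic allocation settings are left at their defaults.

```properties
# spark-defaults.conf: dynamic allocation together with the external shuffle service
spark.dynamicAllocation.enabled   true
spark.shuffle.service.enabled     true
```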

This article introduced several possible shuffle methods and their adoption in MapReduce and Spark.

Shuffle is a complex process with a large impact on performance. There are many ways to optimize it, such as using a combiner, preferring reduceByKey over groupByKey where possible, and setting parameters such as spark.shuffle.compress appropriately. Given the topic and limited space, this article will not go into them further.

The main points of this article are listed below:

The performance problems of shuffle have been largely solved, and some parameters and methods are provided so that applications can flexibly choose how to tune it.

Performance optimization is endless, so let's pause here and come back to it later. The next article will continue along the main line of this series.

As discussed in the last few articles, we have solved the performance problems of masters, slaves, and workers in distributed systems.

However, efficiency covers both execution efficiency and development efficiency. Performance concerns execution efficiency, but what about development efficiency?

In the next article, we will learn how to improve the application development efficiency on distributed systems.

This is a carefully conceived series of 20-30 articles. I hope to give everyone a core grasp of the distributed system in a storytelling way. Stay tuned for the next one!

