Disclaimer: This is a translated work of Qinxia's 漫谈分布式系统. All rights reserved to the original author.

At the beginning of the series, I mentioned that although it is called Learning about Distributed Systems, I would develop it with big data as the core scenario.

Therefore, I introduced Hadoop to solve the problem of inadequate storage and slow computing.

However, distributed systems are not only for the big data scenario, and Hadoop is not the only solution.

Let's go back to the original situation. In the beginning, a single machine is enough. Gradually, as the amount of data and computing increases, the architecture needs to scale. The natural first idea is to upgrade the machine's configuration, which is what we often call vertical scaling (scaling up).

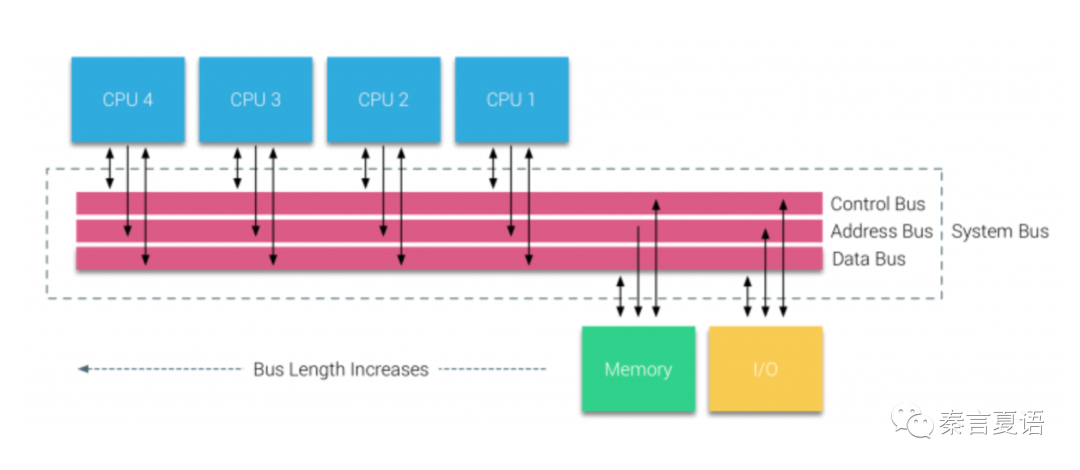

In this setup, all resources are shared (shared everything): all CPU cores share memory and disk. This is called the Symmetric Multi-Processor (SMP) architecture.

This architecture is unsustainable. Because resources are shared, contention soon appears, and performance can no longer improve linearly. For example, once the system bus becomes the bottleneck, adding more CPUs will not help. Because memory has such a large effect on performance, SMP is also named after the way it accesses memory: it is known as the Uniform Memory Access (UMA) architecture, since every CPU reaches the shared memory at the same cost.

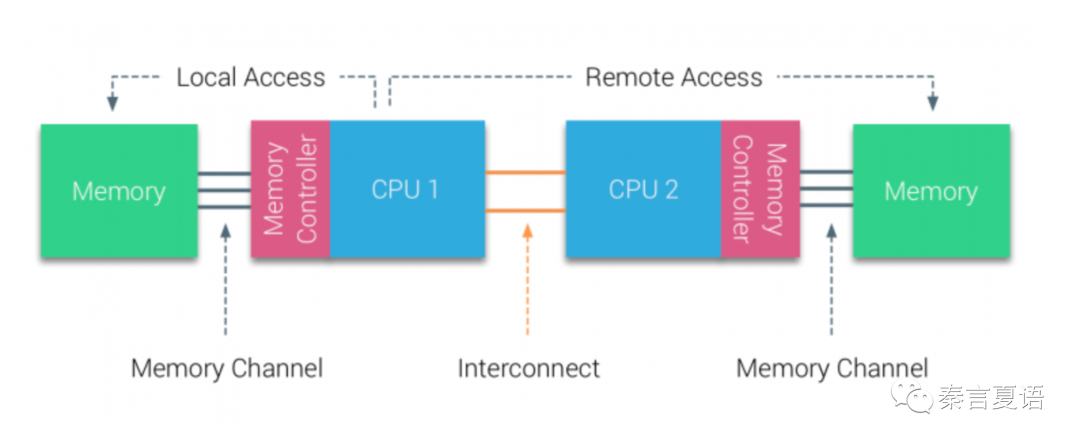

Since sharing everything easily leads to contention, the next step is to share as little as possible and share only memory, the resource with the greatest impact on performance (shared memory). This gave rise to the Non-Uniform Memory Access (NUMA) architecture. If this architecture is deployed across multiple machines, it scales further.

With the support of system-level software and hardware, a CPU can access both its local memory and, through an interconnect, remote memory.

This increases overall capacity, but accessing remote memory is much slower than accessing local memory, so NUMA does not deliver the expected performance boost. As the system grows, the performance loss, and even stalls, caused by remote memory access become severe, and applications have to be written to avoid remote memory access as much as possible. As a result, the scalability of the NUMA architecture falls short of expectations.
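The cost of remote access can be made concrete with a weighted average. The following is a minimal sketch; the latency figures are hypothetical values chosen only for illustration and are not measurements of any real system.

```python
def effective_latency_ns(local_ns, remote_ns, remote_fraction):
    """Average memory latency when a fraction of accesses go to remote memory."""
    if not 0.0 <= remote_fraction <= 1.0:
        raise ValueError("remote_fraction must be between 0 and 1")
    return (1.0 - remote_fraction) * local_ns + remote_fraction * remote_ns


# Hypothetical latencies (assumptions for illustration only).
LOCAL_NS = 100.0
REMOTE_NS = 300.0

for fraction in (0.0, 0.1, 0.5):
    avg = effective_latency_ns(LOCAL_NS, REMOTE_NS, fraction)
    print(f"remote fraction {fraction}: average latency {avg} ns")
```

Under these assumed numbers, the average latency moves steadily toward the remote latency as the share of remote accesses grows, which is why applications on NUMA try to keep their data local.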

NUMA's shared memory approach shares only memory. Correspondingly, there is also a shared disk architecture that shares only disks.

On a single machine, shared disk means that each CPU has its own dedicated bus and memory, while all CPUs share the disk. This maximizes the scalability and utilization of storage space. However, contention for disk performance may prevent further expansion.

If you extend this idea to multiple machines, as with NUMA, you get a shared disk cluster. The commonly used NFS is similar to this.

According to this idea, it is easy to draw two conclusions:

The first conclusion is quite simple. The trend is definitely to scale out and create clusters.

The second conclusion is that we need a new shared nothing architecture.

(Following these two ideas, we arrive at distributed systems in the broad sense mentioned earlier and, in the narrow sense of the big data field, at the many frameworks of the Hadoop ecosystem.)

The database field encountered and solved this problem first. Since databases have played a key role for a long time (they are still important today), a large amount of storage and computing revolves around databases. Once the data volume and business volume expand, the database will be the first to hit the bottleneck.

SMP and NUMA cannot solve the problem well in practice. We can only work towards shared nothing.

The most obvious solution is divide and conquer (for example, sharding). With the help of powerful middleware, we can scale horizontally under shared nothing.

However, the granularity of sharding is too coarse. Uneven data distribution drags down overall performance, and rebalancing data is expensive.

Therefore, a finer-grained scheme appeared: splitting by key, combined with a hash algorithm or a similar method. This addresses the problems of sharding.

This led to the birth of the Massively Parallel Processing (MPP) database, a category that includes many well-known databases (such as Teradata, Greenplum, and Vertica).
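Splitting by key with a hash can be sketched as follows. This is a minimal illustration, not the distribution policy of any particular database; `segment_for`, `distribute`, and the `user-N` keys are hypothetical names, and CRC32 is used only because it is a stable hash available in the standard library.

```python
import zlib
from collections import Counter


def segment_for(key, num_segments):
    """Map a distribution key to a segment using a stable hash."""
    return zlib.crc32(key.encode("utf-8")) % num_segments


def distribute(keys, num_segments):
    """Count how many rows land on each segment."""
    return Counter(segment_for(k, num_segments) for k in keys)


# Hypothetical row keys, for illustration only.
keys = [f"user-{i}" for i in range(10_000)]
counts = distribute(keys, 4)
for segment, rows in sorted(counts.items()):
    print(f"segment {segment}: {rows} rows")
```

Because the placement of each row depends only on its key, rows spread across segments at row granularity instead of in large shard-sized chunks.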

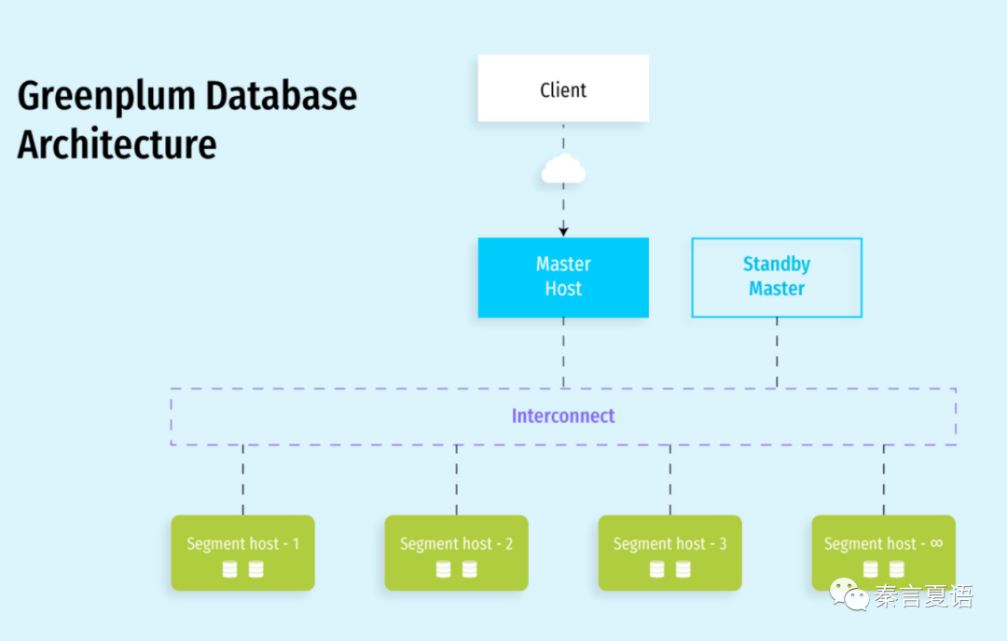

Taking Greenplum as an example, you can see what an MPP looks like from the following architecture diagram:

There are several different roles in Greenplum:

Each segment is independent of the others, and nothing is shared. Any necessary interaction between segments goes through the interconnect, and the Master provides unified logical access to the outside world. To scale out, you only need to add new segments and redistribute data. To a certain extent, performance increases linearly with scale.
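The division of labor between the Master and the segments can be sketched as a scatter-gather computation. This is a toy model under stated assumptions, not Greenplum's implementation: each inner list stands in for the rows held by one segment, and `segment_partial_sum` and `master_average` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor

# Each inner list stands in for the rows stored on one independent segment.
segments = [
    [3, 8, 1],
    [7, 2],
    [5, 9, 4, 6],
]


def segment_partial_sum(rows):
    """Runs on one segment and touches only that segment's local rows."""
    return sum(rows), len(rows)


def master_average(all_segments):
    """Scatter the query to every segment, then merge the partial results."""
    with ThreadPoolExecutor(max_workers=len(all_segments)) as pool:
        partials = list(pool.map(segment_partial_sum, all_segments))
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total / count


print(master_average(segments))
```

Each segment returns only a small partial result, so the merge step stays cheap even when the local data is large.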

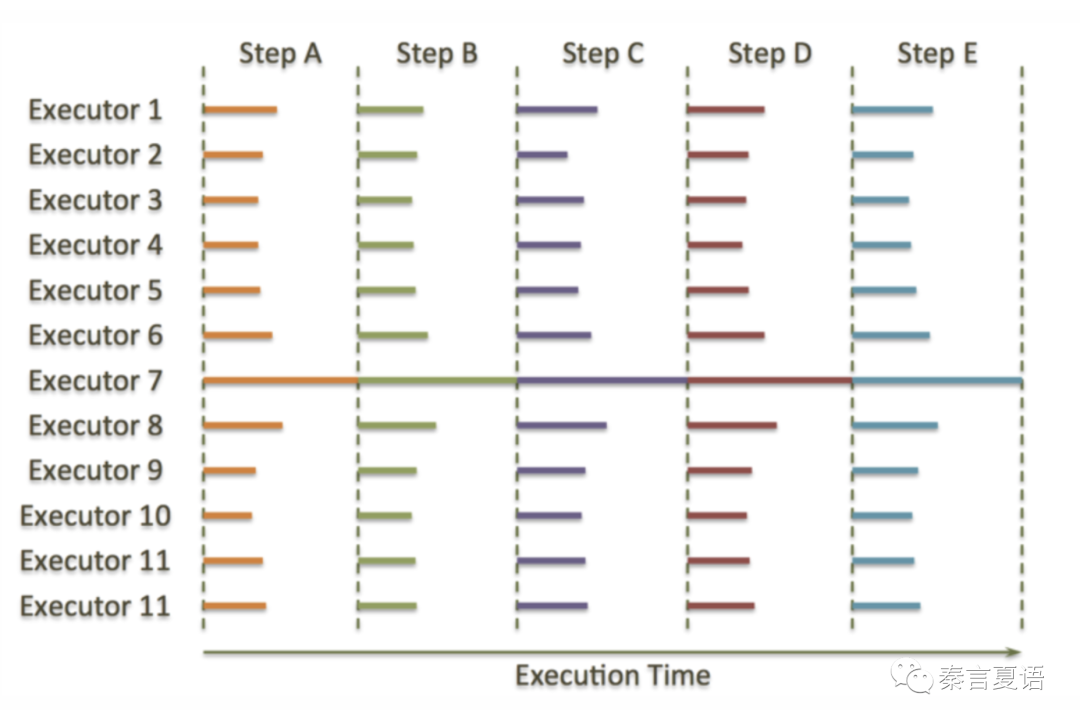

However, introducing an MPP database does not solve the problem once and for all. Usually, after expanding to one or two hundred nodes, it is difficult to go further, and adding nodes no longer supports more data or concurrency. The reason is the barrel effect (the slowest node, like the shortest stave of a barrel, determines overall performance), which is pronounced in the MPP architecture.

MPP distributes each query to all nodes and then aggregates the results, so any single slow node slows down the whole request. As the cluster grows, the probability that at least one node is slow becomes much higher.
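How quickly this probability grows can be estimated with a short calculation. The sketch below assumes that nodes become slow independently and that each node is slow with the same probability; both the independence assumption and the 1% figure are illustrative, not measured.

```python
def prob_any_slow(num_nodes, p_slow):
    """Probability that at least one of num_nodes independent nodes is slow."""
    return 1.0 - (1.0 - p_slow) ** num_nodes


# Hypothetical per-node probability of being slow during a query.
P_SLOW = 0.01

for n in (10, 100, 1000):
    print(f"{n} nodes: {prob_any_slow(n, P_SLOW):.3f}")
```

With these assumed numbers, a query on 100 nodes is more likely than not to hit at least one slow node, and on 1,000 nodes it is almost certain to.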

Similarly, since each query must be distributed to all nodes, and the resources of each node are limited, the entire system is destined to be unable to bear a large amount of concurrency.

Yes, the concurrent execution of all nodes brings high performance, but it also limits the cluster scale and concurrency.

Besides the MPP architecture, another example of shared nothing is the Batch architecture, which corresponds to the Hadoop-related frameworks we have discussed at length. Typical frameworks are MapReduce and the various SQL on Hadoop solutions (such as Hive and Spark SQL).

In the computing layer, the Batch architecture processes data in batches. It aims to process larger amounts of data with greater throughput and gives up the pursuit of low latency (another typical trade-off).

In the storage layer, MPP partitions data at the key level, whereas the Batch architecture, with HDFS as its storage, partitions data at the block level. Keys carry clear business meaning, while blocks carry none and are therefore free of business constraints. As a result, the Batch architecture is more flexible in scaling storage.
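The difference from key-level partitioning can be seen in a small sketch of block-level splitting. It is only an illustration under stated assumptions: the tiny block size and the sample records are hypothetical, and real HDFS blocks are far larger.

```python
def split_into_blocks(data, block_size):
    """Cut a byte string into fixed-size blocks, ignoring record boundaries."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


# Hypothetical file content: three newline-terminated records.
records = b"alice,30\nbob,25\ncarol,41\n"
blocks = split_into_blocks(records, 10)

for index, block in enumerate(blocks):
    print(index, block)
```

The blocks cut straight through records, because the split depends only on byte offsets and never inspects keys. That is why block placement needs no knowledge of the data's business meaning.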

I won't go into the features and principles of the Batch architecture here. For more information, see the previous articles in this series.

A typical Batch architecture can scale out to thousands of nodes, far beyond the scale of an MPP architecture, but its query performance (latency) is usually an order of magnitude worse than MPP.

Generally speaking, MPP is faster than Batch-style SQL on Hadoop solutions for several reasons:

Therefore, MPP and Batch are not substitutes for each other. Each has its own advantages, disadvantages, and applicable scenarios:

The discussion above is mainly about performance. There are also many functional differences. MPP is a database, so its support for SQL standards and transactions is much better than Batch. Batch can handle unstructured data, but MPP cannot.

MPP and Batch each have their own applicable scenarios, but because complex requirements are so common, the two are increasingly combined, and some hybrid architectures have emerged.

For example, a lot of data already lives on Hadoop and is processed by Batch jobs, but there is also a need for interactive queries. You can copy the data to an MPP database, but cost and consistency are issues that cannot be ignored.

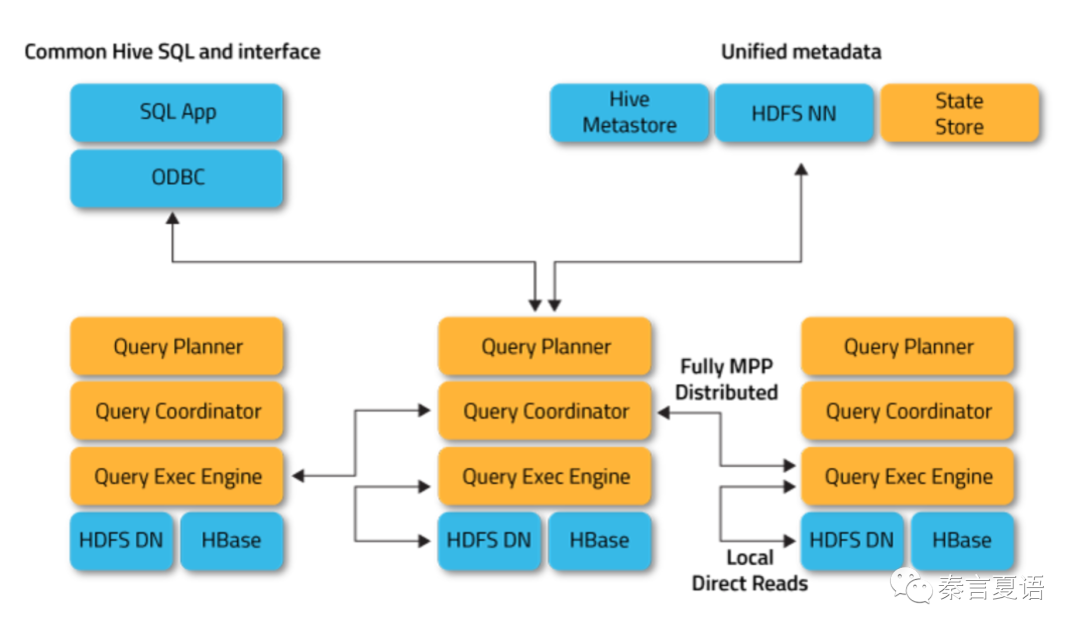

The most typical solution of MPP on HDFS is Apache Impala.

The architecture diagram above shows that Impala queries are still executed in MPP fashion, but data is stored in HDFS and HBase. Together with the support for Hive metadata, this makes it easy to use the same data in different ways.

In terms of the architecture evolution discussed at the beginning, this is a bit like a shared storage architecture (I coined the term myself :smile:). The difference between shared storage and shared disk is that shared storage does not physically share hard disks but provides a logically shared storage layer.

Therefore, it is not hard to see that Impala performs better than traditional SQL on Hadoop solutions but still lags somewhat behind MPP databases in the strict sense.

Another common requirement is to enjoy the high performance of MPP while scaling horizontally almost without limit (like Batch) and supporting greater concurrency.

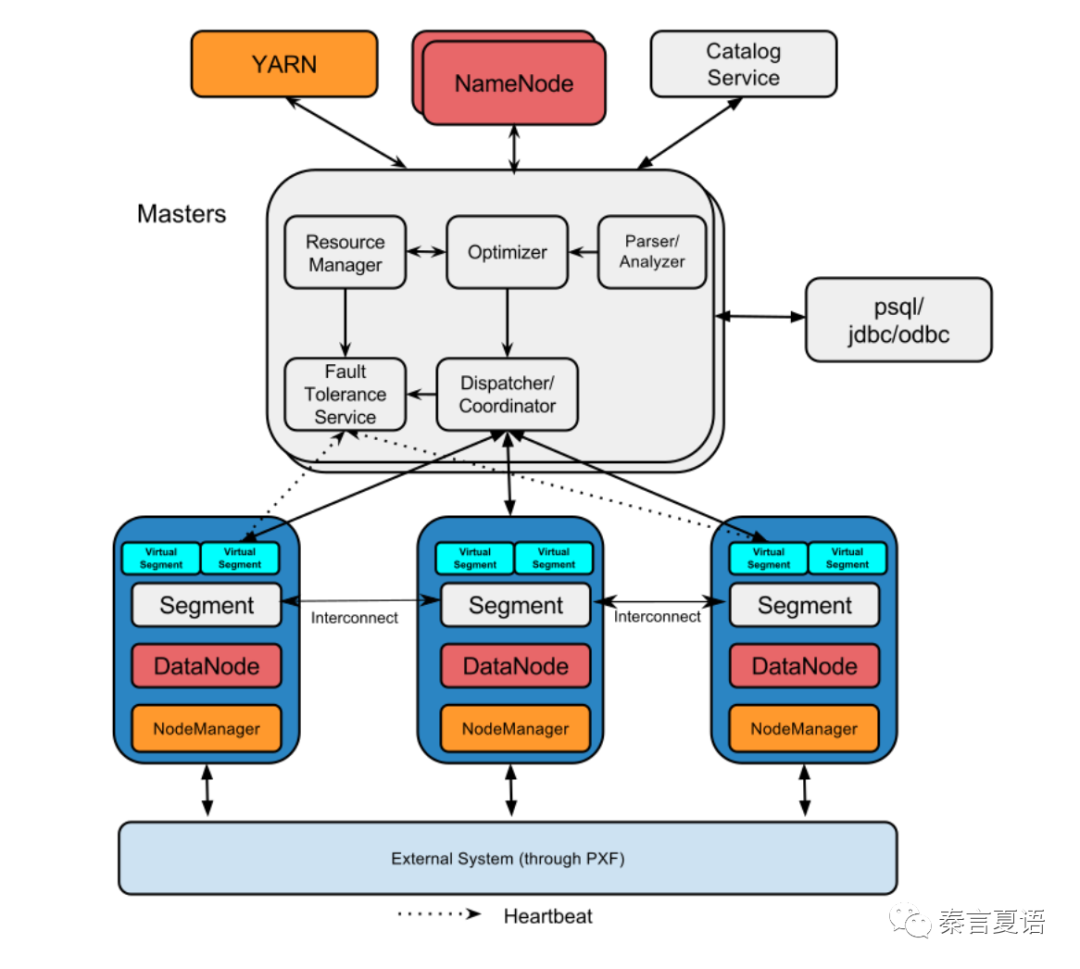

A typical scheme is Apache HAWQ.

From the above architecture diagram, it is easy to see that HAWQ is also on HDFS in storage, which is similar to Impala.

However, unlike Impala, HAWQ can flexibly match computing resources to queries by means of virtual segments. Whereas a Greenplum physical segment is a real database instance, HAWQ defines virtual segments on top of physical segments, and it is the virtual segments that execute queries.

By assigning different numbers and locations of virtual segments to different queries, it is possible to maximize overall resource utilization and avoid the barrel effect, freeing the traditional MPP architecture from limitations in scale.
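The idea of giving each query its own number and placement of virtual segments can be sketched as follows. This is a hypothetical least-loaded scheduler written only to illustrate the concept; it is not HAWQ's actual resource manager, and `assign_virtual_segments` and the node names are invented for the example.

```python
def assign_virtual_segments(node_load, num_vsegs):
    """Place a query's virtual segments on the currently least-loaded nodes."""
    # sorted() is stable, so ties keep the dictionary's insertion order.
    chosen = sorted(node_load, key=node_load.get)[:num_vsegs]
    for node in chosen:
        node_load[node] += 1  # record the extra work placed on this node
    return chosen


# Hypothetical cluster state: running virtual segments per node.
load = {"node-a": 0, "node-b": 0, "node-c": 0, "node-d": 0}

small_query = assign_virtual_segments(load, 1)  # a cheap query uses one node
large_query = assign_virtual_segments(load, 3)  # a heavy query uses three

print(small_query, large_query, load)
```

In this sketch a query no longer has to run on every node, so a busy or slow node can be left out of a query's plan, and several queries can share the cluster at the same time.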

Apache Doris is similar to HAWQ in how it addresses scalability. However, Doris has more comprehensive support for real-time and multi-dimensional analysis. If you are interested in Apache Doris, it is worth reading more about it.

That's all for this article. The thread is consistent with the previous articles: from MR to Spark, from SQL to Hive and Spark SQL, then to Hive in data warehouse applications, and now to MPP. Besides offering alternatives to Hive and Spark where query latency matters, this article also echoes the exploration of system scalability from the beginning of the series.

The next article will explore whether there are other ways to pursue higher performance.

This is a carefully conceived series of 20-30 articles. I hope to give everyone a core grasp of the distributed system in a storytelling way. Stay tuned for the next one!
