By Pinqu, Zongyan, Tongchang, Xiadiao, and Weilin

Large models have repeatedly shown significant advantages in model quality, and the emergence of ChatGPT demonstrates their great potential as industrial production tools. Training large models requires enormous computing power, which puts distributed parallel frameworks to the test. More teams in the industry are now turning to the research and development of distributed frameworks, ranging from extreme manual optimization for specific scenarios and models to automatic distribution for general scenarios and models. However, distributed frameworks still face challenges in actual production scenarios. On the one hand, manually optimized frameworks deliver extreme performance in specific cases but are limited in versatility. On the other hand, existing automatic distributed frameworks may perform well in terms of versatility and performance, but they usually need a long strategy search time, which hinders business implementation. To address these issues, the Alibaba Cloud Machine Learning Platform for Artificial Intelligence (PAI) Team launched the Tensor Program Distributed System (TePDist) and released its source code to the public. TePDist decouples itself from the user's model description language by performing distributed strategy search on HLO. While maintaining versatility, TePDist pursues a high-performance distributed strategy within an acceptable strategy search time.

How is the technical framework of TePDist designed? What are the future plans? Let's learn more about it together.

TePDist is a fully automatic distributed deep learning system based on the HLO IR layer developed by the Alibaba Cloud PAI Team. TePDist is a distributed compiler with its own distributed runtime, which effectively solves the problems of automatic search for parallel strategies of deep learning models and distributed strategy implementation.

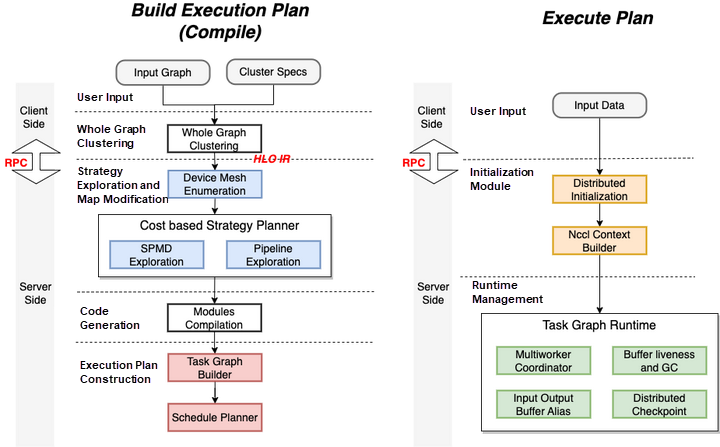

In terms of architecture, TePDist adopts the client/server mode to realize the decoupling of distributed strategies and model descriptions. The server side is the most important part of TePDist. It uses HLO IR as input to automatically explore and implement distributed parallel strategies. The client side takes the model described by users as input and converts it into HLO IR. Therefore, any client with the ability to convert a model to HLO IR can be connected to the server after adaptation.

In terms of function, TePDist is divided into two parts. The first part is the strategy search, which explores data parallel and sharding (SPMD) strategies and pipeline parallel strategies on HLO IR, then builds and compiles an execution plan based on the task graph. The second part is a distributed execution engine that efficiently runs the execution plan.

In terms of usage, TePDist provides different optimization levels. High optimization levels pursue distributed strategy quality, while low optimization levels adopt additional heuristics that slightly sacrifice strategy quality in exchange for a shorter search time to meet implementation requirements.

Open-source address: https://github.com/alibaba/TePDist

The main features of TePDist are listed below:

TePDist uses a client/server-separated architecture to decouple frontend model building from backend strategy search implementation. The server side is divided into the following two processes:

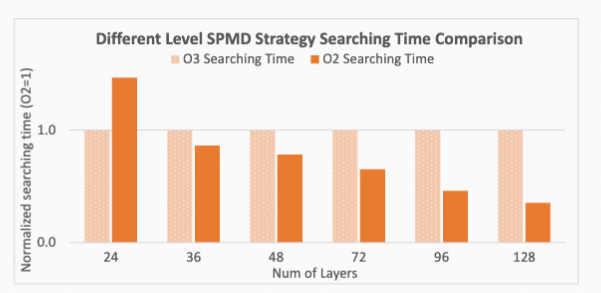

Automatic distribution is one of the core functions of TePDist. We decompose the search space of the SPMD strategy hierarchically and use a different algorithm at each level. Different optimization levels are also provided so that users can control the strategy search time. For pipeline stage division, the DAG does not need to be linearly sorted first; TePDist models stage division directly as an integer linear programming (ILP) problem. TePDist also provides pruning parameters that let users control the search time. The following is a brief description of this part.

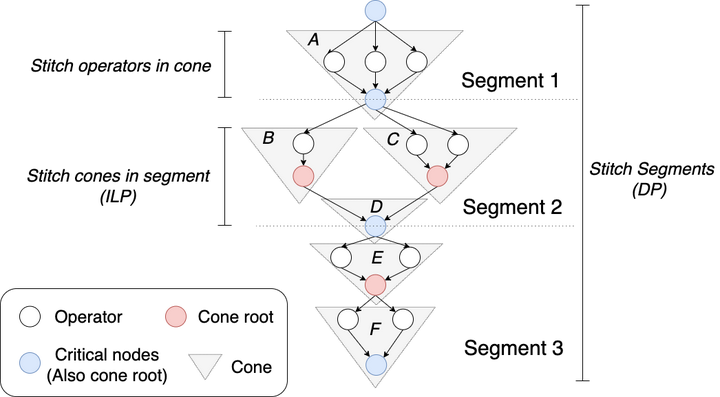

Because an HLO module contains a large number of instructions, modeling the whole problem directly with ILP may make the search time too long. The problem scale can be reduced by graph contraction. TePDist divides the DAG into three levels and uses a different optimization method at each level.

1. Cone Structure

There are a large number of cone structures in the HLO DAG, and they are very important for our formulation. What is a cone structure? It is a subgraph that contains two kinds of nodes: root and non-root. Root nodes have multiple inputs or are computationally intensive; all other nodes are non-root nodes. We can identify all cone structures in the HLO DAG. Most cone structures appear in the form of an inverted triangle, as indicated by the gray triangle area in the figure above. Cones act as coarser-grained nodes, and our algorithm needs to determine a sharding strategy for each cone. How many possible sharding strategies can each cone have? It depends on how many sharding strategies its cone root has. Specifically, we enumerate every sharding strategy of the cone root and then derive sharding strategies for the remaining nodes in the cone by greedy or dynamic programming methods, with the goal of minimizing the communication cost. This yields a set of different sharding strategies, which are the candidate strategies for the cone.
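The enumeration described above can be sketched as follows. This is a minimal illustration, not TePDist code: the strategy labels, the `resharding_cost` function (one unit of communication whenever two connected nodes disagree), and the tree-shaped toy cone are all assumptions made for the example, and only the greedy variant is shown.

```python
from typing import Dict, List, Tuple

Strategy = str  # illustrative labels such as "split0", "split1", "replicate"


def resharding_cost(producer: Strategy, consumer: Strategy) -> int:
    """Hypothetical cost model: free if layouts match, else one unit of communication."""
    return 0 if producer == consumer else 1


def cone_candidates(
    root: str,
    root_strategies: List[Strategy],
    parent_of: Dict[str, str],
    allowed: Dict[str, List[Strategy]],
) -> List[Tuple[Dict[str, Strategy], int]]:
    """Enumerate root strategies and greedily assign the non-root nodes.

    `parent_of` maps each non-root node to the cone node it exchanges data with.
    Entries must be listed so that a node's parent is assigned before the node.
    """
    candidates = []
    for root_choice in root_strategies:
        assignment = {root: root_choice}
        total = 0
        for node, parent in parent_of.items():
            # Greedy step: pick the cheapest layout relative to the parent.
            best = min(allowed[node], key=lambda s: resharding_cost(assignment[parent], s))
            total += resharding_cost(assignment[parent], best)
            assignment[node] = best
        candidates.append((assignment, total))
    return candidates


# Toy cone: a matmul root followed by two element-wise nodes.
cands = cone_candidates(
    root="matmul",
    root_strategies=["split0", "split1"],
    parent_of={"add": "matmul", "relu": "add"},
    allowed={"add": ["split0", "split1", "replicate"], "relu": ["split0", "replicate"]},
)
```

Each entry of `cands` is one candidate strategy for the cone together with its communication cost, so the number of candidates equals the number of root strategies.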

2. Segment

The coarser granularity of cones reduces the problem scale to some extent, but for large models the number of cones is still huge and needs further processing. Large models are structurally characterized by repeated stacking, which means a model can be divided into multiple layer-like structures. To this end, we analyzed the workloads running on the PAI platform and summarized a general graph-splitting method. Through graph analysis, all critical nodes in the graph are identified and used as split points to divide the overall model into multiple approximately linear segments (such as the three segments marked in the preceding figure). Each segment contains several cone structures. A similar question arises here: how many candidate strategies should be kept for each segment? It depends on the number of combinations of sharding strategies of the critical nodes the segment contains. In practice, we enumerate the strategies of each critical node, treat the cones inside the segment as the basic units, and use ILP to determine the overall sharding strategy for the segment with the goal of minimizing the communication cost.
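The text does not define how critical nodes are found. The sketch below assumes one plausible definition: a node is critical if every source-to-sink path passes through it, which in a topologically ordered DAG is equivalent to no edge jumping over the node's position. The function names and the toy graph are hypothetical.

```python
from typing import List, Tuple


def critical_nodes(order: List[str], edges: List[Tuple[str, str]]) -> List[str]:
    """Return nodes that no edge jumps over, given a topological `order`."""
    pos = {name: i for i, name in enumerate(order)}
    # Difference array: an edge u -> v covers positions pos[u]+1 .. pos[v]-1.
    diff = [0] * (len(order) + 1)
    for u, v in edges:
        diff[pos[u] + 1] += 1
        diff[pos[v]] -= 1
    result, covering = [], 0
    for i, name in enumerate(order):
        covering += diff[i]
        if covering == 0:
            result.append(name)
    return result


def split_segments(order: List[str], critical: List[str]) -> List[List[str]]:
    """Cut the ordered node list at critical nodes; neighbors share the boundary node."""
    is_critical = set(critical)
    segments, current = [], []
    for name in order:
        current.append(name)
        if name in is_critical and len(current) > 1:
            segments.append(current)
            current = [name]
    return segments


# Two stacked diamond-shaped "layers": a -> {b, c} -> d -> {e, f} -> g.
order = ["a", "b", "c", "d", "e", "f", "g"]
edges = [("a", "b"), ("a", "c"), ("b", "d"), ("c", "d"),
         ("d", "e"), ("d", "f"), ("e", "g"), ("f", "g")]
crit = critical_nodes(order, edges)
segments = split_segments(order, crit)
```

Here the two diamonds become two segments that meet at node `d`, mirroring how repeated layers yield a near-linear chain of segments.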

3. Overall Graph

The near-linear topology between segments is naturally suitable for dynamic programming. After determining the candidate sharding strategies for each segment, TePDist uses dynamic programming (DP) to determine the unique strategy for the overall DAG with the goal of minimizing the communication cost.
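As a rough sketch of this last level, the dynamic program below treats the segments as a strictly linear chain. The cost tables `intra_cost` and `boundary_cost` are assumed inputs invented for the example; how TePDist actually derives and stores these costs is not described here.

```python
from typing import List, Tuple


def choose_segment_strategies(
    intra_cost: List[List[float]],
    boundary_cost: List[List[List[float]]],
) -> Tuple[float, List[int]]:
    """DP over a linear chain of segments.

    intra_cost[i][s]       : communication cost inside segment i under candidate s
    boundary_cost[i][s][t] : cost between candidate s of segment i and
                             candidate t of segment i + 1
    Returns the minimum total cost and the chosen candidate index per segment.
    """
    best = list(intra_cost[0])          # best[s]: cheapest cost ending in candidate s
    back: List[List[int]] = []          # back-pointers for reconstructing the choice
    for i in range(1, len(intra_cost)):
        new_best, pointers = [], []
        for t, cost_t in enumerate(intra_cost[i]):
            prev = min(range(len(best)), key=lambda s: best[s] + boundary_cost[i - 1][s][t])
            new_best.append(best[prev] + boundary_cost[i - 1][prev][t] + cost_t)
            pointers.append(prev)
        best = new_best
        back.append(pointers)
    last = min(range(len(best)), key=lambda s: best[s])
    total = best[last]
    choice = [last]
    for pointers in reversed(back):
        choice.append(pointers[choice[-1]])
    choice.reverse()
    return total, choice


# Three segments with two candidate strategies each; switching candidates costs 5.
intra = [[1, 2], [3, 1], [2, 2]]
boundary = [[[0, 5], [5, 0]], [[0, 5], [5, 0]]]
result = choose_segment_strategies(intra, boundary)
```

In this toy input, staying on candidate 1 throughout avoids all boundary cost, so the DP selects it even though candidate 0 is cheaper for the first segment in isolation.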

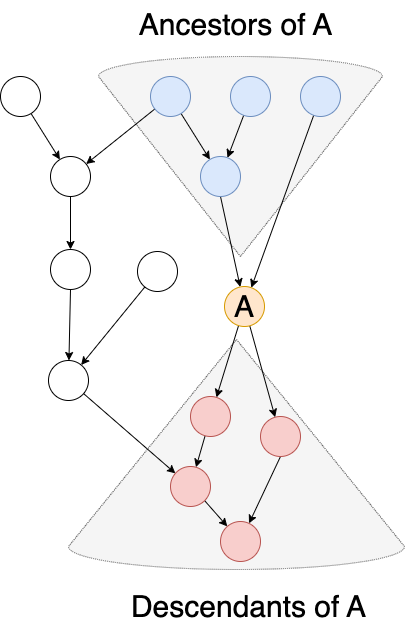

One of the major features of TePDist in dividing pipeline stages is that it does not need to arrange the DAG into a linear sequence. Instead, TePDist models stage partitioning as an ILP problem. The basic principle of partitioning is to minimize the communication cut between stages while keeping the amount of computation in each stage uniform. Every node in the DAG knows its predecessor and successor nodes, and this dependency can be expressed as linear constraints in the ILP problem, as shown in blue and red in the figure. To reduce the strategy search time, TePDist provides users with a control interface for adjusting the uniformity ratio of stage computation.
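To make the constraints concrete, the sketch below solves a tiny instance by exhaustive search instead of an ILP solver; the objective and constraints are the same in spirit, but the technique is swapped for readability. The function name, the `balance_ratio` parameter (an interpretation of the uniformity ratio mentioned above), and the toy DAG are assumptions.

```python
from itertools import product
from typing import Dict, Optional, Tuple


def partition_stages(
    flops: Dict[str, float],
    edges: Dict[Tuple[str, str], float],
    num_stages: int,
    balance_ratio: float,
) -> Optional[Tuple[float, Dict[str, int]]]:
    """Brute-force stand-in for the ILP: minimize cut communication on a DAG.

    flops[n]       : compute cost of node n
    edges[(u, v)]  : communication volume if u and v land in different stages
    balance_ratio  : each stage may use at most ratio * (total / num_stages) compute
    """
    nodes = list(flops)
    limit = balance_ratio * sum(flops.values()) / num_stages
    best = None
    for stages in product(range(num_stages), repeat=len(nodes)):
        assign = dict(zip(nodes, stages))
        # Dependency constraint: a producer never sits in a later stage than its consumer.
        if any(assign[u] > assign[v] for u, v in edges):
            continue
        load = [0.0] * num_stages
        for node, stage in assign.items():
            load[stage] += flops[node]
        # Balance constraint: no empty stage, no stage above the allowed share.
        if min(load) == 0 or max(load) > limit:
            continue
        cut = sum(w for (u, v), w in edges.items() if assign[u] != assign[v])
        if best is None or cut < best[0]:
            best = (cut, assign)
    return best


# Diamond-shaped DAG that has no natural linear order between b and c.
best = partition_stages(
    flops={"a": 2, "b": 1, "c": 1, "d": 2},
    edges={("a", "b"): 4, ("a", "c"): 1, ("b", "d"): 1, ("c", "d"): 4},
    num_stages=2,
    balance_ratio=1.0,
)
```

The search keeps `a` and `b` together and `c` and `d` together, cutting only the two light edges. Loosening `balance_ratio` enlarges the feasible set, which is the kind of trade-off a uniformity control exposes.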

TePDist customizes the execution engine by instantiating and organizing the HLO computation into the task graph and executing it on a static schedule.

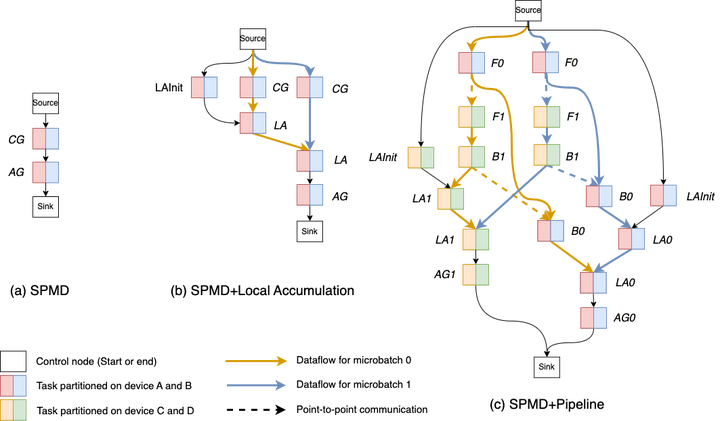

We introduce the task graph abstraction so that various parallel patterns can be combined. When a parallel strategy is applied to an HLO module, the resulting split or sharded HLO computations need to be combined into a complete execution logic. The task graph serves this purpose: it consists of a series of connected task nodes. By convention, the source and sink are the start and end nodes, and the other task nodes are instances of various HLO computations. For example, for a training task that contains gradient accumulation, the original HLO computation is divided into three parts: compute gradients, gradient accumulation, and apply gradients. Three different task nodes are created from these parts and combined into a complete execution logic. The following figure shows the specific form of the task graph when the three sharding strategies are used.
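The gradient accumulation example can be sketched as a small graph structure. The `TaskGraph` class, its methods, and the per-micro-batch instantiation of task nodes are assumptions for illustration and do not reflect TePDist's internal data structures.

```python
from collections import deque
from typing import Dict, List


class TaskGraph:
    """Toy task graph with fixed `source` and `sink` nodes."""

    def __init__(self) -> None:
        self.successors: Dict[str, List[str]] = {"source": [], "sink": []}

    def add_task(self, name: str) -> None:
        self.successors.setdefault(name, [])

    def add_edge(self, src: str, dst: str) -> None:
        self.successors[src].append(dst)

    def topological_order(self) -> List[str]:
        """Kahn's algorithm: one valid execution order of the task nodes."""
        indegree = {name: 0 for name in self.successors}
        for dsts in self.successors.values():
            for dst in dsts:
                indegree[dst] += 1
        ready = deque(name for name, deg in indegree.items() if deg == 0)
        order = []
        while ready:
            node = ready.popleft()
            order.append(node)
            for dst in self.successors[node]:
                indegree[dst] -= 1
                if indegree[dst] == 0:
                    ready.append(dst)
        if len(order) != len(self.successors):
            raise ValueError("task graph contains a cycle")
        return order


def build_grad_accumulation_graph(num_micro_batches: int) -> TaskGraph:
    """Chain compute/accumulate task instances, then apply gradients once."""
    graph = TaskGraph()
    prev = "source"
    for i in range(num_micro_batches):
        compute, accumulate = f"compute_gradients_{i}", f"gradient_accumulation_{i}"
        graph.add_task(compute)
        graph.add_task(accumulate)
        graph.add_edge(prev, compute)
        graph.add_edge(compute, accumulate)
        prev = accumulate
    graph.add_task("apply_gradients")
    graph.add_edge(prev, "apply_gradients")
    graph.add_edge("apply_gradients", "sink")
    return graph


order = build_grad_accumulation_graph(2).topological_order()
```

The three kinds of HLO computation appear as task nodes between `source` and `sink`, and the resulting order is fixed before anything runs.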

When building the execution plan, TePDist derives a static schedule on the task graph. Static scheduling performs better than dynamic scheduling while the task graph is executing. Static scheduling determines the scheduling order in advance at compile time, while dynamic scheduling relies heavily on control nodes at execution time, and these central management nodes may become a performance bottleneck. Once the static schedule is determined, each worker can execute step by step without depending on central control. Static scheduling also keeps memory usage stable. When the pipeline parallel strategy is used, the 1F1B schedule releases memory in a timely manner and reduces peak memory usage. In contrast, dynamic scheduling cannot guarantee that the 1F1B order is followed exactly.
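The memory effect of 1F1B can be seen in a small sketch. It generates the commonly used non-interleaved 1F1B order for one pipeline stage (warm-up forwards, alternating forward/backward, then cool-down backwards); whether TePDist emits exactly this order is an assumption, and the function names are hypothetical.

```python
from typing import List, Tuple


def one_f_one_b(stage: int, num_stages: int, num_micro_batches: int) -> List[Tuple[str, int]]:
    """Static 1F1B order for one stage as ("F" | "B", micro_batch_index) pairs."""
    warmup = min(num_stages - stage - 1, num_micro_batches)
    schedule: List[Tuple[str, int]] = []
    fwd = bwd = 0
    for _ in range(warmup):                      # warm-up: forwards only
        schedule.append(("F", fwd))
        fwd += 1
    for _ in range(num_micro_batches - warmup):  # steady state: one forward, one backward
        schedule.append(("F", fwd))
        fwd += 1
        schedule.append(("B", bwd))
        bwd += 1
    for _ in range(warmup):                      # cool-down: remaining backwards
        schedule.append(("B", bwd))
        bwd += 1
    return schedule


def peak_live_activations(schedule: List[Tuple[str, int]]) -> int:
    """Count how many micro-batch activations are held at the worst moment."""
    live = peak = 0
    for kind, _ in schedule:
        live += 1 if kind == "F" else -1
        peak = max(peak, live)
    return peak


first_stage = one_f_one_b(stage=0, num_stages=4, num_micro_batches=8)
last_stage = one_f_one_b(stage=3, num_stages=4, num_micro_batches=8)
```

With 4 stages and 8 micro-batches, the first stage holds at most 4 activations at once under this order, whereas running all forwards before any backward would hold 8.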

We have done the following work in terms of the execution engine:

1. Distributed Initialization

TePDist initializes each shard of a sharded tensor. Different shards should be given the same initialization seed but different initial random number generator states to ensure the initialization result after sharding is the same as before sharding. TePDist implements a sharding initializer in the C++ layer that makes the random number generator skip to the correct starting state for each tensor shard and accelerates initialization in parallel through multithreading. This is very useful for super-large models.
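The skip-ahead idea can be illustrated in a few lines of Python. This sketch uses the SplitMix64 generator because its state after `k` steps is simply `seed + k * GAMMA`; TePDist's C++ initializer may use a different generator, and the 1-D contiguous sharding and function names here are simplifying assumptions.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List

MASK = (1 << 64) - 1
GAMMA = 0x9E3779B97F4A7C15  # SplitMix64 increment


def _mix(z: int) -> int:
    """SplitMix64 output function."""
    z = ((z ^ (z >> 30)) * 0xBF58476D1CE4E5B9) & MASK
    z = ((z ^ (z >> 27)) * 0x94D049BB133111EB) & MASK
    return z ^ (z >> 31)


def random_values(seed: int, start: int, count: int) -> List[float]:
    """Return `count` floats in [0, 1) beginning at position `start` of the stream."""
    state = (seed + start * GAMMA) & MASK  # jump directly to the starting state
    values = []
    for _ in range(count):
        state = (state + GAMMA) & MASK
        values.append((_mix(state) >> 11) / float(1 << 53))
    return values


def init_sharded(seed: int, total: int, num_shards: int) -> List[List[float]]:
    """Initialize equal-sized contiguous shards in parallel threads.

    Assumes `total` is divisible by `num_shards`.
    """
    size = total // num_shards
    with ThreadPoolExecutor() as pool:
        futures = [pool.submit(random_values, seed, j * size, size) for j in range(num_shards)]
        return [f.result() for f in futures]


unsharded = random_values(seed=42, start=0, count=12)
shards = init_sharded(seed=42, total=12, num_shards=4)
```

Concatenating `shards` reproduces `unsharded` exactly, which is the property the paragraph above asks for: same seed, different starting states, identical result.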

2. Manage Complex Communication Domains with NcclContext

A complex distributed strategy may contain both collective communication and point-to-point communication. Because each communication may involve a completely different set of devices, managing communication domains is complicated. TePDist collects all communication instructions and nodes before the first run starts, establishes the corresponding communication domains, and stores them in a cache for reuse at the appropriate time.
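A minimal sketch of such a cache is shown below. It does not call NCCL: the `create` callback stands in for real communicator construction, and the class name, method names, and the choice to key domains by the sorted device set are all assumptions for illustration.

```python
from typing import Callable, Dict, Iterable, List, Tuple

DeviceGroup = Tuple[int, ...]


class CommDomainCache:
    """Create each communication domain once and reuse it afterwards."""

    def __init__(self, create: Callable[[DeviceGroup], object]) -> None:
        self._create = create
        self._domains: Dict[DeviceGroup, object] = {}

    def prepare(self, groups: Iterable[Iterable[int]]) -> None:
        """Build all domains up front, e.g. before the first training step."""
        for devices in groups:
            self.get(devices)

    def get(self, devices: Iterable[int]) -> object:
        # The same set of devices always maps to the same domain.
        key = tuple(sorted(set(devices)))
        if key not in self._domains:
            self._domains[key] = self._create(key)
        return self._domains[key]


created: List[DeviceGroup] = []


def fake_create(key: DeviceGroup) -> object:
    """Placeholder for real communicator setup; records what was created."""
    created.append(key)
    return ("comm", key)


cache = CommDomainCache(fake_create)
# Device groups gathered from an all-reduce, two sends, and a second all-reduce.
cache.prepare([[0, 1, 2, 3], [1, 0], [0, 1], [3, 2, 1, 0]])
```

Four communication instructions end up sharing two domains, so the expensive setup runs only twice.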

3. Execution of the Task Graph

Since the task graph is a new abstraction we proposed, we need to manage its run time comprehensively, including:

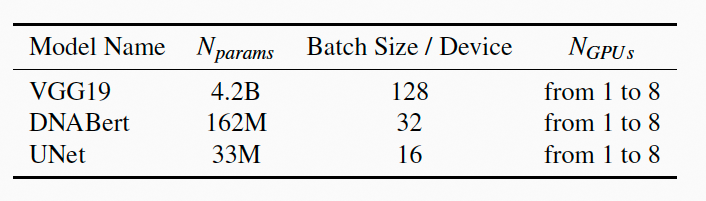

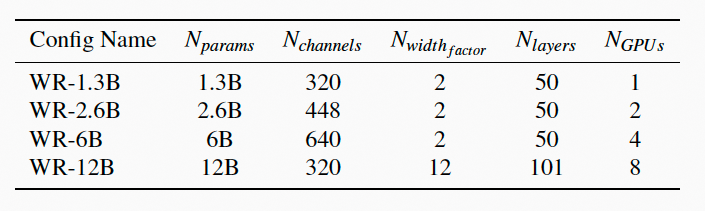

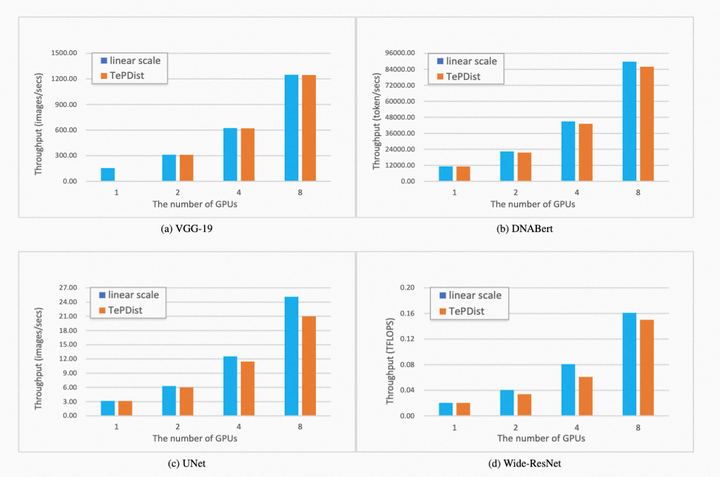

We conducted performance experiments on TePDist on the following two commercial platforms, and all experiments were conducted with FP32.


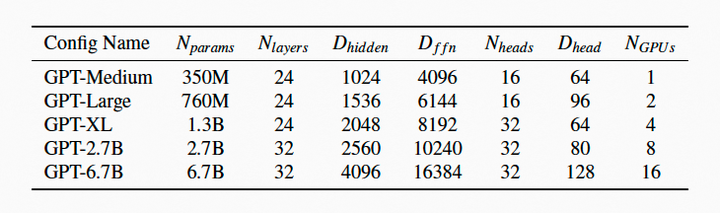

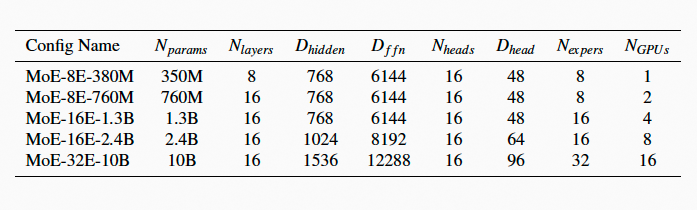

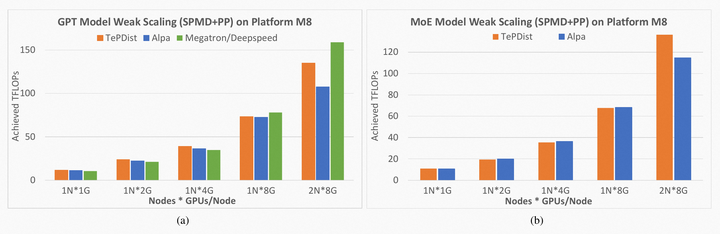

We ran model scaling experiments with the SPMD + Pipeline strategy on GPT and MoE models and compared TePDist with Alpa and Megatron/DeepSpeed.

The following two tables list the different version configurations of GPT and MoE, and the number of parameters increases row by row.

We use the measured computing throughput (TFLOPS) as the scalability metric. The models are scaled up together with the computing resources, from a single machine with a single GPU to two machines with 16 GPUs. The results show that both GPT and MoE scale well. In terms of single-precision computing power, the ideal peak of a V100 is 15.6 TFLOPS. TePDist enables GPT and MoE to achieve 62% and 58% of this peak, respectively. Compared with Alpa (an automatic distributed framework), TePDist provides virtually equivalent performance, and better performance in some cases.

We also provide benchmarks for other models to demonstrate TePDist's general performance in automation. We ran weak-scaling experiments on data for VGG-19, DNABert, and UNet. Among them, the VGG-19 classifier was extended to the million-class level, and the experimental configuration is listed below:

A weak-scaling experiment on model size was performed on Wide-ResNet. The experiment configuration is listed below:

On the M8 platform, TePDist showed performance close to the ideal linear speedup. In the VGG-19 experiment, TePDist found a strategy that applies model parallelism to the last layer of the large-scale classifier.

For the GPT-3 model, we compared the strategy search time under two SPMD optimization levels. O2 is a three-level search algorithm with heuristics, and O3 is a non-hierarchical search algorithm. The experiment shows that O2 has no advantage in search efficiency on a small model because of the three-level division and the use of ILP at each level. However, its search efficiency improves significantly as the model scale increases, and O2 has a great advantage on a super-large model.

In the future, we plan to keep optimizing the TePDist system and continue to productize it:

Open-source address: https://github.com/alibaba/TePDist

We welcome all interested developers to build a faster and better automatic distributed system with us!
