Disclaimer: This is a translated work of Qinxia's 漫谈分布式系统. All rights reserved to the original author.

I mentioned Hive several times in the previous articles on SQL. As the pioneer of SQL on Hadoop, Hive significantly reduces the learning and use costs of using distributed systems to process mass data while providing some means of performance optimization.

In addition to the preceding functions, Hive has an even more important function and responsibility, which is the focus of this article. Hive can act as the core of databases or even the core of data warehouses.

Although SQL can be used to analyze self-descriptive files, it is more often used to manipulate databases.

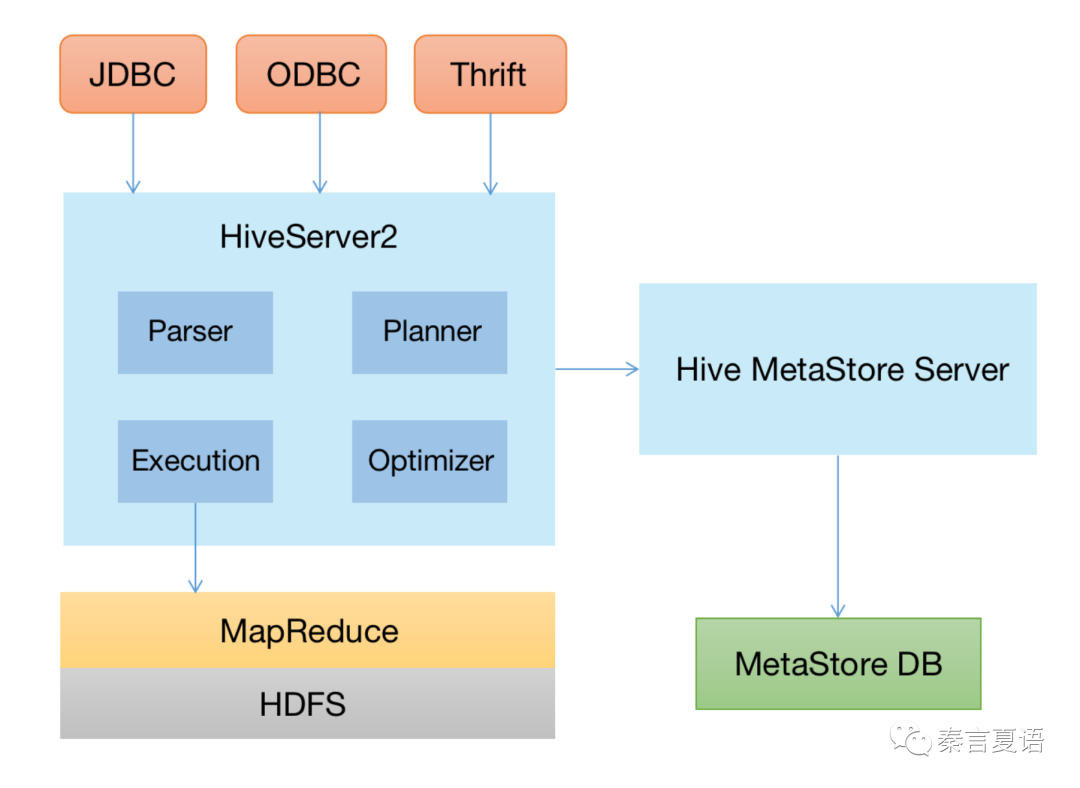

As shown on the architecture diagram of Hive, Hive provides complete metadata storage and management functions, allowing us to process data (like operating a traditional relational database and obtaining a distributed database) without introducing various middleware to do sharding.

Since we are familiar with DDL and DML operations and data planning at different levels (such as databases, tables, partitions, and buckets), I won't go into details.

With the expansion of data volume, the escalation of business complexity, and the expansion of participants, the technical database can no longer meet people's needs.

A large amount of data redundancy and job redundancy is generated, a large number of business ambiguities cannot be unified, and a large number of data islands are clustered.

Therefore, data normalization becomes urgent. In the past few decades, in the field of relational databases, a set of data warehouse methodologies has been summarized from practice to guide the construction of enterprise data.

Like the Join algorithms mentioned in the previous articles, in a distributed scenario, you can use the methodology to build a distributed data warehouse as long as you follow the example.

Building data warehouses based on the distributed database capabilities provided by Hive has also become mainstream in the big data field.

Even distributed data warehouses are not technically different from traditional data warehouses. Distributed data warehouses are more reflected at the lower layer. Let's go through the main points of data warehouses.

There are two classic data warehouse models:

As the name suggests, both models have specific characteristics:

Neither model is superior to the other, and the correct one to choose is based on different business scenarios. It even gave birth to the Hybrid Model, which combines the characteristics of each model.

For typical Internet businesses, more business agility is pursued to adapt to the rapid changes in the market. Therefore, the Dimensional Model has been more widely used.

According to the different ways of dimension combination, the Dimensional Model can be divided into two types:

As their names suggest, when the dimensions are complex and reflect the hierarchy, you can use the snowflake schema. Otherwise, the star schema is sufficient.

In the practice of data warehouses, horizontal and vertical segmentation will be done to organize and use data better:

There is no fixed method for the horizontal domain. It all depends on businesses. If the business is complex, multi-subdivision is needed. If the business is simple, make it as simple as possible.

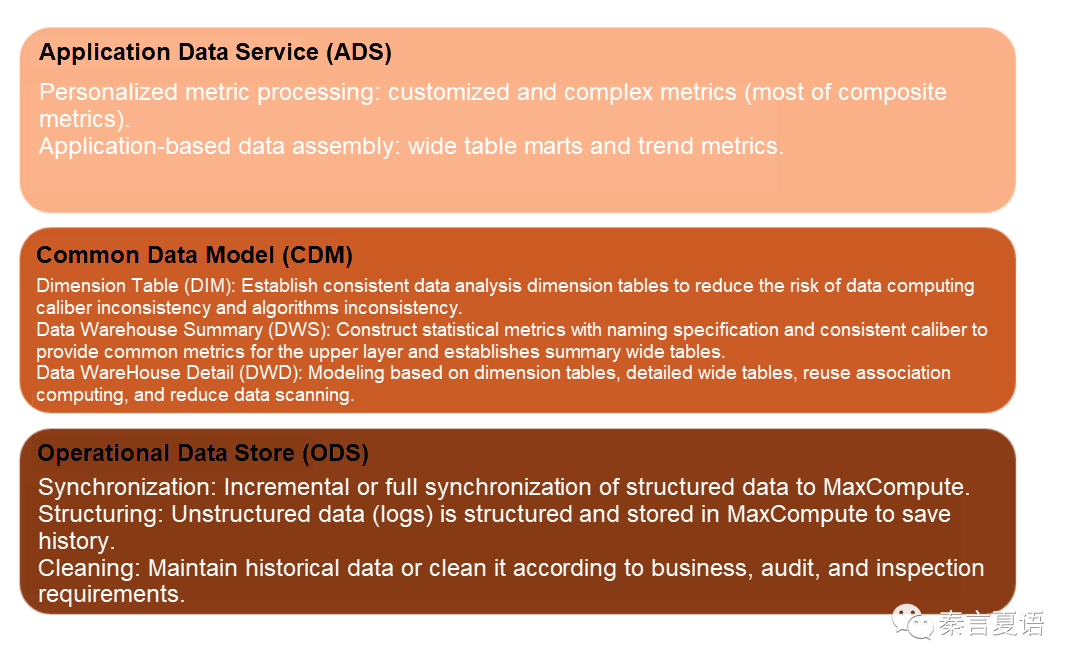

The method of vertical stratification has its practice, but there are fixed routines. Taking Alibaba, the first initiative of the data middle platform, as an example, we can see that the data flow and aggregation granularity are relatively clear.

After the data warehouse architecture is designed, sufficient restrictions and support are required in process specifications and supporting tools to ensure smooth implementation.

According to these models and specifications, we can obtain a distributed data warehouse based on Hive.

The data warehouses mentioned above are all offline data warehouses that require long data processing flows to get updated data.

For some scenarios with high requirements for real-time ability, offline data warehouses are not enough. At this time, real-time or streaming data warehouses are required.

Stream processing is a broad topic we will focus on later. Here, I will only introduce the parts related to data warehouses.

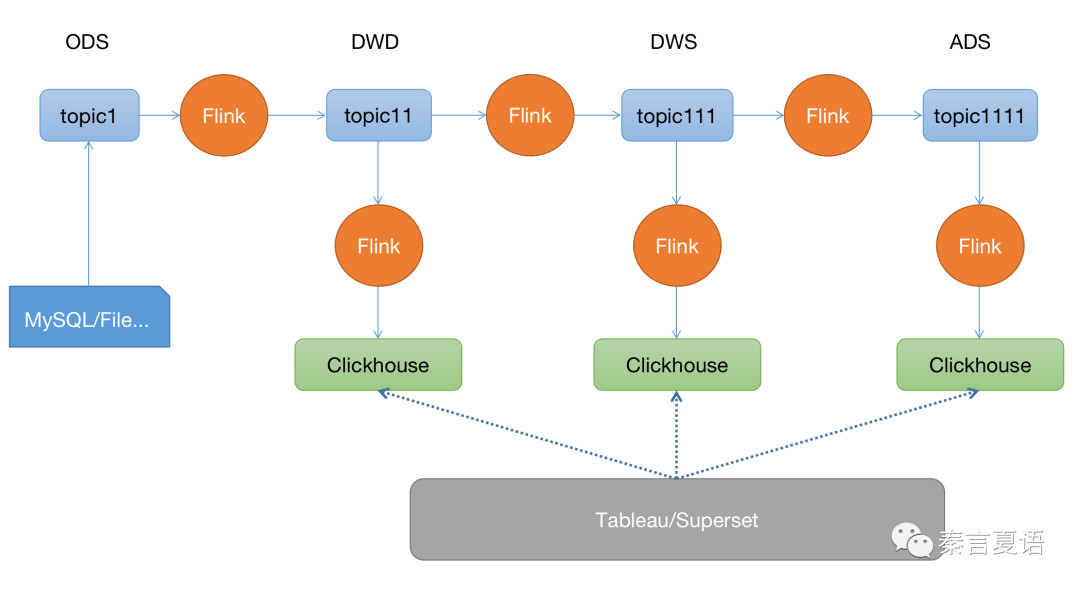

For the most typical real-time data warehouse, you can consider relying on message middleware (such as Kafka and stream processing engines represented by Flink). The main difference between real-time data warehouses and offline data warehouses is that offline tables are replaced with real-time topics, which can provide more timely data updates based on the capability of message middleware.

The hierarchy partition, dimension definition, metric definition, and construction and review processes in the offline data warehouse can be used.

This brings two sets of different technical architectures, which means the Lambda architecture in the scenario of a data warehouse and the development and maintenance costs are doubled. I'll talk more about Lambda architecture later.

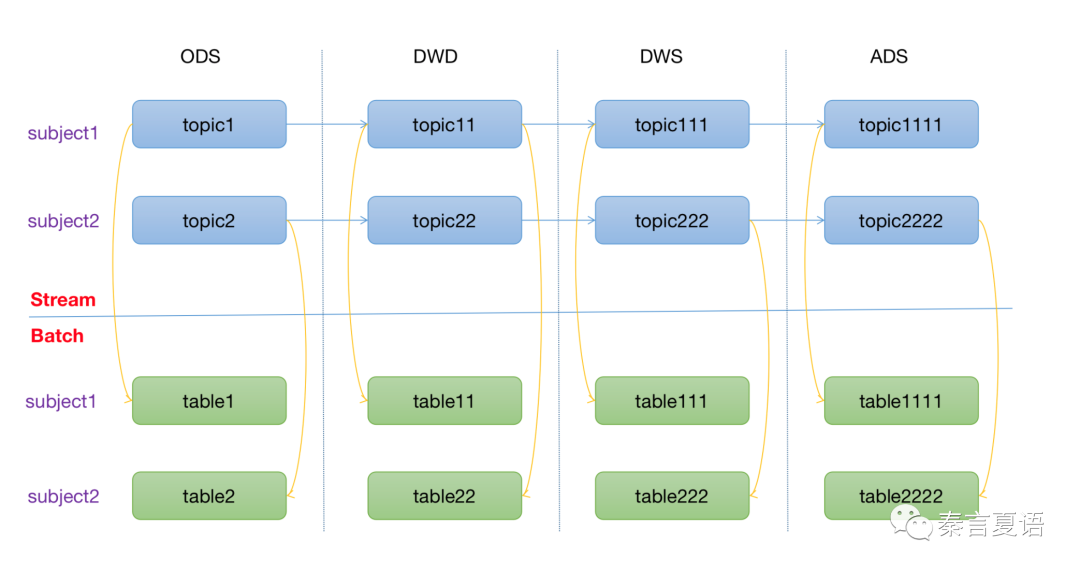

Here, we can propose a compromise improvement idea. Consider doing system-level batch and stream double write on data storage based on the streaming data warehouse in the computing engine and ETL stage. Combined with unified metadata, stream and batch data warehouse can be provided. It is related to stream-batch integration. We will talk about it later in the follow-up articles.

This is just an idea, and there are still many problems to be solved when we implement it.

On the other hand, although Kafka can also access old historical data by setting offsets, it is not good at it due to design trade-offs.

Therefore, the way that the Kafka topic replaces the Hive table to build real-time data warehouses is more suitable for real-time response scenarios, such as real-time ETL and real-time reports.

However, it is unsuitable for scenarios where a large amount of historical data needs to be processed frequently, such as exploratory ad-hoc queries or detailed data queries.

In these scenarios, you can write the data of a Kafka topic to a distributed OLAP database (such as ClickHouse and Druid in real-time).

The schematic diagram is listed below:

Another trend worth mentioning is the growing popularity of cloud-native data warehouses. Companies (like Snowflake) have gone public, and they are not small companies.

It is easy to see that data warehouses will have many specifications and restrictions in the early construction stage to use the data better.

In other words, the burden of writing is aggravated to be read-friendly.

This limits the applicable scenarios of data warehouses. For example, for some exploratory businesses, it is impossible to define the data model in advance, and it is not willing to invest a large amount of manpower and time to build standardized data warehouses at an early stage.

Some say, "The people have been suffering from data warehouses for a long time," so the concept of a data lake and some technologies and practices have emerged. I will write an article on data lake later.

Here, we need to know if the data warehouse can work well through specification construction. However, the data warehouse is not a silver bullet, so do not expect it to solve all the problems.

That's all for this article. Offline data warehouses based on Hive and real-time data warehouses based on Kafka + Flink make it easy to distribute data warehouses without worrying about storage and computing power.

This is a carefully conceived series of 20-30 articles. I hope to give everyone a core grasp of the distributed system in a storytelling way. Stay tuned for the next one!

Learning about Distributed Systems - Part 22: Adaptive Optimization

Alibaba Cloud Academy Student Central: The Invaluable Portal for Students

64 posts | 59 followers

FollowApsaraDB - November 17, 2020

ApsaraDB - July 4, 2022

Alibaba Clouder - April 26, 2020

Alibaba Clouder - December 13, 2019

ApsaraDB - December 27, 2023

Alibaba Cloud New Products - January 19, 2021

64 posts | 59 followers

Follow Data Lake Storage Solution

Data Lake Storage Solution

Build a Data Lake with Alibaba Cloud Object Storage Service (OSS) with 99.9999999999% (12 9s) availability, 99.995% SLA, and high scalability

Learn More Storage Capacity Unit

Storage Capacity Unit

Plan and optimize your storage budget with flexible storage services

Learn More Hybrid Cloud Storage

Hybrid Cloud Storage

A cost-effective, efficient and easy-to-manage hybrid cloud storage solution.

Learn More Hybrid Cloud Distributed Storage

Hybrid Cloud Distributed Storage

Provides scalable, distributed, and high-performance block storage and object storage services in a software-defined manner.

Learn MoreMore Posts by Alibaba Cloud_Academy