By Jingxuan

The concept of data lakes has recently become a hot topic. There are currently heated discussions among frontline personnel on the best way to build a data lake. Does Alibaba Cloud have a mature data lake solution? If so, has this solution been applied in actual scenarios? What is a data lake? What are the differences between a data lake and a big data platform? What data lake solutions are provided by major players in the field of cloud computing? This article attempts to answer these questions and provide deep insights into the concept of data lakes. I would like to thank Nanjing for compiling the cases in Section 5.1 of this article and thank Xibi for his review.

This article consists of seven sections:

If you have any questions when reading this article, please do not hesitate to let me know.

The data lake concept has recently become a hot topic. Many enterprises are building or plan to build their own data lakes. Before starting to plan a data lake, we must answer the following key questions:

First, I want to look at the data lake definitions provided by Wikipedia, Amazon Web Services (AWS), and Microsoft.

Wikipedia defines a data lake as:

A data lake is a system or repository of data stored in its natural/raw format,[1] usually object blobs or files. A data lake is usually a single store of all enterprise data, including raw copies of source system data and transformed data used for tasks, such as reporting, visualization, advanced analytics, and machine learning. A data lake can include structured data from relational databases (rows and columns), semi-structured data (CSV, logs, XML, JSON), unstructured data (emails, documents, PDFs), and binary data (images, audio, video).[2] A data swamp is a deteriorated and unmanaged data lake that is either inaccessible to its intended users or is providing little value.

AWS defines a data lake in a more direct manner:

A data lake is a centralized repository that allows you to store all of your structured and unstructured data at any scale. You can store your data as-is, without having to first structure the data, and run different types of analytics—from dashboards and visualizations to big data processing, real-time analytics, and machine learning to guide better decisions.

Microsoft's definition of a data lake is more ambiguous. It just lists the features of a data lake.

Azure Data Lake includes all of the capabilities required to make it easy for developers, data scientists, and analysts to store data of any size, shape, and speed, and do all types of processing and analytics across platforms and languages. It removes the complexities of ingesting and storing all of your data while making it faster to get up and running with batch, streaming, and interactive analytics. Azure Data Lake works with existing IT investments for identity, management, and security for simplified data management and governance. It also integrates seamlessly with operational stores and data warehouses so you can extend current data applications. We've drawn on the experience of working with enterprise customers and running some of the largest scale processing and analytics in the world for Microsoft businesses like Office 365, Xbox Live, Azure, Windows, Bing, and Skype. Azure Data Lake solves many of the productivity and scalability challenges that prevent you from maximizing the value of your data assets with a service that's ready to meet your current and future business needs.

Regardless of the source, most definitions of the data lake concept focus on the following characteristics of data lakes:

In short, a data lake is an evolving and scalable infrastructure for big data storage, processing, and analytics. Oriented toward data, a data lake can retrieve and store full data of any type and source at any speed and scale. It processes data in multiple modes and manages data throughout its lifecycle. It also supports enterprise applications by interacting and integrating with a variety of disparate external data sources.

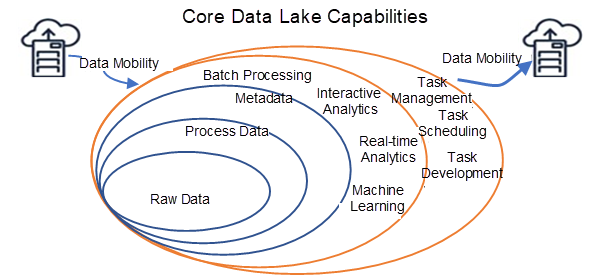

Figure 1: A schematic drawing of a data lake's basic capabilities

Note the following two points:

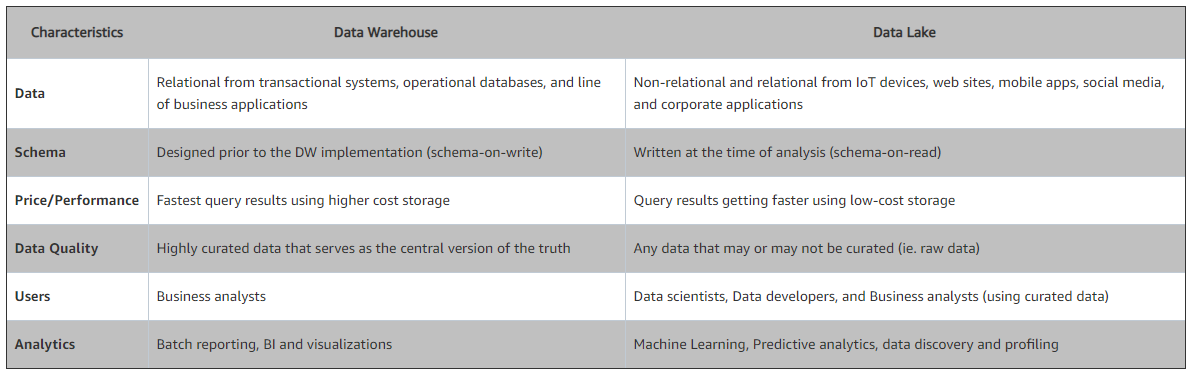

This section introduces the basic characteristics of a data lake, especially the characteristics that differentiate a data lake from a big data platform or a traditional data warehouse. First, let's take a look at a comparison table from the AWS website.

The preceding table compares the differences between a data lake and a traditional data warehouse. We can further analyze the characteristics of a data lake in terms of data and computing:

1. Data Fidelity: A data lake stores data as it is in a business system. Different from a data warehouse, a data lake stores raw data, whose format, schema, and content cannot be modified. A data lake stores your business data as-is. The stored data can include data of any format and of any type.

2. Data Flexibility: As shown in the "Schema" row of the preceding table, schema-on-write or schema-on-read indicates the phase in which the data schema is designed. A schema is essential for any data application. Even schema-less databases, such as MongoDB, recommend using identical or similar structures as a best practice. Schema-on-write means a schema for data importing is determined based on a specific business access mode before data is written. This enables effective adaptation between data and your businesses but increases the cost of data warehouse maintenance at the early stage. You may be unable to flexibly use your data warehouse if you do not have a clear business model for your start-up.

A data lake adopts schema-on-read, meaning it sees business uncertainty as the norm and can adapt to unpredictable business changes. You can design a data schema in any phase as needed, so the entire infrastructure generates data that meets your business needs. Fidelity and flexibility are closely related to each other. Since business changes are unpredictable, you can always keep data as-is and process data as needed. Therefore, a data lake is more suitable for innovative enterprises and enterprises with rapid business changes and growth. A data lake is intended for data scientists and business analysts that usually need highly efficient data processing and analytics and prefer to use visual tools.

3. Data Manageability: A data lake provides comprehensive data management capabilities. Due to its fidelity and flexibility, a data lake stores at least two types of data: raw data and processed data. The stored data constantly accumulates and evolves. This requires robust data management capabilities, which cover data sources, data connections, data formats, and data schemas. A data schema includes a database and related tables, columns, and rows. A data lake provides centralized storage for the data of an enterprise or organization. This requires permission management capabilities.

4. Data Traceability: A data lake stores the full data of an organization or enterprise and manages the stored data throughout its lifecycle, from data definition, access, and storage to processing, analytics, and application. A robust data lake fully reproduces the data production process and data flow, ensuring that each data record is traceable through the processes of access, storage, processing, and consumption.
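The contrast between schema-on-write and schema-on-read described in item 2 can be made concrete with a minimal Python sketch. The sample events, field names, and function names are hypothetical and only serve the illustration. They are not taken from any specific product.

```python
import json

# Raw events stored as-is (hypothetical sample data).
RAW_EVENTS = [
    '{"user": "u1", "action": "click", "ts": 1}',
    '{"user": "u2", "action": "view", "ts": 2, "channel": "app"}',
    '{"user": "u3", "ts": 3}',
]


def write_with_schema(line, required=("user", "action", "ts")):
    """Schema-on-write: validate and reshape a record before it is stored."""
    record = json.loads(line)
    missing = [field for field in required if field not in record]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    # Fields outside the predefined schema (such as "channel") are dropped.
    return {field: record[field] for field in required}


def load_warehouse(lines):
    """Load records under schema-on-write and report what was rejected."""
    accepted, rejected = [], []
    for line in lines:
        try:
            accepted.append(write_with_schema(line))
        except ValueError:
            rejected.append(line)
    return accepted, rejected


def read_with_schema(lines, fields, default=None):
    """Schema-on-read: raw lines stay untouched; a schema is applied per query."""
    for line in lines:
        record = json.loads(line)
        yield {field: record.get(field, default) for field in fields}


accepted, rejected = load_warehouse(RAW_EVENTS)
by_channel = list(read_with_schema(RAW_EVENTS, ["user", "channel"]))
```

In this sketch, the schema-on-write path rejects the third event and discards the `channel` field of the second one, while the schema-on-read path can still project `channel` later because the raw lines were never altered.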

A data lake requires a wide range of computing capabilities to meet your business needs.

5. Rich Computing Engines: A data lake supports a diversity of computing engines, including batch processing, stream computing, interactive analytics, and machine learning engines. Batch processing engines are used for data loading, conversion, and processing. Stream computing engines are used for real-time computing. Interactive analytics engines are used for exploratory analytics. The combination of big data and artificial intelligence (AI) has given rise to a variety of machine learning and deep learning algorithms. For example, models built with TensorFlow and PyTorch can be trained on sample data from the Hadoop Distributed File System (HDFS), Amazon S3, or Alibaba Cloud Object Storage Service (OSS). Therefore, a qualified data lake project should provide support for scalable and pluggable computing engines.

6. Multi-Modal Storage Engine: In theory, a data lake should provide a built-in multi-modal storage engine to enable data access by different applications, while considering a series of factors, such as the response time (RT), concurrency, access frequency, and costs. However, in reality, the data stored in a data lake is not frequently accessed, and data lake-related applications are still in the exploration stage. To strike a balance between cost and performance, a data lake is typically built by using relatively inexpensive storage engines, such as Amazon S3, Alibaba Cloud OSS, HDFS, or Object-Based Storage (OBS). When necessary, a data lake can collaborate with external storage engines to meet the needs of various applications.
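One way to picture the "pluggable computing engines" mentioned in item 5 is a small registry that maps a workload type to an engine. The following is only a sketch under stated assumptions: the class, the workload names, and the two stand-in engines are hypothetical, and a real data lake would delegate to actual batch, streaming, or interactive engines.

```python
from typing import Callable, Dict, Iterable, List

Record = dict
Engine = Callable[[Iterable[Record]], List[Record]]


class EngineRegistry:
    """Maps a workload type to a pluggable engine (all names are hypothetical)."""

    def __init__(self) -> None:
        self._engines: Dict[str, Engine] = {}

    def register(self, workload: str, engine: Engine) -> None:
        # Registering again under the same workload swaps the engine in place.
        self._engines[workload] = engine

    def run(self, workload: str, records: Iterable[Record]) -> List[Record]:
        if workload not in self._engines:
            raise KeyError(f"no engine registered for workload '{workload}'")
        return self._engines[workload](records)


def batch_clean(records):
    """Stand-in for a batch engine: drop records without a user."""
    return [r for r in records if r.get("user")]


def interactive_sample(records):
    """Stand-in for an interactive engine: return the first two records."""
    return list(records)[:2]


registry = EngineRegistry()
registry.register("batch", batch_clean)
registry.register("interactive", interactive_sample)
```

Because callers only name the workload, an engine can be replaced or added without changing the code that submits jobs.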

A data lake is a next-generation big data infrastructure. First, let's take a look at the evolution of the big data infrastructure.

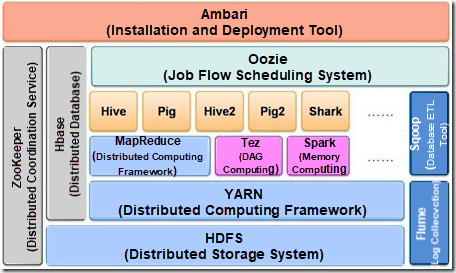

Phase 1: Offline data processing infrastructure, as represented by Hadoop. As shown in Figure 2, Hadoop is a batch data processing infrastructure that uses HDFS as its core storage and MapReduce (MR) as the basic computing model. A series of components have been developed around HDFS and MR to continuously improve the data processing capabilities of big data platforms, such as HBase for online key-value (KV) operations, Hive for SQL, and Pig for workflows. New computing models are constantly proposed to meet increasing needs for batch processing performance, resulting in computing engines such as Tez, Spark, and Presto. The MR model has also evolved into the directed acyclic graph (DAG) model, which gives computing models a higher level of abstraction for concurrency. It divides a job into logical stages at aggregation operations. Each stage consists of one or more tasks, which are executed concurrently to improve the parallelism of the computing process. To reduce how often intermediate results are written out during data processing, computing engines such as Spark and Presto cache data in the memory of compute nodes whenever possible. This improves data processing efficiency and system throughput.

Figure 2: Hadoop Architecture Diagram
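The stage splitting performed by the DAG model can be sketched in a few lines of Python. This is a simplification under stated assumptions: the job is a linear operator chain rather than a general graph, the operator names and the `WIDE_OPS` set are hypothetical, and every aggregation-style operator is treated as the start of a new stage. Real engines such as Spark place stage boundaries with more nuance.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical operator names; "wide" operators need data from all partitions.
WIDE_OPS = {"group_by", "join", "sort"}


def split_into_stages(ops):
    """Cut a linear operator chain into stages at aggregation-style operators."""
    stages, current = [], []
    for op in ops:
        if op in WIDE_OPS and current:
            stages.append(current)
            current = []
        current.append(op)
    if current:
        stages.append(current)
    return stages


def run_stage(task, partitions, max_workers=4):
    """Run one task per partition concurrently; results keep partition order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(task, partitions))


job = ["read", "filter", "map", "group_by", "map", "join", "write"]
stages = split_into_stages(job)
partial_sums = run_stage(sum, [[1, 2], [3, 4], [5]])
```

Here the job is cut into three stages, and within a stage the same task runs on each partition in parallel, which is the source of the improved parallelism described above.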

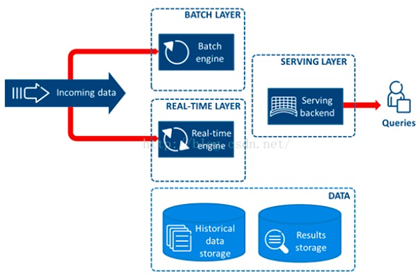

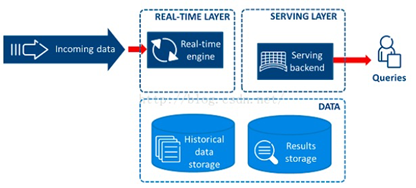

Phase 2: Lambda architecture. With constant changes in data processing capabilities and processing demand, you may find it impossible to achieve high real-time performance in certain processing scenarios no matter how you improve the batch processing performance. This problem is solved by stream computing engines, such as Storm, Spark Streaming, and Flink. Batch processing is combined with stream computing to meet the needs of many emerging applications. Lambda provides a data schema that unifies the results returned by batch processing and stream computing, so you do not have to concern yourself with what underlying computing model is used. Figure 3 shows the Lambda architecture. The Lambda and Kappa architecture diagrams were sourced from the Internet.

Figure 3: Lambda Architecture Diagram

The Lambda architecture integrates stream computing and batch processing. Data flows through the Lambda platform from left to right, as shown in Figure 3. The incoming data is divided into two parts. One part is subject to batch processing, and the other part is subject to stream computing. The final results of batch processing and stream computing are provided to applications through the service layer, ensuring access consistency.
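A minimal sketch of this flow, assuming a simple per-key counting workload, is shown below. The event layout, the `batch_cutoff` parameter, and the function names are hypothetical. The batch layer covers everything up to the last batch run, the stream (speed) layer covers what arrived afterward, and the service layer merges the two so that callers see a single result.

```python
from collections import Counter

# Hypothetical ad events: a key and an arrival timestamp.
EVENTS = [
    {"key": "ad1", "ts": 1},
    {"key": "ad1", "ts": 2},
    {"key": "ad2", "ts": 3},
    {"key": "ad1", "ts": 5},
]


def batch_view(events, batch_cutoff):
    """Batch layer: counts computed from all events up to the last batch run."""
    return Counter(e["key"] for e in events if e["ts"] <= batch_cutoff)


def speed_view(events, batch_cutoff):
    """Stream layer: counts for events that arrived after the batch cutoff."""
    return Counter(e["key"] for e in events if e["ts"] > batch_cutoff)


def serve(key, batch, speed):
    """Service layer: merge both views into one consistent answer."""
    return batch.get(key, 0) + speed.get(key, 0)


batch = batch_view(EVENTS, batch_cutoff=3)
speed = speed_view(EVENTS, batch_cutoff=3)
```

The application only calls `serve` and does not need to know which layer produced each part of the count.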

Phase 3: Kappa architecture. The Lambda architecture allows applications to read data consistently. However, the separation of batch processing and stream computing complicates research and development. Is there a single system to solve all these problems? A common practice is to use stream computing, which features an inherent and highly scalable distributed architecture. The two computing models of batch processing and stream computing are unified by improving the stream computing concurrency and increasing the time window of streaming data.

Figure 4: Kappa Architecture Diagram
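The idea of unifying both models by widening the time window can be sketched as follows. This is an illustrative simplification, not a streaming engine: the log is an in-memory list, the event layout is hypothetical, and tumbling windows are computed from integer timestamps in seconds.

```python
from collections import defaultdict

# Hypothetical append-only event log with timestamps in seconds.
LOG = [
    {"key": "ad1", "ts": 5},
    {"key": "ad1", "ts": 30},
    {"key": "ad1", "ts": 65},
    {"key": "ad1", "ts": 130},
]


def windowed_counts(log, window_size):
    """Single code path: count events per key in tumbling windows."""
    counts = defaultdict(int)
    for event in log:
        window_start = (event["ts"] // window_size) * window_size
        counts[(window_start, event["key"])] += 1
    return dict(counts)


# Near-real-time result: one-minute windows.
per_minute = windowed_counts(LOG, window_size=60)
# "Batch" result: replay the same log through the same code with a larger window.
per_hour = windowed_counts(LOG, window_size=3600)
```

Only one processing function has to be developed and maintained. The batch-style result comes from replaying the log with a larger window instead of from a separate batch system.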

In short, the big data infrastructure has evolved from the Hadoop architecture to the Lambda and Kappa architectures. Big data platforms process the full data of an enterprise or organization while providing a full range of data processing capabilities to meet application needs. In current enterprise practices, relational databases store data based on independent business systems. Other data is stored on big data platforms for unified processing. The big data infrastructure is specially designed for storage and computing, but it ignores data asset management. A data lake, by contrast, is designed with data asset management in mind.

Once, I read an interesting article that raised this question: Why do we use the term "data lake" instead of data river or data sea? I would like to answer this question by making the following points:

As the big data infrastructure evolves, enterprises and organizations manage data as an important asset type. To make better use of data, enterprises and organizations must take the following measures to manage data assets:

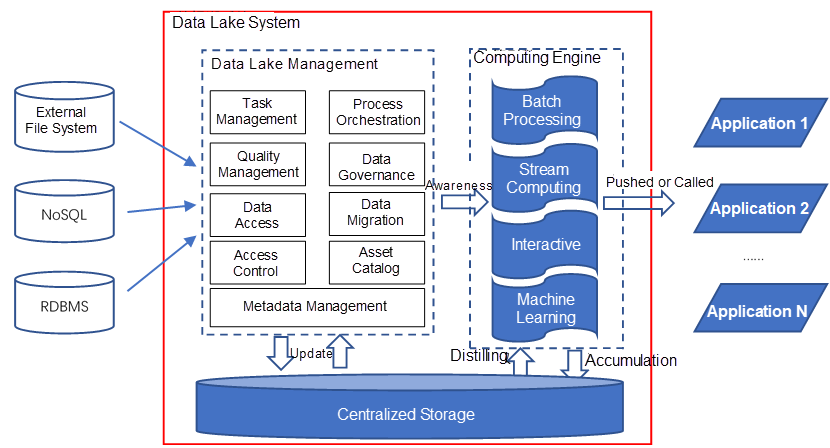

A data lake not only provides the basic capabilities of a big data platform, but also data management, data governance, and data asset management capabilities. To implement these capabilities, a data lake provides a series of data management components, including data access, data migration, data governance, quality management, asset catalog, access control, task management, task orchestration, and metadata management. Figure 5 shows the reference architecture of a data lake system. Similar to a big data platform, a typical data lake provides the storage and computing capabilities needed to process data at an ultra-large-scale, as well as multi-modal data processing capabilities. In addition, a data lake provides the following more sophisticated data management capabilities:

Figure 5: The Reference Architecture of Data Lake Components

The centralized storage shown in Figure 5 is a business-related concept. It provides a unified area for the storage of the internal data of an enterprise or organization. A data lake uses a scalable distributed file system for storage. Most data lake practices recommend using distributed systems, such as Amazon S3, Alibaba Cloud OSS, OBS, and HDFS, as the data lake's unified storage.

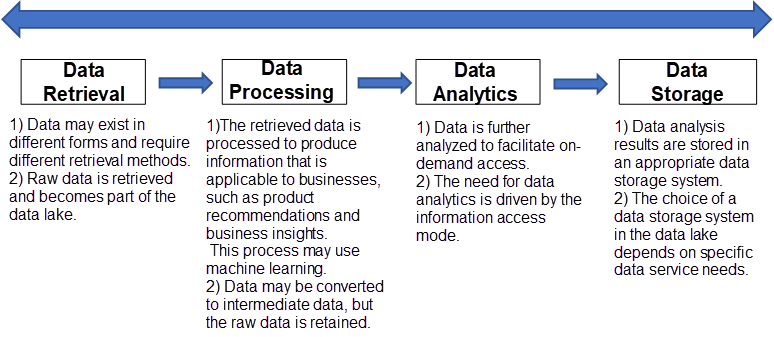

Figure 6 illustrates the overall data lifecycle in a data lake. In theory, a well-managed data lake retains raw data permanently, while constantly improving and evolving process data to meet your business needs.

Figure 6: Data Lifecycle in a Data Lake

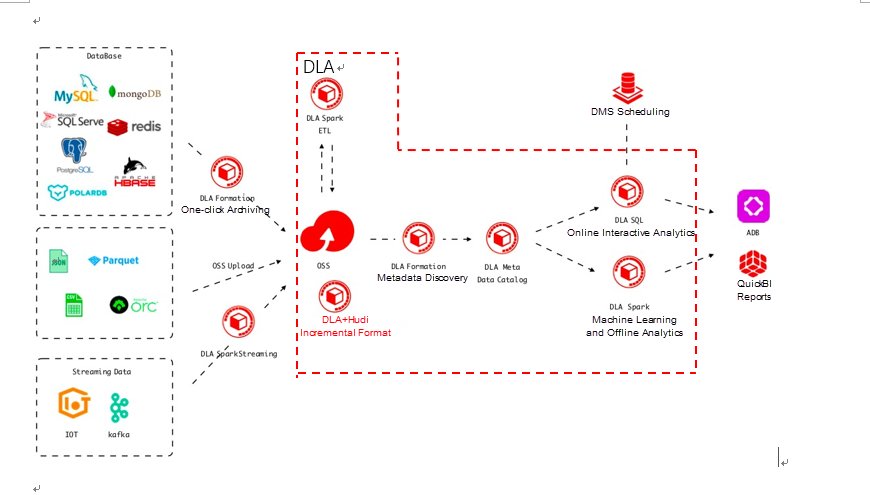

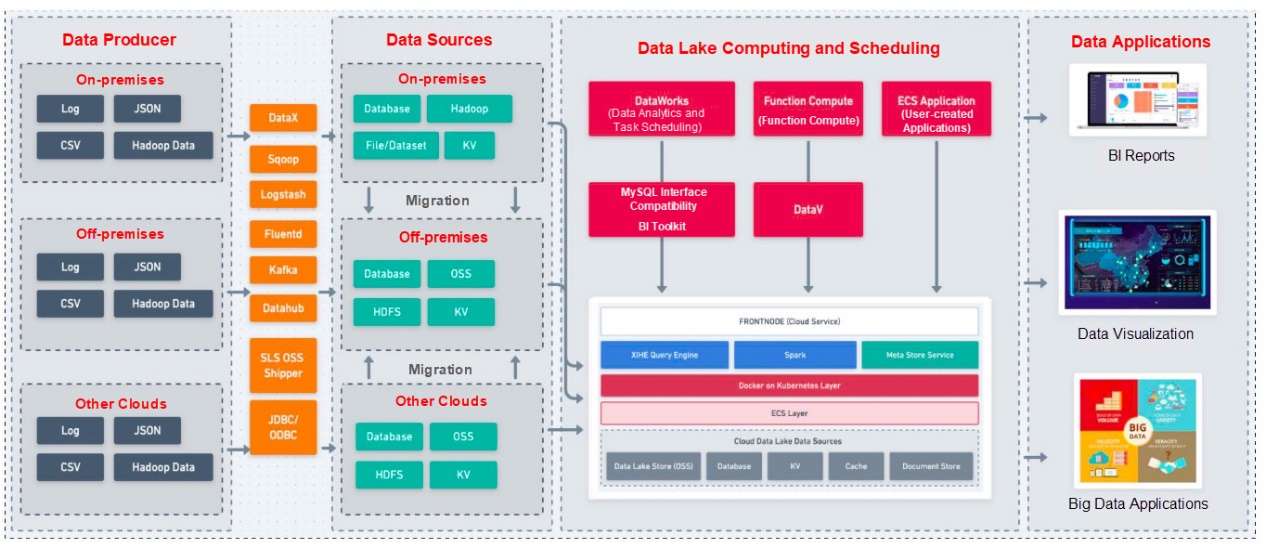

Alibaba Cloud provides a wide range of data products. I currently work in the data business unit. In this section, I will focus on how to build a data lake using the products of the data business unit. Other cloud products may also be involved. Alibaba Cloud's data lake solution is specially designed for data lake analytics and federated analytics. It is based on Alibaba Cloud's database products. Figure 12 illustrates Alibaba Cloud's data lake solution.

Figure 12: Data Lake Solution Provided by Alibaba Cloud

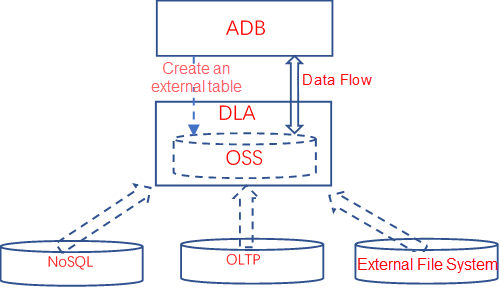

The solution uses Alibaba Cloud OSS as the data lake's centralized storage. The solution can use all Alibaba Cloud databases as data sources, including online transaction processing (OLTP), OLAP, and NoSQL databases. Alibaba Cloud's data lake solution provides the following key features:

This further refines the data application architecture of Alibaba Cloud's data lake solution.

Figure 13: Data Application Architecture of Alibaba Cloud's Data Lake Solution

Data flows from left to right. Data producers produce all types of data, including on-premises data, off-premises data, and data from other clouds, and use tools to upload the produced data to generic or standard data sources, including Alibaba Cloud OSS, HDFS, and databases. DLA implements data discovery, data access, and data migration to build a complete data lake that is adaptable to all types of data sources. DLA processes incoming data based on SQL and Spark and externally provides visual data integration and development capabilities based on DataWorks and DMS. To implement external application service capabilities, DLA provides a standard Java database connectivity (JDBC) interface that can be directly connected to all types of report tools and dashboards. Based on Alibaba Cloud's database ecosystem, including OLTP, OLAP, and NoSQL databases, DLA provides SQL-based external data processing capabilities. If your enterprise develops technology stacks based on databases, DLA allows you to implement transformations more easily and at a lower cost.

DLA integrates data lakes and data warehouses on a cloud-native basis. Traditional enterprise data warehouses are still essential for report applications in the era of big data. However, data warehouses do not support flexible data analytics and processing. Therefore, we recommend deploying a data warehouse as an upper-layer application in a data lake. The data lake is the only place to store your enterprise or organization's raw business data. It processes raw data as required by business applications to generate reusable intermediate results. DLA pushes the intermediate results to the data warehouse with a relatively fixed data schema, so you can implement business applications based on the data warehouse. DLA is deeply integrated with AnalyticDB in the following two aspects:

The combination of DLA and AnalyticDB integrates data lakes and data warehouses in a cloud-native architecture. DLA can be viewed as the near-source layer of a scalable data warehouse. Compared with a traditional data warehouse, this near-source layer provides the following advantages:

By integrating DLA and AnalyticDB, you can enjoy the processing capabilities of a big data platform and a data warehouse at the same time.

DLA enables "omnidirectional" data mobility, allowing you to access data in any location just as you would access data in a database, regardless of whether the data is on-premises or off-premises, inside or outside your organization. In addition, the data lake monitors and records inter-system data mobility so you can trace the data flow.

A data lake is more than a technical platform and can be implemented in many ways. The maturity of a data lake is primarily evaluated based on its data management capabilities and its interworking with peripheral ecosystems. Data management capabilities include capabilities related to metadata, data asset catalogs, data sources, data processing tasks, data lifecycles, data governance, and permission management.

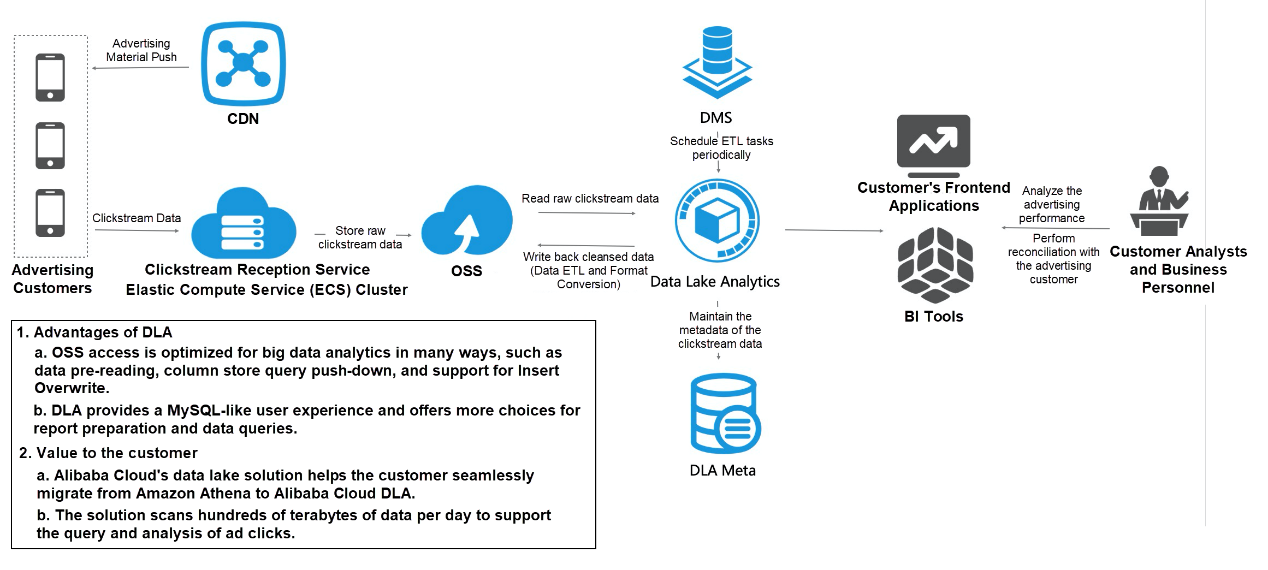

In recent years, the cost of traffic acquisition has been increasing, forcing many companies to invest heavily to attract new online customers. Increasing Internet advertising costs have made companies give up the strategy of expanding their customer bases by buying traffic. Frontend traffic optimization no longer works well. An effective way to break out of ineffective online advertising methods is to use data tools to convert more of your website visitors into paying customers and refine ad serving comprehensively. Big data analytics is essential for the conversion from advertising traffic into sales.

To provide a stronger foundation for decision support, you can collect more tracking data, including channels, ad serving times, and target audiences. Then, you can analyze the data based on the click-through rate (CTR) to develop strategies that lead to better performance and higher productivity. Data lake analytics products and solutions are widely favored by advertisers and ad publishers. They provide next-generation technologies to help collect, store, and analyze a wide variety of structured, semi-structured, and unstructured data related to ad serving.
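As a small illustration of CTR-based analysis, the following Python sketch aggregates impressions and clicks per channel and computes the CTR as clicks divided by impressions. The rows and field names are hypothetical sample data, not figures from a real campaign.

```python
# Hypothetical tracking rows, one per ad-serving report line.
ROWS = [
    {"channel": "search", "impressions": 1000, "clicks": 50},
    {"channel": "search", "impressions": 1000, "clicks": 30},
    {"channel": "social", "impressions": 500, "clicks": 5},
    {"channel": "email", "impressions": 0, "clicks": 0},
]


def ctr_by_channel(rows):
    """Aggregate per channel, then compute CTR = clicks / impressions."""
    totals = {}
    for row in rows:
        impressions, clicks = totals.get(row["channel"], (0, 0))
        totals[row["channel"]] = (
            impressions + row["impressions"],
            clicks + row["clicks"],
        )
    # Channels without impressions get a CTR of 0.0 to avoid division by zero.
    return {
        channel: (clicks / impressions if impressions else 0.0)
        for channel, (impressions, clicks) in totals.items()
    }


ctr = ctr_by_channel(ROWS)
ranked = sorted(ctr, key=ctr.get, reverse=True)
```

Aggregating before dividing matters: averaging the per-row CTRs would weight small and large report lines equally and distort the result.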

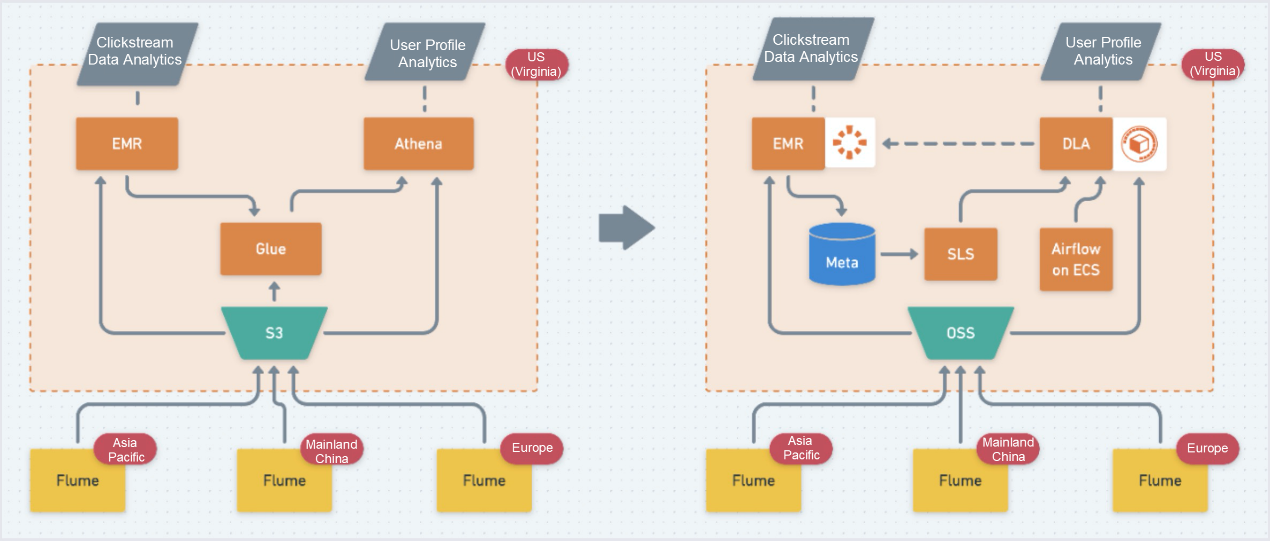

DG is a leading global provider of intelligent marketing services to enterprises looking to expand globally. Based on its advanced advertising technology, big data, and operational capabilities, DG provides customers with high-quality services for user acquisition and traffic-to-sales conversion. When it was founded, DG decided to build its IT infrastructure on a public cloud. Initially, DG chose the AWS cloud platform. It stored its advertising data in a data lake built on Amazon S3 and used Amazon Athena for interactive analytics. However, the rapid development of Internet advertising has created several challenges for the advertising industry. This has given rise to mobile advertising and tracking systems designed to solve the following problems:

Apart from the preceding three business challenges, DG was also facing rapidly increasing daily data volumes, having to scan more than 100 TB of data daily. The AWS platform no longer provided sufficient bandwidth for Amazon Athena to read data from Amazon S3, and data analytics seriously lagged. To drive down analytics costs resulting from exponential data growth, DG decided on full migration from the AWS platform to the Alibaba Cloud platform after meticulous testing and analysis. Figure 16 shows the architecture of DG's transformed advertising data lake solution.

Figure 16: DG's Transformed Advertising Data Lake Solution

After migration, we integrated DLA and OSS to provide superior analytics capabilities for DG, allowing it to better deal with traffic peaks and valleys. On the one hand, this made it easy to perform ad hoc analysis on data collected from brand customers. On the other hand, DLA provides powerful computing capabilities, allowing DG to analyze ad serving on a monthly and quarterly basis, accurately calculate the number of activities for each brand, and analyze the ad performance of each activity in terms of media, markets, channels, and data management platforms (DMPs). This approach allows an intelligent traffic platform to improve the conversion rate for brand marketing. In terms of the total cost of ownership (TCO) for ad serving and analytics, DLA provides serverless elastic services that are billed in pay-as-you-go mode, with no need to purchase fixed resources. Customers can purchase resources based on the peaks and valleys of their businesses. This meets the needs of elastic analytics and significantly lowers O&M costs and operational costs.

Figure 17: The Deployment of a Data Lake

Overall, DG was able to significantly lower its hardware costs, labor costs, and development costs after migration from AWS to Alibaba Cloud. By using DLA's serverless cloud services, DG avoided large upfront fees for servers, storage, and devices and did not have to purchase many cloud services all at once. Instead, DG can scale its infrastructure as needed. It adds servers during business peaks and reduces servers during business valleys, improving its capital utilization. The Alibaba Cloud platform also empowered DG with improved performance. DG's mobile advertising system frequently encountered exponential increases in traffic volume during its rapid business growth and introduction of multiple business lines. After DG migrated to Alibaba Cloud, Alibaba Cloud's DLA team worked with the OSS team to implement deep optimizations and transformations that significantly improved DG's analytics performance through DLA. The DLA computing engine dedicated to database analytics and the AnalyticDB shared computing engine, which ranked first in the TPC Benchmark DS (TPC-DS), improved performance by dozens of times compared with the native Presto computing engine.

A data lake is a type of big data infrastructure with an excellent total cost of ownership (TCO). For many fast-growing game companies, a popular game often results in extremely fast data growth in a short time. If this happens, it is difficult for R&D personnel to adapt their technology stacks to the amount and speed of data growth. It is also difficult to utilize data growing at such a fast rate. A data lake is a technical solution that can solve these problems.

YJ is a fast-growing game company. It plans to develop and operate games based on an in-depth analysis of user behavior data. There is a core logic behind data analytics. As the gaming industry becomes more competitive, gamers are demanding higher quality products and the lifecycles of game projects are becoming increasingly short, which directly affects projects' return on investment (ROI). Through data operations, developers can effectively extend their project lifecycles and precisely control the various business stages. In addition, traffic costs are constantly increasing. Therefore, it is increasingly important to create an economic and efficient precision data operations system to better support business development. A company relies on its technical decision makers to select an appropriate infrastructure to support its data operations system by considering the following factors:

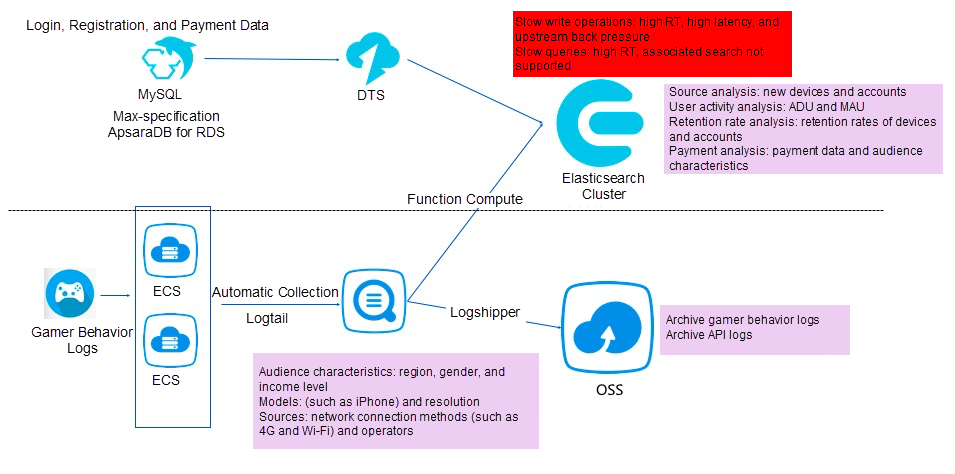

Figure 18: YJ's Data Analytics Solution Before the Transformation

Before the transformation, YJ stored all its structured data in a max-specification MySQL database. Gamer behavior data was collected by Logtail in Log Service (SLS) and then shipped to OSS and Elasticsearch. This architecture had the following problems:

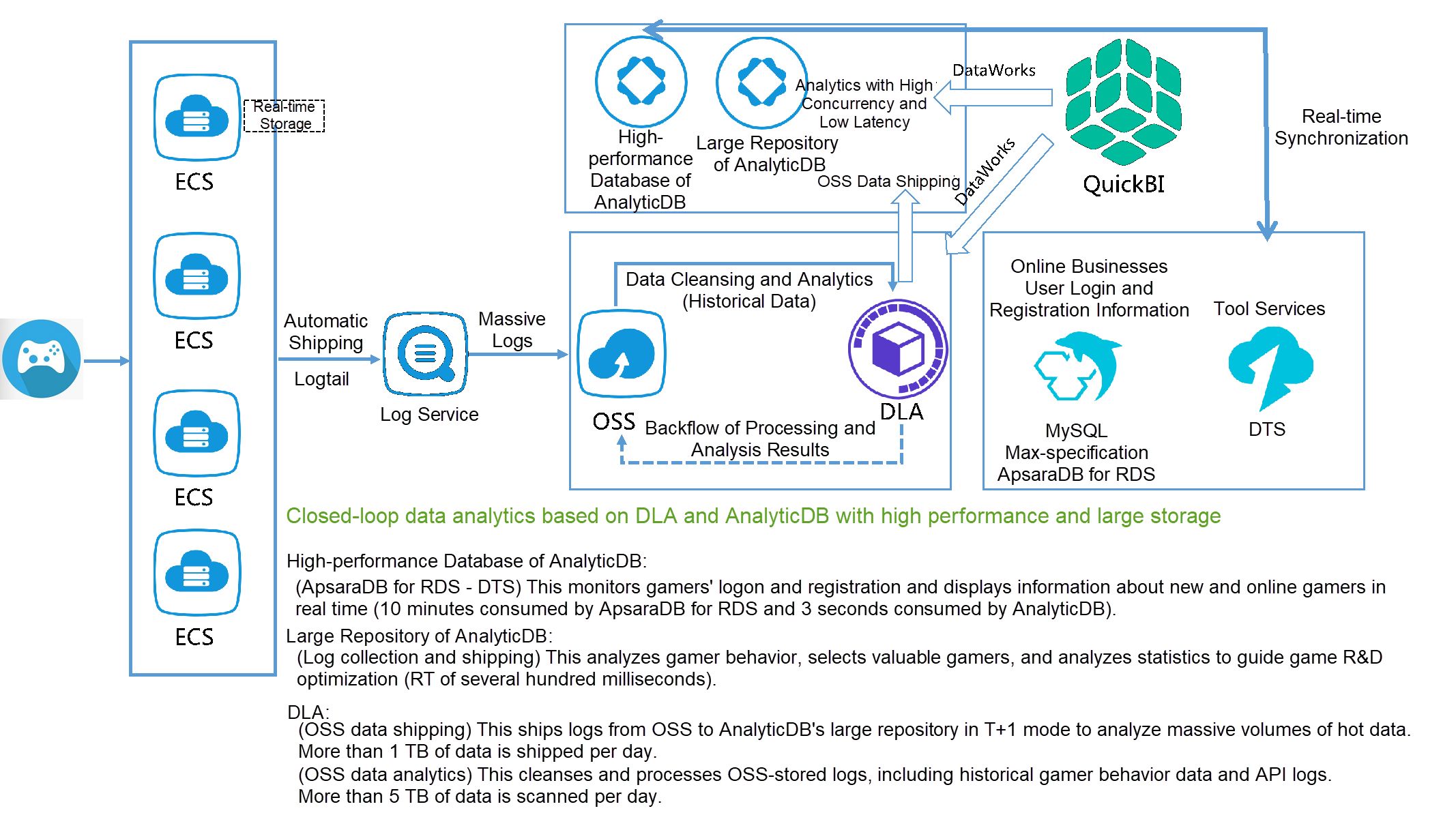

Our analysis showed that YJ's architecture was a prototype of a data lake because full data is stored in OSS. A simple way to transform the architecture was to improve YJ's ability to analyze the data in OSS. A data lake would support SQL-based data processing, allowing YJ to develop technology stacks. In short, we transformed YJ's architecture to help it build a data lake, as shown in Figure 19.

Figure 19: YJ's Data Lake Solution After the Transformation

While retaining the original data flow, the data lake solution adds DLA for secondary processing of data stored in OSS. DLA provides a standard SQL computing engine and supports access to various disparate data sources. The DLA-processed data can be directly used by businesses. The data lake solution introduces AnalyticDB, a cloud-native data warehouse, to support low-latency interactive analytics that otherwise cannot be implemented by DLA. The solution also introduces QuickBI in the frontend for visual analysis. Figure 14 illustrates a classic implementation of data lake-data warehouse integration in the gaming industry.

YM is a data intelligence service provider. It provides data analytics and operations services to medium- and small-sized merchants. Figure 20 shows the Software as a Service (SaaS) model of YM's data intelligence services.

Figure 20: SaaS Model of YM's Data Intelligence Services

The platform provides multi-client SDKs for merchants to access tracking data in diverse forms, such as webpages, apps, and mini programs. The platform also provides unified data access and analytics services in SaaS mode. Merchants can analyze this tracking data at a fine granularity through data analytics services. The analyzed data can be used for basic analytics functions, such as behavior statistics, customer profiling, customer selection, and ad serving monitoring. However, this SaaS model has the following problems:

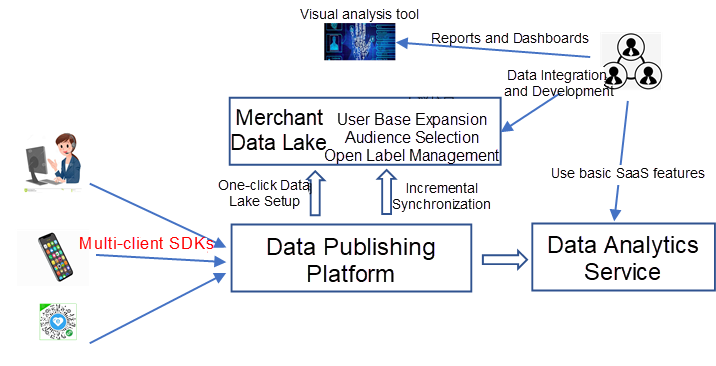

Therefore, we introduced a data lake to the SaaS model shown in Figure 20 to provide an infrastructure for data accumulation, modeling, and operations analytics. Figure 21 shows a SaaS-based data intelligence service model supported by a data lake.

Figure 21: SaaS-Based Data Intelligence Service Model Supported by a Data Lake

As shown in Figure 21, the platform allows each merchant to build its own data lake in one click. The merchant can synchronize its full tracking data and the data schema to the data lake, and also archive daily incremental data to the data lake in T+1 mode. The data lake-based service model not only provides traditional data analytics services, but also three major capabilities: data asset management, analytic modeling, and service customization.
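In T+1 mode, the data generated on day T is archived on day T+1. A minimal sketch of how an archiving job might derive its target location is shown below. The path layout, the default prefix, and the `merchant_id` parameter are hypothetical and only illustrate the date arithmetic.

```python
from datetime import date, timedelta


def t_plus_1_partition(run_date, merchant_id, prefix="lake/tracking"):
    """Return the storage prefix for the increment archived on run_date.

    The job runs on day T+1 and archives the data generated on day T.
    """
    data_date = run_date - timedelta(days=1)
    return f"{prefix}/merchant={merchant_id}/dt={data_date.isoformat()}/"


# A run on 2020-03-01 archives the data of 2020-02-29 (2020 is a leap year).
partition = t_plus_1_partition(date(2020, 3, 1), "m001")
```

Partitioning by merchant and date keeps each merchant's lake separate and lets a failed daily run be repeated for a single partition.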

A data lake is a more sophisticated big data processing infrastructure than a traditional big data platform. It is a technology that is better adapted to customers' businesses. A data lake provides more features than a big data platform, such as metadata, data asset catalogs, permission management, data lifecycle management, data integration and development, data governance, and quality management. All these features are easy to use and allow the data lake to better meet business needs. To meet your business needs at an optimal TCO, data lakes provide some basic technical features, such as the separate extension of storage and computing, a unified storage engine, and a multi-mode computing engine.

The data lake setup process is business-oriented and different from the process of building a data warehouse or data mid-end, which are also popular technologies. The difference is that a data lake is built in a more agile manner. It is operational during setup and manageable during use. To better understand the agility of the data lake setup, let's first review the process of building a data warehouse. A data warehouse can be built by using the top-down or the bottom-up approach, as the methods proposed by Bill Inmon and Ralph Kimball, respectively, are commonly known. The two approaches are briefly summarized here:

1. Bill Inmon proposed the approach commonly known as top-down, in which a data warehouse is built using the enterprise data warehouse and data mart (EDW-DM) model. ETL tools are used to transfer data from data sources of an operational or transactional system to the data warehouse's operational data store (ODS). Data in the ODS is processed based on the predefined EDW paradigm and then transferred to the EDW. An EDW is an enterprise- or organization-wide generic data schema, which is not suitable for direct data analytics performed by upper-layer applications. Therefore, business departments build DMs based on the EDW.

The EDW-DM model is easy to maintain and highly integrated, but it lacks flexibility once its structure is determined and takes a long time to deploy due to the need to adapt to your business needs. The Inmon model is suitable for building data warehouses for relatively mature businesses, such as finance.

2. Ralph Kimball proposed the approach commonly known as bottom-up, which uses the DM-DW data schema. Data from data sources of an operational or transactional system is extracted or loaded to the ODS. Data in the ODS is used to build a multidimensional subject DM by using the dimensional modeling method. All DMs are associated through consistent dimensions to form an enterprise- or organization-wide generic data warehouse.

The DM-DW data schema provides a fast warehouse setup, quick ROI, and agility. However, it is difficult to maintain as an enterprise resource and has a complex structure. In addition, DMs are difficult to integrate. The DM-DW data schema applies to small and medium-sized enterprises and Internet companies.
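How DMs can be associated through consistent dimensions, as described in item 2, can be illustrated with a small Python sketch. All table contents and names are hypothetical. Two fact tables from different data marts share one channel dimension, so their measures can be combined per channel.

```python
# Shared (consistent) dimension used by both data marts.
DIM_CHANNEL = {1: "search", 2: "social"}

# Fact table of a hypothetical sales data mart.
FACT_SALES = [
    {"channel_id": 1, "revenue": 120.0},
    {"channel_id": 2, "revenue": 80.0},
    {"channel_id": 1, "revenue": 30.0},
]

# Fact table of a hypothetical advertising data mart.
FACT_AD_SPEND = [
    {"channel_id": 1, "spend": 50.0},
    {"channel_id": 2, "spend": 40.0},
]


def total_by_channel(fact_rows, measure):
    """Sum one measure per channel name by joining to the shared dimension."""
    totals = {}
    for row in fact_rows:
        name = DIM_CHANNEL[row["channel_id"]]
        totals[name] = totals.get(name, 0.0) + row[measure]
    return totals


def combine_marts():
    """Combine measures from both marts through the shared dimension."""
    revenue = total_by_channel(FACT_SALES, "revenue")
    spend = total_by_channel(FACT_AD_SPEND, "spend")
    return {
        name: {"revenue": revenue.get(name, 0.0), "spend": spend.get(name, 0.0)}
        for name in DIM_CHANNEL.values()
    }


report = combine_marts()
```

If each data mart defined its own channel codes, this combination would first require a mapping between them, which is the integration difficulty the text refers to.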

The division of implementation into top-down and bottom-up approaches is only theoretical. Whether you build an EDW or a DM first, you need to analyze data and design a data schema before building a data warehouse or data mid-end. Figure 22 illustrates the basic process of building a data warehouse or data mid-end.

Figure 22: Basic Process of Building a Data Warehouse or Data Mid-End

For a fast-growing Internet company, the preceding steps are cumbersome and impossible to implement, especially data schema abstraction. In many cases, businesses are conducted through trial-and-error exploration without a clear direction. This makes it impossible to build a generic data schema, without which the subsequent steps become unfeasible. This is one of the reasons why many fast-growing startups find it difficult to build a data warehouse or data mid-end to meet their needs.

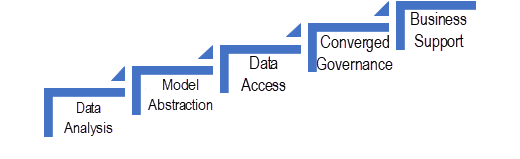

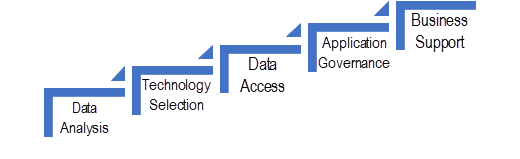

In contrast, a data lake can be built in an agile manner. We recommend building a data lake according to the following procedure.

Figure 23: Basic Data Lake Construction Process

Compared with Figure 22, which illustrates the basic process of building a data warehouse or data mid-end, Figure 23 illustrates a five-step process for building a data lake in a simpler and more feasible manner:

1. Data Analysis: Analyze basic information about the data, including the data sources, data types, data forms, data schemas, total data volume, and incremental data volumes. That is all that needs to be done in the data analysis step. A data lake stores full raw data, so it is unnecessary to perform in-depth design in advance.

2. Technology Selection: Select the technologies used to build a data lake based on the data analysis results. The industry already has many common practices for data lake technology selection. You should follow three basic principles: separation of computing and storage, elasticity, and independent scaling. We recommend using a distributed object storage system, such as Amazon S3, Alibaba Cloud OSS, or Huawei Cloud OBS, and selecting computing engines based on your batch processing needs and SQL processing capabilities. In practice, batch processing and SQL processing are essential for data processing. Stream computing engines are described later. We also recommend starting with serverless computing and storage technologies, which can evolve with your applications to meet future needs. You can build a dedicated cluster when you need an independent resource pool.

3. Data Access: Determine the data sources to be accessed and complete full data extraction and incremental data access.

4. Application Governance: Application governance is the key to a data lake. Data applications in the data lake are closely related to data governance. Clarify your needs based on data applications, and generate business-adapted data during the data ETL process. At the same time, create a data schema, metrics system, and quality standards. A data lake focuses on raw data storage and exploratory data analytics and applications. However, this does not mean the data lake does not require any data schema. Business insights and abstraction can significantly promote the development and application of data lakes. Data lake technology enables agile data processing and modeling, helping you quickly adapt to business growth and changes.
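As a minimal sketch of what "quality standards" applied during ETL might look like, the following Python snippet checks raw records against a small set of rules and separates clean rows from rejected ones. The `Rule` class, the `ORDER_RULES` list, and the order fields are all hypothetical and chosen only for illustration. A real data lake product would normally provide its own governance and quality tooling.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Rule:
    """A named quality rule applied to one field of a raw record."""
    field: str
    description: str
    check: Callable[[Any], bool]


def _is_non_negative_number(value: Any) -> bool:
    # bool is a subclass of int in Python, so exclude it explicitly.
    return isinstance(value, (int, float)) and not isinstance(value, bool) and value >= 0


# Hypothetical quality standards for an "orders" dataset.
ORDER_RULES = [
    Rule("order_id", "must be a non-empty string",
         lambda v: isinstance(v, str) and v != ""),
    Rule("amount", "must be a non-negative number", _is_non_negative_number),
    Rule("currency", "must be a 3-letter code",
         lambda v: isinstance(v, str) and len(v) == 3),
]


def validate(record: dict, rules: list) -> list:
    """Return a description of every rule the record violates."""
    violations = []
    for rule in rules:
        if rule.field not in record or not rule.check(record[rule.field]):
            violations.append(f"{rule.field}: {rule.description}")
    return violations


def split_by_quality(records, rules):
    """Partition raw records into clean rows and rejected rows with reasons."""
    clean, rejected = [], []
    for record in records:
        problems = validate(record, rules)
        if problems:
            rejected.append((record, problems))
        else:
            clean.append(record)
    return clean, rejected


raw = [
    {"order_id": "A1", "amount": 10.5, "currency": "USD"},
    {"order_id": "", "amount": -1, "currency": "USD"},
    {"order_id": "A3", "amount": 3},
]
clean, rejected = split_by_quality(raw, ORDER_RULES)
print(len(clean), "clean;", len(rejected), "rejected")
for record, problems in rejected:
    print(record, "->", problems)
```

Because the raw data stays in the lake untouched, rules like these can be added or tightened later without re-ingesting anything, which fits the agile approach described in this step.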

From the technical perspective, a data lake is different from a big data platform in that the former provides sophisticated capabilities to support full-lifecycle data management and applications. These capabilities include data management, category management, process orchestration, task scheduling, data traceability, data governance, quality management, and permission management. In terms of computing power, current mainstream data lake solutions support SQL batch processing and programmable batch processing. You can use the built-in capabilities of Spark or Flink to support machine learning. Almost all processing paradigms use the DAG-based workflow model and provide corresponding integrated development environments. Support for stream computing varies in different data lake solutions. First, let's take a look at existing stream computing models:

1. **Real-Time Stream Computing:** This model processes incoming data one record at a time or in mini-batches. It is often applied in online businesses, such as risk control, recommendations, and warnings.

2. **Quasi-Stream Computing:** This model is used to retrieve data changed after a specified time point, read data of a specific version, or read the latest data. It is often applied in exploratory data applications, such as for the analysis of daily active users, retention rates, and conversion rates.
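The three read modes of the quasi-stream model (changes after a time point, a specific version, and the latest data) can be illustrated with a toy example. The sketch below assumes a hypothetical append-only table represented as a list of commits. The `Commit` class and the `read_*` functions are invented for illustration and do not correspond to any particular product's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Commit:
    """One write to a hypothetical append-only table stored in the lake."""
    version: int      # increases by one with every commit
    timestamp: int    # commit time, for example epoch seconds
    rows: tuple       # rows added by this commit


def read_incremental(commits, since_ts):
    """Return rows from commits made strictly after since_ts."""
    return [row for c in commits if c.timestamp > since_ts for row in c.rows]


def read_version(commits, version):
    """Return the table as of a given version (all rows committed up to it)."""
    return [row for c in commits if c.version <= version for row in c.rows]


def read_latest(commits):
    """Return the table as of the most recent commit."""
    if not commits:
        return []
    return read_version(commits, max(c.version for c in commits))


log = [
    Commit(1, 100, ("u1", "u2")),
    Commit(2, 200, ("u3",)),
    Commit(3, 300, ("u4", "u5")),
]
print(read_incremental(log, since_ts=150))  # rows from commits 2 and 3
print(read_version(log, version=2))         # rows from commits 1 and 2
print(read_latest(log))                     # all rows
```

All three functions read only data that has already been committed, which is what distinguishes this model from real-time stream computing.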

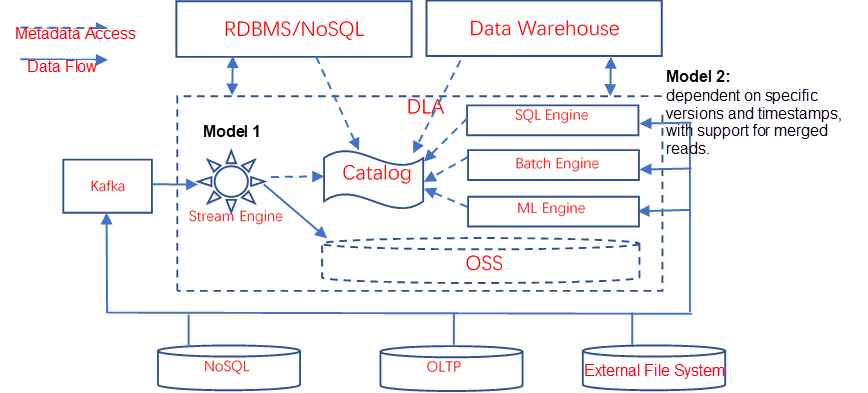

When the real-time stream computing model is processing data, the data is still in the network or memory but has not yet been stored in the data lake. The quasi-stream computing model only processes data that is already stored in the data lake. I recommend using the stream computing model illustrated in Figure 24.

Figure 24: Data Flow in a Data Lake

As shown in Figure 24, to apply the real-time stream computing model to the data lake, you can introduce Kafka-like middleware as the data forwarding infrastructure. A comprehensive data lake solution can direct the raw data flow to Kafka. A stream engine reads data from the Kafka-like component. Then, the engine writes the data processing results to OSS, a relational database management system (RDBMS), NoSQL database, or data warehouse as needed. The processed data can be accessed by applications. In a sense, you can introduce a real-time stream computing engine to the data lake based on your application needs. Note the following points when using a stream computing engine:

1. The stream engine must be able to conveniently read data from the data lake.

2. Stream engine tasks must be included in task management in the data lake.

3. Streaming tasks must be included in unified permission management.
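The data flow described above can be sketched in a few lines of Python. This is only a simulation under stated assumptions: `queue.Queue` stands in for the Kafka-like middleware, plain lists stand in for raw lake storage and for the online store that applications read, and the `run_pipeline` function, the `amount` threshold rule, and the event fields are hypothetical. Storing the raw events in the lake in parallel with forwarding them is also an assumption of this sketch.

```python
import queue


def run_pipeline(raw_events, threshold=1000):
    """Simulate raw data -> Kafka-like topic -> stream engine -> result store."""
    topic = queue.Queue()  # stand-in for a Kafka-like topic
    lake_raw = []          # stand-in for raw storage in the lake (object storage)
    alerts = []            # stand-in for an online store read by applications

    # Ingestion: keep every raw event in the lake and forward it to the topic.
    for event in raw_events:
        lake_raw.append(event)
        topic.put(event)

    # Stream engine: consume one record at a time and write results to the sink.
    while not topic.empty():
        event = topic.get()
        if event["amount"] > threshold:
            alerts.append({"user": event["user"], "reason": "large_amount"})

    return lake_raw, alerts


events = [
    {"user": "alice", "amount": 120},
    {"user": "bob", "amount": 5000},
    {"user": "carol", "amount": 1000},
]
stored, flagged = run_pipeline(events)
print(len(stored), "events stored;", flagged)
```

In a real deployment the consumer loop would be a job in a stream engine such as Flink or Spark, registered with the data lake's task management and permission system as the three points above require.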

The quasi-stream computing model is similar to batch processing. Many big data components, such as Apache Hudi, Apache Iceberg, and Delta Lake, support classic computing engines, such as Spark and Presto. For example, Apache Hudi provides special table types, Copy on Write (COW) and Merge on Read (MOR), that allow you to access snapshot data (of a specified version), incremental data, and quasi-real-time data. AWS and Tencent have integrated Apache Hudi into their EMR services, and Alibaba Cloud DLA is also planning to introduce the DLA on Apache Hudi capability.
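To make the Hudi access modes more concrete, the helper below builds the read options that Hudi's Spark datasource uses to select a query type. The option keys (`hoodie.datasource.query.type`, `hoodie.datasource.read.begin.instanttime`, `hoodie.datasource.read.end.instanttime`, and `as.of.instant`) come from the Hudi documentation, but option names can change between releases, so verify them against the version you run. The `hudi_read_options` function itself is a hypothetical convenience wrapper, not part of Hudi.

```python
def hudi_read_options(mode, begin_instant=None, end_instant=None, as_of=None):
    """Build Spark read options for one of Hudi's query types.

    mode: "snapshot", "incremental", or "read_optimized".
    """
    if mode == "snapshot":
        options = {"hoodie.datasource.query.type": "snapshot"}
        if as_of is not None:
            # Time travel: read the table as of a past commit instant.
            options["as.of.instant"] = as_of
    elif mode == "incremental":
        if begin_instant is None:
            raise ValueError("incremental reads need a begin_instant")
        options = {
            "hoodie.datasource.query.type": "incremental",
            "hoodie.datasource.read.begin.instanttime": begin_instant,
        }
        if end_instant is not None:
            options["hoodie.datasource.read.end.instanttime"] = end_instant
    elif mode == "read_optimized":
        # On MOR tables this reads only compacted base files.
        options = {"hoodie.datasource.query.type": "read_optimized"}
    else:
        raise ValueError(f"unknown mode: {mode}")
    return options


opts = hudi_read_options("incremental", begin_instant="20210101000000")
print(opts)

# With a SparkSession and the Hudi bundle on the classpath, the options
# would be applied like this (base_path is a placeholder):
#   df = spark.read.format("hudi").options(**opts).load(base_path)
```

The snapshot and incremental modes correspond to the "latest data" and "data changed after a specified time point" reads of the quasi-stream model, while `as.of.instant` covers reading a specific version.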

As mentioned above, data scientists and data analysts are the primary users of data lakes, mainly for exploratory analytics and machine learning. For these users, real-time stream computing is not essential. However, it is essential for the online businesses of many Internet companies. A data lake therefore provides not only centralized storage for an enterprise's or organization's internal data but also a scalable architecture into which stream computing capabilities can be integrated.

5. Business Support: Many data lake solutions provide standard access interfaces, such as JDBC, to external users. You can also use business intelligence (BI) report tools and dashboards to directly access the data in a data lake. In practice, we recommend pushing the data processed in a data lake to data engines that support online businesses to improve the application experience.
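The recommendation to push processed data to an engine that serves online businesses can be sketched as follows. Here an in-memory SQLite database stands in for the online serving engine, and the event records, the `dau` table, and both function names are hypothetical. In practice the target would be an RDBMS, a NoSQL database, or a data warehouse.

```python
import sqlite3
from collections import defaultdict


def daily_active_users(events):
    """Aggregate lake events into a count of distinct users per day."""
    users_by_day = defaultdict(set)
    for event in events:
        users_by_day[event["day"]].add(event["user"])
    return {day: len(users) for day, users in users_by_day.items()}


def push_to_serving(conn, dau):
    """Upsert the aggregated result into the serving database."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS dau (day TEXT PRIMARY KEY, users INTEGER NOT NULL)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO dau (day, users) VALUES (?, ?)",
        sorted(dau.items()),
    )
    conn.commit()


lake_events = [
    {"day": "2020-06-01", "user": "u1"},
    {"day": "2020-06-01", "user": "u2"},
    {"day": "2020-06-01", "user": "u1"},
    {"day": "2020-06-02", "user": "u3"},
]

conn = sqlite3.connect(":memory:")
push_to_serving(conn, daily_active_users(lake_events))
result = conn.execute("SELECT day, users FROM dau ORDER BY day").fetchall()
print(result)
```

Applications then query the small, precomputed table instead of scanning raw data in the lake, which is where the improvement in application experience comes from.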

A data lake is the infrastructure for next-generation big data analytics and processing. It provides richer functions than a big data platform. Data lake solutions are likely to evolve in the following directions in the future:

1. Separation of storage and computing, both of which are independently scalable

2. Support for multi-modal computing engines, such as SQL, batch processing, stream computing, and machine learning

3. Provision of serverless services, which are elastic and billed in pay-as-you-go mode
