We have accumulated some best practices using the promotion and application of the cloud-native data warehouse named AnalyticDB for MySQL (hereinafter referred to as AnalyticDB) in various business lines of Alibaba Group and all walks of life. This article organizes those practices for your reference and hopes to be helpful to readers. This article summarizes the best practices in the design of AnalyticDB tables, data writing, efficient queries, and some common problems.

Note:

AnalyticDB is a distributed data warehouse that can bring excellent performance experience in the real-time analysis of large amounts of data. It is expected to pay attention to the following rules when designing tables to give full play to the performance of AnalyticDB in data analysis.

DISTRIBUTED BY BROADCAST. These tables store one copy of data in each node of a cluster. Therefore, the amount of data in a dimension table should not be too large. We recommend storing no more than 20,000 rows of data in each dimension table.Note: If the dimension table is too large, it will cause the expansion of data storage space. More nodes equal greater expansion. At the same time, it will cause performance degradation during real-time writes, and the IOPS will be relatively high.

If you want to create a common table in AnalyticDB, you must use the DISTRIBUTED BY HASH(column_name,...) statement to specify distribution keys. Then, the table is partitioned by the hash value of the columns specified by column_name.

AnalyticDB allows you to use multiple fields as distribution keys.

You can specify distribution keys based on the following rules:

Note: Uneven distribution keys can lead to uneven data distribution, affecting the efficiency of writing and querying. In addition, it is prone to cause a full single-node disk and make the entire cluster locked and unavailable. In addition to special business scenarios, we preferentially consider whether data is uniform when creating a table and the issue of JOIN KEY alignment.

You need to consider creating a level-2 partition table for tables with a large amount of data to split the data further. After setting the level-2 partition, it can also bring two benefits:

1) Perform Lifecycle Management on Data: For example, after a certain number of level-2 partitions are set, expired level-2 partitions will be eliminated automatically.

2) When the query condition includes a field related to the level-2 partition, the level-2 partition can be clipped to improve the query performance.

Notes on the Level-2 Partition:

Please plan the level-2 partition keys of all tables in instances in advance. Make full use of the level-2 partition. Do not let the amount of data of each level-2 partition be too small. If you perform level-2 partition by day and the amount of data per day is very small, you can consider performing level-2 partition by month. If the amount of data in the level-2 partition is too small, there will be a lot of metadata in the database that needs to save the partition data. The metadata is stored in the memory, and too much metadata will occupy much memory space, resulting in GC or OOM in the system and high IOPS of real-time writing.

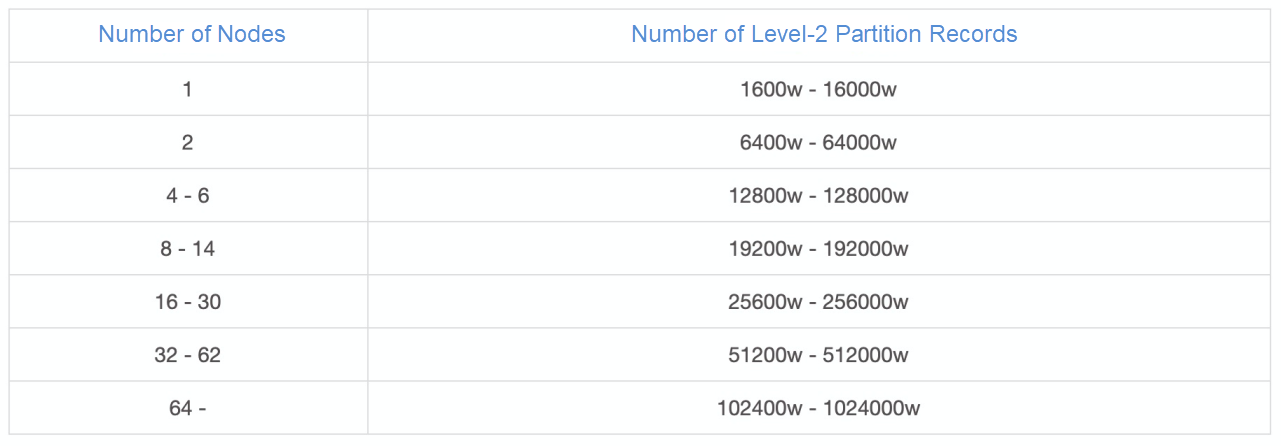

Recommendations for the amount of data of the level-2 partition:

Defining a primary key in a table can realize data deduplication (REPLACE INTO) and data update (DELETE and UPDATE). Operations (such as DELETE and UPDATE) are supported only for tables for which a primary key is specified.

You can specify a primary key based on the following rules:

Note: The field used as the primary key should not be too large, and the length of the field should not be too long. Otherwise, the write performance will be affected.

A clustered index sorts one or more fields to ensure data with the same or similar fields are stored in the same or similar places on the disk. When the fields in the clustered index are used as query conditions, the query results remain in the same places on the disk, which can reduce the I/O of the disk.

You can specify clustered indexes based on the following rule:

The fields of the filter conditions that the query must carry can be designed as clustered indexes. For example, on a data analysis platform for e-commerce sellers, they can only view their data. A seller ID can be defined as the clustered index to ensure data locality and improve data query performance.

Note: Currently, only one clustered index is supported, but one clustered index can contain multiple columns. Currently, clustered indexes are of little help to performance unless they perform point query on very scattered data.

We recommend using numeric data when possible but using fewer string data.

AnalyticDB performs far better in processing numeric data than in processing string data. Here are the reasons:

Suggestions for processing common string data:

If you do not know the data distribution characteristics of your business before creating a table, you can use optimization suggestions to optimize the data after importing the data. Please visit the table creation diagnosis page of the console for AnalyticDB to view related problems and optimization suggestions.

When writing data to a table, you can execute INSERT INTO and REPLACE INTO statements to write data in batch packaging mode to improve data write performance. Here are the considerations:

For example:

INSERT INTO test

(id, name,sex,age,login_time)

VALUES

(1,'dcs',0,23,'2018-03-02 10:00:00'),

(2,'hl',0,23,'2018-03-02 10:01:00'),

(3,'xx',0,23,'2018-03-02 10:02:00')

......;There are many ways to update data. The differences are listed below:

Note: UPDATE needs to look up the table to fill in the missing old values in the update, so it queries one more time than the REPLACE INTO. The performance is lower. Therefore, we do not recommend performing high-frequency and large-scale UPDATE operations. If the performance of the online UPDATE cannot meet the requirements, you need to consider replacing it with the REPLACE INTO and fill in the old values by the application end.

There are many ways to delete data. The differences are listed below:

DELETE FROM WHERE primary key = xxx.DELETE FROM WHERE.TRUNCATE PARTITION.TRUNCATE TABLE or DROP TABLE.INSERT OVERWRITE SELECT for batch importing and importing AnalyticDB from ODPS and OSS. There are two reasons. First, batch import is suitable for the large amount of data imports and has good performance. Second, batch import is suitable for data warehouse semantics. The old data can be checked during import, and the new data can be switched with one click after the import is completed. If the import fails, the new data will be rolled back without affecting the query of the old data.INSERT INTO SELECT for real-time import. If the amount is large, we recommend using INSERT OVERWRITE SELECT for batch import.INSERT OVERWRITE SELECT or INSERT INTO SELECT operations cannot be used for the same table at the same time. Otherwise, the data will be overwritten.The benefit of AnalyticDB is that it can achieve real-time online analysis when faced with complex queries in massive data scenarios. The query tuning of AnalyticDB is compatible with the general methods of database query optimization and provides some special optimization methods to make full use of its performance in distributed computing.

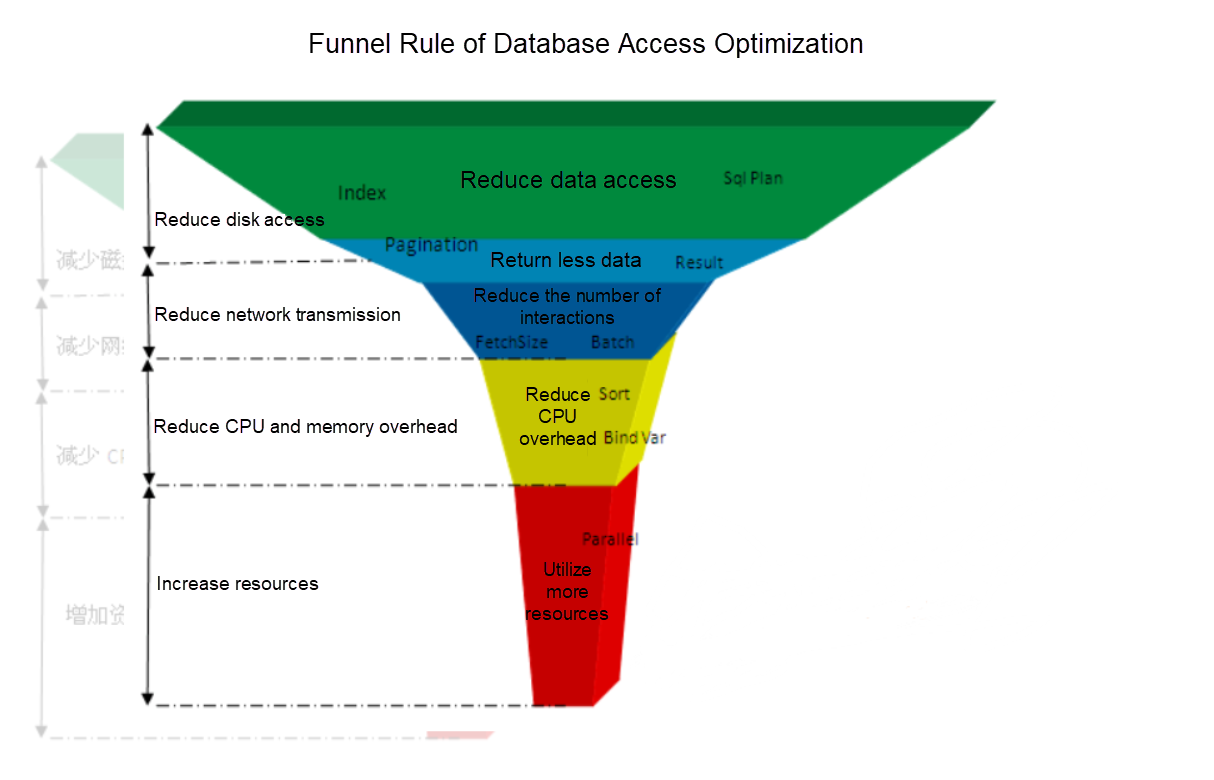

According to the article written by Ye Zhengsheng in ORACLE DBA Notes in the early years, data access optimization conforms to the following funnel rule:

Use filter conditions as much as possible to filter data in advance, thus reducing the amount of data involved in calculations. For example, filter data that can be filtered in advance in subqueries.

In OLAP databases, since the number of columns in a table is often large and it is based on column storage or column-row mixed storage, SELECT * operations will lead to more request I/O. Therefore, avoid SELECT * queries as much as possible.

We recommend using the batch import mentioned above to reduce the number of interactions.

Rational use of indexes is a very important method for database tuning, and AnalyticDB is no exception. Indexes are created for each column by default in AnalyticDB, but there are exceptions. If the value of cardinality in a column is low and the selectivity of indexes is low, the performance of index queries may be even worse. We recommend disabling the feature of automatic index creation when creating a table during this time. If the table has been created, you can use the following SQL statement to delete the index or use hints to bypass the index.

ALTER TABLE table_name DROP FULLTEXT INDEX index_name;

--Method 1: Delete the index of an enumerated column

/+no_index_columns=[t_order_content.fdelete;fdbid]/

--Method 2: Use hints to make the query bypass the indexWhen a query condition must contain a column, especially when the data in the column is stored very scattered, creating a clustered index for the column will significantly improve the performance. You can use an SQL statement similar to the following one to add a clustered index:

ALTER TABLE table_name ADD CLUSTERED INDEX index_cls (d_fdbid);Note: If there has been data in the table, the direct ADD CLUSTER INDEX will not sort the existing data. You need to rebuild the table and add the clustered column keyword when creating the table or perform a build operation on the table after adding the clustered index: build table table_name force=true.

Distributed databases give full play to the advantages of distributed computing but can increase the network overhead across nodes sometimes. In particular, when the amount of data requested is small, but the data is scattered in more nodes, the cross-network overhead is high. This article provides the following two ideas:

In terms of usage, the compatibility of AnalyticDB with MySQL is more than 99%. AnalyticDB supports multiple connection methods, including MySQL command lines, JDBC connection, Python connection, C# connection, and PHP connection.

Please refer to the official document for more detailed usage.

After considering the length of the article, we divided this article into two parts. Part 2 focuses on the Best Practices in Business Industry Online. Part 2 will help you understand the data warehouse AnalyticDB through different usage scenarios and discuss popular FAQs.

Database Autonomy Services Lead the New Era of Database Autonomous Driving

Senior Technical Experts from Alibaba Discuss Data Warehouse Tuning – Part 2

ApsaraDB - July 4, 2022

ApsaraDB - December 21, 2023

Alibaba Cloud MaxCompute - October 9, 2023

Alibaba Clouder - June 10, 2020

Alibaba Clouder - June 23, 2020

Proxima - April 30, 2021

AnalyticDB for MySQL

AnalyticDB for MySQL

AnalyticDB for MySQL is a real-time data warehousing service that can process petabytes of data with high concurrency and low latency.

Learn More Hologres

Hologres

A real-time data warehouse for serving and analytics which is compatible with PostgreSQL.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More Personalized Content Recommendation Solution

Personalized Content Recommendation Solution

Help media companies build a discovery service for their customers to find the most appropriate content.

Learn MoreMore Posts by ApsaraDB