Written jointly by:

Yan Xun, Senior Engineer of Alibaba Cloud EDAS Team

Andy Shi, Alibaba Cloud Developer

Tom Kerkhove, Head of Codit Containerized Business, Azure Architect, KEDA Maintainer, and CNCF ambassador

When you scale on Kubernetes, a few areas come to mind. However, if you are new to Kubernetes, this can be a bit overwhelming.

This article briefly explains how KEDA makes application auto scaling simple and why Alibaba Cloud Enterprise Distributed Application Service (EDAS) has fully standardized on KEDA.

When managing Kubernetes clusters and applications, you need to carefully monitor various things, such as:

To automate this, you usually set up alerts to get notified, or you use auto scaling. Kubernetes is a great platform for this because auto scaling is available out of the box.

You can easily scale a cluster with the Cluster Autoscaler component. It monitors the cluster for pods that cannot be scheduled due to insufficient resources and adds nodes accordingly, and it removes nodes that are underutilized.
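To make the scale-up side of this concrete, here is a minimal Python sketch that estimates how many new nodes a set of pending pods would need by packing their CPU requests onto identically sized nodes with a first-fit-decreasing heuristic. It is a simplification under stated assumptions (CPU requests only, a single node size, and hypothetical names such as `PendingPod` and `nodes_needed`); the real Cluster Autoscaler runs scheduler simulations against node-group templates instead.

```python
from dataclasses import dataclass


@dataclass
class PendingPod:
    name: str
    cpu_millicores: int  # CPU request of a pod stuck in Pending


def nodes_needed(pending: list[PendingPod], node_cpu_millicores: int) -> int:
    """Estimate how many new nodes fit all pending pods (first-fit decreasing, CPU only)."""
    free: list[int] = []  # remaining CPU on each hypothetical new node
    for pod in sorted(pending, key=lambda p: p.cpu_millicores, reverse=True):
        if pod.cpu_millicores > node_cpu_millicores:
            raise ValueError(f"{pod.name} does not fit on any node of this size")
        for i, remaining in enumerate(free):
            if remaining >= pod.cpu_millicores:
                free[i] -= pod.cpu_millicores  # place the pod on an already-planned node
                break
        else:
            free.append(node_cpu_millicores - pod.cpu_millicores)  # plan one more node
    return len(free)


if __name__ == "__main__":
    pods = [PendingPod("a", 1500), PendingPod("b", 1500), PendingPod("c", 1000), PendingPod("d", 500)]
    print(nodes_needed(pods, node_cpu_millicores=2000))  # 3
```

The 4,500 millicores requested above cannot fit on two 2,000-millicore nodes, so three nodes are planned.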

Because Cluster Autoscaler only kicks in once pods are already pending, there can be a delay before your workloads are up and running.

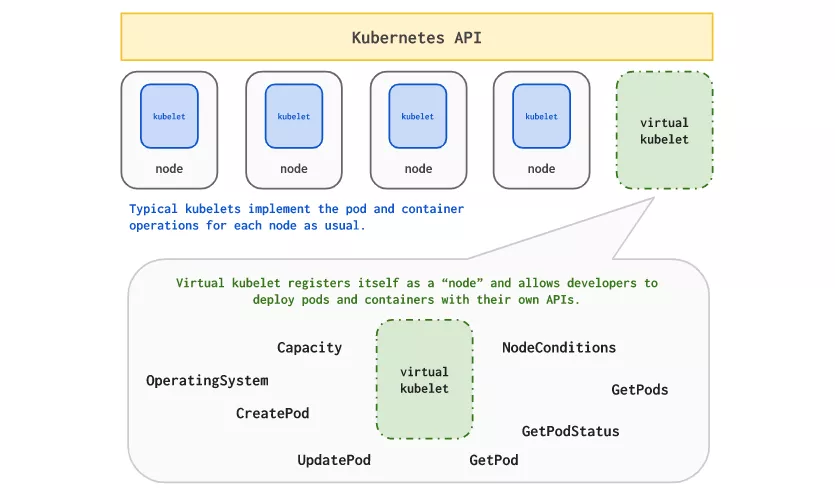

Virtual Kubelet, a CNCF sandbox project, lets you add a virtual node to a Kubernetes cluster on which pods can be scheduled.

Platform providers, including Alibaba, Azure, and HashiCorp, use it to let you overflow pending pods outside the cluster until the cluster has the required capacity, which alleviates this problem.

In addition to scaling clusters, Kubernetes allows you to scale applications easily:

All of these are a good start for scaling applications.

Although the Horizontal Pod Autoscaler (HPA) is a good start, it mainly focuses on metrics from the pods themselves, allowing you to scale based on CPU and memory. That said, you can fully configure how it auto scales, which makes it powerful.
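The core of the HPA calculation is documented by Kubernetes as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change when the ratio is within a tolerance (0.1 by default). The Python sketch below reproduces only that formula plus min/max clamping; the function name and parameters are illustrative, and the real controller also handles missing metrics, unready pods, and stabilization windows.

```python
import math


def hpa_desired_replicas(
    current_replicas: int,
    current_value: float,
    target_value: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Apply the documented HPA ratio formula for a single metric."""
    ratio = current_value / target_value
    if abs(1.0 - ratio) <= tolerance:
        desired = current_replicas  # close enough to the target: do nothing
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))  # respect the configured bounds


if __name__ == "__main__":
    print(hpa_desired_replicas(4, current_value=90, target_value=60))  # 6 (scale out)
    print(hpa_desired_replicas(4, current_value=63, target_value=60))  # 4 (within tolerance)
    print(hpa_desired_replicas(4, current_value=20, target_value=60))  # 2 (scale in)
```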

While this is ideal for some workloads, you usually want to scale based on metrics that are available elsewhere, such as Prometheus, Kafka, cloud providers, or other events.

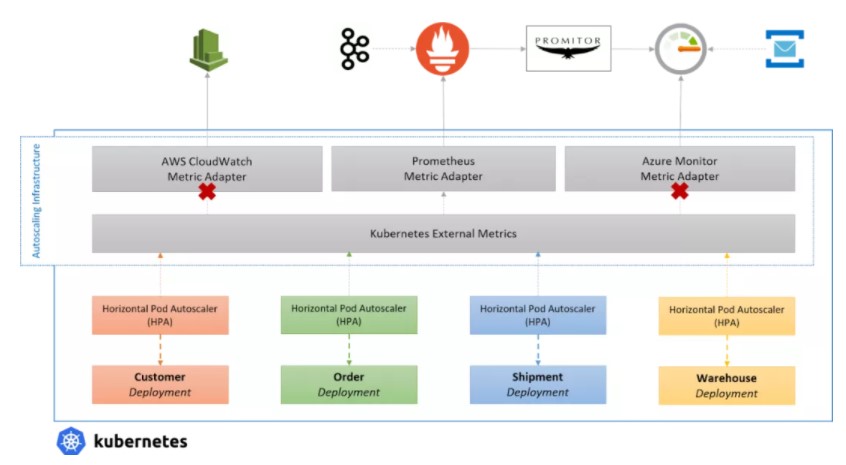

Thanks to external metrics support, you can install a metrics adapter that serves metrics from external services and makes them available through a metrics server, so you can auto scale on them.

Note: You can only run one metrics server in a cluster, which means you must choose a single source for your custom metrics.

You can use Prometheus together with tools such as Promitor to pull in metrics from other providers and scale on them from a single source. However, this requires a lot of plumbing and work to scale out.

There is an easier way: Kubernetes Event-Driven Autoscaling (KEDA)!

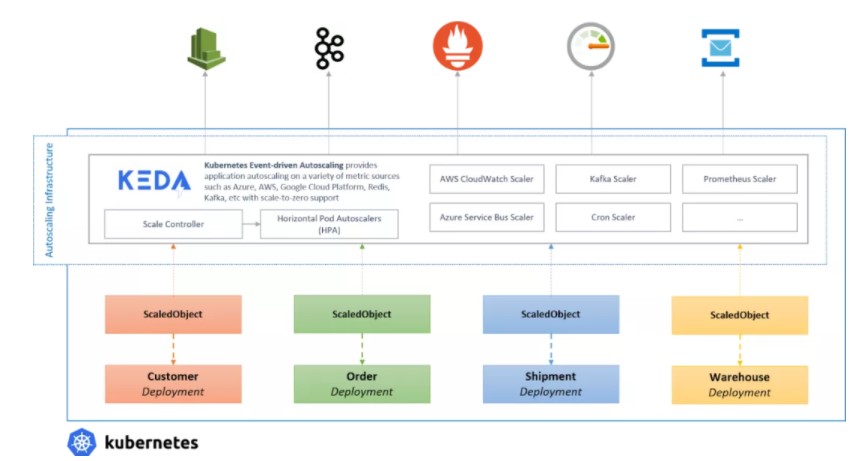

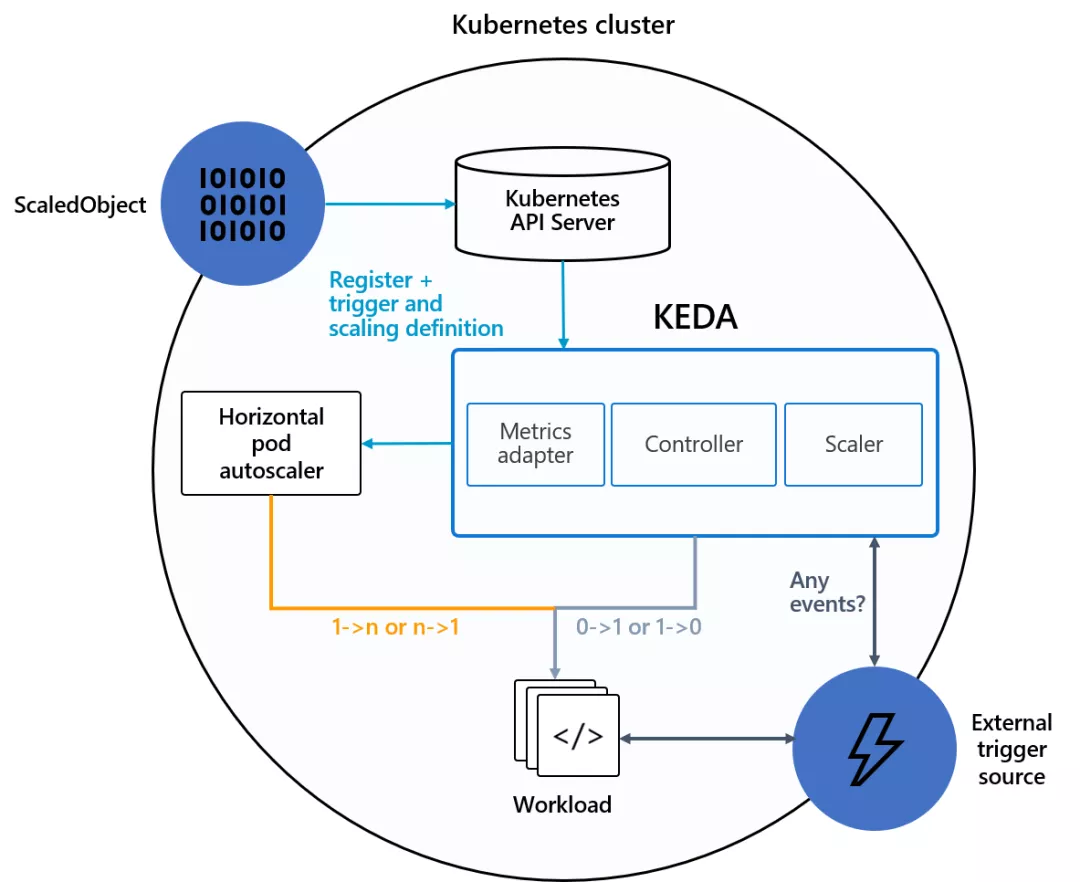

Kubernetes Event-Driven Autoscaling (KEDA) is a single-purpose, event-driven autoscaler for Kubernetes that you can easily add to a Kubernetes cluster to scale your applications.

It aims to make application auto scaling extremely simple and optimize costs by supporting scale-to-zero.
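Scale-to-zero works because KEDA handles the transition from 0 to 1 replica itself and leaves scaling between 1 and N to the HPA. When a trigger becomes active, KEDA activates the workload, and after the last activity it waits for the ScaledObject's `cooldownPeriod` (300 seconds by default) before scaling back to zero. The sketch below is a simplified, hypothetical model of that decision (`Workload` and `reconcile` are invented names), not KEDA's actual controller code.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Workload:
    replicas: int
    last_active: Optional[float] = None  # time (s) a trigger last reported activity


def reconcile(w: Workload, trigger_active: bool, now: float, cooldown_period: float = 300.0) -> int:
    """Simplified scale-to-zero decision; between 1 and N replicas the HPA is in charge."""
    if trigger_active:
        w.last_active = now
        if w.replicas == 0:
            w.replicas = 1  # activation: wake the workload, the HPA scales further
        return w.replicas
    idle_for = math.inf if w.last_active is None else now - w.last_active
    if w.replicas > 0 and idle_for >= cooldown_period:
        w.replicas = 0  # no activity for a full cooldown period: scale to zero
    return w.replicas


if __name__ == "__main__":
    w = Workload(replicas=0)
    print(reconcile(w, trigger_active=True, now=0))     # 1
    print(reconcile(w, trigger_active=False, now=120))  # 1 (still cooling down)
    print(reconcile(w, trigger_active=False, now=300))  # 0
```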

KEDA takes care of all the scaling infrastructure for you, allowing you to scale on more than 30 systems or to extend it with your own scalers.

You only need to create a ScaledObject or ScaledJob that defines what you want to scale and which triggers to use. KEDA handles everything else!
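For illustration, the Python sketch below builds a ScaledObject with a Prometheus trigger as a plain dictionary and prints it as JSON, which `kubectl apply -f` also accepts. The field names follow the KEDA 2.x `keda.sh/v1alpha1` API as we understand it; the deployment name, Prometheus address, query, and threshold are hypothetical placeholders, so check the KEDA scaler documentation for your version before relying on it.

```python
import json


def prometheus_scaled_object(
    name: str,
    deployment: str,
    server_address: str,
    query: str,
    threshold: int,
    min_replicas: int = 0,
    max_replicas: int = 20,
) -> dict:
    """Build a KEDA ScaledObject that scales a Deployment on a Prometheus query."""
    return {
        "apiVersion": "keda.sh/v1alpha1",
        "kind": "ScaledObject",
        "metadata": {"name": name},
        "spec": {
            "scaleTargetRef": {"name": deployment},  # what to scale
            "minReplicaCount": min_replicas,  # 0 enables scale-to-zero
            "maxReplicaCount": max_replicas,
            "triggers": [  # when to scale
                {
                    "type": "prometheus",
                    "metadata": {
                        "serverAddress": server_address,
                        "query": query,
                        "threshold": str(threshold),  # trigger metadata values are strings
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    obj = prometheus_scaled_object(
        name="order-service-scaler",  # hypothetical
        deployment="order-service",  # hypothetical
        server_address="http://prometheus.monitoring:9090",  # hypothetical
        query='sum(rate(http_requests_total{app="order-service"}[2m]))',  # hypothetical
        threshold=100,
    )
    print(json.dumps(obj, indent=2))
```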

You can scale anything, even a CRD from another tool you are using, as long as it implements the `/scale` subresource.

Is KEDA reinventing the wheel? No! Instead, it extends Kubernetes by using HPA under the hood. The HPA consumes external metrics served by KEDA's own metrics adapter, which replaces all the other adapters.
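Because KEDA feeds the HPA through external metrics, the replica count for a trigger is ultimately computed by the HPA. KEDA triggers use an `AverageValue` target by default, as we understand it, and for such targets the HPA divides the metric total by the per-replica target. The sketch below models that step for a hypothetical Kafka consumer-lag value; the function name is illustrative, and it leaves out KEDA's own 0-to-1 activation and HPA details such as stabilization windows.

```python
import math


def replicas_for_external_metric(
    metric_total: float,
    target_average_value: float,
    current_replicas: int,
    max_replicas: int,
    tolerance: float = 0.1,
) -> int:
    """HPA step for an external metric with an AverageValue target."""
    if current_replicas < 1:
        raise ValueError("the HPA acts on 1..N replicas; 0 -> 1 is handled by KEDA")
    usage_ratio = metric_total / (target_average_value * current_replicas)
    if abs(1.0 - usage_ratio) <= tolerance:
        return current_replicas  # per-replica load is already close to the target
    return min(max_replicas, math.ceil(metric_total / target_average_value))


if __name__ == "__main__":
    # e.g. 250 messages of consumer lag with a target of 50 messages per replica
    print(replicas_for_external_metric(250, 50, current_replicas=2, max_replicas=10))  # 5
```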

Last year, KEDA joined the CNCF as a sandbox project, and it plans to propose moving to the incubation stage later this year.

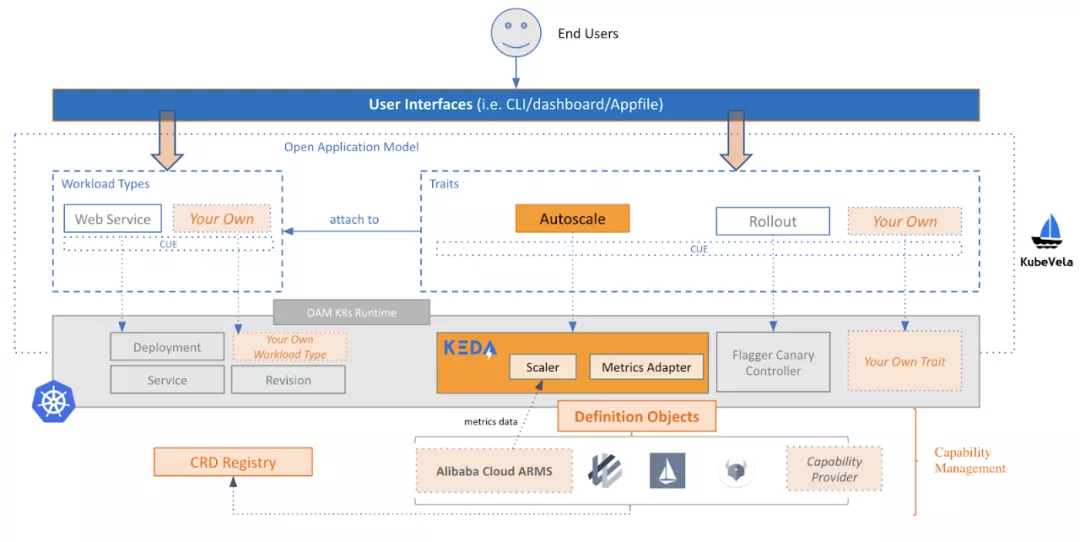

As a leading enterprise PaaS product on Alibaba Cloud, EDAS has served countless developers on the public cloud at scale for many years. Architecturally, EDAS is built together with the KubeVela project. The following figure shows the overall architecture:

In production, EDAS integrates with Alibaba Cloud Application Real-Time Monitoring Service (ARMS) to provide fine-grained monitoring and application metrics. The EDAS team has added an ARMS scaler to the KEDA project to perform auto scaling on these metrics. The team has also added some features and fixed some bugs in KEDA 1.0, including:

The EDAS team is actively contributing these fixes to upstream KEDA, and some of them have already been merged into version 2.0.

For auto scaling, EDAS initially relied on the upstream Kubernetes HPA with two metrics: CPU and memory. However, as the user base grew and demands diversified, the EDAS team discovered the following limitations of the upstream HPA:

Based on these needs, the EDAS team began planning a new version of EDAS auto scaling. Meanwhile, EDAS adopted the Open Application Model (OAM) in early 2020 and thoroughly reworked its underlying core components. OAM provides EDAS with standardized, pluggable application definitions that replace its internal Kubernetes application CRD. The extensibility of this model makes it easy for EDAS to integrate any new feature from the Kubernetes community. The EDAS team therefore set out to combine the requirements for the new EDAS auto scaling features with a standard implementation of the OAM auto scaling trait.

The EDAS team defines three metrics based on the instances:

After a detailed evaluation, the EDAS team chose the KEDA project. KEDA was open-sourced by Microsoft and Red Hat and has been donated to the CNCF. It provides several useful scalers, supports scale-to-zero out of the box, and offers fine-grained auto scaling for applications. Moreover, its concepts of scalers and a metrics adapter enable a powerful plug-in architecture and provide a unified API layer. Most importantly, KEDA focuses only on auto scaling, which makes it easy to integrate as an OAM trait. Overall, KEDA is a great fit for EDAS.
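The scaler-plus-adapter idea can be sketched as a plug-in registry: each event source implements one small interface, and a single adapter serves every trigger type through the same call. The Python below is a hypothetical illustration of that pattern only. KEDA's real scalers are written in Go with a different interface, and `Scaler`, `register`, `QueueLengthScaler`, `serve`, and the `in-memory-queue` trigger type are invented names.

```python
from abc import ABC, abstractmethod


class Scaler(ABC):
    """Hypothetical plug-in contract shared by every event source."""

    def __init__(self, metadata: dict):
        self.metadata = metadata

    @abstractmethod
    def is_active(self) -> bool:
        """Whether there is any work at all (drives 0 <-> 1 scaling)."""

    @abstractmethod
    def get_metric(self) -> float:
        """Current metric value handed to the HPA (drives 1 <-> N scaling)."""


SCALERS: dict[str, type[Scaler]] = {}


def register(trigger_type: str):
    """Class decorator that plugs a scaler into the registry under a trigger type."""
    def decorator(cls: type[Scaler]) -> type[Scaler]:
        SCALERS[trigger_type] = cls
        return cls
    return decorator


@register("in-memory-queue")
class QueueLengthScaler(Scaler):
    """Toy scaler: the metric is the number of items waiting in a list."""

    def is_active(self) -> bool:
        return len(self.metadata["queue"]) > 0

    def get_metric(self) -> float:
        return float(len(self.metadata["queue"]))


def serve(trigger: dict) -> tuple[bool, float]:
    """Unified entry point: look up the scaler by trigger type and query it."""
    scaler = SCALERS[trigger["type"]](trigger["metadata"])
    return scaler.is_active(), scaler.get_metric()


if __name__ == "__main__":
    print(serve({"type": "in-memory-queue", "metadata": {"queue": ["job-1", "job-2"]}}))  # (True, 2.0)
```

Adding a new event source, such as a metrics backend like ARMS, then only means registering one more class; the adapter's entry point stays the same.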

Alibaba is actively promoting AIOps-driven features in KEDA to bring intelligent decision-making to its auto scaling behavior. These build on the application QoS triggers and database metric triggers newly implemented in Alibaba's KEDA component, essentially enabling auto scaling decisions based on expert systems and historical data analysis. As a result, a more powerful, intelligent, and stable KEDA-based auto scaling feature is expected to be released in KEDA soon.
