By Zhang Jie (Bingyu)

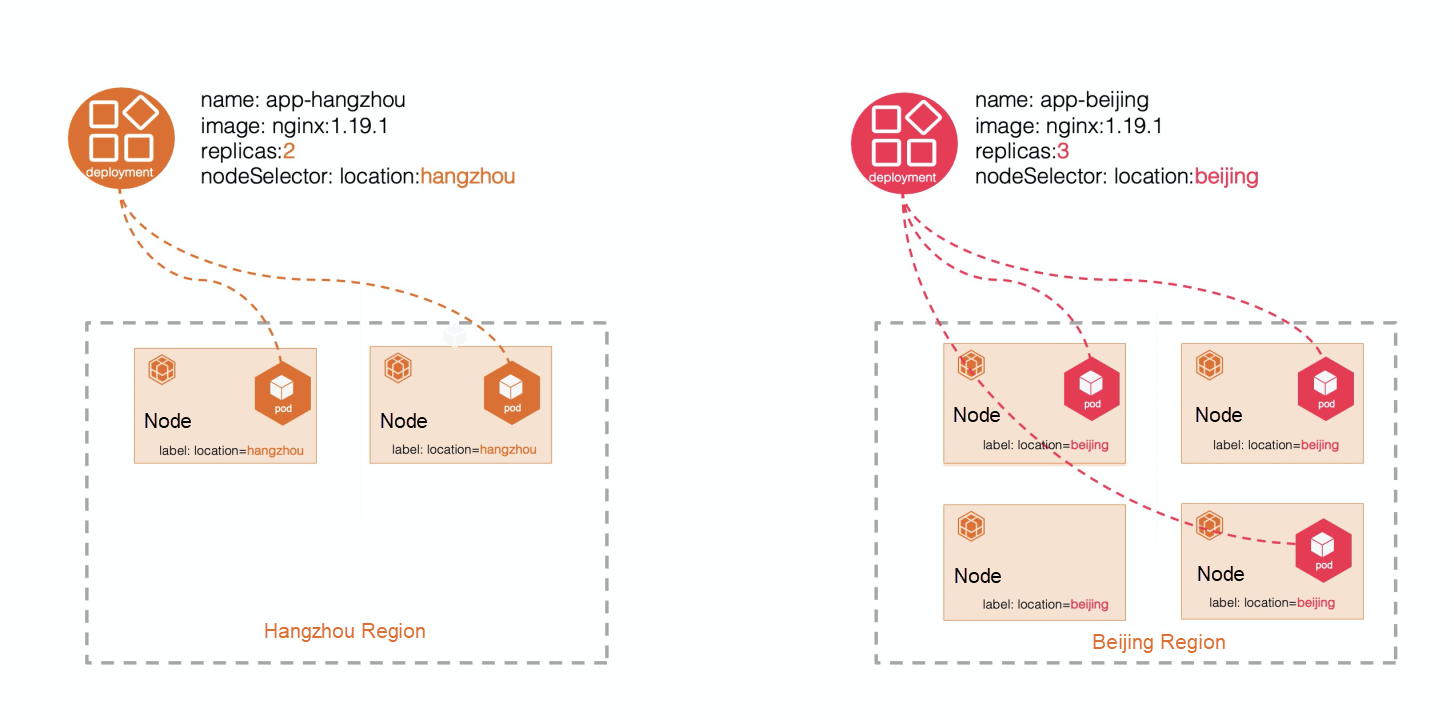

Let's begin by reviewing the concept and design of unitized deployment. In edge computing scenarios, compute nodes may be deployed across regions, and the same application may run on nodes in different regions. Take Deployment as an example. As shown in the figure below, the traditional approach first gives the compute nodes in the same region the same label and then creates multiple Deployments, each of which uses a nodeSelector to select a different label. This way, the same application can be deployed to different regions.

However, as the number of regions grows, O&M becomes more complex in the following aspects:

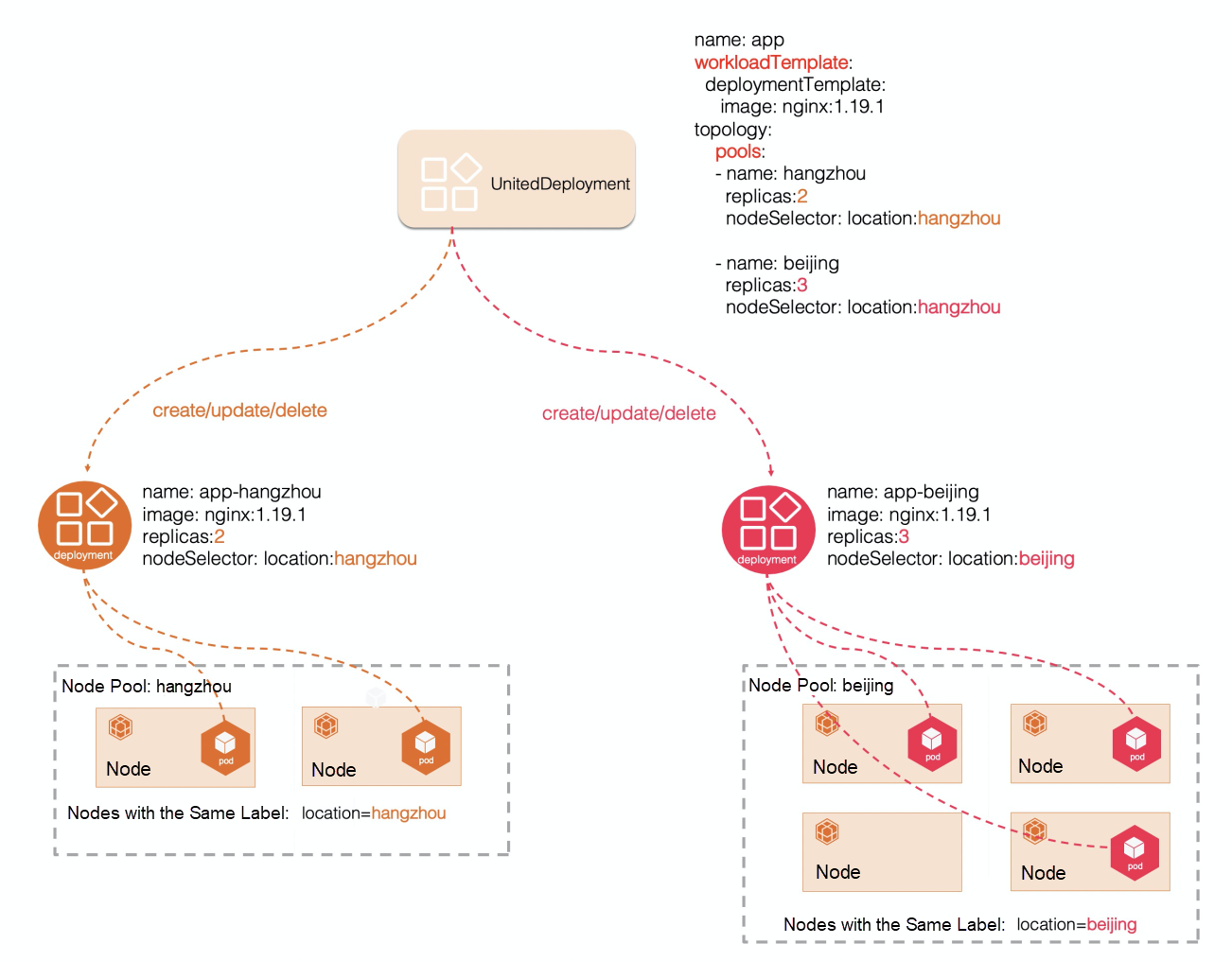

To address these requirements and problems, the yurt-app-manager component of OpenYurt provides UnitedDeployment. UnitedDeployment manages these sub-Deployments centrally through a higher-level abstraction that creates, updates, and deletes them automatically, which significantly reduces O&M complexity.

Yurt-app-manager components: https://github.com/openyurtio/yurt-app-manager

The following figure shows the specific information:

UnitedDeployment provides a higher level of abstraction over these workloads through two main configurations: WorkloadTemplate and Pools. WorkloadTemplate can be a Deployment or StatefulSet template. Pools is a list in which each entry configures one pool with its own name, replicas, and node selector (the nodeSelectorTerm field). Users select a group of machines with the node selector, so in edge scenarios a pool can be regarded as the group of machines in a certain region. With WorkloadTemplate and Pools, users can easily distribute a Deployment or StatefulSet application to different regions.
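The mapping from a pool to its group of machines can be illustrated with a minimal Python sketch of how the matchExpressions in a nodeSelectorTerm select nodes by their labels. This is a simplified model, not the Kubernetes scheduler. It handles only the In, NotIn, Exists, and DoesNotExist operators (not Gt, Lt, or matchFields), and the node names and helper function names are hypothetical.

```python
from typing import Any, Dict, List

Labels = Dict[str, str]


def requirement_matches(labels: Labels, req: Dict[str, Any]) -> bool:
    """Evaluate one matchExpressions entry against a node's labels (simplified)."""
    key, op = req["key"], req["operator"]
    values = req.get("values", [])
    if op == "In":
        return key in labels and labels[key] in values
    if op == "NotIn":
        # A node without the key also satisfies NotIn.
        return key not in labels or labels[key] not in values
    if op == "Exists":
        return key in labels
    if op == "DoesNotExist":
        return key not in labels
    raise ValueError(f"operator not modeled in this sketch: {op}")


def term_matches(labels: Labels, term: Dict[str, Any]) -> bool:
    """A nodeSelectorTerm matches when every requirement holds (logical AND)."""
    reqs = term.get("matchExpressions", [])
    # Kubernetes treats an empty node selector term as matching no nodes.
    return bool(reqs) and all(requirement_matches(labels, r) for r in reqs)


def nodes_in_pool(nodes: Dict[str, Labels], term: Dict[str, Any]) -> List[str]:
    """Sorted names of the nodes selected by a pool's nodeSelectorTerm."""
    return sorted(name for name, labels in nodes.items() if term_matches(labels, term))


# Hypothetical nodes, each labeled with the node pool it belongs to.
nodes = {
    "node-a": {"apps.openyurt.io/nodepool": "beijing"},
    "node-b": {"apps.openyurt.io/nodepool": "hangzhou"},
    "node-c": {"apps.openyurt.io/nodepool": "hangzhou"},
}
beijing_term = {
    "matchExpressions": [
        {"key": "apps.openyurt.io/nodepool", "operator": "In", "values": ["beijing"]}
    ]
}
print(nodes_in_pool(nodes, beijing_term))  # ['node-a']
```

Because every expression in a term must hold, a pool can be narrowed further by adding expressions on other labels, for example a hardware or zone label.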

The following is a specific UnitedDeployment example:

```yaml
apiVersion: apps.openyurt.io/v1alpha1
kind: UnitedDeployment
metadata:
  name: test
  namespace: default
spec:
  selector:
    matchLabels:
      app: test
  workloadTemplate:
    deploymentTemplate:
      metadata:
        labels:
          app: test
      spec:
        selector:
          matchLabels:
            app: test
        template:
          metadata:
            labels:
              app: test
          spec:
            containers:
              - image: nginx:1.18.0
                imagePullPolicy: Always
                name: nginx
  topology:
    pools:
      - name: beijing
        nodeSelectorTerm:
          matchExpressions:
            - key: apps.openyurt.io/nodepool
              operator: In
              values:
                - beijing
        replicas: 1
      - name: hangzhou
        nodeSelectorTerm:
          matchExpressions:
            - key: apps.openyurt.io/nodepool
              operator: In
              values:
                - hangzhou
        replicas: 2
```

The specific logic of the UnitedDeployment controller is listed below:

1. A UnitedDeployment CR is defined with one DeploymentTemplate and two pools:
   - Pool1 is named beijing, has 1 replica, and selects nodes labeled apps.openyurt.io/nodepool=beijing. The UnitedDeployment controller needs to create a sub-Deployment whose replicas are 1 and whose nodeSelector is apps.openyurt.io/nodepool=beijing. All other configurations are inherited from the DeploymentTemplate.
   - Pool2 is named hangzhou, has 2 replicas, and selects nodes labeled apps.openyurt.io/nodepool=hangzhou. The UnitedDeployment controller needs to create a sub-Deployment whose replicas are 2 and whose nodeSelector is apps.openyurt.io/nodepool=hangzhou. All other configurations are inherited from the DeploymentTemplate.
2. After detecting that a UnitedDeployment CR instance named test has been created, the UnitedDeployment controller first generates a Deployment template object from the configuration in the DeploymentTemplate. It then combines this template object with the configuration of Pool1 and Pool2 to generate two Deployment resource objects whose names are prefixed with test-beijing- and test-hangzhou-. Each of these Deployments has its own nodeSelector and replicas configuration.

This way, users can distribute workloads to different regions by combining the workloadTemplate and Pools, without maintaining a large number of Deployment resources.
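The two steps above can be sketched in Python. This is a simplified model, not yurt-app-manager's code. The UnitedDeployment is a plain dict that mirrors the YAML example, only the {UnitedDeployment name}-{pool name}- prefix of each generated name is modeled, and the choice to attach the pool's nodeSelectorTerm as required node affinity is an assumption about how the selector is applied. The function name render_pool_deployments is hypothetical.

```python
import copy
from typing import Any, Dict, List


def render_pool_deployments(ud: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Derive one Deployment dict per pool from a UnitedDeployment dict (sketch)."""
    ud_name = ud["metadata"]["name"]
    namespace = ud["metadata"].get("namespace", "default")
    template = ud["spec"]["workloadTemplate"]["deploymentTemplate"]
    deployments = []
    for pool in ud["spec"]["topology"]["pools"]:
        # Deep-copy the template so pools never share or mutate it.
        dep = {
            "apiVersion": "apps/v1",
            "kind": "Deployment",
            "metadata": copy.deepcopy(template.get("metadata", {})),
            "spec": copy.deepcopy(template["spec"]),
        }
        # Only the name prefix is modeled; with Kubernetes generateName,
        # the API server appends a random suffix.
        dep["metadata"]["generateName"] = f"{ud_name}-{pool['name']}-"
        dep["metadata"]["namespace"] = namespace
        # Per-pool differences: replica count and node selection.
        dep["spec"]["replicas"] = pool["replicas"]
        pod_spec = dep["spec"]["template"].setdefault("spec", {})
        # Assumption: the pool selector becomes required node affinity
        # (this sketch overwrites any affinity already in the template).
        pod_spec["affinity"] = {
            "nodeAffinity": {
                "requiredDuringSchedulingIgnoredDuringExecution": {
                    "nodeSelectorTerms": [copy.deepcopy(pool["nodeSelectorTerm"])]
                }
            }
        }
        deployments.append(dep)
    return deployments


def pool_term(name: str) -> Dict[str, Any]:
    """nodeSelectorTerm selecting nodes labeled apps.openyurt.io/nodepool=<name>."""
    return {"matchExpressions": [
        {"key": "apps.openyurt.io/nodepool", "operator": "In", "values": [name]}]}


# The UnitedDeployment "test" from the example, abridged.
ud = {
    "metadata": {"name": "test", "namespace": "default"},
    "spec": {
        "workloadTemplate": {"deploymentTemplate": {
            "metadata": {"labels": {"app": "test"}},
            "spec": {
                "selector": {"matchLabels": {"app": "test"}},
                "template": {
                    "metadata": {"labels": {"app": "test"}},
                    "spec": {"containers": [{"name": "nginx", "image": "nginx:1.18.0"}]},
                },
            },
        }},
        "topology": {"pools": [
            {"name": "beijing", "replicas": 1, "nodeSelectorTerm": pool_term("beijing")},
            {"name": "hangzhou", "replicas": 2, "nodeSelectorTerm": pool_term("hangzhou")},
        ]},
    },
}

for d in render_pool_deployments(ud):
    print(d["metadata"]["generateName"], d["spec"]["replicas"])
# test-beijing- 1
# test-hangzhou- 2
```

Deep-copying the template for every pool is the key design point: each pool-specific change (replicas, node selection) lands only on that pool's Deployment, and the shared template stays untouched.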

A single UnitedDeployment instance can automatically maintain multiple Deployments or StatefulSets, each following a unified naming convention. It also supports per-pool differences in configuration, such as name, nodeSelector, and replicas, which simplifies O&M for users in edge scenarios.

UnitedDeployment meets most users' needs. However, while promoting and implementing it and discussing it with community members, we found that its functionality falls short in some specific scenarios. For example:

These requirements mean that UnitedDeployment must let users customize the configuration of each pool, so that they can set pod-level options such as images, requests, and limits to suit the real-world conditions of each node pool. To maximize flexibility, we decided to add a patch field to each pool, whose content users can define freely. The patch content must follow the Kubernetes strategic merge patch format, which is the same format used by the familiar kubectl patch command.

The following example adds a patch to a pool:

```yaml
pools:
  - name: beijing
    nodeSelectorTerm:
      matchExpressions:
        - key: apps.openyurt.io/nodepool
          operator: In
          values:
            - beijing
    replicas: 1
    patch:
      spec:
        template:
          spec:
            containers:
              - image: nginx:1.19.3
                name: nginx
```

The content defined in the patch must follow the Kubernetes strategic merge patch format. If you have used kubectl patch, you already know how to write the patch content. For details, see the Kubernetes documentation "Update API Objects in Place Using kubectl patch".
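To show what "strategic merge" means for the patch above, here is a simplified Python model of how the beijing patch combines with the Deployment generated from the template. It is not the Kubernetes implementation, which lives in the k8s.io/apimachinery strategicpatch package and handles many more cases. In this sketch only a few lists are merged by key (a small subset of the API's patchMergeKey annotations), an explicit null deletes a field, and every other list or scalar is replaced. The names MERGE_KEYS, merge_list, and strategic_merge are hypothetical.

```python
import copy
from typing import Any, Dict, List

# Lists merged element-wise by a key field (a small subset of the
# patchMergeKey annotations in the Kubernetes API); other lists are replaced.
MERGE_KEYS = {"containers": "name", "initContainers": "name", "env": "name"}


def merge_list(base: List[Any], patch: List[Any], key: str) -> List[Any]:
    """Merge two lists of dicts, pairing elements that share the same `key`."""
    result = copy.deepcopy(base)
    positions = {item.get(key): i for i, item in enumerate(result)}
    for item in patch:
        if item.get(key) in positions:
            i = positions[item.get(key)]
            result[i] = strategic_merge(result[i], item)
        else:
            result.append(copy.deepcopy(item))  # unmatched elements are added
    return result


def strategic_merge(base: Dict[str, Any], patch: Dict[str, Any]) -> Dict[str, Any]:
    """Simplified strategic merge patch for nested dicts."""
    result = copy.deepcopy(base)
    for field, value in patch.items():
        if value is None:
            result.pop(field, None)  # an explicit null deletes the field
        elif isinstance(value, dict) and isinstance(result.get(field), dict):
            result[field] = strategic_merge(result[field], value)
        elif field in MERGE_KEYS and isinstance(value, list) and isinstance(result.get(field), list):
            result[field] = merge_list(result[field], value, MERGE_KEYS[field])
        else:
            result[field] = copy.deepcopy(value)  # scalars and other lists are replaced
    return result


# Deployment generated from the template for the beijing pool (abridged).
base = {"spec": {"replicas": 1, "template": {"spec": {"containers": [
    {"name": "nginx", "image": "nginx:1.18.0", "imagePullPolicy": "Always"}]}}}}
# The patch from the beijing pool above.
beijing_patch = {"spec": {"template": {"spec": {"containers": [
    {"image": "nginx:1.19.3", "name": "nginx"}]}}}}

merged = strategic_merge(base, beijing_patch)
print(merged["spec"]["template"]["spec"]["containers"])
# [{'name': 'nginx', 'image': 'nginx:1.19.3', 'imagePullPolicy': 'Always'}]
```

The keyed list merge is what makes the patch short. A JSON merge patch (RFC 7386) would replace the whole containers list and drop imagePullPolicy. Merging by container name lets a pool override only the image and inherit everything else from the template.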

The following demonstration shows how to use the UnitedDeployment patch.

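In this demonstration, one node is labeled apps.openyurt.io/nodepool=beijing, and the other node is labeled apps.openyurt.io/nodepool=hangzhou. The yurt-app-manager component must be installed in the cluster: https://github.com/openyurtio/yurt-app-manager

Create a UnitedDeployment instance: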

```shell
cat <<EOF | kubectl apply -f -
apiVersion: apps.openyurt.io/v1alpha1
kind: UnitedDeployment
metadata:
  name: test
  namespace: default
spec:
  selector:
    matchLabels:
      app: test
  workloadTemplate:
    deploymentTemplate:
      metadata:
        labels:
          app: test
      spec:
        selector:
          matchLabels:
            app: test
        template:
          metadata:
            labels:
              app: test
          spec:
            containers:
              - image: nginx:1.18.0
                imagePullPolicy: Always
                name: nginx
  topology:
    pools:
      - name: beijing
        nodeSelectorTerm:
          matchExpressions:
            - key: apps.openyurt.io/nodepool
              operator: In
              values:
                - beijing
        replicas: 1
      - name: hangzhou
        nodeSelectorTerm:
          matchExpressions:
            - key: apps.openyurt.io/nodepool
              operator: In
              values:
                - hangzhou
        replicas: 2
EOF
```

The workloadTemplate of this instance uses a Deployment template in which the container named nginx uses the image nginx:1.18.0. The topology defines two pools, beijing and hangzhou, with 1 and 2 replicas, respectively.

```shell
# kubectl get deployments
NAME                  READY   UP-TO-DATE   AVAILABLE   AGE
test-beijing-rk8g8    1/1     1            1           6m4s
test-hangzhou-kfhvj   2/2     2            2           6m4s
```

The yurt-app-manager controller has created two Deployments, one for the beijing pool and one for the hangzhou pool. Deployment names use the prefix {UnitedDeployment name}-{pool name}. In each Deployment, replicas and nodeSelector come from the corresponding pool, while all other configurations are inherited from the workloadTemplate.

```shell
# kubectl get pod
NAME                                   READY   STATUS    RESTARTS   AGE
test-beijing-rk8g8-5df688fbc5-ssffj    1/1     Running   0          3m36s
test-hangzhou-kfhvj-86d7c64899-2fqdj   1/1     Running   0          3m36s
test-hangzhou-kfhvj-86d7c64899-8vxqk   1/1     Running   0          3m36s
```

Note: One pod with the name prefix test-beijing and two pods with the name prefix test-hangzhou are created.
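A quick way to confirm that the pod counts match each pool's replicas is to group pod names by the {UnitedDeployment name}-{pool name}- prefix. The following Python sketch does this on the text output of kubectl get pod shown above. It assumes the default column layout, with the pod name in the first column and one header row. The function name pods_per_pool is hypothetical.

```python
from typing import Dict, List


def pods_per_pool(kubectl_output: str, ud_name: str, pools: List[str]) -> Dict[str, int]:
    """Count pods per pool by the {ud_name}-{pool}- name prefix."""
    counts = {pool: 0 for pool in pools}
    # Try longer pool names first so a pool named "a-b" is not counted as "a".
    by_length = sorted(pools, key=len, reverse=True)
    for line in kubectl_output.strip().splitlines()[1:]:  # skip the header row
        if not line.strip():
            continue
        name = line.split()[0]
        for pool in by_length:
            if name.startswith(f"{ud_name}-{pool}-"):
                counts[pool] += 1
                break
    return counts


output = """\
NAME                                   READY   STATUS    RESTARTS   AGE
test-beijing-rk8g8-5df688fbc5-ssffj    1/1     Running   0          3m36s
test-hangzhou-kfhvj-86d7c64899-2fqdj   1/1     Running   0          3m36s
test-hangzhou-kfhvj-86d7c64899-8vxqk   1/1     Running   0          3m36s
"""
print(pods_per_pool(output, "test", ["beijing", "hangzhou"]))
# {'beijing': 1, 'hangzhou': 2}
```

The counts match the replicas of the beijing and hangzhou pools, which reflects the naming convention described above.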

Run the kubectl edit ud test command to add a patch to the beijing pool. The patch changes the image of the container named nginx to nginx:1.19.3.

The beijing pool with the patch added looks like this:

```yaml
- name: beijing
  nodeSelectorTerm:
    matchExpressions:
      - key: apps.openyurt.io/nodepool
        operator: In
        values:
          - beijing
  replicas: 1
  patch:
    spec:
      template:
        spec:
          containers:
            - image: nginx:1.19.3
              name: nginx
```

Check the Deployment prefixed with test-beijing again. Its container image has changed to nginx:1.19.3.

```shell
kubectl get deployments test-beijing-rk8g8 -o yaml
```

By inheriting templates through the WorkloadTemplate and Pools of UnitedDeployment, workloads can be distributed quickly to different regions. The per-pool patch adds flexible, differentiated configuration on top of the inherited template, which meets the special needs of most customers in edge scenarios.
