By Wang Cheng & Kui Yu

Do you still remember the three critical security vulnerabilities (CVE-2021-25745, CVE-2021-25746, CVE-2021-25748) disclosed by K8s Ingress Nginx in 2022, when it announced the halt of new feature PRs to focus on fixing and improving stability?

Recently, five more security vulnerabilities, collectively known in the industry as #IngressNightmare, have been disclosed. Attackers can exploit them to take over your K8s cluster.[1]

The vulnerabilities were disclosed by the cloud security company Wiz Research and include CVE-2025-1097, CVE-2025-1098, CVE-2025-24514, and CVE-2025-1974. They allow unauthenticated remote code execution in the Kubernetes Ingress Nginx Controller, with a CVSS v3.1 base score as high as 9.8. Wiz named the set #IngressNightmare.

By exploiting these vulnerabilities, attackers can gain unauthorized access to the secrets stored in all namespaces, which allows them to take over the K8s cluster.

CVSS v3.1 (Common Vulnerability Scoring System version 3.1) is an industry-standard framework used to assess the severity of vulnerabilities in computer systems, maintained by the international organization FIRST (Forum of Incident Response and Security Teams). The maximum score is 10, with higher scores indicating more serious vulnerabilities.
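To make the 9.8 figure concrete, the following minimal sketch computes a CVSS v3.1 base score for the Scope: Unchanged case, using the metric weights and round-up rule from the CVSS v3.1 specification. The vector used in the usage note (network attack vector, low complexity, no privileges, no user interaction, high impact on confidentiality, integrity, and availability) is an assumption chosen because it yields 9.8. Check the official advisory for the exact vector of each CVE.

```python
import math

# Metric weights from the CVSS v3.1 specification (Scope: Unchanged only).
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}   # Attack Vector
AC = {"L": 0.77, "H": 0.44}                         # Attack Complexity
PR = {"N": 0.85, "L": 0.62, "H": 0.27}              # Privileges Required
UI = {"N": 0.85, "R": 0.62}                         # User Interaction
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}              # Confidentiality/Integrity/Availability


def roundup(value: float) -> float:
    """Round up to one decimal place, following the CVSS v3.1 definition."""
    scaled = round(value * 100000)
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def base_score_scope_unchanged(av, ac, pr, ui, c, i, a) -> float:
    """Base score for a vector whose Scope metric is Unchanged."""
    iss = 1 - (1 - CIA[c]) * (1 - CIA[i]) * (1 - CIA[a])
    impact = 6.42 * iss
    exploitability = 8.22 * AV[av] * AC[ac] * PR[pr] * UI[ui]
    if impact <= 0:
        return 0.0
    return roundup(min(impact + exploitability, 10))
```

Calling `base_score_scope_unchanged("N", "L", "N", "N", "H", "H", "H")` returns 9.8. Requiring low privileges instead (`pr="L"`) lowers the score to 8.8, which shows how much the "no authentication needed" property contributes to the severity.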

The core issue of IngressNightmare lies in its impact on the admission controller component of the Kubernetes Ingress Nginx Controller. It is estimated that approximately 43% of cloud environments may be affected by these vulnerabilities.

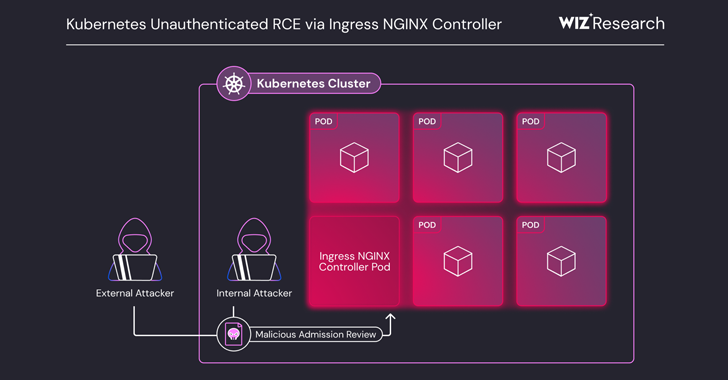

These vulnerabilities exploit the fact that admission controllers deployed in Kubernetes Pods can be accessed over the network without authentication. Specifically, attackers can send malicious Ingress objects (i.e., AdmissionReview requests) directly to the admission controller, allowing them to remotely inject arbitrary Nginx configurations and execute code on the Ingress Nginx Controller's Pods.

Wiz explained, "The high privileges and unrestricted network access of the admission controller create a critical path for privilege escalation. By exploiting this vulnerability, attackers can execute arbitrary code and access all cluster secret information across namespaces, ultimately leading to the potential takeover of the entire cluster."

The following are specific details of these vulnerabilities:

● CVE-2025-24514 – auth-url annotation injection

● CVE-2025-1097 – auth-tls-match-cn annotation injection

● CVE-2025-1098 – mirror UID injection

● CVE-2025-1974 – Nginx configuration code execution
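Because two of these issues are annotation injections, one defensive step is to audit existing Ingress objects for annotation values that look like configuration fragments. The sketch below is a heuristic, not the upstream fix: it assumes each Ingress manifest has already been loaded as a Python dict (for example from `kubectl get ingress -A -o json`), and the set of "suspicious" characters is our own assumption. It can produce false positives, for instance when a legitimate auth-tls-match-cn regular expression uses `{}` quantifiers.

```python
import re

# Annotation keys named in the advisories above.
RISKY_ANNOTATIONS = (
    "nginx.ingress.kubernetes.io/auth-url",
    "nginx.ingress.kubernetes.io/auth-tls-match-cn",
)

# Heuristic (an assumption, not the upstream patch): characters that can
# terminate an Nginx directive or open/close a block.
SUSPICIOUS = re.compile(r"[;{}\r\n]")


def suspicious_annotations(ingress: dict) -> list[str]:
    """Return the risky annotation keys whose values contain suspicious characters."""
    annotations = ingress.get("metadata", {}).get("annotations") or {}
    return [
        key
        for key in RISKY_ANNOTATIONS
        if SUSPICIOUS.search(annotations.get(key, ""))
    ]
```

A non-empty result is a prompt for manual review rather than proof of an attack. Upgrading the controller remains the actual remedy.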

In experimental attack scenarios, threat actors can exploit the client-body buffer feature of Nginx to upload malicious payloads as shared libraries to the Pod, then send AdmissionReview requests to the admission controller. The requests contain one of the aforementioned configuration command injections, causing the shared library to be loaded and thereby achieving remote code execution (RCE).

These vulnerabilities have been fixed in Ingress NGINX Controller versions 1.12.1, 1.11.5, and 1.10.7. Users are advised to update to the latest version as soon as possible and to ensure that admission webhook endpoints are not exposed externally.
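The version check can be automated. The following sketch encodes the fixed releases listed above and reports whether a given controller version already contains the fixes. It assumes plain `MAJOR.MINOR.PATCH` version strings (an optional leading `v` is accepted) and does not handle pre-release suffixes. Treating release lines newer than 1.12 as patched is also an assumption.

```python
# First fixed patch release for each minor line, as listed above.
FIXED = {(1, 10): 7, (1, 11): 5, (1, 12): 1}


def parse_version(version: str) -> tuple[int, int, int]:
    """Parse 'v1.11.5' or '1.11.5' into (1, 11, 5)."""
    major, minor, patch = version.lstrip("v").split(".")[:3]
    return int(major), int(minor), int(patch)


def is_patched(version: str) -> bool:
    """Return True if the controller version includes the fixes."""
    major, minor, patch = parse_version(version)
    if (major, minor) in FIXED:
        return patch >= FIXED[(major, minor)]
    # Assumption: lines newer than 1.12 ship the fixes, older lines do not.
    return (major, minor) > (1, 12)
```

For example, `is_patched("v1.11.4")` is `False` while `is_patched("v1.11.5")` is `True`. The running version can usually be read from the controller image tag.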

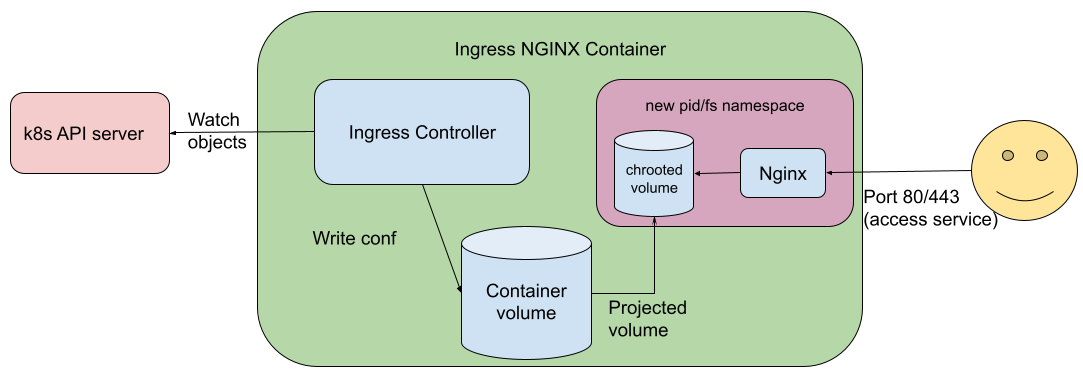

Ingress Nginx Container Architecture (Image from kubernetes.io)

Frequent security vulnerabilities in Ingress Nginx stem from its insecure architectural design: placing the control plane Ingress Controller component (a Go program) and the data plane Nginx component in the same container. Although the community has isolated the Nginx service as a container within the controller container, thereby reducing some security risks to a degree, it does not eliminate them completely! (The control plane and data plane still reside within a single large container.)

The control plane acts as the Admin, managing a great deal of sensitive information, including authentication credentials for communication with the K8s API Server, sensitive data in the Nginx configuration files, and access permissions for internal cluster services. Sharing a container between the data plane and the control plane gives attackers an opportunity to obtain this sensitive information through the data plane.

Taking the CVE-2025-1974 vulnerability as an example, its root cause lies in the input validation flaws when the Ingress Nginx Controller's admission controller processes AdmissionReview requests, specifically manifested in the following two key points:

● Malicious command injection: Attackers can construct special AdmissionReview requests to inject the ssl_engine directive into the Nginx configuration. This vulnerability exploits logical flaws in the Nginx configuration generation process, allowing attackers to bypass security restrictions and embed malicious commands into the configuration file.

● Abuse of the dynamic library loading mechanism: By combining with Nginx's client-body buffer feature, attackers can inject malicious payloads (such as shared libraries) into the controller's runtime environment. When the controller executes the nginx -t command to test the configuration, it loads the malicious library specified by the attacker, thus achieving remote code execution (RCE).
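The general class of flaw behind the first point, building a configuration file by pasting untrusted values into a template, can be illustrated with a toy example. The template, the helper names, and the allow-list below are all hypothetical and much simpler than the controller's real Go templates. The injected directive is deliberately harmless.

```python
import re

# Toy template: a single directive with a user-controlled value in quotes.
TEMPLATE = 'set $target "{value}";'

# Hypothetical allow-list: only characters expected in a plain URL or host.
SAFE_VALUE = re.compile(r"[A-Za-z0-9._:/\-]+")


def render_naive(value: str) -> str:
    """Paste the value into the template without any validation."""
    return TEMPLATE.format(value=value)


def render_checked(value: str) -> str:
    """Reject values that could close the quote or end the directive."""
    if not SAFE_VALUE.fullmatch(value):
        raise ValueError(f"unsafe value for configuration template: {value!r}")
    return TEMPLATE.format(value=value)


if __name__ == "__main__":
    payload = 'x"; return 403; #'
    # The quote and semicolon end the intended directive early, so the
    # rendered line now contains a second, attacker-chosen directive.
    print(render_naive(payload))
```

With the naive renderer, one intended directive becomes two, and the trailing `#` comments out the leftover quote. The checked renderer raises `ValueError` for the same input. An allow-list of this kind is only one possible mitigation and is shown here as an assumption, not as the fix adopted upstream.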

Moreover, the severity of the vulnerability is also attributed to the high privilege and network exposure of the admission controller:

● The admission controller typically has high permissions within the cluster, and if its network is exposed, attackers could exploit this vulnerability to move laterally within the cluster to access secrets in all namespaces or execute arbitrary code.

● This vulnerability can be exploited without authentication, further lowering the entry barrier for attackers.
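One way to reduce the network exposure described above is a NetworkPolicy that only lets the API server reach the admission webhook port. The sketch below builds such a manifest as a Python dict and prints it as JSON, which `kubectl apply -f -` accepts. Several things are assumptions to verify against your own cluster: the `ingress-nginx` namespace, the pod labels, webhook port 8443, and the API server CIDR, which is a placeholder. Whether API server traffic matches an `ipBlock` rule depends on the CNI plugin and cluster topology. The policy also blocks any other in-cluster access to ports not listed, such as metrics scraping.

```python
import json


def admission_webhook_policy(api_server_cidr: str, namespace: str = "ingress-nginx") -> dict:
    """Build a NetworkPolicy limiting webhook access to the given CIDR."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "restrict-admission-webhook", "namespace": namespace},
        "spec": {
            # Assumed labels of the controller Pods; adjust to your deployment.
            "podSelector": {
                "matchLabels": {
                    "app.kubernetes.io/name": "ingress-nginx",
                    "app.kubernetes.io/component": "controller",
                }
            },
            "policyTypes": ["Ingress"],
            "ingress": [
                # Keep regular HTTP/HTTPS traffic open to all sources.
                {"ports": [{"protocol": "TCP", "port": 80},
                           {"protocol": "TCP", "port": 443}]},
                # Allow the webhook port only from the API server address range.
                {"from": [{"ipBlock": {"cidr": api_server_cidr}}],
                 "ports": [{"protocol": "TCP", "port": 8443}]},
            ],
        },
    }


if __name__ == "__main__":
    # 10.0.0.10/32 is a placeholder, not a real API server address.
    print(json.dumps(admission_webhook_policy("10.0.0.10/32"), indent=2))
```

Test a policy like this in a staging cluster first, since a wrong CIDR makes the API server unable to call the webhook and can block Ingress changes.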

In summary, this vulnerability is a combination of configuration validation flaws and dynamic library loading mechanism flaws, ultimately allowing attackers to take full control of the cluster by injecting malicious commands and libraries.

As Nginx Ingress handles a significant amount of ingress traffic for the cluster, high stability is required. Typically, we ensure this through a series of complex configurations and operational actions, such as:[2]

● Independently scheduling its Pods to ensure stability, such as setting taints on nodes and configuring tolerations in the Ingress Controller’s Pods to allow exclusive access to node resources;

● To enhance the reliability of the Ingress gateway, the number of replicas and resource allocation for Ingress should be set based on actual business load;

● For elasticity during peak periods at the gateway, HPA (Horizontal Pod Autoscaler) should be used to support horizontal scaling of the gateway Pods;

● The Nginx Ingress effectively provides external access capabilities via load balancers, necessitating considerations about whether the load balancing bandwidth meets peak demand.
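For the HPA item in the list above, it helps to know how the autoscaler picks a replica count. The sketch below follows the formula in the Kubernetes documentation, `desired = ceil(current * currentMetric / targetMetric)`, with the default 10% tolerance. The function name and the min/max defaults are illustrative assumptions, and real HPA behavior also involves stabilization windows and readiness handling that are omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_utilization: float,
    target_utilization: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Approximate the replica count an HPA would request for one metric."""
    ratio = current_utilization / target_utilization
    # Within the tolerance band the HPA leaves the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For instance, 4 gateway Pods at 90% average CPU with a 60% target give `desired_replicas(4, 90, 60) == 6`. This is why `maxReplicas` and node capacity must be sized for the peak rather than for the average load.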

Since the Nginx reverse proxy gateway is deployed within the K8s cluster, its performance is directly affected by Pod resource allocation and the host machine's performance. Moreover, if other business Pods exist on the node where the Nginx Ingress Controller Pod is located, resource preemption issues may arise when CPU load on the containers is high, leading to stability risks.

K8s provides livenessProbe and readinessProbe for health checks on Pods, and the official Nginx Ingress Controller's Deployment template also uses this mechanism for gateway health checks.

The readiness and liveness checks use the /healthz endpoint provided by the control plane Manager listening on port 10254. Since both the data plane and control plane of Nginx Ingress are in the same container, high load on the gateway during peak business times can easily cause the control plane's health check endpoint to time out. Under the livenessProbe mechanism, this can cause the Nginx Ingress gateway to restart continuously, leading to instability and loss of traffic.
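The restart behavior can be reasoned about with a small simulation. The sketch below models a livenessProbe as a sequence of observed response times: a probe fails when the response exceeds `timeoutSeconds`, and the container is restarted after `failureThreshold` consecutive failures. The defaults are the Kubernetes defaults (1 second and 3 failures). The official Deployment template may set different values, so treat the numbers as assumptions.

```python
from typing import Iterable, Optional


def probes_until_restart(
    latencies_s: Iterable[float],
    timeout_s: float = 1.0,
    failure_threshold: int = 3,
) -> Optional[int]:
    """Return the 1-based index of the probe that triggers a restart, or None."""
    consecutive_failures = 0
    for index, latency in enumerate(latencies_s, start=1):
        if latency > timeout_s:
            consecutive_failures += 1
            if consecutive_failures >= failure_threshold:
                return index
        else:
            # Any successful probe resets the failure counter.
            consecutive_failures = 0
    return None
```

With response times of `[0.2, 1.5, 0.3, 1.2, 2.0, 3.1]` seconds, the restart is triggered by the sixth probe. Under sustained load every probe is slow, so the gateway is restarted after only `failureThreshold` probe periods, and the restarted Pod then faces the same load.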

Furthermore, the control plane Manager is responsible for collecting Prometheus monitoring metrics. During peak business periods, the control plane may not secure enough CPU, leading to OOM (Out of Memory) situations that result in container termination.

Although the two issues mentioned above have been closed in the community, the resource preemption problem caused by the absence of separation between the control plane and data plane remains challenging to prevent.

Updating the Nginx gateway routing rules via Nginx Ingress directly modifies the domain and path in the nginx.conf configuration file, necessitating an update to the Nginx configuration and a reload for it to take effect.

When applications hold long-lived connections, such as WebSocket connections, the reload operation causes noticeable disconnections of business connections after a period of time.
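The "after a period of time" part follows from how Nginx reloads: new worker processes take over new connections, while old workers keep serving existing ones and are forced to exit once the `worker_shutdown_timeout` directive's limit is reached, if one is set. The sketch below estimates which connections would be cut. The 240-second value and the function name are illustrative assumptions, so check the timeout configured in your own deployment.

```python
from typing import Sequence


def dropped_by_reload(
    remaining_lifetimes_s: Sequence[float],
    worker_shutdown_timeout_s: float = 240.0,
) -> list[int]:
    """Return the indexes of connections an old worker would cut off.

    Each entry is how long the connection would still have lasted,
    measured from the moment of the reload.
    """
    return [
        index
        for index, remaining in enumerate(remaining_lifetimes_s)
        if remaining > worker_shutdown_timeout_s
    ]
```

For example, with connections that would last another 5, 3600, 120, and 900 seconds, the second and fourth (indexes 1 and 3) are dropped. Short HTTP requests finish long before the timeout, while WebSocket sessions rarely do.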

As seen above, the Nginx Ingress gateway in K8s clusters has shortcomings such as high operational difficulty, resource competition caused by the lack of separation between the data plane and control plane, and detrimental effects on long connections during process reloads. If we build our own Nginx Controller instead, further details need to be taken into account in K8s:

● Unstable Backend IPs: Pod IP addresses change frequently due to application restarts, migrations, and new version releases. Unstable backend IPs make configuration difficult to manage.

● Frequently Updated Configuration Files: Each change to the backend application requires manual maintenance of the Nginx configuration. When building a highly available multi-node Nginx service, manual efforts are needed to ensure the accuracy and consistency of configurations across multiple nodes.

● Configuration Persistence: Due to the instability of Pods, when Nginx services are deployed as Pods, any changes within Pods will be lost during destruction and recreation. Thus, configuration needs to be persistently saved and mounted to multiple Nginx Pods.

● Monitoring Panel Integration: Operational personnel must manually install Nginx monitoring modules and integrate them with external monitoring systems.

● Access Log Persistence: Persistent data disks need to be attached to Nginx services to save access logs.

● Higress: The kernel is based on Istio and Envoy and adopts a separated architecture for the control plane and data plane. Configuration is delivered through xDS, routing policies take effect via RDS/ECDS without affecting long connections, and plugins can be hot-updated through WASM. This effectively avoids the security and stability issues of Ingress Nginx mentioned in this article.

● MSE Ingress: The commercial version of Higress, offering public cloud services, with advantages in stability, performance, usability among cloud products, and observability.

Next, we’ll take MSE Ingress as an example to conduct a comprehensive comparison between Nginx Ingress and MSE Ingress.

MSE Ingress consists of the MSE Ingress Controller and MSE Cloud Native Gateway, with the latter employing a separated architecture.

MSE Cloud Native Gateway: The MSE Cloud Native Gateway is created by the MSE Ingress Controller based on user-defined MseIngressConfig resources and includes the control plane and data plane.

The MSE Ingress Controller is responsible for monitoring the MseIngressConfig resources in the cluster and coordinating MSE Cloud Native Gateway instances to enforce the traffic management rules for Ingress resource descriptions.

Unlike the Nginx Ingress Controller, the MSE Ingress Controller manages the MSE Cloud Native Gateway instances and configurations, and MSE Ingress Controller Pods do not directly handle user request traffic. Routing and forwarding of user traffic are implemented by the MSE Cloud Native Gateway instances.

| Comparison Items | Nginx Ingress | MSE Ingress |
| --- | --- | --- |
| Product Positioning | ● Provides seven-layer traffic processing capabilities and rich advanced routing features. ● Own components that can be highly customized according to requirements. | ● Combines traditional traffic gateways, microservice gateways, and security gateways into one, constructing a highly integrated, high-performance, easily scalable, and hot-update capable cloud-native gateway through hardware acceleration, WAF local protection, and plugin marketplace functionalities. ● Provides seven-layer traffic processing capabilities and rich advanced routing functions. Supports various service discovery modes and multiple service gray release strategies, including canary releases, A/B testing, blue-green deployments, and custom traffic proportion distribution. ● Specifically designed for application-layer load scenarios, deeply integrated with containers, and directly connects backend Pod IPs when forwarding requests. |
| Product Architecture | Based on Nginx + Lua plugin extension. | ● Based on the open-source Higress project, using Istiod for the control plane and Envoy for the data plane. ● User-exclusive instances. |
| Basic Routing | ● Routing based on content and source IP. ● Supports HTTP header rewriting, redirection, rewriting, rate limiting, cross-origin requests, session persistence, etc. ● Supports request direction forwarding rules and response direction forwarding rules, where response direction forwarding rules can be implemented using extended Snippet configuration. ● Forwarding rules are matched based on the longest path; in case of multiple path matches, the longest forwarding path has the highest priority. | ● Content-based routing. ● Supports HTTP header rewriting, redirection, rewriting, rate limiting, cross-domain issues, timeouts, and retries. ● Supports standard load balancing modes such as round-robin, random, minimum request count, consistent hashing, and warming preheating (gradually increasing traffic to a specific backend machine during a designated time window). ● Supports thousands of routing rules. |
| Supported Protocols | ● Supports HTTP and HTTPS protocols. ● Supports WebSocket, WSS, and gRPC protocols. | ● Supports HTTP and HTTPS protocols. ● Supports HTTP 3.0, WebSocket, and gRPC protocols. ● Supports HTTP/HTTPS to Dubbo protocol. |
| Configuration Changes | ● Non-backend endpoint changes require a reload process, impacting long connections. ● Endpoint changes use Lua for hot updates. ● Lua plugin changes require a reload process. | ● Supports hot updates of configurations, certificates, and plugins. ● Utilizes the List-Watch mechanism to realize real-time effectiveness for configuration changes. |
| Authentication and Authorization | ● Supports Basic Auth authentication method. ● Supports OAuth protocol. | ● Supports Basic Auth, OAuth, JWT, and OIDC authentication. ● Integrates with Aliyun IDaaS. ● Supports custom authentication. |
| Performance | ● Performance relies on manual tuning, requiring system parameter tuning and Nginx parameter tuning. ● Requires reasonable configuration of replicas and resource limits. | ● Compared to the open-source Nginx Ingress, TPS is about 90% higher when CPU usage is between 30% and 40%. ● Performance improves HTTPS by about 80% when hardware acceleration is enabled. |
| Observability | ● Supports log collection through Access Log. ● Supports monitoring and alert configuration through Prometheus. | ● Supports log collection through Access Log (integrates SLS and Aliyun Prometheus). ● Supports configuration of monitoring and alerting via Aliyun Prometheus. ● Supports Tracing (integrates TracingAnalysis and SkyWalking). |
| Operational Capabilities | ● Components must be maintained independently. ● Can scale in and out through HPA configuration. ● Requires proactive specification tuning. | Fully managed gateway, no maintenance required. |
| Security | ● Supports HTTPS protocol. ● Supports black and white list functions. | ● Supports end-to-end HTTPS, SNI multi-certificate (integrates SSL), and configurable TLS versions. ● Supports WAF protection at the routing level (connecting with Aliyun Web Firewall). ● Supports routing-level black and white list functionality. |
| Service Governance | ● Service discovery supports K8s. ● Service gray release supports canary releases and blue-green releases. ● Service high availability supports rate limiting. | ● Service discovery supports K8s, Nacos, ZooKeeper, EDAS, SAE, DNS, and static IPs. ● Supports canary releases with more than 2 versions, tag routing, and can achieve full-link gray releases when combined with MSE service governance. ● Built-in integration of Sentinel from MSE service governance, supporting rate limiting, circuit breaking, and degradation. ● Service testing supports service mock. |
| Extensibility | Uses Lua scripts. | ● Uses Wasm plugins, allowing for multi-language development. ● Uses Lua scripts. |
| Cloud-Native Integration | Components must be maintained independently, used in conjunction with Aliyun ACK or ACK Serverless container services. | User-side components, used in conjunction with Aliyun ACK or ACK Serverless container services, and supports seamless conversion of Nginx Ingress core annotations. |

[1] https://thehackernews.com/2025/03/critical-ingress-nginx-controller.html

[2] https://mp.weixin.qq.com/s/rXND432WjsQCErESNjcu5g

[3] https://mp.weixin.qq.com/s/WCWVHUo3UxcckBriKOlKeQ
