By Zibai, an Alibaba Cloud Development Engineer, and Xiheng, an Alibaba Cloud Technical Expert

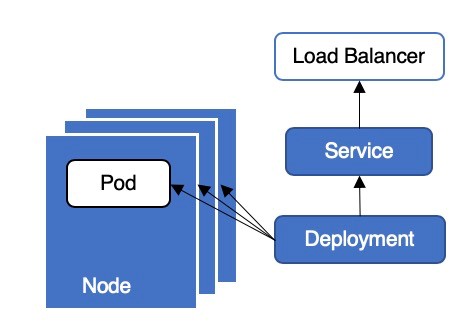

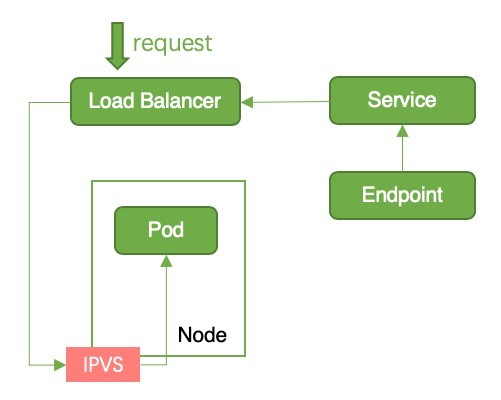

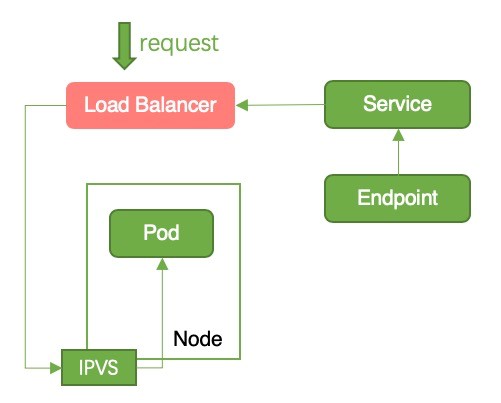

In Kubernetes clusters, business applications usually expose services externally through a Deployment combined with a LoadBalancer Service. Figure 1 shows a typical deployment architecture. This architecture is simple to deploy and operate, but application updates or upgrades can cause downtime and, in turn, problems for online services. This article examines why this architecture may cause downtime during application updates and how to prevent it.

Figure 1: Deployment Architecture

During a rolling update, the Deployment first creates a new pod and deletes an old pod only after the new pod is running.

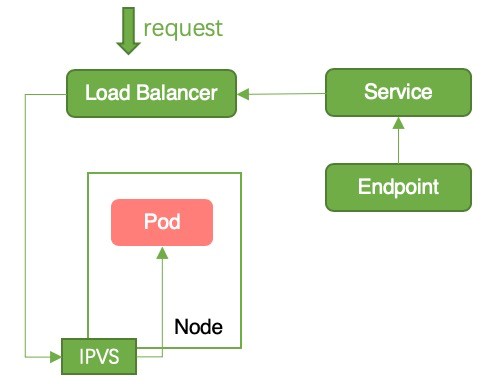

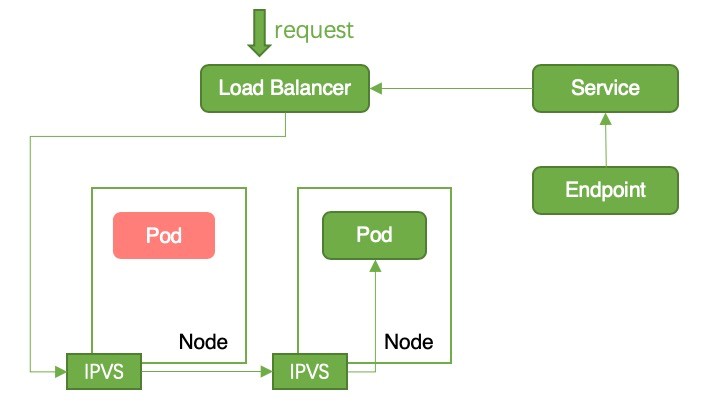

Figure 2: Downtime

Reason for Downtime: If no readiness check is configured, a pod is added to the Service endpoints as soon as it starts running. After detecting the change in the endpoints, Container Service for Kubernetes (ACK) adds the corresponding node to the backend of the Server Load Balancer (SLB) instance, and the SLB instance begins forwarding requests to the pod. However, the application code in the pod has not finished initializing, so the pod cannot handle these requests. This results in downtime, as shown in Figure 2.

Solution: Configure a readinessProbe for the pod. The pod is then added to the endpoints, and its node to the backend of the SLB instance, only after the probe passes, that is, after the application code in the pod has been initialized.
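A TCP check only confirms that a port is open, and some applications may open their port before they finish initializing. As a minimal sketch, assuming a hypothetical image `registry.example.com/my-app:1.0` that exposes a hypothetical `/healthz` endpoint on port 80 returning a 2xx status only after initialization completes, an HTTP readiness probe ties the pod's entry into the endpoints to actual readiness:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-app
  namespace: default
spec:
  containers:
  - name: my-app
    image: registry.example.com/my-app:1.0   # hypothetical image
    readinessProbe:
      httpGet:
        path: /healthz        # hypothetical endpoint: returns 2xx only after initialization
        port: 80
      initialDelaySeconds: 5  # first check 5 s after the container starts
      periodSeconds: 5        # then check every 5 s
      failureThreshold: 3     # mark the pod not ready after 3 consecutive failures
```

Until this probe succeeds, the pod stays out of the Service endpoints, so the SLB instance is not given a path to an uninitialized pod.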

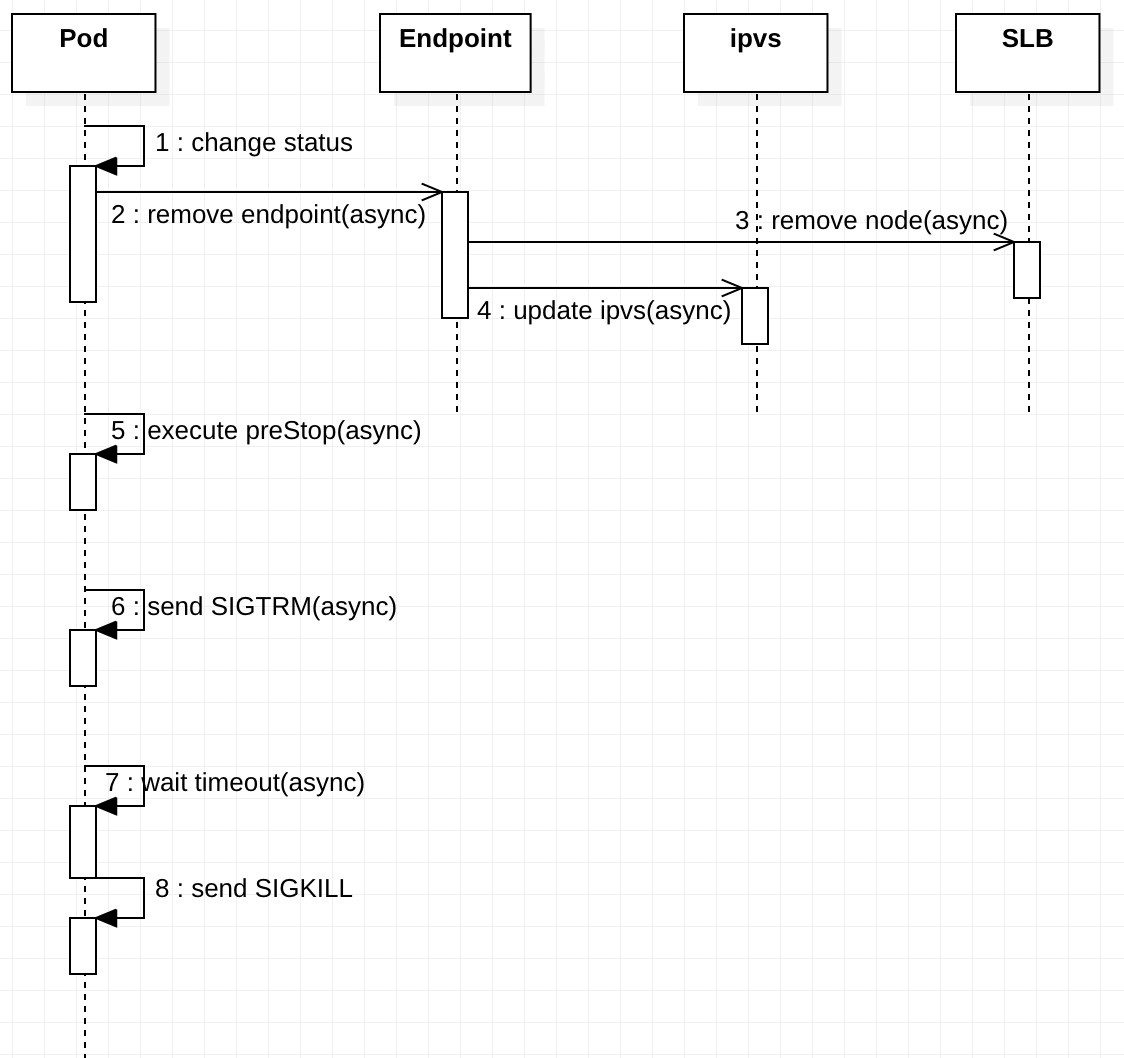

When the old pod is deleted, the state of multiple objects, such as the endpoints, the IPVS or iptables rules, and the SLB instance, must be synchronized. These synchronization operations run asynchronously. Figure 3 shows the overall synchronization process.

Figure 3: Sequence Diagram for Deployment Update

- preStop hook: The preStop hook is triggered when the pod is deleted. preStop supports exec commands (such as a bash script) and HTTP requests.
- terminationGracePeriodSeconds: This field controls how long Kubernetes waits for the pod to terminate gracefully. The default value is 30 seconds. The countdown starts at the same time as the preStop hook, so the terminationGracePeriodSeconds value must be greater than the execution time of preStop. Otherwise, the pod may be killed before preStop finishes.

Reason for Downtime: The preceding steps 1, 2, 3, and 4 run in parallel, so the pod may receive the SIGTERM signal and stop working before it has been removed from the endpoints. If the SLB instance forwards a request to the pod at this point, the request fails because the pod has already stopped working. This causes downtime, as shown in Figure 4.

Figure 4: Downtime

Solution: Configure a preStop hook for the pod that sleeps for some time. Kubernetes sends SIGTERM to the container only after the preStop hook finishes, so the pod keeps working while it is being removed from the endpoints and the SLB backend, and it can still process the traffic that the SLB instance forwards to it in the meantime.
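The pod manifest later in this article implements the sleep with an exec hook, which requires a `sleep` binary in the image. On clusters that support the built-in `sleep` lifecycle action (alpha in Kubernetes v1.29, beta and enabled by default from v1.30; check the version of your ACK cluster first), a sketch of the same idea is:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  namespace: default
spec:
  # Total time allowed for graceful shutdown; must exceed the preStop sleep.
  terminationGracePeriodSeconds: 60
  containers:
  - name: nginx
    image: nginx
    lifecycle:
      preStop:
        # Built-in sleep action: the kubelet waits 30 s before sending SIGTERM.
        sleep:
          seconds: 30
```

The 30-second sleep gives the endpoints controller, kube-proxy, and ACK time to remove the pod from the endpoints and the SLB backend before nginx receives SIGTERM, and the 60-second grace period leaves room to finish in-flight requests afterwards.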

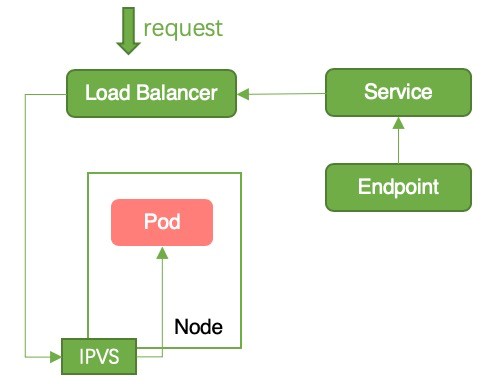

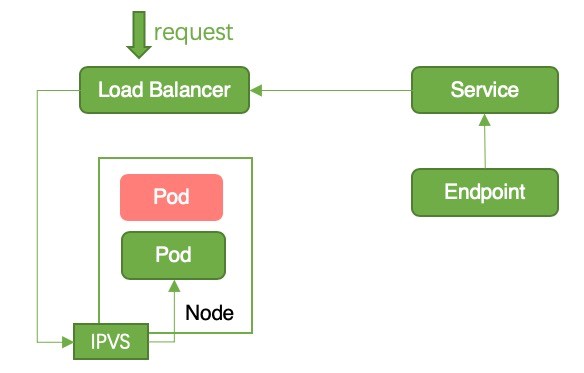

Reason for Downtime: When the pod status changes to Terminating, the pod is removed from the endpoints of all Services, and kube-proxy cleans up the corresponding iptables or IPVS rules. Meanwhile, after detecting the change in the endpoints, ACK calls the SLB OpenAPI to remove the node from the backend of the SLB instance, which takes several seconds. Because the two operations run concurrently, the iptables or IPVS rules on the node may already be cleaned up while the node is still in the backend of the SLB instance. Traffic from the SLB instance then reaches a node that has no matching iptables or IPVS rules, which causes downtime, as shown in Figure 5.

Figure 5: Downtime

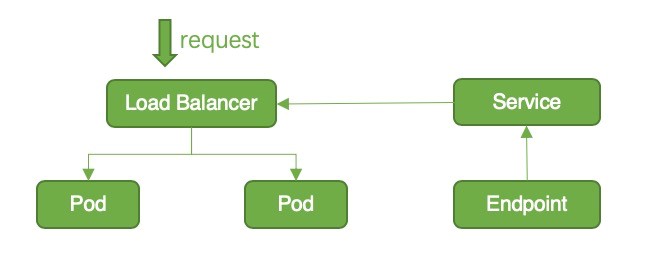

Figure 6: Request Forwarding in Cluster Mode

Figure 7: Request Forwarding During In-Place Upgrade in the Local Mode

Figure 8: Request Forwarding in ENI Mode

Figure 9: Downtime

Reason for Downtime: After detecting the change in the endpoints, ACK removes the node from the backend of the SLB instance. At that moment, the SLB instance immediately terminates any persistent connections to the node that are still in progress. This results in downtime.

Solution: Configure graceful termination of persistent connections (connection draining) for the SLB instance. Support for this feature depends on the cloud vendor.
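For ACK, the Alibaba Cloud cloud controller manager exposes connection draining through Service annotations. The sketch below assumes the annotation names `service.beta.kubernetes.io/alibaba-cloud-loadbalancer-connection-drain` and `service.beta.kubernetes.io/alibaba-cloud-loadbalancer-connection-drain-timeout` and a 30-second timeout; verify the names, the supported listener protocols, and the allowed timeout range in the ACK documentation for your version before using them:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
  namespace: default
  annotations:
    # Assumed annotation names; check the ACK CCM documentation for your version.
    service.beta.kubernetes.io/alibaba-cloud-loadbalancer-connection-drain: "on"
    service.beta.kubernetes.io/alibaba-cloud-loadbalancer-connection-drain-timeout: "30"
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    run: nginx
  type: LoadBalancer
```

With draining enabled, existing connections to a backend that is being removed are kept open until they close or the timeout expires, instead of being cut immediately.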

To avoid downtime, we can configure the Pod and Service resources. The following sections describe the configuration for each of the causes of downtime above.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  namespace: default
spec:
  containers:
  - name: nginx
    image: nginx
    # Liveness probe
    livenessProbe:
      failureThreshold: 3
      initialDelaySeconds: 30
      periodSeconds: 30
      successThreshold: 1
      tcpSocket:
        port: 80          # the nginx image listens on port 80
      timeoutSeconds: 1
    # Readiness probe
    readinessProbe:
      failureThreshold: 3
      initialDelaySeconds: 30
      periodSeconds: 30
      successThreshold: 1
      tcpSocket:
        port: 80
      timeoutSeconds: 1
    # Graceful termination
    lifecycle:
      preStop:
        exec:
          command:
          - sleep
          - "30"          # exec arguments must be strings
  # Pod-level field; must be greater than the preStop duration
  terminationGracePeriodSeconds: 60
```

Note: Set the probe frequency, initial delay, failure threshold, and other parameters of readinessProbe and livenessProbe carefully. Some applications take a long time to start. If these values are too short, the livenessProbe fails before the application is up, and the container is restarted repeatedly.
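For applications with long or unpredictable startup times, Kubernetes also provides a startupProbe (GA since v1.20): liveness and readiness checks are not run until it succeeds, so a short livenessProbe delay no longer causes restart loops. A minimal sketch, assuming the application needs at most about 5 minutes to start (30 failures × 10 seconds):

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  namespace: default
spec:
  containers:
  - name: nginx
    image: nginx
    # Liveness and readiness probes do not run until the startup probe succeeds.
    startupProbe:
      tcpSocket:
        port: 80
      periodSeconds: 10
      failureThreshold: 30   # allows up to 30 x 10 s = 300 s for startup
    livenessProbe:
      tcpSocket:
        port: 80
      periodSeconds: 30
      failureThreshold: 3
    readinessProbe:
      tcpSocket:
        port: 80
      periodSeconds: 10
      failureThreshold: 3
```

If the startup probe still fails after 300 seconds, the container is killed and handled according to the pod's restartPolicy, just as with a failed livenessProbe.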

- livenessProbe is the liveness probe. If the number of consecutive failures reaches failureThreshold, the container is restarted. For more information about specific configurations, see the official documentation.
- readinessProbe is the readiness probe. The pod is added to the endpoints only after the readiness probe passes. After detecting the change in the endpoints, ACK mounts the node to the backend of the SLB instance.
- preStop and terminationGracePeriodSeconds: Set the execution time of preStop to the time that the running pod needs to process all remaining requests, and set terminationGracePeriodSeconds to at least 30 seconds more than the execution time of preStop.

Service in the Cluster mode (externalTrafficPolicy: Cluster):

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
  namespace: default
spec:
  externalTrafficPolicy: Cluster
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    run: nginx
  type: LoadBalancer
```

In the Cluster mode, ACK mounts all nodes in the cluster to the backend of the SLB instance (unless the backend servers are restricted by using BackendLabel), so the SLB instance quota is consumed quickly. SLB limits the number of SLB instances to which each Elastic Compute Service (ECS) instance can be mounted. The default value is 50. When the quota is used up, new listeners and SLB instances cannot be created.

In the Cluster mode, if the node that receives a request has no running pod, the request is forwarded to another node. Cross-node forwarding requires NAT, so the source IP address may be lost.

Service in the Local mode (externalTrafficPolicy: Local):

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
  namespace: default
spec:
  externalTrafficPolicy: Local
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    run: nginx
  type: LoadBalancer
```

```yaml
# Ensure that each node has at least one running pod during the update.
# Achieve an in-place rolling update by adjusting the Deployment strategy and using nodeAffinity:
# * Set maxUnavailable in the Deployment strategy to 0, so that an old pod is terminated only after a new pod has started.
# * Label several specific nodes for scheduling.
# * Use nodeAffinity together with a number of replicas greater than the number of labeled nodes,
#   so that new pods are created in place on those nodes.
# For example:
apiVersion: apps/v1
kind: Deployment
......
  strategy:
    rollingUpdate:
      maxSurge: 50%
      maxUnavailable: 0%
    type: RollingUpdate
......
      affinity:
        nodeAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 1
            preference:
              matchExpressions:
              - key: deploy
                operator: In
                values:
                - nginx
```

In the Local mode, ACK by default adds only the nodes that run the pods backing the Service to the backend of the SLB instance, so the SLB instance quota is consumed slowly. A request is forwarded directly to a node where a pod is running, without cross-node forwarding, so the source IP address is preserved. In the Local mode, you can avoid downtime by using an in-place upgrade, as configured in the YAML above.
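The excerpt above omits the surrounding fields. As a sketch of how the pieces fit together, assuming two nodes labeled `deploy=nginx` and three replicas (both hypothetical values chosen so that replicas exceed the number of labeled nodes), plus the `run: nginx` label that the Local-mode Service selects:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  namespace: default
spec:
  replicas: 3                 # hypothetical: more replicas than labeled nodes (2 assumed)
  selector:
    matchLabels:
      run: nginx
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 50%           # create new pods before removing old ones
      maxUnavailable: 0%      # never drop below the desired replica count
  template:
    metadata:
      labels:
        run: nginx            # selected by the Local-mode Service
    spec:
      affinity:
        nodeAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 1
            preference:
              matchExpressions:
              - key: deploy
                operator: In
                values:
                - nginx
      terminationGracePeriodSeconds: 60
      containers:
      - name: nginx
        image: nginx
        readinessProbe:
          tcpSocket:
            port: 80
          periodSeconds: 10
        lifecycle:
          preStop:
            exec:
              command: ["sleep", "30"]
```

Because preferredDuringSchedulingIgnoredDuringExecution is a soft preference, the scheduler may still place a pod on an unlabeled node if the labeled nodes lack capacity. requiredDuringSchedulingIgnoredDuringExecution enforces placement, at the cost of pods staying Pending when no labeled node fits.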

Service in the ENI mode:

```yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
    service.beta.kubernetes.io/backend-type: "eni"
  name: nginx
spec:
  ports:
  - name: http
    port: 30080
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx
  type: LoadBalancer
```

In the Terway network mode, you can create an SLB instance in the ENI mode by adding the service.beta.kubernetes.io/backend-type: "eni" annotation to the Service. In the ENI mode, pods are mounted directly to the backend of the SLB instance and traffic bypasses kube-proxy, so the downtime caused by cleaned-up iptables or IPVS rules does not occur. Because requests are forwarded directly to the pod, the source IP address is preserved.

The following table compares the three Service modes.

| | Cluster | Local | ENI |
|---|---|---|---|
| How it works | In the Cluster mode (externalTrafficPolicy: Cluster), ACK mounts all nodes in the cluster to the backend of the SLB instance (except nodes excluded by the BackendLabel setting). | In the Local mode (externalTrafficPolicy: Local), ACK by default adds only the nodes where the Service's pods run to the backend of the SLB instance. | In the Terway network mode, adding the service.beta.kubernetes.io/backend-type: "eni" annotation to the Service mounts pods directly to the backend of the SLB instance. |
| SLB quota consumption | Very fast | Slow | Slow |
| Source IP address preserved | No | Yes | Yes |
| Downtime during updates | No | Yes (can be avoided through in-place upgrade) | No |

Figure 10: Service Comparison

Depending on the Service mode you use, you can choose one of the following combinations:

- ENI mode: a Service in the ENI mode, graceful pod termination, and readinessProbe.
- Cluster mode: a Service in the Cluster mode, graceful pod termination, and readinessProbe.
- Local mode: a Service in the Local mode, graceful pod termination, readinessProbe, and in-place upgrade. Make sure that each node has a running pod during the upgrade.
