In modern cloud-native and distributed-system O&M scenarios, Prometheus has become the de facto standard for monitoring and alerting. With its powerful time-series analysis capabilities, PromQL is a core tool that O&M engineers and developers use to diagnose system performance and locate faults. However, PromQL's complex syntax and highly structured nature demand considerable expertise from users. Its steep learning curve keeps O&M personnel from using it efficiently, which lowers monitoring efficiency and increases the risk of misoperation. In addition, the exponential growth in metric volume driven by the expanding cloud-native ecosystem means that hand-written queries can no longer keep up with massive data and dynamic scenarios.

PromQL Copilot is built on the Alibaba Cloud Observability platform infrastructure (SLS and CMS) and the Dify framework. It implements an end-to-end closed loop that covers natural language understanding, the knowledge graph, query generation, and execution verification. The system provides PromQL generation, interpretation, diagnosis, and metric recommendation, and has been deployed in the CloudMonitor console and the observability MCP service. It gives users an intelligent monitoring query experience, helping enterprises lower the barrier to O&M and strengthen their AIOps capabilities.

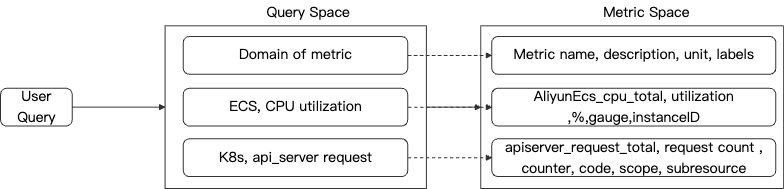
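The closed loop described above (understand the question, generate a query, then execute and verify it) can be illustrated with a minimal retry loop. This is a hypothetical sketch, not PromQL Copilot's implementation: `generate_with_verification`, `fake_generate`, and `fake_execute` are illustrative names, and the two fakes stand in for the large model and the query backend.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class LoopResult:
    query: Optional[str]  # accepted query, or None if every attempt failed
    attempts: int
    errors: list = field(default_factory=list)


def generate_with_verification(
    question: str,
    generate: Callable[[str, list], str],
    execute: Callable[[str], list],
    max_attempts: int = 3,
) -> LoopResult:
    """Generate a query, execute it, and retry with the collected feedback on failure."""
    errors = []
    for attempt in range(1, max_attempts + 1):
        query = generate(question, errors)  # model call, fed earlier failures
        try:
            rows = execute(query)  # execution verification step
        except ValueError as exc:  # assumed to signal a query error from the backend
            errors.append(f"{query}: {exc}")
            continue
        if rows:  # non-empty result: the query actually hits stored data
            return LoopResult(query, attempt, errors)
        errors.append(f"{query}: empty result")
    return LoopResult(None, max_attempts, errors)


def fake_generate(question, errors):
    # Hypothetical model stand-in: the first attempt uses a wrong label value.
    return 'up{job="api-server"}' if not errors else 'up{job="apiserver"}'


def fake_execute(query):
    # Hypothetical backend stand-in with a single stored series.
    stored = {'up{job="apiserver"}': [1.0]}
    return stored.get(query, [])


result = generate_with_verification("Is the API server up?", fake_generate, fake_execute)
print(result.query, result.attempts)  # up{job="apiserver"} 2
```

Feeding the error list back into the generator is what turns a one-shot generation into a loop that can correct empty or invalid queries.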

The core objective of generating PromQL from natural language is to convert a user's natural-language input (such as "check instances with service latency exceeding the threshold") into a precise PromQL query statement. This process faces multiple technical challenges, spanning natural language understanding, domain knowledge integration, and performance optimization over large-scale metric data. The following sections analyze each core challenge and its solution.

One of the core challenges for PromQL Copilot is converting highly ambiguous natural-language intents into precise PromQL query statements. The tension between the ambiguity of natural language and the determinism of PromQL runs through the entire system design.

In Prometheus scenarios, the ambiguity of natural language mainly manifests in the following forms:

Solutions:

The Prometheus metric system, the PromQL query language, and the ecosystem components together form a highly structured domain knowledge network. However, general-purpose large models (such as LLaMA and ChatGLM) lack an in-depth understanding of this domain, which leads to semantic deviation, syntax errors, or missing context when they generate PromQL directly.

Examples:

… `avg()` rather than `rate()`.

… `job="api-server"`, resulting in empty query results.

Solutions:

To find the pod with the largest egress traffic, first calculate the egress traffic rate of each pod. Use `max by (pod_name)(rate(container_network_transmit_bytes_total{}[1m]))` to obtain the traffic rate, and then apply the `topk` function to select the pod with the largest traffic. The final PromQL statement is as follows:

```promql
topk (1, max by (pod_name)(rate(container_network_transmit_bytes_total{}[1m])))
```

The Prometheus metric system has grown explosively, from Kubernetes core components (such as kube-apiserver and etcd) to custom microservice metrics and Node Exporter data at the hardware layer, and now reaches tens of thousands of metrics. Faced with such a complex metric system, the metric knowledge base has become a core component of PromQL Copilot. It not only serves as the semantic bridge from natural language to PromQL but also provides key support for intelligent query recommendation, root cause analysis of exceptions, and cross-service dependency tracing.
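To illustrate the knowledge base acting as a semantic bridge, the sketch below ranks metrics by simple keyword overlap between a question and each metric's name and description. It is a deliberately naive, hypothetical stand-in: the article does not describe the actual retrieval method, and `MetricEntry`, `KNOWLEDGE_BASE`, `STOPWORDS`, and `recommend_metrics` are illustrative names. The three metric names are real open-source metrics, but the descriptions are hand-written keyword text.

```python
import re
from dataclasses import dataclass

STOPWORDS = {"a", "an", "the", "of", "in", "by", "per", "each", "about",
             "which", "has", "is", "how", "what", "as"}


@dataclass(frozen=True)
class MetricEntry:
    name: str
    source: str  # exporter that produces the metric, kept for display
    description: str


# Tiny hypothetical knowledge base; a real one would hold tens of thousands of entries.
KNOWLEDGE_BASE = [
    MetricEntry("container_network_transmit_bytes_total", "cAdvisor",
                "cumulative count of bytes transmitted as egress network traffic"),
    MetricEntry("kube_pod_info", "kube-state-metrics",
                "information about each pod, used to count pods per namespace"),
    MetricEntry("node_cpu_seconds_total", "Node Exporter",
                "seconds the cpus spent in each mode, i.e. host cpu usage"),
]


def tokenize(text: str) -> set:
    """Lowercase word tokens without stopwords; underscores act as separators."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def recommend_metrics(question: str, top_k: int = 2) -> list:
    """Return up to top_k metric names, ordered by keyword overlap with the question."""
    q = tokenize(question)
    scored = []
    for entry in KNOWLEDGE_BASE:
        overlap = len(q & tokenize(entry.name + " " + entry.description))
        if overlap:
            scored.append((overlap, entry.name))
    scored.sort(key=lambda item: (-item[0], item[1]))  # highest overlap first
    return [name for _, name in scored[:top_k]]


print(recommend_metrics("Which pod has the largest egress traffic?"))
```

A production knowledge base would more plausibly combine embedding-based retrieval with label and unit metadata; keyword overlap is used here only because it is easy to follow.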

Solutions:

The core value of PromQL Copilot lies in converting natural-language intent into executable, valid PromQL queries. However, a syntactically correct PromQL query does not necessarily match the data that the user actually stores. This challenge breaks down into the following issues:

Solutions:
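To make the "syntactically valid but returns nothing" failure concrete, the hypothetical sketch below checks a simple generated selector against the series that actually exist and reports which metric or label value is missing, as in the `job="api-server"` case mentioned earlier. It is not PromQL Copilot's implementation: `parse_selector`, `explain_empty`, and the `STORED` series are illustrative assumptions, and the parser handles only equality matchers.

```python
import re

# Simple selectors only: metric{label="value", ...} with equality matchers.
SELECTOR_RE = re.compile(r"^(?P<metric>[a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(?P<matchers>[^}]*)\})?$")
MATCHER_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)\s*=\s*"([^"]*)"')


def parse_selector(selector):
    """Split a selector into its metric name and a dict of equality matchers."""
    match = SELECTOR_RE.match(selector.strip())
    if match is None:
        raise ValueError(f"unsupported selector: {selector}")
    matchers = {}
    # Naive split on commas: label values that contain commas are not handled.
    for part in (match.group("matchers") or "").split(","):
        part = part.strip()
        if not part:
            continue
        m = MATCHER_RE.fullmatch(part)
        if m is None:
            raise ValueError(f"unsupported matcher: {part}")
        matchers[m.group(1)] = m.group(2)
    return match.group("metric"), matchers


def explain_empty(selector, stored_series):
    """Return reasons why the selector matches no stored series (empty list if it matches)."""
    metric, matchers = parse_selector(selector)
    candidates = [s for s in stored_series if s.get("__name__") == metric]
    if not candidates:
        return [f"metric {metric} is not stored"]
    if any(all(s.get(k) == v for k, v in matchers.items()) for s in candidates):
        return []
    reasons = []
    for label, value in matchers.items():
        known = sorted({s[label] for s in candidates if label in s})
        if value not in known:
            reasons.append(f'{label}="{value}" not found; known values: {known}')
    if not reasons:  # every value exists somewhere, but never in one series
        reasons.append("no single series matches all matchers together")
    return reasons


# Hypothetical stored series.
STORED = [
    {"__name__": "up", "job": "apiserver", "instance": "10.0.0.1:6443"},
    {"__name__": "up", "job": "kubelet", "instance": "10.0.0.2:10250"},
]
print(explain_empty('up{job="api-server"}', STORED))
```

Reporting the known label values, rather than just "empty result", gives a generator concrete feedback it can use to correct the query.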

PromQL Copilot has been fully deployed in CloudMonitor and can be used in Prometheus instances.

Using Observability MCP Server

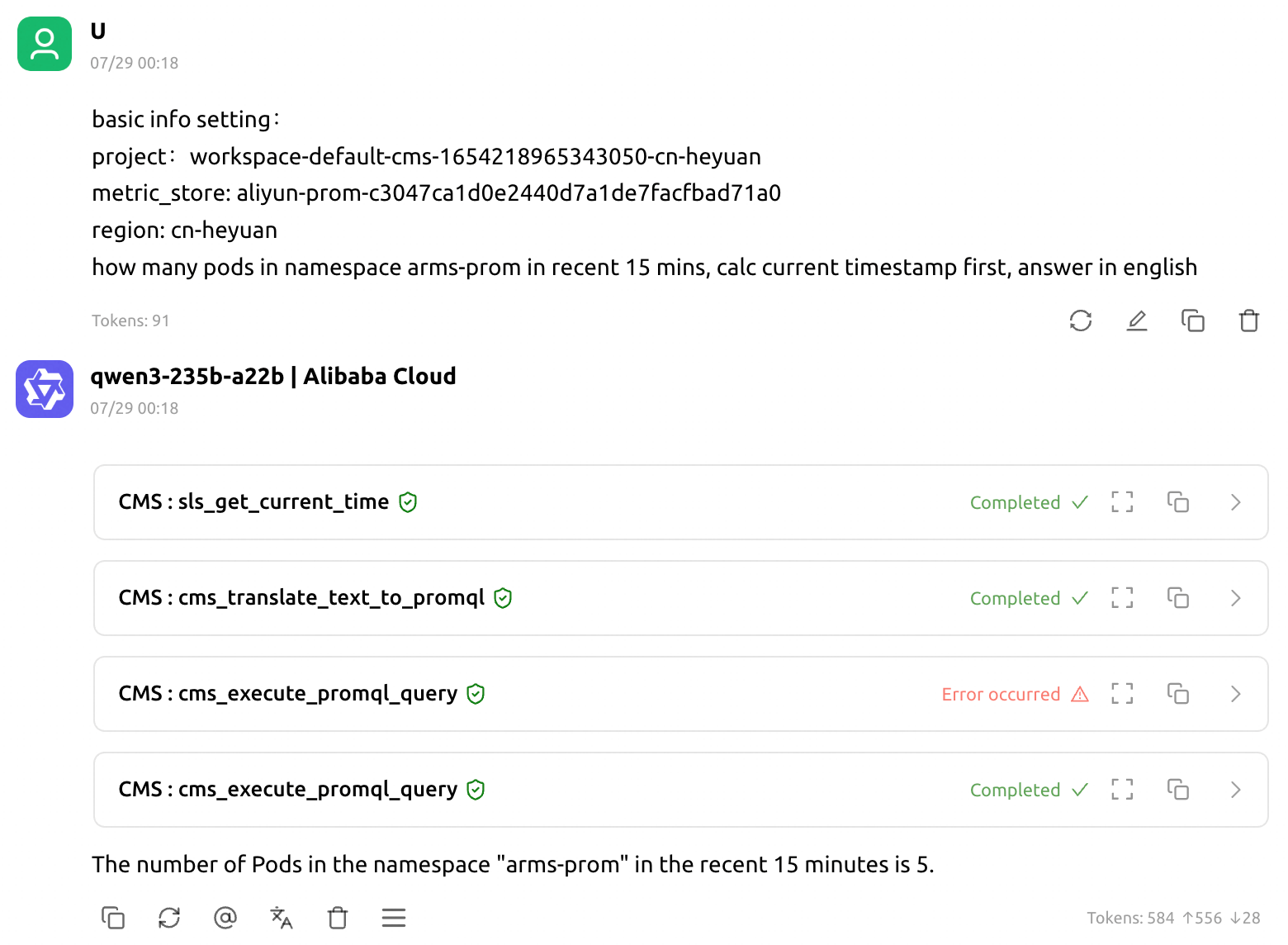

Alibaba Cloud Observability provides a unified MCP service, whose code is available in a repository on GitHub. This section uses Cherry Studio as an example to show how to use the MCP tools to generate PromQL from natural language and query data.

The observability MCP Server is configured in the same way as any other MCP server. For configuration details, see the open-source documentation.

After the MCP Server is configured, the observability tools can be used directly. The following table lists the tools used in this section:

| Tool | Description | Input parameters | Output |
|---|---|---|---|
| cms_translate_text_to_promql | Generates PromQL from natural language | text, project, metricStore, regionId | 1. PromQL statement 2. Thinking process |
| cms_execute_promql_query | Executes a PromQL statement and performs SPL-based data analysis and chart generation | project, metricStore, query, fromTimestampInSeconds, toTimestampInSeconds, regionId | 1. Data of the latest point of each timeline within the query time range 2. A timeline chart at 1-minute intervals within the query time range |
| sls_get_current_time | Returns the current time | None | 1. Current time string 2. Current timestamp (milliseconds) |

Parameter description:

| Parameter | Description |
|---|---|
| text | Natural-language description of the PromQL requirement |
| project | SLS project that stores the metrics |
| metricStore | SLS MetricStore that stores the metrics |
| regionId | Alibaba Cloud region ID |
| query | PromQL statement to execute |
| fromTimestampInSeconds | Start of the query time range (timestamp in seconds) |
| toTimestampInSeconds | End of the query time range (timestamp in seconds) |
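Because `sls_get_current_time` returns a millisecond timestamp while `cms_execute_promql_query` takes second-based bounds, a request such as "the last 15 minutes" needs a unit conversion before the query can run. A minimal sketch, assuming an integer millisecond input; `last_minutes_range` is a hypothetical helper, not part of the MCP service.

```python
import time


def last_minutes_range(now_ms: int, minutes: int) -> dict:
    """Turn a millisecond 'now' into the second-based bounds of the last N minutes."""
    to_ts = now_ms // 1000          # milliseconds -> whole seconds
    from_ts = to_ts - minutes * 60  # step back N minutes
    return {"fromTimestampInSeconds": from_ts, "toTimestampInSeconds": to_ts}


# Example with the local clock; in the MCP flow, now_ms would come from sls_get_current_time.
print(last_minutes_range(int(time.time() * 1000), 15))
```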

In a local Cherry Studio setup using the qwen-max-latest model, enter "Query the number of pods in the arms-prom namespace in the last 15 minutes" to see how the model thinks, plans, and executes based on the prompts provided by the MCP tools.

The screenshots below show the details. A tool execution error occurs because the model mishandles special symbols when passing the PromQL statement as an input parameter. However, the model can repair its own calls, so it retries, executes successfully, and obtains the results.

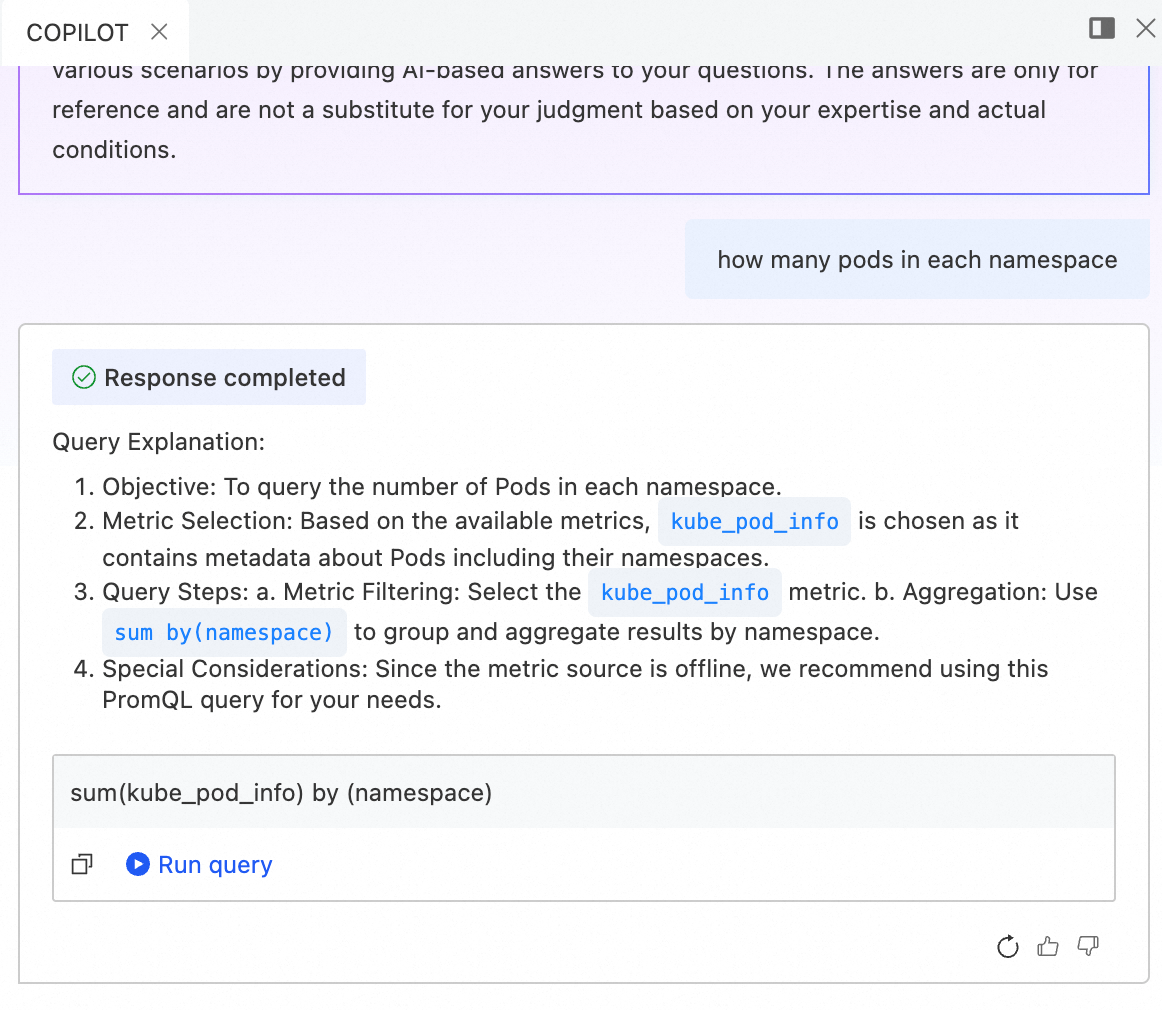

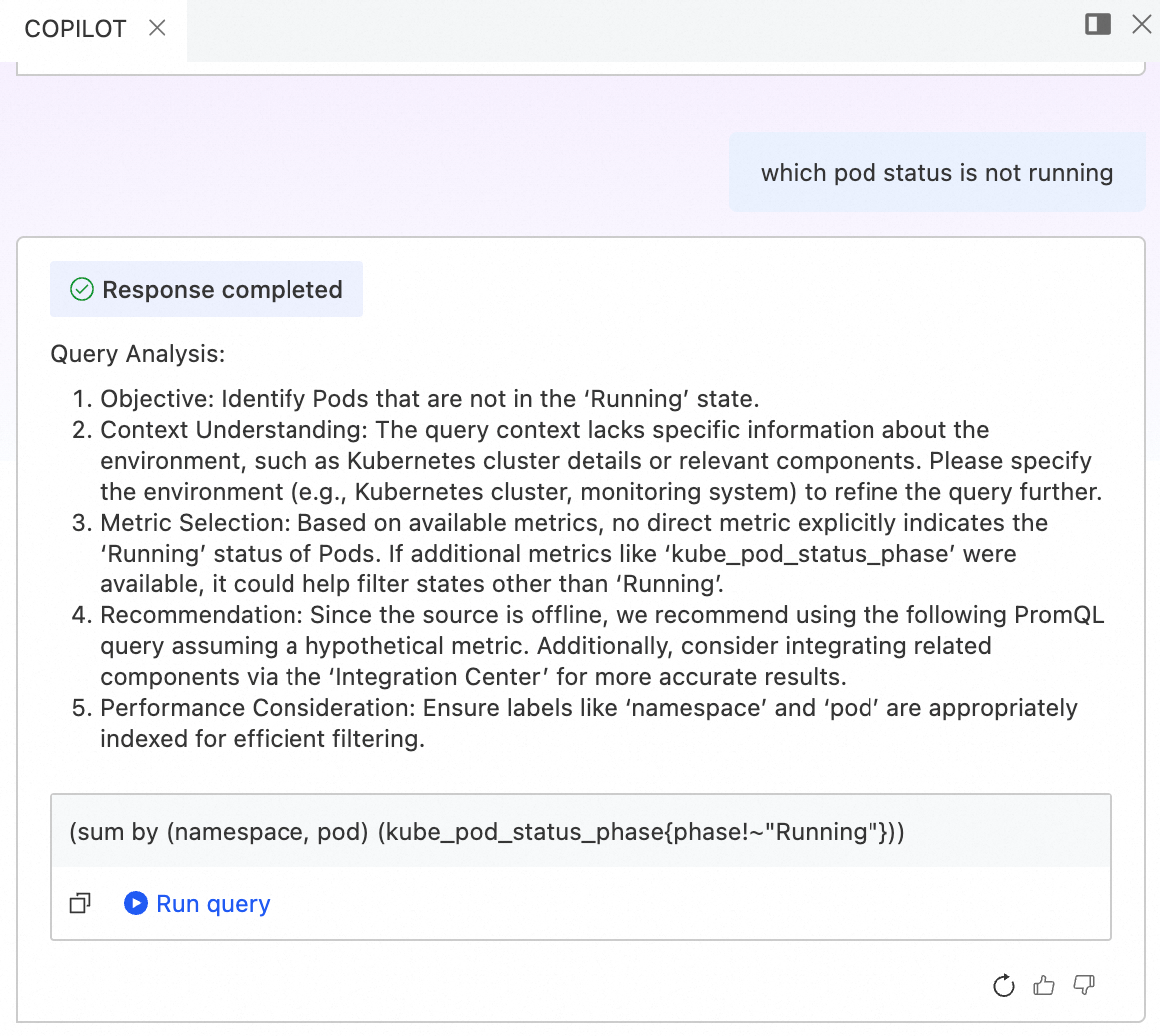

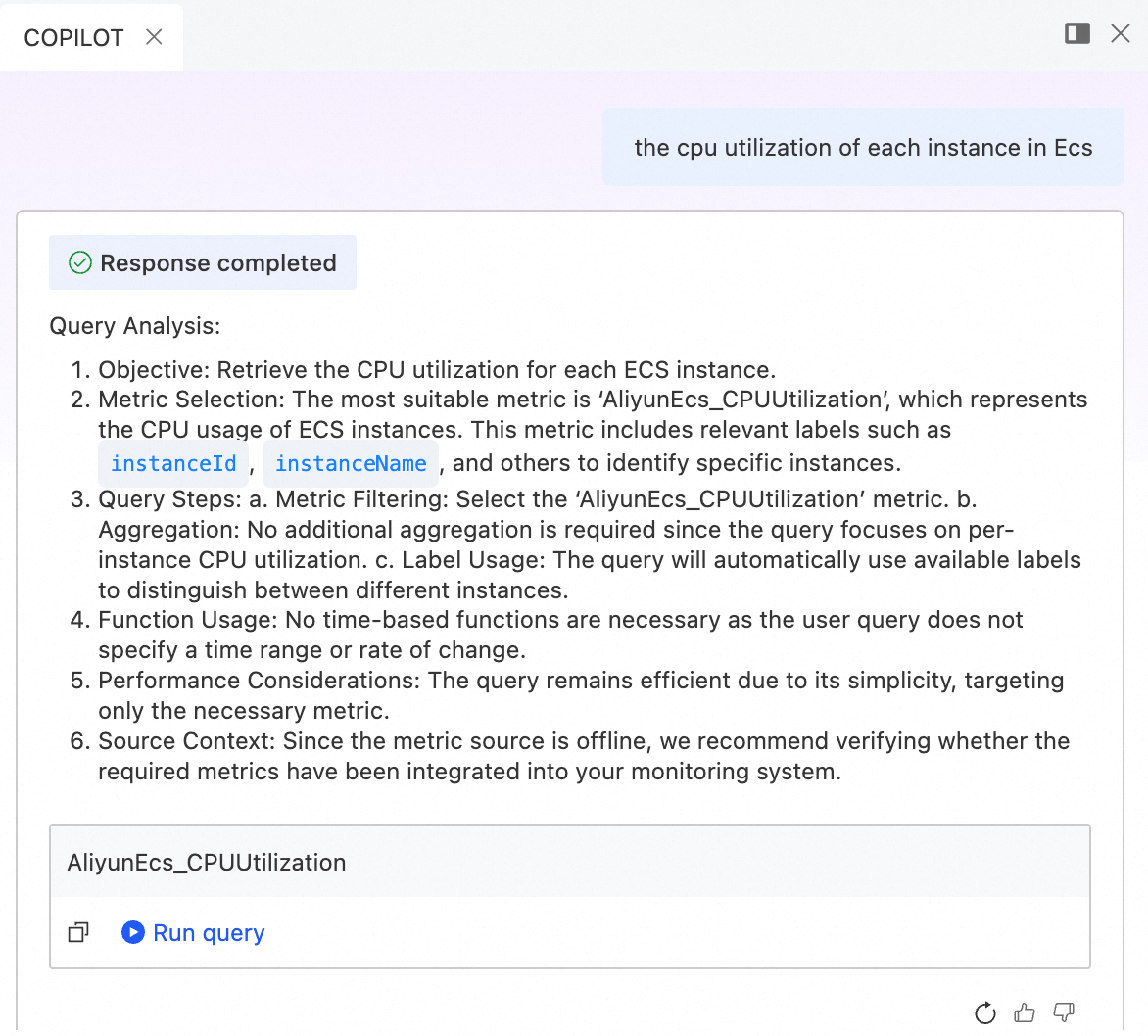

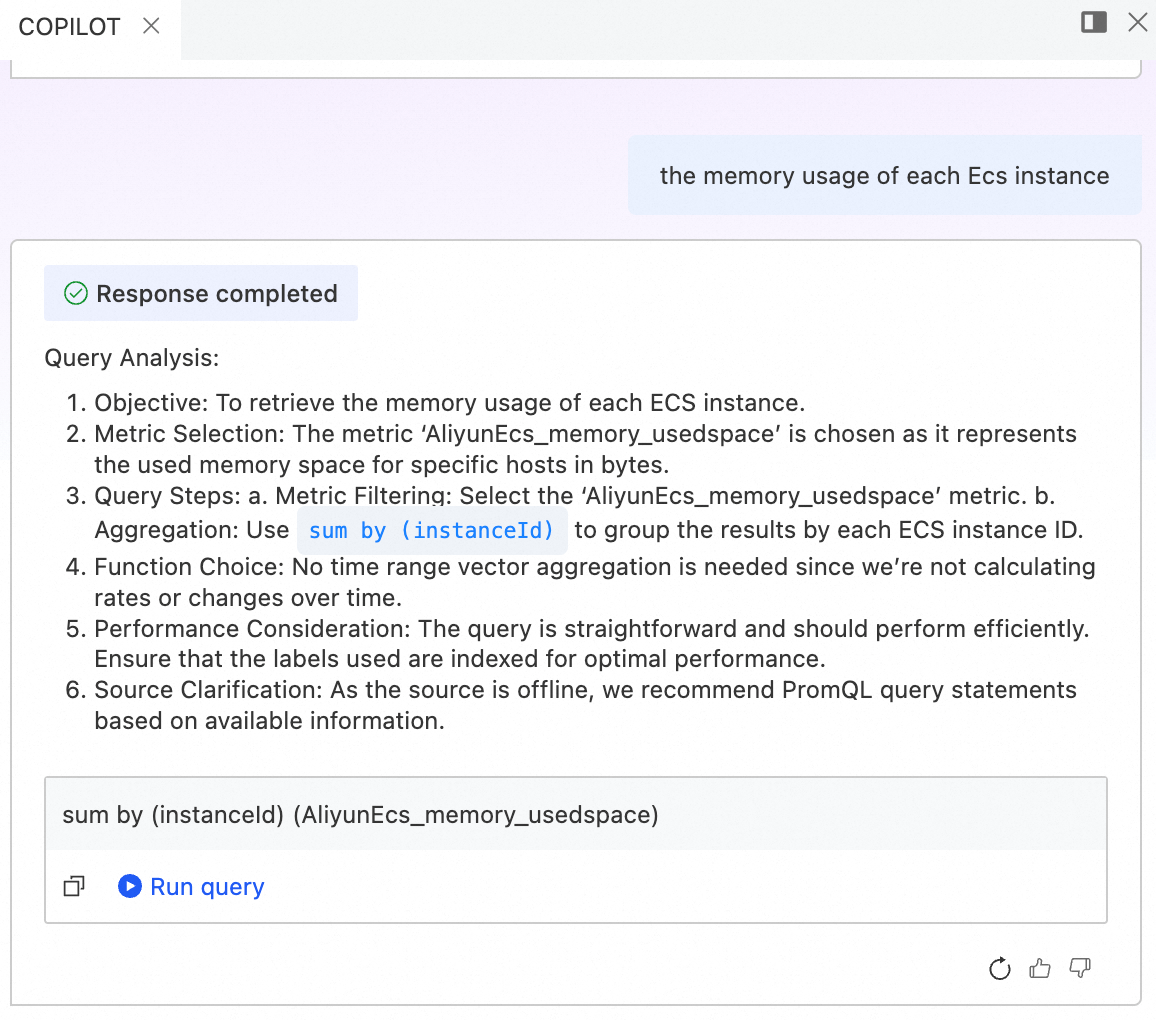

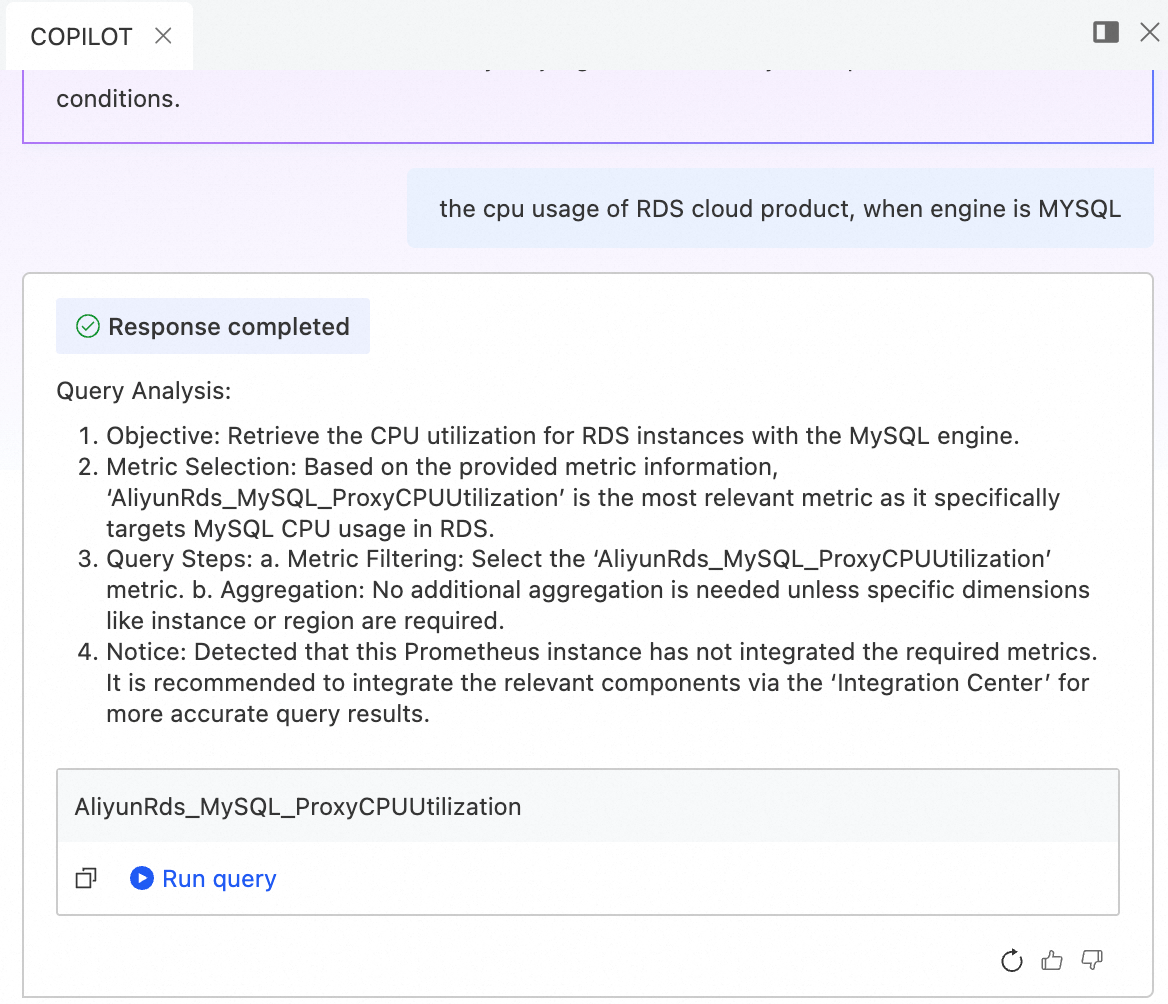

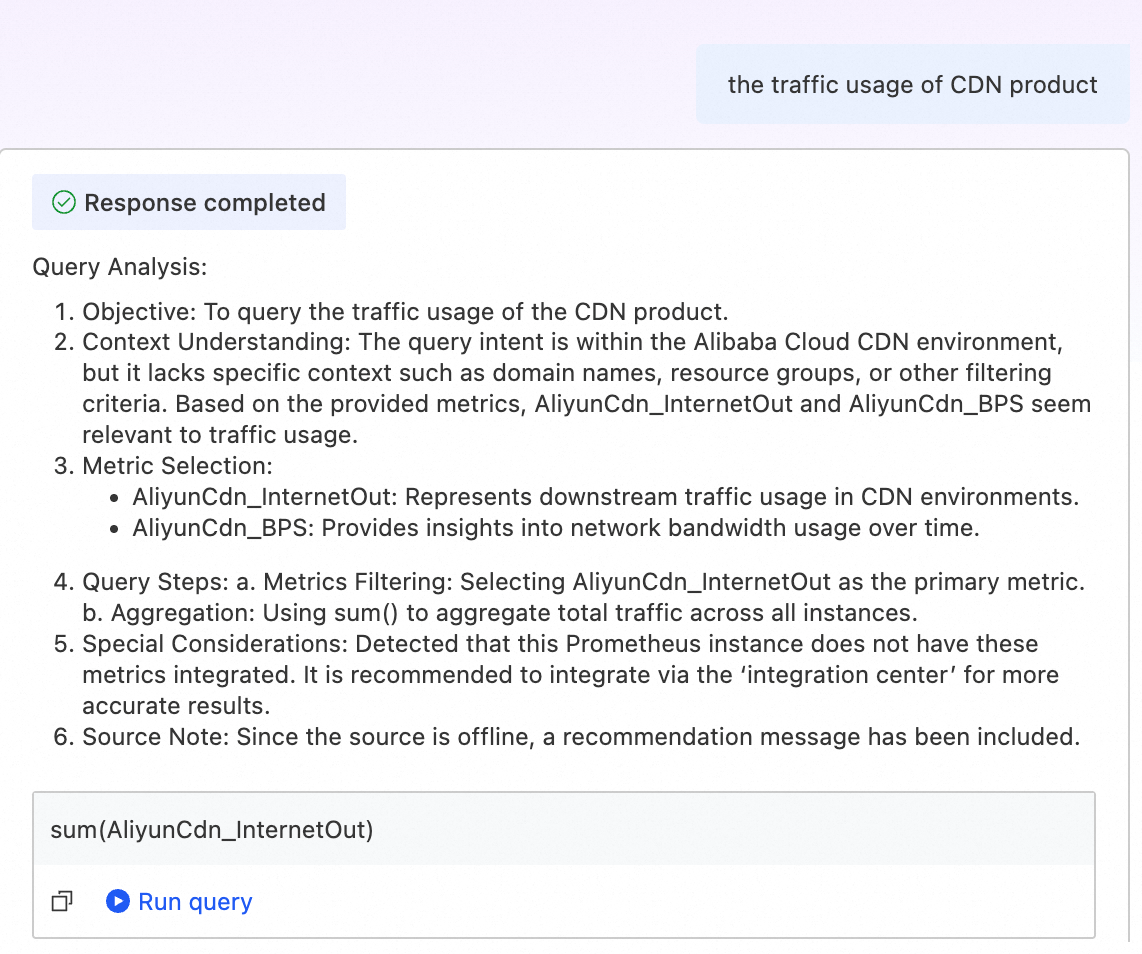
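One plausible cause of the symbol-handling error described above is quoting: PromQL label matchers contain double quotes, which must be escaped when the statement is embedded in a JSON tool-call argument. The sketch below serializes an MCP `tools/call` request for `cms_execute_promql_query` with `json.dumps`, which escapes them automatically, and contrasts it with naive string concatenation. The JSON-RPC envelope follows the MCP specification; the project, MetricStore, and region values are hypothetical placeholders, and the query assumes kube-state-metrics data is collected.

```python
import json


def build_tool_call(request_id: int, query: str, start_s: int, end_s: int) -> str:
    """Serialize an MCP tools/call request for cms_execute_promql_query."""
    payload = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {
            "name": "cms_execute_promql_query",
            "arguments": {
                "project": "my-project",          # hypothetical SLS project
                "metricStore": "my-metricstore",  # hypothetical MetricStore
                "regionId": "cn-hangzhou",        # example region ID
                "query": query,
                "fromTimestampInSeconds": start_s,
                "toTimestampInSeconds": end_s,
            },
        },
    }
    # json.dumps escapes the double quotes inside the PromQL label matchers.
    return json.dumps(payload)


def is_valid_json(text: str) -> bool:
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False


promql = 'count(kube_pod_info{namespace="arms-prom"})'
message = build_tool_call(1, promql, 1699999100, 1700000000)
# Naive concatenation leaves the inner quotes unescaped and yields invalid JSON.
naive = '{"query": "' + promql + '"}'
print(is_valid_json(naive), is_valid_json(message))  # False True
```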

PromQL Copilot answers user questions about both open-source metrics and cloud service metrics. The following are some usage examples.

For the Kubernetes scenario, the examples use cAdvisor and kube-state-metrics (KSM) metrics.

Because open-source metrics describe resources at different granularities, the same question can have several different but equally valid answers.
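Counting pods in a namespace shows how granularity changes the query. KSM publishes `kube_pod_info` with one series per pod, whereas cAdvisor's `container_cpu_usage_seconds_total` has one series per container, so the natural PromQL differs by source: `count(kube_pod_info{namespace="arms-prom"})` versus `count(count by (pod)(container_cpu_usage_seconds_total{namespace="arms-prom"}))`. The Python sketch below mimics both aggregations over hypothetical series (the pod and container names are made up); real cAdvisor output can contain additional per-pod series, which makes a naive count even less reliable.

```python
# Hypothetical KSM series: one kube_pod_info series per pod.
KSM_SERIES = [
    {"namespace": "arms-prom", "pod": "collector-0"},
    {"namespace": "arms-prom", "pod": "collector-1"},
]

# Hypothetical cAdvisor series: one container_cpu_usage_seconds_total series per container.
CADVISOR_SERIES = [
    {"namespace": "arms-prom", "pod": "collector-0", "container": "collector"},
    {"namespace": "arms-prom", "pod": "collector-0", "container": "sidecar"},
    {"namespace": "arms-prom", "pod": "collector-1", "container": "collector"},
]


def count_series(series, namespace):
    """Mimics count(metric{namespace="..."}): one per matching series."""
    return sum(1 for s in series if s["namespace"] == namespace)


def count_distinct_pods(series, namespace):
    """Mimics count(count by (pod)(metric{namespace="..."})): one per distinct pod."""
    return len({s["pod"] for s in series if s["namespace"] == namespace})


print(count_series(KSM_SERIES, "arms-prom"))              # 2 pods
print(count_series(CADVISOR_SERIES, "arms-prom"))         # 3 containers, not pods
print(count_distinct_pods(CADVISOR_SERIES, "arms-prom"))  # 2 pods
```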

CloudMonitor supports monitoring of hundreds of cloud services and middleware products, and each cloud service defines its own metrics. The following demonstration uses Elastic Compute Service (ECS), ApsaraDB RDS, Alibaba Cloud Content Delivery Network (CDN), and Server Load Balancer (SLB), covering computing, storage, and network services.

As the core resource of a database, CPU is a key focus of daily O&M. Sustained high CPU utilization slows database responses and can cause business losses.
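"Sustained" is what separates a real problem from a brief spike. A common way to express it, shown here as a hypothetical sketch rather than the query PromQL Copilot generates, is to require the minimum over a window to exceed the threshold, as in `min_over_time(<cpu_utilization_metric>[5m]) > 80`, where the metric name, the 80% threshold, and the 5-minute window are placeholders. The Python function below applies the same rule to a list of 1-minute utilization samples; `is_sustained_high` is an illustrative name.

```python
def is_sustained_high(samples, threshold=80.0, min_points=5):
    """True if each of the most recent min_points samples is above threshold."""
    if len(samples) < min_points:
        return False  # not enough data to call it sustained
    # Taking the minimum over the window means a single dip cancels the alert.
    return min(samples[-min_points:]) > threshold


print(is_sustained_high([50, 85, 90, 88, 91, 95]))  # True
print(is_sustained_high([85, 90, 40, 91, 95]))      # False
```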

We will continue to improve the accuracy, latency, and user experience of generating PromQL from natural language. The aspects currently being optimized are as follows:

More Posts by Alibaba Cloud Native Community