Observability 2.0 (o11y 2.0) has been a hot topic in DevOps over the past six months. Honeycomb published the article "Introducing Observability 2.0", and CNCF cited Middleware's definition in "What is observability 2.0?".

Regarding the problems that o11y 2.0 aims to solve, the following are some key insights:

● Break down the silos between logs, metrics, and traces, and establish a complete view of system health on a unified platform.

● Enable engineers to understand system behavior, diagnose problems, and reduce downtime more quickly, including by applying AI to scenarios such as anomaly detection and fault localization.

● Treat log data as the core: logs can reconstruct the facts once they are enriched with more dimensions and context (Wide Events).

● Use a cloud-native architecture to provide an easy-to-use, auto-scaling, low-cost system for querying and analyzing massive event data to identify and solve problems.

Observability 2.0 advocates an event-centric model with wide key-value pairs and structured events, supporting multi-dimensional and high-granularity data representation, so that system states and behaviors can be accurately described and queried. Therefore, the selection of an observability system should focus on:

● Storage system: handles massive real-time data streams with low latency and high write throughput, while supporting storage and accelerated queries for high-dimensional and structured data.

● Computing system: performs billion-level data statistics within seconds for real-time analysis. The system supports elastic real-time computing capabilities and dynamically associates data from multiple sources and dimension tables to process complex events.

The data pipeline is an important component of the computing system, and its job is to produce high-quality Wide Events. It works like a refinery that turns crude oil into fuel, bitumen, and liquefied petroleum gas.
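To make the refinery idea concrete, here is a minimal Python sketch of a pipeline step that turns a raw log line into a flat, enriched event. The log format, the `SERVICE_DIM` dimension table, and the function name `to_wide_event` are all assumptions made for illustration; this is not how SLS implements its pipeline.

```python
import json
import re

# Hypothetical dimension table mapping a service name to ownership metadata.
SERVICE_DIM = {
    "checkout": {"team": "payments", "tier": "critical"},
    "search": {"team": "discovery", "tier": "standard"},
}

# Hypothetical raw log format: "<timestamp> <service> <level> <json payload>"
LINE_RE = re.compile(
    r"^(?P<ts>\S+) (?P<service>\S+) (?P<level>\S+) (?P<payload>\{.*\})$"
)


def to_wide_event(raw_line):
    """Turn one raw log line into a flat, enriched event, or None if malformed."""
    match = LINE_RE.match(raw_line)
    if match is None:
        return None  # abnormal data is filtered out
    # Field extraction and normalization (level is upper-cased).
    event = {
        "ts": match["ts"],
        "service": match["service"],
        "level": match["level"].upper(),
    }
    try:
        payload = json.loads(match["payload"])
    except json.JSONDecodeError:
        return None
    event.update(payload)  # promote payload keys to top-level dimensions
    event.update(SERVICE_DIM.get(event["service"], {}))  # dimension table enrichment
    return event


raw = '2024-11-01T00:00:01Z checkout error {"status": 502, "latency_ms": 1840, "trace_id": "abc123"}'
print(to_wide_event(raw))
```

The resulting event carries the request facts (status, latency, trace ID) and the ownership context (team, tier) in one record, which is the property that makes Wide Events convenient to query.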

Simple Log Service (SLS) is a core product of the Alibaba Cloud Observability portfolio. It provides fully managed observability data services. Using o11y 2.0 as a starting point, this article reviews the evolution of the SLS observability data pipeline and shares some reflections on it.

SLS launched the data processing feature in 2019. We have been promoting the upgrade of data pipelines since 2024. What changes has the evolution brought to enterprise users? The author summarizes with the words "more, faster, better, and cheaper", which represent both achievements and aspirations.

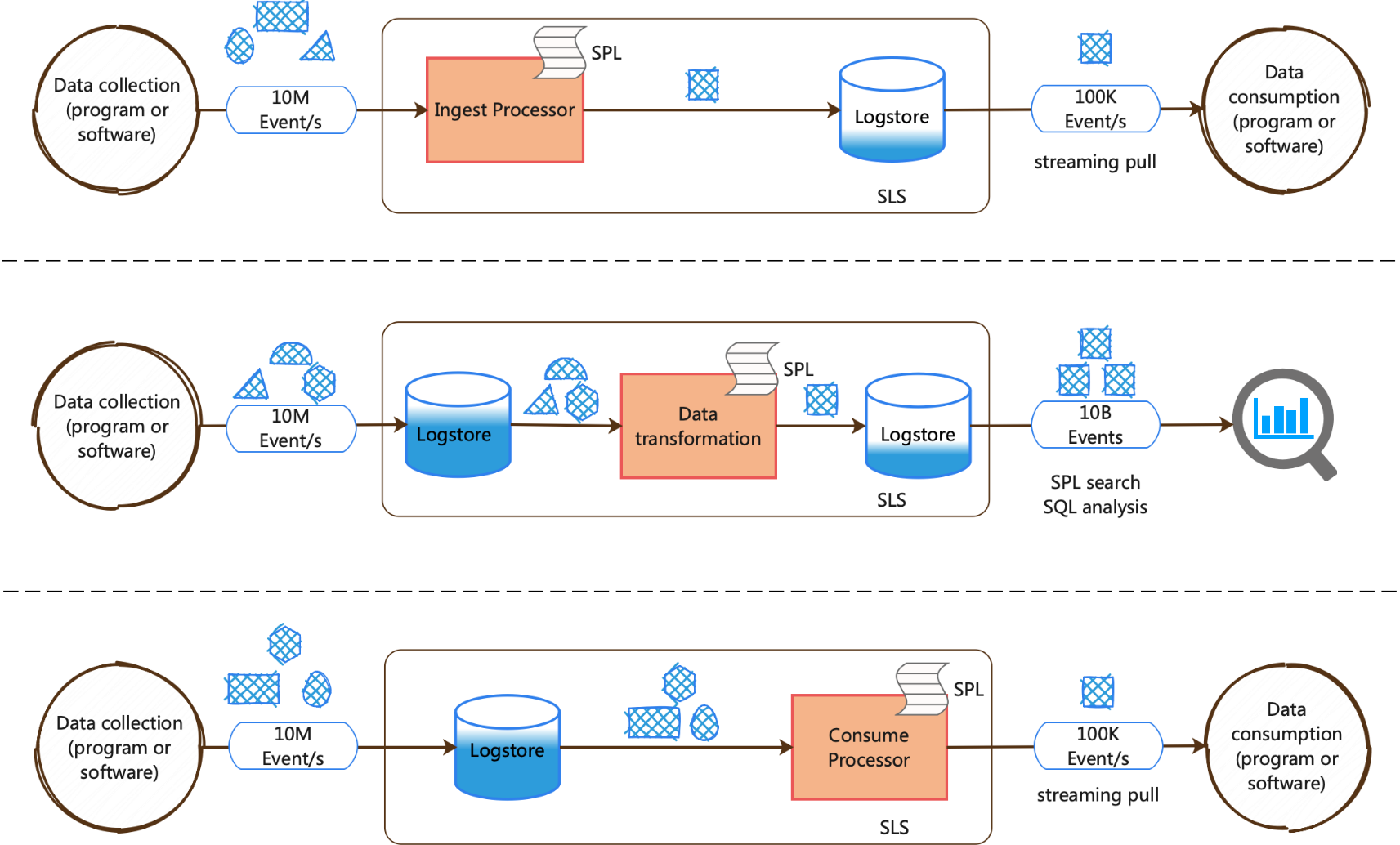

The upgraded SLS data pipeline service provides three forms: the ingest processor, the consumption processor, and data processing.

Do we really need so many forms? At the current development stage, these three forms meet reasonable needs in terms of scenario applicability, fault tolerance, cost, and ecosystem integration, each shouldering the mission of solving the problems in their respective scenarios.

| Feature | Ingest Processor | Consumption Processor | Data Processing |
| --- | --- | --- | --- |
| Usage Form | Users maintain the ingest client. SLS processes and stores data before responding to the client. | Users maintain the consumption client. After receiving the request, SLS reads data for processing and then responds to the client. | SLS is a fully managed service that reads data from the source database in streaming mode and writes data to the destination database after processing. |
| SPL Operator Support | Partially supported<br />Suitable for simple filtering and preprocessing scenarios | Partially supported<br />Suitable for simple filtering and preprocessing scenarios | Completely supported<br />Capabilities such as complex computing, lookup, and multi-target distribution |
| Computing Fault Tolerance | Medium<br />1. If the processing fails, users can upload the original text field.<br />2. Complex computing may increase the time spent on writing requests on the client. (hundreds of milliseconds level) | High<br />1. Based on persistent data, computing can be done repeatedly.<br />2. Complex computing may increase the time spent on reading requests on the client. (hundreds of milliseconds level) | High<br />1. Based on persistent data, computing can be done repeatedly.<br />2. Support SLB, shard discovery, and automatic retry on exceptions. |
| Data Scenario Scalability | Usage scenarios are relatively fixed. | Data is written in the original format and can be processed individually in various consumption scenarios. | |
| Computing Cost | Same | | |
| Storage Cost | Store the result data after computation. | Store the original text data and the result data after computation.<br />The cost of storing the original data for one day is approximately equal to that of computing the data once. | |

Data processing is a fully managed service, and the architecture is upgraded. Ingest and consumption processors are newly introduced features, offering more choices for upstream and downstream producers and consumers.

Take the consumption processor as an example. Flink is one of its most important ecosystem integrations, and many big data customers have adopted it in scenarios such as filter pushdown and cross-region data pulling and computing.

Is performance the most important factor for log ETL? Absolutely.

First, observability scenarios have real-time requirements: the value of data differs greatly depending on whether it is seconds or hours old. Whether for traditional alerting and exception log search, or for root cause analysis and time series forecasting, real-time data is indispensable. A pipeline built on streaming data is a prerequisite for millisecond-level data freshness.

Second, the tasks handled by log ETL are complex. Analysts spend 60% of their time preparing high-quality data through field extraction, abnormal data filtering, data normalization, and dimension table enrichment. The Wide Events advocated by observability 2.0 also raise the bar for ETL performance, because they involve associating more context and processing larger events. Log ETL therefore needs higher performance to run more complex computations on more data.

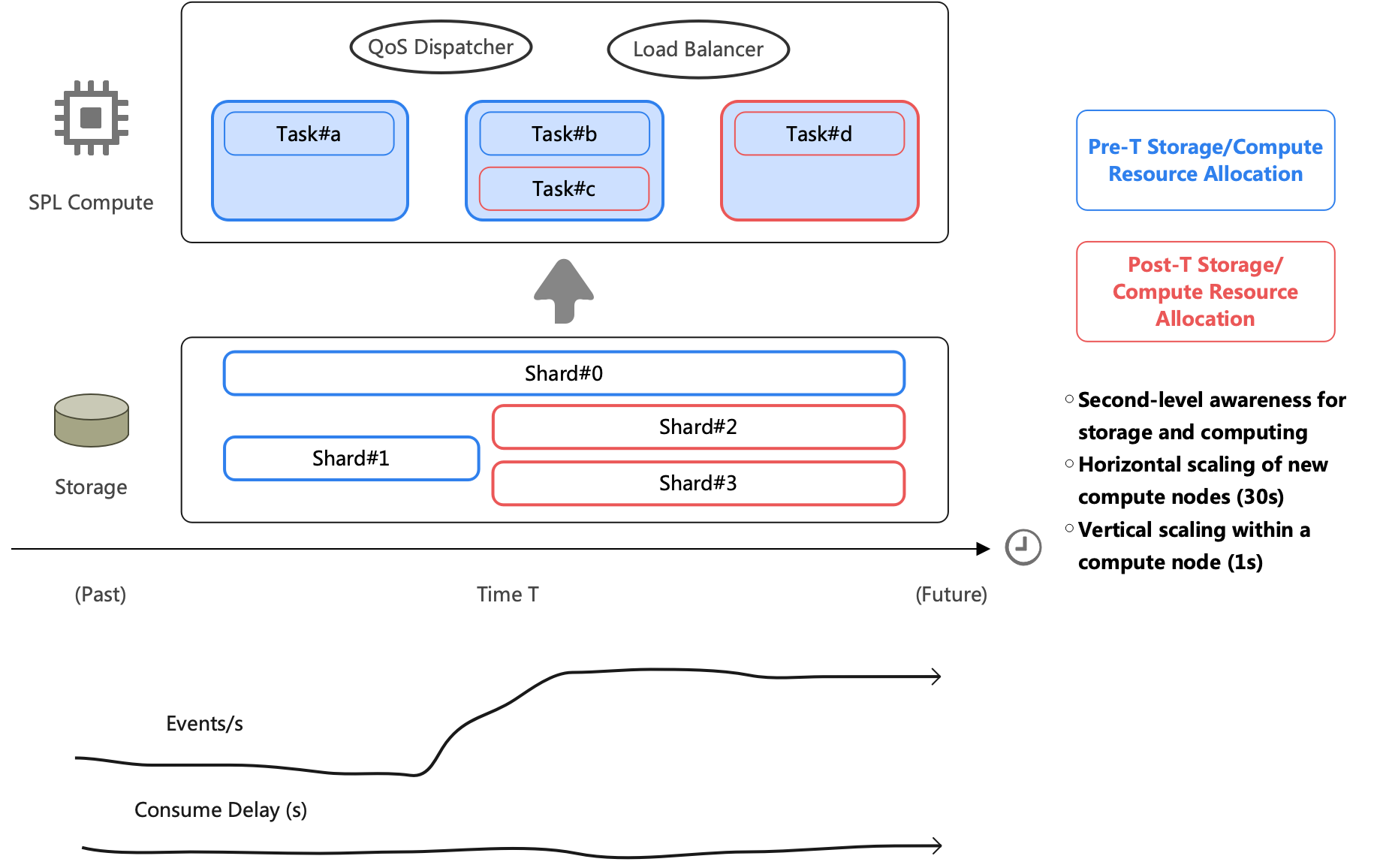

SLS uses the SPL engine as the kernel in the log pipeline, with advantages including column-oriented calculation, SIMD acceleration, and implementation in C++. Based on the distributed architecture of the SPL engine, we have redesigned an auto scaling mechanism, not only by instance (Kubernetes pod or service CU) granularity, but also by DataBlock granularity (MB level).
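As an illustration of why a finer scheduling unit helps, the following Python sketch splits a stream of records into size-capped blocks and fans them out to a worker pool. It is only a toy model under stated assumptions: the 1 MB cap, the function names, and the placeholder computation are hypothetical, and the sketch says nothing about how the SPL engine is actually implemented.

```python
from concurrent.futures import ThreadPoolExecutor

BLOCK_BYTES = 1024 * 1024  # assumed MB-level block size


def split_into_blocks(records, max_bytes=BLOCK_BYTES):
    """Group byte-string records into blocks whose total size stays within max_bytes."""
    block, size = [], 0
    for record in records:
        if block and size + len(record) > max_bytes:
            yield block
            block, size = [], 0
        block.append(record)
        size += len(record)
    if block:
        yield block


def process_block(block):
    """Placeholder computation: count records that contain b'ERROR'."""
    return sum(1 for record in block if b"ERROR" in record)


def process_all(records, workers=4, max_bytes=BLOCK_BYTES):
    """Fan blocks out to a worker pool; each block is an independent unit of work."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(process_block, split_into_blocks(records, max_bytes)))


records = [b"ERROR disk full" if i % 10 == 0 else b"INFO ok" for i in range(1000)]
print(process_all(records, max_bytes=1024))
```

Because every block can be processed on its own, a scheduler can add or remove workers between blocks rather than waiting for a whole instance to start, which is the intuition behind scaling at block granularity.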

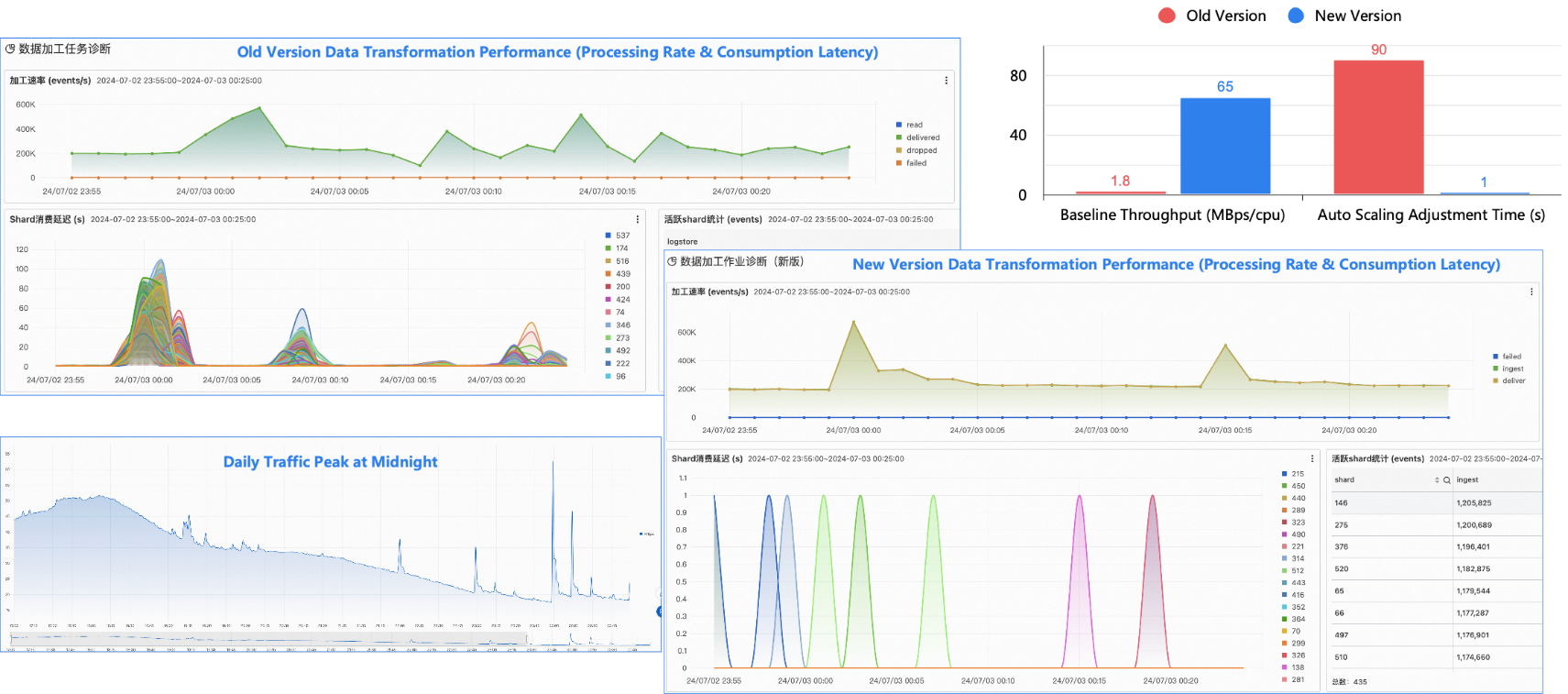

The following figure shows a real logging scenario. A write burst occurs at 00:00 every day, and the write rate doubles within a few seconds. The old version of data processing performs poorly in such bursts, incurring a two-minute latency at peak. The new version significantly improves CPU throughput: the processing rate rises in step with the log generation rate, keeping latency within 1 second.

Through horizontal scaling, in simple filtering scenarios, the new processing service currently handles a maximum single-job traffic of 1 PB/day (original size).

User experience is a rather subjective matter. SLS focuses on two improvements around SPL:

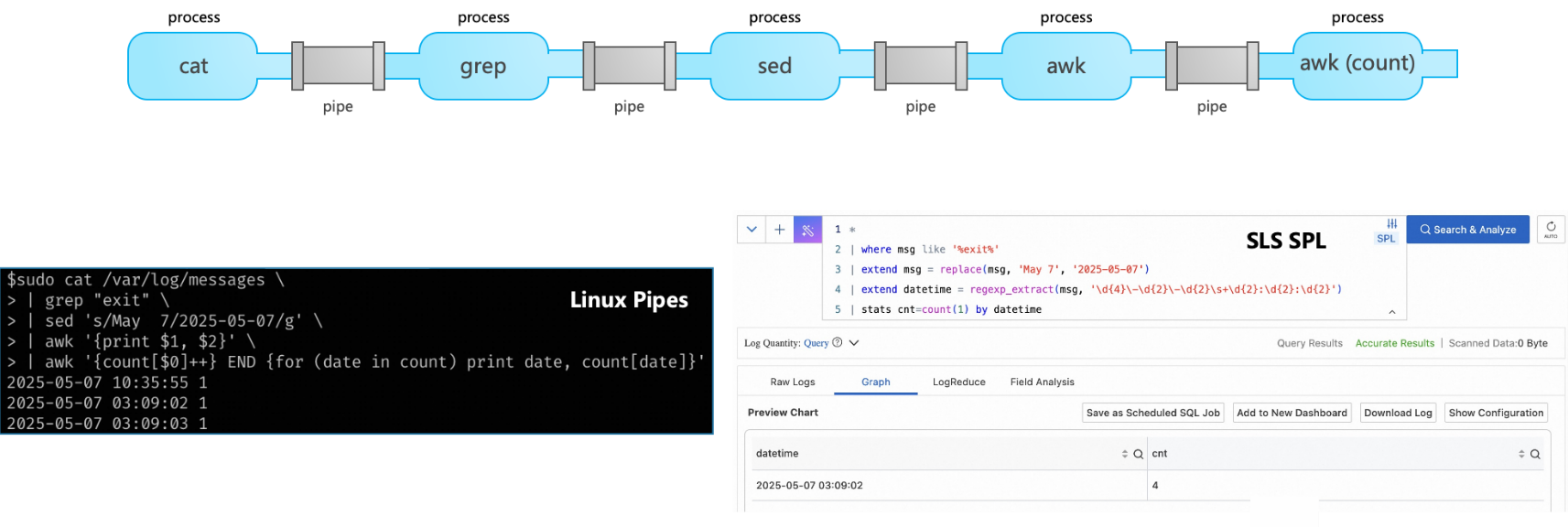

● Reduced language learning cost: At the expression level, SPL syntax fully reuses the hundreds of functions provided by SLS SQL. This offers more comprehensive capabilities than the old Python-DSL and means that users who already know SQL need to consult the manual less often. SQL requires a fixed data schema, which is not flexible enough for logging scenarios. In contrast, SPL adds schema-free instructions (for example, parse-json expands an unspecified number of subfields to the first layer).

● Progressive low-code development: SPL draws on familiar Linux commands (grep/awk/sort) and pipes, a style that many observability vendors (such as Splunk, Azure Monitor, and Grafana) have long used. This year, Google extended SQL with a pipeline syntax, prompting some vendors to adopt similar approaches in the general analysis field.
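The schema-free expansion described for parse-json can be pictured with a small Python emulation. This is a sketch of the idea only, not the SPL engine's behavior: the function name `expand_json_field` is hypothetical, and details such as dropping the source field and leaving nested objects as they are were chosen for the example.

```python
import json


def expand_json_field(event, field):
    """Promote the top-level keys of a JSON-object string field to event fields."""
    try:
        parsed = json.loads(event.get(field, ""))
    except (TypeError, json.JSONDecodeError):
        return dict(event)  # leave the event untouched if the field is not valid JSON
    if not isinstance(parsed, dict):
        return dict(event)  # only JSON objects have subfields to expand
    expanded = {k: v for k, v in event.items() if k != field}
    expanded.update(parsed)  # no schema is declared up front; keys come from the data
    return expanded


event = {"host": "web-01", "content": '{"status": 502, "latency_ms": 1840, "user": {"id": 7}}'}
print(expand_json_field(event, "content"))
```

The number and names of the resulting fields depend entirely on each log, which is what a fixed SQL schema cannot express conveniently.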

It is worth mentioning that apart from being used in real-time query and analysis scenarios, SPL can be used for ingest processors, consumption processors, new data processing, and even Logtail.

For example, if an SPL data preprocessing statement works well on Logtail but requires higher horizontal scalability without consuming resources in the machine nodes where the business resides, you can simply copy the SPL statement to the new version of data processing and save it to achieve fully managed data processing with the same effect.

After the SPL engine achieved significant performance improvement over the previous Python-DSL engine, SLS lowered the pricing of the new version of the processing to one-third of that of the previous version.

From a TCO (Total Cost of Ownership) perspective, the savings go beyond computing costs. Large enterprises incur two additional costs when building data pipelines:

● Storage shard costs: Storage shards occupy physical storage and are often charged a reserved fee (or affect the service limit of self-managed software). If the computing performance is insufficient, you can increase the number of shards (to enhance the computing concurrency) or optimize the processing logic to improve the performance. Adding shards is the most common solution.

● Resource management costs: Expanding the shards of a Logstore introduces emergency O&M costs. When peak traffic is more than twice the trough, shard expansion across numerous Logstores can easily lead to chaos. In addition, for extremely complex ETL logic (more than dozens of lines per job), insufficient performance may force you to split some logic into new jobs, which makes Logstores and jobs harder to manage.

SLS supports consumption integration with a variety of software (such as Flink, Spark, and Flume) and services (such as DataWorks, OSS, and MC). However, custom business requirements still require writing code. This development often repeats the same logic, and the new code takes significant time and manpower to make efficient and correct.

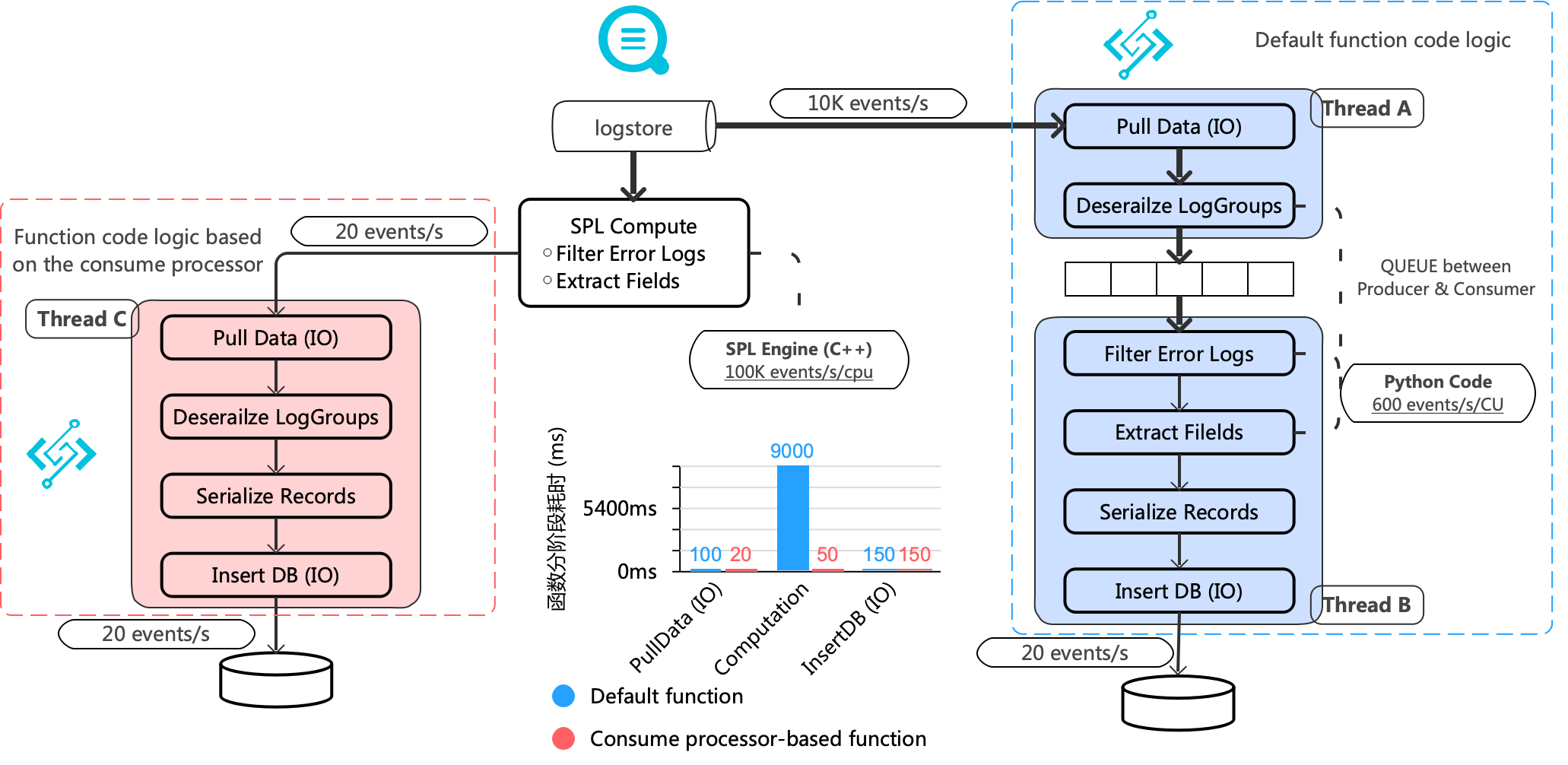

Take the integration of SLS and Function Compute (FC) as an example. The requirement is to write a function on FC that reads data from SLS, filters for error logs (about 2‰ of the data), extracts structured fields from them, and inserts the results into a database.

The default method is full consumption: all I/O and computing are done in Python code (the blue part of the figure above).

It is much simpler to do the same thing through the SLS consumption processor (as shown in the red part above):

● Filtering and field extraction are commonly used logic, which is pushed down to SLS by using low-code SPL, greatly saving time on Python code development and testing.

● Filtering and field extraction are often CPU-intensive operations. SPL computing has a large performance advantage over hastily written Python code.
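The difference between the two approaches can be sketched in Python. In this hedged example the log format, the regular expression, and the function names are hypothetical, and an in-memory SQLite database stands in for the real target database; the SLS and FC client calls are deliberately left out.

```python
import re
import sqlite3

# Hypothetical error-log format; real logs will differ.
ERROR_RE = re.compile(r'level=ERROR code=(?P<code>\d+) msg="(?P<msg>[^"]*)"')


def full_consumption(raw_logs, conn):
    """Full consumption: every raw log is pulled and scanned inside the function."""
    rows = []
    for line in raw_logs:
        match = ERROR_RE.search(line)
        if match:
            rows.append((int(match["code"]), match["msg"]))
    conn.executemany("INSERT INTO errors (code, msg) VALUES (?, ?)", rows)
    return len(rows)


def with_pushdown(structured_errors, conn):
    """With filtering and extraction pushed down, the function only writes results."""
    rows = [(int(e["code"]), e["msg"]) for e in structured_errors]
    conn.executemany("INSERT INTO errors (code, msg) VALUES (?, ?)", rows)
    return len(rows)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE errors (code INTEGER, msg TEXT)")

# 1 error in 500 logs, matching the 2 per mille ratio of the example.
raw_logs = ['level=INFO msg="ok"'] * 499 + ['level=ERROR code=502 msg="upstream timeout"']
inserted = full_consumption(raw_logs, conn)
print(inserted, "of", len(raw_logs), "logs were errors")
```

In the full consumption path the function receives and scans all 500 logs to keep one. With pushdown, it would receive only the already structured error record, so both the transferred data and the Python work shrink accordingly.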

In a customer scenario with a high filter ratio, the end-to-end function execution time for processing 10 MB of data dropped from 10-15 seconds to 300 milliseconds, which resolved the consumption latency issue for the business. In addition, because the function is billed in pay-by-request mode, the shorter execution time brings the function cost back to a reasonable level.

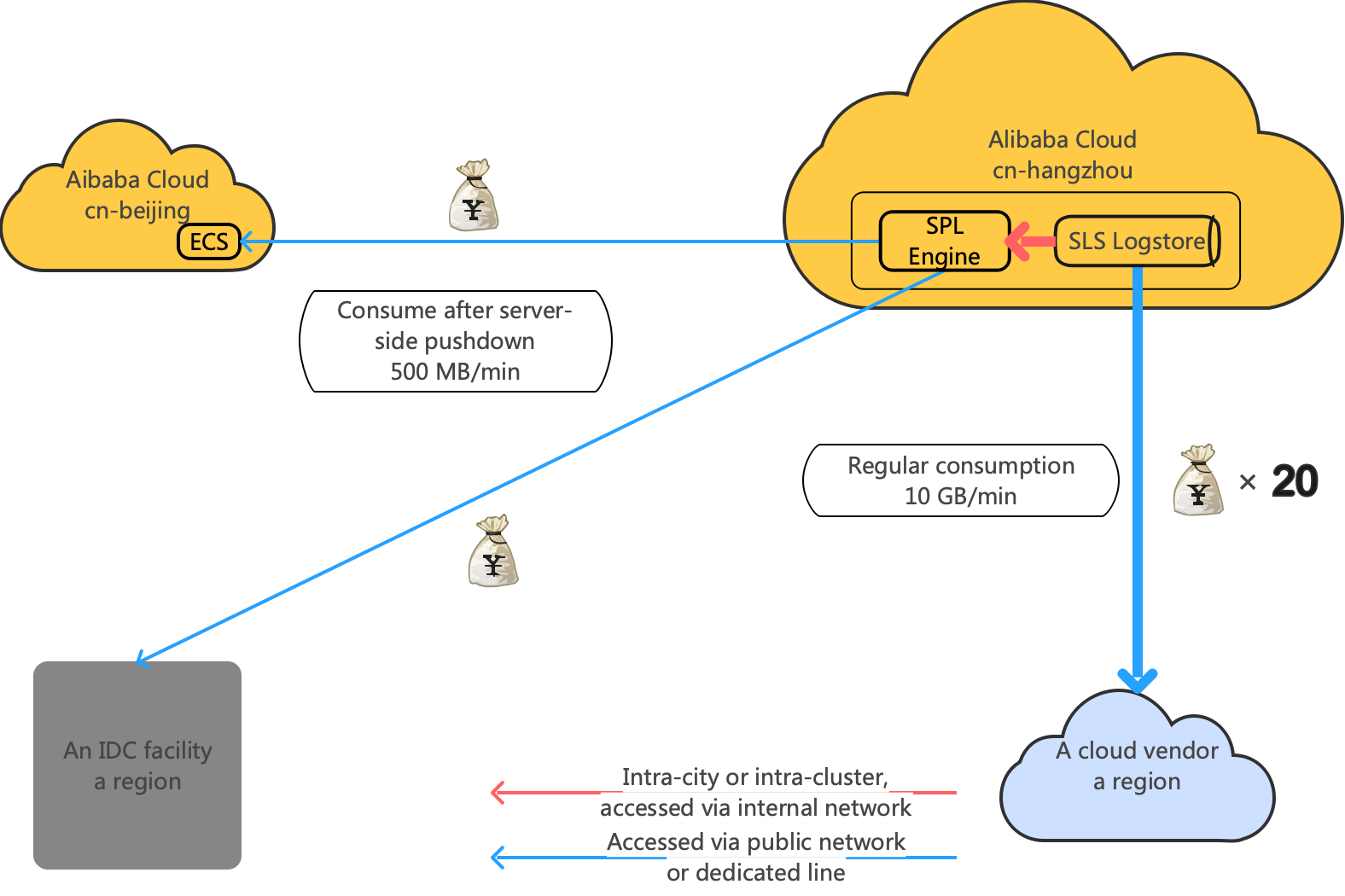

Compared with the computing cost, the network cost (Internet and leased line) introduced by the pipeline is often the larger expense. This is common in three scenarios:

● Multi-region deployment, where services run in multiple Alibaba Cloud regions and need to access data across regions

● Hybrid cloud architecture, where some cloud data needs to be transmitted to the data center

● Multi-cloud architecture

How can we reduce high network costs? The first step is to enable data compression during transmission and choose a space-efficient compression algorithm (for example, ZSTD strikes a good balance between data size and CPU overhead). Beyond that, we can apply business-specific optimizations.
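The effect of compression on log traffic is easy to check with a short Python sketch. Note the assumptions: the access logs are synthetic, and the standard-library `zlib` module is used as a stand-in because ZSTD bindings are not part of older Python standard libraries. Actual ratios depend on the data and the algorithm.

```python
import json
import zlib

# Synthetic access logs; repetitive fields are typical of log data and compress well.
logs = [
    {
        "time_local": f"2024-11-01T00:00:{i % 60:02d}",
        "request_uri": f"/api/items/{i % 50}",
        "status": "200" if i % 20 else "500",
        "user_agent": "Mozilla/5.0",
        "client_ip": f"10.0.0.{i % 255}",
    }
    for i in range(2000)
]
payload = "\n".join(json.dumps(log) for log in logs).encode("utf-8")

# Higher levels trade more CPU time for a smaller payload.
for level in (1, 6, 9):
    compressed = zlib.compress(payload, level)
    assert zlib.decompress(compressed) == payload  # compression is lossless
    ratio = len(compressed) / len(payload)
    print(f"level {level}: {len(payload)} -> {len(compressed)} bytes ({ratio:.1%} of original)")
```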

Logs have a two-dimensional structure: rows (each log) and columns (the fields of a single log). Transmitting only the necessary data across regions keeps traffic costs affordable. The SLS consumption processor is recommended for the following two optimizations:

● Column projection: * | project time_local, request_uri, status, user_agent, client_ip

● Row filtering: * | where status != '200'
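To see how much traffic the two statements above could save, the following Python sketch emulates column projection and row filtering on synthetic events and compares the serialized sizes. The event contents and helper names are assumptions for illustration; in practice the SPL statements run inside SLS before the data crosses the network.

```python
import json

KEEP = ["time_local", "request_uri", "status", "user_agent", "client_ip"]


def project(events, fields):
    """Emulates `* | project ...`: keep only the listed columns."""
    return [{f: e[f] for f in fields if f in e} for e in events]


def where_status_not_200(events):
    """Emulates `* | where status != '200'`: keep only non-200 rows."""
    return [e for e in events if e.get("status") != "200"]


def wire_size(events):
    """Approximate transfer size as the total length of the JSON-encoded events."""
    return sum(len(json.dumps(e).encode("utf-8")) for e in events)


# Synthetic events: 1 in 50 has a non-200 status.
events = [
    {
        "time_local": f"2024-11-01T00:00:{i % 60:02d}",
        "request_uri": f"/api/items/{i % 50}",
        "status": "200" if i % 50 else "502",
        "user_agent": "Mozilla/5.0",
        "client_ip": f"10.0.0.{i % 255}",
        "request_body": "x" * 200,  # bulky field the remote consumer does not need
        "upstream_addr": "192.168.1.10:8080",
    }
    for i in range(1000)
]

print("original :", wire_size(events), "bytes")
print("projected:", wire_size(project(events, KEEP)), "bytes")
print("filtered :", wire_size(where_status_not_200(events)), "bytes")
```

Projection removes bytes from every row, while filtering removes whole rows, so the two can be combined when the consumer needs only a few fields of a few logs.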

Beyond observability 2.0, the AI wave is driving the implementation of large model tools in production, and storage and computing of observability big data are their cornerstone. We believe that SPL-based observability pipelines boast strong advantages in schema-free data processing, Wide Events processing, real-time high performance, and flexible scalability. We are continuously enhancing the capabilities of SPL pipelines. Please stay tuned.
