By Che Yang, nicknamed Biran at Alibaba.

This is part of a series of articles that uses Alibaba Cloud Container Service as an example to help you get started with Kubeflow Pipelines.

Machine learning engineering is complex: it faces the usual problems of software development plus problems that stem from being data-driven. As a result, workflows grow long, data versions get out of control, experiments are hard to trace, results are hard to reproduce, and iterating on a model is costly. To address these problems, many enterprises have built internal machine learning platforms to manage the machine learning lifecycle, such as Google's TensorFlow Extended, Facebook's FBLearner Flow, and Uber's Michelangelo. However, because these platforms depend on each company's internal infrastructure, they cannot be fully open-sourced. All of them are built around the machine learning workflow, a framework that lets data scientists flexibly define their own machine learning pipelines and reuse existing data processing and model training capabilities to better manage the machine learning lifecycle.

Google has extensive experience in building machine learning workflow platforms. Its TensorFlow Extended platform supports core Google businesses such as search, translation, and video playback. More importantly, Google has a deep understanding of engineering efficiency in machine learning. At the end of 2018, Google's Kubeflow team open-sourced Kubeflow Pipelines, which is designed in the same way as Google's internal TensorFlow Extended platform. The only difference is that Kubeflow Pipelines runs on Kubernetes, whereas TensorFlow Extended runs on Borg, Google's internal cluster manager.

The Kubeflow Pipelines platform consists of the following components:

You can use Kubeflow Pipelines to achieve the following goals:

Given these capabilities, you may be eager to try Kubeflow Pipelines. However, to use Kubeflow Pipelines in China, you must overcome the following challenges:

To let users in China install Kubeflow Pipelines, the Alibaba Cloud Container Service team provides a Kustomize-based deployment solution. Unlike the basic Kubeflow services, Kubeflow Pipelines depends on stateful services such as MySQL and Minio, so their data must be persisted and backed up. In this example, the data persistence solution uses standard Alibaba Cloud SSDs, which are created automatically to store the MySQL data and the Minio data separately. With this solution, you can deploy the latest version of Kubeflow Pipelines on Alibaba Cloud offline.

Install Kustomize. On Linux or macOS, run the following commands:

```shell
opsys=linux  # or darwin, or windows
curl -s https://api.github.com/repos/kubernetes-sigs/kustomize/releases/latest |\
  grep browser_download |\
  grep "$opsys" |\
  cut -d '"' -f 4 |\
  xargs curl -O -L
mv kustomize_*_${opsys}_amd64 /usr/bin/kustomize
chmod u+x /usr/bin/kustomize
```

On Windows, you can download and install kustomize_2.0.3_windows_amd64.exe.
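On Linux or macOS, the opsys value used in the commands above can also be derived from uname -s instead of being set by hand. The following is a minimal sketch; detect_opsys is a hypothetical helper name, not part of Kustomize or the kubeflow-aliyun repository, and it only covers the linux and darwin values that the download commands filter on.

```shell
#!/bin/sh
# detect_opsys is a hypothetical helper, not part of Kustomize.
# It maps the kernel name reported by `uname -s` to the opsys value
# used by the Kustomize download commands (linux or darwin).
detect_opsys() {
  # An explicit kernel name can be passed for testing; otherwise use the local one.
  kernel="${1:-$(uname -s)}"
  case "$kernel" in
    Linux)  echo linux ;;
    Darwin) echo darwin ;;
    *)
      echo "unsupported operating system: $kernel" >&2
      return 1
      ;;
  esac
}
```

For example, run opsys=$(detect_opsys) in place of opsys=linux before the curl pipeline. Any other kernel name makes the function print an error and return a non-zero status, so a script can stop early instead of downloading the wrong binary.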

Log on to the Kubernetes cluster through Secure Shell (SSH). For more information about this step, click here.

Download the source code.

```shell
yum install -y git
git clone --recursive https://github.com/aliyunContainerService/kubeflow-aliyun
```

Specify the security configurations.

Configure a Transport Layer Security (TLS) certificate. If you do not have a TLS certificate, run the following commands to generate a self-signed one:

```shell
yum install -y openssl
domain="pipelines.kubeflow.org"
openssl req -x509 -nodes -days 365 -newkey rsa:2048 \
  -keyout kubeflow-aliyun/overlays/ack-auto-clouddisk/tls.key \
  -out kubeflow-aliyun/overlays/ack-auto-clouddisk/tls.crt \
  -subj "/CN=$domain/O=$domain"
```

If you already have a TLS certificate, copy its private key and certificate to kubeflow-aliyun/overlays/ack-auto-clouddisk/tls.key and kubeflow-aliyun/overlays/ack-auto-clouddisk/tls.crt, respectively.

Set a password for the admin account.

```shell
yum install -y httpd-tools
htpasswd -c kubeflow-aliyun/overlays/ack-auto-clouddisk/auth admin
```

htpasswd prompts you to enter the password twice:

```
New password:
Re-type new password:
Adding password for user admin
```

Use Kustomize to generate a .yaml deployment file.
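Before running the build, you may want to confirm that the files created in the previous steps are in place. The sketch below is a hypothetical pre-flight check (check_security_files is not part of the kubeflow-aliyun repository). It assumes the overlay path used above and only tests that tls.key, tls.crt, and auth exist and are non-empty, and that auth contains an entry for the admin user; it does not validate the certificate itself.

```shell
#!/bin/sh
# check_security_files is a hypothetical pre-flight check.
# Usage: check_security_files [OVERLAY_DIR]
# Returns 0 only if tls.key, tls.crt, and auth are present and non-empty
# and auth contains an entry for the admin user.
check_security_files() {
  dir="${1:-kubeflow-aliyun/overlays/ack-auto-clouddisk}"
  missing=0
  for f in tls.key tls.crt auth; do
    if [ ! -s "$dir/$f" ]; then
      echo "missing or empty: $dir/$f" >&2
      missing=1
    fi
  done
  # htpasswd writes one "user:hash" line per user.
  if [ -s "$dir/auth" ] && ! grep -q '^admin:' "$dir/auth"; then
    echo "no admin entry in $dir/auth" >&2
    missing=1
  fi
  return "$missing"
}
```

Run check_security_files from the directory where you cloned the repository. It reports each problem on standard error and returns a non-zero status if any check fails, so it can guard the kustomize build command in a script.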

```shell
cd kubeflow-aliyun/
kustomize build overlays/ack-auto-clouddisk > /tmp/ack-auto-clouddisk.yaml
```

Check the region and zone of your Kubernetes cluster, and replace the default region (cn-beijing) and zone (cn-beijing-e) in the generated file accordingly. For example, if your cluster is in the cn-hangzhou-g zone, run the following commands:

```shell
sed -i.bak 's/regionid: cn-beijing/regionid: cn-hangzhou/g' \
  /tmp/ack-auto-clouddisk.yaml
sed -i.bak 's/zoneid: cn-beijing-e/zoneid: cn-hangzhou-g/g' \
  /tmp/ack-auto-clouddisk.yaml
```

We recommend that you check whether the /tmp/ack-auto-clouddisk.yaml file is updated.
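The two sed commands above can be wrapped in a small function that also prints the resulting lines, so the recommended check becomes part of the same step. This is a minimal sketch: set_region_zone is a hypothetical name, and it assumes, as the commands above do, that the generated file still contains the default values regionid: cn-beijing and zoneid: cn-beijing-e.

```shell
#!/bin/sh
# set_region_zone is a hypothetical helper that wraps the two sed commands
# for the region and zone. Usage: set_region_zone FILE REGION ZONE
set_region_zone() {
  file="$1" region="$2" zone="$3"
  # Replace the default region and zone in one pass; sed keeps a .bak copy.
  sed -i.bak \
    -e "s/regionid: cn-beijing/regionid: ${region}/g" \
    -e "s/zoneid: cn-beijing-e/zoneid: ${zone}/g" \
    "$file" || return 1
  # Print the updated lines so that the change can be checked by eye.
  grep -E '(regionid|zoneid):' "$file"
}
```

For the cn-hangzhou-g example, run set_region_zone /tmp/ack-auto-clouddisk.yaml cn-hangzhou cn-hangzhou-g and confirm that every printed regionid and zoneid line shows the new values.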

Change the container image registry from gcr.io to registry.aliyuncs.com.

```shell
sed -i.bak 's/gcr\.io/registry.aliyuncs.com/g' \
  /tmp/ack-auto-clouddisk.yaml
```

Again, we recommend that you check whether the /tmp/ack-auto-clouddisk.yaml file is updated.
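One way to check that the registry change took effect is to count the lines that still mention gcr.io; after the sed command above, the count should be 0. The helper name count_gcr_refs is hypothetical, and the check only looks for the literal string gcr.io. It does not verify that the rewritten image paths actually exist in registry.aliyuncs.com.

```shell
#!/bin/sh
# count_gcr_refs is a hypothetical helper: it prints the number of lines in
# FILE that still reference gcr.io (0 means every such line was rewritten).
count_gcr_refs() {
  [ -r "$1" ] || { echo "cannot read $1" >&2; return 2; }
  # grep -c prints 0 and exits with status 1 when nothing matches,
  # so the exit status is ignored and only the printed count is used.
  grep -c 'gcr\.io' "$1" || true
}
```

For example, count_gcr_refs /tmp/ack-auto-clouddisk.yaml should print 0 once the rewrite is complete.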

Adjust the disk size, for example, from 100Gi to 200Gi.

```shell
sed -i.bak 's/storage: 100Gi/storage: 200Gi/g' \
  /tmp/ack-auto-clouddisk.yaml
```

Validate the Kubeflow Pipelines .yaml file without creating any resources:

```shell
kubectl create --validate=true --dry-run=true -f /tmp/ack-auto-clouddisk.yaml
```

On kubectl 1.18 and later, --dry-run=true is deprecated; use --dry-run=client instead.

Deploy the Kubeflow Pipelines service through kubectl.

```shell
kubectl create -f /tmp/ack-auto-clouddisk.yaml
```

Let's look at how to access the Kubeflow Pipelines service. Here, we use an Ingress to expose the Kubeflow Pipelines service. Run the following command to find its address:

```shell
kubectl get ing -n kubeflow
```

```
NAME             HOSTS   ADDRESS           PORTS     AGE
ml-pipeline-ui   *       112.124.193.271   80, 443   11m
```

In this example, the IP address of the Kubeflow Pipelines service is 112.124.193.271, so the URL of the Kubeflow Pipelines console is https://112.124.193.271/pipeline/.

Gain access to the Kubeflow Pipelines console.
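If you script this step, the console URL can be built from the kubectl get ing output shown above. The sketch below is hypothetical (console_url is not part of Kubeflow Pipelines or kubectl): it reads that output on standard input, locates the ADDRESS column by its header rather than by position, because some kubectl versions print an extra CLASS column, and prints the /pipeline/ URL for the ml-pipeline-ui Ingress. It assumes the Ingress already has an address; while the ADDRESS field is still empty, the columns shift and the result is not meaningful.

```shell
#!/bin/sh
# console_url is a hypothetical helper.
# Usage: kubectl get ing -n kubeflow | console_url
# Prints https://<ADDRESS>/pipeline/ for the ml-pipeline-ui row and
# exits non-zero if that row is not found.
console_url() {
  awk '
    # Header line: remember which column is ADDRESS.
    NR == 1 { for (i = 1; i <= NF; i++) if ($i == "ADDRESS") col = i; next }
    $1 == "ml-pipeline-ui" && col { print "https://" $col "/pipeline/"; found = 1 }
    END { exit !found }
  '
}
```

For example, kubectl get ing -n kubeflow | console_url prints the same URL as the one given above. An alternative that avoids parsing table output is kubectl get ing ml-pipeline-ui -n kubeflow -o jsonpath='{.status.loadBalancer.ingress[0].ip}', which returns only the IP address.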

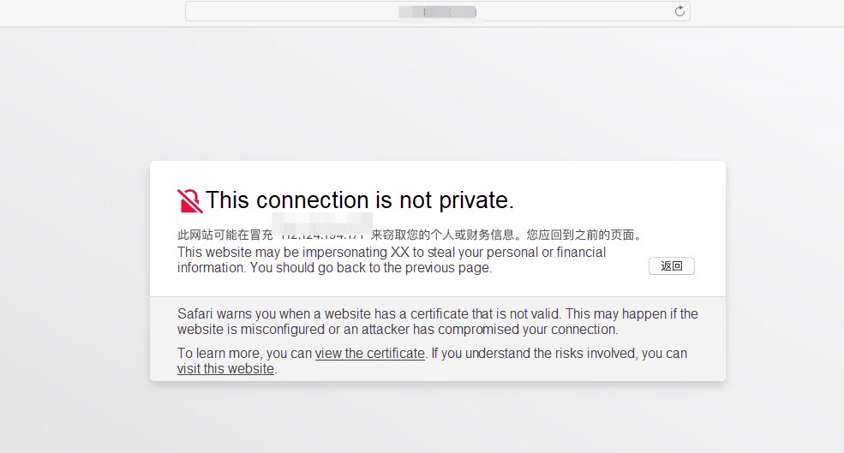

If you are using a self-signed certificate, the browser warns that the connection is not private. Click Advanced to view the details, and then click Visit to proceed to the website.

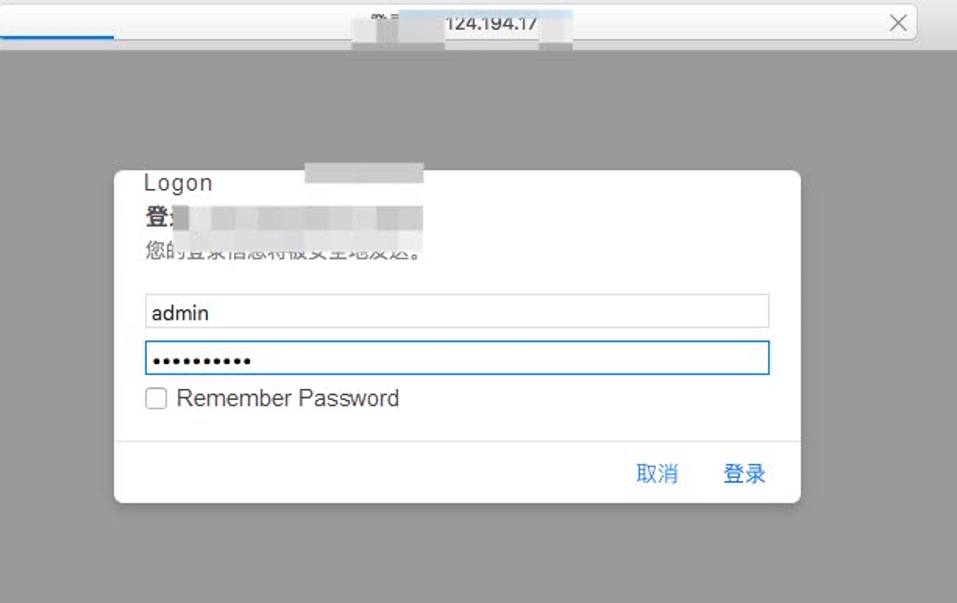

Enter the username admin and the password you specified before.

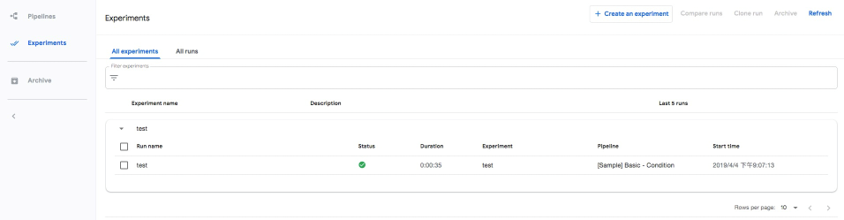

Now, you can manage and run training tasks in the Kubeflow Pipelines console.

1. Why do we use standard Alibaba Cloud SSDs in this example?

Standard Alibaba Cloud SSDs have several advantages. For example, you can schedule snapshots of the SSDs to periodically back up the metadata of Kubeflow Pipelines and prevent data loss.

2. How can I back up the data of a disk?

If you want to back up the data stored in a disk, you can manually create snapshots of the disk or apply an automatic snapshot creation policy to the disk to automatically create snapshots on a schedule.

3. How can I undeploy Kubeflow Pipelines?

To undeploy Kubeflow Pipelines, run the following command:

```shell
kubectl delete -f /tmp/ack-auto-clouddisk.yaml
```

4. How can I use an existing disk as database storage instead of automatically creating a disk?

For a detailed answer to this question, click here.

This article introduced Kubeflow Pipelines, described some of the problems it solves, and walked through using Kustomize to quickly deploy Kubeflow Pipelines for machine learning on Alibaba Cloud. In a future article, we will show how to develop a complete machine learning workflow based on Kubeflow Pipelines.
