In a deep learning system, trained models provide services through the Serving service. This document describes how to construct a Serving service that supports auto scaling in a Kubernetes cluster.

By default, Kubernetes uses the Horizontal Pod Autoscaler (HPA) to perform auto scaling based on metrics such as CPU and memory usage. The native Heapster-based HPA module of Kubernetes does not support auto scaling based on GPU metrics, but it does support auto scaling based on custom metrics. You can deploy a Prometheus Adapter as a CustomMetricServer, which exposes Prometheus metrics through a custom metrics API that HPA can call. You can then configure HPA to use GPU metrics as custom metrics for auto scaling.

Note: After the native Heapster-based HPA module is configured to use custom metrics for auto scaling, it will be unable to use CPU and memory metrics for auto scaling.
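For orientation, HPA reads a pod-level custom metric from the aggregated API at a path of the same shape as the one used in the verification step of this document. The helper below is a minimal sketch that only builds that path. The function name `custom_metric_path` is hypothetical and is not part of Kubernetes or the Prometheus Adapter.

```shell
#!/usr/bin/env bash
# Hypothetical helper for illustration only:
# builds the custom metrics API path for a pod-level metric in a namespace.
custom_metric_path() {
  local namespace=$1 metric=$2
  # '*' selects all pods in the namespace; printf does not glob-expand it.
  printf '/apis/custom.metrics.k8s.io/v1beta1/namespaces/%s/pods/*/%s\n' "$namespace" "$metric"
}

custom_metric_path default duty_cycle_current
# Against a live cluster, the path could be passed to kubectl, for example:
#   kubectl get --raw "$(custom_metric_path default duty_cycle_current)"
```

Keeping the namespace and the metric name as parameters makes it easy to check several metrics with the same command.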

Log on to the active nodes and run the following script to generate the Prometheus Adapter certificate.

#!/usr/bin/env bash
set -e
set -o pipefail
set -u

b64_opts='--wrap=0'

# go get -v -u github.com/cloudflare/cfssl/cmd/...

export PURPOSE=metrics
openssl req -x509 -sha256 -new -nodes -days 365 -newkey rsa:2048 -keyout ${PURPOSE}-ca.key -out ${PURPOSE}-ca.crt -subj "/CN=ca"
echo '{"signing":{"default":{"expiry":"43800h","usages":["signing","key encipherment","'${PURPOSE}'"]}}}' > "${PURPOSE}-ca-config.json"

export SERVICE_NAME=custom-metrics-apiserver
export ALT_NAMES='"custom-metrics-apiserver.monitoring","custom-metrics-apiserver.monitoring.svc"'
echo "{\"CN\":\"${SERVICE_NAME}\", \"hosts\": [${ALT_NAMES}], \"key\": {\"algo\": \"rsa\",\"size\": 2048}}" | \
  cfssl gencert -ca=metrics-ca.crt -ca-key=metrics-ca.key -config=metrics-ca-config.json - | cfssljson -bare apiserver

cat <<-EOF > cm-adapter-serving-certs.yaml
apiVersion: v1
kind: Secret
metadata:
  name: cm-adapter-serving-certs
data:
  serving.crt: $(base64 ${b64_opts} < apiserver.pem)
  serving.key: $(base64 ${b64_opts} < apiserver-key.pem)
EOF

kubectl -n kube-system apply -f cm-adapter-serving-certs.yaml

Deploy the Prometheus Adapter as a CustomMetricServer.

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: custom-metrics-apiserver
  name: custom-metrics-apiserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: custom-metrics-apiserver
  template:
    metadata:
      labels:
        app: custom-metrics-apiserver
      name: custom-metrics-apiserver
    spec:
      serviceAccountName: custom-metrics-apiserver
      containers:
      - name: custom-metrics-apiserver
        image: registry.cn-beijing.aliyuncs.com/test-hub/k8s-prometheus-adapter-amd64
        args:
        - --secure-port=6443
        - --tls-cert-file=/var/run/serving-cert/serving.crt
        - --tls-private-key-file=/var/run/serving-cert/serving.key
        - --logtostderr=true
        - --prometheus-url=http://prometheus-svc.kube-system.svc.cluster.local:9090/
        - --metrics-relist-interval=1m
        - --v=10
        - --config=/etc/adapter/config.yaml
        ports:
        - containerPort: 6443
        volumeMounts:
        - mountPath: /var/run/serving-cert
          name: volume-serving-cert
          readOnly: true
        - mountPath: /etc/adapter/
          name: config
          readOnly: true
        - mountPath: /tmp
          name: tmp-vol
      volumes:
      - name: volume-serving-cert
        secret:
          secretName: cm-adapter-serving-certs
      - name: config
        configMap:
          name: adapter-config
      - name: tmp-vol
        emptyDir: {}

---

kind: ServiceAccount
apiVersion: v1
metadata:
  name: custom-metrics-apiserver
---
apiVersion: v1
kind: Service
metadata:
  name: custom-metrics-apiserver
spec:
  ports:
  - port: 443
    targetPort: 6443
  selector:
    app: custom-metrics-apiserver
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: custom-metrics-server-resources
rules:
- apiGroups:
  - custom.metrics.k8s.io
  resources: ["*"]
  verbs: ["*"]

---

apiVersion: v1
kind: ConfigMap
metadata:
  name: adapter-config
data:
  config.yaml: |
    rules:
    - seriesQuery: '{uuid!=""}'
      resources:
        overrides:
          node_name: {resource: "node"}
          pod_name: {resource: "pod"}
          namespace_name: {resource: "namespace"}
      name:
        matches: ^nvidia_gpu_(.*)$
        as: "${1}_over_time"
      metricsQuery: ceil(avg_over_time(<<.Series>>{<<.LabelMatchers>>}[3m]))
    - seriesQuery: '{uuid!=""}'
      resources:
        overrides:
          node_name: {resource: "node"}
          pod_name: {resource: "pod"}
          namespace_name: {resource: "namespace"}
      name:
        matches: ^nvidia_gpu_(.*)$
        as: "${1}_current"
      metricsQuery: <<.Series>>{<<.LabelMatchers>>}

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: custom-metrics-resource-reader
rules:
- apiGroups:
  - ""
  resources:
  - namespaces
  - pods
  - services
  verbs:
  - get
  - list
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: hpa-controller-custom-metrics
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: custom-metrics-server-resources
subjects:
- kind: ServiceAccount
  name: horizontal-pod-autoscaler
  namespace: kube-system

Assign permissions to roles. If you use a namespace other than kube-system, change the value of the namespace parameter in the template.

apiVersion: apiregistration.k8s.io/v1beta1
kind: APIService
metadata:
  name: v1beta1.custom.metrics.k8s.io
  namespace: kube-system
spec:
  service:
    name: custom-metrics-apiserver
    namespace: kube-system # Change this value if a namespace other than kube-system is used.
  group: custom.metrics.k8s.io
  version: v1beta1
  insecureSkipTLSVerify: true
  groupPriorityMinimum: 100
  versionPriority: 100

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: custom-metrics-resource-reader
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: custom-metrics-resource-reader
subjects:
- kind: ServiceAccount
  name: custom-metrics-apiserver
  namespace: kube-system # Change this value if a namespace other than kube-system is used.
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: custom-metrics:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: custom-metrics-apiserver
  namespace: kube-system # Change this value if a namespace other than kube-system is used.
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: custom-metrics-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: custom-metrics-apiserver
  namespace: kube-system

Query the custom metrics API through the APIServer to verify that the Prometheus Adapter has been successfully deployed as a CustomMetricServer.

# kubectl get --raw "/apis/custom.metrics.k8s.io/v1beta1/namespaces/default/pods/*/temperature_celsius_current"
{"kind":"MetricValueList","apiVersion":"custom.metrics.k8s.io/v1beta1","metadata":{"selfLink":"/apis/custom.metrics.k8s.io/v1beta1/namespaces/default/pods/%2A/temperature_celsius_current"},"items":[]}

Modify the controller-manager configuration to use custom metrics for auto scaling. Log on to each of the three active nodes and run the following command to update the HPA setting in the kube-controller-manager manifest.

sed -i 's/--horizontal-pod-autoscaler-use-rest-clients=false/--horizontal-pod-autoscaler-use-rest-clients=true/g' /etc/kubernetes/manifests/kube-controller-manager.yaml

Verify the modified configuration.

# kubectl -n kube-system describe po -l component=kube-controller-manager | grep 'horizontal-pod-autoscaler-use-rest-clients'

--horizontal-pod-autoscaler-use-rest-clients=true

--horizontal-pod-autoscaler-use-rest-clients=true

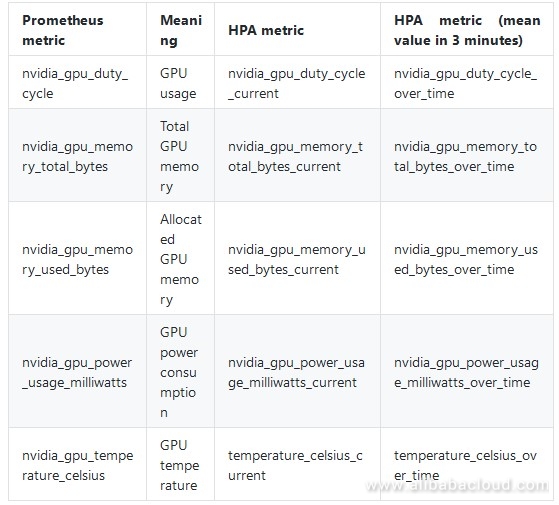

--horizontal-pod-autoscaler-use-rest-clients=trueAfter a Prometheus CustomMetricServer is deployed, use the configMap named adapter-config to configure the metrics that are to be exposed by the Prometheus CustomMetricServer to the APIServer. The following GPU metrics are supported:
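As a rough illustration of what the two rules in adapter-config do, the sketch below mimics the renaming (`^nvidia_gpu_(.*)$` becomes `<suffix>_over_time` and `<suffix>_current`) and the `ceil(avg_over_time(...))` calculation. It is not the adapter's implementation. The series name `nvidia_gpu_duty_cycle` is inferred from the rule pattern and from the `duty_cycle_current` metric used in this document, and the sample values are hypothetical.

```shell
#!/usr/bin/env bash
# Illustration only: mimics the two name rules in adapter-config.
adapter_metric_names() {
  local series=$1
  case $series in
    nvidia_gpu_*)
      local suffix=${series#nvidia_gpu_}   # the part captured by (.*)
      echo "${suffix}_over_time"           # first rule: ceil of the 3-minute average
      echo "${suffix}_current"             # second rule: the series value as is
      ;;
  esac
}

# Integer ceil(average) of the given non-negative integer samples,
# analogous to ceil(avg_over_time(...)) over a window of samples.
ceil_avg() {
  local sum=0 count=0 sample
  for sample in "$@"; do
    sum=$((sum + sample))
    count=$((count + 1))
  done
  [ "$count" -gt 0 ] || return 1          # no samples, no average
  echo $(( (sum + count - 1) / count ))
}

adapter_metric_names nvidia_gpu_duty_cycle
ceil_avg 60 62 65   # hypothetical duty cycle samples from a 3-minute window
```

The `_over_time` variant smooths out short spikes, which may make it a calmer scaling signal than `_current`; which one suits a workload is a judgment call.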

Deploy the Serving application as a Deployment.

apiVersion: v1
kind: Service
metadata:
  name: fast-style-transfer-serving
  labels:
    app: tensorflow-serving
spec:
  ports:
  - name: http-serving
    port: 5000
    targetPort: 5000
  selector:
    app: tensorflow-serving
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: fast-style-transfer-serving
  labels:
    app: tensorflow-serving
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: tensorflow-serving
    spec:
      containers:
      - name: serving
        image: "registry.cn-hangzhou.aliyuncs.com/tensorflow-samples/fast-style-transfer-serving:la_muse"
        command: ["python", "app.py"]
        resources:
          limits:
            nvidia.com/gpu: 1

Create an HPA that supports auto scaling based on GPU metrics.

kind: HorizontalPodAutoscaler
apiVersion: autoscaling/v2beta1
metadata:
  name: gpu-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: fast-style-transfer-serving
  minReplicas: 1
  maxReplicas: 10
  metrics:
  - type: Pods
    pods:
      metricName: duty_cycle_current # Average GPU usage of the pod.
      targetAverageValue: 40

View HPA metrics and their values.

# kubectl get hpa
NAME      REFERENCE                                TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
gpu-hpa   Deployment/fast-style-transfer-serving   0 / 40    1         10        1          37s

Use the fast-style-transfer algorithm to deploy a stress testing application. This application will send images to Serving continuously.

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

name: fast-style-transfer-press

labels:

app: fast-style-transfer-press

spec:

replicas: 1

template:

metadata:

labels:

app: fast-style-transfer-press

spec:

containers:

- name: serving

image: "registry.cn-hangzhou.aliyuncs.com/xiaozhou/fast-style-transfer-press:v0"

env:

- name: SERVER_IP

value: fast-style-transfer-serving

- name: BATCH_SIZE

value: "100"

- name: TOTAL_SIZE

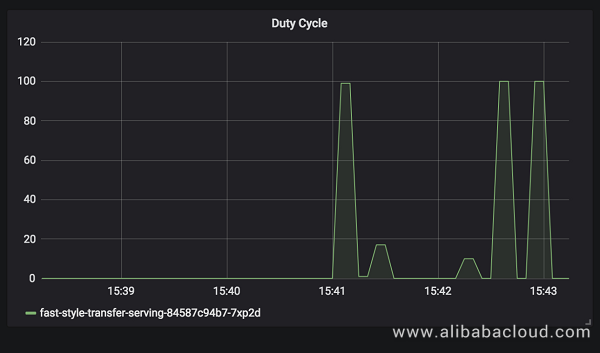

value: "12000"After the application is deployed, you can observe the GPU metric changes on the monitoring panel.

You can also observe the metric changes through HPA.

# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

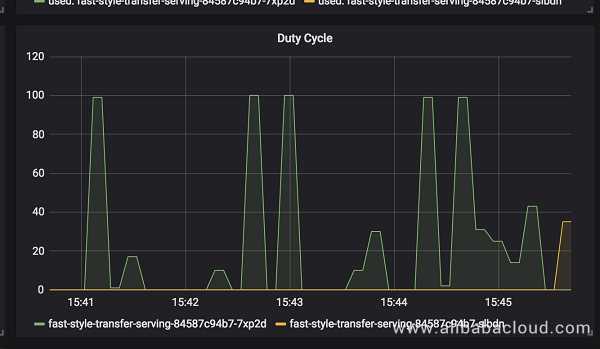

sample-gpu-hpa Deployment/demo-service 63 / 30 1 10 1 3mAfter the test has started, you can see that the pod has been scaled out.

NAME                                           READY   STATUS    RESTARTS   AGE
fast-style-transfer-press-69c48966d8-dqf5n     1/1     Running   0          4m
fast-style-transfer-serving-84587c94b7-7xp2d   1/1     Running   0          5m
fast-style-transfer-serving-84587c94b7-slbdn   1/1     Running   0          47s

You can also see the scaled-out pod and GPU metrics on the monitoring panel.
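The scale-out follows the rule given in the Kubernetes HPA documentation: desiredReplicas = ceil(currentReplicas * currentMetricValue / targetValue), bounded by minReplicas and maxReplicas. The sketch below works through that arithmetic for the gpu-hpa settings (target 40, 1 to 10 replicas). The function name `desired_replicas` and the reading of 63 are illustrative assumptions, and the HPA tolerance band and stabilization behavior are left out.

```shell
#!/usr/bin/env bash
# desired_replicas CURRENT_REPLICAS CURRENT_VALUE TARGET_VALUE MIN MAX
# Computes ceil(current_replicas * current_value / target_value) with integer
# arithmetic, then clamps the result to [MIN, MAX].
# Simplification: tolerance and stabilization windows are not modeled.
desired_replicas() {
  local replicas=$1 current=$2 target=$3 min=$4 max=$5
  local desired=$(( (replicas * current + target - 1) / target ))
  if [ "$desired" -lt "$min" ]; then desired=$min; fi
  if [ "$desired" -gt "$max" ]; then desired=$max; fi
  echo "$desired"
}

desired_replicas 1 63 40 1 10   # one pod averaging 63 against a target of 40: prints 2
desired_replicas 2 0 40 1 10    # load removed: falls back to minReplicas, prints 1
```

With a single pod averaging 63 against a target of 40, the rule asks for two pods, which is consistent with the two Serving pods in the listing above.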

Run the following command to stop the stress testing application:

kubectl scale deploy fast-style-transfer-press --replicas=0 # Reduce the number of replicas to 0.

You can also perform the scaling operation in the console.

Verify that the GPU duty cycle value reported by HPA has dropped to 0.

kubectl get hpa
NAME      REFERENCE                                TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
gpu-hpa   Deployment/fast-style-transfer-serving   0 / 40    1         10        3          9m

After a period of time, check whether the pod has been scaled in.

kubectl get po
NAME                                           READY   STATUS    RESTARTS   AGE
fast-style-transfer-serving-84587c94b7-7xp2d   1/1     Running   0          10m

To learn more about Alibaba Cloud Container Service for Kubernetes, visit https://www.alibabacloud.com/product/kubernetes
