By Peng Li (Yuanyi)

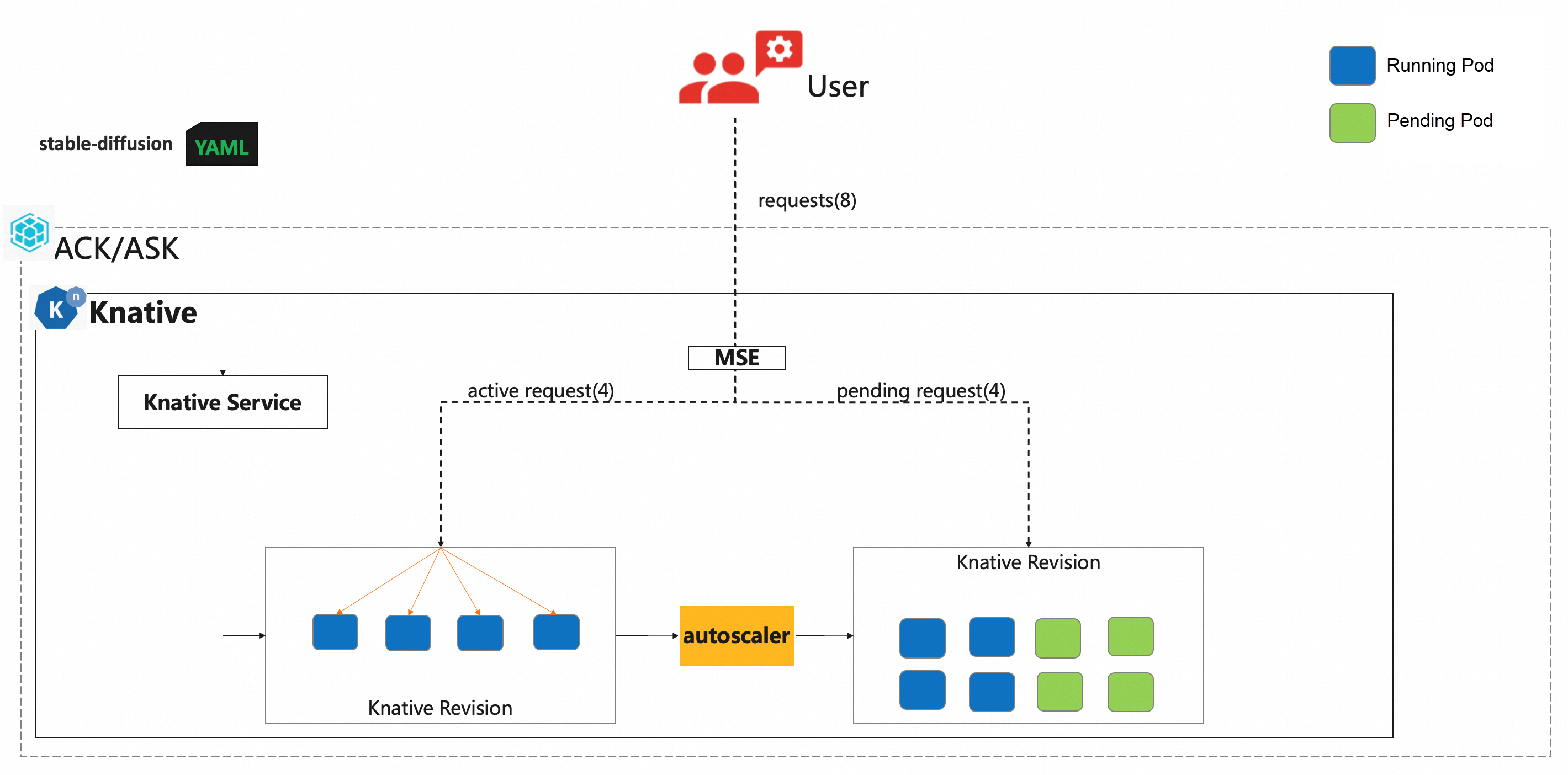

As generative AI capabilities improve, more attention is being paid to enhancing research and development efficiency through AI models. As a well-known project in the AI-Generated Content (AIGC) field, Stable Diffusion can help users quickly and accurately generate desired scenes and pictures. However, running Stable Diffusion as a service currently faces the following problems:

• A single pod has limited processing capacity, and forwarding multiple requests to the same pod can overload it. Therefore, it is important to precisely control the number of concurrent requests that a single pod processes.

• GPU resources are expensive, so it is essential to use them on demand and release them promptly during off-peak hours.

To address these issues, we offer a Knative-based solution that forwards requests precisely based on the number of concurrent requests and scales automatically, making the Stable Diffusion service production-ready.

We provide the Knative + Microservices Engine (MSE) solution in Alibaba Cloud Container Service for Kubernetes (ACK) to address the preceding problems:

• Use MSE gateways to forward requests precisely based on concurrency.

• Use Knative to scale automatically based on requests.
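To make the idea of concurrency-based forwarding concrete, here is a minimal toy sketch in Python. It is not how the MSE gateway is implemented; the class names `Pod` and `ConcurrencyAwareRouter` and the pod names are hypothetical. It only illustrates the principle that a pod never receives more than a fixed number of in-flight requests, and that excess requests wait until capacity frees up.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    in_flight: int = 0  # requests currently being processed by this pod


@dataclass
class ConcurrencyAwareRouter:
    """Toy router that never gives a pod more than `limit` concurrent requests."""
    limit: int
    pods: list = field(default_factory=list)
    queue: deque = field(default_factory=deque)

    def dispatch(self, request_id):
        """Send the request to a pod with spare capacity, or queue it."""
        for pod in self.pods:
            if pod.in_flight < self.limit:
                pod.in_flight += 1
                return pod.name
        self.queue.append(request_id)
        return None

    def complete(self, pod_name):
        """Finish one request on the pod and hand it a queued request, if any."""
        pod = next(p for p in self.pods if p.name == pod_name)
        pod.in_flight -= 1
        if self.queue:
            self.queue.popleft()
            pod.in_flight += 1


# Two pods, each limited to one request at a time (hypothetical setup).
router = ConcurrencyAwareRouter(limit=1, pods=[Pod("pod-a"), Pod("pod-b")])
assignments = [router.dispatch(i) for i in range(3)]
print(assignments)        # ['pod-a', 'pod-b', None]: the third request waits
print(len(router.queue))  # 1
```

In a real deployment, a growing queue is the signal that more pods are needed, which is where request-based auto scaling comes in.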

apiVersion: serving.knative.dev/v1

kind: Service

metadata:

name: knative-sd-demo

spec:

template:

metadata:

annotations:

autoscaling.knative.dev/class: mpa.autoscaling.knative.dev

autoscaling.knative.dev/maxScale: '10'

autoscaling.knative.dev/targetUtilizationPercentage: "100"

k8s.aliyun.com/eci-extra-ephemeral-storage: 50Gi

k8s.aliyun.com/eci-use-specs: ecs.gn5-c4g1.xlarge,ecs.gn5i-c8g1.2xlarge,ecs.gn5-c8g1.2xlarge

spec:

containerConcurrency: 1

containers:

- args:

- --listen

- --skip-torch-cuda-test

- --api

command:

- python3

- launch.py

image: yunqi-registry.cn-shanghai.cr.aliyuncs.com/lab/stable-diffusion@sha256:1c9c8fa97bc70901fc992679fd90ad622a6e54e484659766d5eab127207e9d32

imagePullPolicy: IfNotPresent

ports:

- containerPort: 7860

name: http1

protocol: TCP

name: stable-diffusion

readinessProbe:

tcpSocket:

port: 7860

initialDelaySeconds: 5

periodSeconds: 1

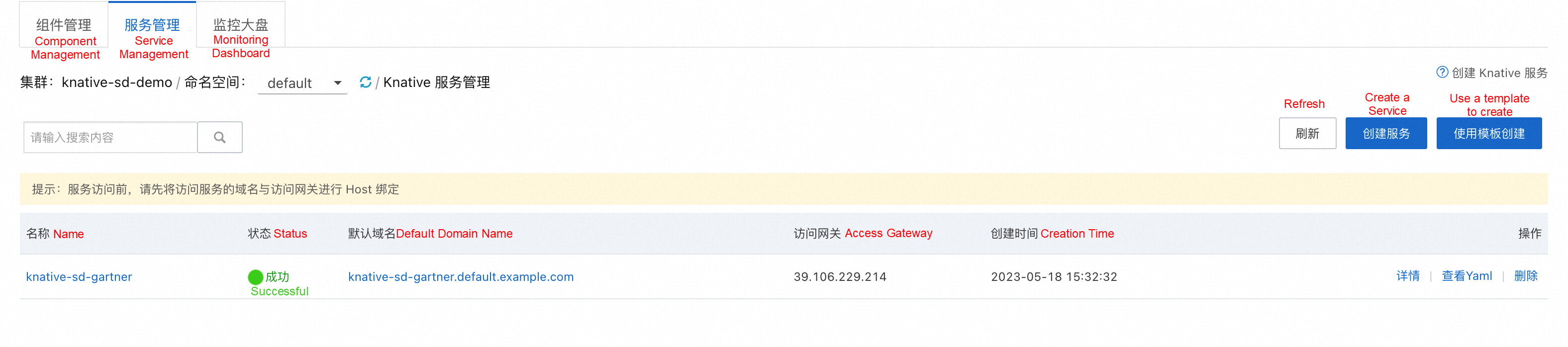

failureThreshold: 3On the Services tab, you can see the deployment status of knative-sd-demo.

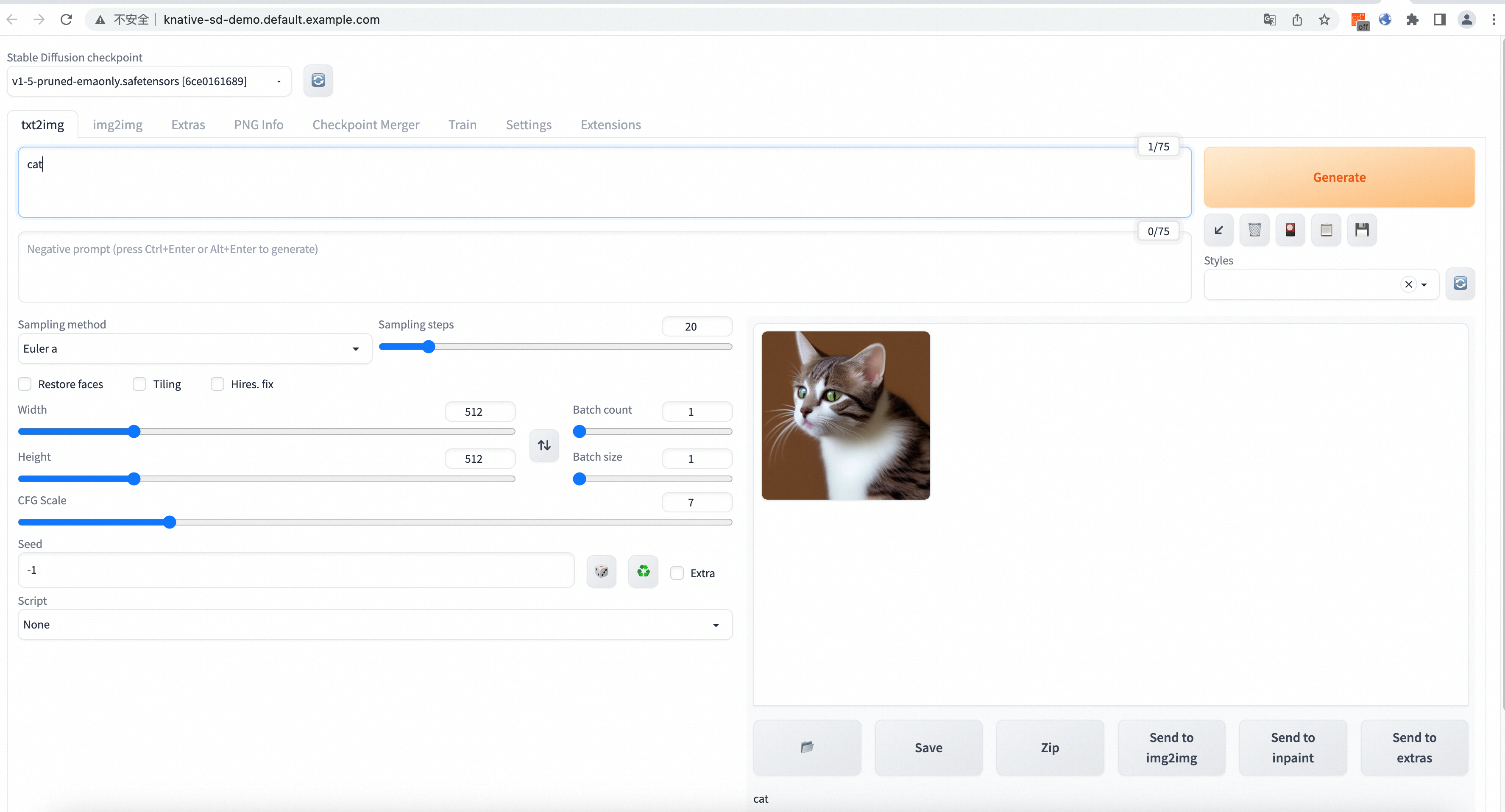

After you add the mapping 47.96.XX.XX knative-sd-demo.default.example.com to your hosts file, enter the domain name http://knative-sd-demo.default.example.com/ in the browser to access the Stable Diffusion service.

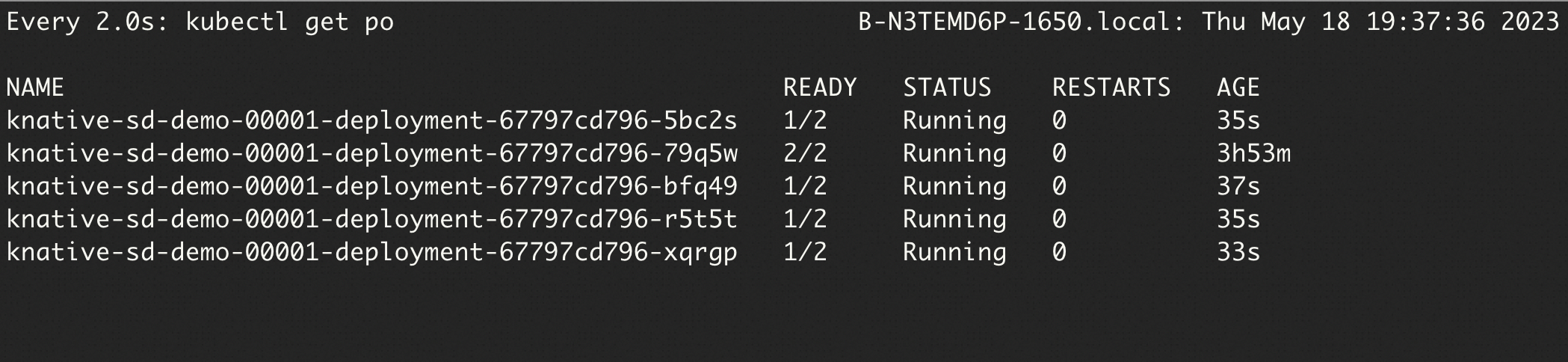
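Besides the browser UI, the service can be called programmatically, because the container is started with the --api flag. The sketch below builds the same kind of request that the stress test in this article sends to /sdapi/v1/txt2img. It assumes that the image runs a web UI whose txt2img response is a JSON object with an "images" list of base64-encoded images; that response shape is an assumption and is not shown in this article. The function names are illustrative.

```python
import base64
import json
import urllib.request

# Domain name used in this article; reachable only after the hosts entry is in place.
BASE_URL = "http://knative-sd-demo.default.example.com"


def build_txt2img_request(prompt, base_url=BASE_URL):
    """Build a POST request that carries the prompt as a JSON body."""
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/sdapi/v1/txt2img",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def decode_images(response_body):
    """Assumption: the response JSON has an "images" list of base64 strings."""
    payload = json.loads(response_body)
    return [base64.b64decode(item) for item in payload.get("images", [])]


def generate(prompt, timeout=180):
    """Send one request and return the decoded image bytes."""
    request = build_txt2img_request(prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return decode_images(response.read())
```

Calling generate("pretty dog") against the deployed service would return a list of raw image bytes that can be written to files. The 180-second timeout mirrors the timeout used in the stress test.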

Run the hey command to perform a stress test. The command sends 50 requests in total with a concurrency of 5 and a request timeout of 180 seconds.

    ./hey -n 50 -c 5 -t 180 -m POST -T "application/json" -d '{"prompt": "pretty dog"}' http://knative-sd-demo.default.example.com/sdapi/v1/txt2img

Run the watch command to observe pod scaling in real time.

    watch -n 1 'kubectl get po'

    ./hey -n 50 -c 5 -t 180 -m POST -T "application/json" -d '{"prompt": "pretty dog"}' http://knative-sd-demo.default.example.com/sdapi/v1/txt2img

    Summary:
      Total:        252.1749 secs
      Slowest:      62.4155 secs
      Fastest:      9.9399 secs
      Average:      23.9748 secs
      Requests/sec: 0.1983

    Response time histogram:
      9.940 [1]     |■■
      15.187 [17]   |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■
      20.435 [9]    |■■■■■■■■■■■■■■■■■■■■■
      25.683 [11]   |■■■■■■■■■■■■■■■■■■■■■■■■■■
      30.930 [1]    |■■
      36.178 [1]    |■■
      41.425 [3]    |■■■■■■■
      46.673 [1]    |■■
      51.920 [2]    |■■■■■
      57.168 [1]    |■■
      62.415 [3]    |■■■■■■■

    Latency distribution:
      10% in 10.4695 secs
      25% in 14.8245 secs
      50% in 20.0772 secs
      75% in 30.5207 secs
      90% in 50.7006 secs
      95% in 61.5010 secs
      0% in 0.0000 secs

    Details (average, fastest, slowest):
      DNS+dialup:   0.0424 secs, 9.9399 secs, 62.4155 secs
      DNS-lookup:   0.0385 secs, 0.0000 secs, 0.3855 secs
      req write:    0.0000 secs, 0.0000 secs, 0.0004 secs
      resp wait:    23.8850 secs, 9.9089 secs, 62.3562 secs
      resp read:    0.0471 secs, 0.0166 secs, 0.1834 secs

    Status code distribution:
      [200] 50 responses

Effect: As the maximum concurrency of a single pod is set to 1 (containerConcurrency: 1), you can see that five pods are created during the stress test. In addition, after 50 requests are continuously sent, the request success rate is 100%.

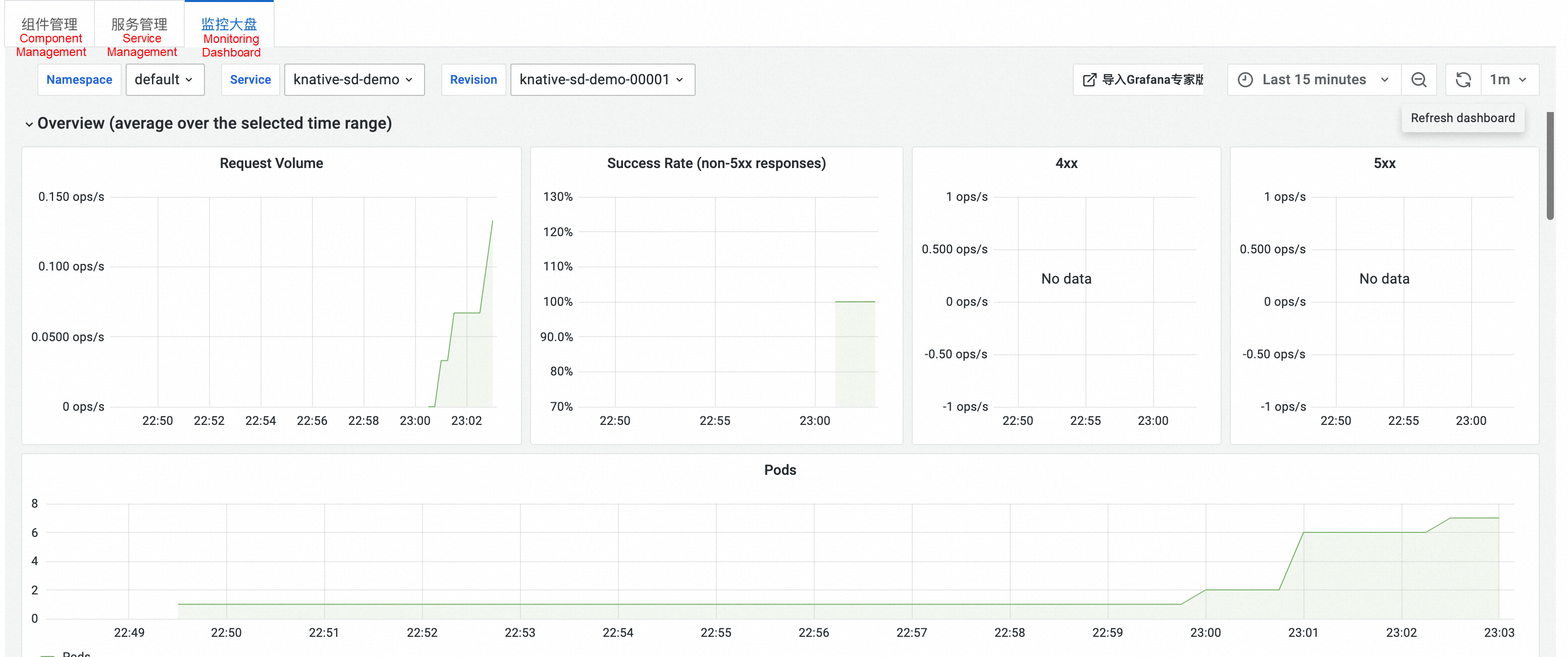
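The pod count and the throughput figure can be sanity-checked with a little arithmetic. The sketch below uses a simplified concurrency-based sizing rule: pods needed = in-flight requests divided by the per-pod target, capped at maxScale. This is an assumption for illustration. The Service in this article uses the mpa.autoscaling.knative.dev class, whose exact algorithm is not described here, and real autoscalers typically average metrics over a time window. The function name desired_pods is hypothetical.

```python
import math


def desired_pods(concurrent_requests, container_concurrency,
                 target_utilization_percent, max_scale):
    """Simplified sizing: pods needed to hold all in-flight requests."""
    per_pod_target = container_concurrency * target_utilization_percent / 100
    needed = math.ceil(concurrent_requests / per_pod_target)
    return min(needed, max_scale)


# Values from the Service manifest and the hey command in this article.
pods = desired_pods(concurrent_requests=5, container_concurrency=1,
                    target_utilization_percent=100, max_scale=10)
print(pods)  # 5

# Throughput check against the hey summary: 50 requests in 252.1749 seconds.
requests_per_second = 50 / 252.1749
print(round(requests_per_second, 4))  # 0.1983
```

Under this simplified rule, a concurrency of 5 with containerConcurrency: 1 yields five pods, which matches the observed result, and 50 requests over the reported total time reproduces the Requests/sec value in the summary.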

Knative provides out-of-the-box observability. On the Knative page, click the Monitoring Dashboard tab. You can see the monitoring data of the Stable Diffusion service, including the request volume, success rate, 4xx (client error), 5xx (server error), and pod scaling trend.

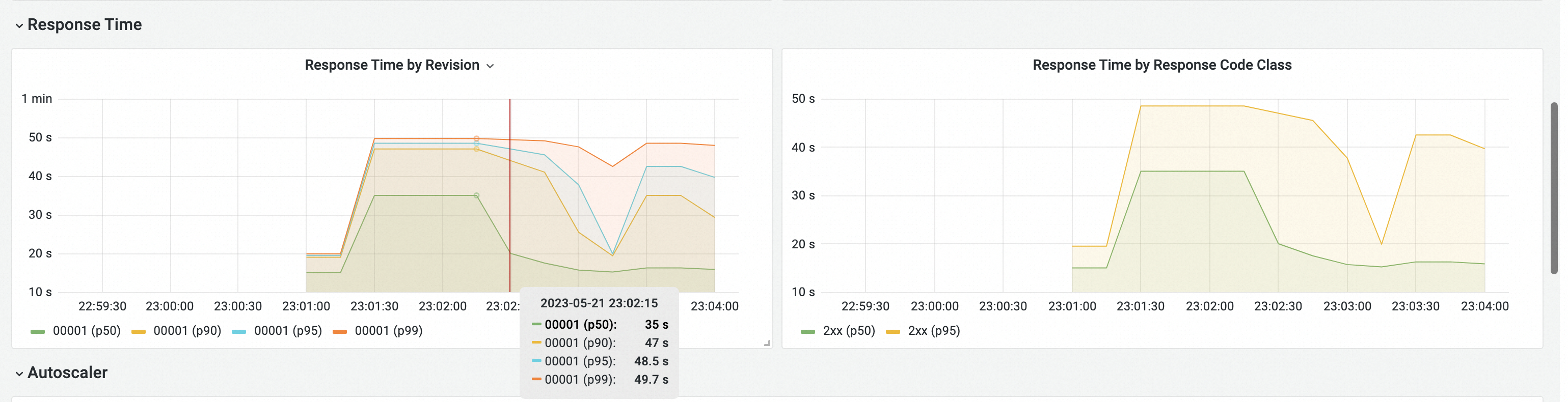

In the Response Time section, you can see the response latency data of Knative, including P50, P90, P95 and P99.
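For readers unfamiliar with the P50/P90/P95/P99 notation, the following sketch computes percentiles with the nearest-rank method. It is only an illustration: the dashboard may compute percentiles differently (for example, from histogram buckets), and the latency values below are hypothetical, not measurements from this test.

```python
def percentile(samples, p):
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    # Integer form of ceil(p * n / 100); avoids floating-point rounding surprises.
    rank = max(1, (p * len(ordered) + 99) // 100)
    return ordered[rank - 1]


# Hypothetical latencies in seconds, for illustration only.
latencies = [10.2, 11.0, 12.5, 14.8, 15.1, 19.7, 20.3, 24.9, 30.5, 61.5]
for p in (50, 90, 95, 99):
    print(f"P{p}: {percentile(latencies, p)}")
```

With only ten samples, P95 and P99 both fall on the slowest request, which is why high percentiles are meaningful only with enough traffic.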

With the accurate request processing and auto scaling capabilities of Knative, Stable Diffusion is production-ready and can meet the serverless requirements in the AIGC field.
