Prometheus is an open source project that is used to monitor cloud-native applications. This topic describes how to deploy Prometheus in a Container Service for Kubernetes (ACK) cluster.

Background information

This topic describes how to efficiently monitor system components and resource entities in a Kubernetes cluster. A monitoring system monitors the following types of objects:

Resource: resource utilization of nodes and applications. In a Kubernetes cluster, the monitoring system monitors the resource usage of nodes, pods, and the cluster.

Application: internal metrics of applications. For example, the monitoring system dynamically counts the number of online users who are using an application, collects monitoring metrics from application ports, and enables alerting based on the collected metrics.

In a Kubernetes cluster, the monitoring system monitors the following objects:

Cluster components: The components of the Kubernetes cluster, such as API server, cloud-controller-manager, and etcd. To monitor cluster components, specify the monitoring methods in configuration files.

Static resource entities: The status of resources on nodes and kernel events. To monitor static resource entities, specify the monitoring methods in configuration files.

Dynamic resource entities: Entities of abstract workloads in Kubernetes, such as Deployments, DaemonSets, and pods. To monitor dynamic resource entities in a Kubernetes cluster, you can deploy Prometheus in the Kubernetes cluster.

Custom objects in applications: For applications that require customized monitoring of data and metrics, specific configurations need to be set to meet unique monitoring requirements. This can be achieved by combining port exposure with the Prometheus monitoring solution.

Step 1: Deploy open source Prometheus

Log on to the ACK console. In the left navigation pane, click Clusters.

On the Clusters page, find the cluster you want and click its name. In the left-side navigation pane, open the Helm page.

On the Helm page, click Deploy. In the Chart section of the Deploy panel, find and select ack-prometheus-operator, use the default values for other parameters, and click Next.

The chart will be installed in the monitoring namespace by default using the default application name.

To use a custom name and namespace, configure the Application Name and Namespace parameters in the Basic Information step.

On the Parameters wizard page, select 12.0.0 for the chart version, configure the parameters, and then click OK. Chart version 12.0.0 supports alert configuration: you can set monitoring and alert conditions by using the built-in feature.

You can customize the following optional parameters based on your business requirements:

Alert configuration: Alert notifications can be sent by using DingTalk messages or emails.

Mount a custom ConfigMap to Prometheus: You can configure a custom ConfigMap based on your business requirements.

Mount the dashboard file to Grafana: You can use custom dashboards to enhance data visualization.

After deployment, you can view the application status on the Helm page.
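The release status can also be checked from the command line. The following is a minimal sketch, assuming that helm and kubectl are configured for the cluster and that the chart was installed in the default monitoring namespace. The run and check_release helpers and the RUN and NAMESPACE variables are illustrative names, not part of the chart.

```shell
#!/bin/sh
# Assumption: the chart was installed in the default "monitoring" namespace.
NAMESPACE="${NAMESPACE:-monitoring}"

# Hypothetical helper: prints each command by default (dry run);
# set RUN=1 to execute against the cluster in the current kubeconfig context.
run() {
  if [ "${RUN:-0}" = "1" ]; then
    "$@"
  else
    echo "$*"
  fi
}

check_release() {
  run helm list -n "$NAMESPACE"         # lists the Helm releases in the namespace
  run kubectl get pods -n "$NAMESPACE"  # lists the pods in the namespace
  run kubectl get svc -n "$NAMESPACE"   # lists the Services in the namespace
}

check_release
```

Running the script as-is only prints the three commands, which makes it safe to review before setting RUN=1.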

Step 2: View Prometheus collection tasks

Log on to the ACK console. In the left navigation pane, click Clusters.

On the Clusters page, find the cluster you want and click its name. In the left-side pane, choose Network > Services.

On the Services page, select the namespace in which the ack-prometheus-operator is deployed (monitoring by default). Find ack-prometheus-operator-prometheus and click Update in the Actions column.

In the Update Service dialog box, set Service Type to SLB. Select Create Resource and set Access Method to Public Access. Select Pay-as-you-go for the Billing Method parameter, configure other parameters as needed, and click OK.

See Classic Load Balancer (CLB) billing for details.

After the update is complete, copy the external IP address of the Service and access Prometheus by entering IP address:port number in the address bar of a browser. Example: 47.XX.XX.12:9090.

In the top navigation bar of the Prometheus page, choose to view all data collection tasks. Tasks in the UP state are running properly.

To view alert rules, click Alerts in the top navigation bar.
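The data collection tasks shown in the Prometheus UI are also exposed by the Prometheus HTTP API at /api/v1/targets, which can be handy for a quick check from a terminal. The sketch below assumes that the Service is reachable at the external IP address and port from the previous step. targets_url and count_up are hypothetical helper names, and the text match relies on the API returning compact JSON.

```shell
#!/bin/sh
# Hypothetical helper: builds the targets API URL from a host and an optional port.
targets_url() {
  echo "http://$1:${2:-9090}/api/v1/targets"
}

# Hypothetical helper: counts targets reported as healthy in the JSON read from stdin.
# Assumption: the response is compact JSON, so the pair appears as "health":"up".
count_up() {
  grep -o '"health":"up"' | wc -l | tr -d ' '
}

# Usage (replace the placeholder with the external IP address you copied):
#   curl -s "$(targets_url <external-ip>)" | count_up
```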

Step 3: View Grafana aggregated data

Log on to the ACK console. In the left navigation pane, click Clusters.

On the Clusters page, find the cluster you want and click its name. In the left-side pane, choose Network > Services.

On the Services page, select the namespace in which ack-prometheus-operator is deployed (monitoring by default). Find ack-prometheus-operator-grafana and click Update in the Actions column.

In the Update Service dialog box, set Service Type to SLB. Select Create Resource and set Access Method to Public Access. Select Pay-as-you-go (Pay-by-CU) for the Billing Method parameter, configure other parameters as needed, and click OK.

See CLB billing for details.

After the update is complete, copy the external IP address of the Service and access Grafana by entering IP address:port number in the address bar of a browser. By default, the port number is 80. Example: 47.XX.XX.12:80.

Alert configurations

You can configure prometheus-operator to send alert notifications by using DingTalk messages or emails:

Log on to the ACK console. In the left navigation pane, click Clusters.

On the Clusters page, find the cluster you want and click its name. In the left-side navigation pane, open the Helm page.

On the Helm page, click Deploy. In the Basic Information step, find and select ack-prometheus-operator in the Chart section, configure other parameters as needed, and click Next. In the Parameters step, configure the alert parameters based on the following content:

If ack-prometheus-operator is already installed, click its name in the list on the Helm page, and then configure the parameters on the Parameters tab.

Configure DingTalk notifications

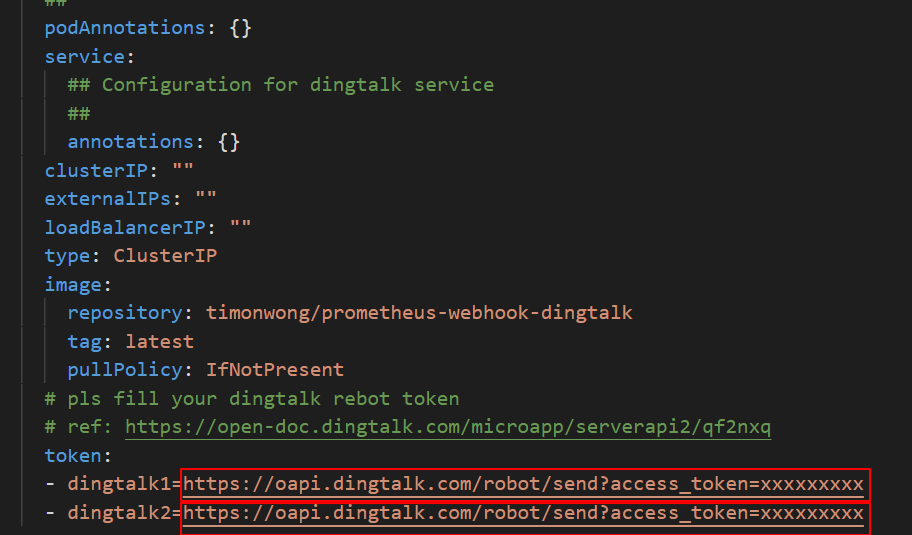

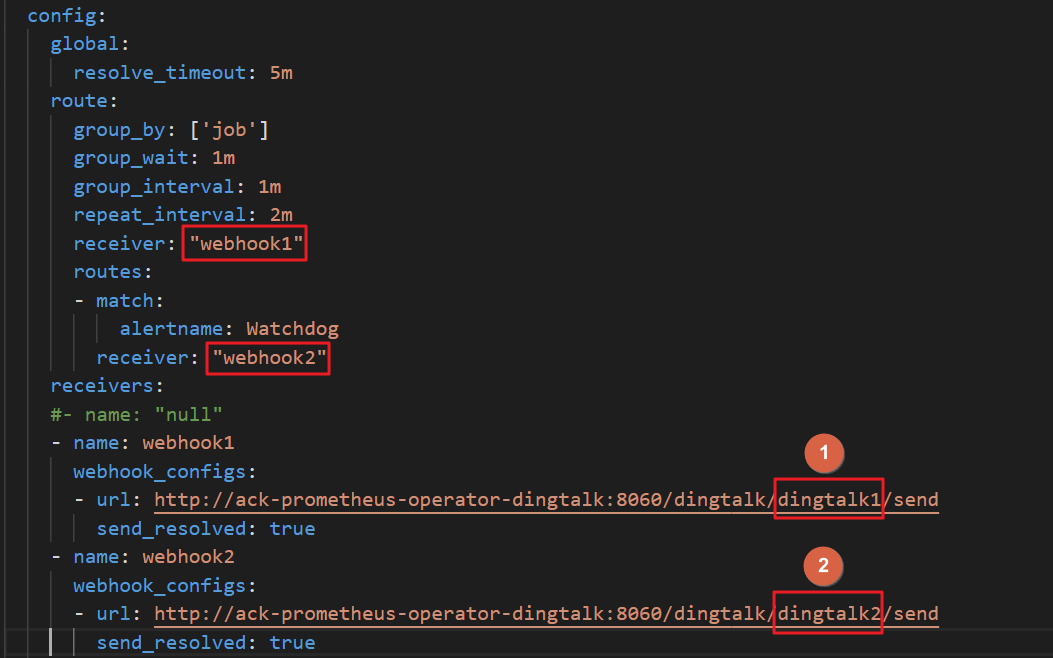

Find the dingtalk field in the configuration file and set enabled to true.

Enter the webhook URL of your DingTalk chatbot in the token field. For more information about how to obtain the webhook URL, see Scenario 3: Implement Kubernetes monitoring and alerting with DingTalk chatbot.

In the alertmanager section, find the receiver parameter in the config field, and enter the DingTalk chatbot name that you specified in the receivers field. By default, webhook is used.

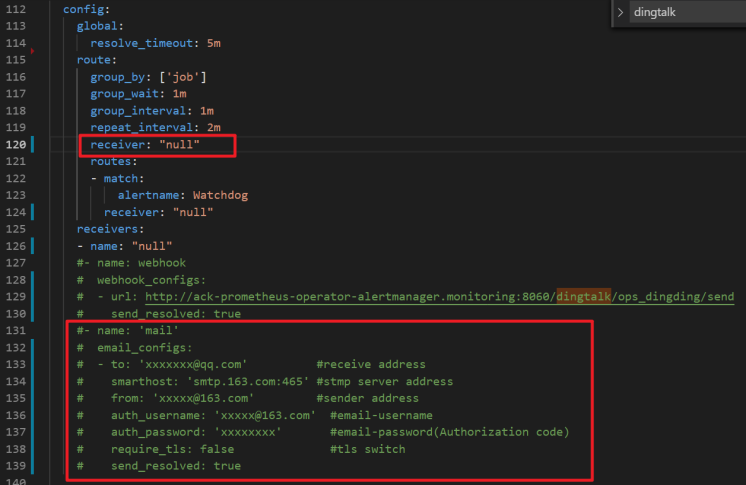

Configure email notifications

Enter the details about your email address in the red box of the following figure.

Find the config field in the alertmanager section of the configuration file, find receiver, and enter the name of the email receiver that you defined in the receivers field. By default, mail is used.

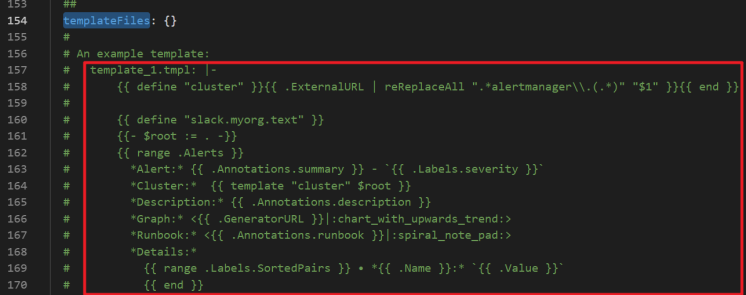

Set alert notification templates

You can customize the alert notification template in the templateFiles field of the alertmanager section, as shown in the following figure:

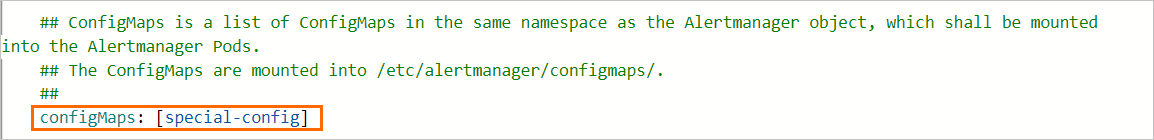

Mount a custom ConfigMap to Prometheus

This section describes how to mount a configuration file via a ConfigMap named special-config in Prometheus, and pass it using the --config.file parameter during pod startup.
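For the ConfigMap creation step below, a minimal kubectl sketch follows. It assumes that the configuration file is stored locally as prometheus.yml (a hypothetical file name) and that Prometheus runs in the default monitoring namespace. Only the ConfigMap name special-config comes from this topic; create_special_config and the RUN, NAMESPACE, and CONFIG_FILE variables are illustrative.

```shell
#!/bin/sh
NAMESPACE="${NAMESPACE:-monitoring}"          # assumption: default namespace
CONFIG_FILE="${CONFIG_FILE:-prometheus.yml}"  # hypothetical local file name

# Hypothetical helper: prints the command by default; set RUN=1 to execute it.
create_special_config() {
  set -- kubectl create configmap special-config \
    --from-file="$CONFIG_FILE" -n "$NAMESPACE"
  if [ "${RUN:-0}" = "1" ]; then "$@"; else echo "$*"; fi
}

create_special_config
```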

Create a ConfigMap.

Mount a ConfigMap.

In the Parameters step, add the following content to the configmaps field to mount the specified ConfigMap to the /etc/prometheus/configmaps/ path in the Prometheus pod.

The following figure shows the sample configuration of the configmaps field in the prometheus section:

Configure Grafana

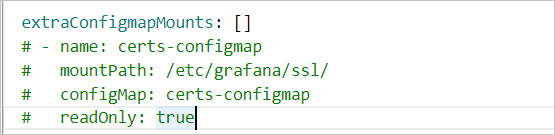

Mount the dashboard configuration files to Grafana

To mount dashboard files to the Grafana pod through a ConfigMap, locate the extraConfigmapMounts field on the Parameters wizard page. Ensure the following:

The dashboard exists in the cluster as a ConfigMap, and the labels of the ConfigMap are in the same format as those of other ConfigMaps.

Populate the extraConfigmapMounts field with the dashboard's ConfigMap information and mount details:

mountPath: Set to /tmp/dashboards/.

configMap: Set to the name of your custom ConfigMap.

name: Set to the name of the JSON file that stores the dashboard configuration.

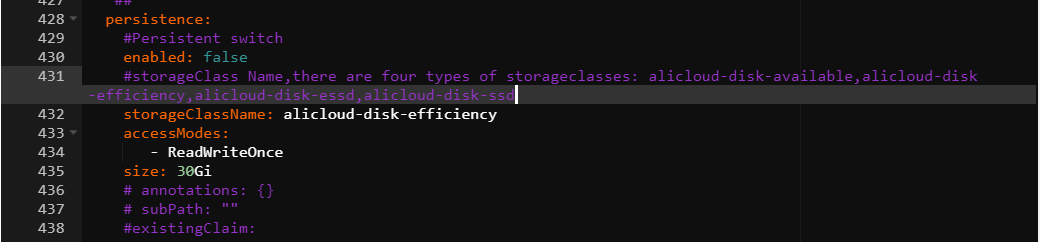

Enable data persistence for dashboards

To export a dashboard locally, save the target dashboard as a JSON file to your local machine. For details, see Export a dashboard as JSON.

Log on to the ACK console. In the navigation pane on the left, click Clusters.

On the Clusters page, find the cluster you want and click its name. In the left-side pane, open the Helm page.

Find ack-prometheus-operator, click Update in the Actions column, and configure the persistence field in the grafana section as shown in the following figure:

Related operations

Uninstall the open-source Prometheus component

Check the Helm chart version of the open source Prometheus component and perform the following steps to uninstall the open source Prometheus component. This helps you prevent residual resources and unexpected exceptions. You need to manually delete the related Helm release, namespace, CustomResourceDefinitions (CRDs), and kubelet Service.

If the kubelet Service cannot be automatically deleted when you uninstall the ack-prometheus-operator component, refer to the following sections. For more information about this issue, see #1523.

Chart v12.0.0

Use the ACK console

Log on to the ACK console. In the left navigation pane, click Clusters.

On the Clusters page, find the cluster that you want to manage and click its name. In the left-side navigation pane of the cluster details page, perform the following operations:

Uninstall the Helm release: Open the Helm page, find the ack-prometheus-operator release, and click Delete in the Actions column. In the Delete dialog box, select Clear Release Records and click OK.

Delete the related namespace: Click Namespaces and Quotas. On the Namespace page, select the monitoring namespace and click Delete in the lower part of the page. In the Confirm message, confirm the information and click Confirm Deletion.

Delete the related CRDs: Open the Custom Resources page and click the CRDs tab. Select all CRDs that belong to the monitoring.coreos.com API group and click Delete in the lower part of the page. In the Confirm message, confirm the information and click Confirm Deletion. The following list describes the CRDs in the API group:

AlertmanagerConfig

Alertmanager

PodMonitor

Probe

Prometheus

PrometheusRule

ServiceMonitor

ThanosRuler

Delete the kubelet Service: Choose Network > Services. On the Services page, select the ack-prometheus-operator-kubelet Service in the kube-system namespace and click Delete in the lower part of the page. In the Confirm message, confirm the information and click Confirm Deletion.

Use kubectl

Uninstall the related Helm release.

helm uninstall ack-prometheus-operator -n monitoring

Delete the related namespace.

kubectl delete namespace monitoring

Delete the related CRDs.

kubectl delete crd alertmanagerconfigs.monitoring.coreos.com

kubectl delete crd alertmanagers.monitoring.coreos.com

kubectl delete crd podmonitors.monitoring.coreos.com

kubectl delete crd probes.monitoring.coreos.com

kubectl delete crd prometheuses.monitoring.coreos.com

kubectl delete crd prometheusrules.monitoring.coreos.com

kubectl delete crd servicemonitors.monitoring.coreos.com

kubectl delete crd thanosrulers.monitoring.coreos.com

Delete the kubelet Service.

kubectl delete service ack-prometheus-operator-kubelet -n kube-system

Chart v65.1.1

Use the ACK console

Log on to the ACK console. In the left navigation pane, click Clusters.

On the Clusters page, find the cluster that you want to manage and click its name. In the left-side navigation pane of the cluster details page, perform the following operations:

Uninstall the Helm release: Open the Helm page, find the ack-prometheus-operator release, and click Delete in the Actions column. In the Delete dialog box, select Clear Release Records and click OK.

Delete the related namespace: Click Namespaces and Quotas. On the Namespace page, select the monitoring namespace and click Delete in the lower part of the page. In the Confirm message, confirm the information and click Confirm Deletion.

Delete the related CRDs: Open the Custom Resources page and click the CRDs tab. Select all CRDs that belong to the monitoring.coreos.com API group and click Delete in the lower part of the page. In the Confirm message, confirm the information and click Confirm Deletion. The following list describes the CRDs in the API group:

AlertmanagerConfig

Alertmanager

PodMonitor

Probe

PrometheusAgent

Prometheus

PrometheusRule

ScrapeConfig

ServiceMonitor

ThanosRuler

Delete the kubelet Service: Choose Network > Services. On the Services page, select the ack-prometheus-operator-kubelet Service in the kube-system namespace and then click Delete in the lower part of the page. In the Confirm message, confirm the information and click Confirm Deletion.

Use kubectl

Uninstall the related Helm release.

helm uninstall ack-prometheus-operator -n monitoring

Delete the related namespace.

kubectl delete namespace monitoring

Delete the related CRDs.

kubectl delete crd alertmanagerconfigs.monitoring.coreos.com

kubectl delete crd alertmanagers.monitoring.coreos.com

kubectl delete crd podmonitors.monitoring.coreos.com

kubectl delete crd probes.monitoring.coreos.com

kubectl delete crd prometheusagents.monitoring.coreos.com

kubectl delete crd prometheuses.monitoring.coreos.com

kubectl delete crd prometheusrules.monitoring.coreos.com

kubectl delete crd scrapeconfigs.monitoring.coreos.com

kubectl delete crd servicemonitors.monitoring.coreos.com

kubectl delete crd thanosrulers.monitoring.coreos.com

Delete the kubelet Service.

kubectl delete service ack-prometheus-operator-kubelet -n kube-system
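The per-CRD deletions listed for both chart versions can be generated with a loop. This is a sketch only: crds_for_chart and delete_cmds are hypothetical helper names, the CRD names are the ones listed in this topic, and the script prints the commands for review rather than running them.

```shell
#!/bin/sh
# CRD name prefixes per chart version, as listed in this topic.
crds_for_chart() {
  base="alertmanagerconfigs alertmanagers podmonitors probes prometheuses prometheusrules servicemonitors thanosrulers"
  case "$1" in
    12.0.0) echo "$base" ;;
    65.1.1) echo "$base prometheusagents scrapeconfigs" ;;
    *) echo "unsupported chart version: $1" >&2; return 1 ;;
  esac
}

# Prints one delete command per CRD; pipe the output to sh to execute it.
delete_cmds() {
  crds=$(crds_for_chart "$1") || return 1
  for crd in $crds; do
    echo "kubectl delete crd ${crd}.monitoring.coreos.com"
  done
}

delete_cmds 65.1.1
```

Printing instead of executing is a deliberate choice here, because CRD deletion also removes every custom resource of that type in the cluster.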

Configure alert suppression

You can suppress specific alerts by configuring silence rules. When an alert matches the rule conditions, notifications will be withheld until the silence period ends or the rule is manually deleted.

Run the following command to expose Alertmanager on local port 9093 for external access:

kubectl --address 0.0.0.0 port-forward svc/alertmanager-operated 9093 -n monitoring

Associate an EIP with your Elastic Compute Service (ECS) instance, and then access <EIP>:9093 in your browser.

Ensure that the ECS security group allows your local IP address and port 9093. For details, see Add a security group rule.

Click Silence and follow the on-screen instructions to configure alert suppression.
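Silences can also be created through the Alertmanager v2 HTTP API instead of the web UI. The sketch below only builds the JSON body. silence_payload is a hypothetical helper, the alert name, timestamps, createdBy, and comment values are placeholders, and the endpoint in the usage comment assumes that Alertmanager is reachable on port 9093 as described above.

```shell
#!/bin/sh
# Hypothetical helper: prints a silence definition that matches one alert name.
# Arguments: alert name, start time, end time (RFC 3339 timestamps).
silence_payload() {
  printf '{"matchers":[{"name":"alertname","value":"%s","isRegex":false}],' "$1"
  printf '"startsAt":"%s","endsAt":"%s",' "$2" "$3"
  printf '"createdBy":"ops","comment":"planned maintenance"}\n'
}

# Usage (placeholders in angle brackets):
#   silence_payload <alert-name> <start> <end> |
#     curl -s -X POST -H 'Content-Type: application/json' -d @- http://<EIP>:9093/api/v2/silences
silence_payload ExampleAlert 2030-01-01T00:00:00Z 2030-01-01T02:00:00Z
```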

FAQs

What do I do if I fail to receive DingTalk alert notifications?

Obtain the webhook URL of your DingTalk chatbot. For more information, see Scenario 3: Implement Kubernetes monitoring and alerting with DingTalk chatbot.

On the Parameters wizard page, find the dingtalk section, set enabled to true, and then specify the webhook URL of your DingTalk chatbot in the token field. For more information, see Configure DingTalk alert notifications in Alert configurations.

What do I do if an error message appears when I deploy prometheus-operator in a cluster?

The following error message appears:

Can't install release with errors: rpc error: code = Unknown desc = object is being deleted: customresourcedefinitions.apiextensions.k8s.io "xxxxxxxx.monitoring.coreos.com" already exists

The error message indicates that the cluster failed to clear the CustomResourceDefinition (CRD) objects of the previous deployment. Run the following commands to delete the CRD objects. Then, deploy prometheus-operator again:

kubectl delete crd prometheuses.monitoring.coreos.com

kubectl delete crd prometheusrules.monitoring.coreos.com

kubectl delete crd servicemonitors.monitoring.coreos.com

kubectl delete crd alertmanagers.monitoring.coreos.com

What do I do if I fail to receive email alert notifications?

Make sure that the value of smtp_auth_password is the SMTP authorization code instead of the logon password of the email account. Make sure that the SMTP server endpoint includes a port number.

What do I do if the console prompts the following error message after I click Update to update YAML templates: The current cluster is temporarily unavailable. Try again later or submit a ticket?

If the configuration file of Tiller is too large, the cluster cannot be accessed. To solve this issue, you can delete some annotations in the configuration file and mount the file to a pod as a ConfigMap. You can specify the name of the ConfigMap in the configMaps fields of the prometheus and alertmanager sections. For more information, see the second method in Mount a ConfigMap to Prometheus.

How do I enable the features of prometheus-operator after I deploy it in a cluster?

After prometheus-operator is deployed, you can perform the following steps to enable its features. Go to the cluster details page and open the Helm page from the left-side navigation pane. On the Helm page, find ack-prometheus-operator and click Update in the Actions column. In the Update Release panel, configure the code block to enable the features. Then, click OK.

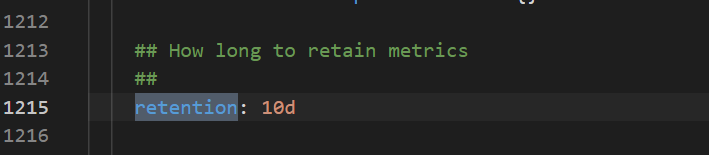

How do I select data storage: TSDB or disks?

TSDB storage is available only in some regions, whereas disk storage is supported in all regions. The following figure shows how to configure the data retention policy.

What do I do if a Grafana dashboard fails to display data properly?

Go to the cluster details page and open the Helm page from the left-side navigation pane. On the Helm page, find ack-prometheus-operator and click Update in the Actions column. In the Update Release panel, check whether the value of the clusterVersion field is correct. If the Kubernetes version of your cluster is earlier than 1.16, set clusterVersion to 1.14.8-aliyun.1. If the Kubernetes version of your cluster is 1.16 or later, set clusterVersion to 1.16.6-aliyun.1.
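The version rule above can be expressed as a small helper. This is an illustrative sketch: pick_cluster_version is a hypothetical function name, and it only inspects the major and minor parts of the version string that you pass in.

```shell
#!/bin/sh
# Hypothetical helper: maps a Kubernetes version to the clusterVersion value
# described above. Accepts inputs such as "1.15.4" or "v1.18.8-aliyun.1".
pick_cluster_version() {
  ver="${1#v}"              # drop an optional leading "v"
  major="${ver%%.*}"        # text before the first dot
  rest="${ver#*.}"          # text after the first dot
  minor="${rest%%[!0-9]*}"  # leading digits of the minor version
  if [ "$major" -gt 1 ] || { [ "$major" -eq 1 ] && [ "$minor" -ge 16 ]; }; then
    echo "1.16.6-aliyun.1"
  else
    echo "1.14.8-aliyun.1"
  fi
}

pick_cluster_version 1.15.4
```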

What do I do if I fail to install ack-prometheus after I delete the ack-prometheus namespace?

After you delete the ack-prometheus namespace, the related resource configurations may be retained. In this case, you may fail to install ack-prometheus again. You can perform the following operations to delete the related resource configurations:

Delete role-based access control (RBAC)-related resource configurations.

Run the following commands to delete the related ClusterRoles:

kubectl delete ClusterRole ack-prometheus-operator-grafana-clusterrole

kubectl delete ClusterRole ack-prometheus-operator-kube-state-metrics

kubectl delete ClusterRole psp-ack-prometheus-operator-kube-state-metrics

kubectl delete ClusterRole psp-ack-prometheus-operator-prometheus-node-exporter

kubectl delete ClusterRole ack-prometheus-operator-operator

kubectl delete ClusterRole ack-prometheus-operator-operator-psp

kubectl delete ClusterRole ack-prometheus-operator-prometheus

kubectl delete ClusterRole ack-prometheus-operator-prometheus-psp

Run the following commands to delete the related ClusterRoleBindings:

kubectl delete ClusterRoleBinding ack-prometheus-operator-grafana-clusterrolebinding

kubectl delete ClusterRoleBinding ack-prometheus-operator-kube-state-metrics

kubectl delete ClusterRoleBinding psp-ack-prometheus-operator-kube-state-metrics

kubectl delete ClusterRoleBinding psp-ack-prometheus-operator-prometheus-node-exporter

kubectl delete ClusterRoleBinding ack-prometheus-operator-operator

kubectl delete ClusterRoleBinding ack-prometheus-operator-operator-psp

kubectl delete ClusterRoleBinding ack-prometheus-operator-prometheus

kubectl delete ClusterRoleBinding ack-prometheus-operator-prometheus-psp

Run the following commands to delete the related CRD objects:

kubectl delete crd alertmanagerconfigs.monitoring.coreos.com

kubectl delete crd alertmanagers.monitoring.coreos.com

kubectl delete crd podmonitors.monitoring.coreos.com

kubectl delete crd probes.monitoring.coreos.com

kubectl delete crd prometheuses.monitoring.coreos.com

kubectl delete crd prometheusrules.monitoring.coreos.com

kubectl delete crd servicemonitors.monitoring.coreos.com

kubectl delete crd thanosrulers.monitoring.coreos.com
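Before reinstalling, it may help to confirm that none of the cluster-scoped resources above remain. The following sketch filters a list of resource names for the two patterns used in this section. leftovers is a hypothetical helper name, and the sample names in the demo line are made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: keeps only resource names that look like leftovers of
# ack-prometheus-operator or of the monitoring.coreos.com API group.
leftovers() {
  grep -E 'ack-prometheus-operator|monitoring\.coreos\.com'
}

# Demo on sample names (only the first one matches the patterns):
printf '%s\n' 'crd/probes.monitoring.coreos.com' 'crd/other.example.com' | leftovers

# Usage against a cluster (prints nothing when the cleanup is complete):
#   kubectl get clusterrole,clusterrolebinding,crd -o name | leftovers
```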