By Yu Zhuang

Container technology, represented by Kubernetes, has revolutionized the application delivery model and is rapidly becoming a standardized API for data centers worldwide.

In application architecture design, stability, uninterrupted user access, high availability, and elasticity are perennial goals. A multi-cluster architecture naturally provides these capabilities, but it is the unified, standardized Kubernetes API that unlocks the full value of multi-cluster and hybrid cloud deployments.

In the previous article, Simplifying Kubernetes Multi-cluster Management with the Right Approach, we covered the application scenarios, architecture, and security hardening of registered clusters in Distributed Cloud Container Platform for Kubernetes (ACK One), as well as the observability that Alibaba Cloud Container Service for Kubernetes (ACK) provides for both cloud-based and self-built Kubernetes clusters, which enables unified operations and maintenance (O&M) of Kubernetes clusters.

This article focuses on another important use case of ACK One registered clusters: cloud elasticity.

The cloud elasticity of ACK One registered clusters is beneficial in the following scenarios:

1. Rapid business growth: Kubernetes clusters deployed in on-premises IDCs are often hard to scale because their computing resources are limited. In addition, procuring and deploying new hardware is time-consuming, which makes it difficult to keep up with rapidly growing business traffic.

2. Periodic or sudden business growth: The fixed amount of computing resources in on-premises IDCs cannot effectively handle periodic business peaks or sudden traffic surges.

The elasticity of computing resources provides a fundamental solution to these scenarios by dynamically scaling resources based on changes in business traffic. This ensures that business requirements are met while optimizing costs.

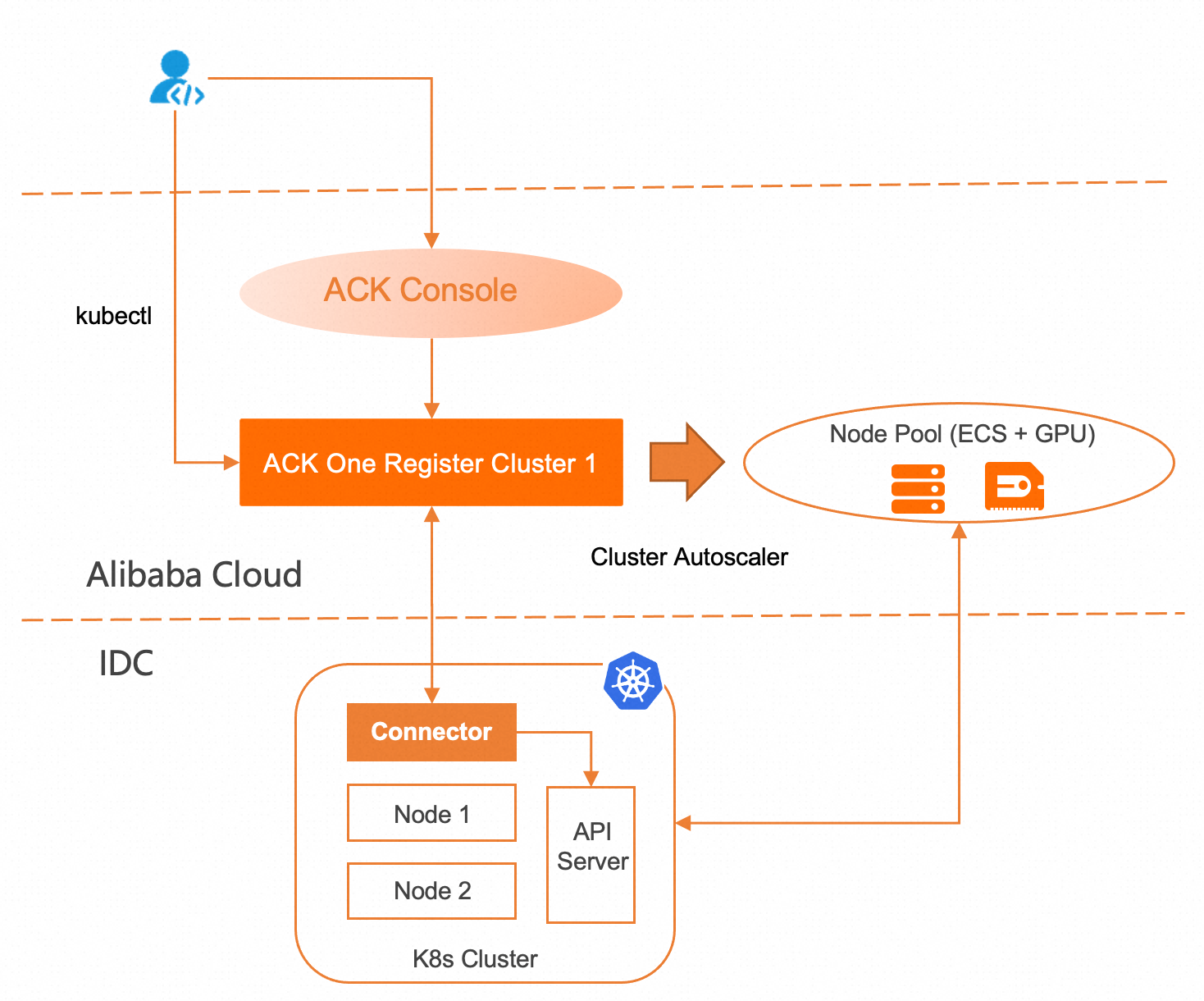

The following figure shows the architecture for cloud elasticity in ACK One registered clusters.

Through ACK One registered clusters, Kubernetes clusters in on-premises IDCs can elastically scale out node pools of Alibaba Cloud Elastic Compute Service (ECS) instances. This takes advantage of the elasticity of Alibaba Cloud Container Service: businesses can scale out to handle increased traffic and scale in to reduce costs. For AI scenarios in particular, ACK One registered clusters can connect GPU-accelerated machines on the cloud to Kubernetes clusters in on-premises IDCs.

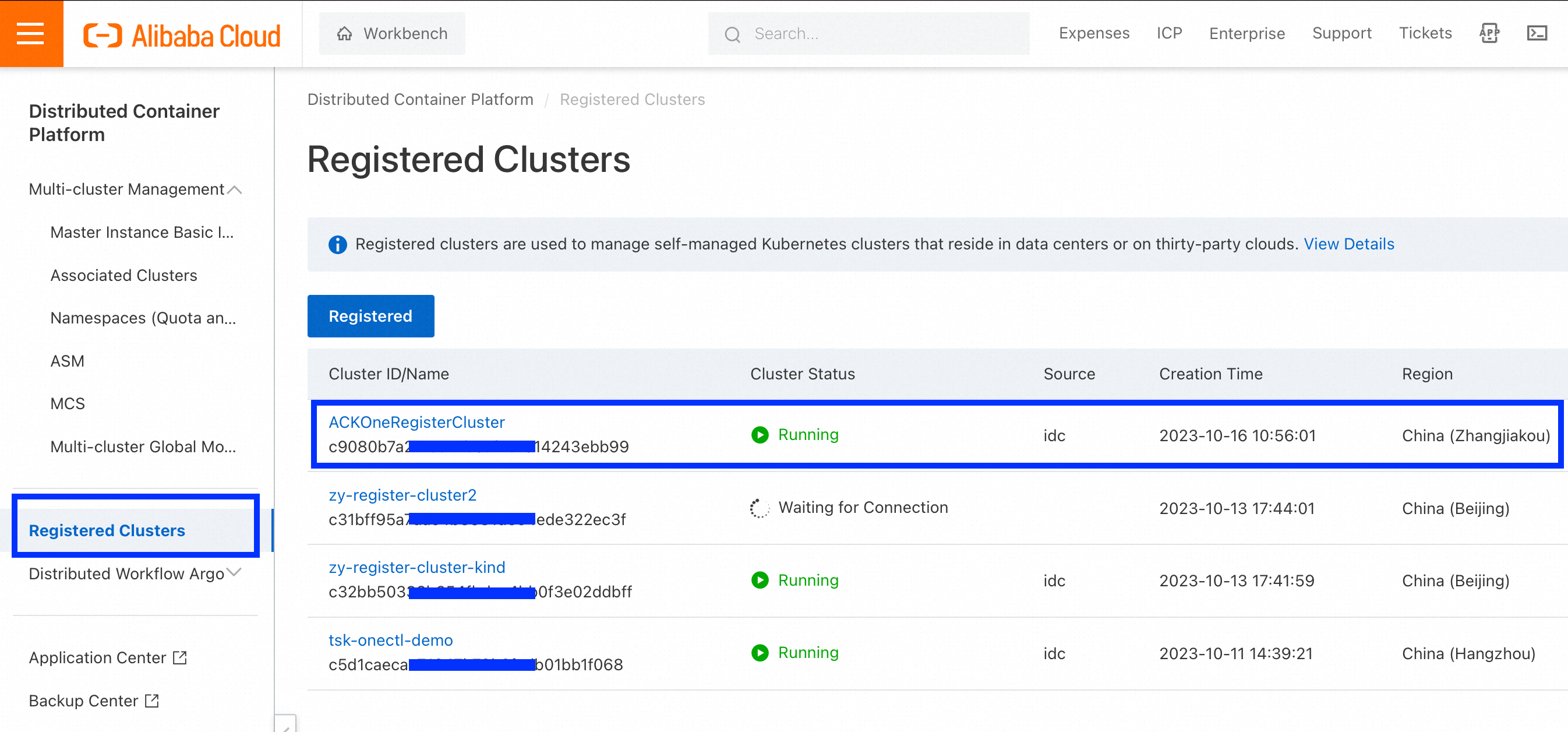

Go to the Registered Clusters page in the ACK One console:

https://cs.console.aliyun.com/one

The registered cluster ACKOneRegisterCluster1 has already been created and connected to a Kubernetes cluster in an on-premises IDC. For details, see Simplifying Kubernetes Multi-cluster Management with the Right Approach.

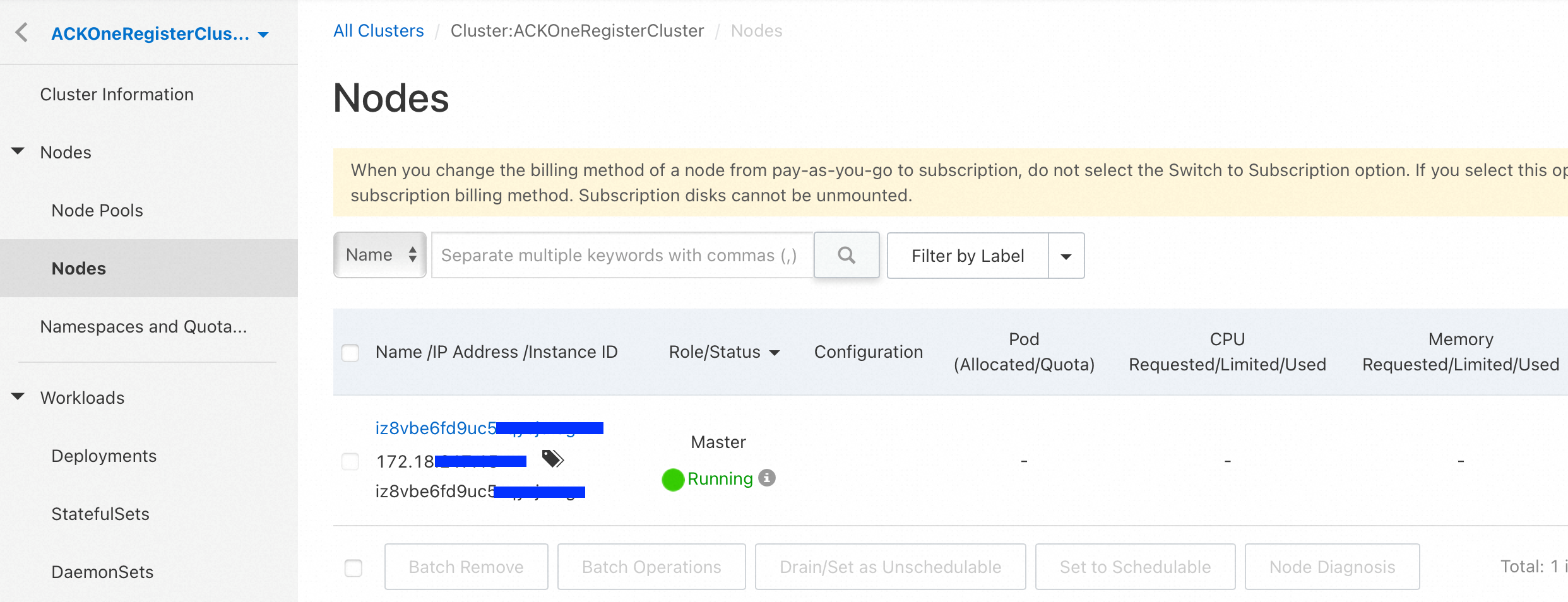

After the cluster is connected, you can view the on-premises IDC Kubernetes cluster in the ACK One console. At this point, the cluster has only one node, the master node.

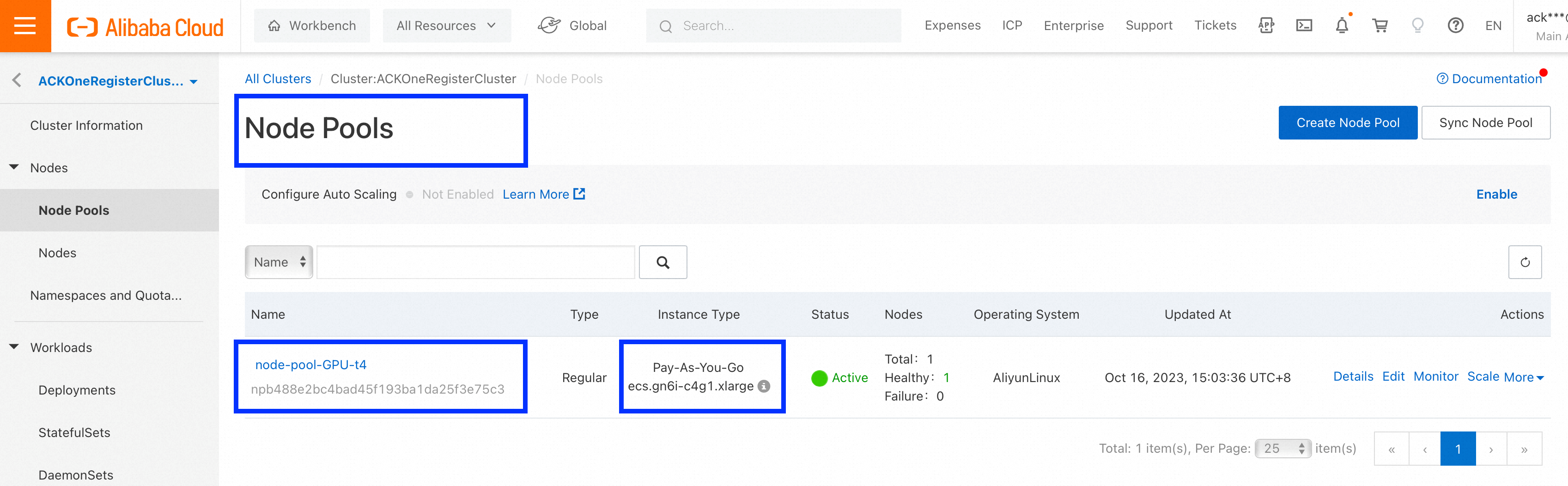

Create a node pool named GPU-P100 in the registered cluster to add a GPU-accelerated machine on the cloud to the Kubernetes cluster in the IDC.

Run kubectl in the IDC Kubernetes cluster to view the node information.

```shell
$ kubectl get node
NAME                           STATUS   ROLES    AGE     VERSION
cn-zhangjiakou.172.16.217.xx   Ready    <none>   5m35s   v1.20.9   # GPU-accelerated machine on the cloud
iz8vb1xtnuu0ne6b58hvx0z        Ready    master   20h     v1.20.9   # IDC machine
```

```shell
$ kubectl describe node cn-zhangjiakou.172.16.217.xx
Name:               cn-zhangjiakou.172.16.217.xx
Roles:              <none>
Labels:             aliyun.accelerator/nvidia_count=1                     # NVIDIA label
                    aliyun.accelerator/nvidia_mem=16280MiB                # NVIDIA label
                    aliyun.accelerator/nvidia_name=Tesla-P100-PCIE-16GB   # NVIDIA label
                    beta.kubernetes.io/arch=amd64
                    beta.kubernetes.io/os=linux
                    kubernetes.io/arch=amd64
                    kubernetes.io/hostname=cn-zhangjiakou.172.16.217.xx
                    kubernetes.io/os=linux
Capacity:
  cpu:                4
  ephemeral-storage:  123722704Ki
  hugepages-1Gi:      0
  hugepages-2Mi:      0
  memory:             30568556Ki
  nvidia.com/gpu:     1      # NVIDIA GPU
  pods:               110
Allocatable:
  cpu:                4
  ephemeral-storage:  114022843818
  hugepages-1Gi:      0
  hugepages-2Mi:      0
  memory:             30466156Ki
  nvidia.com/gpu:     1      # NVIDIA GPU
  pods:               110
System Info:
  OS Image:                   Alibaba Cloud Linux (Aliyun Linux) 2.1903 LTS (Hunting Beagle)
  Operating System:           linux
  Architecture:               amd64
  Container Runtime Version:  docker://19.3.13
  Kubelet Version:            v1.20.9
  Kube-Proxy Version:         v1.20.9
......
```

Submit a GPU test task to the Kubernetes cluster in the IDC. The test passed.

```shell
$ cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: gpu-pod
spec:
  restartPolicy: Never
  containers:
    - name: cuda-container
      image: acr-multiple-clusters-registry.cn-hangzhou.cr.aliyuncs.com/ack-multiple-clusters/cuda10.2-vectoradd
      resources:
        limits:
          nvidia.com/gpu: 1 # requesting 1 GPU
EOF

$ kubectl logs gpu-pod
[Vector addition of 50000 elements]
Copy input data from the host memory to the CUDA device
CUDA kernel launch with 196 blocks of 256 threads
Copy output data from the CUDA device to the host memory
Test PASSED
Done
```

Alibaba Cloud provides priority-based resource scheduling to meet elasticity requirements in pod scheduling. When you deploy or scale out an application, you can customize a resource policy to determine the order in which pods are scheduled to different types of node resources. When you scale in the application, pods are deleted from nodes in the reverse order.

By following the demonstration above, you can use the registered ACK One cluster to create node pools from ECS resources on the cloud and add them to the IDC cluster. You can then label the node pools or nodes and use nodeAffinity or nodeSelector to decide whether pods run on the on-premises IDC nodes or on the cloud ECS nodes. In this mode, however, you must modify the configuration of each application pod. If the production system runs a large number of applications, you must also write scheduling rules for each custom scheduling scenario, for example, scheduling a GPU training task that requires a specific CUDA version to a particular GPU-accelerated ECS instance on the cloud.
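As a minimal sketch of the nodeSelector approach, the following pod pins a CUDA workload to the cloud GPU node by matching the aliyun.accelerator/nvidia_name label that appears in the kubectl describe node output above. The pod name gpu-train-pod is hypothetical, and the image simply reuses the CUDA 10.2 test image from the demonstration as a stand-in for a real training image.

```yaml
# Hypothetical example: pin a GPU task to the cloud P100 node with nodeSelector.
apiVersion: v1
kind: Pod
metadata:
  name: gpu-train-pod            # hypothetical name
spec:
  restartPolicy: Never
  nodeSelector:
    # Label reported on the cloud GPU node in the demonstration above.
    aliyun.accelerator/nvidia_name: Tesla-P100-PCIE-16GB
  containers:
    - name: cuda-container
      # Stand-in image reused from the GPU test; replace it with your own training image.
      image: acr-multiple-clusters-registry.cn-hangzhou.cr.aliyuncs.com/ack-multiple-clusters/cuda10.2-vectoradd
      resources:
        limits:
          nvidia.com/gpu: 1      # request one GPU
```

nodeAffinity can express the same constraint with richer operators (for example, In over several GPU models) at the cost of a more verbose spec. Either way, every workload that should land on cloud nodes needs a change like this one, which is the per-pod overhead that multi-level elastic scheduling is meant to remove.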

To simplify how IDC Kubernetes clusters use ECS resources on the cloud, registered ACK One clusters provide a multi-level elastic scheduling feature. After you install the ack-co-scheduler component, you can define ResourcePolicy custom resource (CR) objects to use this feature.

ResourcePolicy is a namespaced custom resource. Its important parameters are as follows:

• selector: selects, by label, the pods in the same namespace to which the ResourcePolicy applies. For example, a selector of key1: value1 applies the policy to pods that carry the key1=value1 label.

• strategy: the scheduling policy. Currently, only prefer is supported.

• units: the schedulable units, listed in descending order of priority. During a scale-out activity, pods are scheduled to the units in the listed order. During a scale-in activity, pods are deleted from the units in the reverse order.

• resource: the type of elastic resource in a unit. Currently, IDC (idc), ECS (ecs), and Elastic Container Instance (eci) are supported.

• nodeSelector: selects the nodes in a scheduling unit by node label. This parameter applies only to ECS resources.

• max: the maximum number of pods that can be deployed on the resources of the unit.
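To show how these parameters fit together, here is a hedged sketch: a ResourcePolicy that fills IDC nodes first, then a cloud ECS node pool, then ECI, alongside a Deployment whose pods it selects. It assumes ack-co-scheduler is installed and that the cluster can already schedule pods to ECI. The names three-tier-policy and web, the label app: web, the max values, the nginx image, and the node pool ID placeholder are all hypothetical.

```yaml
# Hypothetical sketch: IDC first, then a cloud ECS node pool, then ECI.
apiVersion: scheduling.alibabacloud.com/v1alpha1
kind: ResourcePolicy
metadata:
  name: three-tier-policy        # hypothetical name
  namespace: default             # must be the namespace of the selected pods
spec:
  selector:
    app: web                     # applies to pods labeled app=web in this namespace
  strategy: prefer               # currently the only supported strategy
  units:                         # listed from highest to lowest priority
    - resource: idc
      max: 3                     # at most 3 pods on IDC nodes
    - resource: ecs
      nodeSelector:
        alibabacloud.com/nodepool-id: np-xxxxxxxx   # hypothetical: your ECS node pool ID
      max: 5                     # at most 5 pods in the ECS node pool
    - resource: eci              # remaining pods go to ECI (assumes ECI is available)
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web                      # hypothetical workload
  namespace: default
spec:
  replicas: 10
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web                 # matched by the ResourcePolicy selector
    spec:
      containers:
        - name: nginx
          image: nginx           # hypothetical image choice
```

Under the semantics described above, and assuming each unit has enough capacity, 10 replicas would be placed as 3 on IDC nodes, 5 in the ECS node pool, and the remaining 2 on ECI. Scaling back in would remove the ECI pods first, then the ECS pods, leaving the IDC pods for last.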

ResourcePolicy supports the following scenarios:

Scenario 1: Prefer IDC nodes, and use the cloud ECS node pool only when IDC node resources are insufficient.

```yaml
apiVersion: scheduling.alibabacloud.com/v1alpha1
kind: ResourcePolicy
metadata:
  name: cost-balance-policy
spec:
  selector:
    app: nginx            # Select the application pods.
  strategy: prefer
  units:
    - resource: idc       # Prioritize node resources in the IDC.
    - resource: ecs       # When IDC node resources are insufficient, use ECS resources on the cloud. Use nodeSelector to select nodes.
      nodeSelector:
        alibabacloud.com/nodepool-id: np7b30xxx
```

Scenario 2: Cap the number of application instances in the IDC and in the cloud.

```yaml
apiVersion: scheduling.alibabacloud.com/v1alpha1
kind: ResourcePolicy
metadata:
  name: load-balance-policy
spec:
  selector:
    app: nginx
  strategy: prefer
  units:
    - resource: idc
      max: 2              # Start at most two application instances on IDC nodes.
    - resource: ecs
      nodeSelector:
        alibabacloud.com/nodepool-id: np7b30xxx
      max: 4              # Start at most four application instances in the ECS node pool.
```

In the demonstration, we added an Alibaba Cloud GPU P100 machine to the Kubernetes cluster in the IDC to enhance the GPU computing power of the IDC.

Through an ACK One registered cluster, you can:

[1] Overview of Registered Clusters

https://www.alibabacloud.com/help/en/doc-detail/155208.html

[2] Create an ECS Node Pool

https://www.alibabacloud.com/help/en/doc-detail/208054.html

[3] Configure Auto Scaling of ECS Nodes

https://www.alibabacloud.com/help/en/doc-detail/208055.html

[4] Multi-level Elastic Scheduling

https://www.alibabacloud.com/help/en/doc-detail/446694.html
