Ray is an open-source unified framework for scaling AI and Python applications. Ray is widely adopted in the machine learning sector. You can quickly create a Ray cluster in a Container Service for Kubernetes (ACK) cluster and integrate the Ray cluster with Simple Log Service, Managed Service for Prometheus, and ApsaraDB for Redis to optimize log management, observability, and availability. The Ray autoscaler can work with the ACK autoscaler to improve the efficiency of computing resource scaling and increase the resource utilization.

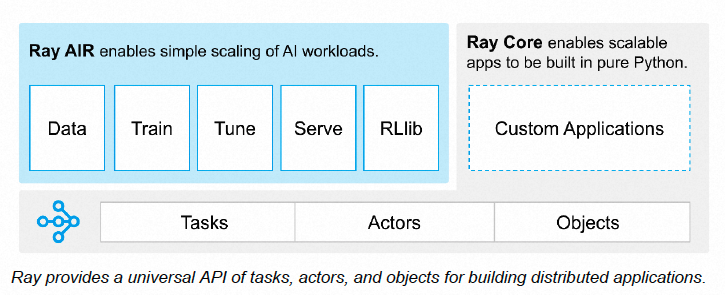

Ray provides an API that simplifies distributed computing so that you can efficiently develop parallel and distributed Python applications. The unified computing framework of Ray consists of three layers: Ray AI Libraries, Ray Core, and Ray Clusters.

Ray AI Libraries: an open-source, domain-specific set of Python libraries that provides ML engineers, data scientists, and researchers with a scalable and unified toolkit for machine learning applications.

Ray Core: an open-source, general-purpose distributed computing library for Python that allows machine learning engineers and Python developers to scale Python applications and accelerate machine learning workloads. Ray Core provides three core primitives: tasks, actors, and objects. Tasks are asynchronous Ray functions executed on workers. Actors extend the Ray API from functions (tasks) to classes. Tasks and actors create and compute on objects. For more information, see Ray Framework and What is Ray Core?.
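The three primitives can be sketched as follows. This is a minimal illustration rather than code from the original walkthrough: `square`, `Counter`, and `run_with_ray` are hypothetical names, and Ray is imported lazily so that the plain-Python definitions remain usable on a machine where Ray is not installed.

```python
def square(x: int) -> int:
    """Plain function; it becomes a Ray task once wrapped with ray.remote."""
    return x * x


class Counter:
    """Plain class; it becomes a Ray actor (a stateful worker) once wrapped with ray.remote."""

    def __init__(self) -> None:
        self.value = 0

    def add(self, amount: int) -> int:
        self.value += amount
        return self.value


def run_with_ray() -> tuple:
    """Run the same logic through Ray. Assumes the `ray` package is installed."""
    import ray  # lazy import: the definitions above do not depend on Ray

    ray.init(ignore_reinit_error=True)

    remote_square = ray.remote(square)    # task: stateless remote function
    remote_counter = ray.remote(Counter)  # actor: stateful remote class

    # .remote() returns immediately with object references (futures).
    refs = [remote_square.remote(i) for i in range(4)]
    counter = remote_counter.remote()
    total_ref = counter.add.remote(10)

    # ray.get() blocks until the referenced objects are available.
    return ray.get(refs), ray.get(total_ref)
```

Calling `run_with_ray()` on a machine with Ray installed starts a local Ray instance if none is running. The object references returned by `.remote()` are the "objects" that tasks and actors compute on.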

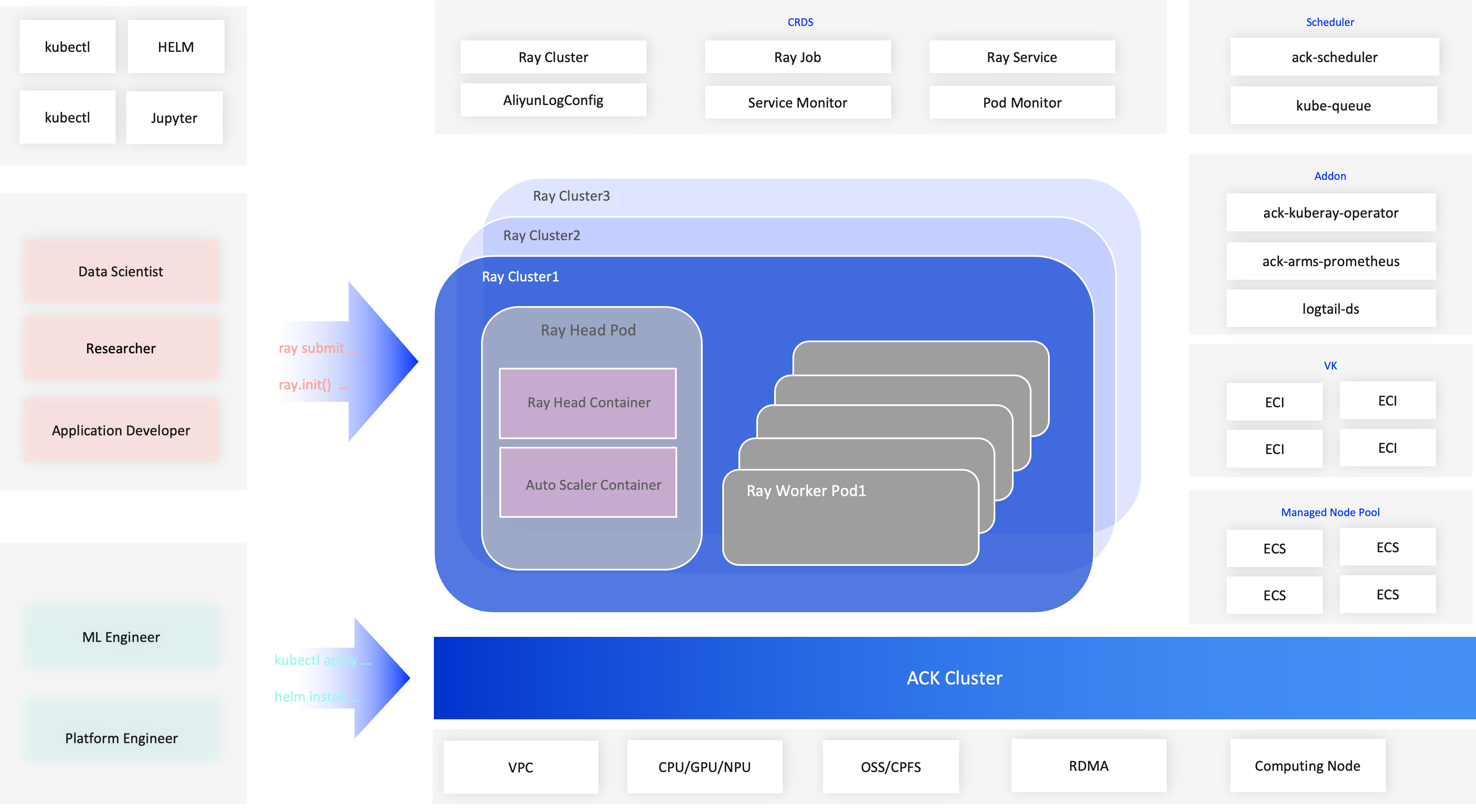

Ray Clusters: a Ray cluster consists of a head node and multiple worker nodes. The head node manages the Ray cluster and the worker nodes run computing tasks. Worker nodes communicate and collaborate with the head node through network connections. You can deploy a Ray cluster on physical machines, virtual machines, Kubernetes, or cloud computing platforms. For more information, see Ray Cluster Overview and Key Concepts.

To deploy a Ray application across multiple machines in a production environment, you must first create a Ray cluster that consists of a head node and worker nodes. Ray nodes run as pods in Kubernetes. You can use the Ray autoscaler to perform auto scaling.

To deploy a Ray cluster in Kubernetes, we recommend that you use KubeRay. For more information, see Getting Started with KubeRay - Ray 2.9.1.

The following figure shows the architecture of a Ray cluster.

The KubeRay operator provides a Kubernetes-native way to manage Ray clusters. You can use the KubeRay operator to deploy Ray clusters in Kubernetes environments, including ACK clusters. When you install the KubeRay operator, you need to deploy the operator Deployment and the RayCluster, RayJob, and RayService CustomResourceDefinitions (CRDs).
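To make the RayCluster custom resource more concrete, the following sketch builds a minimal manifest as a Python dictionary. It is an illustration only: the `build_raycluster_manifest` helper, the container names, and the default replica bounds are assumptions, the `ray.io/v1` API version depends on the KubeRay release (older releases use `ray.io/v1alpha1`), and the resource that the ack-ray-cluster chart actually generates may differ.

```python
import json


def build_raycluster_manifest(name: str, namespace: str, image: str,
                              min_workers: int = 0, max_workers: int = 10) -> dict:
    """Return a minimal RayCluster custom resource as a Python dict (illustrative)."""
    return {
        "apiVersion": "ray.io/v1",  # assumption: depends on the installed KubeRay version
        "kind": "RayCluster",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Let the Ray autoscaler adjust the number of worker pods.
            "enableInTreeAutoscaling": True,
            "headGroupSpec": {
                # num-cpus=0 keeps tasks from being scheduled on the head pod.
                "rayStartParams": {"num-cpus": "0"},
                "template": {"spec": {"containers": [
                    {"name": "ray-head", "image": image},
                ]}},
            },
            "workerGroupSpecs": [{
                "groupName": "workergroup",
                "replicas": min_workers,
                "minReplicas": min_workers,
                "maxReplicas": max_workers,
                "rayStartParams": {},
                "template": {"spec": {"containers": [
                    {"name": "ray-worker", "image": image},
                ]}},
            }],
        },
    }


manifest = build_raycluster_manifest(
    "myfirst-ray-cluster", "raycluster", "rayproject/ray:2.7.0")
print(json.dumps(manifest, indent=2))
```

The printed JSON has the same shape as the YAML that a RayCluster definition usually takes: one head group and one or more worker groups, each with its own start parameters and pod template.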

Ray on Kubernetes can greatly simplify the deployment and management of distributed applications. Ray on Kubernetes provides the following benefits. For more information, see Ray on Kubernetes.

Container Service for Kubernetes (ACK) is one of the first services to participate in the Certified Kubernetes Conformance Program in the world. ACK provides high-performance containerized application management services and supports lifecycle management for enterprise-class containerized applications. You can use KubeRay to create Ray clusters in ACK clusters in the same way you create ACK clusters in the cloud.

After you create a Ray cluster in an ACK cluster, you can use Simple Log Service, Managed Service for Prometheus, and ApsaraDB for Redis to improve log management, observability, and availability. In addition to fees incurred by ACK, you must also pay for other resources. For more information about billing, see the following topics:

An ACK Pro cluster is created. The cluster meets the following requirements.

For more information about how to create an ACK cluster, see Create an ACK managed cluster. For more information about how to update an ACK cluster, see Update an ACK cluster.

(Optional) An ApsaraDB for Redis instance is created. The instance meets the following requirements.

To deploy a Ray cluster that supports high availability and fault tolerance, an ApsaraDB for Redis instance is used in this example. You can choose to create an ApsaraDB for Redis instance on demand.

The ack-kuberay-operator component is available in the marketplace of the ACK console. This component integrates the open source KubeRay component, enhances the KubeRay component, and minimizes the permissions of the KubeRay component.

After ack-kuberay-operator is deployed, you are redirected to the Helm page. You can view the Helm information of ack-kuberay-operator on this page.

Check whether ack-kuberay-operator runs as normal

Run the following command to verify that the operator pod in the kuberay-system namespace is in the Running state:

    kubectl get pod -n kuberay-system

The output indicates that ack-kuberay-operator is installed.

    NAME                                   READY   STATUS    RESTARTS   AGE
    ack-kuberay-operator-88c879859-f467l   1/1     Running   0          3m48s

The ack-ray-cluster component provides additional Value configurations to allow you to integrate with Alibaba Cloud services, such as Simple Log Service, Managed Service for Prometheus, and ApsaraDB for Redis. You can use ack-ray-cluster to deploy a Ray cluster to run Ray tasks.

In this step, a Ray cluster named myfirst-ray-cluster is created in the raycluster namespace.

1. Run the following commands to add the aliyunhub Helm repository and update it:

    helm repo add aliyunhub https://aliacs-app-catalog.oss-cn-hangzhou.aliyuncs.com/charts-incubator/
    helm repo update aliyunhub

2. Run the following command to search for the ack-ray-cluster chart:

    helm search repo ack-ray-cluster

The output indicates that the ack-ray-cluster chart is available in the repository.

    NAME                        CHART VERSION   APP VERSION   DESCRIPTION
    aliyunhub/ack-ray-cluster   1.0.0           1.0.0         A ray cluster for Alibaba Cloud

Note

By default, the KubeRay autoscaler is enabled for ack-ray-cluster. By default, Ray clusters use the official image rayproject/ray:2.7.0 provided by the Ray community. For other settings, refer to the Value configurations in the ack-ray-cluster chart.

3. Run the following commands to configure environment variables:

    export RAY_CLUSTER_NAME='myfirst-ray-cluster'
    export RAY_CLUSTER_NS='raycluster'

4. Run the following command to create a namespace:

    kubectl create ns ${RAY_CLUSTER_NS}

The output indicates that the namespace is created.

    namespace/raycluster created

5. Run the following command to create a Ray cluster in the ${RAY_CLUSTER_NS} namespace:

    helm install ${RAY_CLUSTER_NAME} aliyunhub/ack-ray-cluster -n ${RAY_CLUSTER_NS}

The output indicates that the Ray cluster is created.

    NAME: myfirst-ray-cluster
    LAST DEPLOYED: Tue Feb 6 09:48:29 2024
    NAMESPACE: raycluster
    STATUS: deployed
    REVISION: 1
    TEST SUITE: None

6. Run the following commands to check whether the Ray cluster, Service, and pods are created.

a) Query the Ray cluster.

    kubectl get rayclusters.ray.io -n ${RAY_CLUSTER_NS}

The output indicates that the Ray cluster is created.

    NAME                  DESIRED WORKERS   AVAILABLE WORKERS   STATUS   AGE
    myfirst-ray-cluster                                                  49s

b) Query Services.

    kubectl get svc -n ${RAY_CLUSTER_NS}

The output indicates that a ClusterIP Service is created.

    NAME                           TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                                          AGE
    myfirst-ray-cluster-head-svc   ClusterIP   192.168.36.189   <none>        10001/TCP,8265/TCP,8080/TCP,6379/TCP,8000/TCP   82s

c) Query pods.

    kubectl get pod -n ${RAY_CLUSTER_NS}

The output indicates that pods are created for the Ray cluster.

    NAME                                      READY   STATUS    RESTARTS   AGE
    ray-cluster-01-head-zx88p                 2/2     Running   0          27s
    ray-cluster-01-worker-workergroup-nt9wv   1/1     Running   0          27s

7. (Optional) Run the following command to create another Ray cluster named mysecond-ray-cluster in the default namespace.

Note

You can use the ack-ray-cluster component to create multiple Ray clusters in an ACK cluster.

    helm install mysecond-ray-cluster aliyunhub/ack-ray-cluster

You can integrate Simple Log Service with a Ray cluster to persist logs.

1. Run the following command to create a global AliyunLogConfig object to enable the Logtail component in the ACK cluster to collect logs generated by the pods of Ray clusters and deliver the logs to a Simple Log Service project.

    cat <<EOF | kubectl apply -f -
    apiVersion: log.alibabacloud.com/v1alpha1
    kind: AliyunLogConfig
    metadata:
      name: rayclusters
      namespace: kube-system
    spec:
      # The name of the Logstore. If the specified Logstore does not exist, Simple Log Service automatically creates a Logstore.
      logstore: rayclusters
      # Configure Logtail.
      logtailConfig:
        # The type of data source. If you want to collect text logs, you must set the value to file.
        inputType: file
        # The name of the Logtail configuration. The name must be the same as the resource name that is specified in metadata.name.
        configName: rayclusters
        inputDetail:
          # Configure Logtail to collect text logs in simple mode.
          logType: common_reg_log
          # The path of the log file.
          logPath: /tmp/ray/session_*-*-*_*/logs
          # The name of the log file. You can use wildcard characters such as asterisks (*) and question marks (?) when you specify the log file name. Example: log_*.log.
          filePattern: "*.*"
          # If you want to collect container text logs, you must set dockerFile to true.
          dockerFile: true
          # The conditions that are used to filter containers.
          advanced:
            k8s:
              IncludeK8sLabel:
                ray.io/is-ray-node: "yes"
              ExternalK8sLabelTag:
                ray.io/cluster: "_raycluster_name_"
                ray.io/node-type: "_node_type_"
    EOF

| Parameter | Description |
| --- | --- |
| logPath | Collect all logs in the /tmp/ray/session_*-*-*_*/logs directory of the pods. You can specify a custom path. |
| advanced.k8s.ExternalK8sLabelTag | Add tags to the collected logs for log retrieval. By default, the _raycluster_name_ and _node_type_ tags are added. |

For more information about the AliyunLogConfig parameters, see Use CRDs to collect container logs in DaemonSet mode. Simple Log Service is a paid service. For more information, see Billing overview.

2. Log on to the ACK console and click Clusters in the left-side navigation pane.

3. On the Clusters page, click the name of the cluster that you want to manage and click Cluster Information in the left-side navigation pane.

4. On the Cluster Information page, click the Cluster Resources tab. Click the hyperlink next to Log Service Project to access the Simple Log Service project.

5. Select the Logstore that corresponds to rayclusters and view the log content.

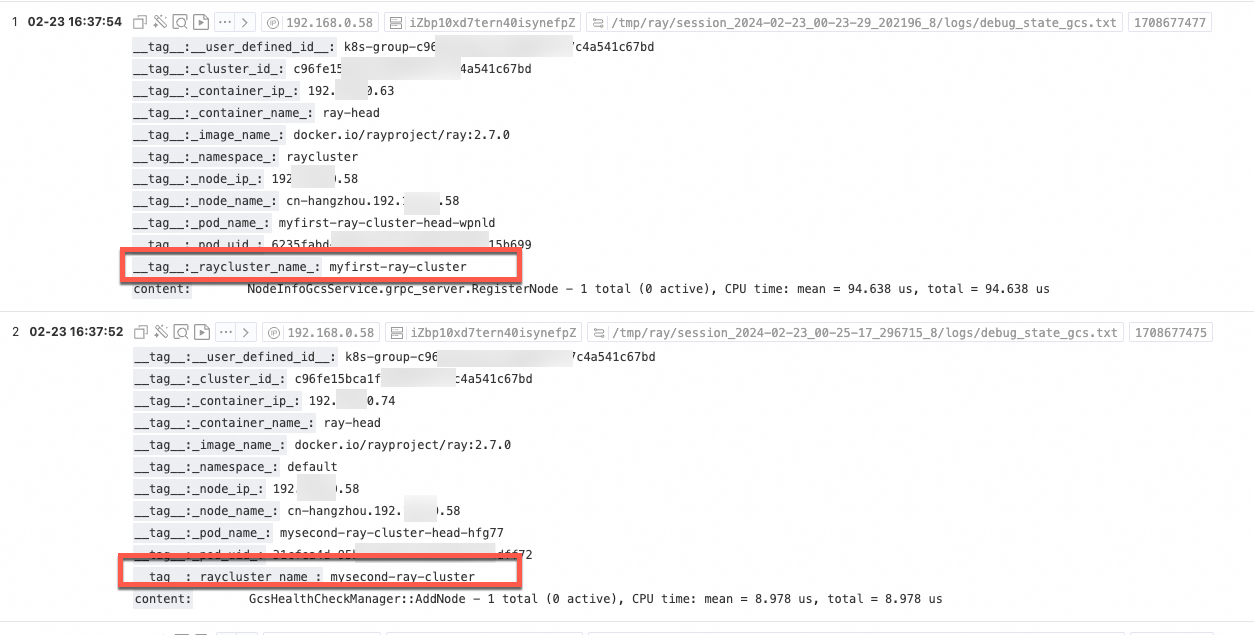

You can view the logs of different Ray clusters based on tags, such as _raycluster_name_.

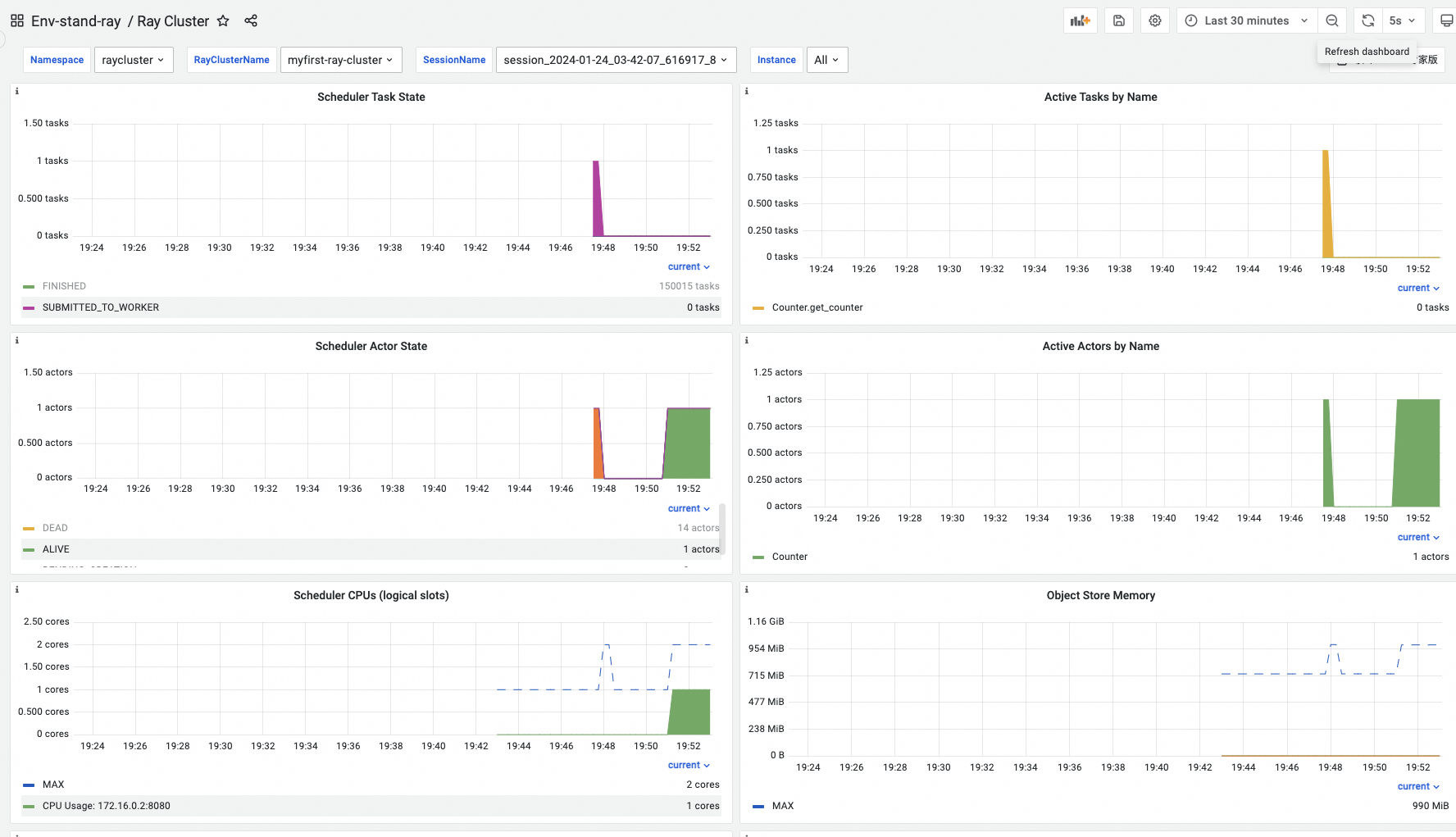
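To see which directories the logPath wildcard in the Logtail configuration is meant to cover, the following sketch checks two directory names against the pattern with Python's `fnmatch` module. The directory names are hypothetical examples, and Logtail's own wildcard matching may not behave exactly like `fnmatch`, so treat this only as a way to reason about the pattern.

```python
from fnmatch import fnmatchcase

# Value of logPath in the AliyunLogConfig object.
LOG_PATH_PATTERN = "/tmp/ray/session_*-*-*_*/logs"


def is_collected(directory: str) -> bool:
    """Return True if the directory matches the logPath wildcard pattern."""
    return fnmatchcase(directory, LOG_PATH_PATTERN)


# Hypothetical directory names, for illustration only.
timestamped = "/tmp/ray/session_2024-02-06_09-48-29_123456_1/logs"
latest_link = "/tmp/ray/session_latest/logs"

print(is_collected(timestamped))  # True
print(is_collected(latest_link))  # False
```

Under this reading, the hyphens in the pattern restrict collection to timestamped session directories, so a path such as `session_latest` is not matched and its logs are not collected a second time.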

The ack-ray-cluster component is integrated with Managed Service for Prometheus. To use the Ray cluster monitoring feature, perform the following steps to install ack-ray-cluster. For more information, see Managed Service for Prometheus.

1. Run the following commands to reinstall ack-ray-cluster with armsPrometheus.enable set to true in the values configuration.

    helm uninstall ${RAY_CLUSTER_NAME} -n ${RAY_CLUSTER_NS}
    helm install ${RAY_CLUSTER_NAME} aliyunhub/ack-ray-cluster -n ${RAY_CLUSTER_NS} --set armsPrometheus.enable=true

2. Log on to the ARMS console.

3. In the left-side navigation pane, click Integration Center. On the Infrastructure page, find and select Ray. In the Ray panel, select the ACK cluster that you created and click OK.

4. After the ACK cluster is integrated with Managed Service for Prometheus, click Integration Management to go to the Integration Management page.

5. On the Component Management tab, click Dashboards in the Component Type section and click Ray Cluster.

6. Specify Namespace, RayClusterName, and SessionName to filter the monitoring data of tasks that run in the Ray clusters.

The Global Control Service (GCS) component manages the metadata of Ray clusters. By default, GCS stores all data in memory and is therefore not fault tolerant: when GCS is down, the entire Ray cluster fails. To enable fault tolerance for GCS, you need to create a highly available ApsaraDB for Redis instance for the Ray cluster. When GCS restarts, it retrieves all data from the ApsaraDB for Redis instance and resumes normal operation. The ack-ray-cluster component supports integration with ApsaraDB for Redis to enable fault tolerance for GCS. For more information, see KubeRay GCS Fault Toleration Config and GCS Fault Toleration in KubeRay.
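The difference between memory-only and externally backed metadata can be illustrated with a toy model. The sketch below is not the GCS implementation: `ToyControlService` and `restart` are hypothetical names, and a plain dictionary stands in for the ApsaraDB for Redis instance.

```python
class ToyControlService:
    """Toy stand-in for a metadata service; not the real GCS."""

    def __init__(self, external_store=None):
        # external_store plays the role of Redis; None means memory-only.
        self._external = external_store
        # On startup, reload whatever the external store already holds.
        self._memory = dict(external_store) if external_store is not None else {}

    def put(self, key, value):
        self._memory[key] = value
        if self._external is not None:
            self._external[key] = value  # persist outside the process

    def get(self, key):
        return self._memory.get(key)


def restart(external_store=None):
    """Simulate a restart: in-memory state is lost, external state is reloaded."""
    return ToyControlService(external_store)


# Memory-only service: metadata does not survive a restart.
service = ToyControlService()
service.put("actor-1", "ALIVE")
service = restart()
print(service.get("actor-1"))  # None

# Externally backed service: metadata is reloaded after a restart.
redis_like = {}
service = ToyControlService(redis_like)
service.put("actor-1", "ALIVE")
service = restart(redis_like)
print(service.get("actor-1"))  # ALIVE
```

The second half mirrors the behavior described above: because every write also lands in the external store, a restarted service can rebuild its state instead of starting empty.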

1. Run the following commands to create a Secret that stores the endpoint and password of the ApsaraDB for Redis instance.

Replace the values of REDIS_PASSWORD and RAY_REDIS_ADDRESS with the password and endpoint of the ApsaraDB for Redis instance. Specify RAY_REDIS_ADDRESS in the redis://{Endpoint of the ApsaraDB for Redis instance}:6379 format. For more information about how to obtain the password of the ApsaraDB for Redis instance, see Change or reset the password.

    export REDIS_PASSWORD='your redis password'
    export RAY_REDIS_ADDRESS='redis://<Endpoint of the ApsaraDB for Redis instance>:6379'
    kubectl create secret generic ${RAY_CLUSTER_NAME}-raycluster-redis -n ${RAY_CLUSTER_NS} --from-literal=address="${RAY_REDIS_ADDRESS}" --from-literal=password="${REDIS_PASSWORD}"

Note

The Secret is named in the ${RAY_CLUSTER_NAME}-raycluster-redis format. Do not change the Secret name.

2. Run the following command to create a Ray cluster:

helm uninstall ${RAY_CLUSTER_NAME} -n ${RAY_CLUSTER_NS}

helm install ${RAY_CLUSTER_NAME} aliyunhub/ack-ray-cluster -n ${RAY_CLUSTER_NS} --set armsPrometheus.enable=true --set gcsFaultTolerance.enable=trueWhen gcsFaultTolerance.enable is set to true, the ray.io/ft-enabled: "true" annotation is added to the Ray cluster to enable GCS fault tolerance and mount the Secret to the Ray cluster through environment parameters.

3. Run the following command to query the Ray cluster:

kubectl get rayclusters.ray.io ${RAY_CLUSTER_NAME} -n ${RAY_CLUSTER_NS}

# Expected output:

NAME DESIRED WORKERS AVAILABLE WORKERS STATUS AGE

myfirst-ray-cluster 0 0 1 11m4. Run the following command to query the pods of the Ray cluster:

kubectl get pod -n ${RAY_CLUSTER_NS}

# Expected output:

NAME READY STATUS RESTARTS AGE

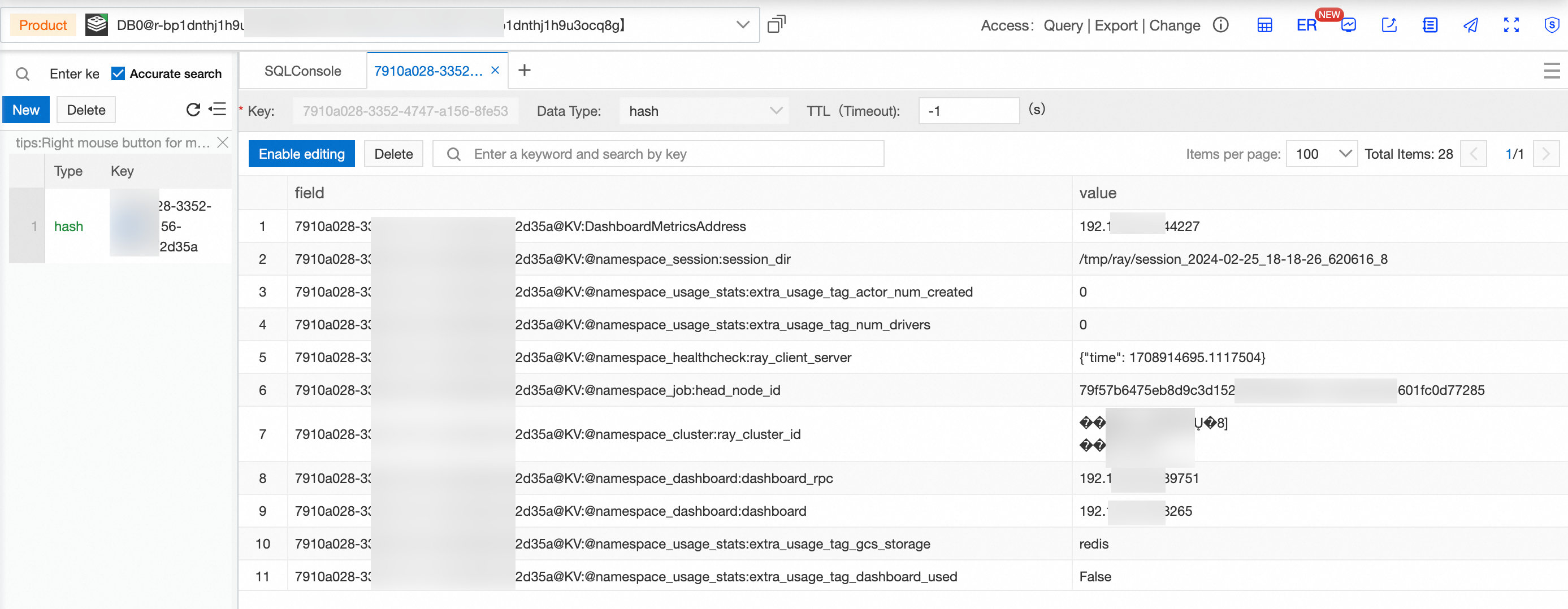

myfirst-ray-cluster-head-vrltd 2/2 Running 0 12m5. View data on the ApsaraDB for Redis instance. For more information, see Manage ApsaraDB for Redis instances by using DMS.

The figure indicates that the GCS information of the Ray cluster is stored on the ApsaraDB for Redis instance. When you delete the Ray cluster, the relevant GCS information is deleted from the ApsaraDB for Redis instance.
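The Secret naming convention and the address format from step 1 can be captured in a few helper functions. This is a sketch under stated assumptions: the function names are hypothetical, and the endpoint in the usage example is a placeholder, not a real instance.

```python
from typing import List


def redis_secret_name(cluster_name: str) -> str:
    """Secret name in the <cluster name>-raycluster-redis format."""
    return f"{cluster_name}-raycluster-redis"


def redis_address(endpoint: str, port: int = 6379) -> str:
    """Address in the redis://<endpoint>:<port> format."""
    return f"redis://{endpoint}:{port}"


def create_secret_command(cluster_name: str, namespace: str,
                          endpoint: str, password: str) -> List[str]:
    """Build the kubectl arguments as a list, so no shell quoting is needed."""
    return [
        "kubectl", "create", "secret", "generic",
        redis_secret_name(cluster_name),
        "-n", namespace,
        f"--from-literal=address={redis_address(endpoint)}",
        f"--from-literal=password={password}",
    ]


# Placeholder endpoint and password, for illustration only.
args = create_secret_command(
    "myfirst-ray-cluster", "raycluster",
    "redis.example.internal", "your redis password")
print(args)
```

Passing the list to `subprocess.run(args, check=True)` would create the Secret. Building an argument list rather than a shell string keeps a password that contains spaces or special characters intact.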

Ray provides the Ray autoscaler, which dynamically adjusts the computing resources of the Ray cluster based on workloads. Container Service for Kubernetes (ACK) provides the ACK autoscaler, which automatically adjusts the number of nodes based on the workloads in the cluster. Used together, the Ray autoscaler and the ACK autoscaler take full advantage of the elasticity of cloud computing and improve the efficiency and cost-effectiveness of computing resources.

1. Run the following commands to deploy a Ray cluster by using Helm in the ACK cluster:

    helm uninstall ${RAY_CLUSTER_NAME} -n ${RAY_CLUSTER_NS}
    helm install ${RAY_CLUSTER_NAME} aliyunhub/ack-ray-cluster -n ${RAY_CLUSTER_NS}

2. Run the following command to view the status of resources in the Ray cluster:

    kubectl get pod -n ${RAY_CLUSTER_NS}

    NAME                             READY   STATUS    RESTARTS   AGE
    myfirst-ray-cluster-head-kvvdf   2/2     Running   0          22m

3. Run the following commands to log on to the head node and view the cluster status:

Replace the value with the actual pod name of the Ray cluster.

    kubectl -n ${RAY_CLUSTER_NS} exec -it myfirst-ray-cluster-head-kvvdf -- bash
    (base) ray@myfirst-ray-cluster-head-kvvdf:~$ ray status

Expected output:

    ======== Autoscaler status: 2024-01-25 00:00:19.879963 ========
    Node status
    ---------------------------------------------------------------
    Healthy:
     1 head-group
    Pending:
     (no pending nodes)
    Recent failures:
     (no failures)
    Resources
    ---------------------------------------------------------------
    Usage:
     0B/1.86GiB memory
     0B/452.00MiB object_store_memory
    Demands:
     (no resource demands)

4. Submit and run the following jobs in the Ray cluster:

The following code starts 15 tasks, each of which requires one vCPU. By default, the value of --num-cpus for the head pod is 0, which means that task scheduling is not allowed. The CPU and memory of the worker pod are set to 1 vCPU and 1 GB by default. Therefore, the Ray cluster automatically creates 15 worker pods. Due to insufficient node resources in the ACK cluster, pods in the pending state automatically trigger the node auto scaling feature.

    # Job script: start 15 tasks that each reserve one vCPU.
    import time
    import ray
    import socket

    ray.init()

    @ray.remote(num_cpus=1)
    def get_task_hostname():
        # Keep the task alive long enough for the autoscaler to react.
        time.sleep(120)
        host = socket.gethostbyname(socket.gethostname())
        return host

    object_refs = []
    for _ in range(15):
        object_refs.append(get_task_hostname.remote())

    ray.wait(object_refs)

    # Print the IP address of the worker that ran each task.
    for t in object_refs:
        print(ray.get(t))

5. Run the following command to query the status of pods in the Ray cluster:

    kubectl get pod -n ${RAY_CLUSTER_NS} -w

Expected output:

    NAME                                           READY   STATUS    RESTARTS   AGE
    myfirst-ray-cluster-head-kvvdf                 2/2     Running   0          47m
    myfirst-ray-cluster-worker-workergroup-btgmm   1/1     Running   0          30s
    myfirst-ray-cluster-worker-workergroup-c2lmq   0/1     Pending   0          30s
    myfirst-ray-cluster-worker-workergroup-gstcc   0/1     Pending   0          30s
    myfirst-ray-cluster-worker-workergroup-hfshs   0/1     Pending   0          30s
    myfirst-ray-cluster-worker-workergroup-nrfh8   1/1     Running   0          30s
    myfirst-ray-cluster-worker-workergroup-pjbdw   0/1     Pending   0          29s
    myfirst-ray-cluster-worker-workergroup-qxq7v   0/1     Pending   0          30s
    myfirst-ray-cluster-worker-workergroup-sm8mt   1/1     Running   0          30s
    myfirst-ray-cluster-worker-workergroup-wr87d   0/1     Pending   0          30s
    myfirst-ray-cluster-worker-workergroup-xc4kn   1/1     Running   0          30s
    ...

6. Run the following command to query the node status:

kubectl get node -w

# Expected output:

cn-hangzhou.172.16.0.204 Ready <none> 44h v1.24.6-aliyun.1

cn-hangzhou.172.16.0.17 NotReady <none> 0s v1.24.6-aliyun.1

cn-hangzhou.172.16.0.17 NotReady <none> 0s v1.24.6-aliyun.1

cn-hangzhou.172.16.0.17 NotReady <none> 0s v1.24.6-aliyun.1

cn-hangzhou.172.16.0.17 NotReady <none> 1s v1.24.6-aliyun.1

cn-hangzhou.172.16.0.17 NotReady <none> 11s v1.24.6-aliyun.1

cn-hangzhou.172.16.0.16 NotReady <none> 10s v1.24.6-aliyun.1

cn-hangzhou.172.16.0.16 NotReady <none> 14s v1.24.6-aliyun.1

cn-hangzhou.172.16.0.17 NotReady <none> 31s v1.24.6-aliyun.1

cn-hangzhou.172.16.0.17 NotReady <none> 60s v1.24.6-aliyun.1

cn-hangzhou.172.16.0.17 Ready <none> 61s v1.24.6-aliyun.1

cn-hangzhou.172.16.0.16 Ready <none> 64s v1.24.6-aliyun.1

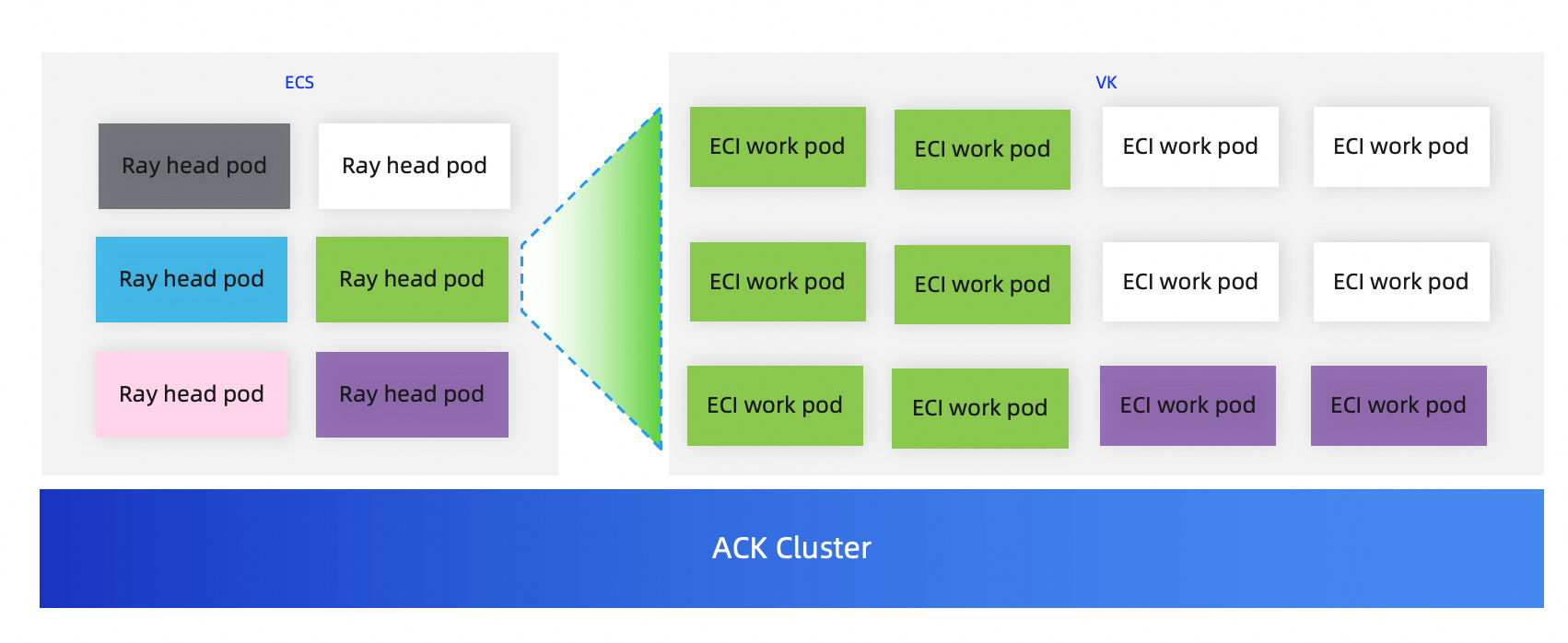

...By integrating with the Ray autoscaler, you can create a Ray cluster in a Container Service for Kubernetes (ACK) cluster and configure it to automatically scale Elastic Container Instance nodes in the Ray cluster. This allows you to benefit from a container runtime environment that is maintenance-free, isolated, and quick to start up. Elastic Container Instance allows you to run containers without the need to purchase or manage the underlying Elastic Compute Service(ECS) instances. You can focus on containerized applications rather than the maintenance of the underlying infrastructure. You are charged only for the resources used by your containers.

1. Run the following command to query the nodes and check whether the virtual-kubelet virtual node is added:

    kubectl get node

Expected output:

    NAME                            STATUS   ROLES    AGE   VERSION
    cn-hangzhou.172.XX.XX.20        Ready    <none>   19h   v1.26.3-aliyun.1
    cn-hangzhou.172.XX.XX.236       Ready    <none>   82m   v1.26.3-aliyun.1
    cn-hangzhou.172.XX.XX.41        Ready    <none>   19h   v1.26.3-aliyun.1
    virtual-kubelet-cn-hangzhou-k   Ready    agent    16m   v1.26.3-aliyun.1

2. Run the following command to create a file named values.yaml:

    cat > values.yaml <<EOF
    worker:
      groupName: workergroup
      labels:
        alibabacloud.com/eci: "true"
    EOF

3. Run the following commands to deploy a Ray cluster that supports Elastic Container Instance:

    helm uninstall ${RAY_CLUSTER_NAME} -n ${RAY_CLUSTER_NS}
    helm install ${RAY_CLUSTER_NAME} aliyunhub/ack-ray-cluster -n ${RAY_CLUSTER_NS} -f values.yaml

4. Run the following command to check whether the pod of the Ray cluster runs as normal:

    kubectl get pod -n ${RAY_CLUSTER_NS} -o wide

Expected output:

    NAME                             READY   STATUS    RESTARTS   AGE    IP             NODE                       NOMINATED NODE   READINESS GATES
    myfirst-ray-cluster-head-7fgp4   2/2     Running   0          7m2s   172.16.0.241   cn-hangzhou.172.16.0.240   <none>           <none>

5. Run the following command to log on to the head node:

Replace the value with the actual pod name of the Ray cluster.

    kubectl -n ${RAY_CLUSTER_NS} exec -it myfirst-ray-cluster-head-7fgp4 -- bash

6. Submit and run a Python job in the Ray cluster.

The following code starts two tasks, each of which requires one vCPU. By default, the value of --num-cpus for the head pod is 0, which means that task scheduling is not allowed. The CPU and memory of the worker pod are set to 1 vCPU and 1 GB by default. Therefore, the Ray cluster automatically creates two Elastic Container Instance worker pods.

    # Job script: start two tasks that each reserve one vCPU.
    import time
    import ray
    import socket

    ray.init()

    @ray.remote(num_cpus=1)
    def get_task_hostname():
        # Keep the task alive long enough for the autoscaler to react.
        time.sleep(120)
        host = socket.gethostbyname(socket.gethostname())
        return host

    object_refs = []
    for _ in range(2):
        object_refs.append(get_task_hostname.remote())

    ray.wait(object_refs)

    # Print the IP address of the worker that ran each task.
    for t in object_refs:
        print(ray.get(t))

7. Run the following command to check whether the pods run as expected:

    kubectl get pod -n ${RAY_CLUSTER_NS} -o wide

Expected output:

    NAME                                           READY   STATUS     RESTARTS   AGE     IP             NODE                            NOMINATED NODE   READINESS GATES
    myfirst-ray-cluster-head-7fgp4                 2/2     Running    0          4m56s   172.16.0.241   cn-hangzhou.172.16.0.240        <none>           <none>
    myfirst-ray-cluster-worker-workergroup-6s2cl   0/1     Init:0/1   0          4m5s    172.16.0.17    virtual-kubelet-cn-hangzhou-k   <none>           <none>
    myfirst-ray-cluster-worker-workergroup-l9qgb   1/1     Running    0          4m5s    172.16.0.16    virtual-kubelet-cn-hangzhou-k   <none>
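Both job examples rely on the same arithmetic: the head pod offers no CPUs for scheduling, each worker pod offers one vCPU, and each task reserves one vCPU, so the number of worker pods equals the number of tasks. The sketch below reproduces that estimate. The `workers_needed` helper is hypothetical, and it ignores limits that the real autoscaler applies, such as the maximum number of replicas of a worker group.

```python
import math


def workers_needed(num_tasks: int, cpus_per_task: int = 1,
                   cpus_per_worker: int = 1, head_cpus: int = 0) -> int:
    """Estimate how many worker pods are required to run all tasks at once."""
    if cpus_per_task > cpus_per_worker:
        raise ValueError("a single task does not fit on one worker pod")
    # Tasks that fit on the head pod (none when --num-cpus is 0).
    tasks_on_head = min(num_tasks, head_cpus // cpus_per_task)
    # Whole tasks that fit on one worker pod.
    tasks_per_worker = cpus_per_worker // cpus_per_task
    return math.ceil((num_tasks - tasks_on_head) / tasks_per_worker)


print(workers_needed(15))  # 15
print(workers_needed(2))   # 2
print(workers_needed(15, cpus_per_worker=4))  # 4
```

The last call shows how the estimate changes with larger worker pods: with four vCPUs per worker, the same 15 tasks would need only four pods.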
