By Libing and Yuanqi

Alibaba Cloud's ApsaraDB RDS for MySQL has officially launched its DuckDB-based analytical instances. Leveraging columnar storage and vectorized execution, these instances deliver a performance leap of up to 100x for complex analytical queries. This provides enterprises with real-time analytical capabilities on massive datasets, accelerating data-driven decision-making.

A wide table is a common performance optimization technique in online analytical processing (OLAP). The technique involves flattening data from multiple related tables into a single, large table to improve query performance. Let's use the TPC-H benchmark data model to illustrate the pros and cons of this technique.

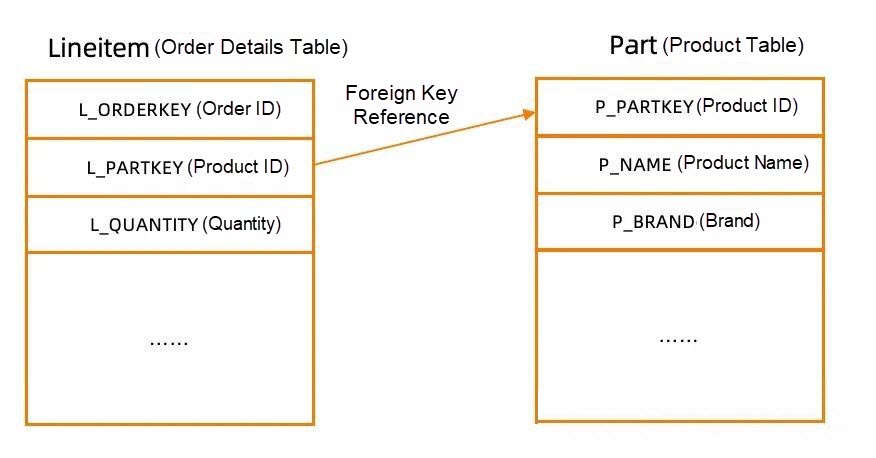

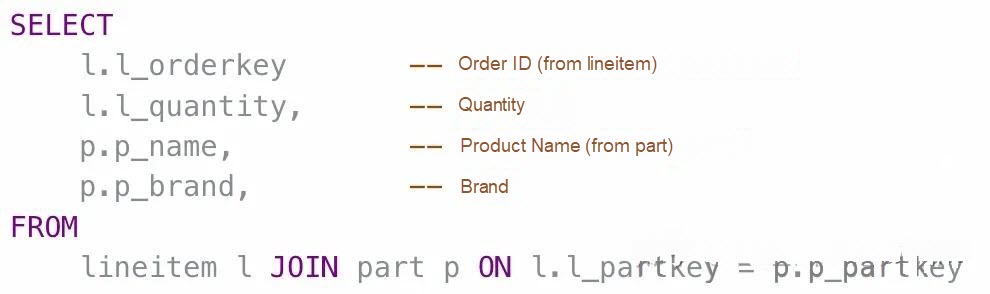

As shown in the preceding figure, the TPC-H data model includes an order details table and a product table. To query the product information for a specific order detail, you would need to execute the following SQL statement, which requires a JOIN:

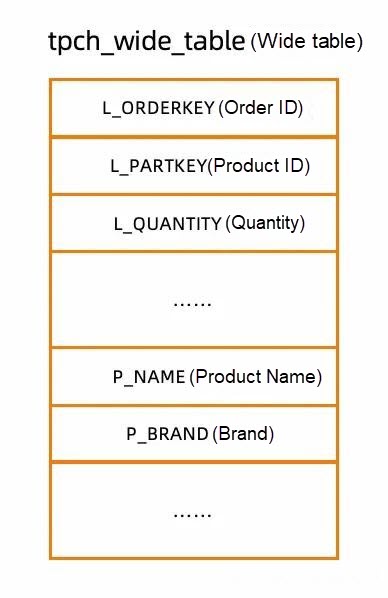

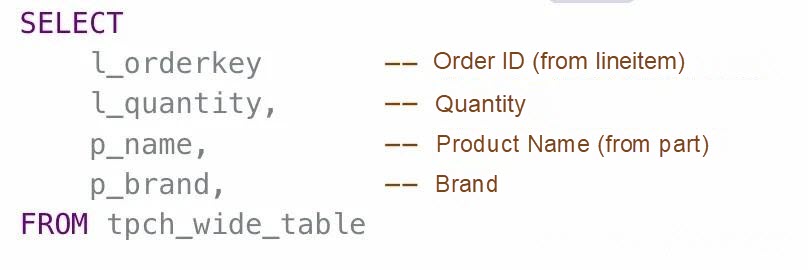

Creating a wide table involves denormalizing these related tables by merging them into a single, wider table, as shown in the following figure.

A query on this wide table can now avoid the JOIN operation, as shown in the following sample code:

Although wide tables can improve query efficiency, they also introduce several issues.

Dimensional information such as product and customer information is duplicated for each associated fact record (order item). For example, converting the TPC-H SF100 dataset to a wide table in MySQL DuckDB increases the occupied storage space from 26 GB to 164 GB. A similar conversion in ClickHouse sees storage usage grow from 40 GB to 191 GB.

A larger storage footprint means more storage I/O. Consequently, to achieve their expected optimization effect, wide tables require more memory for data caching.

Building and maintaining wide tables requires complex extract, transform, and load (ETL) jobs, which demand significant effort in both design and maintenance.

• The process must extract data from each source table and perform a massive JOIN operation (Fact table Dimension table 1 Dimension table 2 ...), resulting in significant computational overhead.

• Handling slowly changing dimensions (SCDs) is particularly challenging. A minor update in a dimension table, such as a change in a customer's address, can require cascading updates to all relevant historical fact records in the wide table. Alternatively, it may necessitate complex logic to manage historical snapshots, which makes ETL jobs cumbersome and slow.

• The approach lacks flexibility. If business requirements change and a new dimension or dimension attribute needs to be added, the schema of the wide table must be altered, and the entire historical dataset must be reprocessed to populate the new columns. This often requires a complete redesign of the ETL process.

The inefficiency and long duration of data updates during the ETL process can lead to high data replication latency. This is unacceptable for many real-time or near-real-time analytical applications.

The wide table is essentially a compromise made in pursuit of query performance, forcing users to trade storage redundancy and complex ETL processes for faster queries. If a database is powerful enough, however, it can significantly reduce user complexity and costs. MySQL DuckDB delivers excellent performance, and building an analysis system with it can greatly reduce the reliance on wide tables.

We used the TPC-H SF100 dataset to build wide tables on both a DuckDB-based analytical instance for ApsaraDB RDS for MySQL and a ClickHouse instance for a performance comparison.

• Elastic Compute Service (ECS) instance: ecs.g8i.8xlarge (32 CPU cores, 128 GB of memory)

• Test dataset: TPC-H SF100 (26 GB)

• MySQL DuckDB version: AliSQL (DuckDB-1.3.1)

• ClickHouse version: 25.8.1 Community Edition

| Query | DuckDB Multi-table join | DuckDB Wide table | CK Multi-table join | CK Wide table |

|---|---|---|---|---|

| Q5 (Six-table join) | 0.54 | 0.29 | 3.71 | 0.25 |

| Q8 (Eight-table join) | 0.73 | 0.24 | 5.58 | 0.51 |

| Q9 (Six-table join) | 1.88 | 1.75 | 8.89 | 2.42 |

| Q21 (Six-table join) | 1.83 | 1.68 | OOM | OOM |

Out of the 22 TPC-H queries, we selected four with a high number of JOINs for this comparison. The results, shown in the preceding figure, lead to the following conclusions:

• On MySQL DuckDB, the performance of the wide table is slightly better, but it offers no significant advantage over multi-table joins. The minor performance gain does not justify the added complexity and learning and maintenance costs associated with wide tables. Therefore, when using MySQL DuckDB, users can forgo reliance on wide tables.

• In ClickHouse, the performance of the wide table is far superior to that of multi-table joins. This explains why the ETL and wide table combination is the most common usage pattern for ClickHouse.

• MySQL DuckDB's multi-table JOIN performance is significantly better than ClickHouse's. We will analyze the reasons for MySQL DuckDB's superior JOIN performance in the next section.

Of the 22 queries in the TPC-H benchmark, 18 involve JOIN operations. For multi-table joins, the join order is crucial. For any given SQL query, a good database optimizer can automatically use statistical information to calculate the join order with the lowest execution cost and reorder the tables accordingly, which can dramatically shorten the execution duration.

We used these 18 queries to evaluate the join reordering algorithms of MySQL DuckDB and ClickHouse. The method is straightforward: we rewrote each query to force ClickHouse to use the same join order that MySQL DuckDB selected, and then compared the execution duration before and after the rewrite.

In this example, TPC-H Query 3 is used to illustrate our evaluation method. This query joins three tables named customer, orders, and lineitem, and then performs an aggregation:

SELECT l_orderkey, sum(l_extendedprice * (1 - l_discount)) AS revenue

, o_orderdate, o_shippriority

FROM customer, orders, lineitem

WHERE c_mktsegment = 'BUILDING'

AND c_custkey = o_custkey

AND l_orderkey = o_orderkey

AND o_orderdate < DATE '1995-03-15'

AND l_shipdate > DATE '1995-03-15'

GROUP BY l_orderkey, o_orderdate, o_shippriority

ORDER BY revenue DESC, o_orderdate

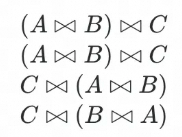

LIMIT 10;MySQL DuckDB executes this query with the join order:  . ClickHouse, however, uses the join order:

. ClickHouse, however, uses the join order:  . To force ClickHouse to use the same join order as MySQL DuckDB, we rewrote the query using a derived table:

. To force ClickHouse to use the same join order as MySQL DuckDB, we rewrote the query using a derived table:

SELECT l_orderkey, sum(l_extendedprice * (1 - l_discount)) AS revenue

, o_orderdate, o_shippriority

FROM lineitem

JOIN (

SELECT o_orderkey, o_orderdate, o_shippriority

FROM orders

JOIN customer ON o_custkey = c_custkey

WHERE c_mktsegment = 'BUILDING'

) tmp0

ON l_orderkey = o_orderkey

WHERE o_orderdate < DATE '1995-03-15'

AND l_shipdate > DATE '1995-03-15'

GROUP BY l_orderkey, o_orderdate, o_shippriority

ORDER BY revenue DESC, o_orderdate

LIMIT 10;In this rewritten SQL, the orders and customer tables are joined first to generate a derived table tmp0. Then, the lineitem table is joined with tmp0. This forces ClickHouse to execute the query with the join order  .

.

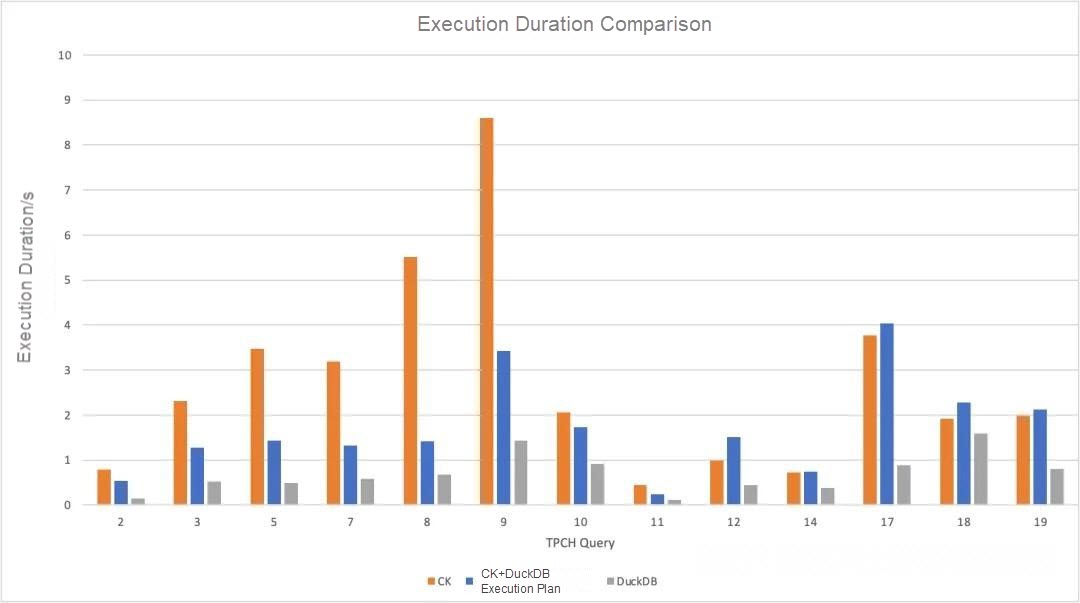

Of these 18 queries, the join order differed significantly for 13 of them. We rewrote these 13 queries to match MySQL DuckDB's join order and then ran them in ClickHouse. The following figure compares the execution duration before and after the rewrite.

Among these 13 queries, Queries 2, 3, 5, 7, 8, 9, 10, 11, and 18 involve joins on more than two tables, while the others involve only two-table joins. From this comparison, we can observe the following:

• For the queries involving multi-table joins, applying MySQL DuckDB's join order resulted in significant performance improvements in ClickHouse.

• For the two-table joins, the effect was negligible, and some queries even showed a slight performance degradation. This is because the JOIN operation itself was not the performance bottleneck in these specific queries.

• Even after applying MySQL DuckDB's join order, ClickHouse's performance still lagged significantly behind MySQL DuckDB's.

From this comparison, we can conclude that MySQL DuckDB's join reordering algorithm is substantially better than that of ClickHouse. We will now analyze this algorithm by examining its implementation in the source code.

Two primary factors influence join orders within the optimizer: the join reordering algorithm and cardinality estimation. MySQL DuckDB holds a significant advantage in both areas.

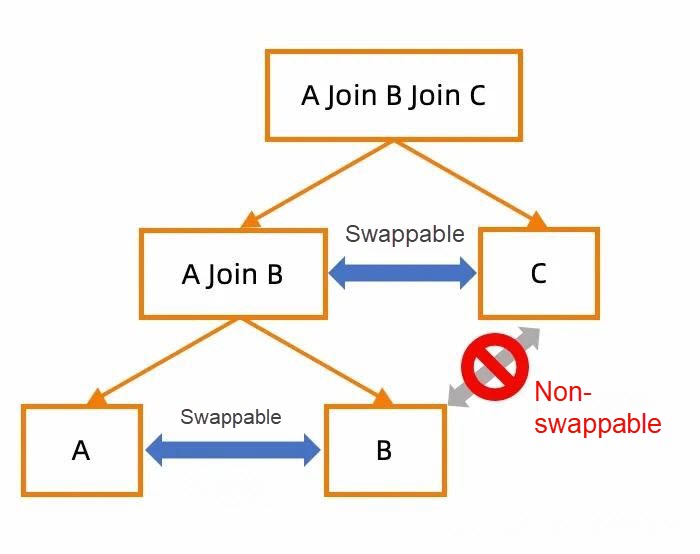

MySQL DuckDB uses the advanced DPhyp algorithm. This algorithm converts join relationships into a hypergraph and, by enumerating its connected components, can quickly explore all possible join orders. As a result, it is guaranteed to find the optimal join order. In contrast, ClickHouse represents join relationships as a left-deep tree, as shown in the following figure. Its algorithm only swaps tables at the same level based on cardinality information. Tables at different levels cannot be swapped. Therefore, ClickHouse's reordering algorithm is likely to miss the optimal join order.

For example, with a simple  operation, ClickHouse is limited to finding the optimal solution among only the following four potential join orders: If the optimal join order is

operation, ClickHouse is limited to finding the optimal solution among only the following four potential join orders: If the optimal join order is  , it will be missed.

, it will be missed.

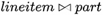

Both MySQL DuckDB and ClickHouse have access to row count statistics for base tables. However, ClickHouse has defects in how it estimates the row count of operator results. If a query contains operators such as GROUP BY or DISTINCT, ClickHouse treats the resulting row count as unknown. It often fails to correctly estimate row counts even for FILTER operators, again treating the result as unknown. Consequently, ClickHouse cannot optimize the join order and can only execute the query based on the join order specified by the user. Let's illustrate this with two simple queries:

-- Query 1

SELECT COUNT(*)

FROM part JOIN lineitem

ON part.p_partkey = lineitem.l_partkey;

-- Query 2

SELECT COUNT(*)

FROM part JOIN lineitem

ON part.p_partkey = lineitem.l_partkey

WHERE part.p_name LIKE '%green%';Query 1 executes in 1.208s in ClickHouse, while Query 2 takes 3.749s in ClickHouse. Adding a filter condition to part.p_name in Query 2 unexpectedly increases the execution duration. In Query 1, ClickHouse knows the row counts for both the part and lineitem tables. Because the part table has lower cardinality, ClickHouse uses it to build a hash table, and then performs  . In Query 2, however, adding the LIKE filter condition to part causes ClickHouse to lose track of the cardinality of the filtered dataset. As a result, it does not perform the query based on the join order of the original SQL statement but incorrectly builds a hash table on the lineitem table, leading to a longer execution duration. We consider this a bug in ClickHouse. Even if ClickHouse sets the cardinality of the filtered dataset to the number of rows of the original part table, it would have still chosen the correct join order.

. In Query 2, however, adding the LIKE filter condition to part causes ClickHouse to lose track of the cardinality of the filtered dataset. As a result, it does not perform the query based on the join order of the original SQL statement but incorrectly builds a hash table on the lineitem table, leading to a longer execution duration. We consider this a bug in ClickHouse. Even if ClickHouse sets the cardinality of the filtered dataset to the number of rows of the original part table, it would have still chosen the correct join order.

Furthermore, MySQL DuckDB's optimizer implements many additional join optimizations, such as Join-Dependent Filter and Join Filter Pushdown, which ClickHouse does not support.

As we saw in the previous test, even when forcing ClickHouse to use the same join order as MySQL DuckDB, its execution duration was still much longer. To check this, we used a simple SQL query to compare the performance of their respective JOIN operators.

The test uses the following query:

SELECT COUNT(*)

FROM part JOIN lineitem

ON part.p_partkey = lineitem.l_partkey

WHERE part.p_partkey < 200000000;By fixing the join order, this query forces the database to use lineitem to build a hash table and part to probe the hash table. The test was run on the TPC-H SF100 dataset, where lineitem has 600,037,902 rows and part has 20 million rows. This query therefore creates a very large hash table that is then probed multiple times.

Due to an implementation mechanism in its optimizer, ClickHouse uses lineitem to build a hash table and part to probe the hash table without requiring you to perform manual operations. In MySQL DuckDB, however, the optimizer automatically reorders the join to accelerate the query. Therefore, we need to disable join reordering optimization in MySQL DuckDB to ensure a like-for-like comparison.

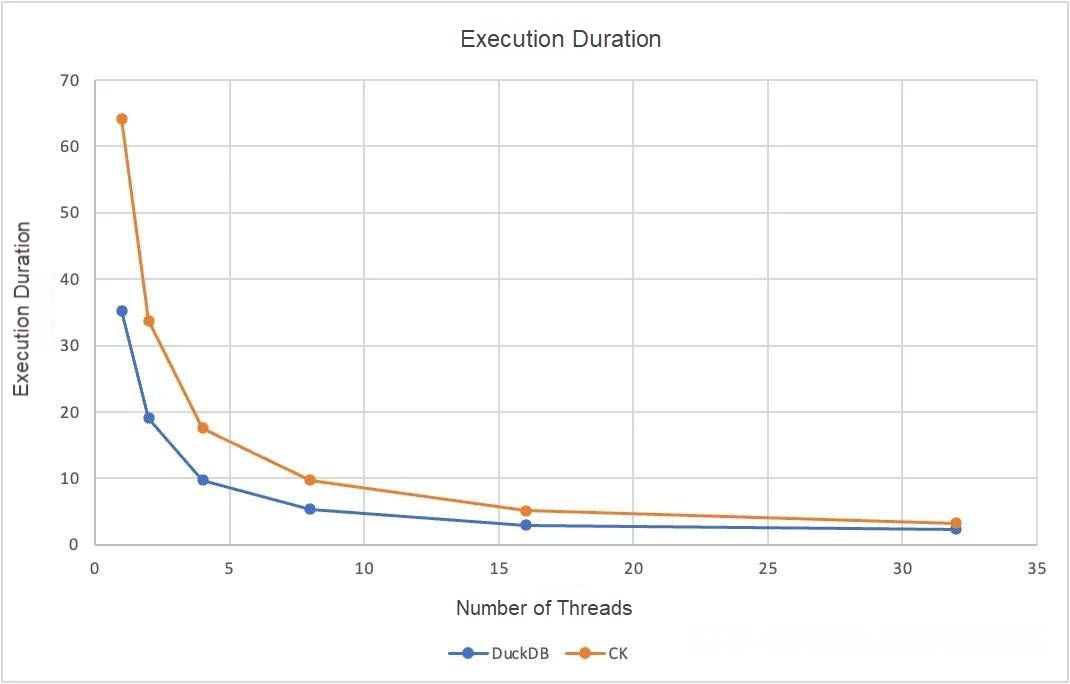

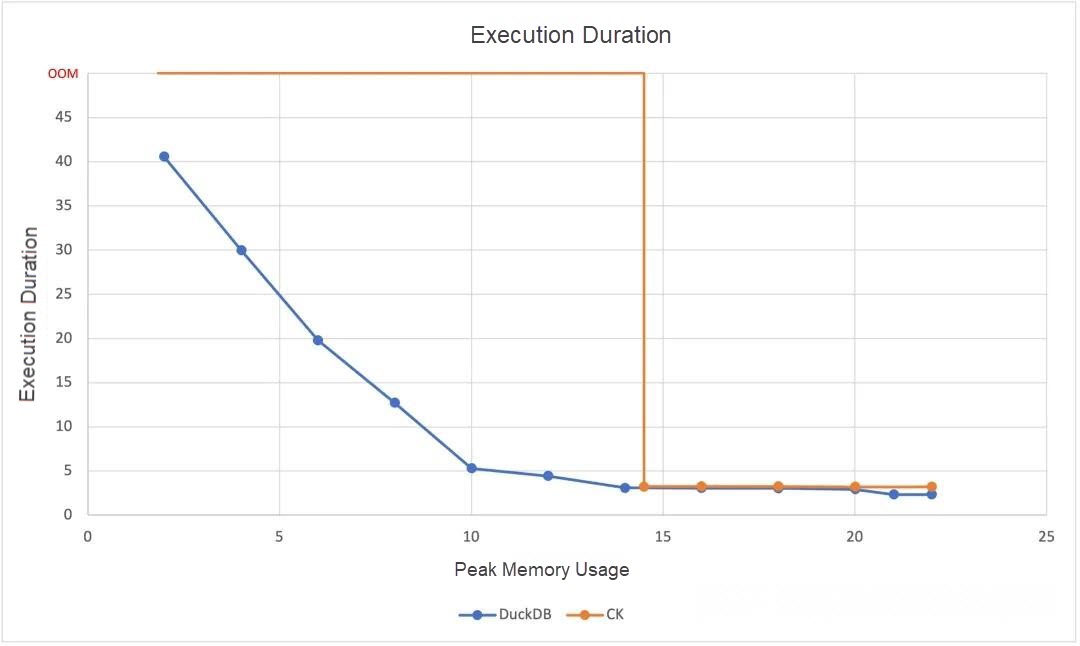

SET disabled_optimizers = 'join_order,build_side_probe_side';The following figure shows the test results.

As the results show, from single-threaded to 32-threaded parallel execution, MySQL DuckDB's execution duration is consistently lower than ClickHouse's. Across different thread counts, ClickHouse's execution duration is generally 1.5 to 1.8 times that of MySQL DuckDB. The test was run with sufficient memory, ensuring that hash tables were built entirely in memory. Therefore, the observed performance gap is primarily due to the differing efficiencies of their hash table creation and probing implementations.

During testing, we found that ClickHouse frequently encountered out-of-memory (OOM) errors. An analysis of the source code reveals why: In ClickHouse, a hash join requires loading all referenced columns of the build-side table (the table used to create the hash table) into memory. The complete hash table itself must also be stored in memory. This consumes a large amount of memory, often resulting in OOM errors. In contrast, MySQL DuckDB builds its hash tables in partitions. At any given time, only a single hash partition needs to reside in memory. Table data is cached in blocks, and the buffer pool evicts data based on a least recently used (LRU) policy. As a result, MySQL DuckDB can complete queries with significantly lower memory consumption. In a 32-thread concurrent scenario, we controlled the memory available to both MySQL DuckDB and ClickHouse and obtained the following memory usage data.

In MySQL DuckDB, we progressively lowered the duckdb_memory_limit parameter to reduce available memory. The query failed once the limit was reduced to 1.10 GB, at which point peak system memory usage was 1.99 GB.

In ClickHouse, we progressively lowered the max_memory_usage parameter to reduce available memory. The query failed when the limit was reduced to 14.17 GB, at which point peak system memory usage was 14.69 GB.

As the system memory limit was gradually relaxed, MySQL DuckDB's execution duration decreased significantly. This rate of improvement slowed after available memory exceeded 10 GB. When given a similar amount of memory, its execution duration was comparable to ClickHouse's. MySQL DuckDB reached its optimal performance with a peak memory usage of 21.01 GB, at which point its execution duration was shorter than that of ClickHouse.

The wide table is fundamentally a space-for-time compromise, employed when underlying database performance is insufficient. This approach, however, introduces the heavy burdens of storage redundancy, complex ETL, and data latency. MySQL DuckDB breaks this compromise. Its advanced optimizer (featuring the DPhyp algorithm and precise cardinality estimation), coupled with a highly efficient operator implementation (offering low memory consumption and high concurrency), delivers exceptional multi-table JOIN performance. For users seeking efficient, flexible, and low-cost analytics, choosing an engine like MySQL DuckDB with its powerful native JOIN capabilities means they can abandon the complex and cumbersome wide table model. Instead, they can run efficient queries directly against normalized star or snowflake schemas, significantly simplifying architecture, reducing costs, and improving agility. MySQL DuckDB provides a superior path for real-time, interactive, and complex analysis.

[Infographic] Highlights | Database New Features in October 2025

ApsaraDB - September 27, 2025

ApsaraDB - January 15, 2026

ApsaraDB - September 10, 2025

ApsaraDB - August 19, 2025

ApsaraDB - August 8, 2024

ApsaraDB - June 18, 2021

ApsaraDB RDS for MySQL

ApsaraDB RDS for MySQL

An on-demand database hosting service for MySQL with automated monitoring, backup and disaster recovery capabilities

Learn More ApsaraDB for MyBase

ApsaraDB for MyBase

ApsaraDB Dedicated Cluster provided by Alibaba Cloud is a dedicated service for managing databases on the cloud.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More AnalyticDB for MySQL

AnalyticDB for MySQL

AnalyticDB for MySQL is a real-time data warehousing service that can process petabytes of data with high concurrency and low latency.

Learn MoreMore Posts by ApsaraDB