By Biran

Algorithms, data, and computing power are the three major components of AI. Without computing power, AI cannot be implemented. The computing power of the NVIDIA GPU makes it the top choice for accelerating AI model training, but it is expensive. The solution composed of Alibaba Cloud Container Service, Elastic Container Instance (ECI), and Arena provides an easy, effective, and cost-effective way to take advantage of the computing power of NVIDIA GPU.

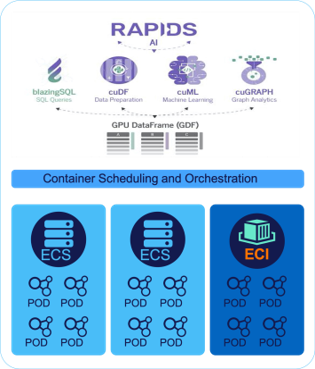

Deep learning is the first thing that comes to mind when NVIDIA GPUs are mentioned, whereas traditional machine learning and data analysis methods rarely use GPUs. In fact, NVIDIA has an excellent project called RAPIDS, a GPU-accelerated library for data science and machine learning. For more information about RAPIDS, visit the official RAPIDS website. This project aims to bring GPU acceleration to traditional algorithms and provides the same operations and user experience as Pandas and scikit-learn. RAPIDS has three modules: cuDF is equivalent to Pandas, cuML is equivalent to scikit-learn, and cuGraph is used to process graph data. Because RAPIDS is highly compatible with other tools, you can combine it with deep learning frameworks: use cuDF to accelerate data processing on a GPU, and then use a deep learning framework such as TensorFlow or PyTorch to perform tasks.

This article shows how to use TensorFlow and RAPIDS to perform search by image on Alibaba Cloud Container Service and use ECI to apply for GPU resources. GPU resources are prepared in seconds and released when no longer needed. This means that there is no need to prepare GPU instances in advance or to deal with the Kubernetes infrastructure. You only run Arena commands to build and run a RAPIDS environment that contains GPUs and to manage the GPU infrastructure.

To begin with, prepare a managed Kubernetes cluster. In a managed Kubernetes cluster, the control nodes run on Alibaba Cloud resources, and Alibaba Cloud is responsible for their operations and maintenance (O&M) and costs. In addition, a virtual Kubelet node is created in this cluster.

If you have already created a Kubernetes cluster in Container Service, choose one that is a managed Kubernetes cluster.

Because the system component containers need to run, the cluster must contain at least one worker node.

1) For more information on installing virtual nodes, see Virtual Nodes Documentation.

2) Configure virtual-kubelet-autoscaler. If the GPU resources in the cluster are insufficient, virtual-kubelet-autoscaler brings up ECI container groups that use GPUs.

1) Install Arena using the commands below.

$ wget http://kubeflow.oss-cn-beijing.aliyuncs.com/arena-installer-0.3.0-b556a36-linux-amd64.tar.gz

$ tar -xvf arena*.tar.gz

$ cd arena-installer

$ ./install.sh

2) Now, run an Arena command to view the cluster's GPU resources. As the following output shows, this user's cluster has one real node, which does not contain GPU resources. In addition, a virtual node is present, which does not physically exist and therefore is not billed. This node provides unlimited GPU resources, which can be scaled.

$ arena top node

NAME IPADDRESS ROLE STATUS GPU(Total) GPU(Allocated)

cn-shanghai.192.168.1.248 192.168.1.248 <none> ready 0 0

virtual-kubelet 172.20.2.18 agent ready 1000 0

-----------------------------------------------------------------------------------------

Allocated/Total GPUs In Cluster:

0/1000 (0%)

3) Before submitting the RAPIDS task, complete the necessary preparation to accelerate the creation process and simplify access operations.

3.1 Set the service access method to LoadBalancer. Note that this method is used only for the sake of simplicity. In a production environment, we recommend that you do not allow access from external IP addresses.

$ find /charts/ -name "*.yaml" | xargs sed -i "s/NodePort/LoadBalancer/g"

3.2 Next, speed up the startup by using the following steps.

3.2.1 GPU container images are normally very large. For example, the RAPIDS container image used in this experiment is 14.7 GB. Generally, the startup time is about 10 minutes. However, the image caching function can reduce this time to about 20 seconds.

docker images | grep rapids

registry.cn-shanghai.aliyuncs.com/tensorflow-samples/rapids-samples 0.8.2-cuda10.0-runtime-ubuntu16.04 4597a0334d41 12 days ago 14.7GB

3.2.2 In serverless Kubernetes, just create an ImageCache CRD to directly use the image caching function.

$ cat > imagecache.yaml << EOF

apiVersion: eci.alibabacloud.com/v1

kind: ImageCache

metadata:

  name: imagecache-rapids

spec:

  images:

  - registry.cn-shanghai.aliyuncs.com/tensorflow-samples/rapids-samples:0.8.2-cuda10.0-runtime-ubuntu16.04

  imageCacheSize: 50

EOF

$ kubectl create -f imagecache.yaml

3.2.3 Wait a moment after submission, and then check the status of the ImageCache. The CACHEID can be used as the snapshot-id when you submit the task in the next step.

$ kubectl get imagecache

NAME AGE CACHEID PHASE PROGRESS

imagecache-rapids 3d9h imc-uf6dxdji7txxxxx Ready 100%

4) Submit the RAPIDS development environment as shown below.

$ arena serve custom \

--name=rapids \

--selector=type=virtual-kubelet \

--toleration=all \

--annotation=k8s.aliyun.com/eci-image-snapshot-id=imc-uf6dxdji7txxxxx \

--annotation=k8s.aliyun.com/eci-instance-type=ecs.gn5i-c8g1.2xlarge \

--gpus=1 \

-e=PASSWORD=mypassw0rd \

--restful-port=80 \

--image=registry.cn-shanghai.aliyuncs.com/tensorflow-samples/rapids-samples:0.8.2-cuda10.0-runtime-ubuntu16.04

configmap/rapids-201912011815-custom-serving created

configmap/rapids-201912011815-custom-serving labeled

service/rapids-201912011815 created

deployment.extensions/rapids-201912011815-custom-serving created

Let's take a quick look at the parameters used in the preceding command.

- --selector=type=virtual-kubelet: Indicates that the pod is started on a virtual node.
- --annotation=k8s.aliyun.com/eci-instance-type=ecs.gn5i-c8g1.2xlarge: Specifies the ECI container group type. ecs.gn5i-c8g1.2xlarge refers to the Alibaba Cloud P4 model. For more information about the detailed specifications, see the relevant document.
- --annotation=k8s.aliyun.com/eci-image-snapshot-id=imc-uf6dxdji7txxxxx: Specifies the CACHEID obtained in Step 3.2.3.
- -e=PASSWORD=mypassw0rd: Sets the PASSWORD environment variable, which is the password used to access the RAPIDS notebook.
- --gpus=1: Indicates the number of GPUs applied for.

5) Now, view the access address, which is a combination of ENDPOINT_ADDRESS and PORTS. In this example, its value is 106.15.173.2:80. The output also shows that the task switches to the Running state within 32 seconds.

$ arena serve list

NAME TYPE VERSION DESIRED AVAILABLE ENDPOINT_ADDRESS PORTS

rapids CUSTOM 201911181827 1 1 105.13.58.3 restful:80

$ arena serve get rapids

arena serve get rapids

NAME: rapids

NAMESPACE: default

VERSION: 201912011815

DESIRED: 1

AVAILABLE: 1

SERVING TYPE: CUSTOM

ENDPOINT ADDRESS: 106.15.173.2

ENDPOINT PORTS: restful:80

AGE: 32s

INSTANCE STATUS AGE READY RESTARTS NODE

rapids-201912011815-custom-serving-6b54d5cd-swcwz Running 32s 1/1 0 N/A
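The access address can also be assembled programmatically from the text that `arena serve get` prints. The following is a minimal sketch, assuming the output keeps the `KEY: value` layout shown above; the helper name `endpoint_url` is hypothetical and not part of Arena.

```python
def endpoint_url(serve_get_output: str) -> str:
    """Build http://ADDRESS:PORT from the text printed by `arena serve get`."""
    fields = {}
    for line in serve_get_output.splitlines():
        # Split on the first colon only, so "restful:80" stays intact as a value.
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    address = fields["ENDPOINT ADDRESS"]
    # ENDPOINT PORTS looks like "restful:80"; keep only the port number.
    port = fields["ENDPOINT PORTS"].rsplit(":", 1)[-1]
    return f"http://{address}:{port}"


# Sample text copied from the output above (hypothetical usage).
sample = """NAME: rapids
ENDPOINT ADDRESS: 106.15.173.2
ENDPOINT PORTS: restful:80
AGE: 32s"""
print(endpoint_url(sample))  # http://106.15.173.2:80
```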

6) Check the GPU usage of the cluster again. Note that the GPU resources are already being used.

$ arena top node

NAME IPADDRESS ROLE STATUS GPU(Total) GPU(Allocated)

cn-shanghai.192.168.1.248 192.168.1.248 <none> ready 0 0

virtual-kubelet 172.20.2.20 agent ready 1000 1

-----------------------------------------------------------------------------------------

Allocated/Total GPUs In Cluster:

1/1000 (0%)

7) To query the pods that use this GPU, append "-d" to the original command to view specific pod names.

$ arena top node -d

NAME: cn-shanghai.192.168.1.248

IPADDRESS: 192.168.1.248

ROLE: <none>

Total GPUs In Node cn-shanghai.192.168.1.248: 0

Allocated GPUs In Node cn-shanghai.192.168.1.248: 0 (0%)

-----------------------------------------------------------------------------------------

NAME: virtual-kubelet

IPADDRESS: 172.20.2.20

ROLE: agent

NAMESPACE NAME GPU REQUESTS

default rapids-201912011815-custom-serving-6b54d5cd-swcwz 1

Total GPUs In Node virtual-kubelet: 1000

Allocated GPUs In Node virtual-kubelet: 1 (0%)

-----------------------------------------------------------------------------------------

Allocated/Total GPUs In Cluster: 1/1000 (0%)
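The summary shows 0% even though one GPU is allocated. A plausible explanation, which is an assumption rather than documented Arena behavior, is that the percentage is displayed as a whole number. The short sketch below recomputes the ratio from the summary text; `gpu_utilization` is a hypothetical helper.

```python
def gpu_utilization(summary: str) -> float:
    """Return allocated/total as a percentage from text such as '1/1000 (0%)'."""
    ratio = summary.split()[0]  # e.g. "1/1000"
    allocated, total = (int(part) for part in ratio.split("/"))
    return 100.0 * allocated / total


pct = gpu_utilization("1/1000 (0%)")
print(f"{pct:.1f}%")  # 0.1%
```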

8) Use the access address and port from Step 5 to open the notebook in a local browser. Enter http://{ENDPOINT ADDRESS}:{ENDPOINT PORT}. In this example, the address is http://105.13.58.3:80.

Note: We recommend using the Chrome browser.

9) Enter the logon password set in the preceding command and then click on the Log in button. In this example, the password is mypassw0rd.

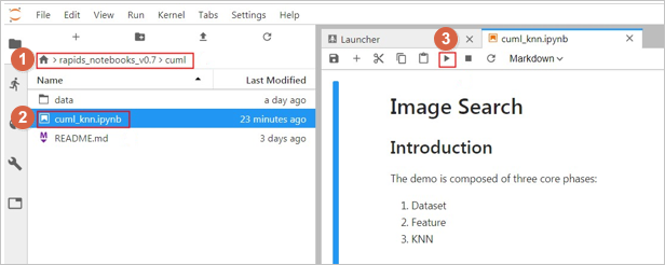

1) Go to the cuml directory where the demo is located.

2) Double-click the cuml_knn.ipynb file.

3) Click the start icon.

Note: Click once to run one cell. Click until the demo is fully executed. For more detailed instructions, refer to the following section on Demo execution process.

The image search demo process contains the following three steps. The demo results compare the performance of GPU-accelerated RAPIDS cuML KNN with that of scikit-learn KNN, which uses only CPUs.

1) Process the Dataset

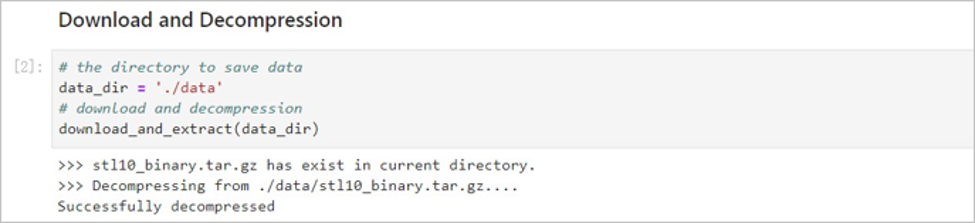

1.1) Download and Decompress the Dataset

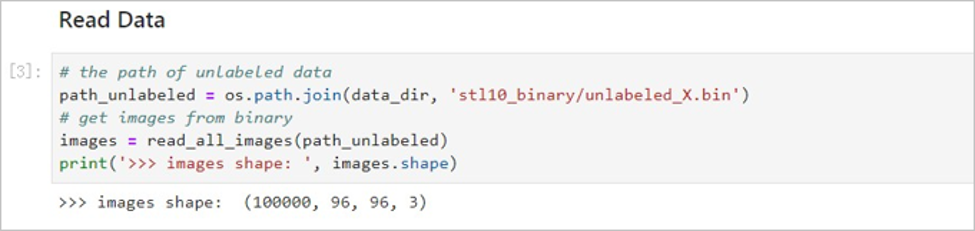

In this demo, the STL-10 dataset contains 100,000 unlabeled images with the dimensions of 96 x 96 x 3. You can also use other datasets to extract image features, but make sure that all images in these datasets are of the same size.

Use the download_and_extract(data_dir) method to download and decompress the STL-10 dataset. In the RAPIDS image, the dataset is already downloaded to the ./data directory, so download_and_extract() only needs to decompress it.

1.2) Read the Images.

Data decompressed from the dataset is binary. Use the read_all_images(path_to_data) method to load and convert the data to the NHWC format (batch, height, width, and channels). This format allows TensorFlow to extract image features.

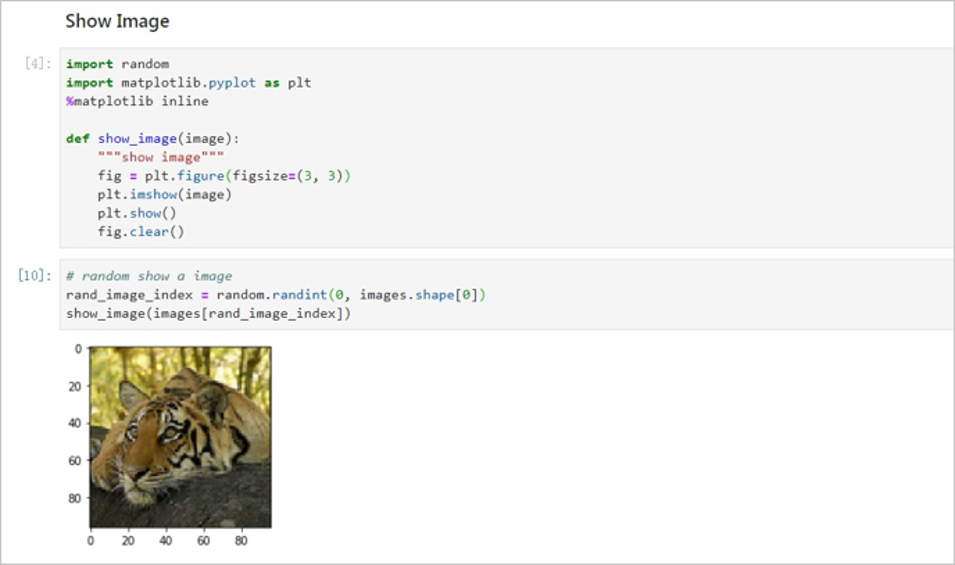
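The conversion to NHWC can be sketched as follows. This is not the notebook's read_all_images() implementation; it is a minimal illustration that assumes the commonly documented STL-10 binary layout (uint8 values stored channel-first and column-major), and it decodes an in-memory byte string instead of a file. The name `decode_stl10` is hypothetical.

```python
import numpy as np


def decode_stl10(raw: bytes) -> np.ndarray:
    """Convert STL-10 style raw bytes to an NHWC uint8 array."""
    flat = np.frombuffer(raw, dtype=np.uint8)
    # Assumed on-disk layout: (batch, channels, width, height).
    images = flat.reshape(-1, 3, 96, 96)
    # Reorder the axes to (batch, height, width, channels).
    return images.transpose(0, 3, 2, 1)


# Two synthetic "images" stand in for the real binary file.
rng = np.random.default_rng(0)
fake = rng.integers(0, 256, size=2 * 3 * 96 * 96, dtype=np.uint8).tobytes()
batch = decode_stl10(fake)
print(batch.shape)  # (2, 96, 96, 3)
```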

1.3) Display an Image.

Use the show_image(image) method to display a random image from the dataset.

1.4) Split the Dataset.

Split the dataset into two parts at a 9:1 ratio. One part is used to create image index libraries, and the other is used to search for images.
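A 9:1 split can be sketched with plain NumPy slicing. The function name `split_dataset` is hypothetical, and the sketch assumes a simple sequential split; the notebook may shuffle or split differently.

```python
import numpy as np


def split_dataset(images: np.ndarray):
    """Split images 9:1 into an index set and a query set."""
    cut = len(images) * 9 // 10  # integer arithmetic avoids rounding surprises
    return images[:cut], images[cut:]


# A small synthetic batch in NHWC format stands in for the STL-10 images.
images = np.zeros((100, 96, 96, 3), dtype=np.uint8)
index_images, query_images = split_dataset(images)
print(index_images.shape[0], query_images.shape[0])  # 90 10
```

With the 100,000 STL-10 images, the same ratio yields 90,000 images for the index library and 10,000 images for the search queries.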

2) Extract Image Features

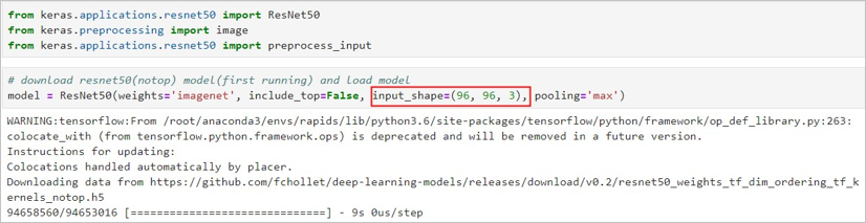

Use TensorFlow and Keras to extract image features. Use the pre-trained model ResNet50 (notop), which is based on the ImageNet dataset.

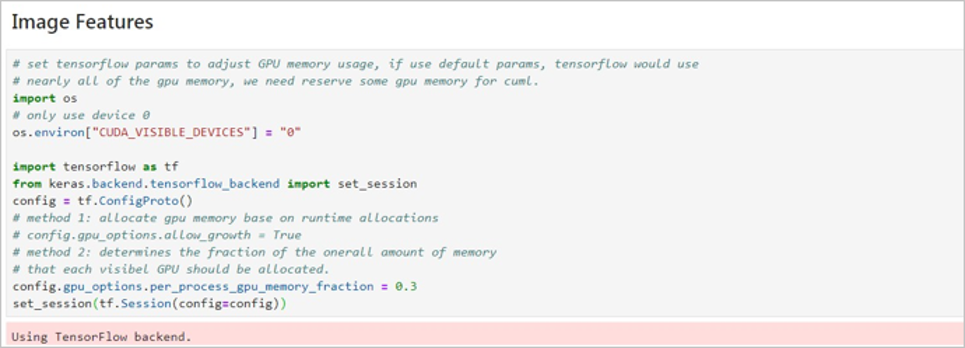

2.1) Set TensorFlow Parameters.

By default, TensorFlow uses all the GPU memory. Reserve some GPU memory for cuML by using one of the following methods:

Method 1: Allocate GPU memory on demand.

config.gpu_options.allow_growth = True

Method 2: Limit the fraction of GPU memory that TensorFlow can use.

config.gpu_options.per_process_gpu_memory_fraction = 0.3

This demo uses method 2 and sets the ratio to 0.3. This indicates that TensorFlow may use up to 30% of the GPU memory. Adjust the ratio as needed.

2.2) Download the Pre-trained Model ResNet50 (notop).

TensorFlow connects to the public network to download the model. The size of the model is about 91 MB. The model is downloaded to the /root/.keras/models/ directory.

| Parameter | Description |
|---|---|
| weights | Valid values: None (initializes the weights to random values) and imagenet (initializes the weights from the model that is pre-trained on ImageNet). In this demo, this parameter is set to imagenet. |
| include_top | Valid values: True (includes the last fully connected layer of the ResNet50 network) and False (excludes the last fully connected layer). This demo uses the neural network model ResNet50 to extract image features instead of classifying images. Therefore, set this parameter to False. |
| input_shape | This optional parameter specifies the input shape of the images. It takes effect only when the include_top parameter is set to False. The images must have three input channels, and their width and height must be at least 32 pixels. In this demo, this parameter is set to (96, 96, 3). |
| pooling | Specifies the pooling mode that is used when the include_top parameter is set to False. Valid values: None (outputs a 4D tensor), avg (applies average pooling and outputs a 2D tensor), and max (applies maximum pooling and outputs a 2D tensor). In this demo, this parameter is set to max. |

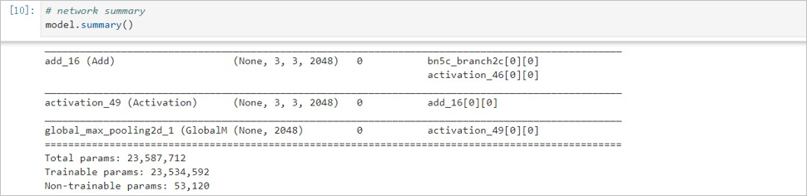

Now, run the model.summary() method to view the network structure of your model.

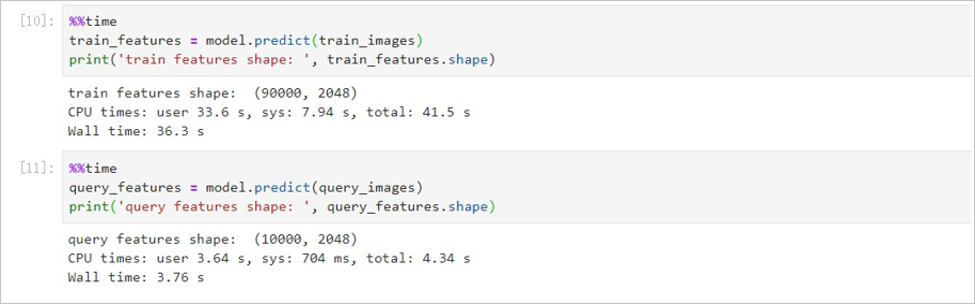

2.3 Extract Image Features

Call the model.predict() method on the split datasets to extract image features.

3) Search for Similar Images

3.1 Use cuML KNN to Search for Similar Images.

Set K to 3 (k=3) to search for the three most similar images. Adjust the value of K as needed. Use the knn_cuml.fit() method while creating indices. Use the knn_cuml.kneighbors() method while searching for neighbors.

cuML KNN takes 791 milliseconds to retrieve the vectors.

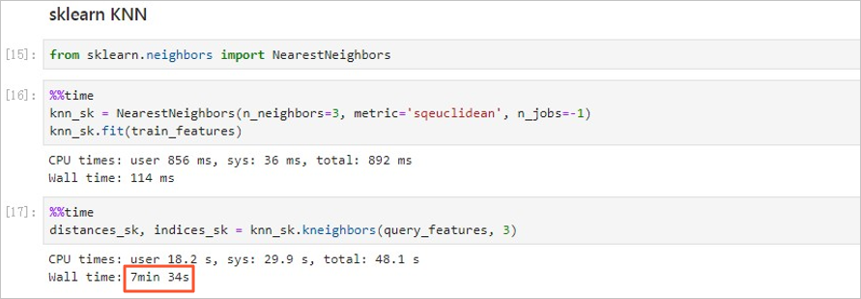

Use scikit-learn KNN to search for similar images. Set K to 3 (n_neighbors=3), and specify n_jobs=-1 to use all the CPUs to search for the nearest neighbors.

Note: The ecs.gn5i-c8g1.2xlarge instance type is configured with 8 vCPUs.

scikit-learn KNN takes 7 minutes and 34 seconds to retrieve the vectors.

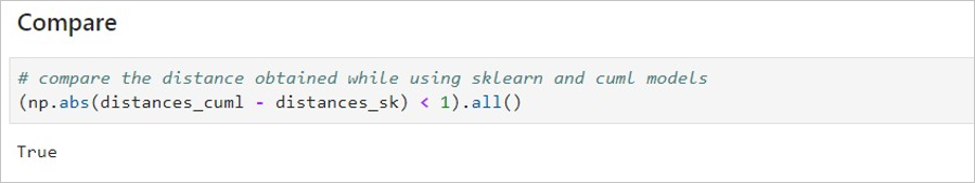
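Both libraries follow the same pattern: build an index from the feature vectors, then query it for the K nearest neighbors. To make clear what such a query returns, here is a NumPy-only brute-force sketch. It assumes Euclidean distance and small synthetic feature vectors; `brute_force_knn` is a hypothetical helper, not the cuML or scikit-learn API.

```python
import numpy as np


def brute_force_knn(index_vectors, query_vectors, k=3):
    """Return (distances, indices) of the k nearest index vectors per query."""
    # Pairwise differences: shape (n_queries, n_index, n_features).
    diff = query_vectors[:, None, :] - index_vectors[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2))
    # Sort each row by distance and keep the k closest entries.
    indices = np.argsort(dist, axis=1)[:, :k]
    distances = np.take_along_axis(dist, indices, axis=1)
    return distances, indices


rng = np.random.default_rng(0)
index_vectors = rng.random((90, 8), dtype=np.float32)  # stand-in for indexed features
query_vectors = rng.random((10, 8), dtype=np.float32)  # stand-in for query features
distances, indices = brute_force_knn(index_vectors, query_vectors, k=3)
print(distances.shape, indices.shape)  # (10, 3) (10, 3)
```

Each row holds the K matches for one query image, ordered from most to least similar. This is why the demo's outputs have the shape (10000, 3) for 10,000 query images and K=3.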

Compare the search results of cuML KNN and scikit-learn KNN.

First, compare the vector retrieval speeds. GPU-accelerated cuML KNN takes only 791 milliseconds, whereas scikit-learn KNN that uses CPUs takes 7 minutes and 34 seconds (454 seconds). cuML KNN is therefore nearly 600 times faster (454 s / 0.791 s ≈ 574).

Check whether the search results of cuML KNN and scikit-learn KNN are the same. Compare the following output arrays:

distance.shape=(10000, 3)

indices.shape=(10000, 3)

The dataset used in this demo contains identical images. Identical images may have different indices. Therefore, you must use distances instead of indices to compare the results. A calculation deviation may exist. If the deviation of the three smallest distance values for the 10,000 images is smaller than 1 in both methods, the results are considered to be identical.
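The comparison rule can be sketched as follows. The sketch interprets "deviation" as the largest absolute difference between corresponding distance values, which is an assumption about the notebook's check; `results_match` is a hypothetical helper, and the arrays are made-up values for a single query.

```python
import numpy as np


def results_match(dist_a, dist_b, tolerance=1.0):
    """True if every pair of corresponding distances differs by less than tolerance."""
    return bool(np.abs(dist_a - dist_b).max() < tolerance)


# Index entries 0 and 1 are identical images, so either order is a valid answer.
dist_gpu = np.array([[0.0, 0.0, 4.2]])
idx_gpu = np.array([[0, 1, 7]])
dist_cpu = np.array([[0.0, 0.0, 4.2001]])
idx_cpu = np.array([[1, 0, 7]])

print(np.array_equal(idx_gpu, idx_cpu))   # False: the indices differ
print(results_match(dist_gpu, dist_cpu))  # True: the distances agree
```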

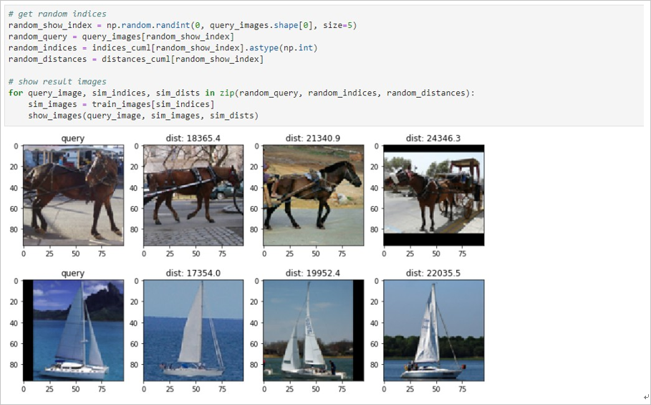

This demo selects five random images from the 10,000 images and uses them to search for similar images. The search results are displayed in five rows and four columns.

The first column displays the original five images. The second, third, and fourth columns display similar images. From the second column to the fourth column, the similarity between the original and similar images decreases. The title of each similar image is the calculated distance. A larger value indicates a lower similarity.

Execute the following commands to clean up.

$ arena serve delete rapids

service "rapids-201912011815" deleted

deployment.extensions "rapids-201912011815-custom-serving" deleted

configmap "rapids-201912011815-custom-serving" deleted

INFO[0000] The Serving job rapids with version 201912011815 has been deleted successfully

This article describes how to use the RAPIDS accelerated data science solution quickly, easily, and at a low cost through Arena and Alibaba Cloud Serverless Kubernetes.
