Whole database real-time synchronization combines full migration and incremental capture to synchronize source databases (such as MySQL or Oracle) to destination systems with low latency. This task supports full historical data synchronization, automatically initializing the destination schema and data. It then seamlessly switches to real-time incremental mode, using technologies like CDC to capture and synchronize data changes. This feature is suitable for scenarios such as real-time data warehousing and data lake construction. This topic describes how to configure a task, using the synchronization of a MySQL database to MaxCompute in real time as an example.

Prerequisites

Prepare data sources

Create the source and destination data sources. For configuration details, see Data Source Management.

Ensure that the data source supports whole database real-time synchronization. See Supported data source types and synchronization operations.

Enable logs for data sources that require them, such as Hologres and Oracle. The method varies by data source. For details, see the configuration topic for your data source in Data source list.

Resource group: Purchase and configure a Serverless resource group.

Network connectivity: Ensure that network connectivity is established between the resource group and the data sources. For details, see Network connectivity configuration.

Entry point

Log on to the DataWorks console. In the top navigation bar, select the desired region. In the left-side navigation pane, choose . On the page that appears, select the desired workspace from the drop-down list and click Go to Data Integration.

Limitations

DataWorks supports two modes: whole database real-time and whole database full & incremental (near real-time). Both modes synchronize historical data and automatically switch to real-time incremental mode. However, they differ in latency and destination table requirements:

Latency: Whole database real-time offers latency ranging from seconds to minutes. Whole database full & incremental (near real-time) offers T+1 latency.

Destination table (MaxCompute): Whole database real-time supports only the Delta Table type. Whole database full & incremental (near real-time) supports all table types.

Configure the task

Step 1: Create synchronization task

Create a synchronization task using one of these methods:

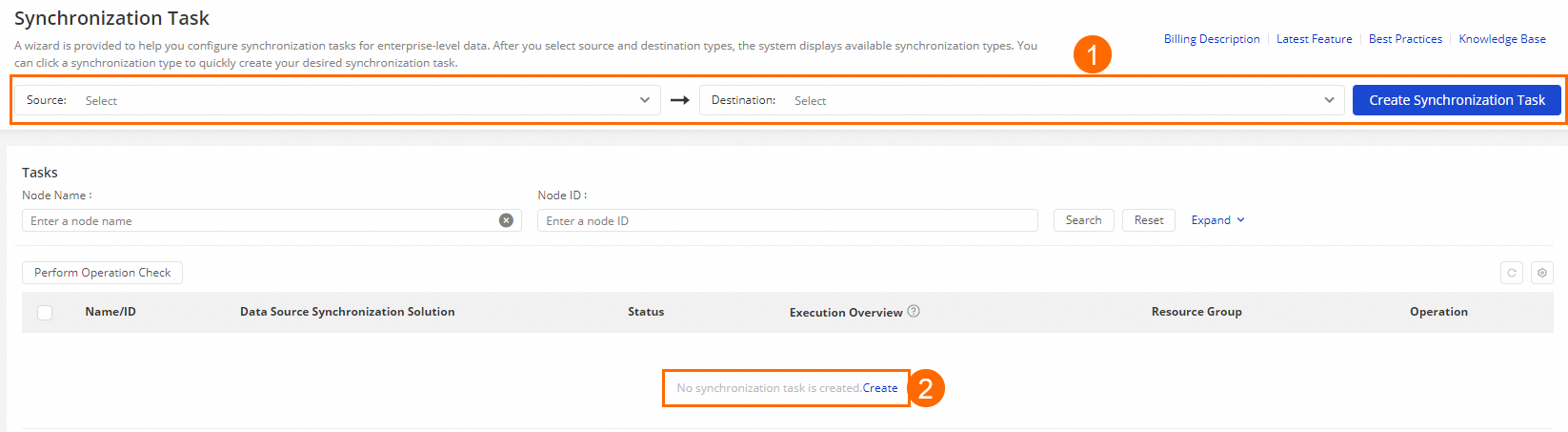

Method 1: On the Synchronization Task page, select Source and Destination, and click Create Synchronization Task. In this example, select MySQL as the source and MaxCompute as the destination.

Method 2: On the Synchronization Task page, if the task list is empty, click Create.

Step 2: Configure basic information

Configure basic information such as the task name, task description, and owner.

Select the synchronization type: Data Integration displays the supported Task Type based on the source and destination database types. In this example, select Real-time migration of entire database.

Synchronization steps:

Structural migration: Automatically creates destination database objects (tables, fields, data types) matching the source, without transferring data.

Full initialization (Optional): Replicates historical data from specified source objects (such as tables) to the destination. This is typically used for initial data migration or initialization.

Incremental Synchronization (Optional): Continuously captures changed data (inserts, updates, deletes) from the source after full synchronization is complete and synchronizes it to the destination.
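The relationship between full initialization and incremental synchronization can be pictured with a small in-memory sketch. This is a toy model, not DataWorks code: the `ChangeEvent` type and the `full_init` and `apply_change` helpers are hypothetical names introduced for illustration, and rows are assumed to be keyed by primary key.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

Row = Dict[str, object]


@dataclass
class ChangeEvent:
    """Hypothetical captured change: op is 'insert', 'update', or 'delete'."""
    op: str
    key: int
    row: Optional[Row] = None


def full_init(source_rows: Dict[int, Row]) -> Dict[int, Row]:
    """Full initialization: copy every historical row to the destination."""
    return {key: dict(row) for key, row in source_rows.items()}


def apply_change(dest: Dict[int, Row], event: ChangeEvent) -> None:
    """Incremental synchronization: replay one change by primary key."""
    if event.op in ("insert", "update"):
        dest[event.key] = dict(event.row or {})
    elif event.op == "delete":
        dest.pop(event.key, None)
    else:
        raise ValueError(f"unknown operation: {event.op}")


# Historical data that exists before the task starts.
source = {1: {"id": 1, "name": "a"}, 2: {"id": 2, "name": "b"}}
dest = full_init(source)

# Changes captured after full initialization completes.
events: List[ChangeEvent] = [
    ChangeEvent("update", 1, {"id": 1, "name": "a2"}),
    ChangeEvent("delete", 2),
    ChangeEvent("insert", 3, {"id": 3, "name": "c"}),
]
for event in events:
    apply_change(dest, event)
```

Keying the destination by primary key is what lets updates and deletes be replayed against rows that were loaded during the full phase.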

Step 3: Configure network and resources

In the Network and Resource Configuration section, select the Resource Group to be used by the synchronization task. You can allocate the Task Resource Usage (CUs) for the task.

Select the added MySQL data source for Source, select the added MaxCompute data source for Destination, and then click Test Connectivity.

Ensure that the connectivity test succeeds for both data sources, and then click Next.

Step 4: Select tables to synchronize

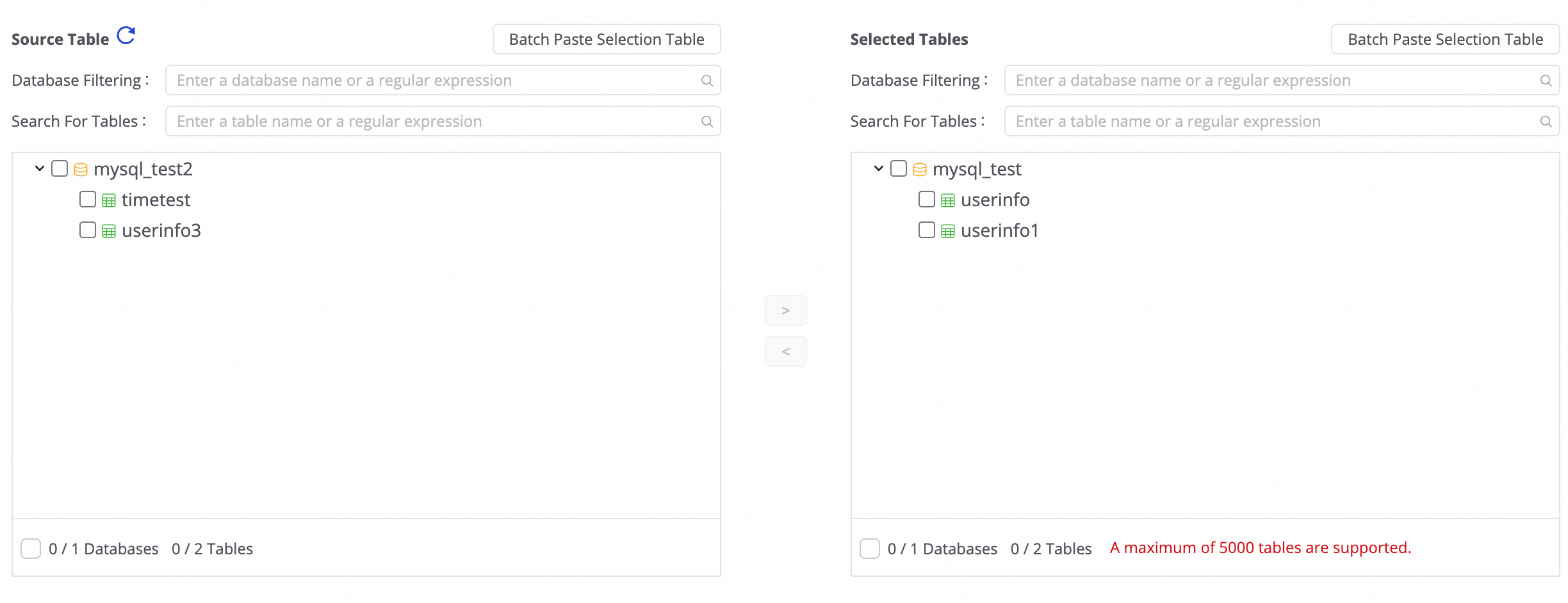

In the Source Table area, select the tables to sync from the source data source. Click the icon to move the tables to the Selected Tables list.

If you have a large number of tables, use Database Filtering or Search For Tables to select tables via regular expressions.
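Before entering a regular expression in the console, it can help to check locally which table names it would select. The following is a minimal sketch using Python's `re` module. The `select_tables` helper and the sample table names are hypothetical, and whether the console matches the whole name or only part of it is an assumption here (the sketch uses whole-name matching).

```python
import re
from typing import Iterable, List


def select_tables(tables: Iterable[str], pattern: str) -> List[str]:
    """Return the table names that match the entire pattern."""
    compiled = re.compile(pattern)
    return [name for name in tables if compiled.fullmatch(name)]


# Hypothetical table names, for illustration only.
tables = ["orders_2023", "orders_2024", "orders_tmp", "users"]

# Select yearly sharded tables such as orders_2023 and orders_2024.
selected = select_tables(tables, r"orders_\d{4}")
```

Testing the pattern against a list of real table names first reduces the chance of silently including temporary or backup tables.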

Step 5: Map destination tables

Define mapping rules between source tables and destination tables, and configure options such as primary keys, dynamic partitions, and DDL/DML handling to determine how data is written.

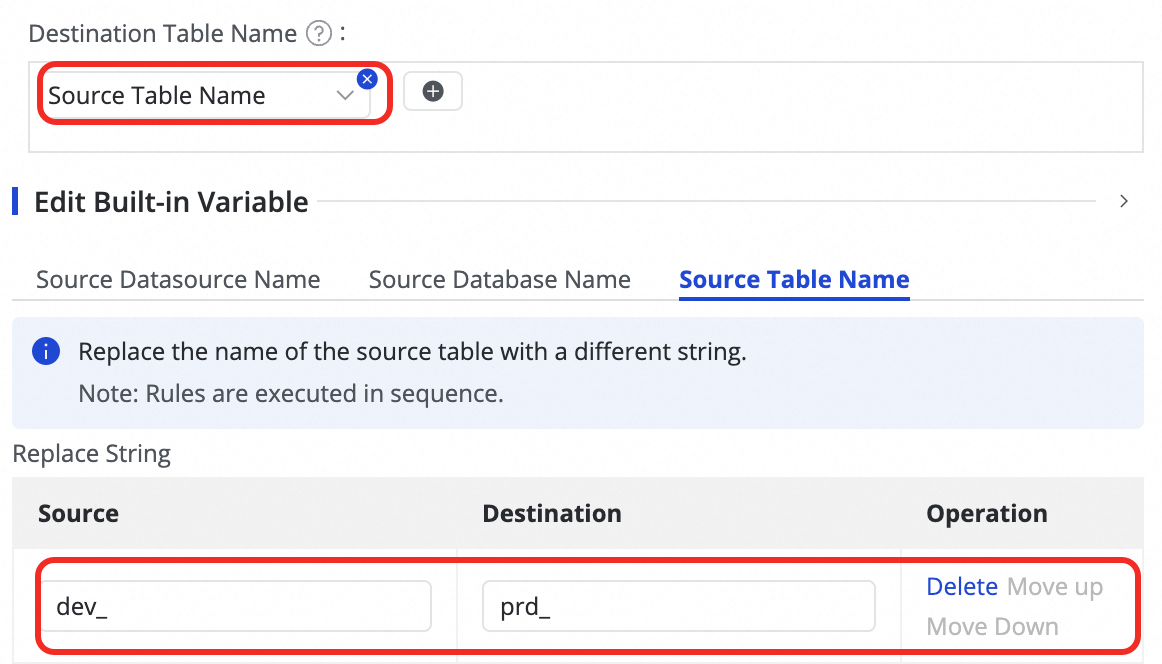

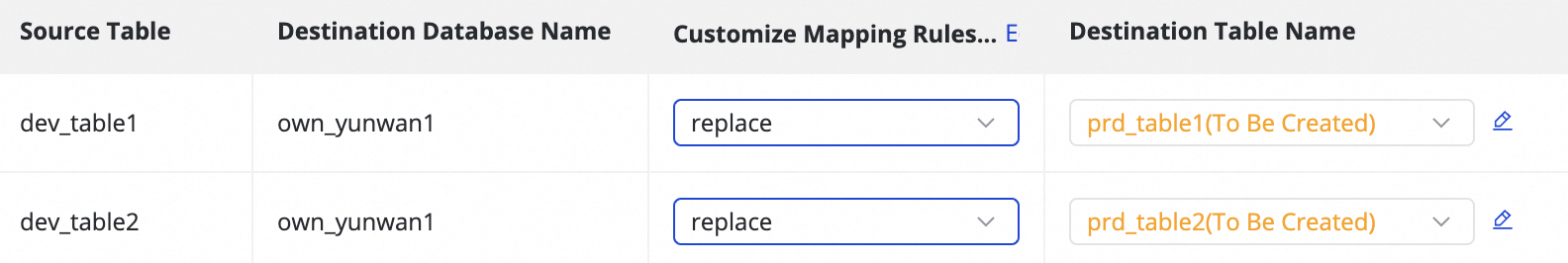

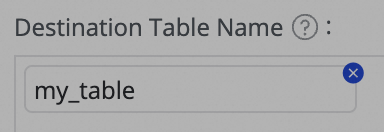

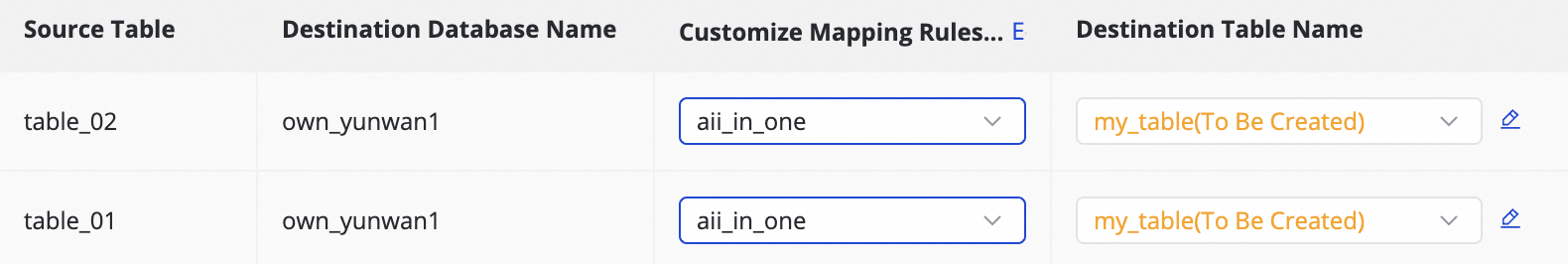

Parameter | Description | ||||||||||||

Refresh Mapping Results | The system lists the selected source tables. However, destination attributes only take effect after you refresh and confirm the mapping.

| ||||||||||||

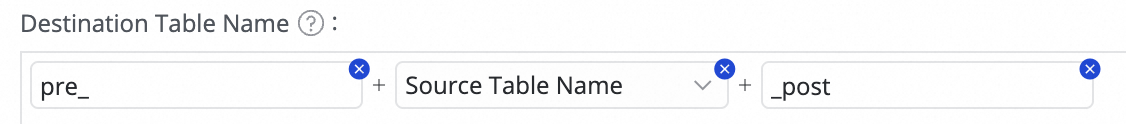

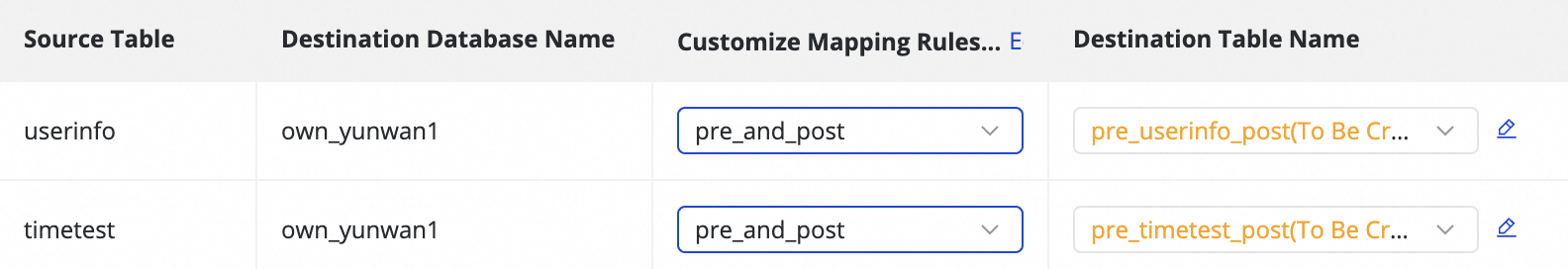

Customize Mapping Rules for Destination Table Names (Optional) | The system uses a default rule to generate table names:

Supported scenarios:

| ||||||||||||

Edit Mapping of Field Data Types (Optional) | The system provides default mappings between source and destination field types. You can click Edit Mapping of Field Data Types in the upper-right corner of the table to customize the mapping relationship, and then click Apply and Refresh Mapping. Note: Incorrect field type conversion rules can cause conversion failures, data corruption, or task interruptions. | ||||||||||||

Edit Destination Table Structure (Optional) | The system automatically creates destination tables that do not exist or reuses existing tables with the same name based on the custom table name mapping rules. DataWorks generates the destination schema based on the source schema. Manual intervention is usually unnecessary. You can also modify the table schema in the following ways:

For existing tables, you can only add fields. For new tables, you can add fields, partition fields, and set table types or properties. For more information, see the editable area in the interface. | ||||||||||||

Value assignment | Native fields are automatically mapped based on fields with the same name in the source and destination tables. You must manually assign values to Added Fields and Partition Fields. The procedure is as follows:

Assign constants or variables, and switch the type in Assignment Method. The supported methods are as follows:

Note Note: Excessive partitions reduce synchronization efficiency. Creating over 1,000 partitions daily causes failure and task termination. Estimate generated partitions when defining assignments. Use second-level or millisecond-level partition creation methods with caution. | ||||||||||||

Source Split Column | You can select a field from the source table in the drop-down list or select Not Split. When the synchronization task is executed, it is split into multiple tasks based on this field to allow concurrent and batched data reading. We recommend that you use the table primary key as the source split key. Types such as string, float, and date are not supported. Currently, source split keys are supported only when the source is MySQL. | ||||||||||||

Execute Full Synchronization | If full synchronization is configured in Step 3, you can individually cancel full data synchronization for a specific table. This applies to scenarios where full data has already been synchronized to the destination by other means. | ||||||||||||

Full Condition | Filter the source data during the full phase. You only need to write the where clause here, without the WHERE keyword. | ||||||||||||

Configure DML Rule | DML message processing allows for refined filtering and control of captured data changes ( | ||||||||||||

Others |

For more information about Delta Table, see Delta Table. |
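The partition note in the Value assignment row can be checked with simple arithmetic before you choose an assignment. The sketch below estimates how many distinct partition values a time-based partition field produces per day at each granularity and compares the result with the 1,000-per-day figure from the note. The function names are hypothetical, and the sketch assumes one new partition per time unit for a single table; whether the limit is counted per table or per task is not stated in this topic.

```python
SECONDS_PER_DAY = 24 * 60 * 60
DAILY_PARTITION_LIMIT = 1000  # figure taken from the note in this topic

# Seconds covered by one partition value at each granularity.
GRANULARITY_SECONDS = {"day": 86400, "hour": 3600, "minute": 60, "second": 1}


def partitions_per_day(granularity: str) -> int:
    """Distinct partition values created in one day at this granularity."""
    return SECONDS_PER_DAY // GRANULARITY_SECONDS[granularity]


def within_limit(granularity: str) -> bool:
    """True if the daily partition count stays at or under the limit."""
    return partitions_per_day(granularity) <= DAILY_PARTITION_LIMIT


for name in GRANULARITY_SECONDS:
    print(name, partitions_per_day(name), within_limit(name))
```

Under these assumptions, hourly partitions stay well under the limit, while minute-level and finer partitions exceed it.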

Step 6: Configure DDL capabilities

Certain real-time synchronization tasks detect metadata changes in the source table structure and synchronize updates or take other actions such as alerting, ignoring, or terminating execution.

Click Configure DDL Capability in the upper-right corner of the interface to set processing policies for each change type. Supported policies vary by channel.

Normal Processing: The destination processes the DDL change information from the source.

Ignore: The change message is ignored, and no modification is made at the destination.

Error: The whole database real-time synchronization task is terminated, and the status is set to Error.

Alert: An alert is sent to the user when such a change occurs at the source. You must configure DDL notification rules in Configure Alert Rule.

When DDL synchronization adds a source column to the destination, existing records are not backfilled with data for the new column.
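The no-backfill behavior can be illustrated with a toy model. This sketch is not DataWorks code: `add_column` and `read_row` are hypothetical helpers, rows are plain dictionaries, and a missing value is represented as `None`.

```python
from typing import Dict, List

Row = Dict[str, object]


def add_column(schema: List[str], column: str) -> None:
    """Add a column to the destination schema without touching old rows."""
    if column not in schema:
        schema.append(column)


def read_row(row: Row, schema: List[str]) -> List[object]:
    """Read a row against the current schema; missing values are None."""
    return [row.get(column) for column in schema]


schema = ["id", "name"]
rows: List[Row] = [{"id": 1, "name": "a"}]  # synchronized before the DDL change

add_column(schema, "email")  # the source adds a column
rows.append({"id": 2, "name": "b", "email": "b@example.com"})  # new change

old_row = read_row(rows[0], schema)
new_row = read_row(rows[1], schema)
```

In this model, the row written before the change reads as empty for the new column, while rows written afterward carry a value. If historical values are needed, they have to be loaded separately.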

Step 7: Other configurations

Alarm configuration

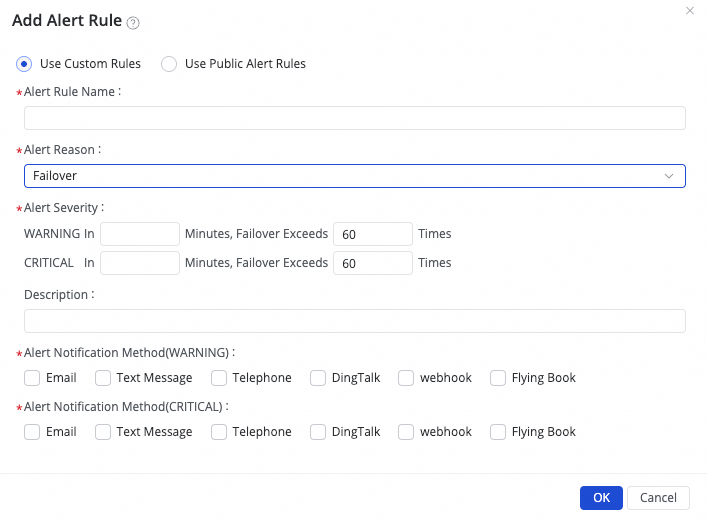

1. Add Alarm

(1) Click Create Rule to configure alarm rules.

Set Alert Reason to monitor metrics like Business delay, Failover, Task status, DDL Notification, and Task Resource Utilization for the task. You can set CRITICAL or WARNING alarm levels based on specified thresholds.

By setting Configure Advanced Parameters, you can control the time interval for sending alarm messages to prevent alert fatigue and message backlogs.

If you select Business delay, Task status, or Task Resource Utilization as the alarm reason, you can also enable recovery notifications to notify recipients when the task returns to normal.

(2) Manage alarm rules.

For created alarm rules, you can use the alarm switch to control whether the alarm rule is enabled. Send alarms to specific recipients based on the alarm level.

2. View Alarm

Expand in the task list to enter the alarm event page and view the alarm information that has occurred.

Resource group configuration

You can manage the resource group used by the task and its configuration in the Configure Resource Group panel in the upper-right corner of the interface.

1. View and switch resource groups

Click Configure Resource Group to view the resource group currently bound to the task.

To change the resource group, switch to another available resource group here.

2. Adjust resources and troubleshoot "insufficient resources" errors

If the task log displays a message such as "Please confirm whether there are enough resources...", the available computing units (CUs) of the current resource group are insufficient to start or run the task. You can increase the number of CUs occupied by the task in the Configure Resource Group panel to allocate more computing resources.

For recommended resource settings, see Data Integration Recommended CUs. Adjust the settings based on actual conditions.

Advanced parameter configuration

For custom synchronization requirements, click Configure in the Advanced Settings column to modify advanced parameters.

Click Advanced Settings in the upper-right corner of the interface to enter the advanced parameter configuration page.

Modify the parameter values according to the prompts. The meaning of each parameter is explained after the parameter name.

Understand parameters fully before modification to prevent issues like task delays, excessive resource consumption blocking other tasks, or data loss.

Step 8: Execute synchronization task

After you complete the configuration, click Save or Complete to save the task.

On the , find the created synchronization task and click Deploy in the Operation column. If you select Start immediately after deployment in the dialog box that appears and click Confirm, the task is executed immediately. Otherwise, you must manually start the task.

Note: Data Integration tasks must be deployed to the production environment before they can be run. Therefore, you must deploy a new or modified task for the changes to take effect.

Click the Name/ID of the task in the Tasks to view the execution details.

Edit task

On the page, find the created synchronization task, click More in the Operation column, and then click Edit. Modify task information following the task configuration steps.

For tasks that are not running, modify, save, and publish the configuration.

For tasks in the Running state, if you do not select Start immediately after deployment when editing and deploying the task, the original operation button becomes Apply Updates. Click this to apply changes online.

Clicking Apply Updates triggers a "Stop, Publish, and Restart" sequence for the changes.

If the change involves adding a table or switching an existing table:

You cannot select a checkpoint when applying updates. After you click Confirm, schema migration and full initialization are performed for the new table. After full initialization is complete, incremental operations start together with other original tables.

If other information is modified:

You can select a checkpoint when applying updates. After you click Confirm, the task continues running from the specified checkpoint. If no checkpoint is specified, the task resumes from the checkpoint recorded when it last stopped.

Unmodified tables are not affected. After the restart, they continue from the checkpoint recorded when the task last stopped.

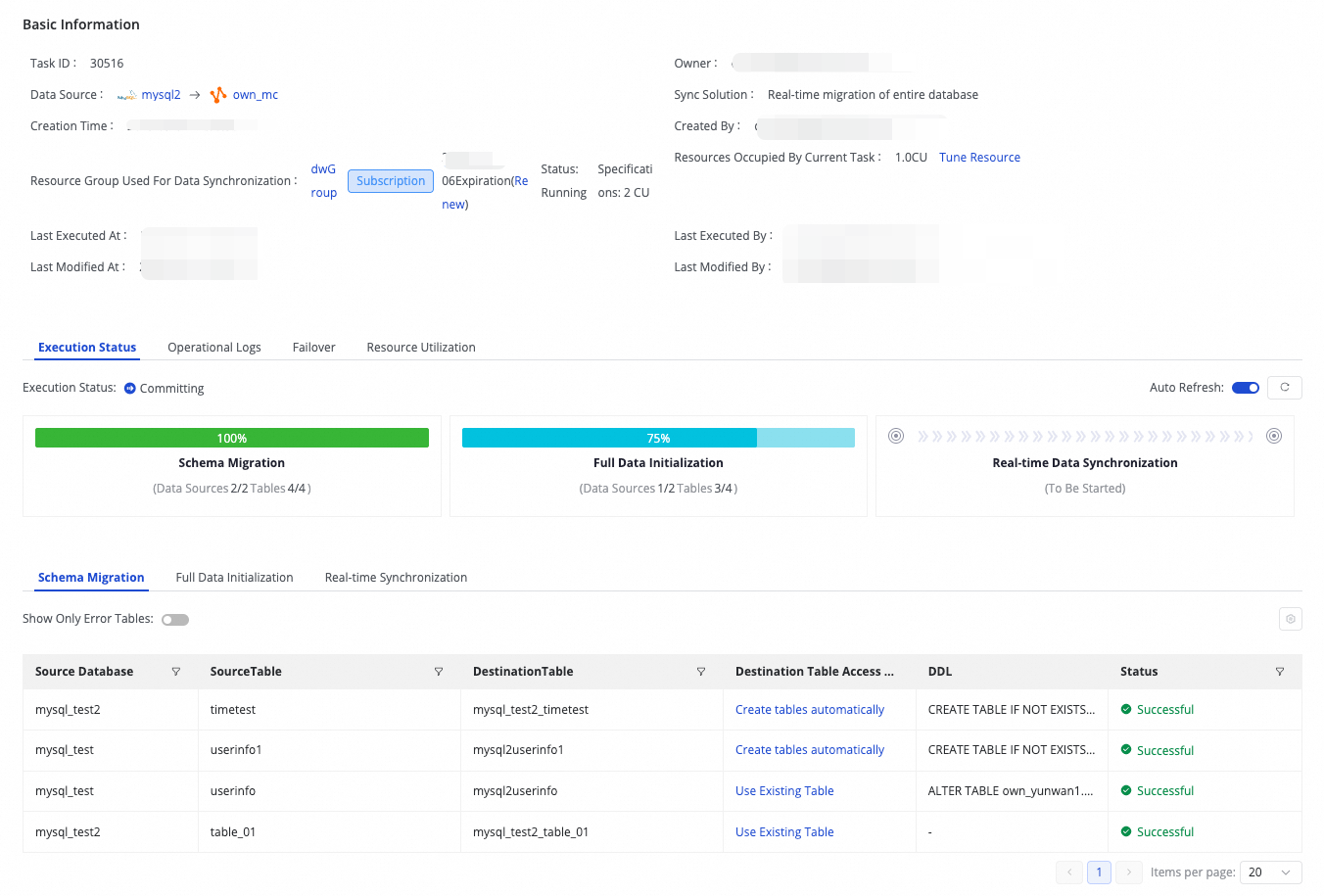

View task

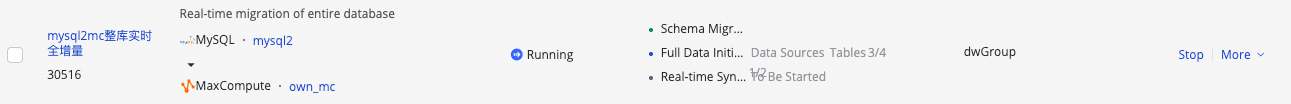

After creating a synchronization task, you can view the list of created synchronization tasks and their basic information on the Synchronization Task page.

You can Start or Stop the synchronization task in the Operation column. In More, you can perform operations such as Edit and View.

For started tasks, you can view the basic running status in Execution Overview, or click the corresponding overview area to view execution details.

Resume from breakpoint

Applicable scenarios

Reset checkpoints during startup or restart in the following scenarios:

Task recovery: Specify an interruption time point to ensure accurate data recovery after errors.

Troubleshooting and backtracking: If you detect data loss or anomalies, you can reset the checkpoint to a time before the issue occurred to replay and repair the data.

Major task configuration changes: After making major adjustments to the task configuration (such as target table structure or field mapping), we recommend that you reset the checkpoint to start synchronization from a clear time point to ensure data accuracy under the new configuration.

Operation description

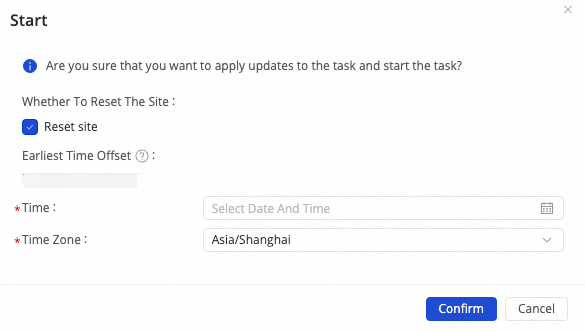

Click Start. In the dialog box, choose an option for Whether to reset the site (here, "site" means the checkpoint):

Do not reset: The task resumes from the last checkpoint recorded before it stopped.

Reset and select time: The task starts from the checkpoint at the specified time. Ensure that the time falls within the range covered by the source binlogs.
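The binlog range requirement can be checked before you reset a checkpoint. The sketch below assumes that the source keeps logs for a fixed retention period, so the earliest usable time is the current time minus that period. The function names and the sample timestamps are hypothetical.

```python
from datetime import datetime, timedelta


def earliest_available(now: datetime, retention: timedelta) -> datetime:
    """Oldest point in time still covered by the retained logs."""
    return now - retention


def is_valid_reset_time(reset_time: datetime, now: datetime,
                        retention: timedelta) -> bool:
    """True if the reset time lies inside the retained log range."""
    return earliest_available(now, retention) <= reset_time <= now


# Hypothetical values: logs are kept for 7 days.
now = datetime(2024, 6, 10, 12, 0, 0)
retention = timedelta(days=7)

ok = is_valid_reset_time(datetime(2024, 6, 8), now, retention)
too_old = is_valid_reset_time(datetime(2024, 6, 1), now, retention)
```

Here the first reset time is inside the retained range and the second is older than the earliest available log, so only the first is usable.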

If a checkpoint error or non-existence message appears when you execute a synchronization task, try the following solutions:

Reset checkpoint: When starting a real-time synchronization task, reset the checkpoint and select the earliest available checkpoint in the source database.

Adjust log retention time: If the database checkpoint has expired, consider adjusting the log retention time in the database, for example, setting it to 7 days.

Data synchronization: If data is already lost, consider performing a full synchronization again or configuring an offline synchronization task to manually synchronize the lost data.
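For the log retention adjustment, the 7-day example converts to seconds as follows. The sketch assumes a self-managed MySQL 8.0 source, where binlog retention is controlled by the `binlog_expire_logs_seconds` system variable. Managed instances may expose this setting differently, for example through their own console, and the helper names are hypothetical.

```python
def retention_seconds(days: int) -> int:
    """Convert a retention period in days to seconds."""
    if days <= 0:
        raise ValueError("retention must be a positive number of days")
    return days * 24 * 60 * 60


def retention_statement(days: int) -> str:
    """Build the MySQL 8.0 statement that sets binlog retention.

    Assumption: the source is self-managed MySQL 8.0 and the account
    has the privilege to set global system variables.
    """
    return f"SET GLOBAL binlog_expire_logs_seconds = {retention_seconds(days)};"


statement = retention_statement(7)
print(statement)
```

A longer retention period widens the range of checkpoints that can be selected, at the cost of more disk space for logs on the source.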

FAQ

For common questions about whole database real-time synchronization, see Real-time synchronization and Full and incremental synchronization.

More cases

Synchronize all data in a MySQL database to AnalyticDB for MySQL V3.0 in real time

Synchronize all data in a MySQL database to ApsaraDB for OceanBase in real time

Real-time synchronization of MySQL database to Data Lake Formation

Real-time synchronization of the entire MySQL database to DataHub

Real-time synchronization from MySQL database to Elasticsearch

Synchronize all data in a MySQL database to Hologres in real time

Synchronize an entire MySQL database to LogHub (SLS) in real time

Real-time synchronization of an entire ApsaraDB for OceanBase database to MaxCompute

Synchronize an entire Oracle database to MaxCompute in real time

Synchronize all data in a MySQL database to a data lake in OSS in real time

Real-time synchronization of an entire MySQL database to an OSS-HDFS data lake

Synchronize all data in a MySQL database to SelectDB in real time

Real-time synchronization of the entire MySQL database to StarRocks

Notes for Step 5:

Customize Mapping Rules for Destination Table Names: Build the table name by clicking the button and selecting Manual Input or Built-in Variable for concatenation. Supported variables include the source data source name, source database name, and source table name.

Edit Destination Table Structure: Click the button in the Destination Table Name column to add a field.