By Jianyi, Alibaba Cloud Serverless Senior Development Engineer

Whether in big data processing or message processing, task systems share a critical capability: task-triggered deduplication. This capability is essential in scenarios that require high accuracy (such as the financial field). As a serverless platform, Serverless Task must guarantee accurate task-triggering semantics both at the user application level and within its own system.

This article focuses on message processing reliability. It introduces the technical details of asynchronous task functions in Function Compute (FC) and shows how to use the capabilities FC provides to make task execution more reliable in practical applications.

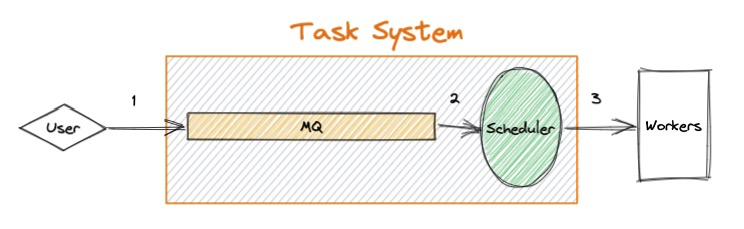

Any discussion of asynchronous message processing systems has to start with their basic delivery semantics. In an asynchronous message processing system (task system), the processing of a message can be simplified as shown in the following figure:

Figure 1

A user sends a task → The task enters the queue → The task processing unit listens to the queue and obtains the message → The message is scheduled to the actual worker for execution.
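The flow in Figure 1 can be modeled with Go channels. This is a hypothetical single-process sketch. `Task` and `runPipeline` are illustrative names, one channel plays the queue, and one goroutine plays the worker. Each channel hand-off marks a point where a failing component could lose or duplicate a message.

```go
package main

import (
	"fmt"
	"sync"
)

// Task is a hypothetical task message; the fields are illustrative only.
type Task struct {
	ID      string
	Payload string
}

// runPipeline models Figure 1: user -> queue -> processing unit -> worker.
// It returns the IDs of the tasks the worker executed, in order.
func runPipeline(tasks []Task) []string {
	queue := make(chan Task, len(tasks)) // the task queue
	toWorker := make(chan Task)          // dispatch channel to the worker
	var executed []string
	var wg sync.WaitGroup

	// Worker: executes whatever the processing unit schedules to it.
	wg.Add(1)
	go func() {
		defer wg.Done()
		for t := range toWorker {
			executed = append(executed, t.ID)
		}
	}()

	// User: sends tasks into the queue.
	for _, t := range tasks {
		queue <- t
	}
	close(queue)

	// Processing unit: takes messages from the queue and schedules them to the worker.
	for t := range queue {
		toWorker <- t
	}
	close(toWorker)
	wg.Wait() // the worker has finished, so reading executed is safe
	return executed
}

func main() {
	fmt.Println(runPipeline([]Task{{ID: "t1"}, {ID: "t2"}}))
}
```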

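If a component goes down or otherwise fails while the task message is being forwarded, the message may be delivered incorrectly. A typical task system provides up to three levels of message processing semantics:

- At Most Once: each message is delivered at most once. If a component fails, the message may be lost, but it is never delivered twice.
- At Least Once: each message is delivered at least once. Failed deliveries are retried, so the message is not lost, but it may be delivered more than once.
- Exactly Once: each message is delivered once and only once, with neither loss nor duplication.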

Function Compute provides Exactly Once semantics for task distribution. In any case, the system treats duplicate submissions of a task as the same trigger and distributes the task only once.
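A common way to get this behavior is to pair at-least-once delivery with deduplication by task ID. The sketch below illustrates that general technique. It is not Function Compute's implementation. The `Dispatcher` type is hypothetical, and its seen-set lives in memory, so it would forget IDs after a restart.

```go
package main

import "fmt"

// Dispatcher turns an at-least-once stream (which may contain redeliveries)
// into at most one dispatch per task ID. Generic sketch, not FC code.
type Dispatcher struct {
	seen     map[string]bool
	dispatch func(id string)
}

func NewDispatcher(dispatch func(id string)) *Dispatcher {
	return &Dispatcher{seen: make(map[string]bool), dispatch: dispatch}
}

// Deliver is called once per delivery attempt. It records the ID before
// dispatching and drops any later delivery that carries the same ID.
func (d *Dispatcher) Deliver(id string) bool {
	if d.seen[id] {
		return false // same trigger as before: do not dispatch again
	}
	d.seen[id] = true
	d.dispatch(id)
	return true
}

func main() {
	var dispatched []string
	d := NewDispatcher(func(id string) { dispatched = append(dispatched, id) })
	// "t1" is redelivered twice, for example after a component failover.
	for _, id := range []string{"t1", "t2", "t1", "t1"} {
		d.Deliver(id)
	}
	fmt.Println(dispatched) // [t1 t2]
}
```

A production system would keep the seen-set in durable storage and make the check-and-record step atomic. This article states that Function Compute stores task metadata in a database.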

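Referring to Figure 1, task deduplication requires the system to provide guarantees in at least two dimensions:

- System level: when a system component fails over, task distribution itself must not be duplicated.
- User level: when the user submits the same logical task more than once (for example, by retrying after a timeout), the repeated submissions must be recognized and the task distributed only once.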

Next, we will use a simplified Serverless Task system architecture to discuss how Function Compute achieves these capabilities.

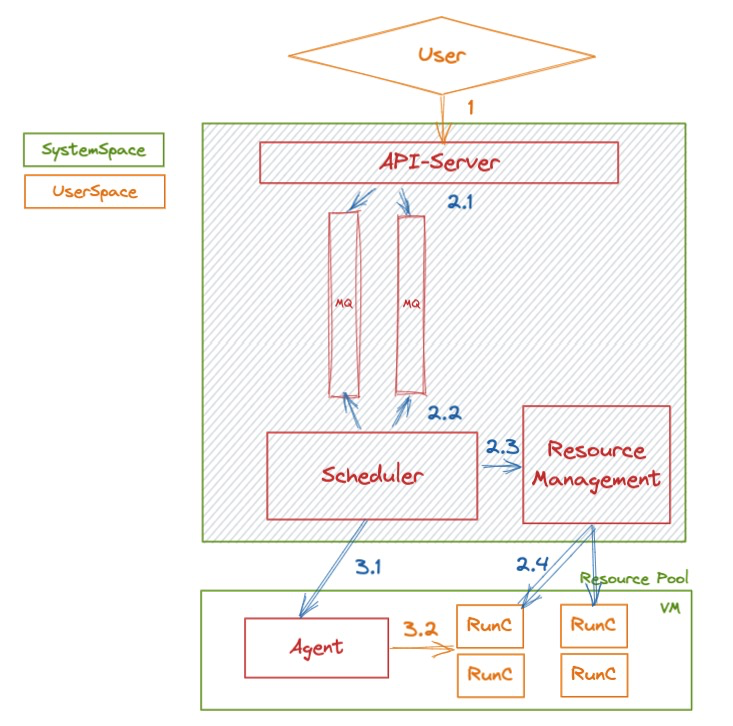

The following figure shows the architecture of the Function Compute task system:

Figure 2

First, the user invokes the Function Compute API to send a task to the system's API-Server (step 1). After verifying the request, the API-Server puts the message into the internal queue (step 2.1).

An asynchronous background module monitors the internal queue in real time (step 2.2) and then invokes the Resource Management module to obtain runtime resources (steps 2.2-2.3).

After the runtime resource is obtained, the scheduling module sends the task data to the VM-level client (step 3.1). The client then forwards the task to the user's actual runtime resource (step 3.2).

To achieve the two dimensions mentioned above, support is needed at the following levels:

Once the user's message enters the Function Compute system (that is, once step 2.1 completes), the request receives an HTTP 202 response, and the user can consider the task submitted. From the moment the task message enters MQ, its lifecycle is maintained by the Scheduler. The stability of both the Scheduler and MQ therefore determines whether the system can achieve Exactly Once.

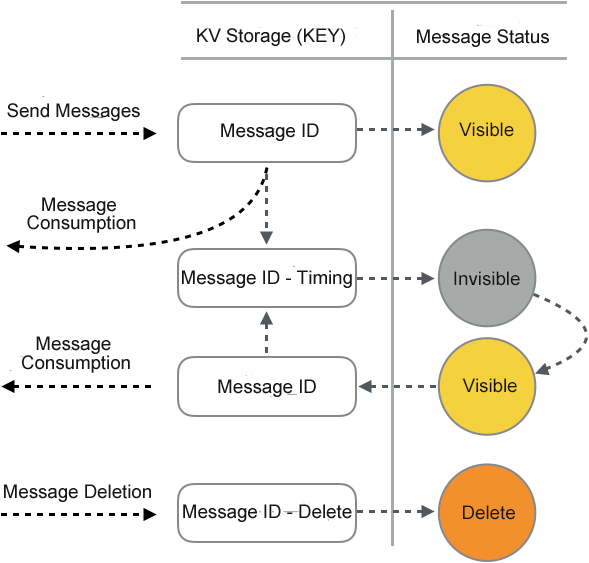

In most open-source messaging systems (such as MQ and Kafka), messages are stored in multiple replicas, and each message is consumed by a single consumer. The same is true for the message queue used by Function Compute, which is built on RocketMQ. Because the underlying storage keeps three replicas, we do not need to worry about the durability of stored messages. In addition, the message queue used in Function Compute has the following characteristics:

Therefore, the entire lifecycle of a message in the queue is shown in the following figure:

Figure 3

The Scheduler is mainly responsible for message processing. Its work consists of the following parts:

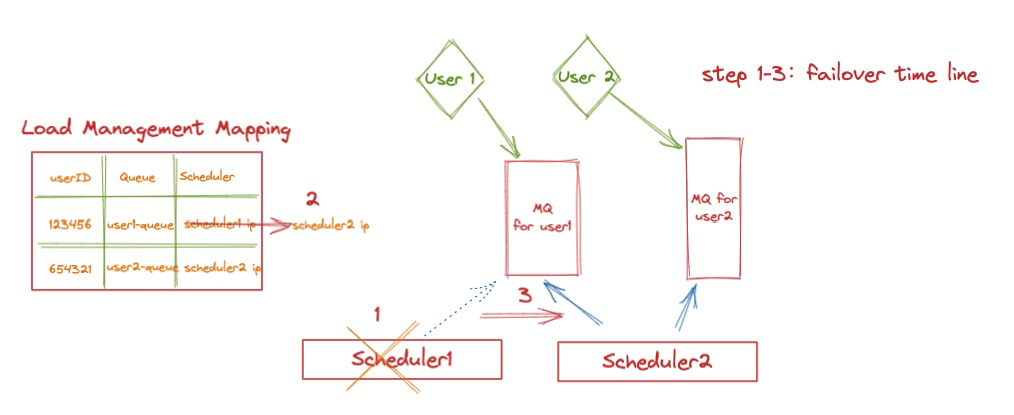

For queue scheduling, Function Compute uses a single-queue management model for ordinary users. All asynchronous execution requests of each user are isolated in a single queue, and each queue is handled by one Scheduler. This mapping is managed by the Function Compute load balancing service, as shown in the following figure (we will introduce this part in detail in a subsequent article):

Figure 4
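The article does not describe how the load balancing service assigns user queues to Schedulers. As a hypothetical illustration only, the sketch below maps each user's queue to one live Scheduler with an FNV hash. `schedulerFor` and the scheduler names are invented.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// schedulerFor picks the Scheduler that owns a user's dedicated queue.
// ASSUMPTION: FNV hashing over the live schedulers is an illustrative stand-in
// for the load balancing service, whose real algorithm is not described.
func schedulerFor(userID string, live []string) string {
	if len(live) == 0 {
		return "" // no Scheduler available
	}
	h := fnv.New32a()
	h.Write([]byte(userID))
	return live[h.Sum32()%uint32(len(live))]
}

func main() {
	schedulers := []string{"scheduler-1", "scheduler-2", "scheduler-3"}
	for _, u := range []string{"user-a", "user-b", "user-c"} {
		fmt.Println(u, "->", schedulerFor(u, schedulers))
	}
	// If scheduler-1 goes down, it is dropped from the live set,
	// and its queues are reassigned among the remaining Schedulers.
	fmt.Println("user-a ->", schedulerFor("user-a", schedulers[1:]))
}
```

Plain modulo hashing moves many queues whenever a Scheduler joins or leaves. Consistent hashing is a common alternative that moves only the queues owned by the changed node.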

When Scheduler 1 goes down or is being upgraded, a task can be in one of two execution states:

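The Function Compute system can consume each message exactly once even when a single point fails. However, if the user side repeatedly triggers function execution for the same piece of business data, Function Compute cannot tell whether the different messages are logically the same task. This situation often occurs during network partitions. In Figure 2, if the user's call in step 1 times out, there are two possible situations:

1. The request did not reach Function Compute, so the task was not submitted.
2. The request reached Function Compute and the task was submitted, but the response was lost or timed out, for example because of a network issue.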

In most cases, the user will retry the submission. In case 2, the retry causes the same task to be submitted and executed multiple times. Function Compute therefore needs a mechanism that guarantees business correctness in this scenario.

To address this, Function Compute introduces the concept of a task ID (StatefulAsyncInvocationID), which is globally unique. You can specify an ID each time you submit a task. When a request times out, the user can safely retry any number of times with the same ID.

Function Compute verifies every retry on the server side. It stores task metadata in a database. When a request carrying an ID that already exists enters the system, the request is rejected with a 400 error, which tells the client that the task has already been submitted.

Let’s use the Go SDK as an example. The following code triggers a task:

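```go
import fc "github.com/aliyun/fc-go-sdk"

// SubmitJob submits an asynchronous task with a caller-specified task ID.
// Assumes fcClient (*fc.Client) has been created elsewhere, for example with fc.NewClient.
func SubmitJob() {
	invokeInput := fc.NewInvokeFunctionInput("ServiceName", "FunctionName")

	// Invoke asynchronously and attach the globally unique task ID.
	// Reuse the same ID on every retry so that Function Compute can reject duplicates.
	invokeInput = invokeInput.WithAsyncInvocation().WithStatefulAsyncInvocationID("TaskUUID")

	invokeOutput, err := fcClient.InvokeFunction(invokeInput)
	// ...
}
```

This submits a task with a unique ID.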
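The `"TaskUUID"` placeholder must stay the same on every retry of the same business operation. A fresh random ID per attempt would defeat deduplication. One option, sketched below under assumptions, is to derive the ID deterministically from the business key. `taskIDFor`, the `order:` prefix, and the SHA-256 hex encoding are hypothetical choices. Check the Function Compute documentation for any length or character limits on the ID.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// taskIDFor derives a stable task ID from business data, so every retry for the
// same order carries the same ID. ASSUMPTION: key format and hashing are
// illustrative, not an FC requirement; verify FC's ID length/charset limits.
func taskIDFor(orderID string) string {
	sum := sha256.Sum256([]byte("order:" + orderID))
	return hex.EncodeToString(sum[:]) // 64 lowercase hex characters
}

func main() {
	id := taskIDFor("20230701-0001")
	fmt.Println(id == taskIDFor("20230701-0001")) // true
}
```

The result can then be passed to `WithStatefulAsyncInvocationID` in place of the placeholder.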

This article described the technical details of task-triggered deduplication in Function Compute Serverless Task, which supports scenarios with strict requirements for task execution accuracy.

With Serverless Task, you do not need to worry about the failover of any system component, and each task you submit is executed exactly once. By setting a globally unique task ID when you submit a task, you also let the service side deduplicate repeated submissions of the same task.
