By Mingwei Shi (Senior Technical Expert at Alibaba Cloud Intelligence)

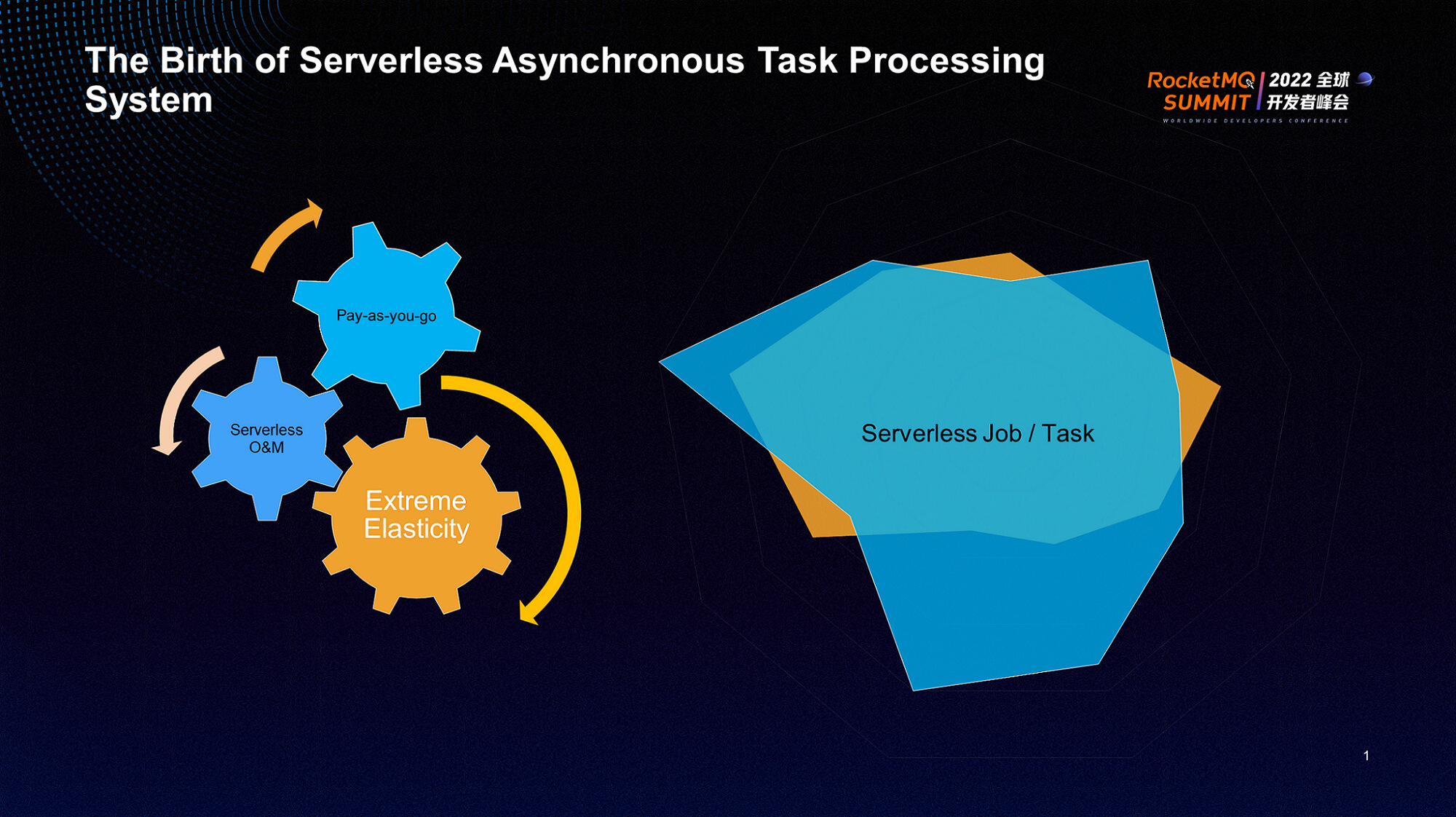

The three core ideas of Serverless (extreme elasticity, Serverless O&M, and pay-as-you-go) are now firmly established among cloud developers and among enterprise customers who want to upgrade their businesses. However, what Serverless can do, and how to do it, remain the most common questions.

In the early stages of Serverless R&D, technical teams usually focus on elasticity and cold start acceleration. They hope to demonstrate the technical competitiveness of their products through elasticity, establish a leading market position, and use these capabilities to attract developers and enterprise customers to Serverless. At this stage, technical influence is what guides people to explore Serverless.

As our understanding of Serverless deepens and its elasticity capabilities mature, we think more about the value Serverless offers beyond elasticity. Elasticity then becomes a basic capability of the system that runs through every aspect of the product. We need to consider more systematically what Serverless can do and what it can bring to customers: how can customers focus their business on the parts that genuinely have to be customized?

Thinking systematically means evaluating the value of Serverless to customer business across multiple dimensions: resource supply, elastic scheduling, application framework, capacity evaluation, and O&M observability. For each dimension, we need to decide whether it requires user participation, requires little of it, or should be provided to customers entirely as a service capability. Where customers must participate, the platform needs to provide convenient development tools for the development stage. When implementing specific business logic, the flexible extensibility of Serverless can help developers connect to other cloud services as quickly as possible. Stable and efficient access is the core value Serverless brings to customers.

Faced with actual customer requirements, the product must handle a variety of business scenarios: long-running task execution in offline scenarios, highly concurrent request processing in online scenarios, and event processing in event-driven scenarios. Meeting the requirements of all these scenarios on a single computing platform is the biggest challenge we face.

Building on our deepening understanding of Serverless and the product's accumulated experience in elastic scheduling, we started to build a Serverless asynchronous task processing system on a multi-tenancy architecture. It accepts requests asynchronously and manages them on the user's behalf, and it executes tasks and handles exceptions as a service, providing a reliable execution guarantee. Combined with asynchronous result delivery, we hope the system brings more value to customers in event-driven processing, online business processing, Serverless jobs/tasks, and other complex business scenarios.

Building a Serverless asynchronous task processing system for multi-tenancy scenarios involves many challenges. To manage this complexity, we first break down what a task system needs to do: task distribution, task scheduling, and task execution.

Serverless is a multi-tenancy and time-sharing business model. The challenges of Serverless asynchronous task processing systems, combined with the functional requirements of task processing systems, can be summarized into three aspects:

The preceding challenges are also the core issues enterprises face when building distributed business systems. From a product perspective, we hope to help users solve these typical distributed-system problems by building an asynchronous task system. Customers only need to focus on submitting business requests and on the execution results. They do not need to care about Serverless elasticity, resource scheduling, system traffic control, reliable execution, error retries, or the other details involved between request submission and execution. This truly realizes the core concepts Serverless advocates (extreme elasticity, Serverless O&M, and pay-as-you-go) and ultimately delivers the business value of Serverless to customers.

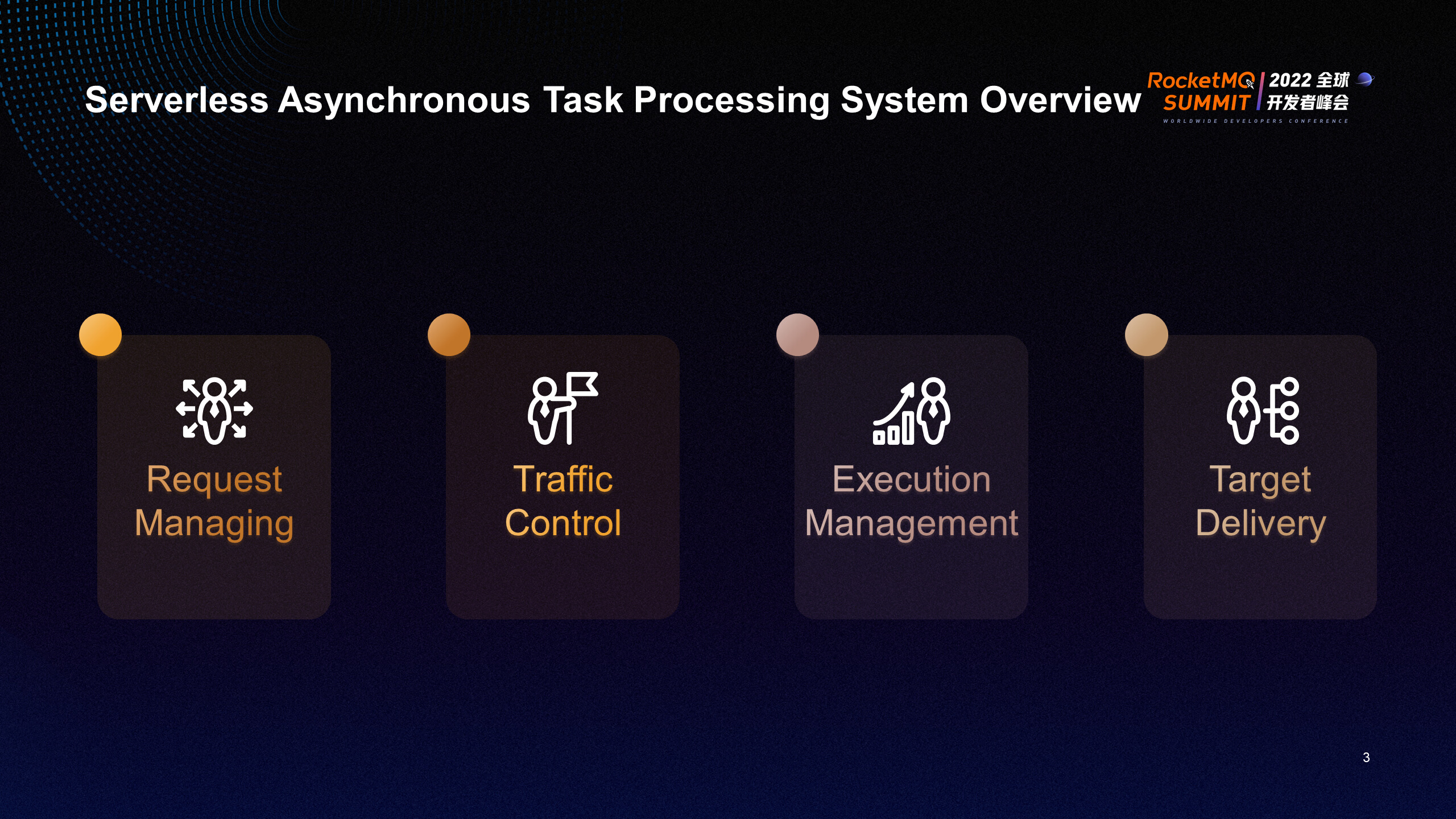

After discussing the various challenges faced by the construction of Serverless asynchronous task systems, the functions of Serverless asynchronous task processing systems can be briefly summarized into the following four core modules:

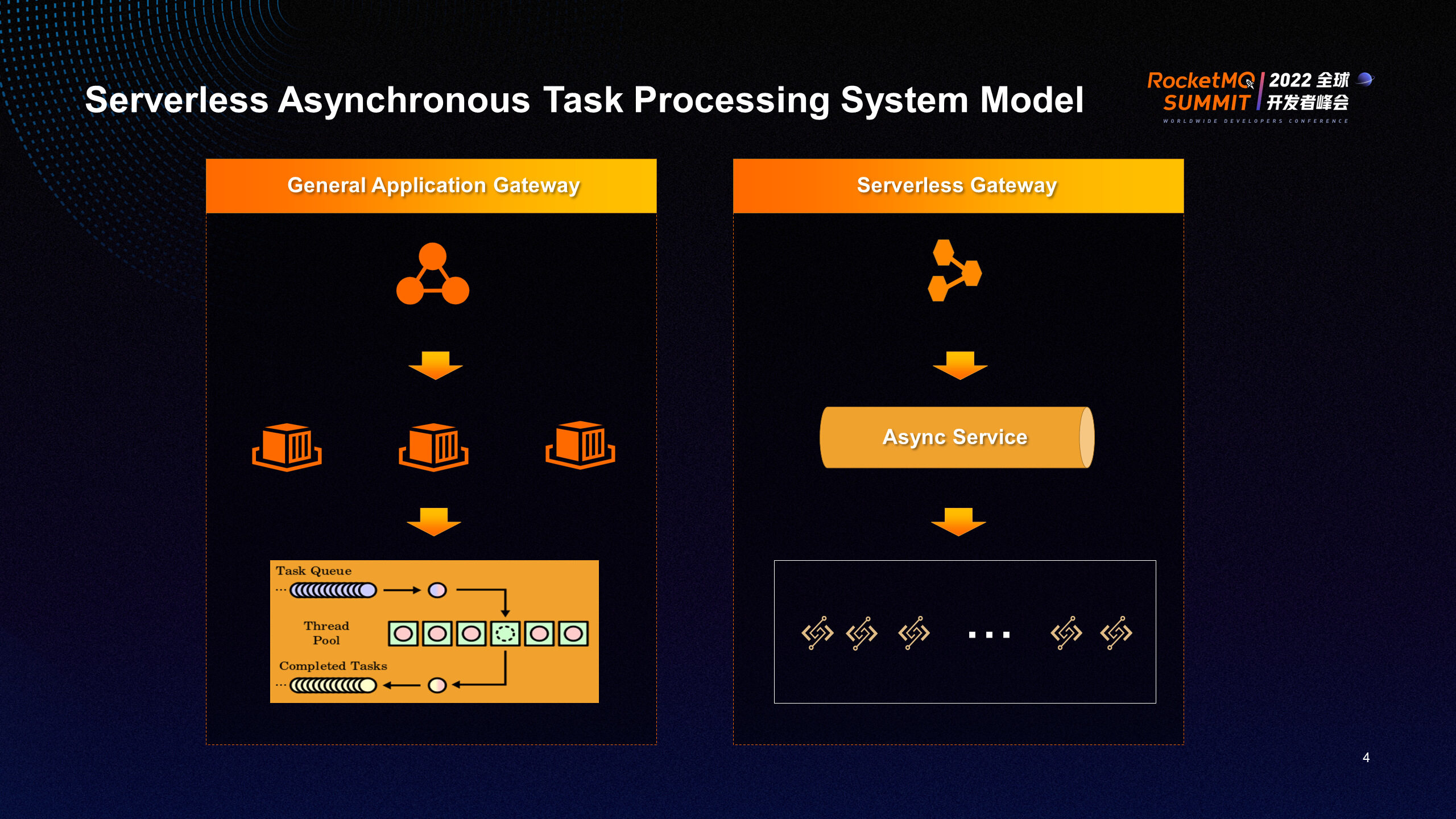

The following figure shows the basic model of a typical asynchronous task processing system, which uses APIs to submit tasks, schedule tasks, execute tasks, and deliver execution results.

In a traditional task processing framework, the service gateway is usually where task scheduling, load balancing, and traffic control policies are built; this is the most basic, most complex, and most important part of building a distributed system. The backend typically relies on an in-process memory queue and a runtime-level thread pool to dispatch and execute specific tasks.
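For contrast, the traditional model can be sketched in a few lines of Python: an in-process memory queue feeding a fixed-size thread pool. This is a minimal illustration, not any particular framework; the function name `run_in_process` and the `num_workers` parameter are hypothetical. Its capacity is bounded by the worker count and the resources of a single process, which is the single-machine limitation that the Serverless model below is meant to remove.

```python
import queue
import threading
from typing import Any, Callable, Dict, Iterable, Tuple


def run_in_process(
    tasks: Iterable[Tuple[str, Any]],
    handler: Callable[[Any], Any],
    num_workers: int = 4,
) -> Dict[str, Any]:
    """Dispatch tasks through an in-memory queue to a fixed pool of worker threads."""
    task_queue: "queue.Queue" = queue.Queue()
    results: Dict[str, Any] = {}
    lock = threading.Lock()

    def worker() -> None:
        while True:
            item = task_queue.get()
            if item is None:  # sentinel: no more work for this thread
                return
            task_id, payload = item
            try:
                value = handler(payload)
            except Exception as exc:  # record the failure instead of killing the worker
                value = exc
            with lock:
                results[task_id] = value

    # Capacity is fixed: at most num_workers tasks run at once in this process.
    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for task_id, payload in tasks:
        task_queue.put((task_id, payload))
    for _ in threads:
        task_queue.put(None)  # one sentinel per worker, queued after all tasks
    for t in threads:
        t.join()
    return results
```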

In Serverless, asynchronous task processing works as follows. Users distribute tasks through APIs. After requests arrive at the Serverless Service Gateway, they are stored in asynchronous request queues. Async Service then takes over these requests, obtains backend resources for them through scheduling, and assigns each request to a specific backend resource for execution. In the architecture diagram, Async Service takes on the request dispatcher, load balancing, traffic control policy, and resource scheduling roles of the traditional architecture, and the function cluster acts as an abstract, distributed thread pool. Under the Function Compute model, instances are isolated from each other and resources can scale horizontally, which avoids the thread pool capacity limits and resource scheduling bottlenecks caused by single-machine resource limitations in traditional application architectures. In addition, the task execution environment is not bound to the runtime of the overall business system. This is the value of Serverless asynchronous task systems compared with traditional task systems.

Architecturally, the processing logic of the Serverless task processing system is simple. Most of the capabilities a distributed system depends on are implemented transparently by the Async Service role. Users provide the task processing logic as functions. The overall architecture does not depend on language-level runtime thread pools; the Function Compute cluster as a whole acts as a thread pool with virtually unlimited capacity. With this service-based approach, users only need to submit requests, while concurrency, traffic control, and backlog processing are all handled by the Serverless platform. In practice, you still need to configure the concurrency, error retry policy, and result delivery of asynchronous task processing according to your business characteristics.
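The settings named above (concurrency, error retry policy, and result delivery) could be modeled roughly as follows. This is a hypothetical sketch, not the Function Compute configuration API: `AsyncTaskConfig`, `execute_with_policy`, and the exponential backoff rule are assumptions for illustration, and concurrency appears only as a field because enforcing it is the platform dispatcher's job.

```python
import time
from dataclasses import dataclass
from typing import Any, Callable, Optional, Tuple


@dataclass
class AsyncTaskConfig:
    """Hypothetical per-function settings; field names are illustrative only."""
    max_concurrency: int = 10     # enforced by the platform dispatcher, not modeled here
    max_retries: int = 2          # retries allowed after the first failed attempt
    retry_backoff_s: float = 1.0  # base delay, doubled after each failed attempt
    on_success: Optional[Callable[[str, Any], None]] = None        # success destination
    on_failure: Optional[Callable[[str, Exception], None]] = None  # failure destination


def execute_with_policy(
    task_id: str,
    payload: Any,
    handler: Callable[[Any], Any],
    cfg: AsyncTaskConfig,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[Optional[Any], int]:
    """Run handler with retries, deliver the outcome, and return (result, attempts)."""
    attempts = 0
    while True:
        attempts += 1
        try:
            result = handler(payload)
        except Exception as exc:
            if attempts > cfg.max_retries:  # retries exhausted
                if cfg.on_failure is not None:
                    cfg.on_failure(task_id, exc)
                return None, attempts
            sleep(cfg.retry_backoff_s * 2 ** (attempts - 1))  # exponential backoff
            continue
        if cfg.on_success is not None:
            cfg.on_success(task_id, result)
        return result, attempts
```

With `max_retries=2`, a task is attempted at most three times before its failure is handed to `on_failure`.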

Next, we focus on some technical details of building a Serverless asynchronous task processing system, starting with task distribution and request queuing.

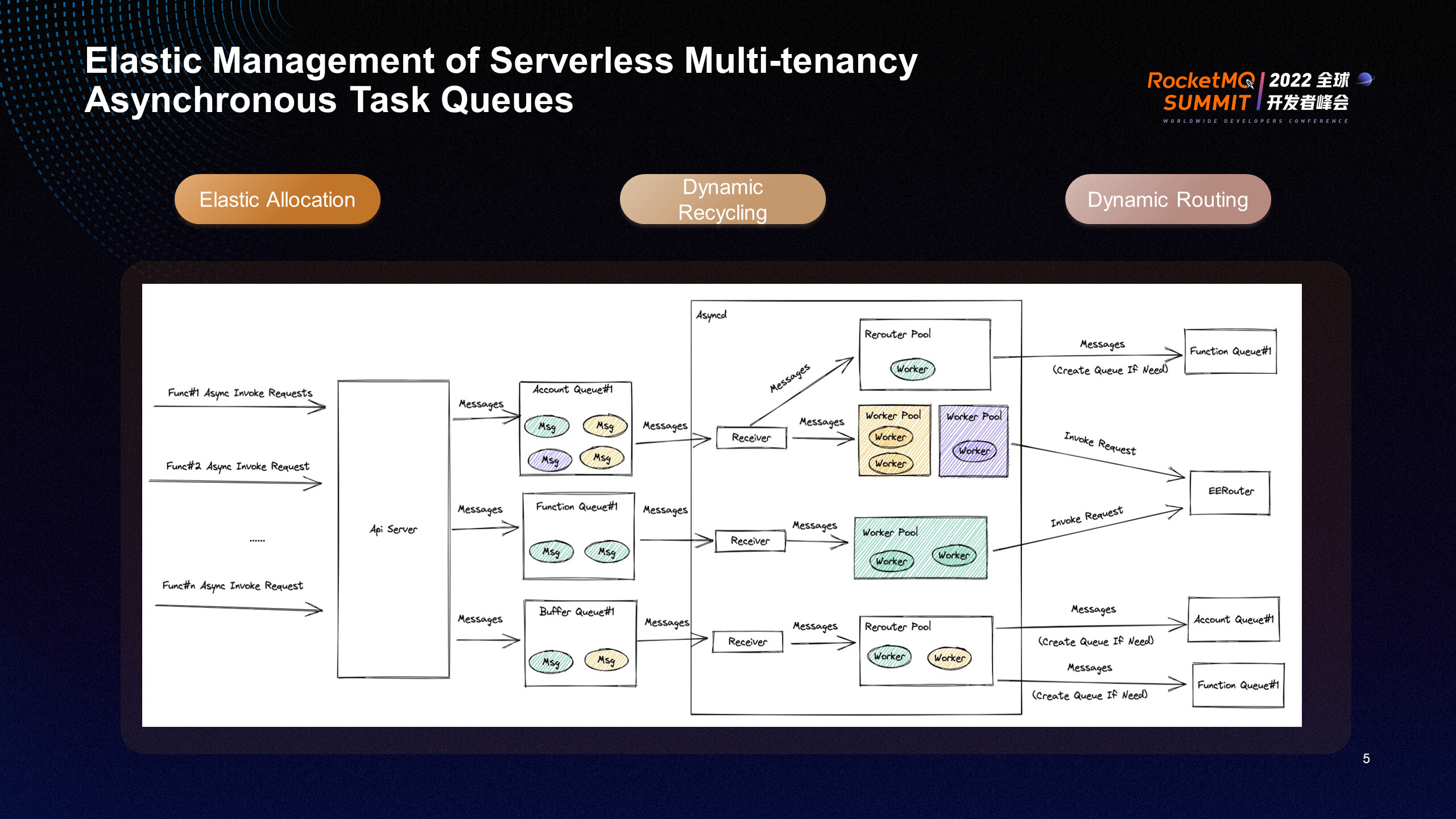

In the Serverless multi-tenancy architecture, asynchronous invocation requests first arrive at the system's API Gateway, which places them into asynchronous queues. API Gateway is stateless and supports load balancing and dynamic scaling.

In a real system, even simple asynchronous request queuing has to account for request isolation between tenants and between different functions of the same tenant. Balancing these isolation requirements against the real-time requirements of request processing and the cost of queue resources, we designed a queue management system that supports multiple queue types and dynamically reclaims queues. This system balances isolation, execution efficiency, and isolation cost in the multi-tenancy architecture.

The queue models include account-granularity queues, function-granularity queues, and queues shared across multiple accounts. The account-granularity queue serves as the basic execution guarantee. When exceptions in one function's requests could affect other functions' requests in the same queue, a function-granularity queue is dynamically allocated to that function, and its requests are routed to the dedicated queue for processing. If the function receives no requests for a long period, the previously allocated queue is dynamically reclaimed so that queue resources are used efficiently.
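The allocate-on-demand and reclaim-when-idle behavior can be sketched as a small router. This is an illustrative model under stated assumptions: the class `QueueRouter`, the `isolate` trigger, the idle-timeout rule, and the queue naming scheme are all hypothetical, and the multi-account shared queue is omitted for brevity.

```python
import time
from typing import Callable, Dict, List, Tuple


class QueueRouter:
    """Hypothetical router: account queues by default, dedicated function queues on demand."""

    def __init__(self, idle_timeout_s: float, clock: Callable[[], float] = time.monotonic):
        self.idle_timeout_s = idle_timeout_s
        self.clock = clock
        # (account, function) -> last time a request was routed to its dedicated queue
        self.dedicated: Dict[Tuple[str, str], float] = {}

    def isolate(self, account: str, function: str) -> None:
        """Allocate a dedicated queue when this function may disturb its neighbors."""
        self.dedicated[(account, function)] = self.clock()

    def route(self, account: str, function: str) -> str:
        key = (account, function)
        if key in self.dedicated:
            self.dedicated[key] = self.clock()  # refresh the idle timer
            return f"fn-queue/{account}/{function}"
        return f"account-queue/{account}"  # basic execution guarantee

    def reclaim_idle(self) -> List[Tuple[str, str]]:
        """Release dedicated queues that have seen no requests for idle_timeout_s."""
        now = self.clock()
        idle = [k for k, last in self.dedicated.items() if now - last > self.idle_timeout_s]
        for k in idle:
            del self.dedicated[k]
        return idle
```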

Beyond defining multiple queue models, the system dynamically routes task requests when queues are switched: it automatically routes requests to the appropriate type of queue and distributes them across partitions for fast execution. This resolves consumption backlogs caused by the noisy neighbor problem and consumption delays caused by uneven request load.
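One simple way to spread requests across partitions so that no single partition builds up a backlog is to send each request to the partition with the least outstanding work. The article does not specify its distribution strategy, so the least-backlog rule and the per-request cost estimates below are assumptions chosen for illustration.

```python
from typing import Hashable, List, Sequence, Tuple


def pick_partition(backlogs: Sequence[float]) -> int:
    """Index of the partition with the least outstanding work (ties go to the lowest index)."""
    return min(range(len(backlogs)), key=lambda i: backlogs[i])


def distribute(
    requests: Sequence[Tuple[Hashable, float]], num_partitions: int
) -> Tuple[List[Tuple[Hashable, int]], List[float]]:
    """Assign each (request_id, estimated_cost) pair to the least-loaded partition."""
    backlogs: List[float] = [0.0] * num_partitions
    placement: List[Tuple[Hashable, int]] = []
    for request_id, cost in requests:
        p = pick_partition(backlogs)
        backlogs[p] += cost  # the chosen partition now carries this request's work
        placement.append((request_id, p))
    return placement, backlogs
```

An expensive request occupies one partition while cheaper ones fill the others, rather than queuing behind it.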

Why not assign a dedicated request queue to each function from the start? In a cloud computing environment, a queue is itself a resource. Assigning a queue to every function under every account appears to solve request isolation, but the number of functions far exceeds the number of queues a queue system can provide. Moreover, given the implementation of downstream systems and the consumption logic needed to meet real-time requirements, a single function may need several queues that are consumed in parallel. In practice, we must also consider queue allocation performance, the impact of queue allocation on downstream systems, and the cost of managing a large number of queues. Holding a queue per function is therefore very expensive. Because load and invocation frequency vary widely between functions, independent queues for every function largely waste resources, and the backend consumption logic tied to each queue consumes a large amount of system resources unnecessarily. For a Serverless system, this waste is substantial.
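A rough count makes the cost argument concrete. The symbols are introduced here for illustration only: let $A$ be the number of accounts, $F_a$ the number of functions in account $a$, $k$ the number of queues a function needs for parallel consumption, and $D$ the number of functions currently holding a dedicated queue. Treating each account-level queue as a single resource:

$$
Q_{\text{per-function}} = k \sum_{a=1}^{A} F_a,
\qquad
Q_{\text{dynamic}} \approx A + k\,D .
$$

Because dedicated queues are allocated only to functions that need isolation and are reclaimed when idle, $D$ is expected to be much smaller than $\sum_{a} F_a$.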

Having covered the underlying design of request queuing, we now explain the traffic control policy for the asynchronous request processing path.

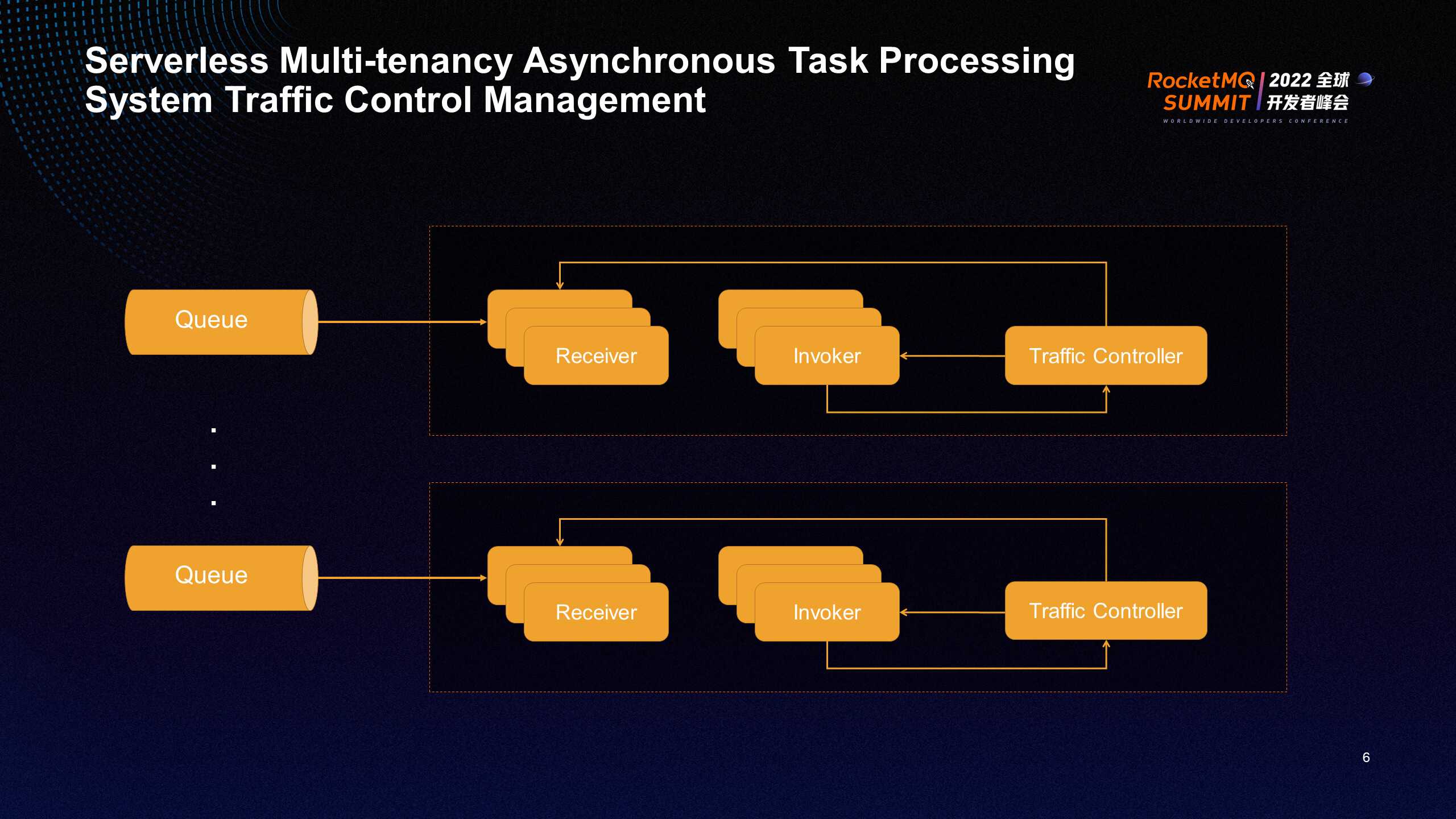

Traffic control has two parts. The first is dynamic consumption capacity for task requests, mainly scaling the Receiver's capacity in and out. The second is feedback from backend task execution scheduling: the system returns backend task processing results to the Receiver promptly and adjusts the rate at which the Receiver fetches task requests, so that intake stays balanced with backend resources.

In the implementation, the system uses the Additive Increase/Multiplicative Decrease (AIMD) feedback control algorithm, which allows finer-grained control under the high-frequency requests and multi-tenancy architecture of Serverless. Throughout the process, the Receiver and Invoker can be regarded as a Pool. The Pool Size grows linearly while the backend keeps up and shrinks multiplicatively when backend consumption capacity is insufficient; with this negative feedback, the Pool Size dynamically converges to a size that matches backend processing capacity. This implements traffic control for the system, prevents continuous fetching of upstream requests from overwhelming downstream resource scheduling, and also avoids system starvation caused by backend resource scheduling problems in extreme cases.
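A minimal AIMD controller for the Pool Size might look like the following. The increase step, decrease factor, bounds, and the boolean `backend_ok` feedback signal are assumptions for illustration; the article does not state the actual parameters. The `simulate` helper uses a toy backend that keeps up only while the pool is no larger than a fixed capacity.

```python
from typing import List


class AimdPool:
    """Pool Size controller: additive increase, multiplicative decrease."""

    def __init__(self, initial: int = 1, min_size: int = 1, max_size: int = 1000,
                 increase: int = 1, decrease_factor: float = 0.5) -> None:
        self.size = initial
        self.min_size = min_size
        self.max_size = max_size
        self.increase = increase
        self.decrease_factor = decrease_factor

    def on_feedback(self, backend_ok: bool) -> int:
        """Apply one round of backend feedback and return the new Pool Size."""
        if backend_ok:
            # Backend kept up: grow linearly.
            self.size = min(self.max_size, self.size + self.increase)
        else:
            # Backend saturated: shrink multiplicatively (negative feedback).
            self.size = max(self.min_size, int(self.size * self.decrease_factor))
        return self.size


def simulate(capacity: int, steps: int) -> List[int]:
    """Toy backend that keeps up only while the pool is no larger than its capacity."""
    pool = AimdPool()
    sizes = []
    for _ in range(steps):
        sizes.append(pool.on_feedback(pool.size <= capacity))
    return sizes
```

With a capacity of 8, the size climbs linearly to 9, is cut to 4, and then oscillates in a sawtooth around the backend's capacity instead of growing without bound.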

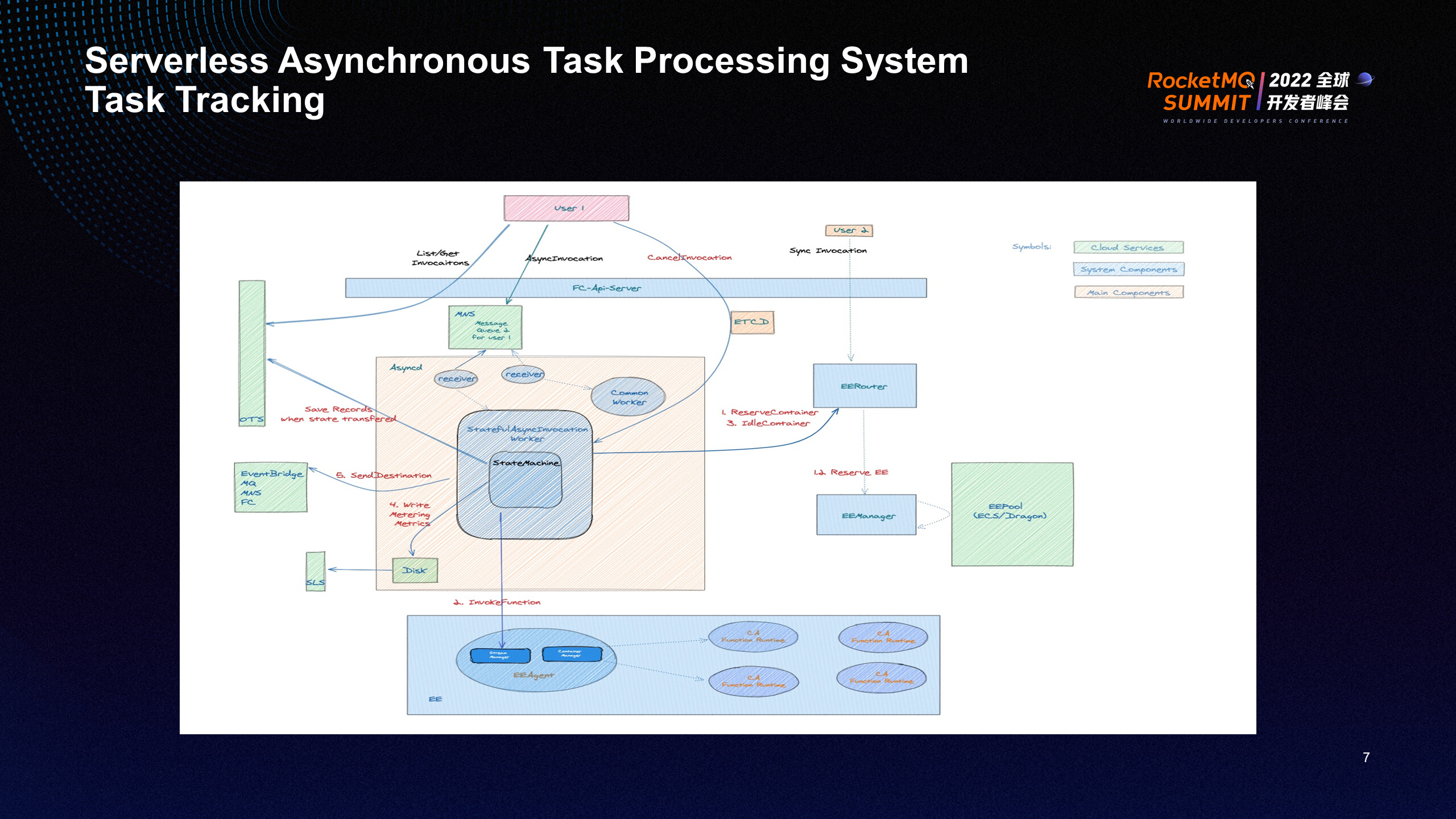

In Job/Task mode, the business needs to track the execution status of jobs and task requests, and customers also need advanced management of task execution (such as task suspension, cancellation, and deduplication). We therefore added a task execution state machine to the basic framework of Serverless asynchronous tasks. With the state machine, the complete status of each task can be tracked. Based on state tracking, you can control task execution at a fine granularity or implement advanced asynchronous operations through state control (such as task deletion, recovery, and deduplication). State tracking also better reflects the running status of each task across its lifecycle.
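A task state machine of this kind can be sketched with an enum and an explicit transition table. The specific states, the allowed transitions (including re-queuing a failed task for recovery), and deduplication by task ID are assumptions for illustration; the article does not publish its actual state model.

```python
from enum import Enum
from typing import Dict, List, Set


class TaskState(Enum):
    PENDING = "pending"
    RUNNING = "running"
    SUSPENDED = "suspended"
    SUCCEEDED = "succeeded"
    FAILED = "failed"
    CANCELLED = "cancelled"


# Hypothetical transition table: anything not listed is rejected.
ALLOWED: Dict[TaskState, Set[TaskState]] = {
    TaskState.PENDING: {TaskState.RUNNING, TaskState.SUSPENDED, TaskState.CANCELLED},
    TaskState.RUNNING: {TaskState.SUCCEEDED, TaskState.FAILED,
                        TaskState.SUSPENDED, TaskState.CANCELLED},
    TaskState.SUSPENDED: {TaskState.PENDING, TaskState.CANCELLED},  # resume or cancel
    TaskState.FAILED: {TaskState.PENDING},  # recovery: re-queue a failed task
    TaskState.SUCCEEDED: set(),             # terminal
    TaskState.CANCELLED: set(),             # terminal
}


class TaskTable:
    def __init__(self) -> None:
        self.states: Dict[str, TaskState] = {}
        self.history: Dict[str, List[TaskState]] = {}  # full status trail per task

    def submit(self, task_id: str) -> bool:
        """Deduplicate by task ID: a repeated submission is ignored."""
        if task_id in self.states:
            return False
        self.states[task_id] = TaskState.PENDING
        self.history[task_id] = [TaskState.PENDING]
        return True

    def transition(self, task_id: str, new_state: TaskState) -> None:
        current = self.states[task_id]
        if new_state not in ALLOWED[current]:
            raise ValueError(f"{task_id}: {current.value} -> {new_state.value} not allowed")
        self.states[task_id] = new_state
        self.history[task_id].append(new_state)
```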

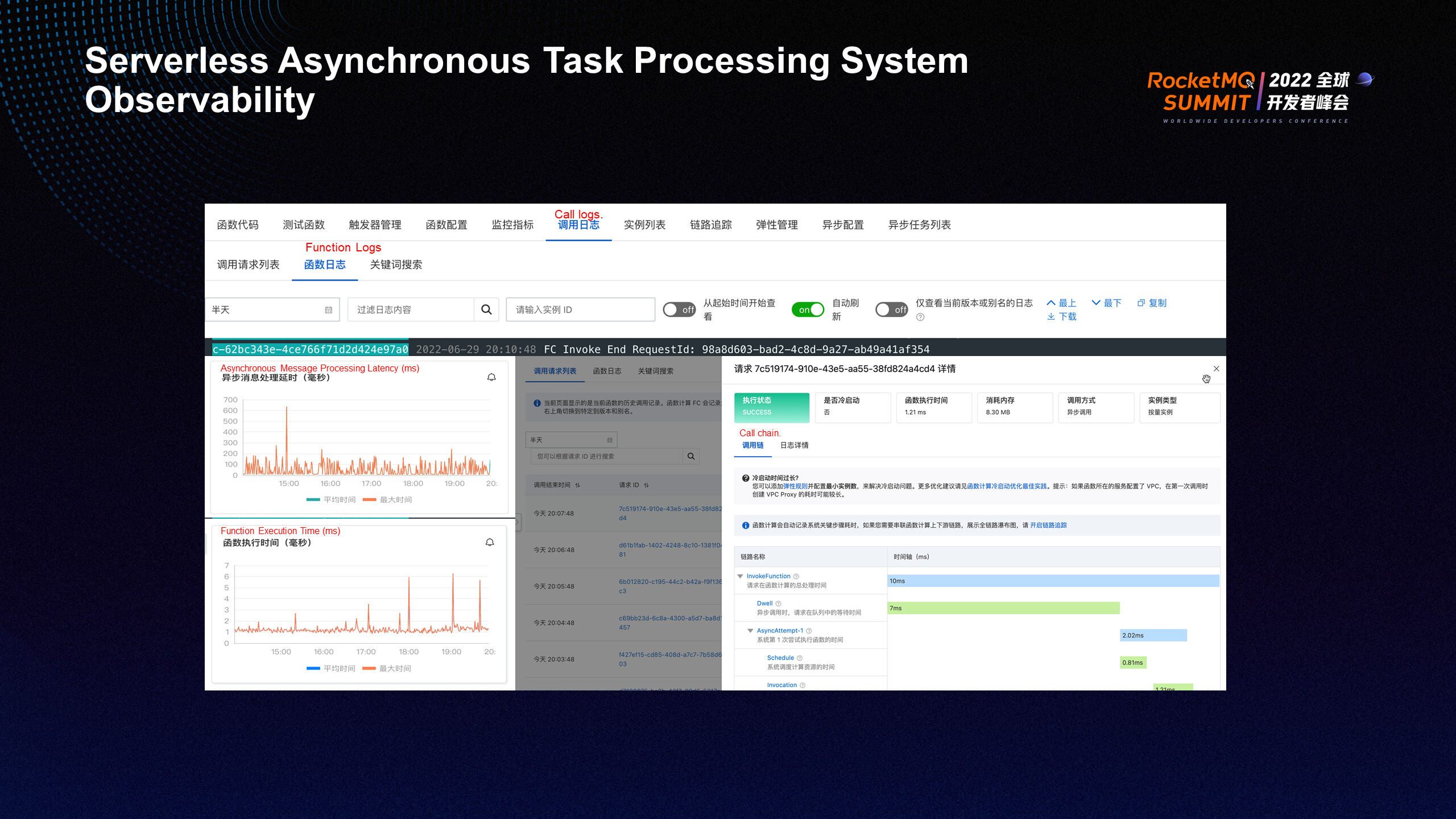

Beyond helping customers submit and execute task requests, a task processing system must also support debugging during task execution and querying task execution status and execution metrics.

The Serverless system provides customers with complete observability, including task request processing and task execution duration. It also provides a query of the list of task execution requests, and users can log on to the execution context of a task by its request ID, which keeps the experience close to traditional O&M habits. In addition, the system displays the time spent in each lifecycle stage during task execution, giving users complete traceability from request to execution.
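Per-stage timing can be derived by recording a timestamp whenever a request, identified by its request ID, enters a lifecycle stage. The stage names and the `RequestTrace` type below are hypothetical; they only illustrate how per-stage durations and end-to-end traceability follow from such records.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class RequestTrace:
    request_id: str
    # (stage name, timestamp in seconds); stage names are illustrative
    events: List[Tuple[str, float]] = field(default_factory=list)

    def mark(self, stage: str, ts: float) -> None:
        self.events.append((stage, ts))

    def stage_durations(self) -> Dict[str, float]:
        """Time spent between consecutive recorded stages, keyed 'from->to'."""
        ordered = sorted(self.events, key=lambda e: e[1])
        return {f"{a}->{b}": t2 - t1 for (a, t1), (b, t2) in zip(ordered, ordered[1:])}

    def total(self) -> float:
        """End-to-end time from the first to the last recorded stage."""
        stamps = [ts for _, ts in self.events]
        return max(stamps) - min(stamps) if stamps else 0.0
```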

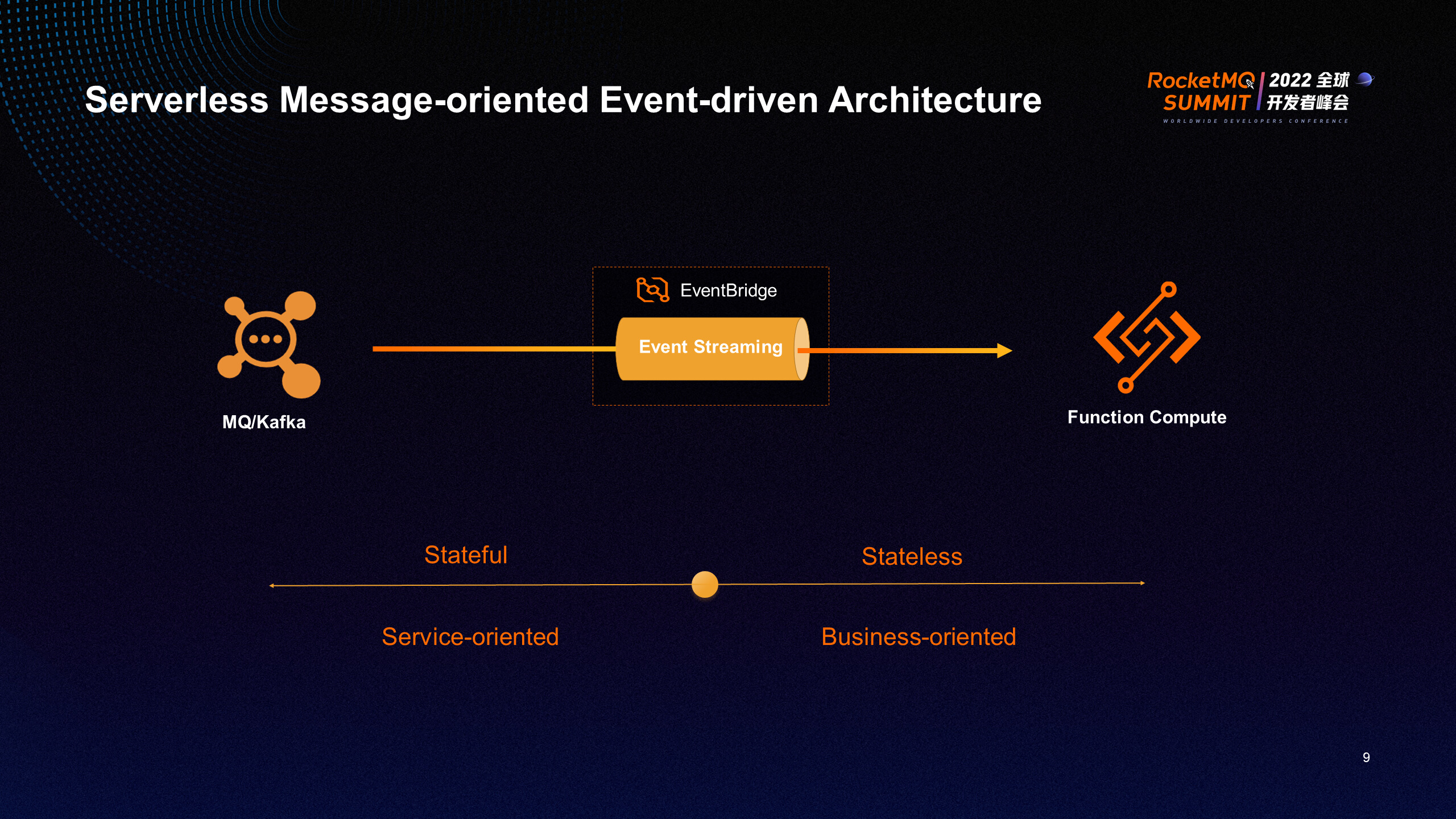

In the Serverless asynchronous task processing system, user requests are first written to asynchronous queues through the gateway. An asynchronous queue is essentially an MQ, and an asynchronous task processing system essentially builds a complete producer-consumer model through a series of distributed consumption policies that consume every request in the queue. Abstracting this model further, a general consumption processing layer can be built not only for Function Compute asynchronous requests but for any MQ system. Customers then do not need to care about how consumption is implemented and only need to provide the business processing logic for each message. The deep integration of EventBridge and Function Compute systematically separates message consumption from message processing, just as the asynchronous task system does, and ultimately delivers Serverless message processing.

With this new Serverless messaging architecture, users do not need to implement the consumer side themselves, nor worry about consumption-related distributed system issues such as load balancing and traffic management. For message business logic and changes to downstream data status, customers only need to implement their business logic in Function Compute to build business systems quickly. This gives customers a new option in Serverless messaging scenarios.

In terms of implementation, the underlying layer is based on the EventStreaming mode. The consumer component pulls messages directly from the message source and delivers them to the target function without passing them through an intermediate Bus layer, which makes message processing efficient. The overall architecture separates stateful message consumption logic from stateless message processing logic.
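The split between stateful consumption and stateless processing can be illustrated as follows: the consumer owns the read offset and pulls batches straight from the source, while the handler (standing in for the target function) only receives payloads. The in-memory list used as the source, the batch size, and the commit-after-success rule are assumptions for this sketch, not details of EventBridge.

```python
from typing import Callable, List


class StreamingConsumer:
    """Stateful side: owns the offset and pulls batches directly from the source."""

    def __init__(self, source: List[str], handler: Callable[[List[str]], None],
                 batch_size: int = 2) -> None:
        self.source = source          # stand-in for a message source such as an MQ topic
        self.handler = handler        # stateless processing logic (the "function")
        self.batch_size = batch_size
        self.offset = 0               # committed position in the source

    def poll_once(self) -> int:
        """Pull one batch, hand it to the handler, and commit on success."""
        batch = self.source[self.offset:self.offset + self.batch_size]
        if not batch:
            return 0
        self.handler(batch)          # delivered directly, no intermediate bus
        self.offset += len(batch)    # commit only after the handler returns
        return len(batch)
```

Because the offset advances only after the handler returns, a failed batch is pulled again on the next poll, which gives at-least-once delivery under these assumptions.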

With its flexible extensibility and rich connectivity, the Function Compute system can quickly help customers build business systems. However, it also places requirements on customers: for example, they need to write code following the functional programming paradigm and the Function Compute operating model. In short, customers need to transform and adapt their business systems to the Serverless architecture.

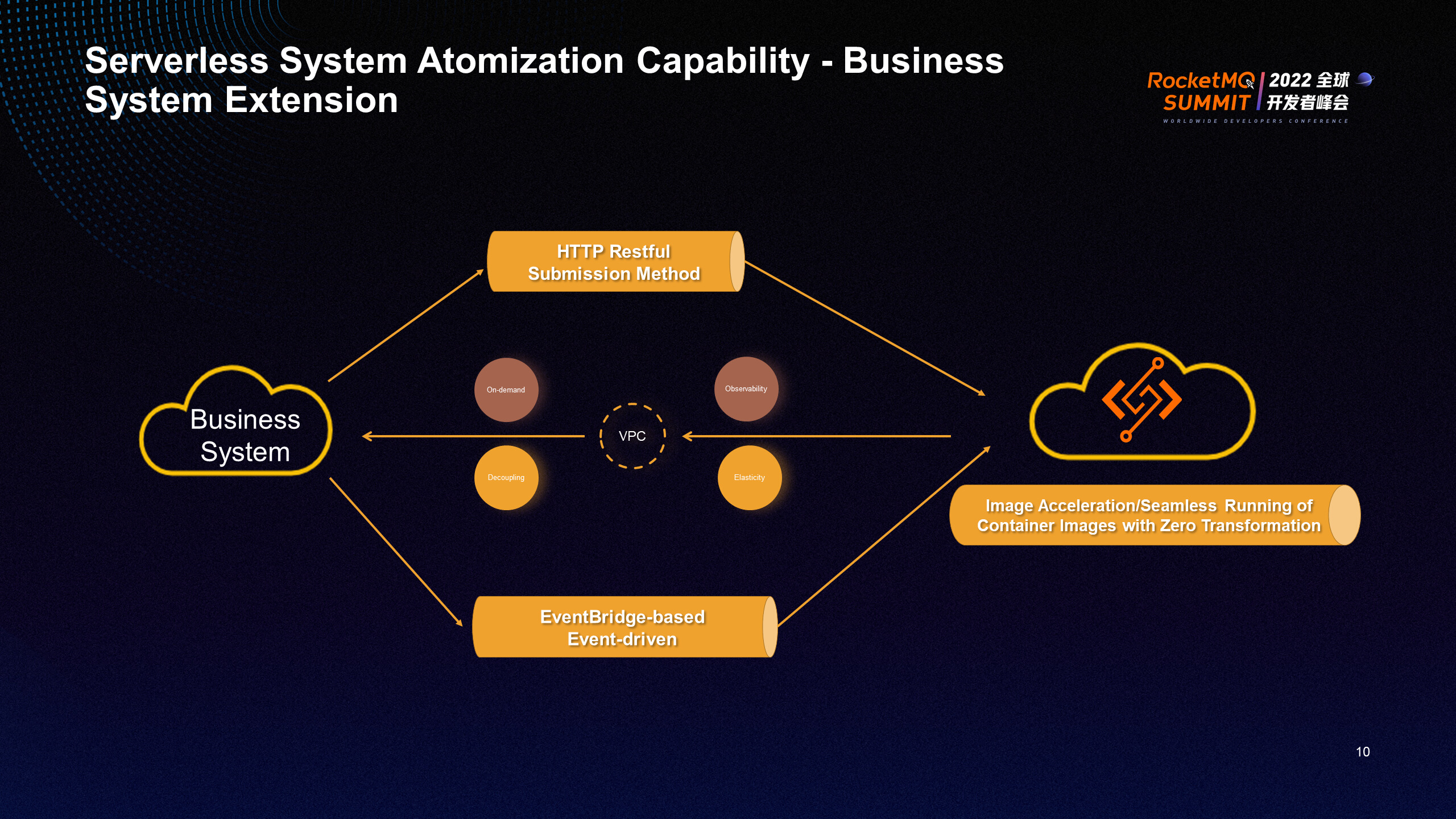

Beyond the agile development and flexible extensibility it provides at the micro level, we believe the real value of the Function Compute system lies in packaging its capabilities as atomic building blocks. At the macro level, these can serve as out-of-the-box extensions of customer systems in the public cloud, letting enterprise system architectures benefit from the resource elasticity and flexible extensibility of Function Compute. To help enterprises extend their business systems with the atomic capabilities of Serverless, we have implemented simpler access methods that support Serverless access and Serverless execution environments.

At the request submission level, Function Compute provides HTTP access: customers only need to integrate the URL provided by a function into their systems to submit tasks to the Function Compute engine in the public cloud. In addition, Function Compute builds a common PaaS event-driven capability on EventBridge: customer business systems can deliver relevant events to this common infrastructure and trigger the execution of business logic through EventBridge.
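Submitting a task through a function's HTTP URL amounts to an ordinary HTTP request. The sketch below uses only Python's standard library to build such a POST; the URL and the JSON payload shape are placeholders, and any headers Function Compute may require (for example, for authentication or asynchronous invocation) are not shown and would need to be taken from the product documentation.

```python
import json
import urllib.request
from typing import Any, Dict


def build_task_request(function_url: str, task: Dict[str, Any]) -> urllib.request.Request:
    """Build (but do not send) a POST that submits one task to a function's HTTP URL."""
    body = json.dumps(task).encode("utf-8")
    return urllib.request.Request(
        function_url,  # placeholder: use the URL the function actually exposes
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )
```

Sending it is then a matter of `urllib.request.urlopen(req, timeout=10)`, with retries and error handling as the calling system requires.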

Serverless provides multiple access methods for running business systems. For example, it supports running traditional custom images: without modifying their images, customers can manage their tasks and the related images on Function Compute after simple configuration, which gives business systems fast access. Flexible and rapid system extensibility is the goal of today's cloud-native architecture.
