By Li Kang

This article mainly describes the checkpoint mechanism, backpressure mechanism, and memory model of Flink, which form the basis of Flink optimization. It then explains how to troubleshoot Flink jobs and analyzes some tuning policies based on the memory model.

Flink periodically persists state to provide fault tolerance and exactly-once semantics. This persistence process is called a checkpoint: a snapshot of the global state of a Flink job at a given moment.

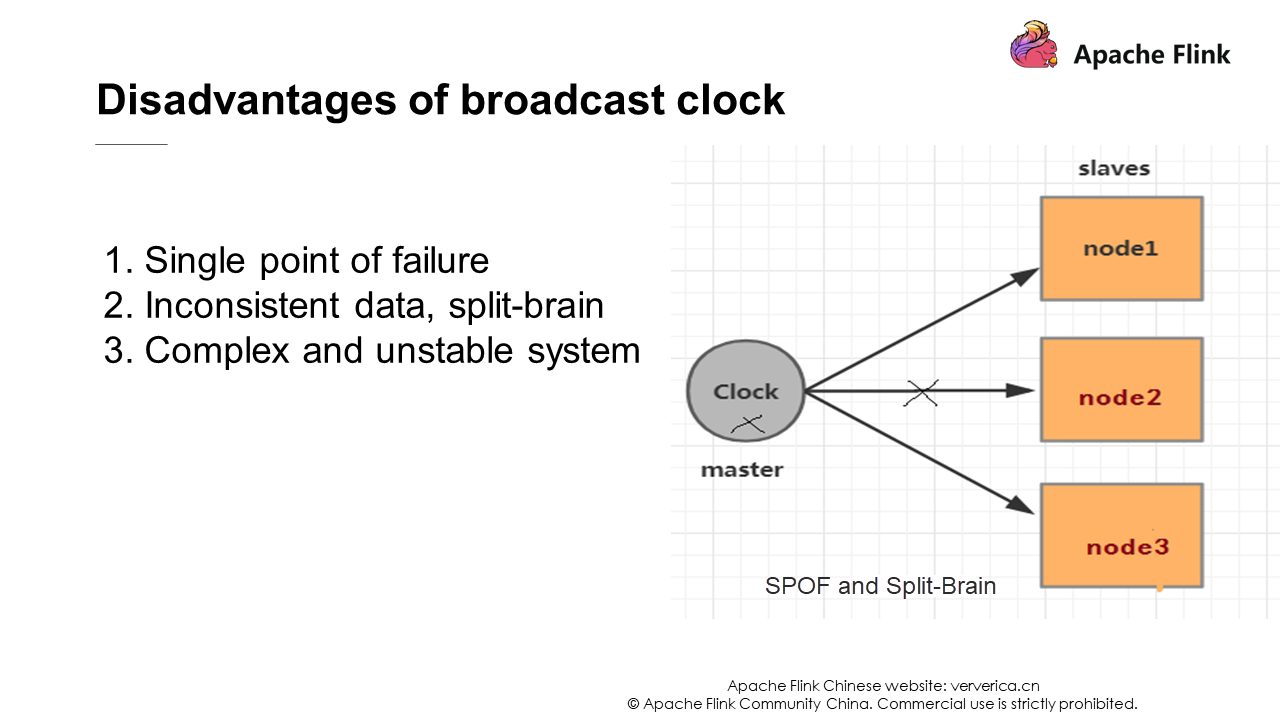

To snapshot the global state of a distributed system, the traditional solution introduces a unified clock. The primary node broadcasts the clock to every secondary node, and each node records its current state when it receives the clock.

However, the unified clock method has problems of its own. Suppose a node has a long GC pause, or the network between the primary node and a secondary node fluctuates and delays or drops the clock signal. That secondary node then becomes inconsistent with the other nodes, which can eventually lead to split-brain. Avoiding this requires a high availability (HA) solution for the primary and secondary nodes. However, the more complex a system is, the less stable it tends to be and the higher its maintenance costs.

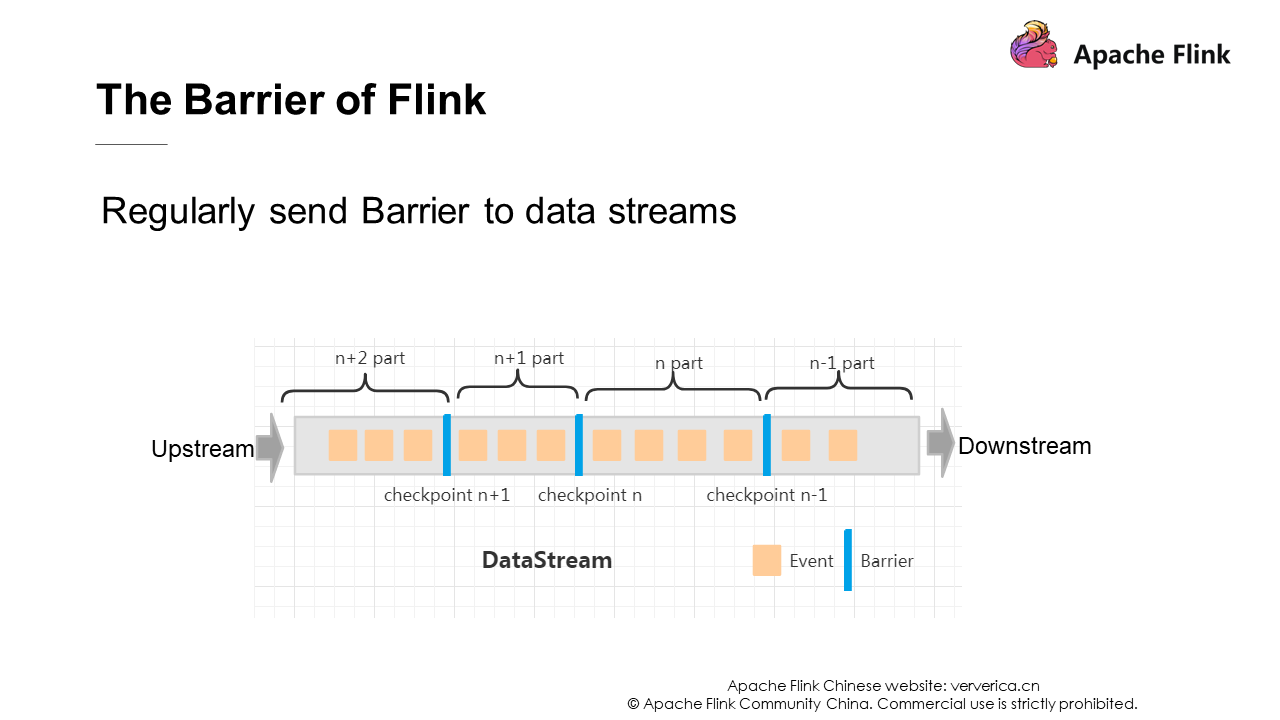

Flink instead injects markers called barriers into the data stream to delimit checkpoints.

The preceding figure shows an example of barriers. Each time a barrier (blue in the figure) passes through a task, that task saves a snapshot of its state. This proceeds from the first upstream task to the last downstream task. The following example explains this briefly.

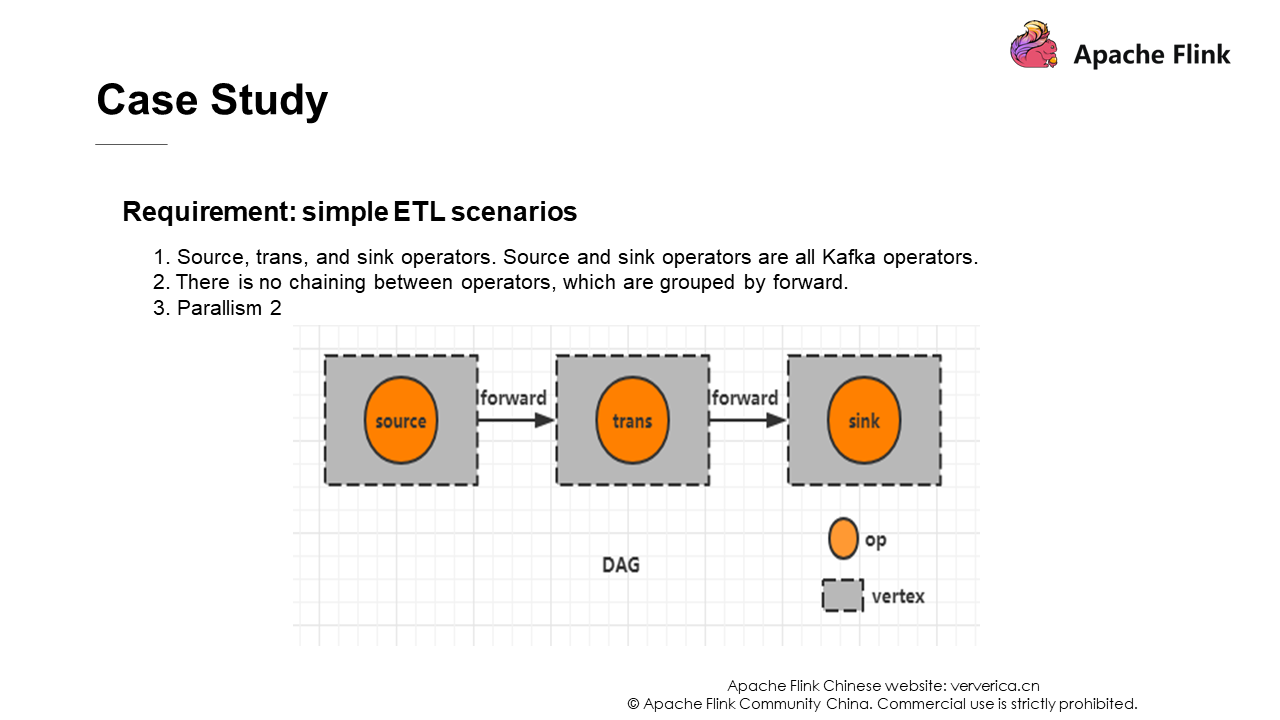

This is a simple extract, transform, load (ETL) job. It reads data from Kafka, transforms it, and writes the result to a downstream Kafka.

In this example, operator chaining is not applied. The forward strategy is used: the output of each task is sent to exactly one downstream task as its input. The advantage is that, if both tasks run in the same JVM, unnecessary network overhead is avoided.

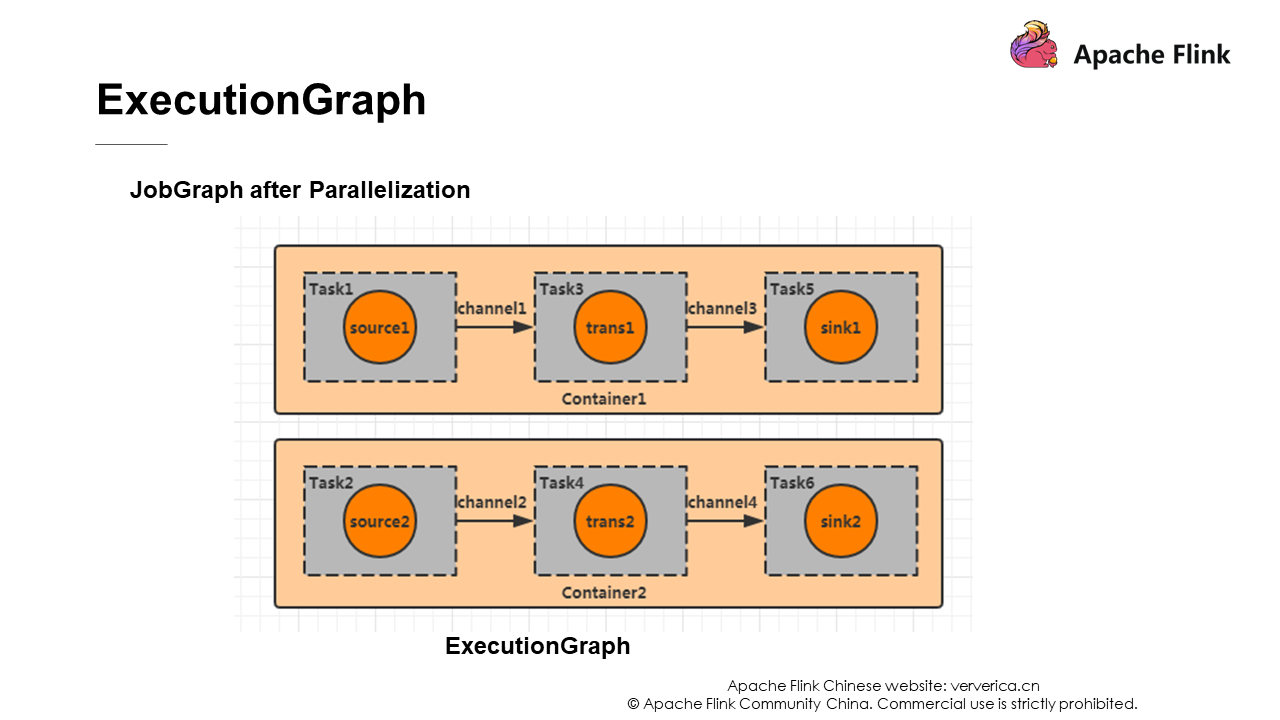

The parallelism is set to 2. The following figure shows the resulting DAG.

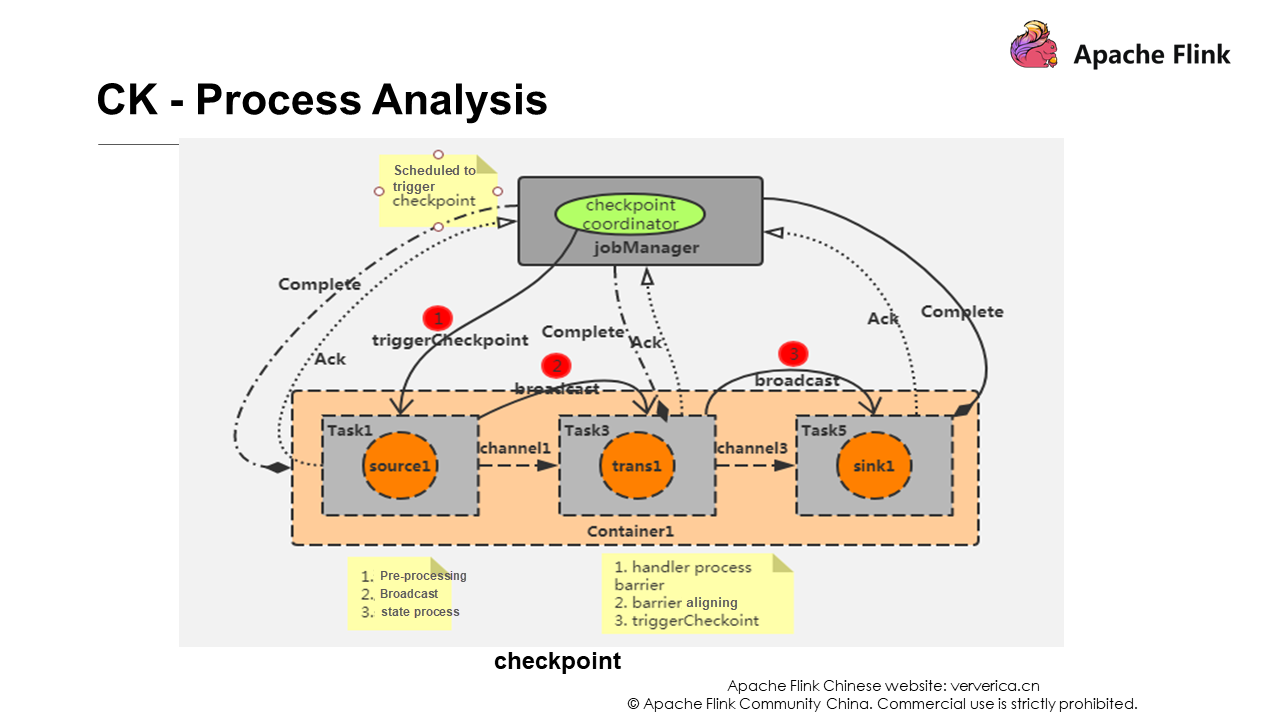

Each Flink job has a JobManager, which contains a checkpoint coordinator that manages the entire checkpoint process. You can configure the interval at which the checkpoint coordinator sends a checkpoint trigger to the source task in each container. The source tasks are Task 1 and Task 2 in the ExecutionGraph shown in the figure.

When a source operator receives a barrier, it pauses data processing and takes a snapshot of its current state. It saves the snapshot to the configured persistent storage and finally sends an acknowledgment (ack) to the checkpoint coordinator asynchronously. Meanwhile, the source operator broadcasts the barrier to all its downstream operators and then resumes data processing.

Each downstream operator takes its snapshot in the same way and forwards the barrier downstream, until the barrier finally reaches the sink operator. At that point, the snapshot is complete. Note that an operator may have multiple upstream inputs. In this case, the operator takes its snapshot only after it has received the barrier from all of its inputs. In the meantime, records that arrive on inputs whose barrier has already been received are buffered. This waiting is called barrier alignment; if alignment takes a long time, the checkpoint also takes a long time.
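Barrier alignment can be made concrete with a minimal single-process simulation in Python. This is not Flink code. It assumes that each input channel is a list containing exactly one barrier marker and that the operator polls its inputs round-robin. The names `BARRIER` and `align_barriers` are hypothetical.

```python
from collections import deque

BARRIER = "<barrier>"  # hypothetical marker standing in for checkpoint barrier n


def align_barriers(channels):
    """Simulate aligned checkpointing for one operator with several inputs.

    channels maps a channel name to the items it delivers, in order; each
    channel must contain exactly one BARRIER. Returns two lists: records
    processed before the snapshot and records processed after it.
    """
    for name, items in channels.items():
        if items.count(BARRIER) != 1:
            raise ValueError(f"channel {name!r} needs exactly one barrier")
    queues = {name: deque(items) for name, items in channels.items()}
    blocked = set()        # channels whose barrier has already arrived
    snapshot_taken = False
    before, after = [], []
    # Poll inputs round-robin as a stand-in for real arrival order.
    while any(queues.values()):
        for name, queue in queues.items():
            if not queue:
                continue
            if name in blocked and not snapshot_taken:
                continue   # hold back records behind this channel's barrier
            item = queue.popleft()
            if item == BARRIER:
                blocked.add(name)
                if blocked == set(queues):
                    # Barriers from all inputs: snapshot state, forward barrier.
                    snapshot_taken = True
                continue
            (after if snapshot_taken else before).append(item)
    return before, after
```

Consider `align_barriers({"a": ["a1", BARRIER, "a2"], "b": ["b1", "b2", BARRIER, "b3"]})`. It returns `["a1", "b1", "b2"]` as processed before the snapshot and `["a2", "b3"]` after it. Record `a2` is already waiting in channel `a` while `b2` is being processed, yet it is held back until the snapshot. That waiting is the alignment time that can lengthen a checkpoint.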

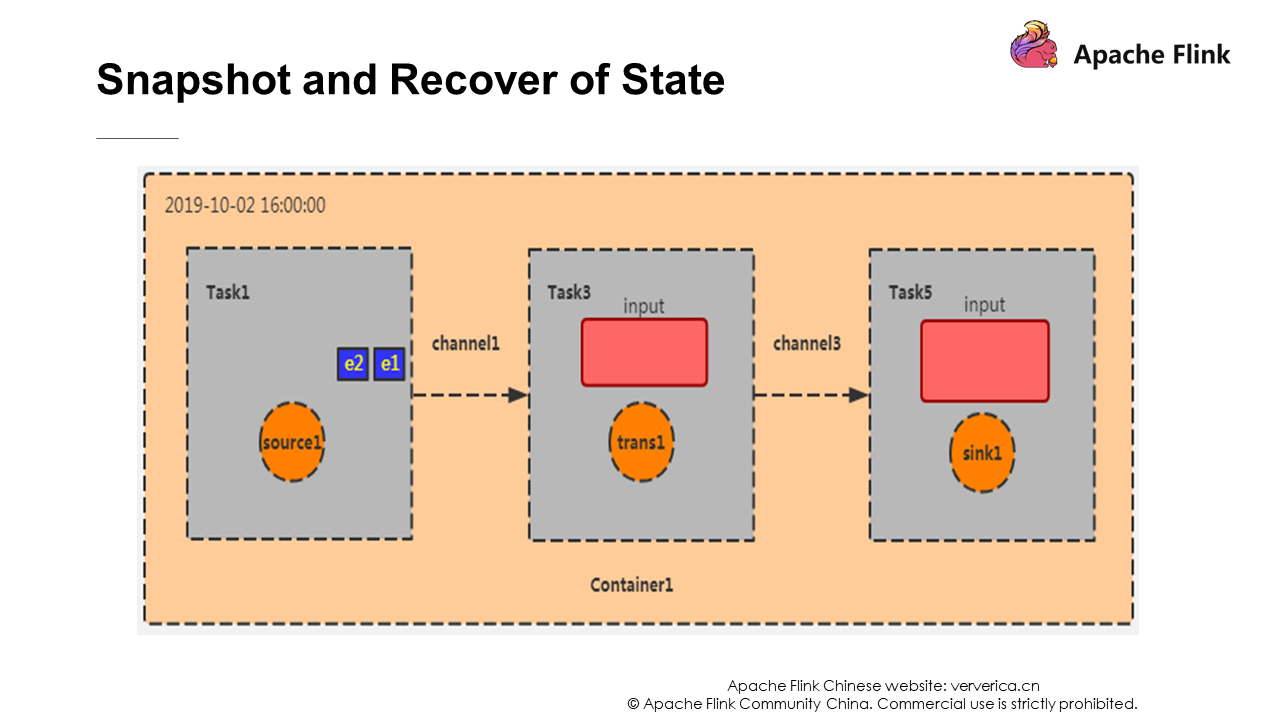

The preceding figure shows the initialization stage of the container. In Task 1, e1 and e2 are records that have just been consumed from Kafka. At the same time, the checkpoint coordinator sends a barrier to Task 1.

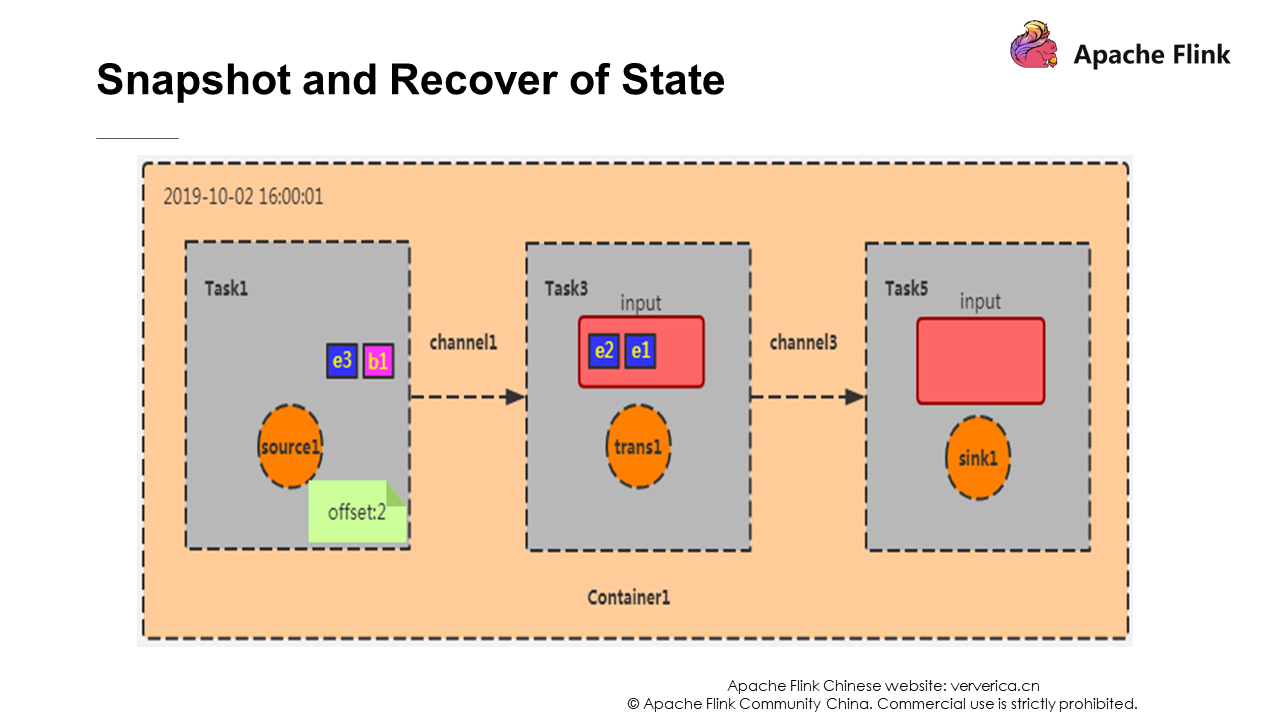

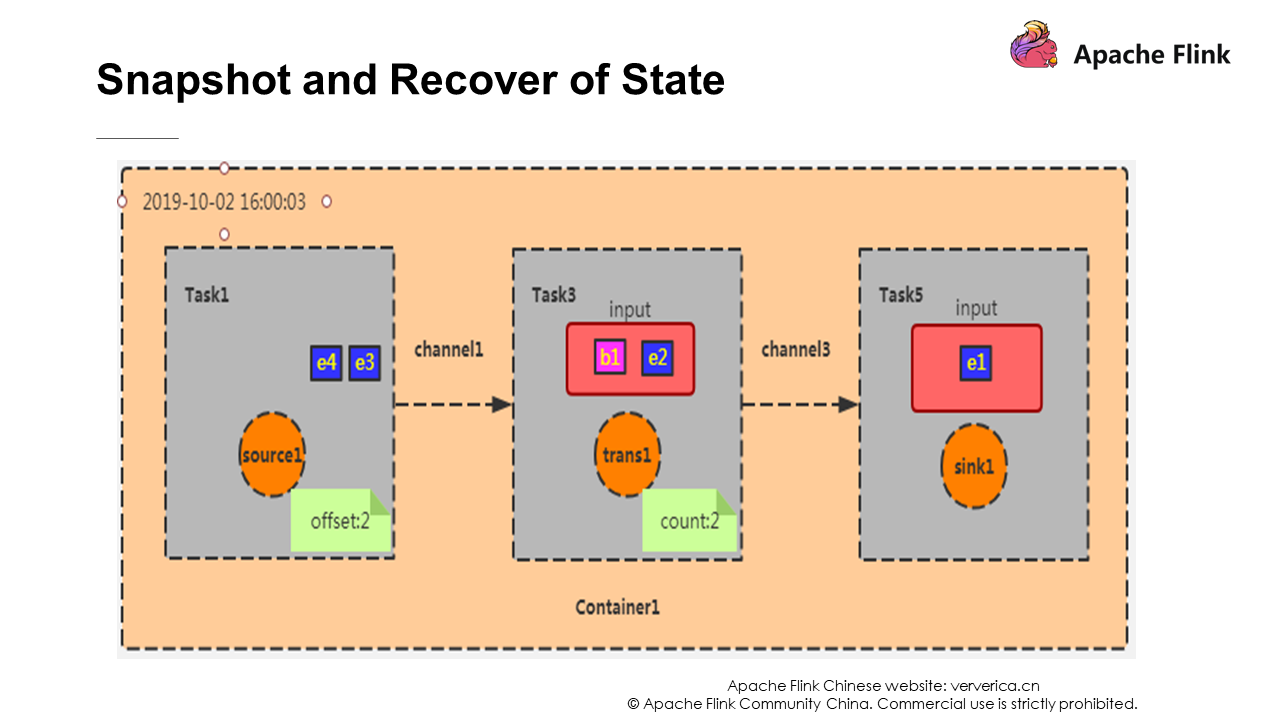

At this point, Task 1 has completed its checkpoint. It records the offset as 2 (e1 and e2 have been consumed) and then broadcasts the barrier to its downstream operators. The input of Task 3 is the output of Task 1. Suppose that this program counts the number of records. Task 3's checkpoint then records a count of 2, because it has received e1 and e2 from Task 1. Task 3 in turn broadcasts the barrier to its downstream operators. When the barrier reaches the sink operator, the snapshot is complete.

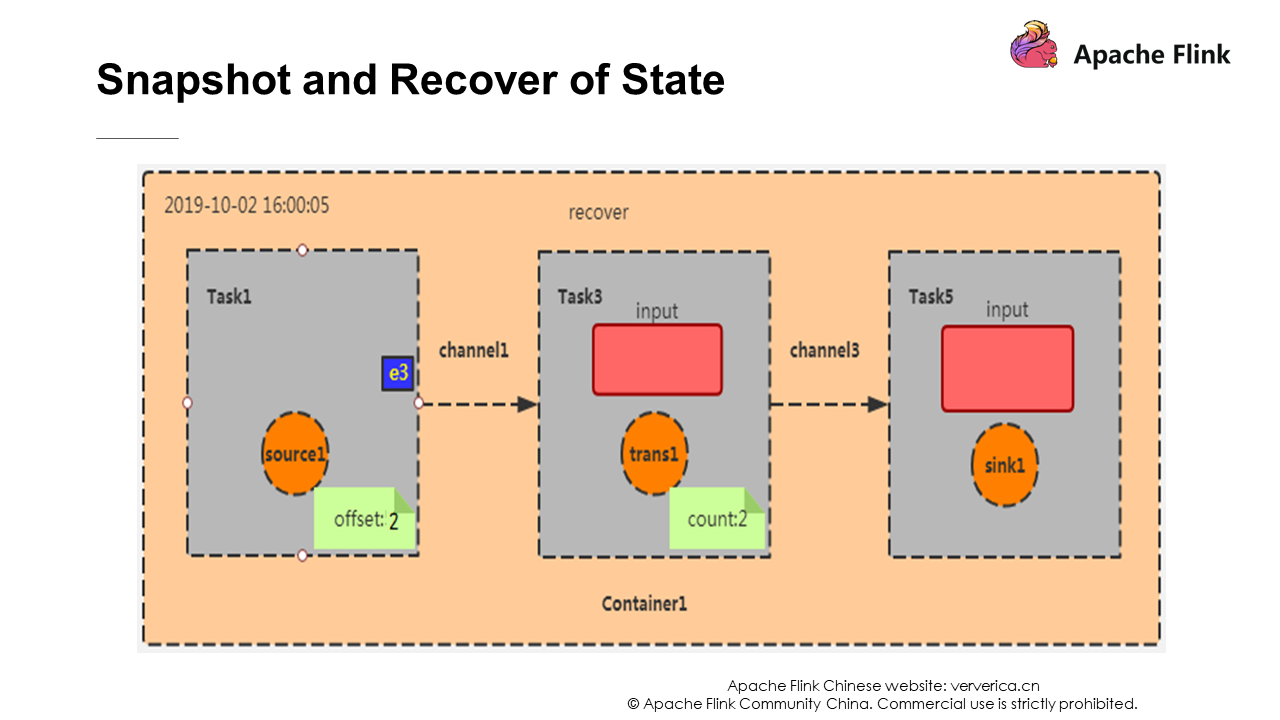

Data is continuously generated at the source, and new checkpoints keep being taken. If the container goes down and restarts, the state must be restored. The most recently completed checkpoint records an offset of 2 and a count of 2, and recovery starts from this state: Task 1 resumes consumption from e3. This is the recovery operation.
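The offset/count example can be replayed in a toy Python model. This is a sketch under strong simplifying assumptions. Processing is synchronous, so no records are in flight between Task 1 and Task 3 when a checkpoint is taken. Side effects on the downstream Kafka sink are not modelled. The class `ToyPipeline` and its methods are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ToyPipeline:
    """Hypothetical model of Task 1 (Kafka source) feeding Task 3 (a counter)."""
    log: list                  # stands in for one Kafka partition
    offset: int = 0            # Task 1 state: records consumed so far
    count: int = 0             # Task 3 state: records counted so far
    checkpoints: list = field(default_factory=list)

    def process(self, n):
        """Consume up to n records from the log and count each one."""
        for _ in range(n):
            if self.offset >= len(self.log):
                break
            self.offset += 1   # source reads the next record
            self.count += 1    # downstream counter sees it

    def checkpoint(self):
        # Processing is synchronous here, so when the barrier passes Task 1
        # and then Task 3 no record is in flight between them; both values
        # describe the same consistent cut of the stream.
        self.checkpoints.append({"offset": self.offset, "count": self.count})

    def crash_and_recover(self):
        """Discard live state and restore the last completed checkpoint."""
        last = self.checkpoints[-1]
        self.offset, self.count = last["offset"], last["count"]
        # The next record the source will read after recovery.
        return self.log[self.offset] if self.offset < len(self.log) else None
```

Start with a log of `e1` through `e5`, then call `process(2)`, `checkpoint()`, and `process(2)`. After the crash, `crash_and_recover()` rolls the state back to offset 2 and count 2 and returns `"e3"`. Reprocessing the remaining three records then ends with a count of 5, so no record is counted twice.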

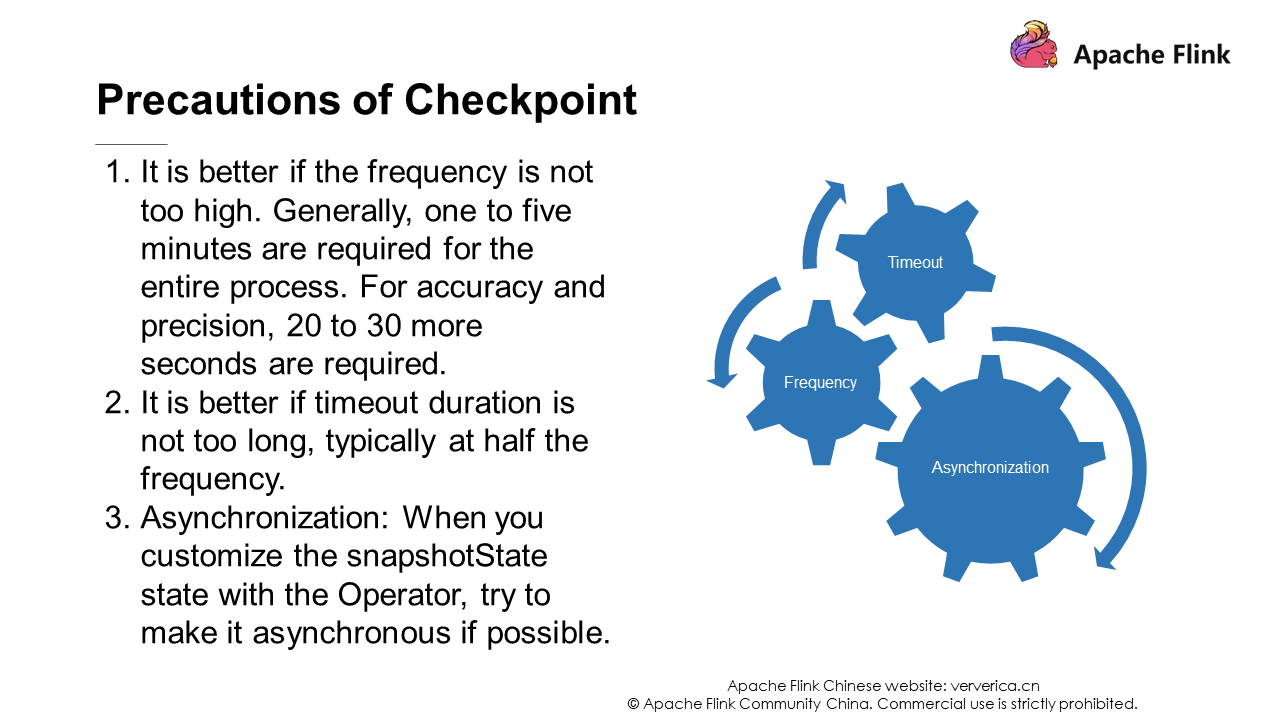

The following three factors affect system throughput, so pay attention to them during development:

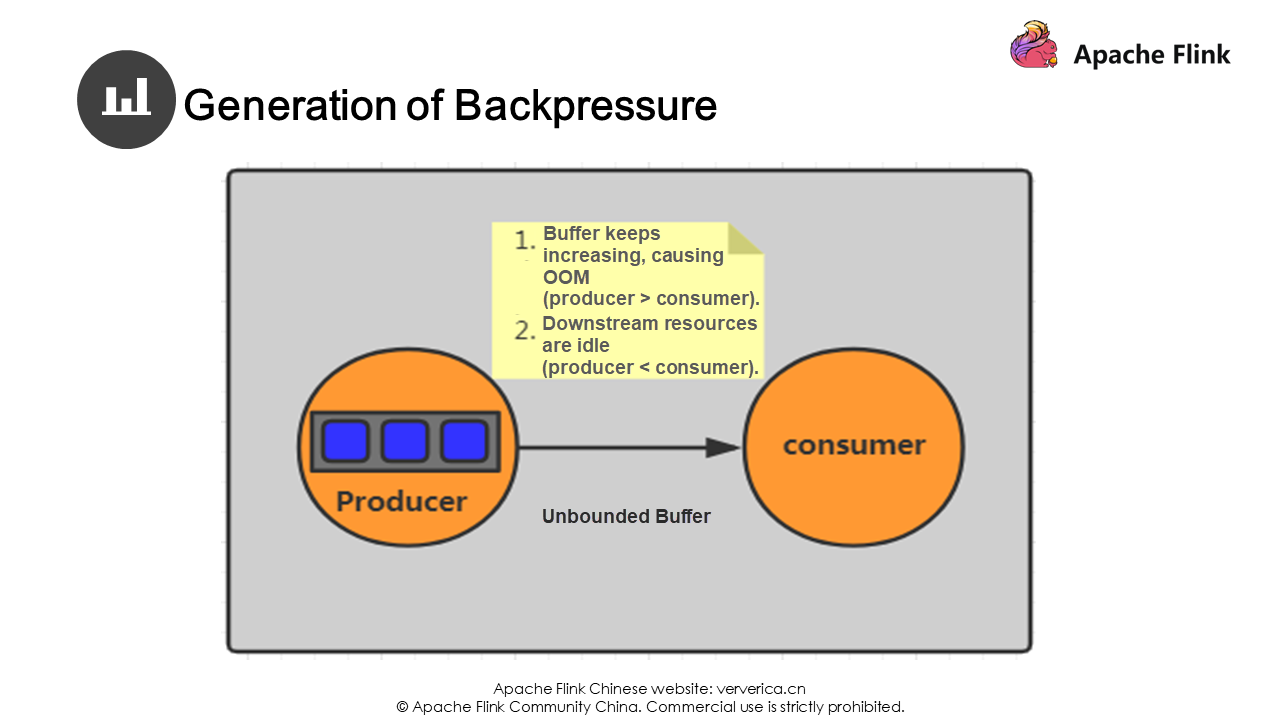

In distributed systems, data usually needs to be exchanged between multiple JVMs and multiple tasks. We use producers and consumers to illustrate this.

Assume that the producer uses an unbounded buffer. If the producer produces data much faster than the consumer can consume it, data keeps accumulating on the producer side and eventually causes an out-of-memory (OOM) error.

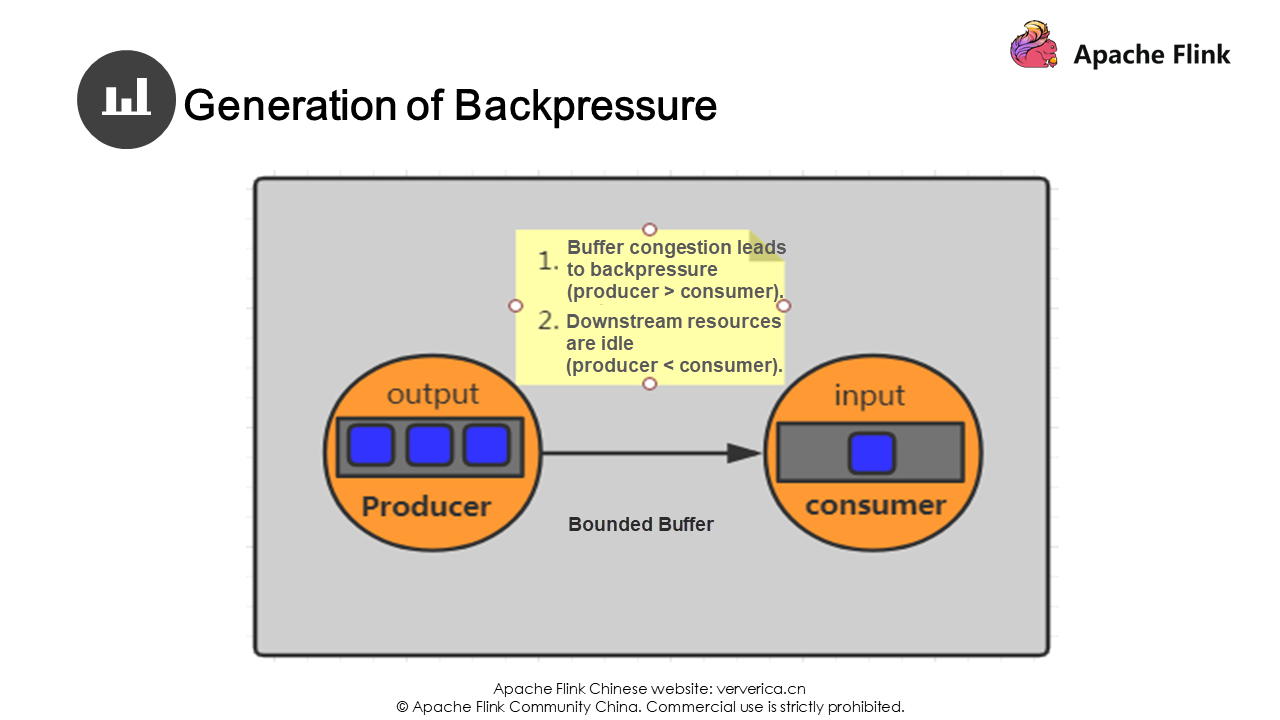

A bounded buffer prevents OOM, but when it fills up because the consumer is slow, the producer has to stop producing. This does not completely solve the problem, so flow control needs to adapt to the actual situation.
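The difference between the two buffers can be shown with a small discrete-time model in Python. This is an illustrative sketch, not Flink code. The function name `simulate` and the per-tick rates are hypothetical, and `capacity=None` stands for an unbounded buffer.

```python
def simulate(ticks, produce_rate, consume_rate, capacity=None):
    """Discrete-time producer/consumer model.

    capacity=None means an unbounded buffer. Returns a tuple of
    (records still buffered at the end, total records produced).
    """
    buffered = produced = 0
    for _ in range(ticks):
        # The producer writes as much as the buffer allows in this tick.
        if capacity is None:
            room = produce_rate
        else:
            room = min(produce_rate, capacity - buffered)
        buffered += room
        produced += room
        # The consumer drains at its own, slower pace.
        buffered -= min(consume_rate, buffered)
    return buffered, produced
```

In this run the producer writes 3 records per tick and the consumer reads 1 per tick. With no bound, `simulate(100, 3, 1)` ends with 200 buffered records, and the backlog keeps growing toward OOM. With `capacity=5` the buffer never holds more than 5 records. After the first two ticks, however, the producer is throttled to one record per tick: it has produced only 104 records in total. This throttling is backpressure.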

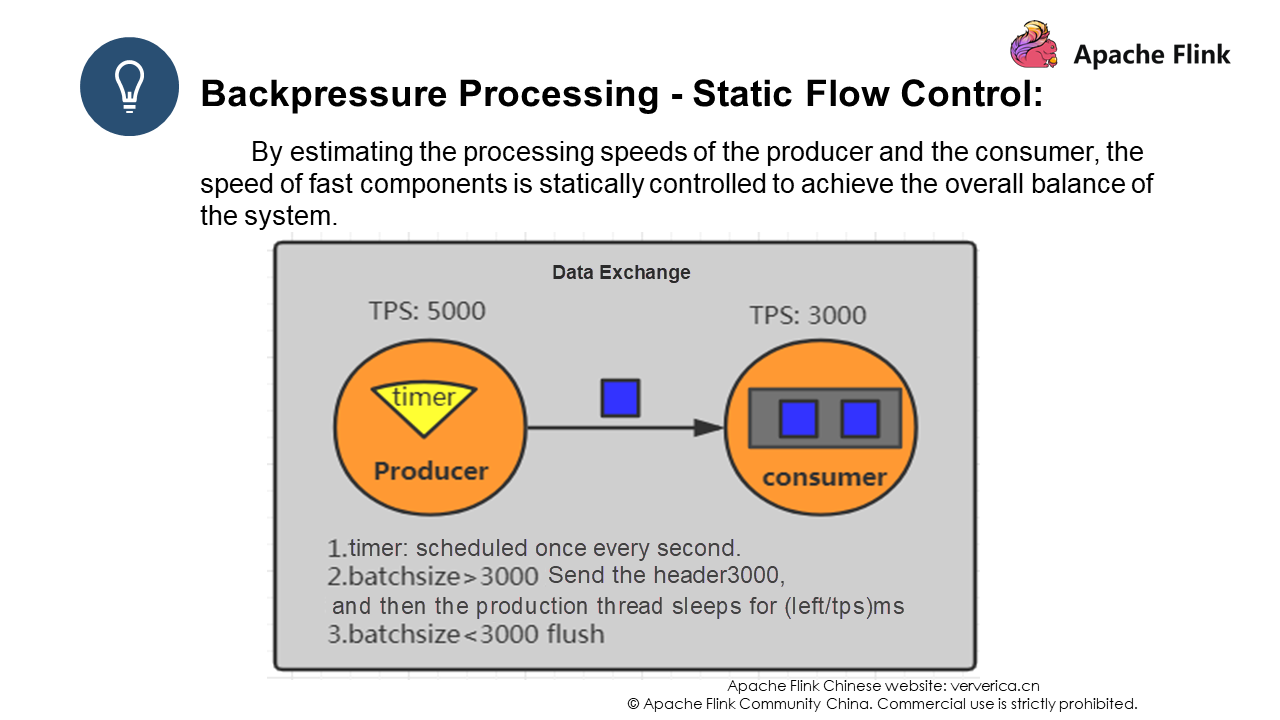

Flink uses bounded buffers to exchange data between different TaskManagers. Flow control between a producer and a consumer can be either static or dynamic.

In short, static flow control works as follows. When the producer has a higher TPS than the consumer, the producer packs data into batches and sends one batch at a time. After each transmission, it sleeps for a period calculated as Left / TPS, where Left is the amount of remaining data. However, it is difficult to estimate the system's actual situation in advance.
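The sleep rule can be sketched in Python. This is a hypothetical sketch, not Flink code. It assumes that "Left" is the batch just sent, still waiting at the consumer, and that TPS is a fixed estimate of the consumer's throughput. The function name and its parameters are illustrative.

```python
import time


def send_with_static_flow_control(records, batch_size, consumer_tps, send,
                                  sleep=time.sleep):
    """Send records in batches and pause Left / TPS seconds after each one.

    send is a caller-supplied function that ships one batch; sleep can be
    replaced in tests. Returns the list of pauses, in seconds.
    """
    pauses = []
    for start in range(0, len(records), batch_size):
        batch = records[start:start + batch_size]
        send(batch)
        left = len(batch)              # data the consumer still has to process
        pause = left / consumer_tps    # static estimate: Left / TPS
        pauses.append(pause)
        sleep(pause)
    return pauses
```

Sending 2,500 records in batches of 1,000 to a consumer estimated at 500 records per second gives pauses of 2.0, 2.0, and 1.0 seconds. The weakness is that `consumer_tps` is fixed in advance. If the consumer slows down, the pauses are too short and data piles up anyway.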

In Flink versions earlier than 1.5, flow control was implemented on top of the TCP sliding window, which was covered in a previous course. Flink 1.5 replaced this mechanism with credit-based flow control, so the TCP-based approach is not explained here. In the TCP-based model, the producer node can only decide whether to send data by checking whether the current channel is writable. It does not know the actual available capacity of the downstream consumer. As a result, by the time the producer finds that the channel is no longer writable, a large amount of data may already have accumulated at the downstream consumer.

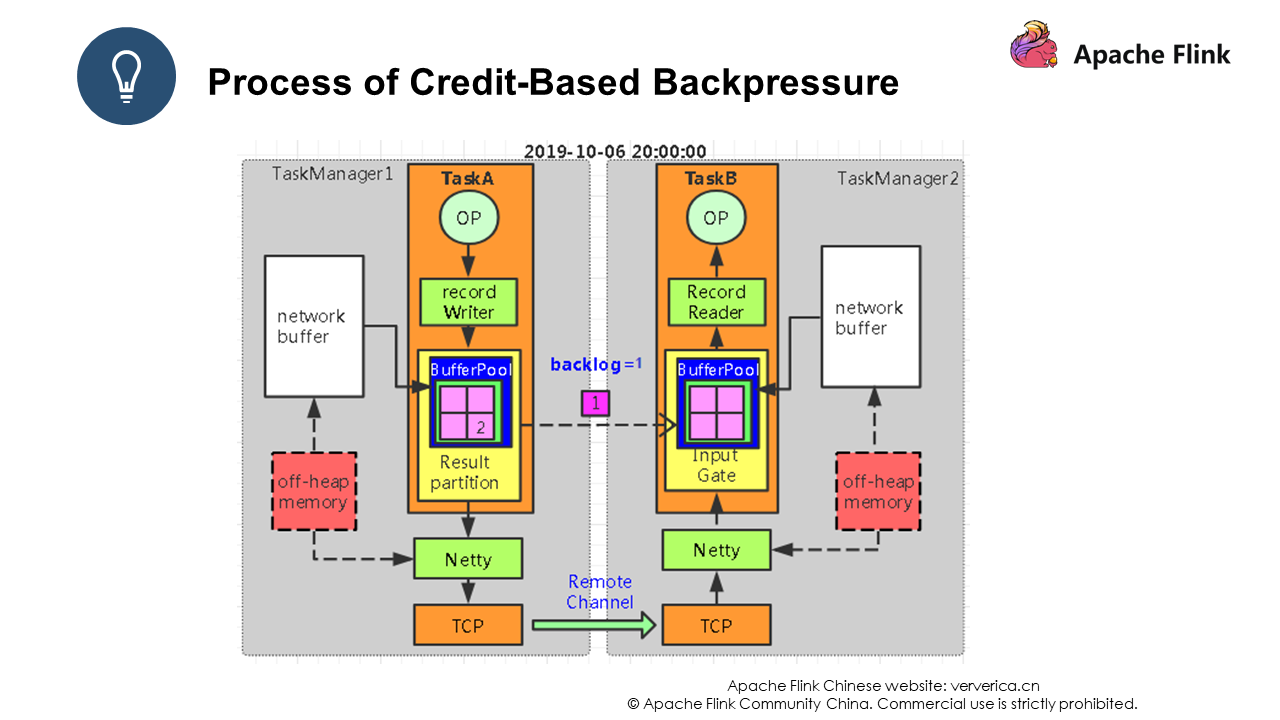

Let's use the following example to explain credit-based data exchange:

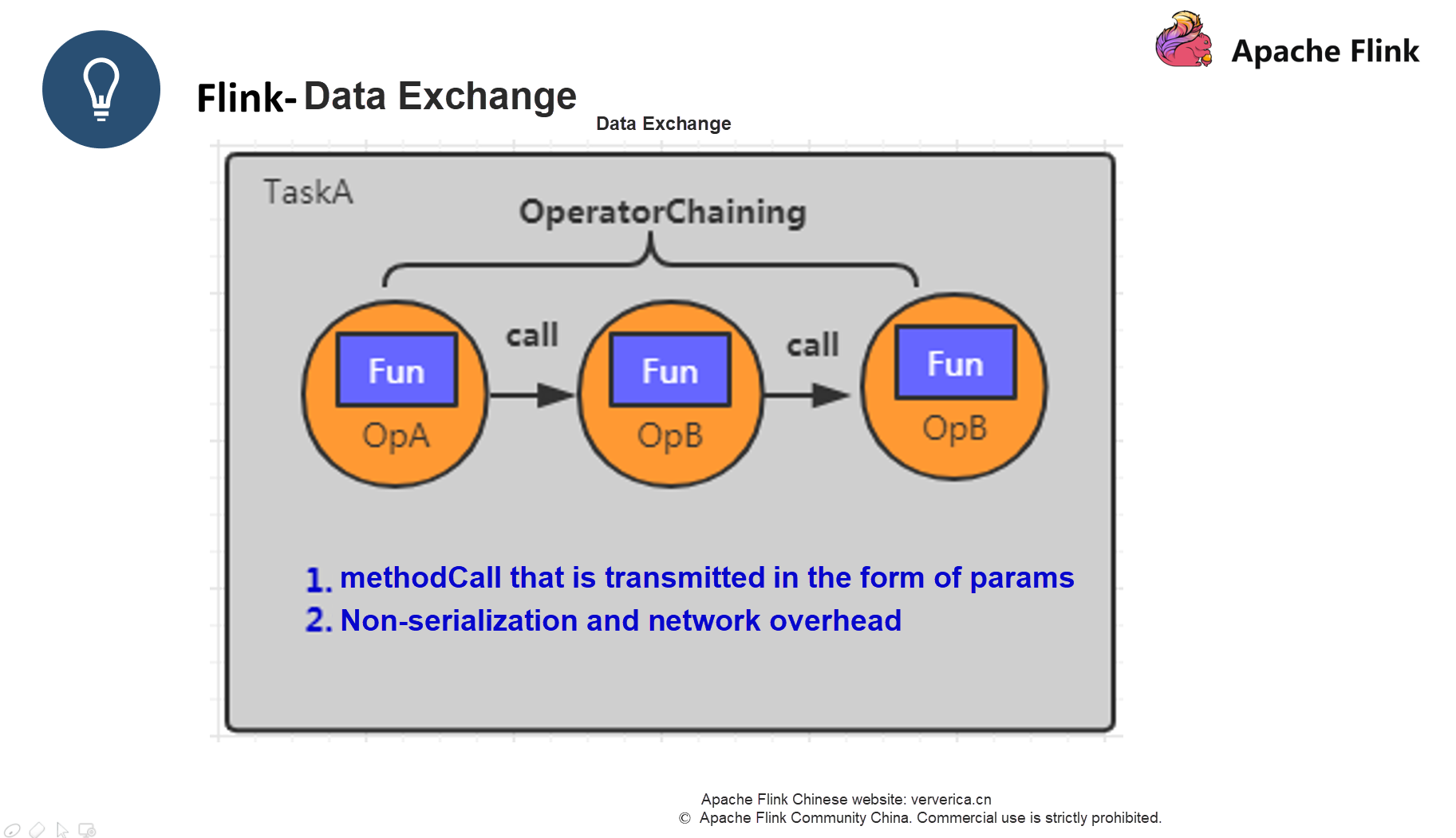

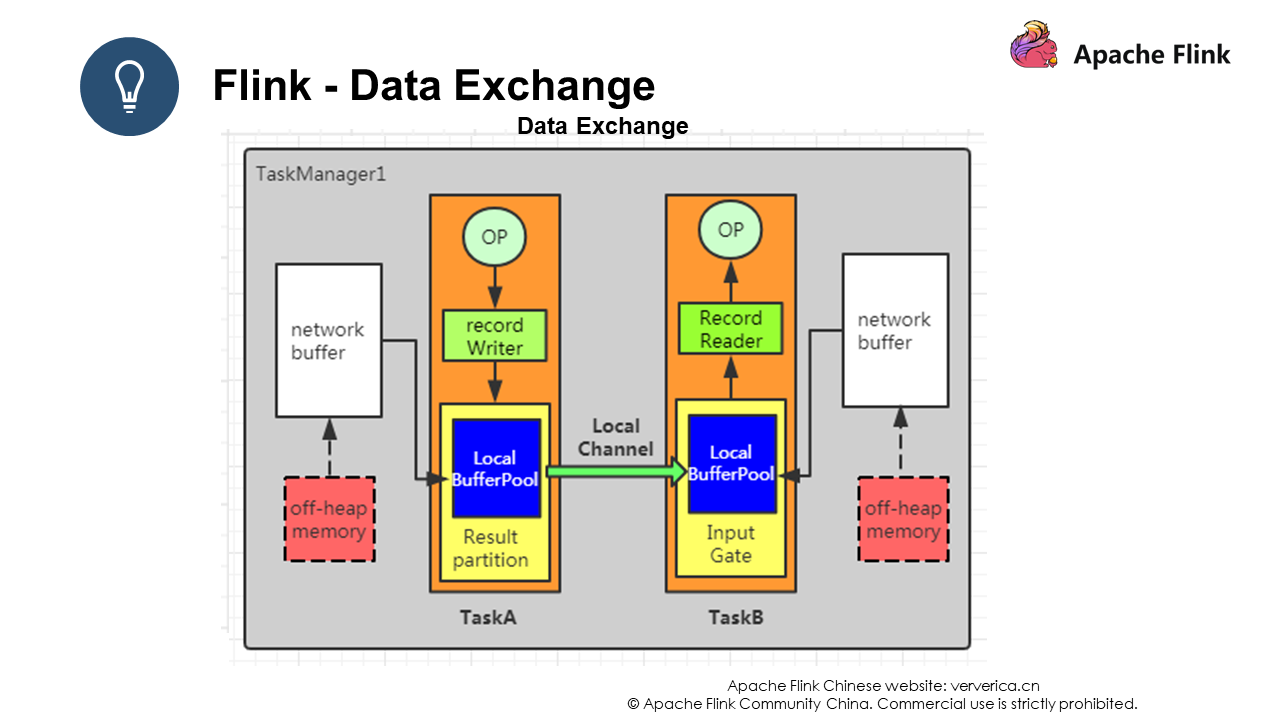

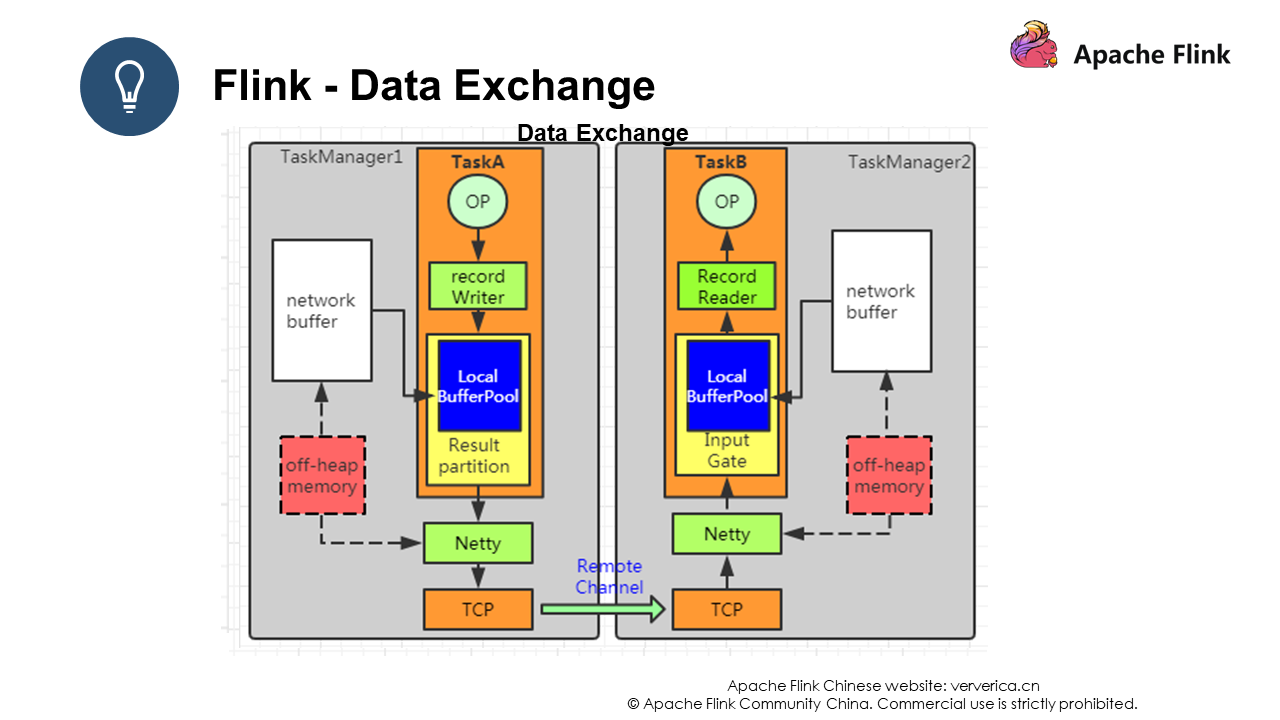

There are three types of data exchange in Flink: data exchange within a task, data exchange between tasks within a JVM, and data exchange between JVMs.

Data exchange within a task is implemented by using the forward strategy mentioned earlier, which avoids serialization and network overhead.

The second method exchanges data through a Record Writer. The Record Writer serializes the data and writes it to a Result Partition. The data is then passed through a Local Channel to the Input Gate of the other task, deserialized, and handed to the Record Reader for processing.

The third method exchanges data between different JVMs and therefore incurs network overhead. Unlike the second method, data is first pushed to Netty, which then sends it to the remote task.

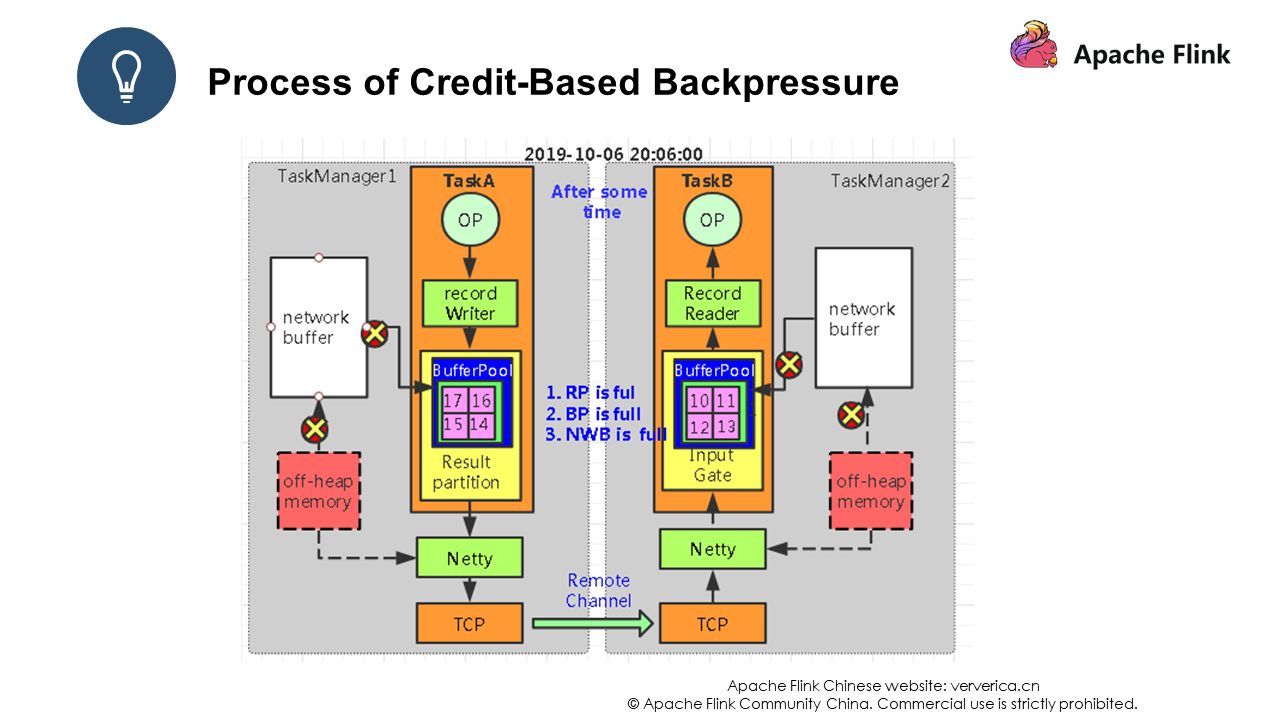

As the figure shows, event 1 has been pushed to Task B together with a backlog of 1. The backlog lets the consumer see how much data is waiting on the producer side.

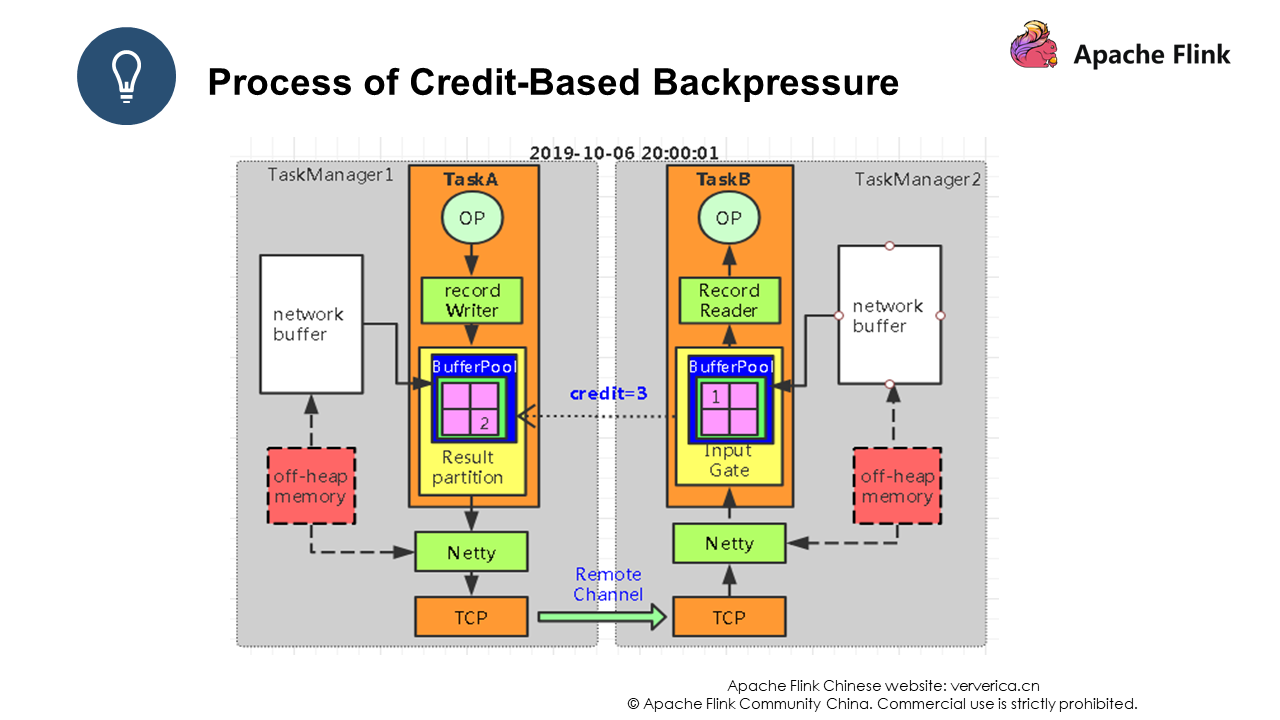

After receiving event 1, Task B returns an ack and a credit of 3 to Task A. The credit tells Task A how many buffers Task B can currently receive. In this way, Flink lets the producer and the consumer perceive each other's status.

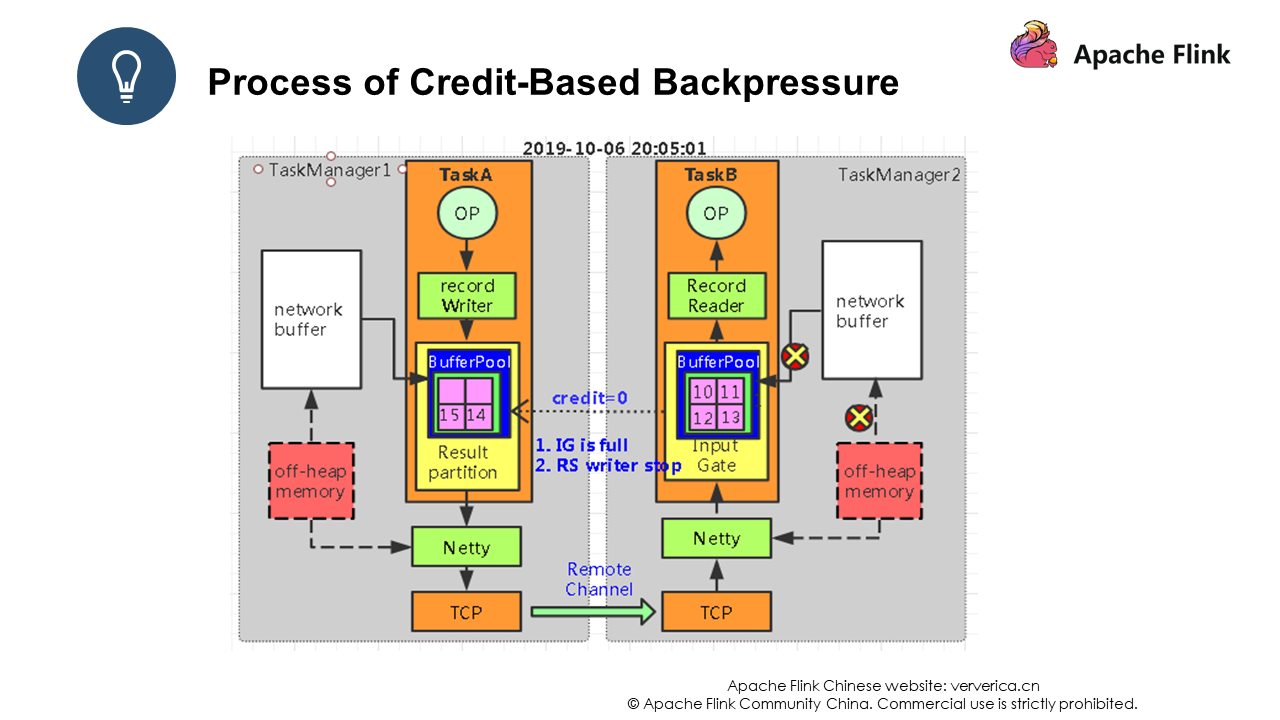

After a while, the bounded buffer in Task B fills up, and Task B replies to Task A with a credit of 0. The channel then stops working, and Task A no longer sends data to Task B.

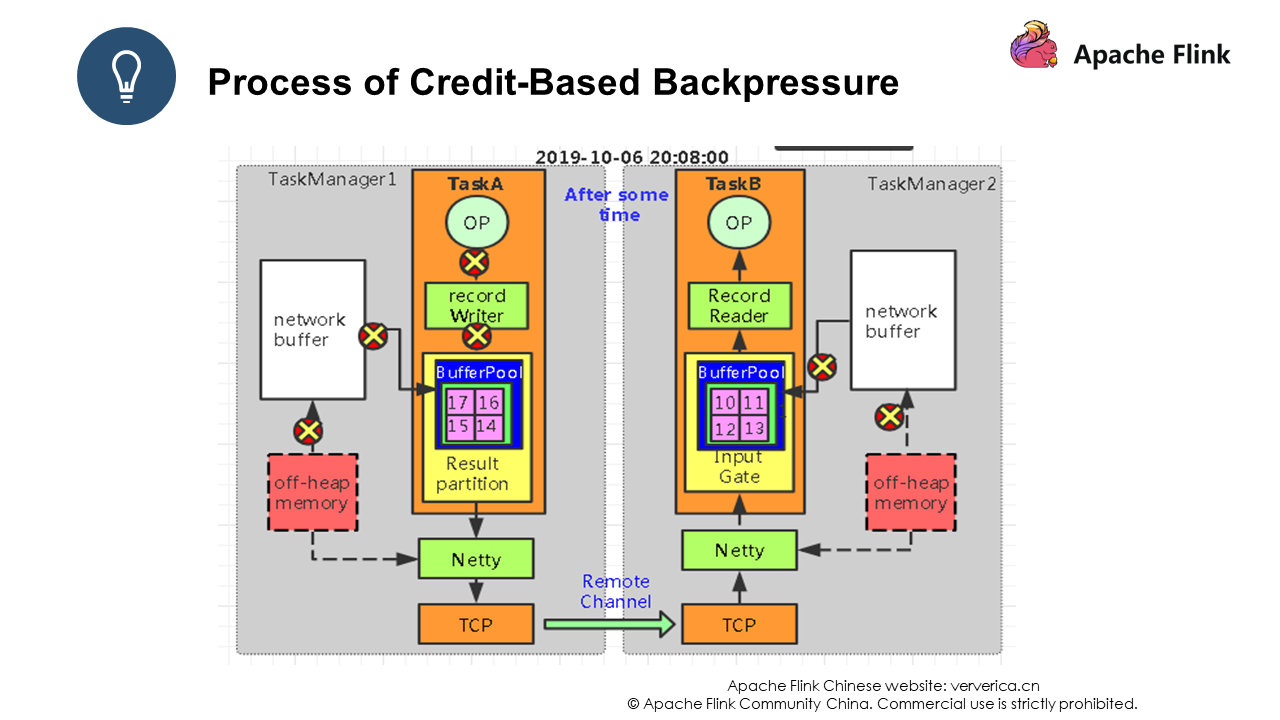

After a further while, data also backs up in the bounded buffer of Task A. This causes the common symptoms of backpressure, such as decreased throughput and increased processing latency, because the entire system has stalled. In Figure 2, all processes are marked with "X", indicating that all of them have stopped working.
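The credit exchange walked through above can be sketched in Python for a single channel. This is a simplified model, not Flink's implementation. Credit is counted in buffers, and the receiver has a fixed number of buffers for the channel. In Flink, the backlog also helps the receiver decide whether to request extra (floating) buffers; that part is not modelled. The classes `Sender` and `Receiver` are hypothetical.

```python
from collections import deque


class Receiver:
    """Consumer side (Task B): a bounded pool of buffers for one channel."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.queue = deque()
        self.last_backlog = 0

    def accept(self, buf, backlog):
        assert len(self.queue) < self.capacity, "sender ignored its credit"
        self.queue.append(buf)
        self.last_backlog = backlog  # lets the consumer see producer-side pressure

    def process(self, n):
        """Process up to n buffers and return how much credit they free."""
        done = min(n, len(self.queue))
        for _ in range(done):
            self.queue.popleft()
        return done


class Sender:
    """Producer side (Task A): only sends while it holds credit."""

    def __init__(self, items):
        self.pending = deque(items)
        self.credit = 0

    def add_credit(self, n):
        self.credit += n

    def send(self, receiver):
        """Send as many buffers as the current credit allows."""
        sent = 0
        while self.pending and self.credit > 0:
            self.credit -= 1
            buf = self.pending.popleft()
            receiver.accept(buf, backlog=len(self.pending))
            sent += 1
        return sent
```

The following run uses a sender with eight buffers and a receiver with room for three:

1. After the initial credit of 3, the sender ships three buffers and reports a backlog of 5.
2. Its next `send` ships nothing, because the credit is exhausted. The five remaining buffers stay queued on the producer side, which is where backpressure builds up.
3. Once the receiver processes two buffers, it returns two credits, and the sender resumes with exactly two more buffers.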

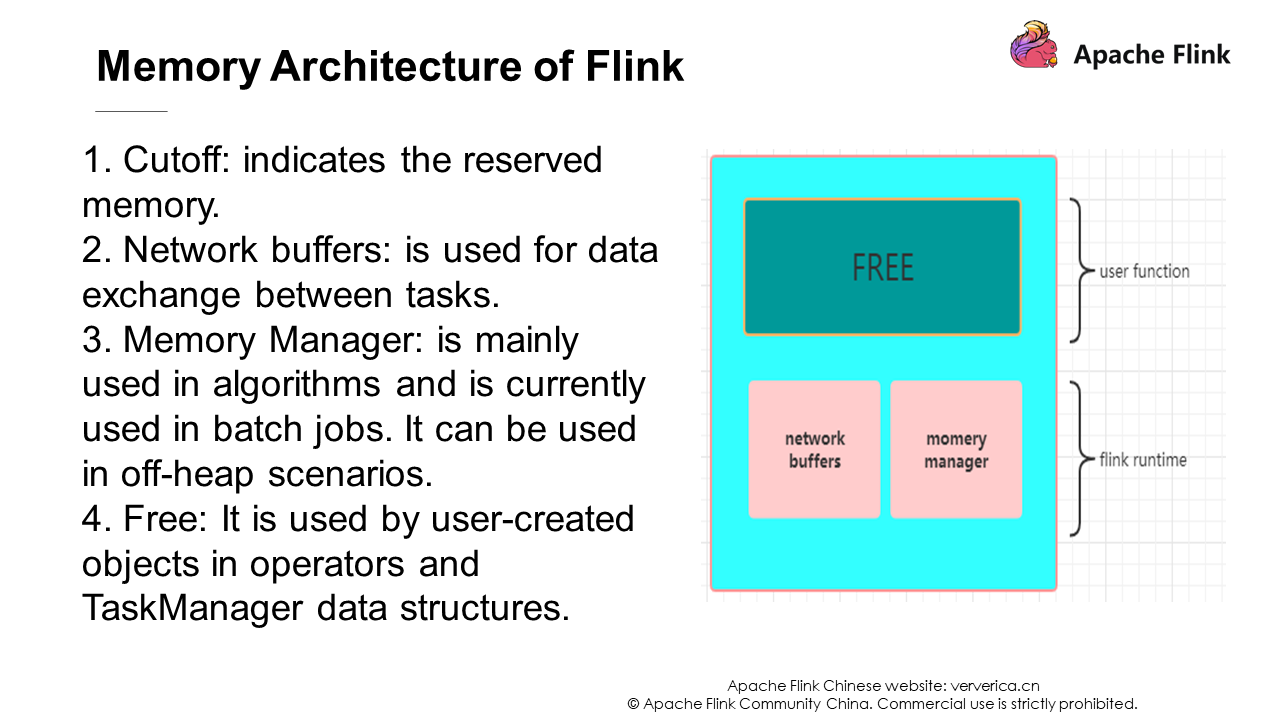

The JVM is a very complex system, and insufficient memory can cause OOM errors that crash the system. After Flink obtains its allocated memory, it reserves a cutoff portion to keep the system safe. Network buffers correspond to the bounded buffers mentioned earlier. The memory manager manages a memory pool that can be configured as on-heap or off-heap memory; in streaming jobs it is usually set to off-heap. The remaining free memory is available to user code.

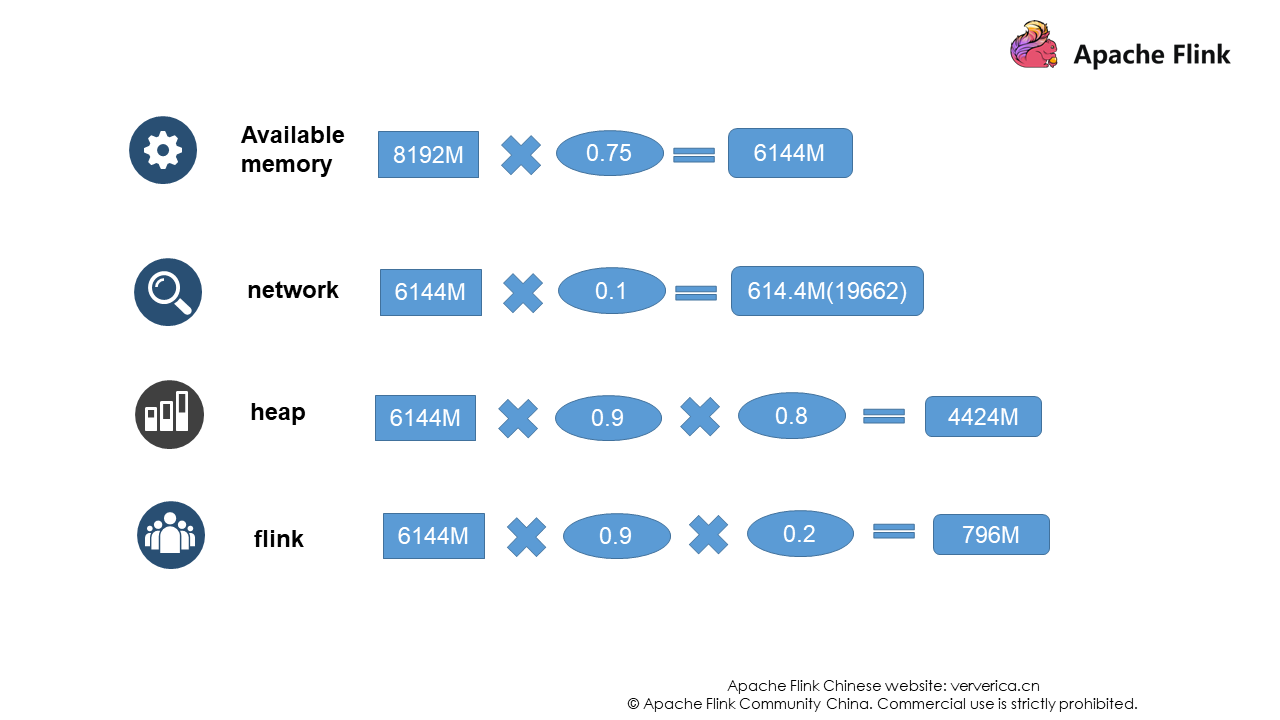

Now assume that the memory allocated to this TaskManager is 8 GB.

In practice, however, Flink works in the opposite direction: it first determines the size of the heap memory and then derives the sizes of the other memory regions from it.
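The arithmetic for the 8 GB example can be sketched as a simple top-down split. This is a hypothetical illustration, not Flink's actual formula. The function name and the three ratios are assumptions:

- a 25% cutoff,
- 10% for network buffers,
- a managed-memory fraction of 0.3, in line with the tuning advice later in this article.

Flink also applies minimum and maximum bounds to these regions, which are omitted here.

```python
def split_taskmanager_memory(total_mb, cutoff_ratio=0.25,
                             network_fraction=0.1, managed_fraction=0.3):
    """Illustrative top-down split of a TaskManager's memory (hypothetical ratios)."""
    cutoff = total_mb * cutoff_ratio                 # reserved safety margin
    usable = total_mb - cutoff
    network = usable * network_fraction              # network (bounded) buffers
    managed = (usable - network) * managed_fraction  # memory manager pool
    free = usable - network - managed                # left for user code
    return {"cutoff": cutoff, "network": network,
            "managed": managed, "free": free}
```

With the assumed ratios, `total_mb=8192` yields:

- a 2048 MB cutoff,
- about 614 MB of network buffers,
- about 1659 MB of managed memory,
- about 3871 MB for user code.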

"Check the backpressure, checkpoint, and metrics in sequence. The latency and throughput are the core problems. Pay close attention to the amount of resources. Troubleshooting starts with GC."

This is a commonly used "formula" for locating problems in Flink jobs. When you troubleshoot, check the backpressure first. Then check whether checkpoint alignment takes a long time and whether the state is large, because both are closely related to system throughput. "Metrics" refers to the metrics shown in the Flink UI. The main items to check are latency, throughput, system resources, and GC logs.

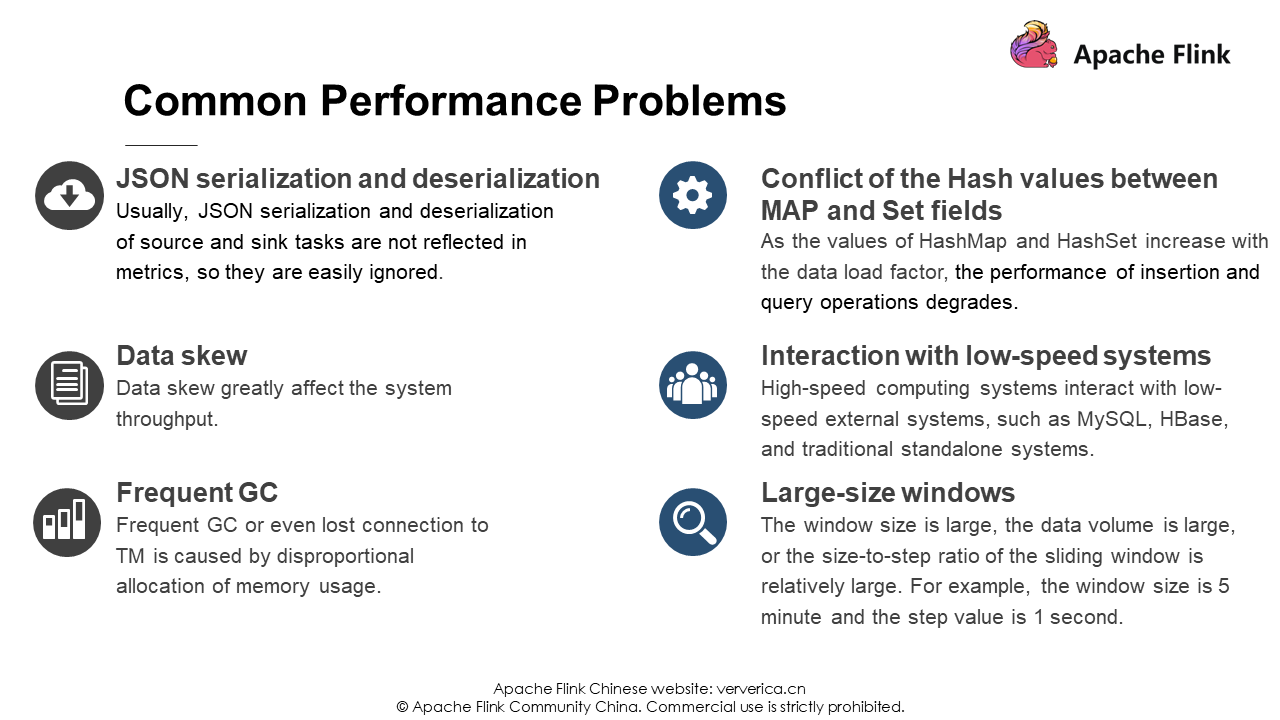

The following briefly explains common performance problems:

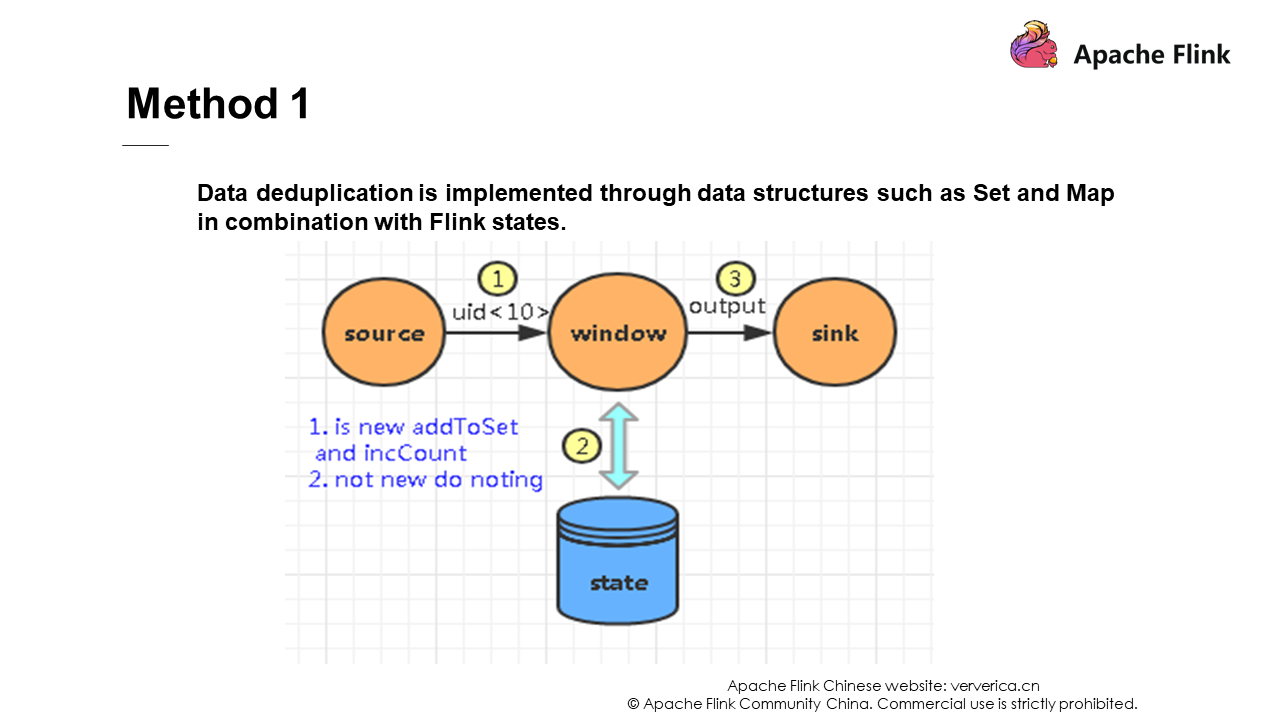

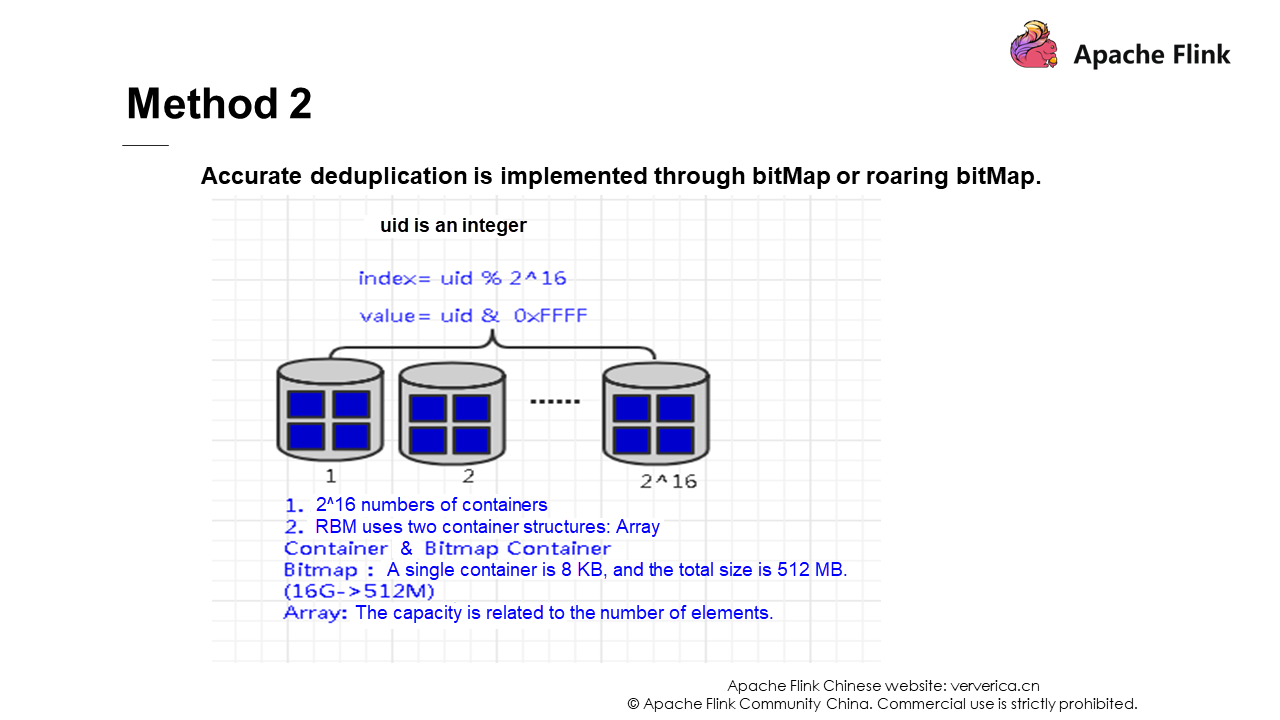

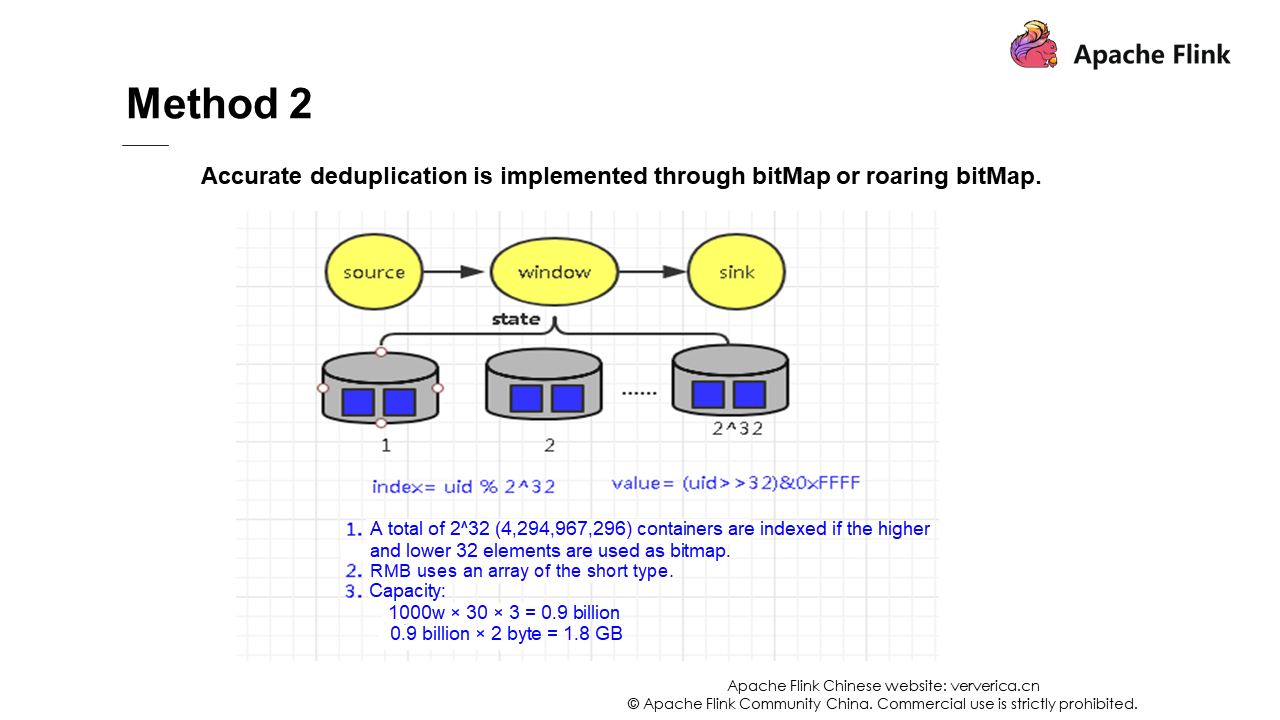

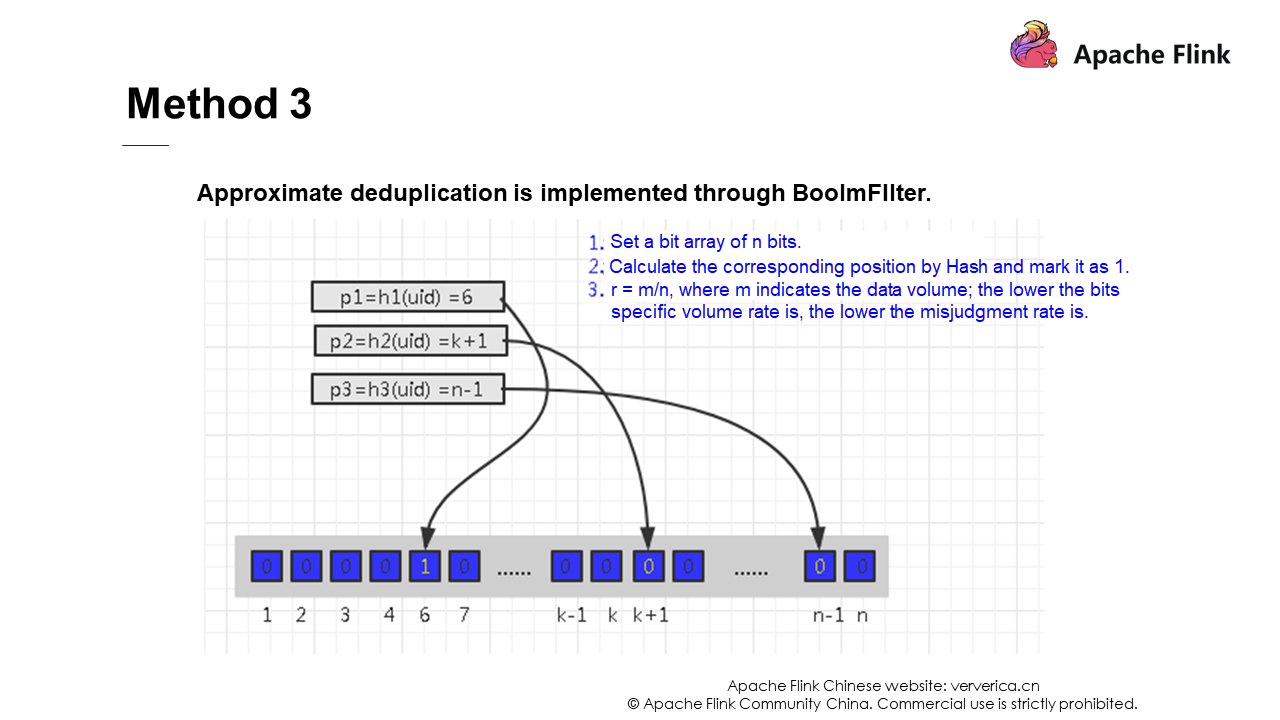

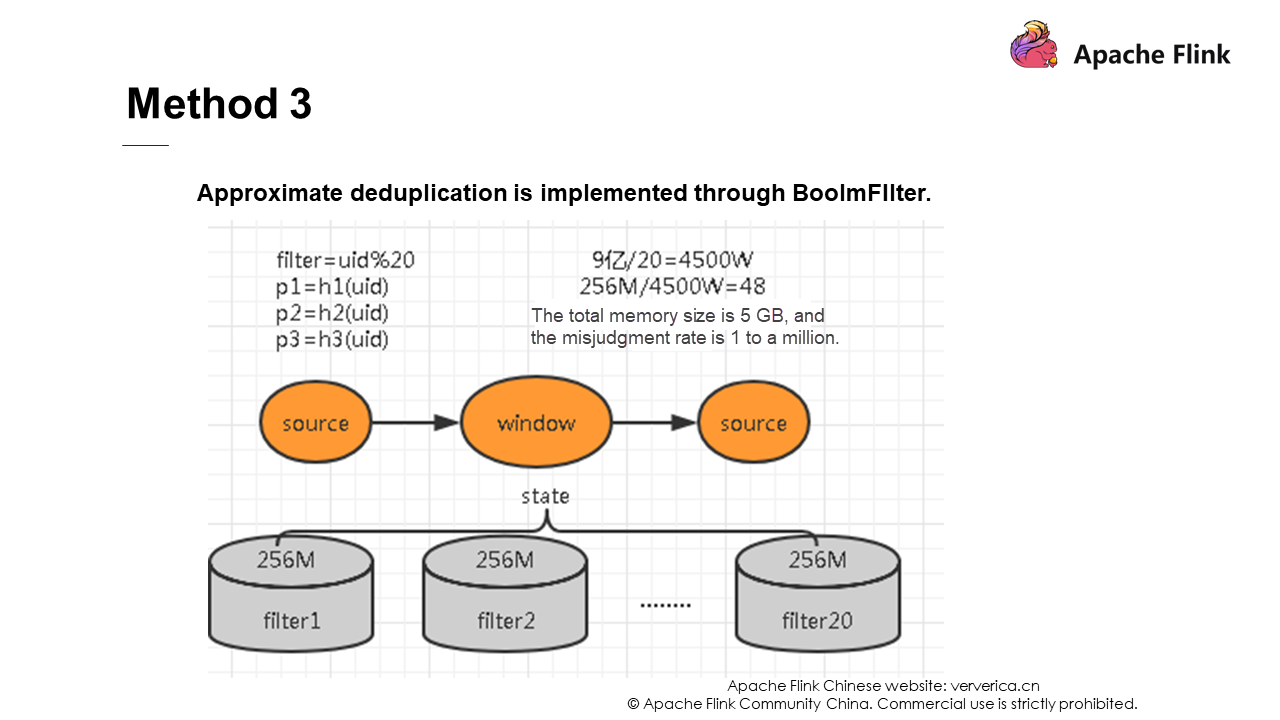

We can use data structures such as Set or Map together with Flink state for deduplication. However, these solutions degrade dramatically as the data size grows. Typical problems include slow writes caused by hash collisions, GC problems caused by excessive memory usage, and lost connections to TaskManagers.

Solution 2 and solution 3 use data structures to deduplicate data. If you are interested, you can learn about them yourself. They will not be described in detail here.
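The Set/Map-based deduplication described above can be sketched as exact per-key deduplication in Python. The class `SetDeduplicator` is hypothetical. Its dictionary of sets stands in for Flink keyed state such as a MapState; it is not Flink code.

```python
from collections import defaultdict


class SetDeduplicator:
    """Exact dedup: remembers every id seen, per key."""

    def __init__(self):
        self.seen = defaultdict(set)   # key -> ids already emitted

    def process(self, key, record_id):
        """Return True if the record should be emitted, False if it is a duplicate."""
        ids = self.seen[key]
        if record_id in ids:
            return False               # duplicate, drop it
        ids.add(record_id)
        return True                    # first occurrence, emit it

    def state_size(self):
        """Total number of remembered ids across all keys."""
        return sum(len(ids) for ids in self.seen.values())
```

The sketch is correct, but the state holds one entry for every distinct id ever seen. Feeding ten thousand new ids adds ten thousand entries. That unbounded growth is what leads to the hash-collision, GC, and TaskManager-connection problems listed above.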

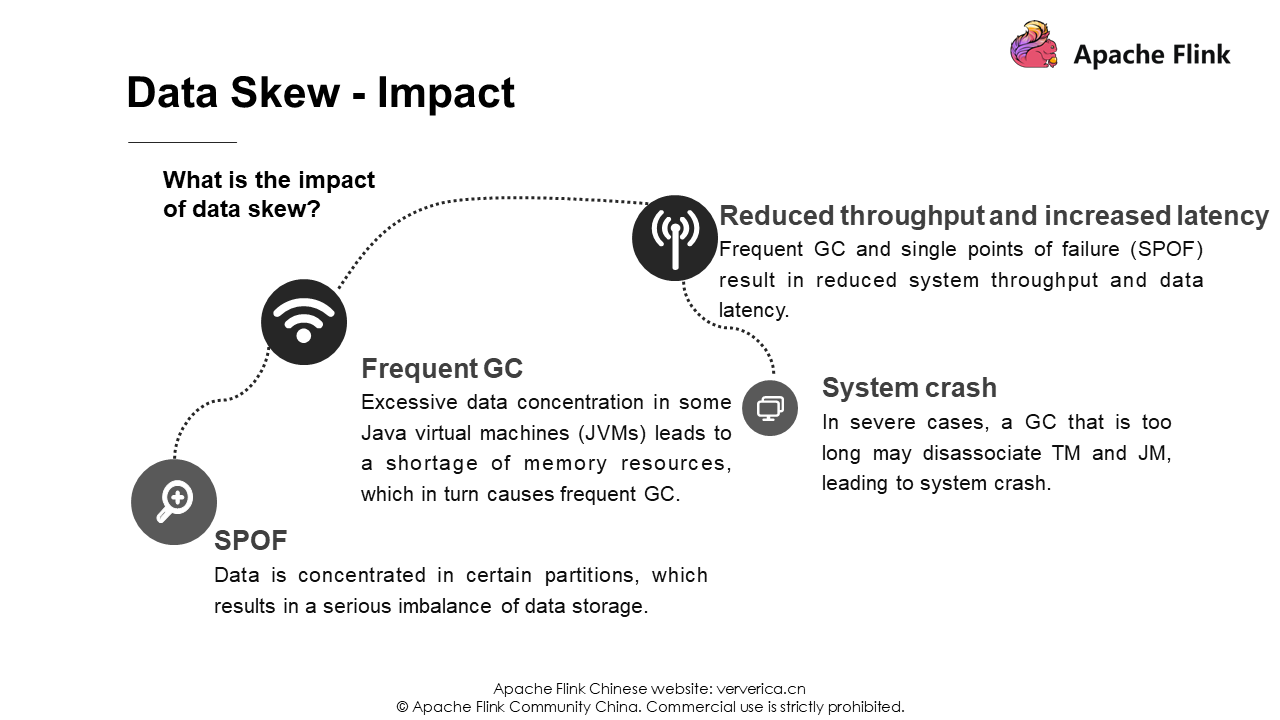

Data skew is a common problem that almost everyone encounters, and there are many solutions.

The first scenario is that the parallelism is lower than the number of partitions, which results in the uneven consumption mentioned previously.
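The unevenness comes from how partitions are spread over subtasks, as a simplified Python sketch shows. It assumes plain modulo assignment. Flink's Kafka connector uses a similar round-robin scheme, but with a topic-dependent start index. The function name is hypothetical.

```python
def assign_partitions(num_partitions, parallelism):
    """Simplified modulo assignment of source partitions to subtasks."""
    assignment = {subtask: [] for subtask in range(parallelism)}
    for partition in range(num_partitions):
        assignment[partition % parallelism].append(partition)
    return assignment
```

With 5 partitions and a parallelism of 3, subtasks 0 and 1 each read two partitions while subtask 2 reads one. If all partitions carry similar traffic, subtask 2 is idle half as often as the others. The load only balances when the number of partitions is a multiple of the parallelism, for example 5 partitions with a parallelism of 5.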

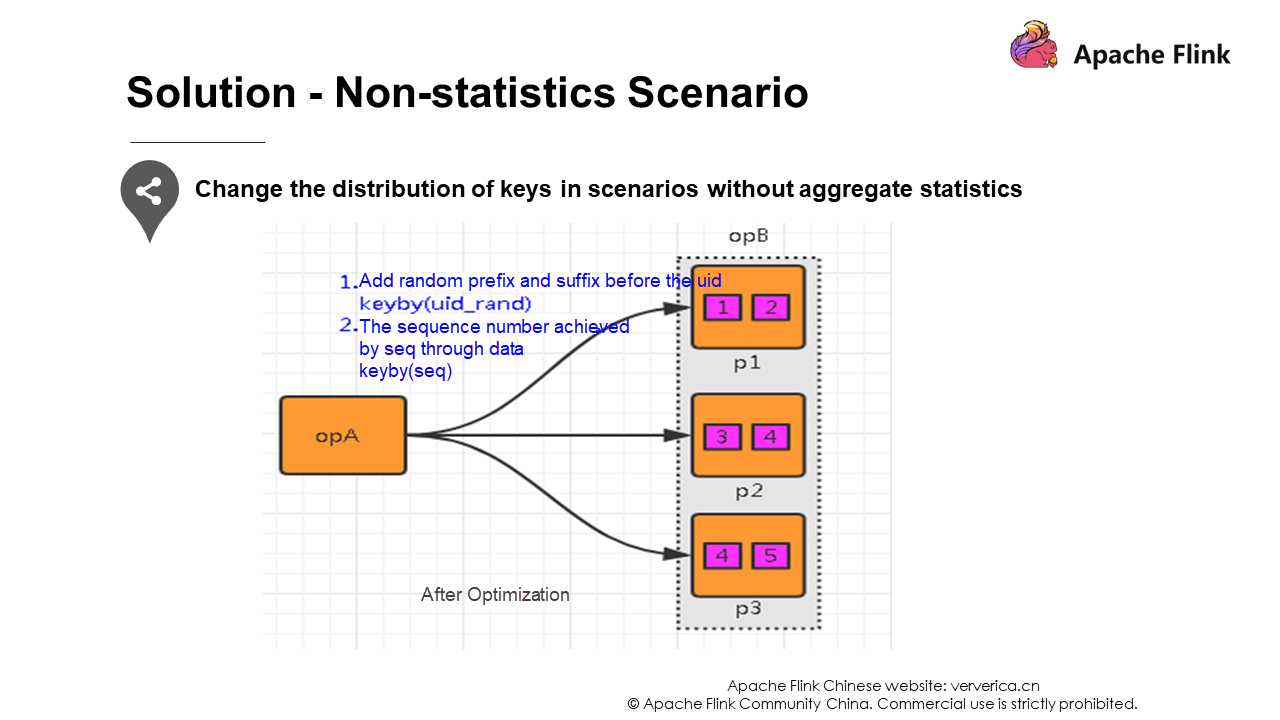

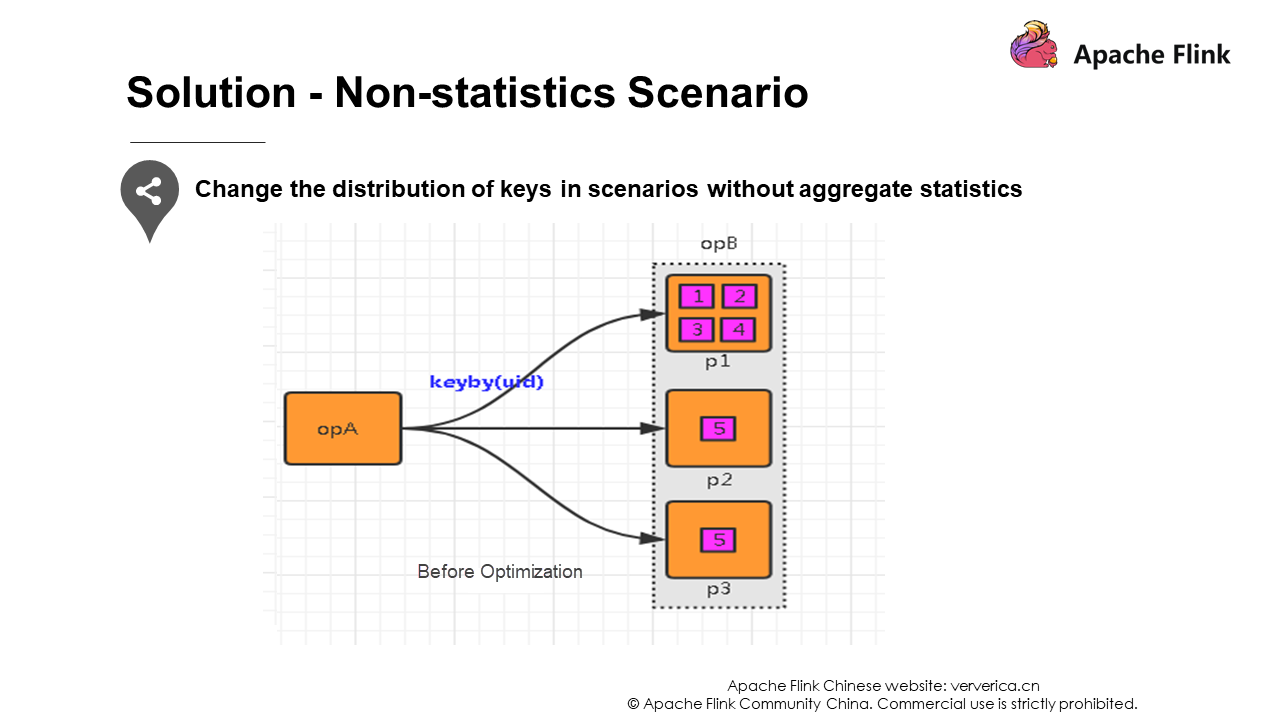

The second scenario is an uneven key distribution. You can add random prefixes to the keys to spread them out so that the data is not concentrated in a few tasks.
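Salting only works if the partial results are merged afterward. A minimal two-phase count in Python illustrates this. The function name, the `#` separator, and the number of salt buckets are assumptions. The `Counter` calls stand in for the two keyed aggregation stages of a real job.

```python
import random
from collections import Counter


def salted_count(keys, salt_buckets, seed=0):
    """Two-phase count: salt the keys, pre-aggregate, then strip the salt."""
    rng = random.Random(seed)
    # Phase 1: a random prefix spreads one hot key over salt_buckets groups,
    # which a keyed shuffle could route to up to salt_buckets different tasks.
    salted = Counter(f"{rng.randrange(salt_buckets)}#{key}" for key in keys)
    # Phase 2: remove the prefix and merge the partial counts per real key.
    final = Counter()
    for salted_key, partial in salted.items():
        final[salted_key.split("#", 1)[1]] += partial
    return salted, final
```

Consider 1,000 `"hot"` records and 10 `"cold"` records. The hot key is split into four partial groups, none of which holds all 1,000 records, and the second phase still produces the exact totals. The cost of this approach is an extra aggregation stage.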

Another approach is to pre-aggregate records with the same key locally on each node, similar to the combiner in MapReduce. After this map-side pre-aggregation, each key appears only once per node. When downstream nodes pull a key's data from all nodes, far less data needs to be transferred, which reduces disk I/O and network overhead.
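The saving from local pre-aggregation can be quantified with a small Python sketch. The function name is hypothetical, and each inner list stands for the keys held by one upstream node. The sketch counts how many records would cross the network with and without a combiner.

```python
from collections import Counter


def shuffle_volume(partitions, combine):
    """Count records sent downstream, with or without local pre-aggregation."""
    sent = 0
    final = Counter()
    for records in partitions:          # one list of keys per upstream node
        if combine:
            local = Counter(records)    # combiner: one (key, count) per key
            sent += len(local)
            final.update(local)         # Counter.update adds the counts
        else:
            sent += len(records)        # every record is shipped as-is
            final.update(records)
    return sent, final
```

Take two nodes holding 505 and 302 records over three distinct keys. Without a combiner, 807 records are shuffled. With a combiner, only 4 records (one per key per node) are shuffled, and the final counts are identical in both cases.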

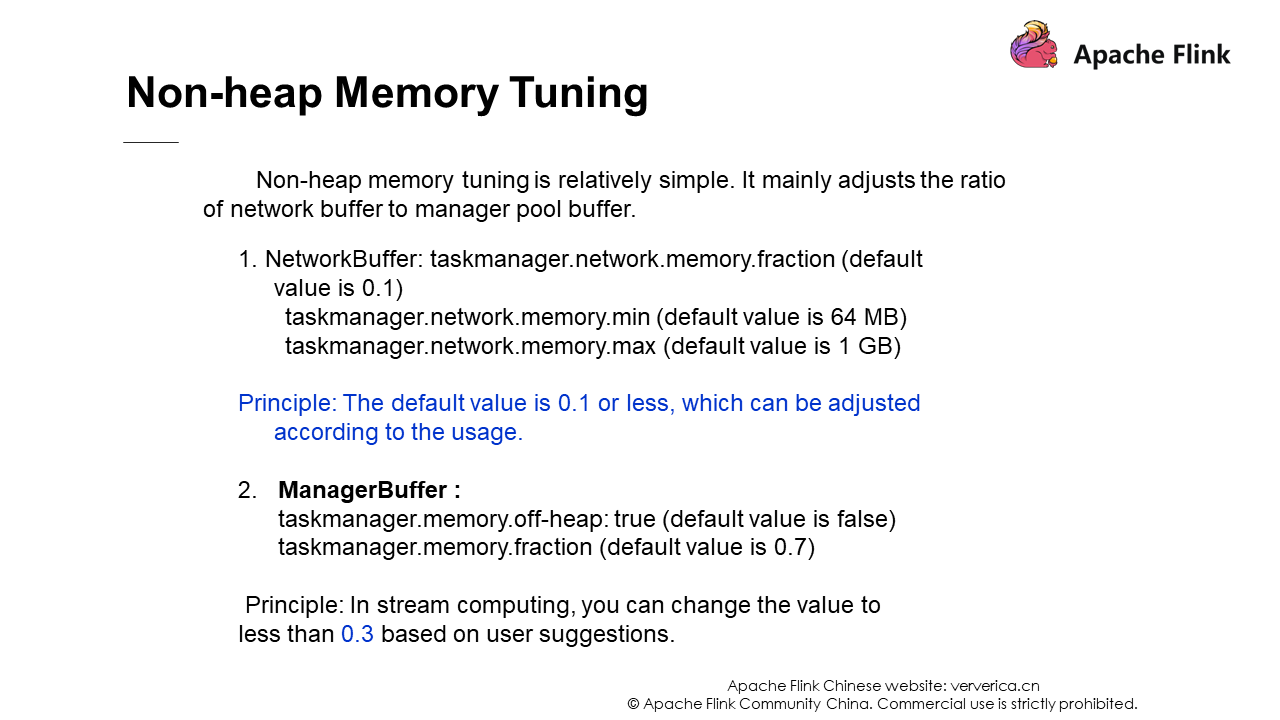

Given the Flink memory structure described earlier, memory tuning mainly involves the heap memory and, within off-heap memory, the network buffers and the managed memory pool. These are mostly controlled by configuration parameters.

Adjust these parameters according to the actual situation; the following are only suggestions. Flink streaming jobs do not use much managed memory (ManagerBuffer), so its fraction is usually set below 0.3.

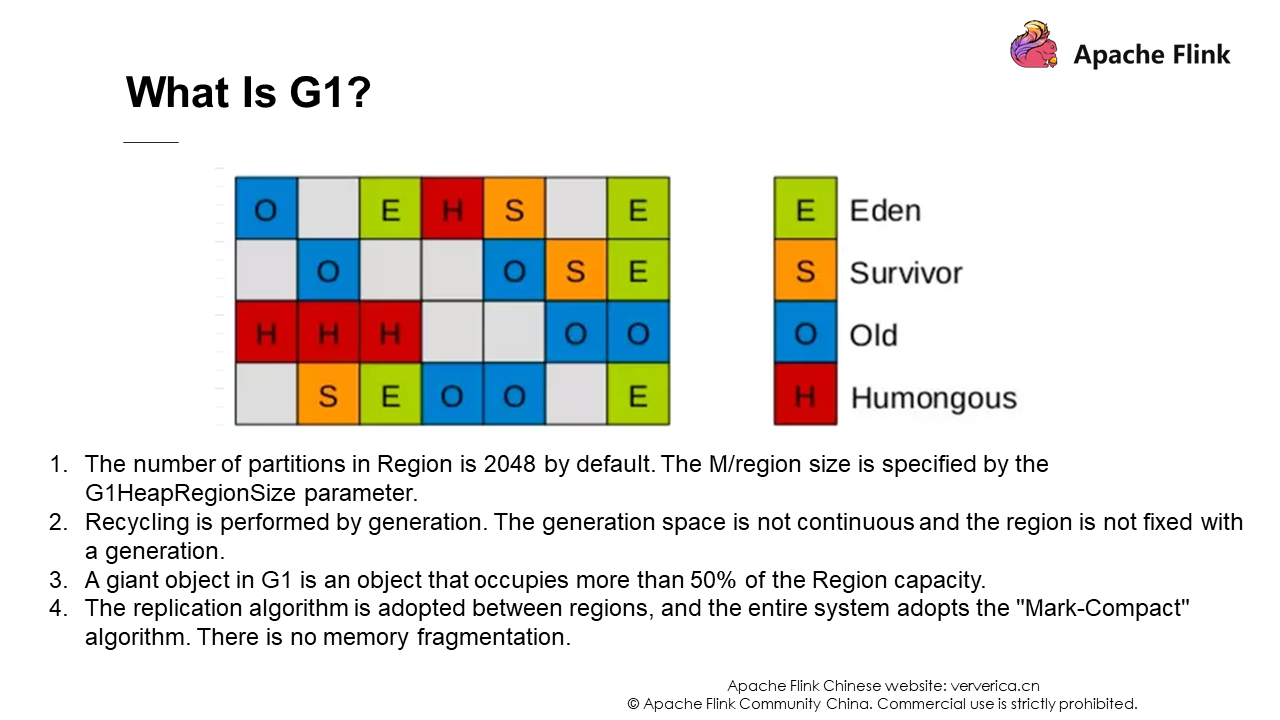

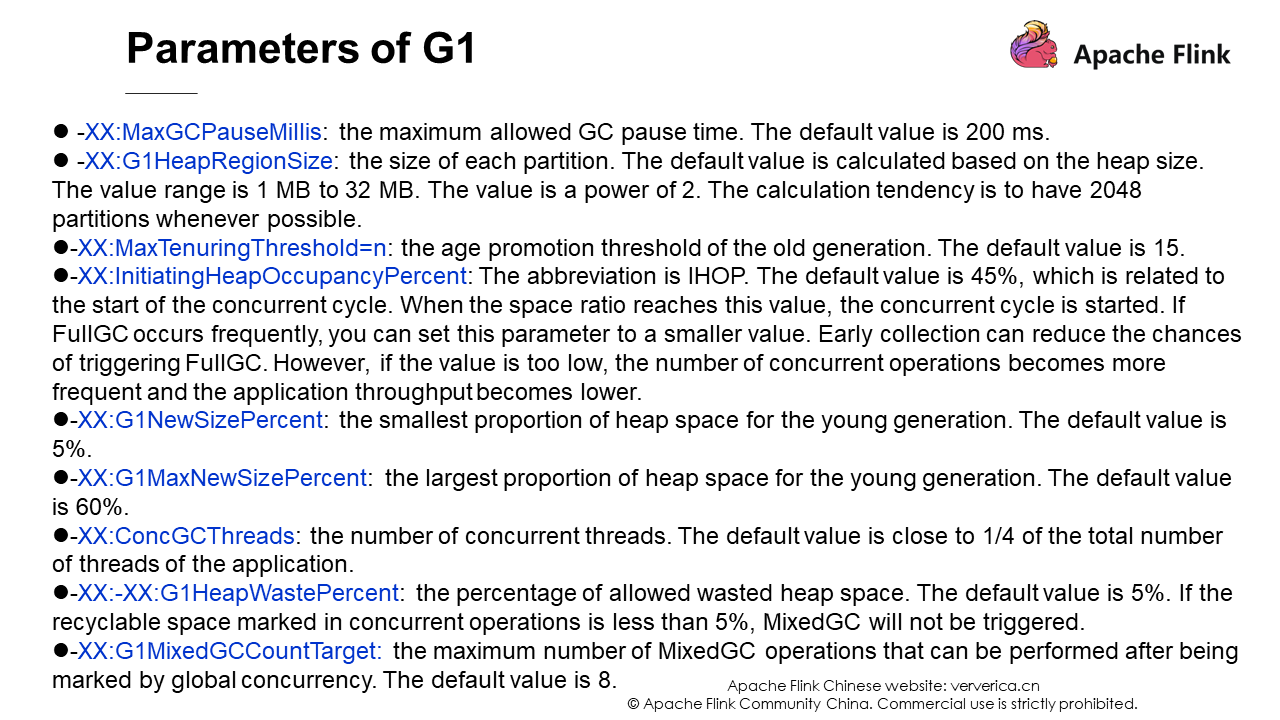

Heap memory tuning is JVM-related and mainly consists of changing the default garbage collector to G1. The reason is that the default Parallel Scavenge collector has a serialization problem in old-generation GC, so its full GCs take a long time. The following is a brief introduction to G1. Plenty of information about G1 is available online, so it is not elaborated here.

This article explains Flink's checkpoint mechanism, backpressure mechanism, and memory model, and analyzes some tuning policies based on the memory model.
