Alibaba Cloud is a cloud computing provider that offers a wide range of cloud products and services, including Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). One of their flagship offerings is the Machine Learning Platform for AI (PAI), which provides comprehensive AI capabilities to developers and enterprises. PAI-EAS (Elastic Algorithm Service) is a component of PAI that allows users to deploy and manage AI applications easily.

Llama 2 is an open-source large language model (LLM) developed by Meta that generates text from a given prompt. It is available in three sizes: 7 billion, 13 billion, and 70 billion parameters. Compared with its predecessor, Llama 1, Llama 2 was trained on more data and supports a longer context length (4,096 tokens instead of 2,048), which makes it more versatile and more accurate at generating natural language.

Generative AI is a branch of artificial intelligence that focuses on creating AI models (like Llama 2) capable of generating new content, such as text, images, and music. It is widely used in various applications, including chatbots, virtual assistants, content generation, and more.

In this article, we deploy Llama 2 on PAI-EAS, which includes the PAI-Blade inference accelerator. First, let's get to know PAI-Blade and how it speeds up LLM inference on PAI-EAS.

PAI-Blade is a software inference optimization tool from PAI that is used here to improve the performance of LLMs. It applies model optimization techniques that can make LLM inference up to six times faster than unoptimized inference. In practice, you can process more data, return responses faster, and run your language-based applications more efficiently.

Now, let's turn our attention to the cost-saving potential that the PAI Blade brings to the table. With its accelerated inference speed, the PAI Blade enables you to achieve higher throughput while utilizing fewer computing resources. This translates to a reduced need for expensive GPU instances, resulting in significant cost savings for your deployment.
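The cost argument is simple arithmetic. The sketch below estimates how many instances you need to serve a target request rate, first without acceleration and then with it. All throughput numbers are hypothetical placeholders, not PAI-Blade benchmarks. The sketch also assumes throughput scales linearly with the speedup factor, which is a simplification. Replace the inputs with figures measured on your own deployment.

```python
import math


def instances_needed(target_rps: float, per_instance_rps: float) -> int:
    """Smallest whole number of instances whose combined throughput meets the target."""
    if per_instance_rps <= 0:
        raise ValueError("per-instance throughput must be positive")
    return math.ceil(target_rps / per_instance_rps)


# Hypothetical inputs, for illustration only.
target_rps = 12.0    # requests per second the application must serve
baseline_rps = 1.0   # assumed throughput of one instance without acceleration
speedup = 6.0        # the "up to six times" upper bound quoted above

baseline = instances_needed(target_rps, baseline_rps)
accelerated = instances_needed(target_rps, baseline_rps * speedup)
print(f"baseline: {baseline} instances, accelerated: {accelerated} instances")
```

Real speedups vary with model size, sequence length, and batch size. Treat the result as an upper bound on the savings.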

Running Llama 2 on PAI-EAS with PAI-Blade gives you a substantial performance boost without straining your budget. The combination of PAI-EAS and PAI-Blade makes Llama 2 a strong choice for scenarios with demanding language-processing requirements.

Unleash the full potential of Llama 2 on PAI-EAS with the PAI Blade and fuel your language-based applications with unprecedented power, efficiency, and cost-effectiveness.

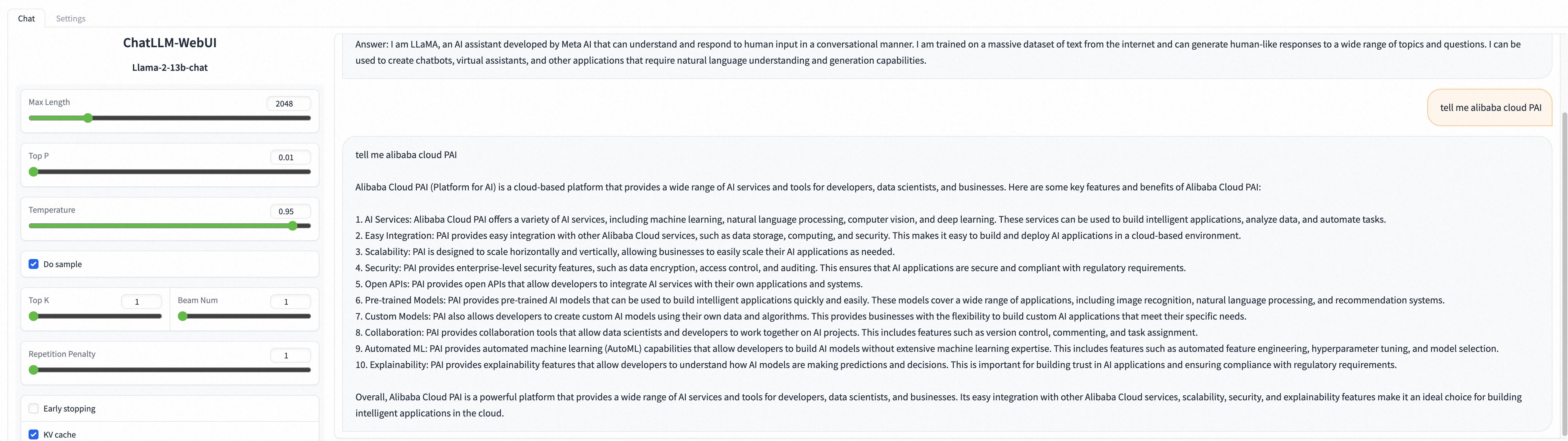

PAI-EAS provides a convenient way to deploy and run AI applications, including Llama 2 models. There are two methods to run Llama 2 on PAI-EAS: WebUI and API. The WebUI method allows you to interact with the model through a web interface, while the API method enables programmatic access to the model for integration with other applications.

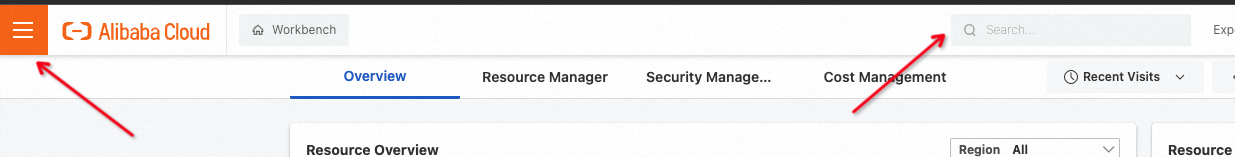

1. Log in to the Alibaba Cloud Console, your gateway to powerful cloud computing solutions.

2. Navigate to the PAI page, where the magic of Machine Learning Platform for AI awaits. You can search for it or access it through the PAI console.

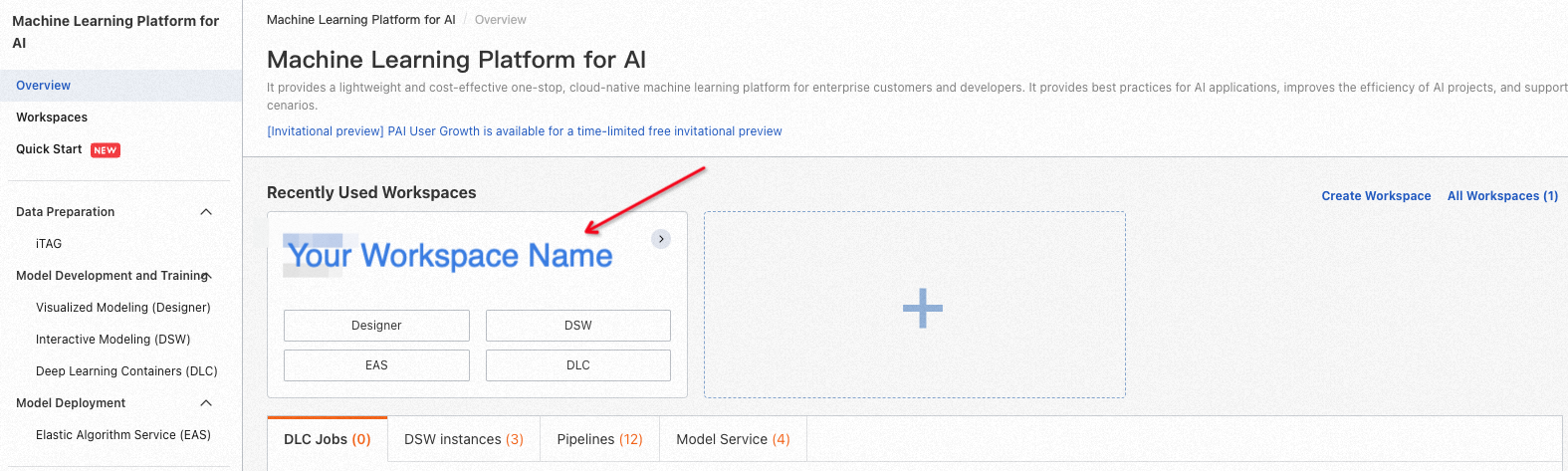

3. If you're new to PAI, fear not! Creating a workspace is a breeze. Just follow the documentation provided, and you'll be up and running in no time.

4. In the left-side navigation pane, click the name of the workspace you want to work in.

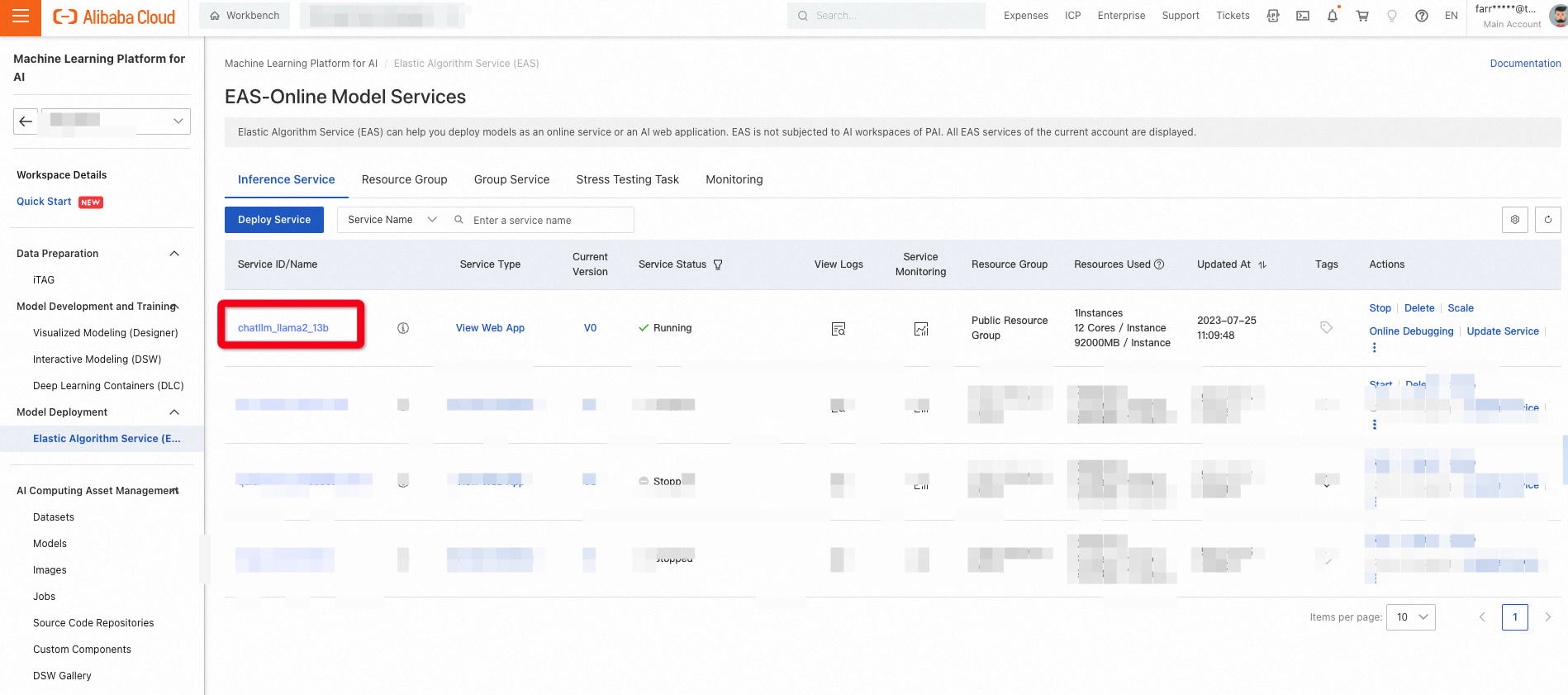

5. On the workspace page, navigate to Model Deployment and select Model Online Services (EAS).

6. Click the Deploy Service button.

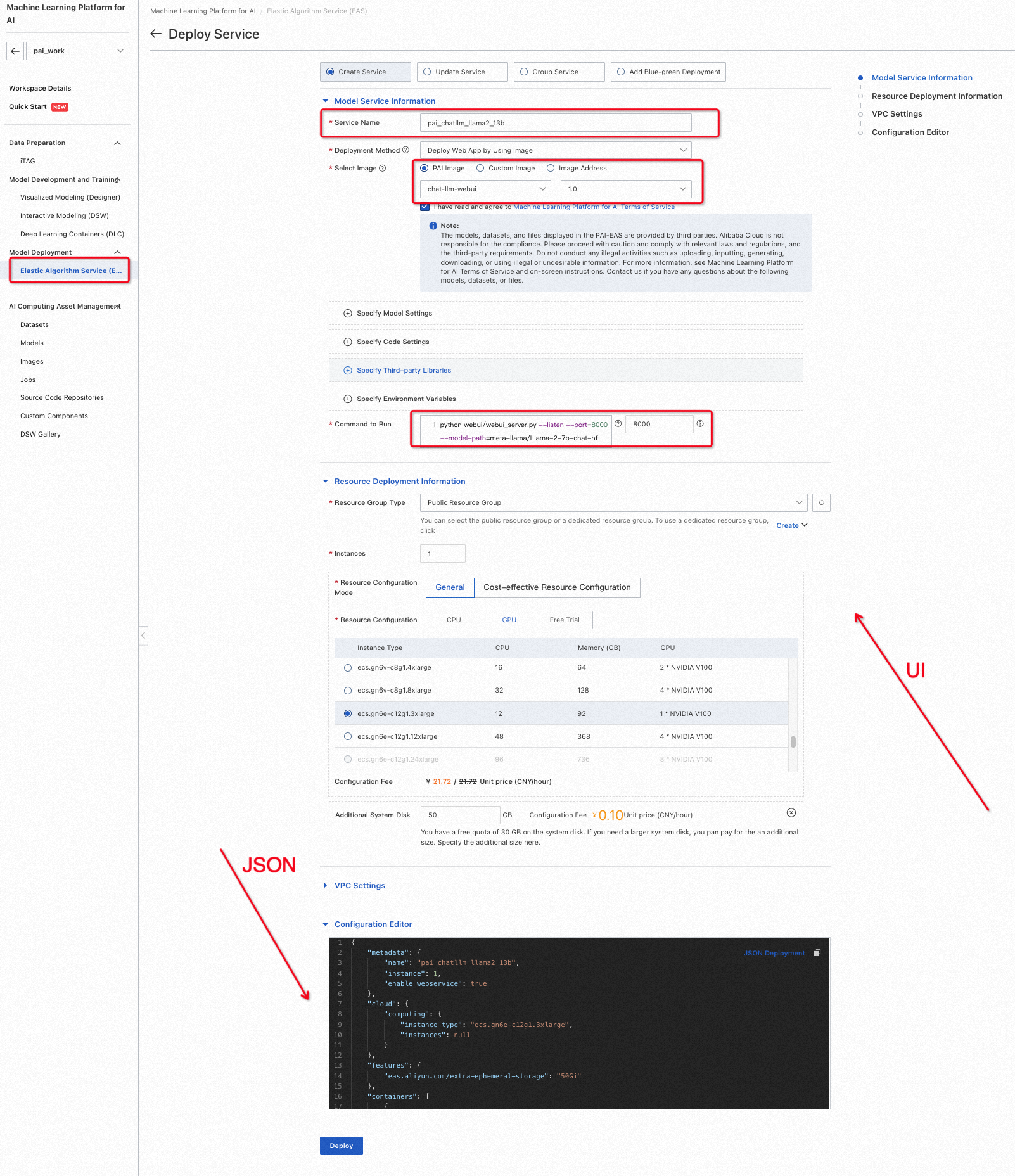

| Parameter | Description |
|---|---|
| Service name | The name of the custom service. This example uses pai_chatllm_llama_2_13b. |
| Deployment method | Select Image deployment AI-Web application. |
| Image selection | In the PAI platform image list, select chat-llm-webui and select 1.0 as the image version. Because the image is updated frequently, you can select the latest available version during deployment. |
| Run command | For the 13b model: `python webui/webui_server.py --listen --port=8000 --model-path=meta-llama/Llama-2-13b-chat-hf --precision=fp16`<br>For the 7b model: `python webui/webui_server.py --listen --port=8000 --model-path=meta-llama/Llama-2-7b-chat-hf` |
| Port number | 8000. The `--listen` parameter in the WebUI command makes the server accept external connections. |
| Resource group type | Select a public resource group. |
| Resource configuration method | Select general resource configuration. |
| Resource configuration | Select a GPU type. ecs.gn6e-c12g1.3xlarge is the recommended instance type. The 13b model must run on a gn6e or higher instance type. The 7b model can run on an A10/GU30 instance type. |
| Additional system disk | 50 GB |
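The two run commands in the table differ only in the model path and the fp16 flag. If you script your deployments, a small helper can keep the command consistent with the Port number field. `webui_command` is a hypothetical name. It only reproduces the commands from the table and adds nothing to them.

```python
def webui_command(model_size: str, port: int = 8000) -> str:
    """Return the WebUI run command from the table for a Llama 2 chat model size."""
    if model_size not in ("7b", "13b"):
        raise ValueError(f"unsupported model size: {model_size!r}")
    parts = [
        "python webui/webui_server.py",
        "--listen",                     # accept external connections
        f"--port={port}",               # must match the Port number field
        f"--model-path=meta-llama/Llama-2-{model_size}-chat-hf",
    ]
    if model_size == "13b":
        parts.append("--precision=fp16")  # the table uses fp16 only for 13b
    return " ".join(parts)


print(webui_command("13b"))
print(webui_command("7b"))
```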

Alternatively, you can configure the EAS deployment in JSON format, for example in the configuration editor on the deployment page. The only value you need to change is the service name. Here is an example of the JSON configuration:

```json
{
  "cloud": {
    "computing": {
      "instance_type": "ecs.gn6e-c12g1.3xlarge",
      "instances": null
    }
  },
  "containers": [
    {
      "image": "eas-registry-vpc.cn-hangzhou.cr.aliyuncs.com/pai-eas/chat-llm-webui:1.0",
      "port": 8000,
      "script": "python api/api_server.py --precision=fp16 --port=8000 --model-path=meta-llama/Llama-2-13b-chat-hf"
    }
  ],
  "features": {
    "eas.aliyun.com/extra-ephemeral-storage": "50Gi"
  },
  "metadata": {
    "instance": 1,
    "name": "your_custom_service_name",
    "rpc": {
      "keepalive": 60000,
      "worker_threads": 1
    }
  },
  "name": "your_custom_service_name"
}
```

Replace both occurrences of your_custom_service_name (the top-level name and metadata.name) with your own service name. Note that the script in this sample starts the API server (api/api_server.py). For the WebUI deployment described in the table, use the WebUI run command instead.
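The service name appears in two places, so it is easy to update one and miss the other. As a minimal sketch, you can build the same structure in Python and fill both name fields from one argument. `build_service_config` is a hypothetical helper. The image URL (including the cn-hangzhou region) and the other defaults are copied from the sample above and may differ for your region.

```python
import json


def build_service_config(name: str, script: str,
                         instance_type: str = "ecs.gn6e-c12g1.3xlarge",
                         keepalive: int = 60000) -> dict:
    """Build an EAS service config shaped like the JSON sample above."""
    return {
        "cloud": {"computing": {"instance_type": instance_type, "instances": None}},
        "containers": [{
            "image": "eas-registry-vpc.cn-hangzhou.cr.aliyuncs.com/pai-eas/chat-llm-webui:1.0",
            "port": 8000,
            "script": script,
        }],
        "features": {"eas.aliyun.com/extra-ephemeral-storage": "50Gi"},
        "metadata": {
            "instance": 1,
            "name": name,  # kept identical to the top-level "name"
            "rpc": {"keepalive": keepalive, "worker_threads": 1},
        },
        "name": name,
    }


config = build_service_config(
    "pai_chatllm_llama_2_13b",
    "python api/api_server.py --precision=fp16 --port=8000 "
    "--model-path=meta-llama/Llama-2-13b-chat-hf",
)
# Paste the printed JSON into the configuration editor.
print(json.dumps(config, indent=2))
```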

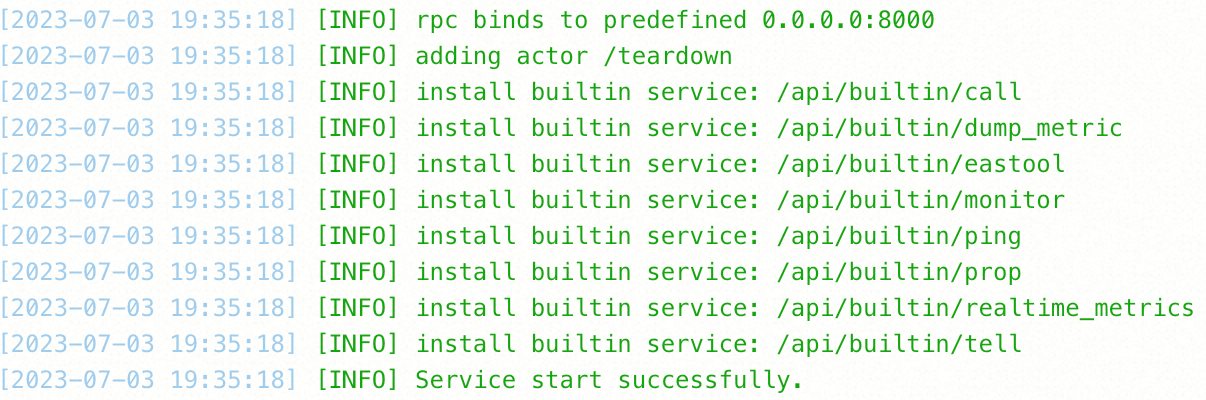

7. Click the Deploy button and wait for the deployment to complete.

8. Start WebUI for model inference:

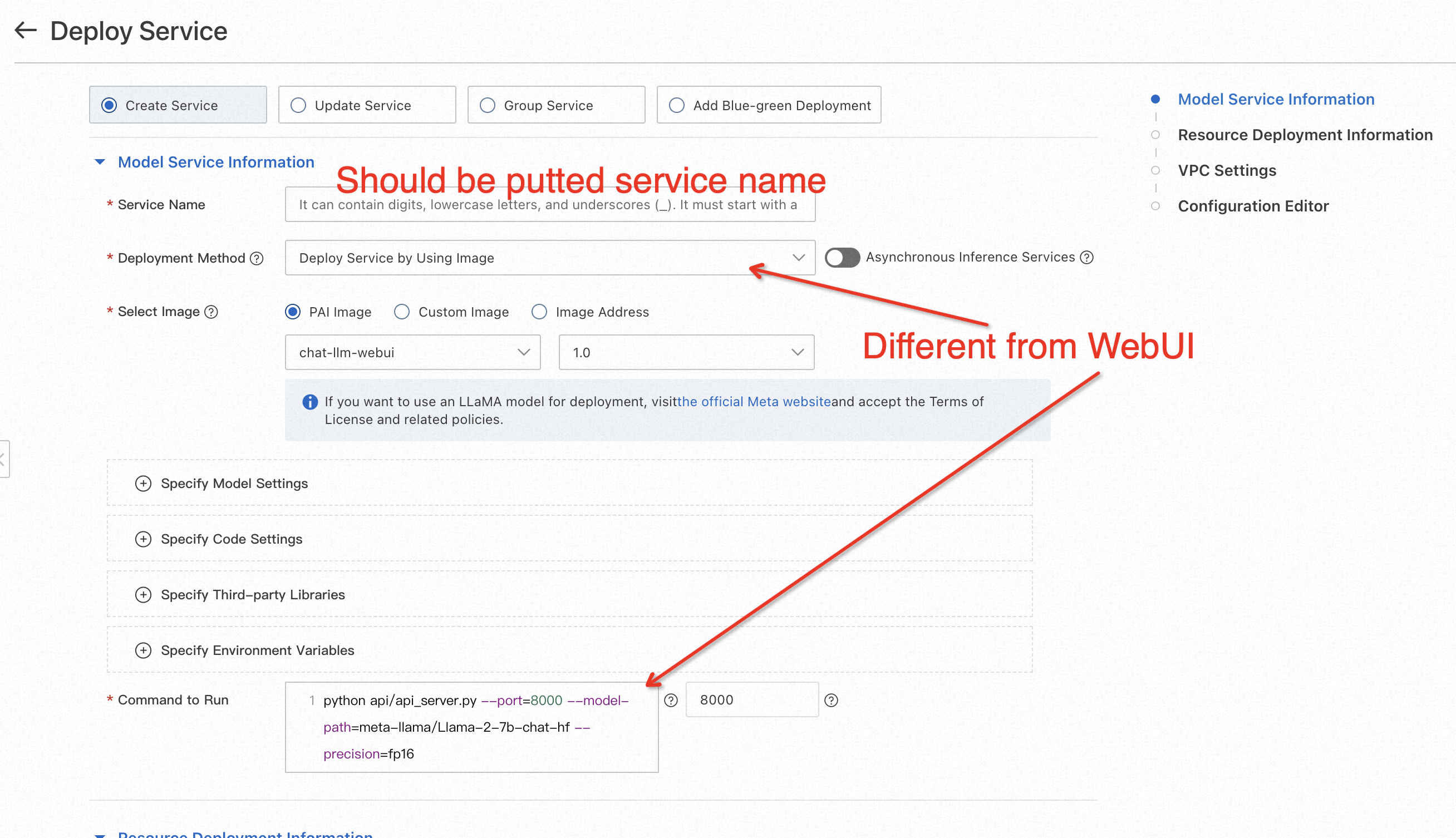

To call the Llama 2 model from other applications, you can deploy it with API support instead. Follow these steps:

1. Configure the deployment parameters by following steps 1-6 of the WebUI method.

2. The deployment method should be "Deploy Service by Using Image."

3. In the Run command field, enter the command for your model size to run Llama 2 with API support:

```shell
# 13b model
python api/api_server.py --port=8000 --model-path=meta-llama/Llama-2-13b-chat-hf --precision=fp16 --keepalive=5000000

# 7b model
python api/api_server.py --port=8000 --model-path=meta-llama/Llama-2-7b-chat-hf --precision=fp16 --keepalive=5000000
```

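4. In the configuration editor, increase the keep-alive time in metadata.rpc.keepalive. This is the maximum time, in milliseconds, that a single request may take. Depending on how powerful your GPU instance is, you can use a lower or higher value.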

```json
{
  ...
  "metadata": {
    "instance": 1,
    "name": "your_custom_service_name",
    "rpc": {
      "keepalive": 5000000,
      "worker_threads": 1
    }
  },
  "name": "your_custom_service_name"
}
```

5. Click the Deploy button and wait for the model deployment to complete.

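6. Now call the API for model inference. The service accepts WebSocket connections, which the Python client below uses. It also accepts HTTP POST requests to the service endpoint, which suit single-round questions and answers. The WebSocket client sends the prompt and generation parameters as a JSON payload.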
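Steps 3 and 4 above change only two fields of the configuration: the run command and metadata.rpc.keepalive. If you keep your configuration as a Python dict, the switch from WebUI to API can be sketched as follows. `to_api_config` is a hypothetical helper, the config is trimmed for brevity, and 5000000 is simply the keep-alive value used in the steps above.

```python
import copy

# A trimmed-down WebUI config; in practice, start from the full JSON sample.
webui_config = {
    "containers": [{
        "port": 8000,
        "script": "python webui/webui_server.py --listen --port=8000 "
                  "--model-path=meta-llama/Llama-2-13b-chat-hf --precision=fp16",
    }],
    "metadata": {
        "instance": 1,
        "name": "your_custom_service_name",
        "rpc": {"keepalive": 60000, "worker_threads": 1},
    },
    "name": "your_custom_service_name",
}


def to_api_config(config: dict, model_size: str, keepalive: int = 5000000) -> dict:
    """Return a copy of config that runs the API server with a longer keep-alive."""
    if model_size not in ("7b", "13b"):
        raise ValueError(f"unsupported model size: {model_size!r}")
    api = copy.deepcopy(config)  # leave the original WebUI config unchanged
    # Step 3: run the API server instead of the WebUI.
    api["containers"][0]["script"] = (
        "python api/api_server.py --port=8000 "
        f"--model-path=meta-llama/Llama-2-{model_size}-chat-hf "
        f"--precision=fp16 --keepalive={keepalive}"
    )
    # Step 4: raise metadata.rpc.keepalive to the same value.
    api["metadata"]["rpc"]["keepalive"] = keepalive
    return api


api_config = to_api_config(webui_config, "13b")
print(api_config["containers"][0]["script"])
```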

The following Python code defines a pai_llama class that generates responses from the Llama 2 model deployed on PAI-EAS over a WebSocket connection. It requires the websocket-client package, which you can install with `pip install websocket-client`.

```python
import json
import struct

import websocket  # provided by the websocket-client package


class pai_llama:
    def __init__(self, api_key):
        self.api_key = api_key
        self.input_message = ""
        # Generation parameters; generate() overwrites them on every call.
        self.temperature = 0.0
        self.repetition_penalty = 1
        self.top_p = 0.01
        self.max_length = 2048
        self.num_beams = 1
        self.round = 1
        self.received_message = None
        # host url should begin with "ws://"
        self.host = "ws://you_host_url"

    def on_message(self, ws, message):
        # Keep the most recent message sent by the server.
        self.received_message = message

    def on_error(self, ws, error):
        print('error happened .. ')
        print(str(error))

    def on_close(self, ws, close_status_code, close_msg):
        print("### closed ###", close_status_code, close_msg)

    def on_pong(self, ws, pong):
        print('pong:', pong)

    def on_open(self, ws):
        print('Opening WebSocket connection to the server ... ')
        # Send the prompt and generation parameters as a single JSON message.
        params_dict = {
            'input_ids': self.input_message,
            'temperature': self.temperature,
            'repetition_penalty': self.repetition_penalty,
            'top_p': self.top_p,
            'max_length': self.max_length,
            'num_beams': self.num_beams,
        }
        ws.send(json.dumps(params_dict))
        # for i in range(self.round):
        #     ws.send(self.input_message)
        # Send a close frame with status code 1000 (normal closure).
        ws.send(struct.pack('!H', 1000), websocket.ABNF.OPCODE_CLOSE)

    def generate(self, message, temperature=0.95, repetition_penalty=1,
                 top_p=0.01, max_length=2048, num_beams=1):
        self.input_message = message
        self.temperature = temperature
        self.repetition_penalty = repetition_penalty
        self.top_p = top_p
        self.max_length = max_length
        self.num_beams = num_beams
        self.received_message = None  # clear any reply from a previous call

        ws = websocket.WebSocketApp(
            self.host,
            on_open=self.on_open,
            on_message=self.on_message,
            on_error=self.on_error,
            on_pong=self.on_pong,
            on_close=self.on_close,
            header=[f'Authorization: {self.api_key}'],
        )
        # setup ping interval to keep long connection.
        ws.run_forever(ping_interval=2)
        if self.received_message is None:
            # No reply arrived (for example, after a connection error).
            return []
        # Return the segments after the first '<br/>' separator.
        return self.received_message.split('<br/>')[1:]


client = pai_llama(api_key="your_token_as_API")
res = client.generate(
    message="tell me about alibaba cloud PAI",
    temperature=0.95,
)
print(f"Final: {res}")
print(" ")
```

To use this code, replace you_host_url with the actual host URL of your Llama 2 model deployed on PAI-EAS. Pass your service token as the api_key parameter when you create the pai_llama instance. Adjust the input message and other parameters to suit your requirements.

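Follow these steps to find the host URL and token required for the code:

1. Open the service details page of your deployed Llama 2 service.
2. Click View call information.
3. On the public endpoint tab, copy the service address and the token. Use the address as the host URL (with the ws:// prefix that the code comment requires) and the token as api_key.

After completing these steps, you will have the correct host URL and token for the code, so the client can connect to and interact with your Llama 2 model deployed on PAI-EAS.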
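The client expects a URL that begins with ws://, while the endpoint you copy is an HTTP address. This sketch assumes the WebSocket address is the same endpoint with only the scheme swapped, and it also maps https:// to wss:// as an assumption. `to_websocket_url` is a hypothetical helper, and the endpoint in the example is a placeholder.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumed mapping: plain HTTP endpoints become ws://, HTTPS endpoints become wss://.
_SCHEMES = {"http": "ws", "https": "wss", "ws": "ws", "wss": "wss"}


def to_websocket_url(endpoint: str) -> str:
    """Swap the scheme of a service endpoint so it can be used as the client's host."""
    parts = urlsplit(endpoint.strip())
    scheme = _SCHEMES.get(parts.scheme)
    if scheme is None:
        raise ValueError(f"unexpected scheme in endpoint: {endpoint!r}")
    return urlunsplit((scheme, parts.netloc, parts.path, parts.query, parts.fragment))


# Placeholder endpoint; copy the real one from View call information.
print(to_websocket_url("http://your-endpoint.example.com/your_service_path"))
```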

The HTTP access method is suitable for single-round questions and answers. The specific methods are listed below:
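One way to send this request is with Python's standard library. The sketch assumes, as the curl command in this section does, that the service takes the raw question text as the request body and the token in the Authorization header. `build_chat_request` and `ask` are hypothetical helper names. host and token are the values described after the curl command.

```python
import urllib.request


def build_chat_request(host: str, token: str, question: str) -> urllib.request.Request:
    """Build a POST request mirroring the curl call: raw question body plus token header."""
    return urllib.request.Request(
        host,
        data=question.encode("utf-8"),     # like --data-binary @chatllm_data.txt
        headers={"Authorization": token},  # like -H "Authorization: $authorization"
        method="POST",
    )


def ask(host: str, token: str, question: str, timeout: float = 300.0) -> str:
    """Send one question and return the raw response body decoded as UTF-8."""
    request = build_chat_request(host, token, question)
    # Generous timeout (an assumption): long answers can take a while to generate.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


# Usage (requires a running service; placeholders shown):
# print(ask("http://your_host_url", "your_token", "tell me about alibaba cloud PAI"))
```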

```shell
curl "$host" -H "Authorization: $authorization" --data-binary @chatllm_data.txt -v
```

Where:

- `$host`: the service endpoint. On the service details page, click View call information and copy the address from the public endpoint tab.
- `$authorization`: the service token, shown on the same public endpoint tab under View call information.
- `chatllm_data.txt`: a text file that you create and that contains your question.

If you need a custom model for inference, such as a fine-tuned or downloaded model, you can mount it on the Llama 2 deployment on PAI-EAS. This lets you use your own models for specific use cases. Here's how to mount a custom model using Object Storage Service (OSS):

1. Prepare your custom model and its configuration files in Hugging Face or ModelScope model format, and store them in an OSS directory. The directory should follow the standard file layout of that model format.

2. Click Update Service in the actions column of the EAS service for your Llama 2 deployment.

3. In the model service information section, configure the following parameters:

4. In the run command, set the --model-path parameter to the mount path, /llama2-finetune. For example:

```shell
python webui/webui_server.py --listen --port=8000 --model-path=/llama2-finetune
```

Adjust the command to suit your deployment requirements.
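Before uploading to OSS, a quick local check can catch an incomplete model export. This sketch assumes a minimal Hugging Face style directory needs a config.json and at least one *.safetensors or *.bin weight file. Real models also need tokenizer files, and ModelScope layouts may differ, so passing this check is not a guarantee that the model will load. `check_hf_model_dir`, `custom_model_command`, and the local path are hypothetical.

```python
from pathlib import Path

# Assumed minimal Hugging Face layout: a config file plus at least one weight file.
REQUIRED_FILES = ("config.json",)
WEIGHT_PATTERNS = ("*.safetensors", "*.bin")


def check_hf_model_dir(model_dir: str) -> list:
    """Return a list of layout problems; an empty list means the basic checks passed."""
    root = Path(model_dir)
    if not root.is_dir():
        return [f"{model_dir} is not a directory"]
    problems = [f"missing {name}" for name in REQUIRED_FILES
                if not (root / name).is_file()]
    if not any(any(root.glob(pattern)) for pattern in WEIGHT_PATTERNS):
        problems.append("no *.safetensors or *.bin weight files found")
    return problems


def custom_model_command(mount_path: str = "/llama2-finetune") -> str:
    """WebUI run command that loads the model from the OSS mount path."""
    return f"python webui/webui_server.py --listen --port=8000 --model-path={mount_path}"


print(check_hf_model_dir("./llama2-finetune"))  # hypothetical local export directory
print(custom_model_command())
```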

After completing these steps, your Llama 2 deployment on PAI-EAS serves your custom model. You can then use fine-tuned or downloaded models for personalized and specialized language-processing tasks.

We explained how to deploy Llama 2 models on Alibaba Cloud's PAI-EAS platform with PAI-Blade, which can make inference up to six times faster and leads to substantial cost savings for users.

The article also discussed additional capabilities of Llama 2 on PAI-EAS, such as mounting custom models from OSS and customizing the parameters used to generate responses. These features let developers tailor their language-based applications to specific requirements and to personalized, specialized language-processing tasks.

The author invites readers to learn more about PAI, delve into generative AI projects, and reach out for further discussions. Whether you have questions, need assistance, or are interested in exploring the potential of generative AI, the author encourages readers to contact Alibaba Cloud and discover the endless possibilities that await.

Experience the power of Llama 2 on Alibaba Cloud's PAI-EAS, unlock the efficiency of PAI Blade, and embark on a journey of accelerated performance and cost-savings. Contact Alibaba Cloud to explore the world of generative AI and discover how it can transform your applications and business.
