We are on the cusp of a new era in artificial intelligence. With multimodal AI, combining audio, visual, and textual data is no longer just an idea but a practical reality, and the Qwen Family of Large Language Models (LLMs) plays a pivotal role in it. This blog is your starting point for understanding and implementing multimodal AI with Alibaba Cloud's Model Studio, Qwen-Audio, Qwen-VL, Qwen-Agent, and OpenSearch (LLM-Based Conversational Search Edition).

At its core, the multimodal AI we discuss today hinges on the following technological pillars:

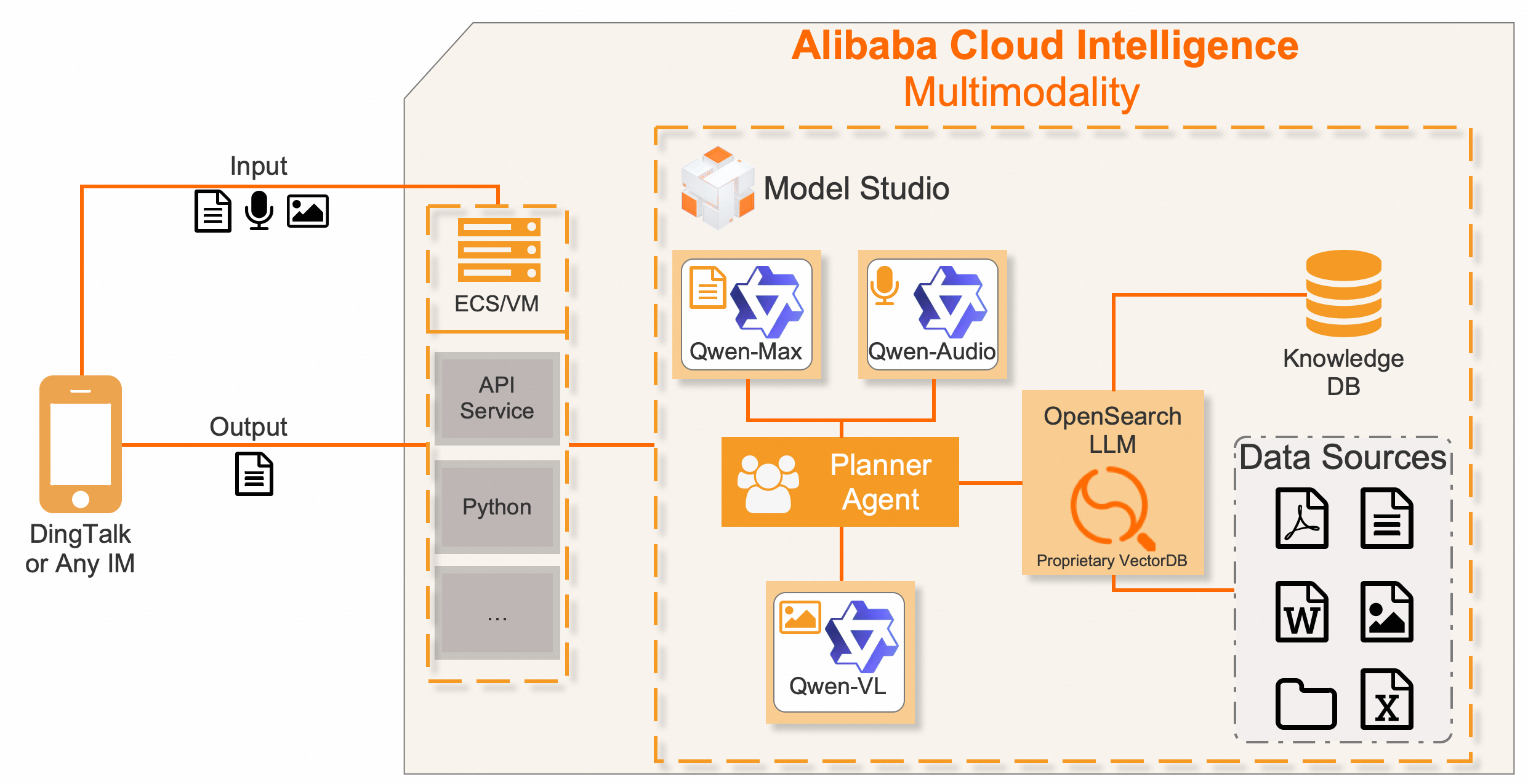

We use a planner agent that orchestrates all of these components and the logic that connects them. The Planner Agent on Model Studio integrates them into a single generative AI pipeline. On top of this pipeline, we build an API in Python that is ready for deployment on Alibaba Cloud Elastic Compute Service (ECS) and can be connected to DingTalk IM or any other IM platform you choose.
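The article does not show how the Planner Agent decides which component handles a request. The sketch below is a rough, rule-based illustration only; the Planner Agent on Model Studio may well use an LLM to plan instead. It routes an incoming IM message by modality: speech goes to Qwen-Audio, images go to Qwen-VL, and the combined text is sent to OpenSearch. The functions `transcribe_audio`, `describe_image`, and `search_knowledge_base` are hypothetical placeholders that return dummy strings instead of calling the real services.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class IncomingMessage:
    """A simplified IM message: optional text plus optional media URLs."""
    text: str = ""
    audio_url: Optional[str] = None
    image_url: Optional[str] = None


# Hypothetical stand-ins for the real calls to Qwen-Audio, Qwen-VL, and OpenSearch.
def transcribe_audio(url: str) -> str:
    return f"<transcript of {url}>"


def describe_image(url: str) -> str:
    return f"<description of {url}>"


def search_knowledge_base(query: str) -> str:
    return f"<answer for: {query}>"


def plan(msg: IncomingMessage) -> List[str]:
    """Decide which tools to run, and in what order, for one incoming message."""
    steps: List[str] = []
    if msg.audio_url:
        steps.append("transcribe_audio")
    if msg.image_url:
        steps.append("describe_image")
    steps.append("search_knowledge_base")  # always finish by answering from enterprise data
    return steps


def run(msg: IncomingMessage) -> str:
    """Execute the plan, turning every modality into text before the final search."""
    context: List[str] = [msg.text] if msg.text else []
    for step in plan(msg):
        if step == "transcribe_audio":
            context.append(transcribe_audio(msg.audio_url))
        elif step == "describe_image":
            context.append(describe_image(msg.image_url))
        elif step == "search_knowledge_base":
            return search_knowledge_base(" ".join(context))
    raise RuntimeError("plan did not end with a search step")
```

Converting every modality to text first keeps the final retrieval step identical no matter how the user reached out.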

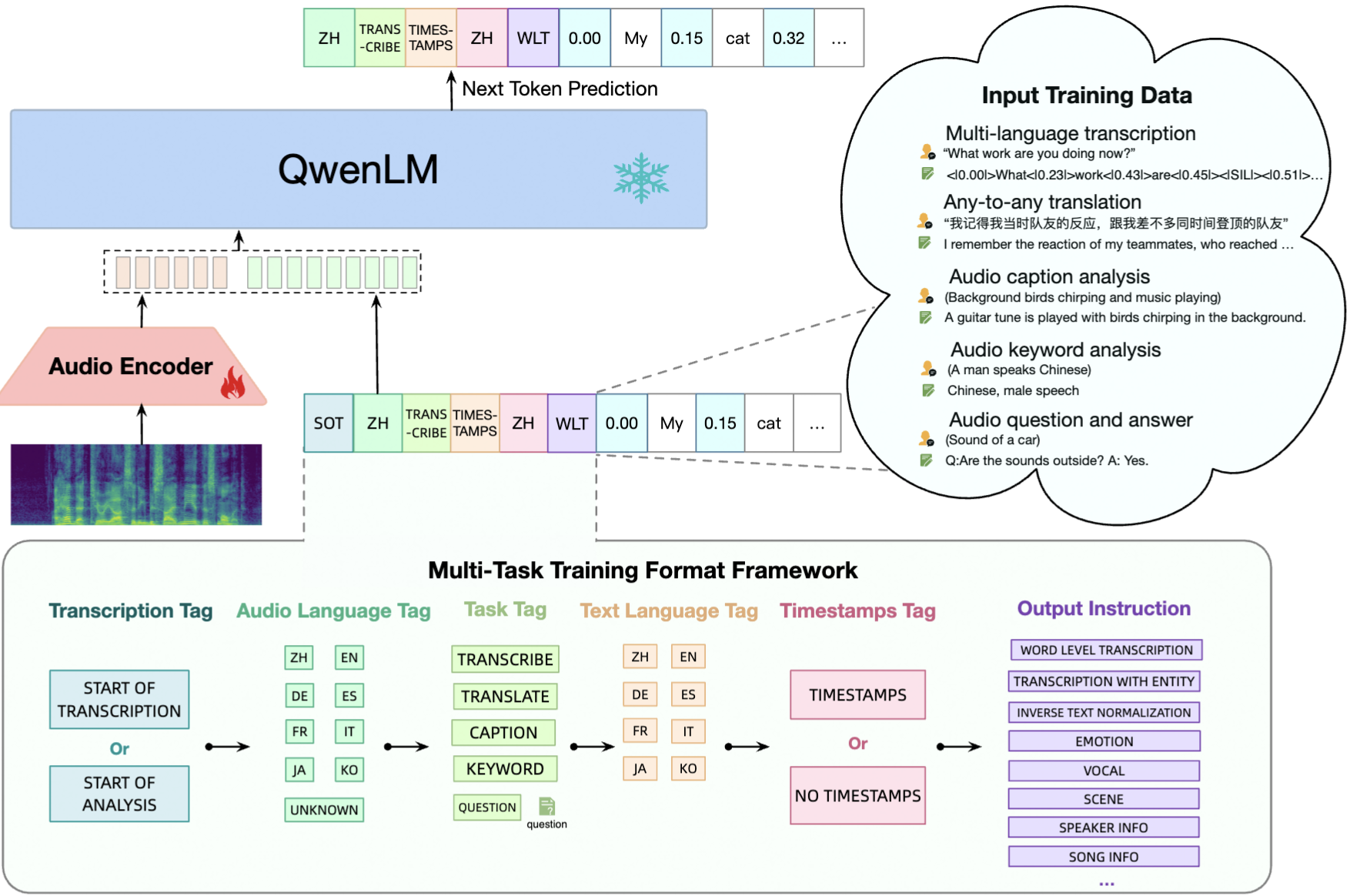

Qwen-Audio is more than an audio processing tool: it is an audio-language model that understands a wide range of sounds, from human speech to the subtleties of music. It can transcribe audio to text with high accuracy, which changes how we interact with machines when sound is the medium.
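Here is a minimal sketch of sending a clip to Qwen-Audio. It assumes the DashScope Python SDK (`pip install dashscope`) and its `MultiModalConversation.call(model=..., messages=...)` interface, with an API key in the `DASHSCOPE_API_KEY` environment variable. The model name `qwen-audio-turbo` and the prompt wording are assumptions; check the Model Studio documentation for the models and endpoint available in your region. Only the message builder runs offline. `transcribe` needs network access and credentials.

```python
from typing import Any, Dict, List


def build_audio_messages(audio_url: str, instruction: str) -> List[Dict[str, Any]]:
    """Build one user turn with an audio part followed by a text instruction."""
    return [
        {
            "role": "user",
            "content": [
                {"audio": audio_url},   # publicly reachable URL of the audio clip
                {"text": instruction},  # what the model should do with the clip
            ],
        }
    ]


def transcribe(audio_url: str, model: str = "qwen-audio-turbo"):
    """Send the request through the DashScope SDK (assumed installed and configured).

    The model name is an assumption; the raw SDK response object is returned as-is.
    """
    from dashscope import MultiModalConversation  # lazy import so the builder works offline

    messages = build_audio_messages(audio_url, "Transcribe this audio to text.")
    return MultiModalConversation.call(model=model, messages=messages)
```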

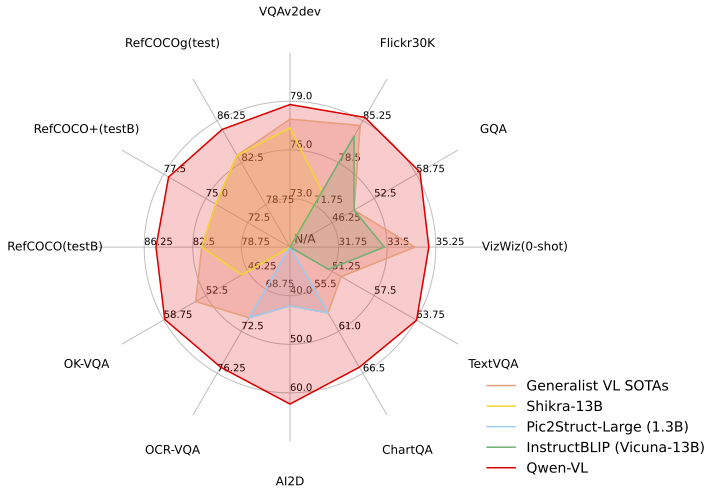

On the vision side, the Qwen-VL family includes Qwen-VL-Plus and Qwen-VL-Max, which are presented as matching or exceeding leading industry models in image understanding. They can recognize fine details in high-resolution images of over a million pixels and interpret complex visual scenes.
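The same message format can carry images. The sketch below assumes the DashScope Python SDK and uses `qwen-vl-max`, the model named above, although exact model identifiers may differ by region. It places one or more images in a single user turn, followed by a text question. As with the audio example, only the builder runs offline.

```python
from typing import Any, Dict, List


def build_vl_messages(image_urls: List[str], question: str) -> List[Dict[str, Any]]:
    """One user turn containing every image, followed by the text question."""
    content: List[Dict[str, str]] = [{"image": url} for url in image_urls]
    content.append({"text": question})
    return [{"role": "user", "content": content}]


def ask_about_images(image_urls: List[str], question: str, model: str = "qwen-vl-max"):
    """Send the request via the DashScope SDK (assumed installed, DASHSCOPE_API_KEY set)."""
    from dashscope import MultiModalConversation  # lazy import keeps the builder dependency-free

    return MultiModalConversation.call(model=model, messages=build_vl_messages(image_urls, question))
```

Passing several images in one turn lets the model compare them, for example "Which of these two products is blue?".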

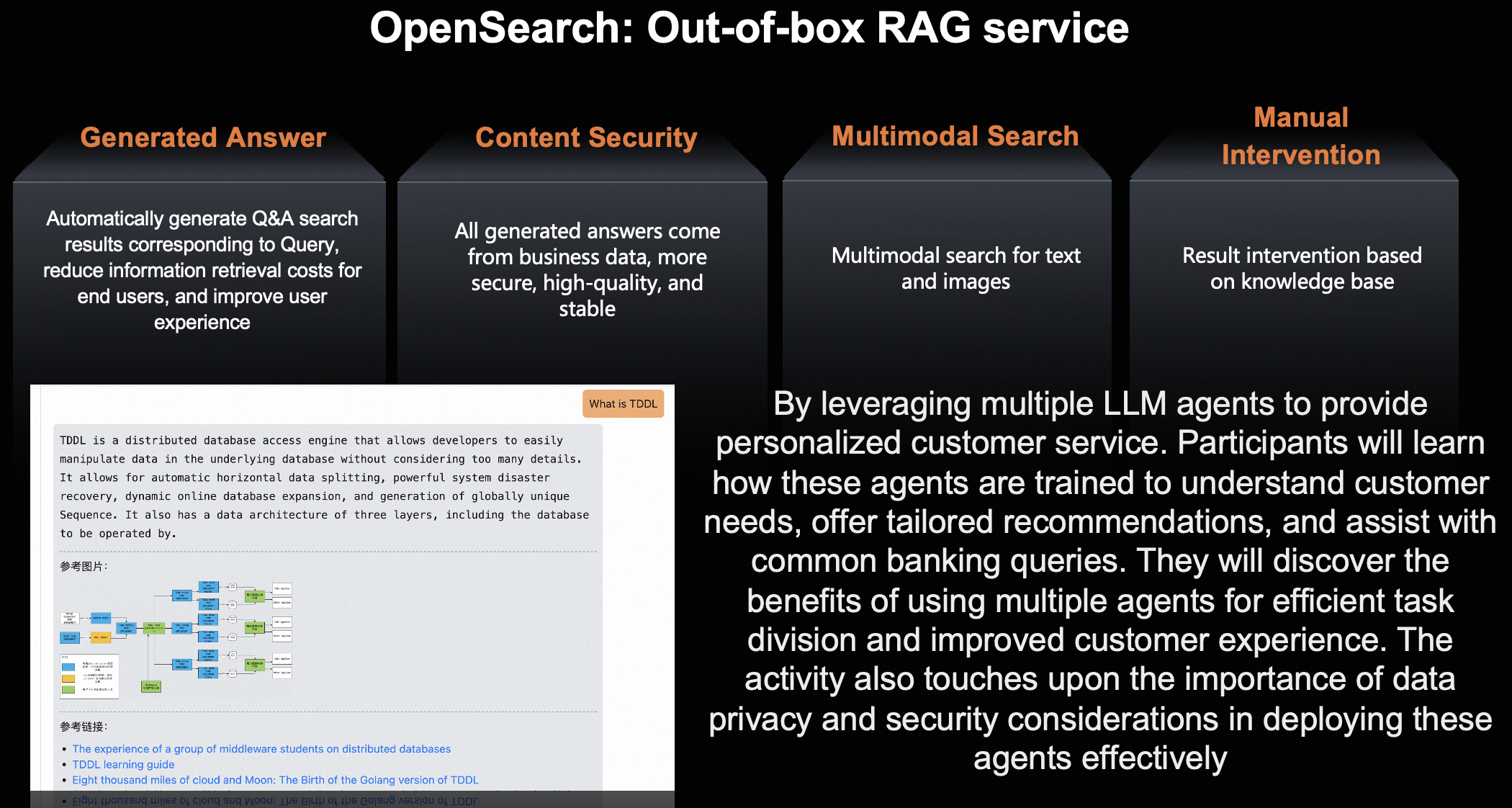

OpenSearch (LLM-Based Conversational Search Edition) helps enterprises build precise, industry-specific Q&A systems on top of their own data. The workflow is simple: vectorize your business data, index it, and let OpenSearch retrieve answers that are both accurate and relevant to your enterprise.
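To make the "vectorize, index, retrieve" idea concrete, here is a toy, dependency-free sketch of the retrieval step. It is not OpenSearch's API. The bag-of-words `embed` function is a stand-in for the neural embedding model a real deployment would use, and documents are ranked by cosine similarity to the query.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a real system uses a neural embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class TinyIndex:
    """Vectorize documents once at indexing time, then rank them against queries."""

    def __init__(self) -> None:
        self.docs: Dict[str, Tuple[str, Counter]] = {}

    def add(self, doc_id: str, text: str) -> None:
        self.docs[doc_id] = (text, embed(text))

    def search(self, query: str, k: int = 3) -> List[Tuple[str, float]]:
        """Return up to k (doc_id, score) pairs with a positive score, best first."""
        q = embed(query)
        scored = [(doc_id, cosine(q, vec)) for doc_id, (_, vec) in self.docs.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return [pair for pair in scored[:k] if pair[1] > 0]
```

For example, after indexing a refund policy and a shipping policy, `search("How long do refunds take?", k=1)` ranks the refund document first only because both contain the word "refunds". A real embedding model would also match paraphrases such as "money back", which is why semantic vectors are used in practice.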

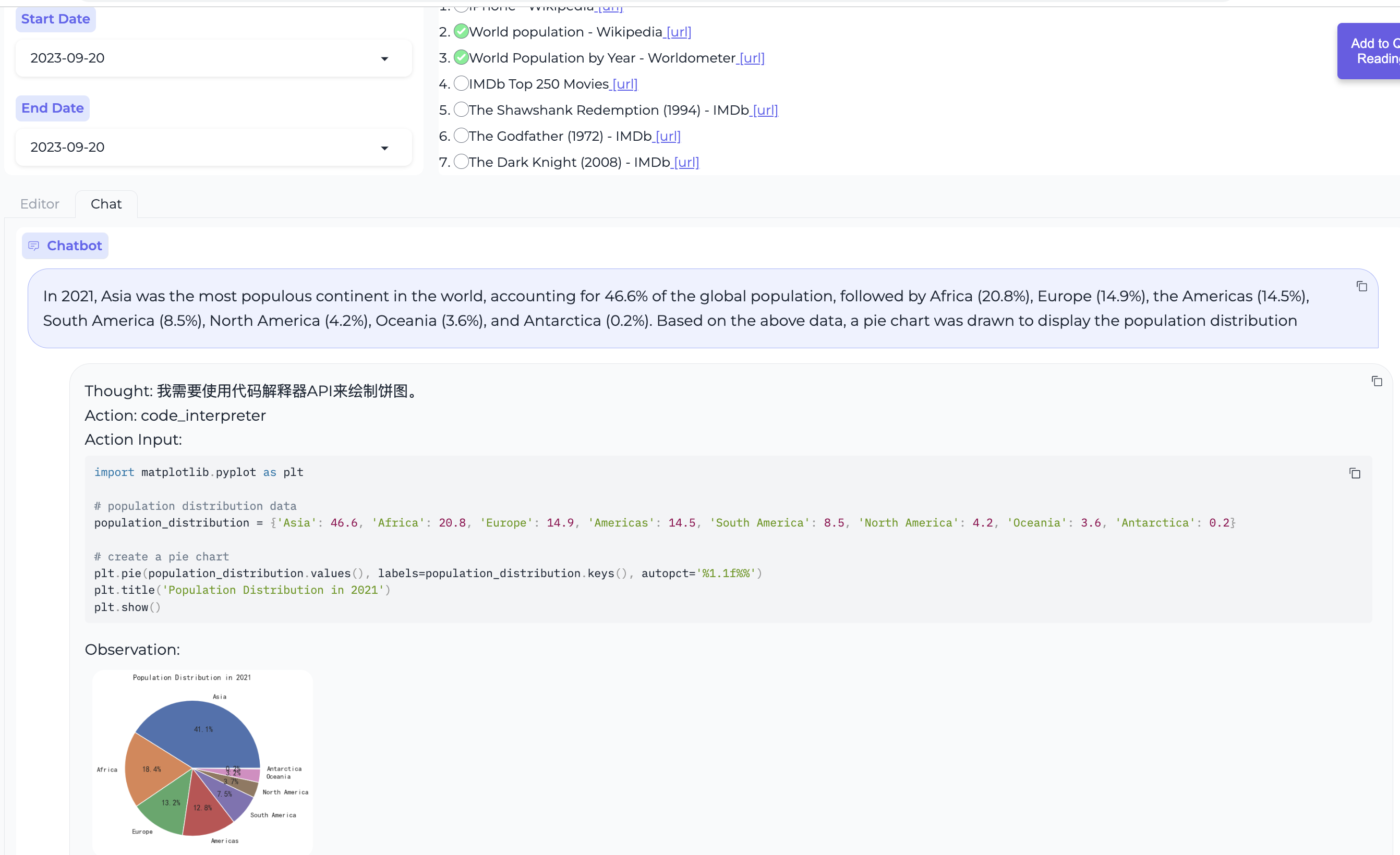

The Qwen-Agent framework is where these building blocks are assembled. With it, developers can build agents that not only follow instructions but also use tools, plan multi-step tasks, and keep memory across turns, so the agent can be adapted to your application's needs.
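The following framework-agnostic sketch shows the basic tool-use loop behind such agents. It does not use Qwen-Agent's own API, which has its own tool-registration mechanism. The `tool` decorator, the `convert_currency` tool, and `MiniAgent` are hypothetical. The LLM's planning decision is simulated by passing in the JSON tool call a model would emit, and `memory` records each result so later turns can refer back to it.

```python
import json
from typing import Callable, Dict, List

# Hypothetical tool registry; Qwen-Agent provides its own registration mechanism.
TOOLS: Dict[str, Callable[..., str]] = {}


def tool(name: str):
    """Register a function under `name` so the agent can call it by that name."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = fn
        return fn
    return register


@tool("convert_currency")
def convert_currency(amount: float, rate: float) -> str:
    return f"{amount * rate:.2f}"


class MiniAgent:
    def __init__(self) -> None:
        self.memory: List[Dict[str, str]] = []  # results the agent "remembers" across steps

    def step(self, llm_output: str) -> str:
        """Execute one model decision; `llm_output` is the JSON tool call an LLM would emit."""
        call = json.loads(llm_output)
        result = TOOLS[call["tool"]](**call["args"])  # raises KeyError for unknown tools
        self.memory.append({"tool": call["tool"], "result": result})
        return result
```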

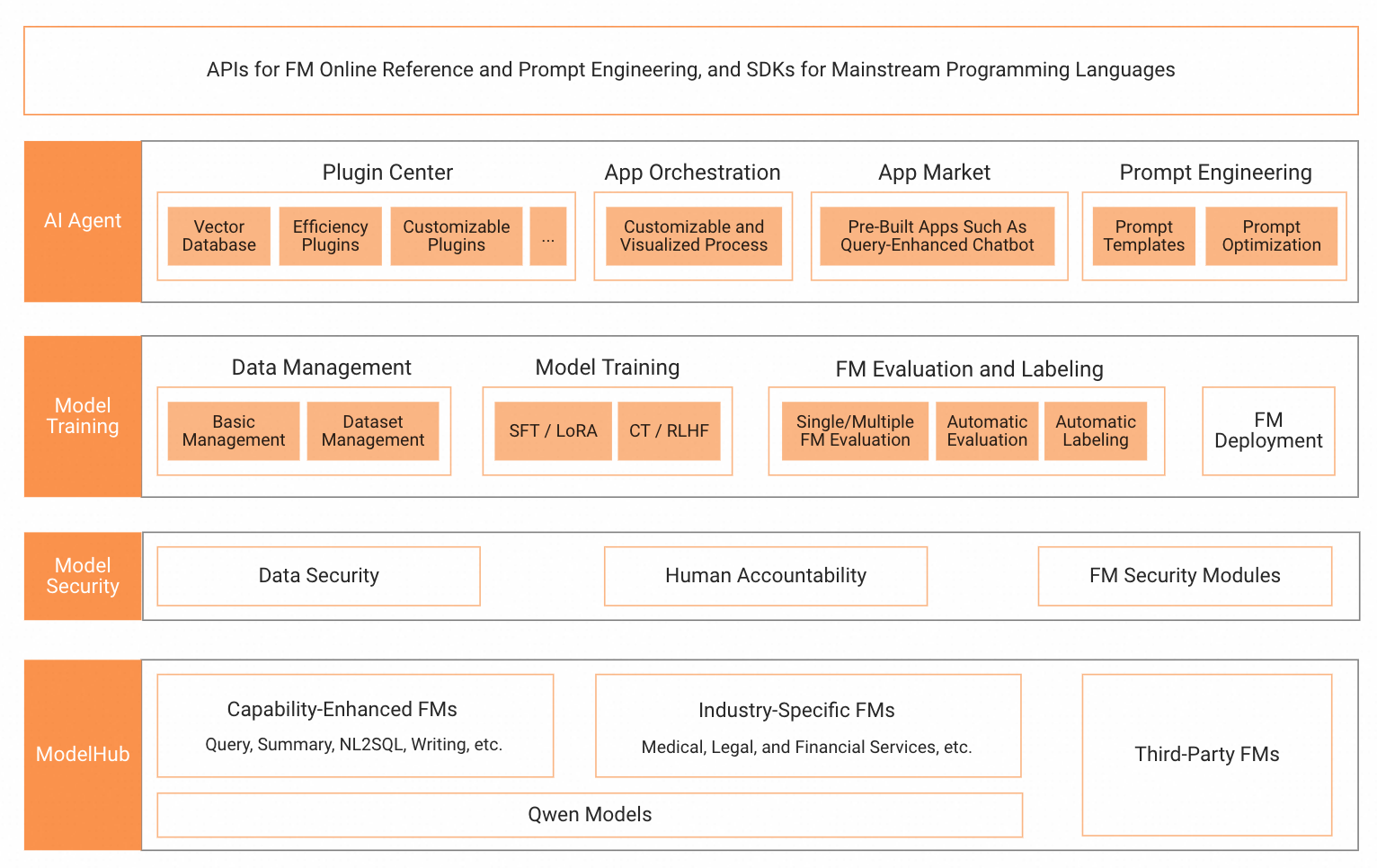

At the heart of this ecosystem lies Model Studio, Alibaba Cloud's generative AI development platform. This is where models are trained and tailored to the unique requirements of each application, and where the full AI lifecycle, from data management to deployment, comes together in a secure, responsible, and efficient manner.

The final act in our symphony is the creation of a unified API. Using Python and FlaskAPI, we will encapsulate the intelligence of our multimodal models into an accessible, scalable, and robust service. Deployed on ECS, this API will become the bridge that connects your applications to the intelligent orchestration of Qwen LLMs, ready to be engaged via DingTalk IM or any IM service of your preference.
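Flask-based frameworks such as FlaskAPI expose a standard WSGI application. To keep the sketch dependency-free, it writes the same kind of `POST /chat` endpoint as a plain WSGI callable from the Python standard library. `handle_message` is a hypothetical placeholder for the call into the multimodal pipeline, and the port `8000` is an arbitrary choice. On ECS you would also need to open that port in the instance's security group. `local_request` calls the app in-process, so you can try it without starting a server.

```python
import io
import json
from typing import Callable, Dict, Iterable, List, Optional, Tuple


def handle_message(payload: dict) -> dict:
    """Hypothetical entry point into the multimodal pipeline; it just echoes here."""
    return {"reply": f"Received: {payload.get('text', '')}"}


def app(environ: dict, start_response: Callable) -> Iterable[bytes]:
    """Minimal WSGI app exposing POST /chat with a JSON request and response."""
    if environ.get("PATH_INFO") != "/chat" or environ.get("REQUEST_METHOD") != "POST":
        start_response("404 Not Found", [("Content-Type", "application/json")])
        return [b'{"error": "not found"}']
    length = int(environ.get("CONTENT_LENGTH") or 0)
    payload = json.loads(environ["wsgi.input"].read(length) or b"{}")
    body = json.dumps(handle_message(payload)).encode("utf-8")
    start_response("200 OK", [("Content-Type", "application/json"),
                              ("Content-Length", str(len(body)))])
    return [body]


def local_request(path: str, method: str = "POST",
                  payload: Optional[dict] = None) -> Tuple[str, dict]:
    """Call `app` in-process (no server) and return (status, decoded JSON body)."""
    raw = json.dumps(payload or {}).encode("utf-8")
    environ = {"PATH_INFO": path, "REQUEST_METHOD": method,
               "CONTENT_LENGTH": str(len(raw)), "wsgi.input": io.BytesIO(raw)}
    captured: Dict[str, str] = {}

    def start_response(status: str, headers: List[Tuple[str, str]]) -> None:
        captured["status"] = status

    body = b"".join(app(environ, start_response))
    return captured["status"], json.loads(body)


if __name__ == "__main__":
    from wsgiref.simple_server import make_server
    # Listen on all interfaces so the service is reachable from outside the instance.
    make_server("0.0.0.0", 8000, app).serve_forever()
```

An IM integration such as a DingTalk bot would then POST user messages to `/chat` and relay the `reply` field back to the conversation.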

The overall steps for integrating Qwen Family LLMs with Model Studio are described below.

Detailed step-by-step tutorials will follow. By working through them, you will learn to build AI applications that can see, hear, and understand the world in ways that were previously unimaginable.

Multimodal AI isn't a distant dream — it's already unlocking new opportunities across various industries. Here are some real-world applications where the Qwen Family LLMs and Model Studio integration can make a significant impact:

Imagine a customer service system that not only understands text queries but can also interpret the customer's voice through Qwen-Audio and analyze images the customer sends through Qwen-VL, providing a more personalized and responsive service experience.

In healthcare, multimodal AI can revolutionize patient care. Qwen-VL can assist radiologists by identifying anomalies in medical imaging, while Qwen-Audio can transcribe and analyze patient interviews, and OpenSearch can deliver swift, accurate answers to complex medical inquiries.

Multimodal AI can tailor educational content to individual learning styles. Qwen-Audio can evaluate and give feedback on language pronunciation, Qwen-VL can analyze written assignments, and OpenSearch can provide students with in-depth explanations and study materials.
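One simple way to turn a transcript into pronunciation feedback is sketched below. It is an assumption for illustration, not how Qwen-Audio itself scores pronunciation. The learner reads a target sentence, Qwen-Audio (assumed) transcribes the recording, and Python's `difflib.SequenceMatcher` aligns the two word sequences. Target words that were replaced or dropped in the transcript are flagged as candidates for practice.

```python
import difflib
import re
from typing import List


def words(text: str) -> List[str]:
    """Lowercase word tokens, keeping apostrophes (e.g. "don't")."""
    return re.findall(r"[a-z']+", text.lower())


def pronunciation_feedback(target: str, transcript: str) -> List[str]:
    """List target words that did not come through in the transcript of the learner's speech."""
    t, h = words(target), words(transcript)
    missed: List[str] = []
    for op, i1, i2, _j1, _j2 in difflib.SequenceMatcher(a=t, b=h).get_opcodes():
        if op in ("replace", "delete"):  # target words heard as something else, or not at all
            missed.extend(t[i1:i2])
    return missed
```

For example, if the target is "The weather is lovely today" and the transcript reads "the whether is lovely today", the function returns `["weather"]`. A production system would more likely compare phonemes or use acoustic scores than rely on word-level text alone.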

In retail, multimodal AI can create immersive shopping experiences. Customers can search for products with natural-language voice commands, and Qwen-VL can recommend items based on visual cues, such as colors or styles, in a photo or video.

Law firms and compliance departments can leverage multimodal AI to sift through vast amounts of legal documents. Qwen-Agent, powered by OpenSearch, can provide precise legal precedents and relevant case law, streamlining legal research and decision-making.
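In a setup like this, passages retrieved by OpenSearch are typically packed into the prompt so the model answers from them and cites its sources. The sketch below shows that assembly step under stated assumptions. `build_grounded_prompt`, the `(source_id, text)` passage format, the character budget, and the instruction wording are all hypothetical, not OpenSearch or Qwen-Agent APIs.

```python
from typing import List, Tuple


def build_grounded_prompt(question: str, passages: List[Tuple[str, str]],
                          max_chars: int = 2000) -> str:
    """Pack retrieved (source_id, text) passages into a citation-style prompt.

    Passages are added in rank order until the character budget would be exceeded.
    """
    blocks: List[str] = []
    used = 0
    for source_id, text in passages:
        block = f"[{source_id}] {text.strip()}"
        if used + len(block) > max_chars:
            break  # stop before overflowing the model's context budget
        blocks.append(block)
        used += len(block)
    context = "\n".join(blocks)
    return (
        "Answer the question using only the sources below. "
        "Cite the source IDs in square brackets; if the sources are insufficient, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )
```

Asking the model to cite source IDs lets a lawyer check each claim against the original case law instead of trusting the summary.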

The convergence of multimodal AI technologies is paving the way for applications that can engage with the world in a human-like manner. The Qwen Family LLMs, each specialized in their domain, represent the building blocks of this intelligent future. With Model Studio as your development hub, the ability to create advanced, intuitive, and responsive AI applications is now at your fingertips.

Embark on this journey with us as we explore the limitless potential of multimodal AI. Stay tuned for "Multimodality Unleashed: Integrating Qwen Family LLMs with Model Studio," the tutorial that will transform the way you think about and implement AI in your projects.

Start your multimodal AI adventure here

Thank you for joining me on this exploration of multimodal AI. Your journey into the next dimension of artificial intelligence starts now.


More Posts by Farruh