In the era of Artificial Intelligence (AI), extracting meaningful knowledge from vast datasets has become critical for both businesses and individuals. Enter Retrieval-Augmented Generation (RAG), a technique that lets AI systems not only generate human-like text but also pull in relevant information in real time. This fusion produces responses that are both rich in context and precise in detail.

As we set sail on the exciting voyage through the vast ocean of Artificial Intelligence (AI), it's essential to understand the pillars that will be our guiding stars: Generative AI, Large Language Models (LLMs), LangChain, Hugging Face, and their practical application in Retrieval-Augmented Generation (RAG).

At the core of our journey lie Large Language Models (LLMs) and Generative AI - two potent engines driving the innovation vessel forward.

LLMs, such as Qwen, GPT, and others, are the titans of text, capable of understanding and generating human-like language on a massive scale. These models have been trained on extensive corpora of text data, allowing them to predict and produce coherent and contextually relevant strings of text. They are the backbone of many natural language processing tasks, from translation to content creation.

Generative AI is the artful wizard of creation within the AI realm. It encompasses technologies that generate new data instances that resemble the training data, such as images, music, and, most importantly for our voyage, text. In our context, Generative AI refers to the ability of AI to craft novel and informative responses, stories, or ideas that have never been seen before. It enables AI to not just mimic the past but to invent, innovate, and inspire.

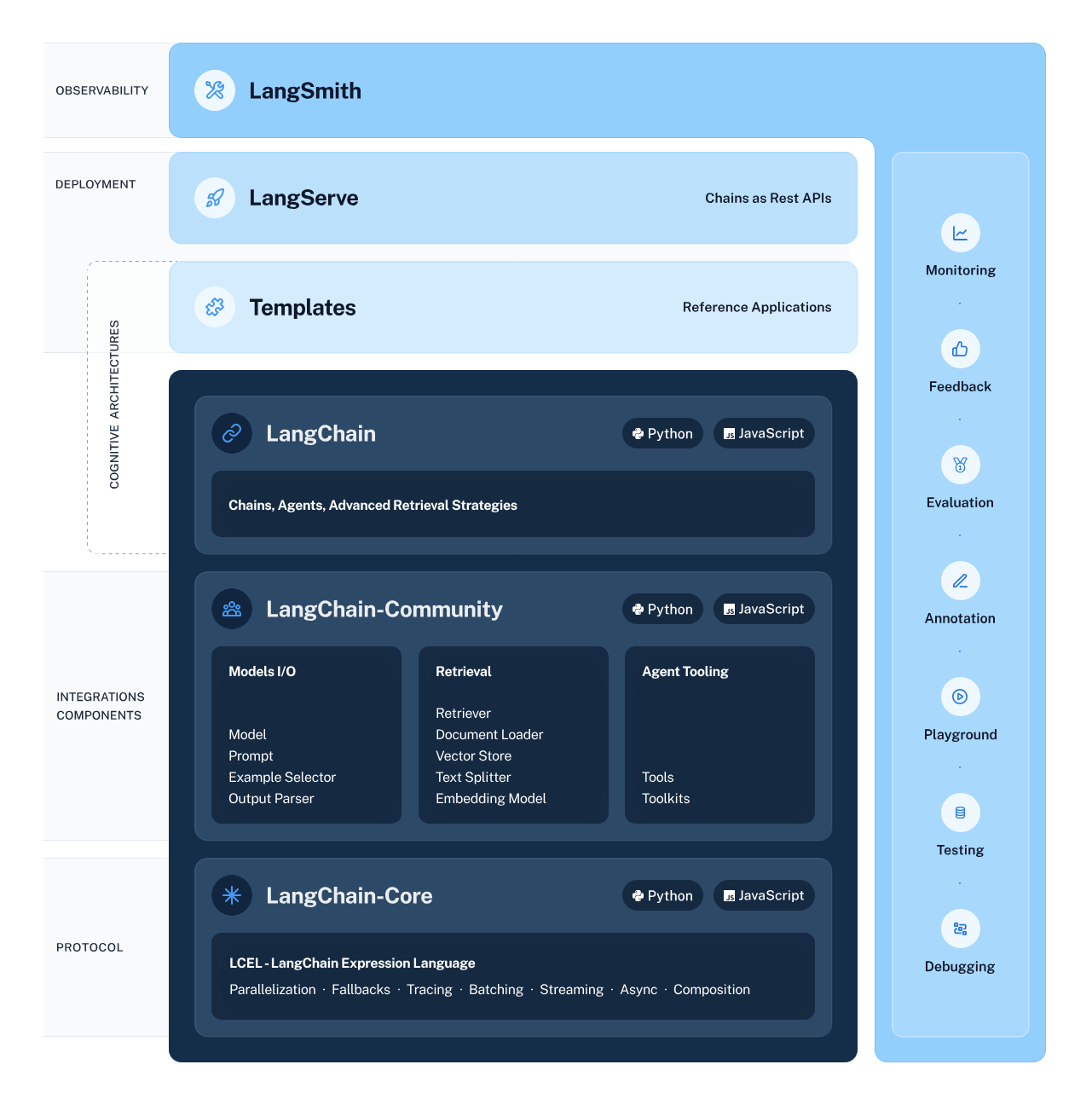

LangChain serves as the architect of our AI workflow, designing the structure that allows for seamless integration and interaction between various AI components. This framework simplifies the complex process of chaining together intelligent subsystems, including LLMs and retrieval systems, and managing the data that flows between them, making tasks such as information extraction and natural language understanding more accessible than ever before.

Hugging Face stands as a bustling metropolis where AI models thrive. This central hub offers a vast array of pre-trained models, serving as a fertile ground for machine learning exploration and application. To gain entry to this hub and its resources, you must create a Hugging Face account. Once you take this step, the doors to an expansive world of AI await you — just visit Hugging Face and sign up to begin your adventure.

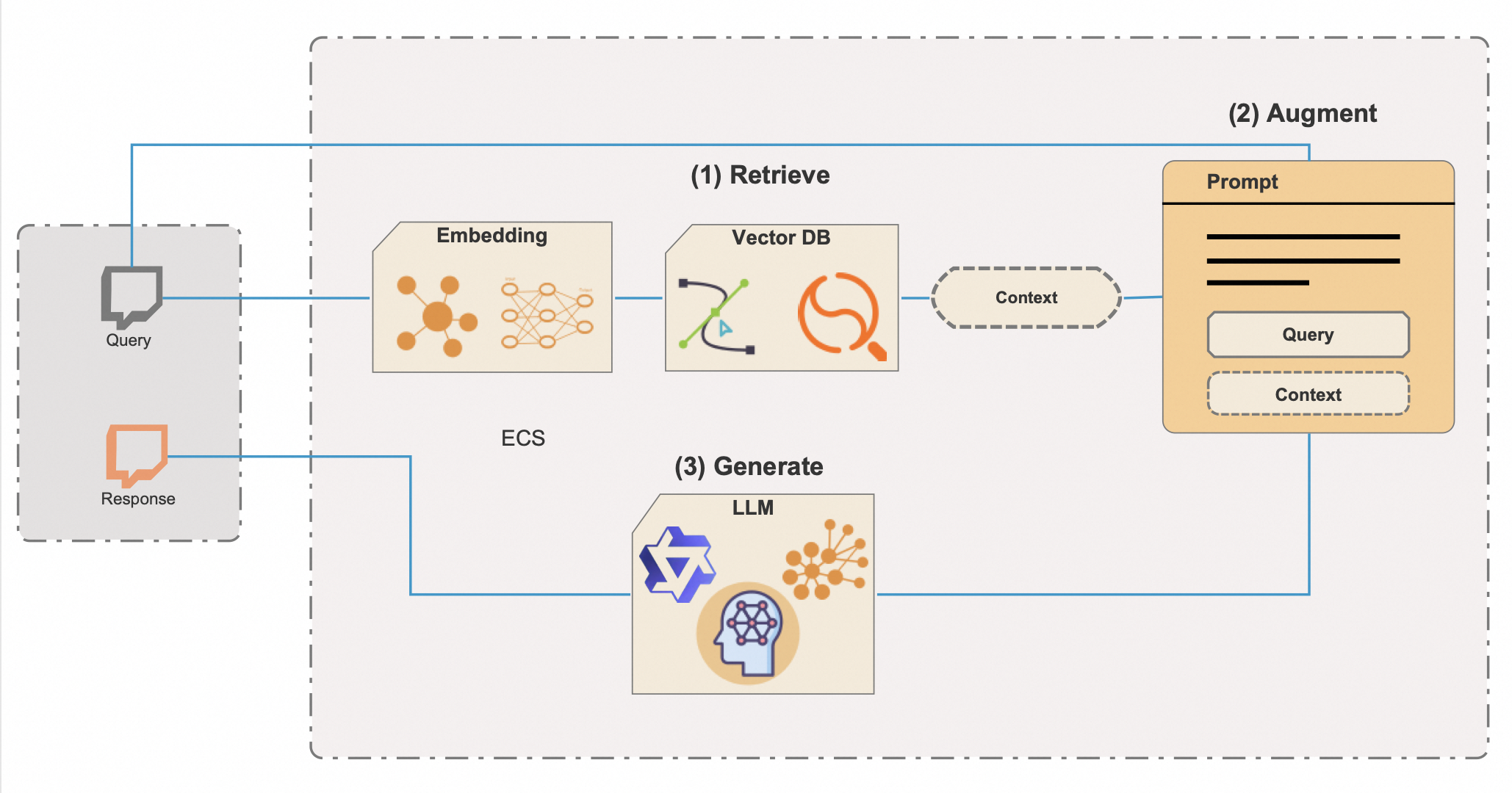

Retrieval-Augmented Generation (RAG) is a sophisticated AI technique that marries the inventive power of Generative AI with the precision of knowledge retrieval, creating a system that's not only articulate but also deeply informed. To reach its full potential and efficiency, RAG integrates vector databases, a powerful tool for speedily sifting through vast information repositories. In broad strokes, documents are converted to embedding vectors and stored in the database; at query time the question is embedded the same way, the most similar entries are retrieved, and the model generates its answer with that retrieved text as context.

The integration of vector databases is key to RAG's efficiency. Traditional metadata search methods can be slower and less precise, but vector databases facilitate near-instantaneous retrieval of contextually relevant information, even from extremely large datasets. This approach not only saves valuable time but also ensures that the AI's responses are grounded in the most appropriate and current information available.

RAG's prowess is especially advantageous in applications like chatbots, digital assistants, and sophisticated research tools: anywhere the delivery of precise, reliable, and contextually grounded information is crucial. It's not simply about crafting responses that sound convincing; it's about generating content anchored in verifiable data and real-world knowledge.

Armed with an enriched comprehension of LangChain, Hugging Face, LLMs, GenAI, and the vector database-enhanced RAG, we stand on the brink of a coding adventure that will bring these technologies to life. The Python script we'll delve into represents the synergy of these elements, demonstrating an AI system capable of responding with not just creativity and context but also with a depth of understanding once thought to be the domain of science fiction. Prepare to code and experience the transformative power of RAG with vector databases.

Before we set sail on this tech odyssey, let's make sure you've got all your ducks in a row:

Got all that? Fabulous! Let's get our hands dirty (figuratively, of course).

By carefully managing your Python dependencies, you ensure that your AI project is built on a stable and reliable foundation. With the dependencies in place and the environment set up correctly, you're all set to run the script and witness the power of RAG and LangChain in action.
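As a quick sanity check before running the script, a small sketch like the one below can report which packages are still missing. The module names are taken from the script's own imports; faiss and sentence_transformers are assumptions added here because the FAISS vector store and HuggingFaceEmbeddings rely on them at runtime, and missing_modules is a hypothetical helper name.

```python
from importlib.util import find_spec

# Top-level modules the script imports. "faiss" and "sentence_transformers"
# are assumed here because the FAISS vector store and HuggingFaceEmbeddings
# need them at runtime.
REQUIRED_MODULES = [
    "torch",
    "transformers",
    "langchain_core",
    "langchain_community",
    "dotenv",
    "faiss",
    "sentence_transformers",
]


def missing_modules(names):
    """Return the names from `names` that cannot be found in this environment."""
    return [name for name in names if find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing packages for modules:", ", ".join(missing))
    else:
        print("All required modules are importable.")
```

Any module reported as missing needs to be installed in the active environment before the script will run.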

Now, you can execute the Python script provided in the repository to see RAG in action.

Before we can embark on our exploration of AI with the LangChain framework and Hugging Face's Transformers library, it's crucial to establish a secure and well-configured environment. This preparation involves importing the necessary libraries and managing sensitive information such as API keys through environment variables.

```python
from torch import cuda
from langchain_community.vectorstores import FAISS
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.runnables import RunnablePassthrough
from langchain_community.embeddings.huggingface import HuggingFaceEmbeddings
from transformers import AutoModelForCausalLM, AutoTokenizer
from langchain_community.llms.huggingface_pipeline import HuggingFacePipeline
from transformers import pipeline
from dotenv import load_dotenv

load_dotenv()
```

When working with AI models from Hugging Face, you often need access to the Hugging Face API, which requires an API key. This key is your unique identifier when making requests to Hugging Face services, allowing you to load models and use them in your applications.

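Here's what you need to do to securely set up your environment: store the key in a .env file, which load_dotenv() reads when the script starts, in the following form:

```
HUGGINGFACE_API_KEY=your_api_key_here
```

Replace your_api_key_here with the actual API key you obtained from Hugging Face.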
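Once load_dotenv() has run, the key is available as an ordinary environment variable. The sketch below shows one way to read it and fail early with a clear message if it is missing; get_hf_api_key is a hypothetical helper, not part of the original script or of any library.

```python
import os


def get_hf_api_key(env=os.environ):
    """Return the Hugging Face API key, or raise a clear error if it is unset."""
    key = env.get("HUGGINGFACE_API_KEY", "").strip()
    # Treat the untouched placeholder value as "not configured".
    if not key or key == "your_api_key_here":
        raise RuntimeError(
            "HUGGINGFACE_API_KEY is not set; add it to your .env file "
            "and call load_dotenv() before reading it."
        )
    return key
```

Reading the key through a single function keeps it out of the source code and makes a misconfigured environment obvious at startup rather than at the first API call.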

```python
modelPath = "sentence-transformers/all-mpnet-base-v2"
device = 'cuda' if cuda.is_available() else 'cpu'
model_kwargs = {'device': device}
```

Here, we set the path to the pre-trained model that will be used for embeddings. We also configure the device setting, utilizing a GPU if available for faster computation, or defaulting to CPU otherwise.

```python
embeddings = HuggingFaceEmbeddings(
    model_name=modelPath,
    model_kwargs=model_kwargs,
)

# Made-up data, just for fun (but who knows what the future holds)
vectorstore = FAISS.from_texts(
    ["Harrison worked at Alibaba Cloud"], embedding=embeddings
)

retriever = vectorstore.as_retriever()
```

We initialize an instance of HuggingFaceEmbeddings with our chosen model and configuration. Then, we create a vectorstore using FAISS, which allows us to perform efficient similarity searches in high-dimensional spaces. We also instantiate a retriever that will fetch information based on the embeddings.
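To build some intuition for what a similarity search does, here is a minimal, dependency-free sketch. It is not how FAISS is implemented: the three-dimensional vectors are hand-made stand-ins for real embeddings, the second text is invented for contrast, and l2_distance and nearest_texts are hypothetical helper names.

```python
import math


def l2_distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_texts(query_vec, store, k=1):
    """Return the k texts in `store` whose vectors lie closest to `query_vec`.

    `store` is a list of (text, vector) pairs; a real vector store replaces
    this linear scan with an optimized index.
    """
    ranked = sorted(store, key=lambda item: l2_distance(query_vec, item[1]))
    return [text for text, _ in ranked[:k]]


# Hand-made 3-dimensional "embeddings", purely illustrative.
toy_store = [
    ("Harrison worked at Alibaba Cloud", [0.9, 0.1, 0.0]),
    ("Bananas are rich in potassium", [0.0, 0.2, 0.9]),
]
toy_query = [0.8, 0.2, 0.1]  # stands in for the embedded question

print(nearest_texts(toy_query, toy_store))
```

The query vector sits much closer to the first entry, so that text is returned. A real embedding model produces vectors with hundreds of dimensions, but the principle of ranking by distance is the same.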

```python
template = """Answer the question based only on the following context:
{context}

Question: {question}
"""

prompt = ChatPromptTemplate.from_template(template)
```

Here, we define a chat prompt template that will be used to structure the interaction with the AI. It includes placeholders for context and a question, which will be dynamically filled during the execution of the chain.
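To see what the filled-in prompt looks like, the sketch below performs the substitution with Python's built-in str.format. This is only a stand-in for illustration: ChatPromptTemplate additionally wraps the result in a chat message, and in the real chain the context comes from the retriever rather than being typed in by hand.

```python
template = """Answer the question based only on the following context:
{context}

Question: {question}
"""

# Plain str.format stands in for the prompt template's substitution step.
filled = template.format(
    context="Harrison worked at Alibaba Cloud",
    question="Where did Harrison work?",
)
print(filled)
```

The model never sees the placeholders, only the final text with the retrieved context and the user's question in place.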

In the world of AI and natural language processing, the tokenizer and language model are the dynamic duo that turn text into meaningful action. The tokenizer breaks down language into pieces that the model can understand, while the language model predicts and generates language based on these inputs. In our journey, we're using Hugging Face's AutoTokenizer and AutoModelForCausalLM classes to leverage these capabilities. But it's important to remember that one size does not fit all when it comes to choosing a language model.

The size of the model is a critical factor to consider. Larger models like Qwen-72B have more parameters, which generally means they can understand and generate more nuanced text. However, they also require more computational power. If you're equipped with high-end GPUs and sufficient memory, you might opt for these larger models to get the most out of their capabilities.

On the other hand, smaller models like Qwen-1.8B are much more manageable for standard computing environments. A model this small may even run on some mobile and edge devices, particularly when quantized. While such models may not capture the intricacies of language as well as their larger counterparts, they still provide excellent performance and are more accessible for those without specialized hardware.

Another point to consider is the nature of your task. If you're building a conversational AI, using a chat-specific model such as Qwen-7B-Chat might yield better results as these models are fine-tuned for dialogues and can handle the nuances of conversation better than the base models.

Larger models not only demand more from your hardware but may also incur higher costs if you're using cloud-based services to run your models. Each inference takes up processing time and resources, which can add up if you're working with a massive model.

When deciding which model to use, weigh the benefits of a larger model against the available resources and the specific requirements of your project. If you're just starting out or developing on a smaller scale, a smaller model might be the best choice. As your needs grow, or if you require more advanced capabilities, consider moving up to a larger model.
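To make the hardware trade-off more concrete, here is a back-of-the-envelope sketch that estimates the memory needed for the weights alone. The parameter counts are the nominal figures from the model names (actual counts differ somewhat), and weight_memory_gb is a hypothetical helper; real usage is higher once activations and caches are included.

```python
def weight_memory_gb(num_params, bytes_per_param=2):
    """Approximate memory for model weights alone, in gigabytes (10**9 bytes).

    bytes_per_param: 4 for float32, 2 for float16/bfloat16, 1 for 8-bit.
    Activations, the KV cache, and framework overhead are not included.
    """
    return num_params * bytes_per_param / 1e9


# Nominal parameter counts taken from the model names (an approximation).
for name, params in [("Qwen-1.8B", 1.8e9), ("Qwen-7B", 7e9), ("Qwen-72B", 72e9)]:
    print(f"{name}: ~{weight_memory_gb(params):.1f} GB of weights in 16-bit precision")
```

Even as a rough estimate, this shows why the largest model calls for multiple high-end GPUs while the smallest fits comfortably on a single consumer card.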

Remember, the Qwen series is open-source, so you can experiment with different models to see which one fits your project best. Here's how the model selection part of the script could look if you decided to use a different model:

```python
# This can be changed to any of the Qwen models based on your needs and resources
model_name_or_path = "Qwen/Qwen-7B"

tokenizer = AutoTokenizer.from_pretrained(model_name_or_path, trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained(
    model_name_or_path,
    device_map="auto",
    trust_remote_code=True,
)
```

We load a tokenizer and a causal language model from Hugging Face with the AutoTokenizer and AutoModelForCausalLM classes, respectively. These components are crucial for processing natural language inputs and generating outputs.

This pipeline is designed to generate text using a language model and a tokenizer that have been previously loaded. Let's break down the parameters and understand their roles in controlling the behavior of the text generation:

```python
pipe = pipeline(
    "text-generation",
    model=model,
    tokenizer=tokenizer,
    max_new_tokens=8192,
    do_sample=True,
    temperature=0.7,
    top_p=0.95,
    top_k=40,
    repetition_penalty=1.1,
)

hf = HuggingFacePipeline(pipeline=pipe)
```

After setting up the pipeline with the desired parameters, the final line wraps the pipe object in a HuggingFacePipeline. This class is part of the LangChain framework and allows the pipeline to be integrated seamlessly into LangChain's workflow for building AI applications. By wrapping the pipeline, we can now use it in conjunction with other LangChain components, such as retrievers and parsers, to create more complex AI systems.

The careful selection of these parameters allows you to fine-tune the behavior of the text generation to suit the specific needs of your application, whether you're looking for more creative and varied outputs or aiming for consistently coherent and focused text.
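As a rough illustration of what temperature, top_k, and top_p do, the sketch below applies them to a toy distribution over four made-up tokens. It is a simplified model written for this article, not the implementation used by the transformers library, and filter_distribution is a hypothetical name; repetition_penalty and max_new_tokens are not modeled.

```python
import math


def filter_distribution(logits, temperature=0.7, top_k=40, top_p=0.95):
    """Return {token: probability} after temperature, top-k, and top-p filtering."""
    # Temperature: divide the logits before softmax. Values below 1 sharpen
    # the distribution; values above 1 flatten it.
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())
    exps = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(exps.values())
    probs = {tok: e / total for tok, e in exps.items()}

    # Top-k: keep only the k most likely tokens, then renormalize.
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:top_k]
    norm = sum(p for _, p in ranked)
    ranked = [(tok, p / norm) for tok, p in ranked]

    # Top-p (nucleus): keep the smallest prefix whose cumulative
    # probability reaches top_p.
    kept, cumulative = [], 0.0
    for tok, p in ranked:
        kept.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break

    norm = sum(p for _, p in kept)
    return {tok: p / norm for tok, p in kept}


toy_logits = {"cloud": 2.0, "sky": 1.0, "sea": 0.0, "shoe": -5.0}
print(filter_distribution(toy_logits))
```

With do_sample=True, the next token is drawn at random from a filtered distribution of this kind, so lower temperature and smaller top_k or top_p make the output more focused, while higher values make it more varied.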

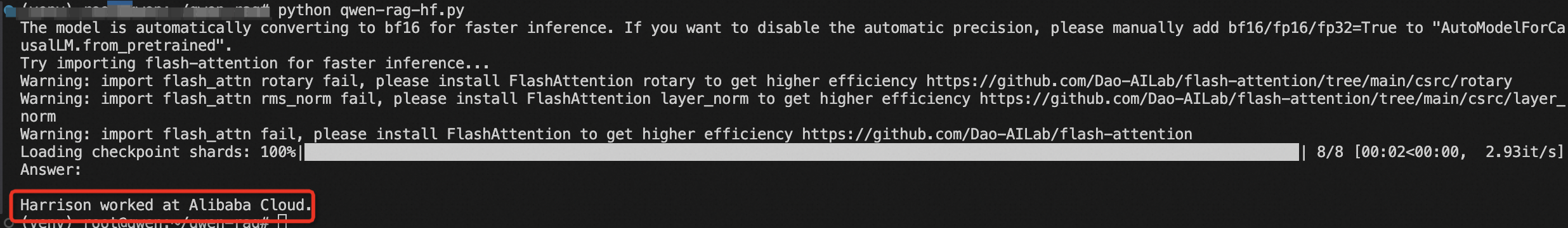

The code snippet below represents a complete end-to-end RAG system: the initial question prompts a search for relevant information, which is then used to augment the generative process, resulting in an informed and contextually relevant answer to the input question.

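1. Chain Construction:

```python
chain = (
    {"context": retriever, "question": RunnablePassthrough()}
    | prompt
    | hf
    | StrOutputParser()
)
```

Here's what's happening in this part of the code: the dictionary sends the incoming question to the retriever, whose results become the context, while RunnablePassthrough() forwards the question itself unchanged. The prompt template is filled with both values, the hf pipeline generates text from the filled prompt, and StrOutputParser() returns that output as a plain string.

The | (pipe) operator comes from the LangChain Expression Language (LCEL). It composes the steps in a pipeline pattern, much like function composition: the output of one step becomes the input to the next.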
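The pipeline pattern itself is easy to reproduce in plain Python by overloading the | operator. The sketch below is a toy written for illustration, not LangChain's implementation; the Step class and the two example steps are hypothetical.

```python
class Step:
    """A tiny stand-in for a runnable: wraps a function and supports `|`."""

    def __init__(self, func):
        self.func = func

    def invoke(self, value):
        return self.func(value)

    def __or__(self, other):
        # a | b builds a new Step that runs a, then feeds its output to b.
        return Step(lambda value: other.invoke(self.invoke(value)))


shout = Step(str.upper)
exclaim = Step(lambda text: text + "!")
toy_chain = shout | exclaim

print(toy_chain.invoke("where did harrison work"))  # WHERE DID HARRISON WORK!
```

Each | produces a new composed step, which is why a chain of several stages can still be invoked with a single call.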

2. Chain Invocation:

```python
results = chain.invoke("Where did Harrison work?")
```

In this line, the chain is being invoked with a specific question: "Where did Harrison work?" This invocation triggers the entire sequence of operations defined in the chain. The retriever searches for relevant information, which is then passed along with the question through the prompt and into the Hugging Face model. The model generates a response based on the inputs it receives.

3. Printing Results:

```python
print(results)
```

The generated response is then parsed by the StrOutputParser() and returned as the final result, which is then printed to the console or another output.

Finally, we construct the RAG chain by linking the retriever, prompt template, Hugging Face pipeline, and output parser. We invoke the chain with our question, and the results are printed.
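If you want to trace the retrieve, augment, and generate stages without downloading any model, a dependency-free mock-up such as the following may help. Everything in it is a stand-in chosen for illustration: the word-overlap retriever replaces the vector search, fake_llm simply echoes the context instead of generating text, and the second document is invented for contrast.

```python
def retrieve(question, documents):
    """Stand-in retriever: pick the document sharing the most words with the question."""
    q_words = set(question.lower().replace("?", "").split())
    return max(documents, key=lambda doc: len(q_words & set(doc.lower().split())))


def build_prompt(context, question):
    """Fill the same prompt layout used by the chain."""
    return (
        "Answer the question based only on the following context:\n"
        f"{context}\n\nQuestion: {question}\n"
    )


def fake_llm(prompt):
    """Stand-in for the language model: echoes the context line of the prompt."""
    return prompt.splitlines()[1]


def toy_rag(question, documents):
    context = retrieve(question, documents)   # 1. retrieval
    prompt = build_prompt(context, question)  # 2. augmentation
    return fake_llm(prompt)                   # 3. generation


docs = ["Harrison worked at Alibaba Cloud", "Bananas are rich in potassium"]
print(toy_rag("Where did Harrison work?", docs))
```

Swapping each stand-in for its real counterpart (the FAISS retriever, the prompt template, and the Qwen pipeline) gives the chain built in this article.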

You've just taken a giant leap into the world of AI with RAG and LangChain. By understanding and running this code, you're unlocking the potential to create intelligent systems that can reason and interact with information in unprecedented ways.
