By Farruh

Managing a delivery business is challenging, with many tasks that require constant attention. From managing drivers to tracking deliveries, many moving parts must be handled well to keep customers satisfied. Today's customers expect fast, efficient service, and businesses need to keep up with new technologies to deliver a seamless experience. Generative AI (GenAI) and vector database retrieval are two technologies that a delivery business can integrate to offer customers a more personalized and efficient experience. This article explores how integrating GenAI and vector database retrieval can benefit a delivery business and describes use cases for vector database retrieval.

Generative AI refers to artificial intelligence (AI) designed to generate new content (such as images, videos, text, and music) with minimal human intervention. It uses deep learning algorithms and neural networks to learn patterns and rules from large datasets and then generates new content based on what it has learned. Generative AI can be used in many fields, including art, music, gaming, and marketing, and it could transform creative industries by enabling machines to produce original content on their own.

A large language model (LLM) is a type of AI model trained on vast amounts of text data to generate human-like language. It uses deep learning techniques to learn the statistical patterns and relationships between words, phrases, and sentences in a given language.

LLMs can be used for a variety of natural language processing (NLP) tasks, such as text completion, text classification, sentiment analysis, and language translation. They work by breaking text into smaller units called tokens (such as words, subwords, or characters) and modeling the relationships between them.

Large language models have many applications, such as chatbots, content generation, and language translation, and they are likely to become increasingly important in the AI field as demand for more advanced NLP solutions grows.

Vector database retrieval is a technology that retrieves information from a database based on mathematical similarity. Each data item is represented as a vector (typically an embedding produced by a machine learning model) in a multi-dimensional space, and a query is answered by finding the stored vectors closest to the query vector under a similarity measure such as cosine similarity. This enables businesses to retrieve relevant information from large databases quickly and accurately.
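To make the idea concrete, here is a minimal brute-force sketch of similarity search, assuming the items have already been turned into embedding vectors. The three-dimensional vectors and the `documents` mapping are hypothetical toy data; real embedding models produce hundreds of dimensions, and production vector databases typically use approximate nearest-neighbour indexes rather than scoring every stored vector.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, so treat it as dissimilar
    return dot / (norm_a * norm_b)


def top_k(query: list[float], items: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Brute-force nearest-neighbour search: score every stored vector, keep the k best."""
    scored = [(key, cosine_similarity(query, vec)) for key, vec in items.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical 3-dimensional "embeddings" used only for illustration.
documents = {
    "parcel delayed at depot": [0.9, 0.1, 0.0],
    "driver arrived at location": [0.1, 0.9, 0.1],
    "refund for damaged item": [0.0, 0.2, 0.9],
}

print(top_k([0.8, 0.2, 0.0], documents, k=1))
```

The query vector points in nearly the same direction as "parcel delayed at depot", so that entry is returned first. Cosine similarity compares direction rather than magnitude, which is a common choice for text embeddings.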

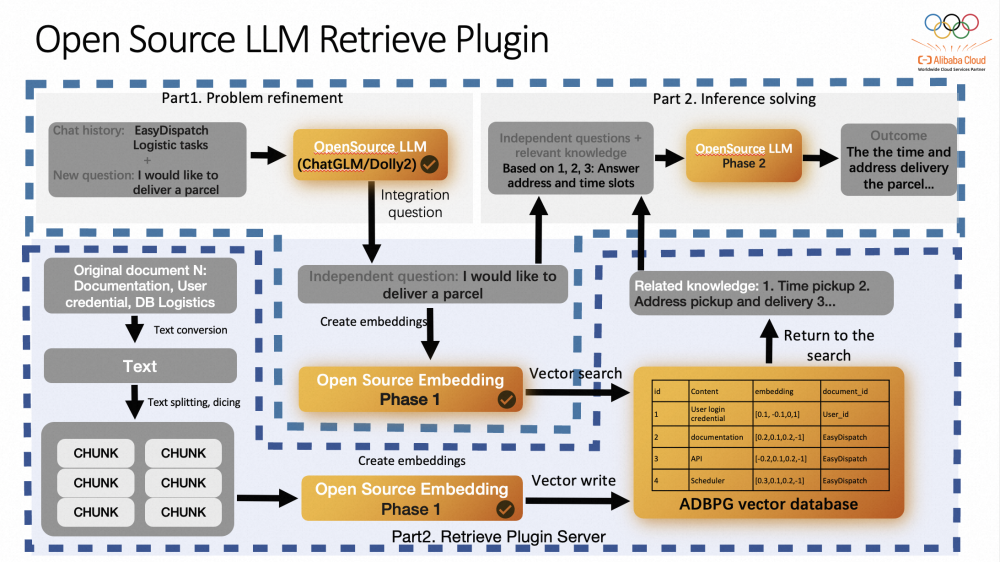

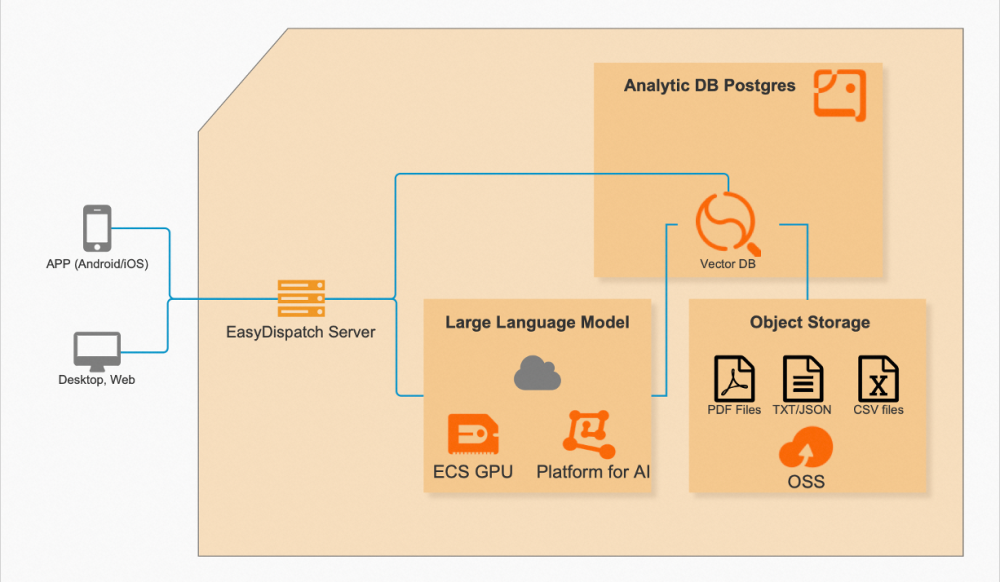

Here is a step-by-step explanation of the LLM Retrieve Plugin's overall architecture, using EasyDispatch and the task of delivering a parcel as an example:

The Open Source LLM Retrieve Plugin uses open-source embedding and vector search technology to help with logistics tasks such as delivering parcels. It searches a database of logistics information and supplies the related knowledge to the LLM, so the LLM can give accurate, relevant, and comprehensive answers to the user's questions.
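The retrieve-then-answer flow can be sketched as follows. This is a minimal illustration, not the plugin's actual code: the bag-of-words `embed` function is a hypothetical stand-in for a real embedding model, the knowledge-base sentences are invented examples, and the call to the LLM is omitted, so the sketch only builds the prompt that would be sent to it.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[term] * b[term] for term in a)  # missing terms count as 0
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_prompt(question: str, knowledge_base: list[str], k: int = 2) -> str:
    """Retrieve the k passages most similar to the question and add them as context."""
    q = embed(question)
    ranked = sorted(knowledge_base, key=lambda doc: cosine(q, embed(doc)), reverse=True)
    context = "\n".join(f"- {doc}" for doc in ranked[:k])
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


# Invented logistics knowledge used only for this example.
knowledge_base = [
    "parcels over 30 kg must be delivered by van",
    "drivers start shifts at the central depot at 8 am",
    "customers can reschedule a delivery up to 2 hours before arrival",
]

prompt = build_prompt("can I reschedule my delivery", knowledge_base, k=1)
print(prompt)
```

Grounding the prompt in retrieved passages is what lets the LLM answer from the business's own logistics data rather than only from what it learned during training.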

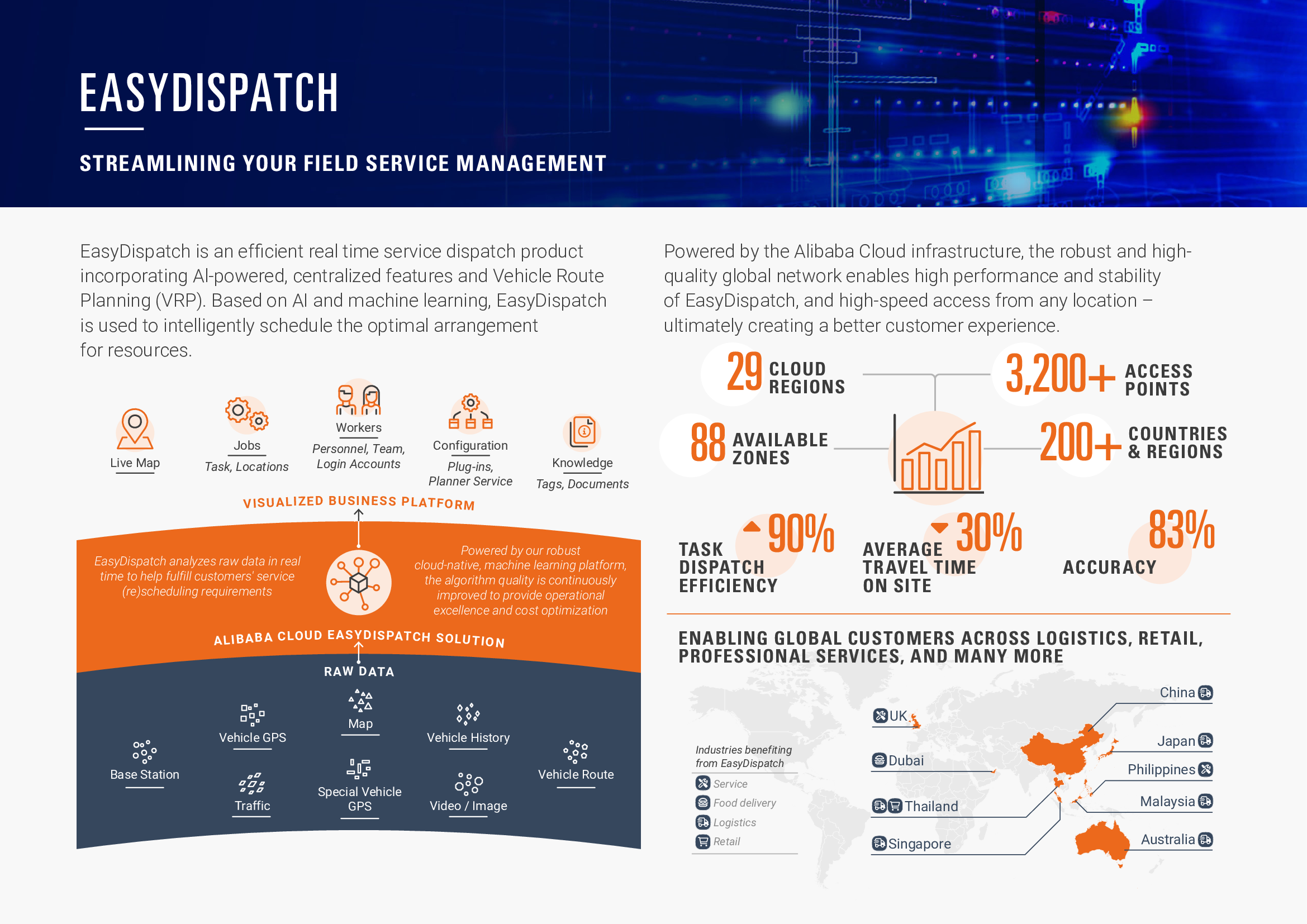

EasyDispatch is a big data and AI-powered logistics management platform developed by Alibaba Cloud. It enables businesses to optimize their logistics operations by providing real-time parcel tracking and analysis, intelligent dispatching, and predictive maintenance capabilities. The platform uses advanced algorithms to optimize delivery routes, minimize transportation costs, and improve overall efficiency. It also offers a range of features (such as order management, driver management, and customer service management), making it a comprehensive solution for businesses looking to streamline their logistics operations.

EasyDispatch can use vector retrieval and LLM technology because Alibaba Cloud offers a range of AI and big data services to its customers. Vector retrieval finds similar items in large datasets by comparing their mathematical representations as vectors, while large language models are deep learning models trained on vast amounts of text data to generate human-like language.

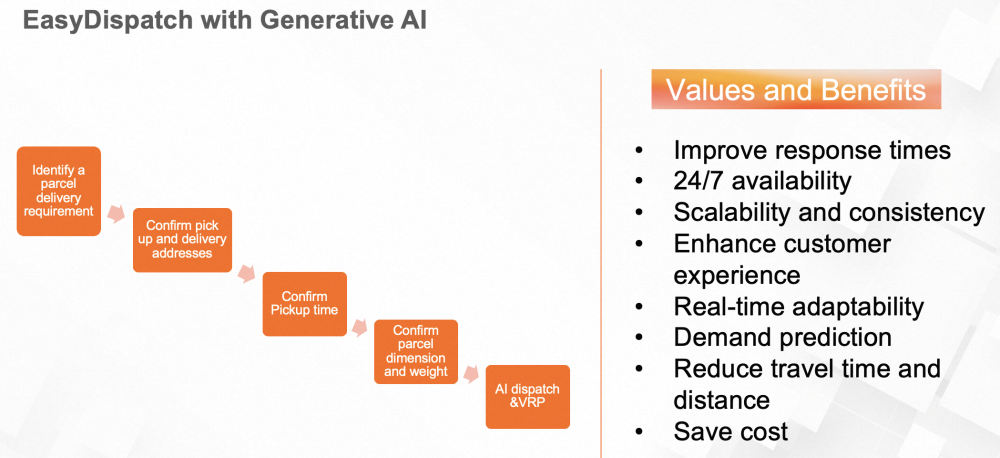

By incorporating these technologies, EasyDispatch could enhance its capabilities in areas such as predicting delivery times, optimizing routes, and improving customer service (for example, through natural language processing and sentiment analysis). However, the results ultimately depend on how Alibaba Cloud implements these technologies within the EasyDispatch platform.

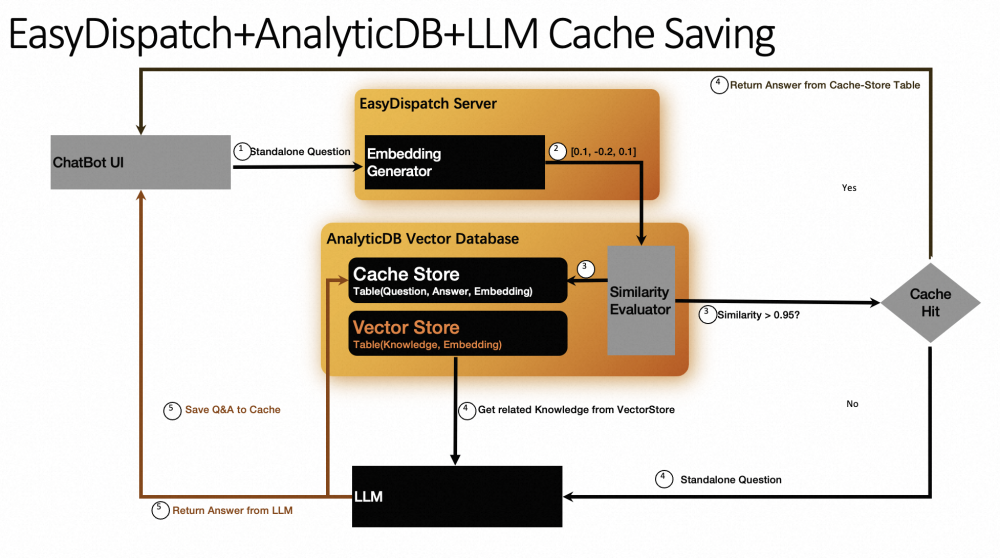

EasyDispatch AnalyticDB LLM Cache Saving is a feature that stores a cache of LLM answers in AnalyticDB, Alibaba Cloud's large-scale data warehousing system, making data retrieval faster and more efficient. Here's how it works:

By using a cache store, the system can quickly return a previously generated answer when a user asks a similar question. This reduces the processing load on the system and gives users faster responses. Vector embeddings and the AnalyticDB vector database let the system quickly find knowledge relevant to a given question, which makes the LLM's answers more accurate and its use more efficient.
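A minimal in-memory sketch of this semantic caching idea is shown below. It is an illustration under stated assumptions, not the EasyDispatch implementation: the cache lives in a Python list instead of AnalyticDB, `toy_embed` and `fake_llm` are hypothetical stand-ins for an embedding model and an LLM, and the 0.9 similarity threshold is an arbitrary example value.

```python
import math
from typing import Callable, Optional

Vector = list[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Reuses a stored answer when a new question is similar enough to a cached one."""

    def __init__(self, embed: Callable[[str], Vector], threshold: float = 0.9):
        self.embed = embed
        self.threshold = threshold
        self.entries: list[tuple[Vector, str]] = []  # (question vector, answer)

    def answer(self, question: str, llm: Callable[[str], str]) -> str:
        vec = self.embed(question)
        best: Optional[tuple[Vector, str]] = max(
            self.entries, key=lambda entry: cosine(vec, entry[0]), default=None
        )
        if best is not None and cosine(vec, best[0]) >= self.threshold:
            return best[1]  # cache hit: no LLM call needed
        result = llm(question)  # cache miss: ask the LLM and remember the answer
        self.entries.append((vec, result))
        return result


# Hypothetical stand-ins used only for this example.
KEYWORDS = ["parcel", "late", "refund"]


def toy_embed(text: str) -> Vector:
    words = text.lower().split()
    return [float(k in words) for k in KEYWORDS]


llm_calls: list[str] = []


def fake_llm(question: str) -> str:
    llm_calls.append(question)
    return f"answer to: {question}"


cache = SemanticCache(toy_embed)
first = cache.answer("why is my parcel late", fake_llm)
second = cache.answer("my parcel is late", fake_llm)  # similar question: served from the cache
third = cache.answer("how do I get a refund", fake_llm)  # different topic: goes to the LLM
print(len(llm_calls))  # 2
```

The threshold controls the trade-off: set it too low and users may receive answers to a different question; set it too high and the cache rarely hits.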

Beyond its core logistics features, EasyDispatch can connect to AnalyticDB, Alibaba Cloud Object Storage Service (OSS), and LLMs to automate and streamline logistics processes.

In this integration, the EasyDispatch server connects to AnalyticDB and to other services such as OSS and an LLM. The LLM gives EasyDispatch natural language processing capabilities, allowing users to interact with the platform through text-based commands.

Using the LLM, users can create orders, jobs, workers, and items, and add depots and locations to the EasyDispatch system. The LLM carries out tasks based on the EasyDispatch documentation and the location of the input files, automatically processing user requests and generating the appropriate actions within the system.

For example, a user could send a text command such as "Add a new worker to the system with the name John Smith and assign him to a job in location X." The LLM would parse this command, create the new worker in the EasyDispatch system, assign him to the specified job, and update the system accordingly.
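One common way to implement this kind of command handling is to ask the LLM to reply with structured JSON and then validate that reply in code before changing any data. The sketch below assumes that pattern; the JSON field names, the `AddWorker` type, and the `execute` handler are hypothetical and do not represent EasyDispatch's actual API, and the LLM call is replaced by a hard-coded reply.

```python
import json
from dataclasses import dataclass


@dataclass
class AddWorker:
    """Hypothetical typed action extracted from the LLM's reply."""
    name: str
    location: str


def parse_llm_reply(reply: str) -> AddWorker:
    """Validate the JSON the LLM was asked to produce and turn it into a typed action."""
    data = json.loads(reply)
    if data.get("action") != "add_worker":
        raise ValueError(f"unsupported action: {data.get('action')!r}")
    return AddWorker(name=data["name"], location=data["location"])


def execute(action: AddWorker, workers: dict[str, str]) -> None:
    """Hypothetical handler: records the worker and the job location they are assigned to."""
    workers[action.name] = action.location


# A reply the LLM might return for: "Add a new worker to the system with the name
# John Smith and assign him to a job in location X."
llm_reply = '{"action": "add_worker", "name": "John Smith", "location": "X"}'

workers: dict[str, str] = {}
execute(parse_llm_reply(llm_reply), workers)
print(workers)  # {'John Smith': 'X'}
```

Validating the reply before acting on it keeps a malformed or unexpected LLM output from silently modifying the system: an unsupported action raises an error instead of being executed.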

Integrating EasyDispatch with LLM technology makes the platform more user-friendly and accessible: users can interact with the system through natural language commands, and many of the manual tasks in logistics management are automated.

The EasyDispatch AnalyticDB LLM Cache Saving feature combines cloud-based data warehousing and AI-powered caching technology to enable faster and more efficient data retrieval, which can be a significant advantage for businesses looking to optimize their logistics operations.

Businesses can provide personalized and efficient customer service, improve delivery times, and enhance overall efficiency by leveraging LLM and AnalyticDB technologies on EasyDispatch. Here are some use cases where vector database retrieval can be used in a delivery business:

1. Delivery Tracking

Delivery tracking is a critical aspect of any delivery business, and customers expect accurate, real-time updates on their deliveries. By integrating vector database retrieval with an LLM, businesses can give customers personalized and accurate delivery tracking information. Vector database retrieval can fetch information on delivery times, driver locations, and other relevant data, which the LLM can then use to provide real-time updates to customers.

2. Customer Service

Customer service is another critical aspect of any delivery business, and businesses need to respond to customers quickly and efficiently. By integrating an LLM with vector database retrieval, businesses can give personalized and accurate responses to customer inquiries. Vector database retrieval can fetch information on customer preferences, order history, and other relevant data, which the LLM can then use to tailor its responses.
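Retrieval for customer service usually has to be restricted to the customer who is asking. A minimal sketch of this, assuming each stored record carries a customer ID alongside a precomputed embedding, applies the metadata filter first and then ranks the remaining records by cosine similarity. The `Record` type, the two-dimensional vectors, and the sample records are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Record:
    customer_id: str
    text: str
    vector: list[float]  # hypothetical precomputed embedding of `text`


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search_customer_history(
    query: list[float], records: list[Record], customer_id: str, k: int = 2
) -> list[Record]:
    """Filter to one customer's records, then return the k most similar to the query."""
    candidates = [r for r in records if r.customer_id == customer_id]
    candidates.sort(key=lambda r: cosine(query, r.vector), reverse=True)
    return candidates[:k]


records = [
    Record("c1", "prefers evening delivery slots", [0.9, 0.1]),
    Record("c1", "returned a damaged kettle last month", [0.1, 0.9]),
    Record("c2", "prefers evening delivery slots", [0.9, 0.1]),
]

# A question about delivery timing from customer c1, embedded as [1.0, 0.0] (hypothetical).
hits = search_customer_history([1.0, 0.0], records, customer_id="c1", k=2)
for record in hits:
    print(record.customer_id, record.text)
```

Filtering before ranking guarantees that one customer's history is never used to answer another customer's question, even when their records are semantically identical.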

3. Delivery Optimization

Delivery optimization is another area where vector database retrieval can be used in a delivery business. Businesses can optimize their delivery routes and reduce delivery times by using vector database retrieval to fetch data on driver locations, traffic patterns, and other relevant factors. This can improve efficiency and reduce costs, enabling businesses to deliver faster to their customers.
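As a simplified illustration of using driver locations, the sketch below treats each driver's latitude and longitude as a point and retrieves the nearest available driver to a parcel using great-circle (haversine) distance instead of cosine similarity. The driver IDs and coordinates are hypothetical, and a real routing engine would also account for the road network and traffic, which this sketch ignores.

```python
import math
from typing import Optional


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points given in degrees, in kilometres."""
    earth_radius_km = 6371.0  # mean Earth radius
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def nearest_driver(
    parcel: tuple[float, float], drivers: list[tuple[str, float, float, bool]]
) -> Optional[tuple[str, float]]:
    """Return (driver_id, distance_km) for the closest available driver, or None if nobody is free."""
    lat, lon = parcel
    distances = [
        (driver_id, haversine_km(lat, lon, d_lat, d_lon))
        for driver_id, d_lat, d_lon, available in drivers
        if available  # skip drivers who are already busy
    ]
    return min(distances, key=lambda pair: pair[1], default=None)


# Hypothetical drivers: (id, latitude, longitude, available)
drivers = [
    ("d1", 1.3100, 103.8500, True),
    ("d2", 1.3010, 103.8510, False),  # closest, but busy
    ("d3", 1.3500, 103.9000, True),
]
parcel = (1.3000, 103.8500)
print(nearest_driver(parcel, drivers))  # d1, roughly 1.1 km away
```

Driver d2 is physically closer but unavailable, so the availability filter is applied before the distance comparison.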

4. Inventory Management

Inventory management is another area where vector database retrieval can be used in a delivery business. Businesses can improve their inventory management processes by using vector database retrieval to fetch data on inventory levels, product availability, and other relevant factors. This can minimize waste, reduce costs, and ensure the right products are available at the right time.

In conclusion, integrating LLMs and vector database retrieval into a delivery business can provide many benefits, including improved customer service, faster delivery times, and greater efficiency. These technologies allow businesses to provide personalized and efficient service to their customers, helping them grow and thrive in today's digital age. Use cases for vector database retrieval include delivery tracking, customer service, delivery optimization, and inventory management. If you're looking to enhance your delivery operations, integrating LLMs and vector database retrieval is worth considering.

We believe AnalyticDB could revolutionize the way businesses and organizations analyze and use data in the generative AI era. If you're interested in learning more about our software solution and how it can benefit your organization, please don't hesitate to contact us. We're always happy to answer your questions and provide a demo of our software.
