Disclaimer: This is a translated work of Qinxia's 漫谈分布式系统. All rights reserved to the original author.

In the previous articles, we introduced some methods to improve the performance of distributed computing frameworks. This way, while scalability continues to bring computing power, we can use that computing power more efficiently.

This solves a key problem in big data application development: execution efficiency.

However, another factor affects the overall efficiency of big data applications and still troubles developers: development efficiency.

    import java.io.IOException;
    import java.util.StringTokenizer;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class WordCount {

      public static class TokenizerMapper
           extends Mapper<Object, Text, Text, IntWritable>{

        private final static IntWritable one = new IntWritable(1);
        private Text word = new Text();

        public void map(Object key, Text value, Context context
                        ) throws IOException, InterruptedException {
          StringTokenizer itr = new StringTokenizer(value.toString());
          while (itr.hasMoreTokens()) {
            word.set(itr.nextToken());
            context.write(word, one);
          }
        }
      }

      public static class IntSumReducer
           extends Reducer<Text,IntWritable,Text,IntWritable> {

        private IntWritable result = new IntWritable();

        public void reduce(Text key, Iterable<IntWritable> values,
                           Context context
                           ) throws IOException, InterruptedException {
          int sum = 0;
          for (IntWritable val : values) {
            sum += val.get();
          }
          result.set(sum);
          context.write(key, result);
        }
      }

      public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf, "word count");
        job.setJarByClass(WordCount.class);
        job.setMapperClass(TokenizerMapper.class);
        job.setCombinerClass(IntSumReducer.class);
        job.setReducerClass(IntSumReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);
        FileInputFormat.addInputPath(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));
        System.exit(job.waitForCompletion(true) ? 0 : 1);
      }
    }

The section above shows the code for the MapReduce version of WordCount on the Hadoop website. It's too long and complicated.

And this is only the simplest WordCount. Slightly more complex logic would probably require even more complicated MapReduce programs.

Such development and debugging costs are unacceptable in enterprise production, so we must find a way out.

MapReduce is what it is, but we can identify its problems and let them guide new solutions.

The most fundamental reason that the MapReduce code is so complicated lies in its programming paradigm.

There are many programming paradigms (the most common being procedural, object-oriented, and functional). The distinction that matters for MapReduce is the one between imperative and declarative programming:

Imperative programming works at a lower level and spells out more detail. You have full control, but you also have to bear the complexity that comes with it. Declarative programming gives up control over repetitive work in exchange for freeing the developer from it.

The verbosity of MapReduce code is caused by imperative programming. In this respect, MapReduce is in the same boat as Storm, its counterpart in the stream processing field.

However, it should be emphasized that declarative programming is essentially a way of encapsulating specific targets on top of imperative programming. This is because machines only execute instructions; they do not understand intent.

Since specific targets are limited, imperative programming and declarative programming cannot replace each other. Instead, like programming languages, each has its own applicable scenario.
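The relationship between the two styles can be sketched in a few lines of plain Java. This is only an illustration under stated assumptions: the class `DeclarativeOverImperative` and the helper `countBy` are hypothetical names, not part of any framework discussed here. The helper plays the role of the "encapsulated target": the caller declares what to count by, and the imperative loop is hidden inside.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;

// Hypothetical illustration class; none of these names come from a real framework.
class DeclarativeOverImperative {

    // The encapsulated target: callers say WHAT key to count by,
    // while the imperative HOW (loop and map updates) stays in here.
    static <T, K> Map<K, Long> countBy(List<T> items, Function<T, K> keyOf) {
        Map<K, Long> counts = new TreeMap<>();
        for (T item : items) {
            counts.merge(keyOf.apply(item), 1L, Long::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> words = Arrays.asList("to", "be", "or", "not", "to", "be");

        // Imperative style: every step is spelled out by the caller.
        Map<String, Long> imperative = new TreeMap<>();
        for (String w : words) {
            Long current = imperative.get(w);
            imperative.put(w, current == null ? 1L : current + 1L);
        }

        // Declarative style: state the intent and let the helper do the work.
        Map<String, Long> declarative = countBy(words, w -> w);

        System.out.println(imperative);
        System.out.println(declarative);
    }
}
```

Both maps hold the same counts. The helper only covers one specific target (counting by key), which is why the declarative form is shorter but also less general.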

It is clear that we need declarative programming in the scenario of big data application development.

The two most typical forms of declarative programming are DSLs and functional programming. As we mentioned earlier in this series, MapReduce draws on map() and reduce() from functional programming, so why does it end up being imperative?

The reason is that MapReduce is written in Java. In Java, functions are not first-class citizens, so logic cannot be expressed in a few lines the way it can in a typical functional programming language. Classes and methods have to be defined one by one. There is no way around it: Java is famous for its verbosity.
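To make the verbosity concrete, here is a small sketch that expresses the same one-line behaviour twice: once wrapped in an anonymous class, as Java required before version 8, and once as a method reference. The class name `FirstClassFunctions` and the constant names are hypothetical and chosen only for this illustration.

```java
import java.util.function.Function;

// Hypothetical illustration class; the names are not from the article.
class FirstClassFunctions {

    // Class-based style: the behaviour must be wrapped in an (anonymous) class.
    static final Function<String, Integer> LENGTH_OLD = new Function<String, Integer>() {
        @Override
        public Integer apply(String s) {
            return s.length();
        }
    };

    // Java 8 and later: the same behaviour as a method reference.
    static final Function<String, Integer> LENGTH_NEW = String::length;

    public static void main(String[] args) {
        System.out.println(LENGTH_OLD.apply("hadoop"));
        System.out.println(LENGTH_NEW.apply("hadoop"));
    }
}
```

The Mapper and Reducer classes in the WordCount example follow the first pattern: each piece of logic needs its own class declaration around it.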

Java is improving, though. With the introduction of lambdas in Java 8, Java has become more functional. Combined with the Stream API, MapReduce-style programming can also be very simple:

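    import static java.util.stream.Collectors.counting;
    import static java.util.stream.Collectors.groupingBy;

    import java.io.IOException;
    import java.nio.file.Files;
    import java.nio.file.Path;
    import java.nio.file.Paths;
    import java.util.AbstractMap.SimpleEntry;
    import java.util.Arrays;
    import java.util.Map;

    public class WordCount {

        public static void main(String[] args) throws IOException {
            Path path = Paths.get("src/main/resources/book.txt");
            // Split each line on runs of whitespace, then normalize and count the words.
            Map<String, Long> wordCount = Files.lines(path).flatMap(line -> Arrays.stream(line.trim().split("\\s+")))
                    .map(word -> word.replaceAll("[^a-zA-Z]", "").toLowerCase().trim())
                    .filter(word -> word.length() > 0)
                    .map(word -> new SimpleEntry<>(word, 1))
                    .collect(groupingBy(SimpleEntry::getKey, counting()));
            wordCount.forEach((k, v) -> System.out.println(String.format("%s ==>> %d", k, v)));
        }
    }

The SQL-based MapReduce led to the creation of Hive: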

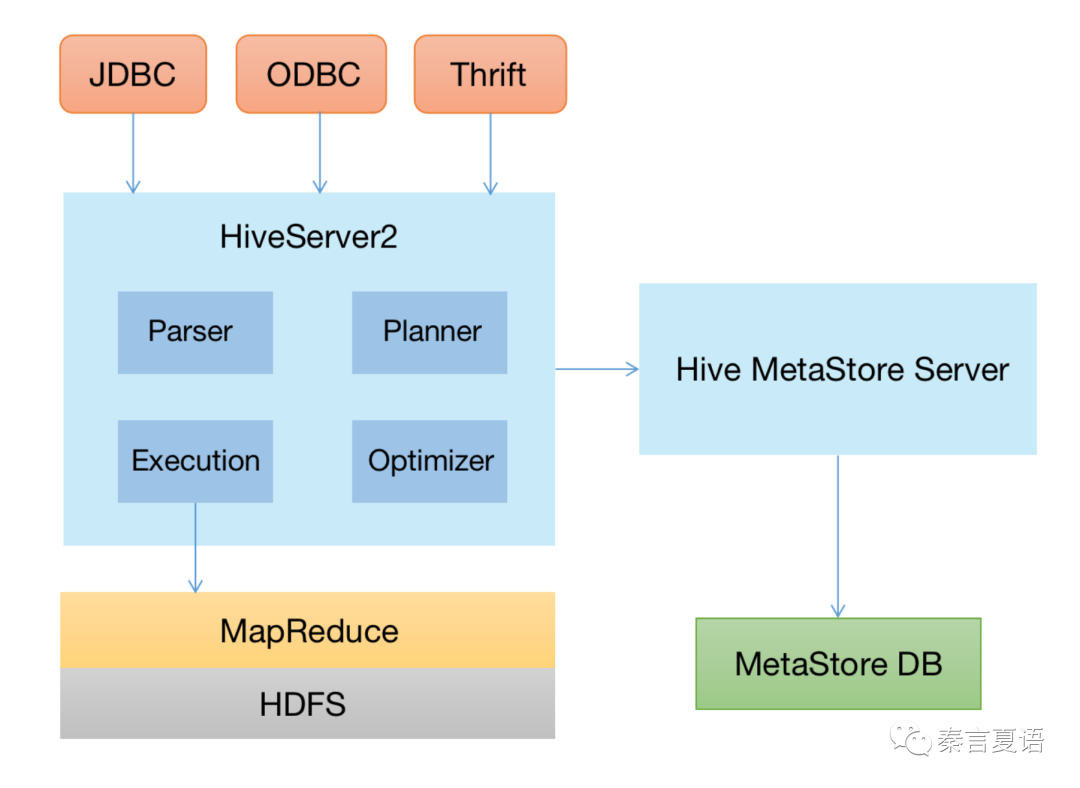

We can extract the following important points from the Hive architecture diagram above:

In general, Hive can be described as a distributed database:

We can create the following table for the WordCount example:

    CREATE TABLE tmp.word_count (
        word string
    )
    ROW FORMAT DELIMITED FIELDS TERMINATED BY ' '
    LOCATION '<some_hdfs_location>'

Then, the following SQL statement (called HQL in Hive) is required to get the desired result:

    SELECT
        word, count(*)
    FROM
        tmp.word_count
    GROUP BY
        word;

There is no doubt that the process is much simpler.

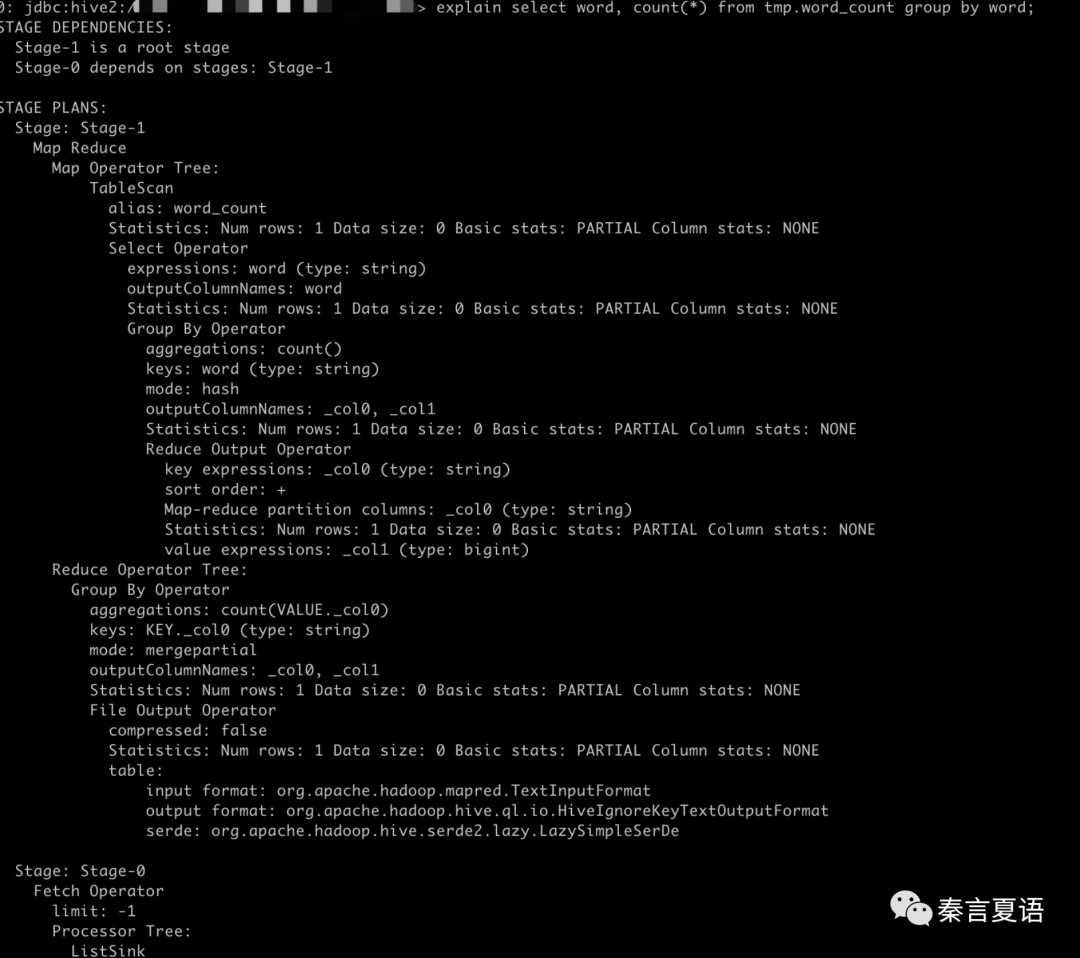

Let's use the EXPLAIN command to look at how the SQL statement becomes MapReduce tasks.

You can clearly see the Map and Reduce phases and the group and count operations. As mentioned earlier, complex logic requires multiple MapReduce tasks. In Hive, most complex logic can be expressed in a single SQL statement. Under the hood, that statement is parsed into many stages, and each stage may (but does not necessarily) correspond to one MapReduce task.
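As a rough mental model of what the Map and Reduce phases do for this GROUP BY query, the sketch below simulates them in memory in plain Java. It is a toy under stated assumptions, not Hive's generated code or its planner: the class `GroupByAsMapReduce` and its method names are hypothetical, and the input is assumed to hold one word per row.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// In-memory toy model with hypothetical names; this is not Hive's actual execution code.
class GroupByAsMapReduce {

    // Map phase: emit one (word, 1) pair per input row.
    static List<Map.Entry<String, Long>> mapPhase(List<String> rows) {
        List<Map.Entry<String, Long>> pairs = new ArrayList<>();
        for (String word : rows) {
            pairs.add(new SimpleEntry<>(word, 1L));
        }
        return pairs;
    }

    // Shuffle: bring all values with the same key together (the GROUP BY word part).
    static Map<String, List<Long>> shuffle(List<Map.Entry<String, Long>> pairs) {
        Map<String, List<Long>> grouped = new TreeMap<>();
        for (Map.Entry<String, Long> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return grouped;
    }

    // Reduce phase: sum the values of each key (the count(*) part).
    static Map<String, Long> reducePhase(Map<String, List<Long>> grouped) {
        Map<String, Long> counts = new TreeMap<>();
        for (Map.Entry<String, List<Long>> entry : grouped.entrySet()) {
            long sum = 0;
            for (long value : entry.getValue()) {
                sum += value;
            }
            counts.put(entry.getKey(), sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> rows = Arrays.asList("hive", "spark", "hive", "hadoop", "hive");
        System.out.println(reducePhase(shuffle(mapPhase(rows))));
    }
}
```

In a real cluster the three steps run on different machines and the shuffle moves data over the network; the point of the sketch is only that a single declarative statement expands into these imperative phases.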

Besides making the code simpler, Hive provides an interactive environment that eliminates the cumbersome cycle of compilation, debugging, and deployment, which further reduces the development cost.

Hive quickly became the default choice for SQL on Hadoop, significantly reducing the cost of data processing on Hadoop, and it was widely adopted. It also attracted analysts who were not strong programmers but were proficient in SQL, which broadened Hadoop's user base.

In previous articles, we said MapReduce is slow and thus introduced Spark. Do we have to choose between development efficiency and execution efficiency?

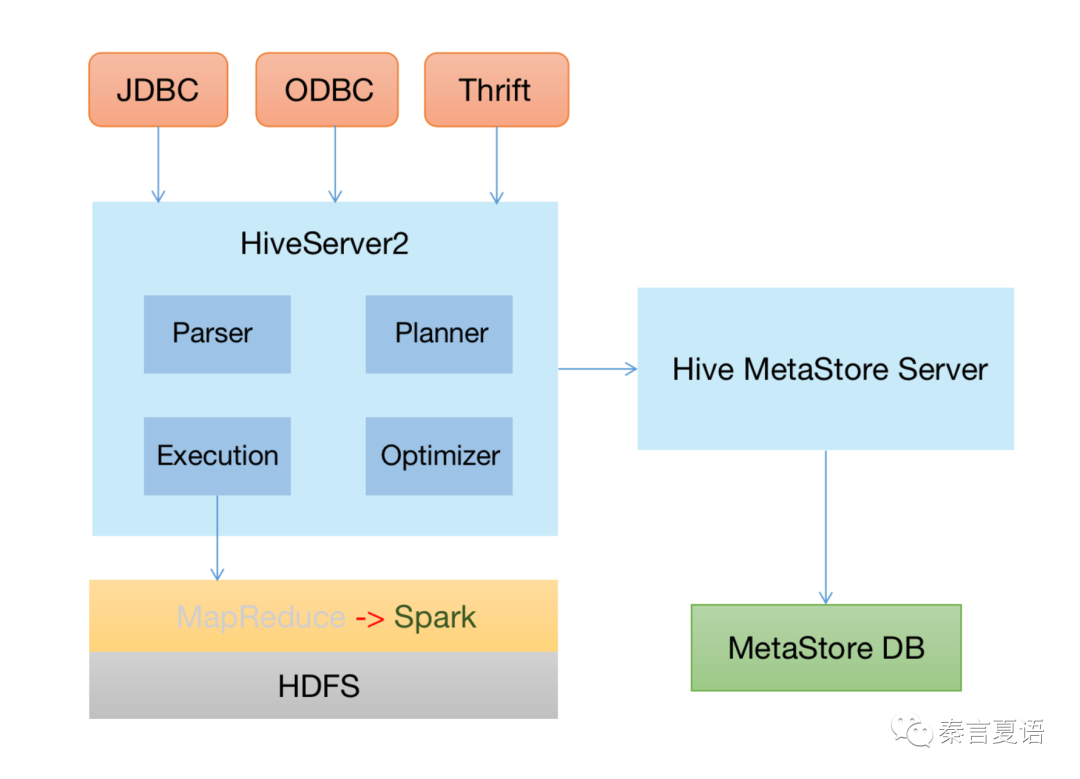

We can certainly improve both. As mentioned above, Hive can be divided into (meta) data storage and computing. Since the computing in MapReduce is slow, why don't we perform computing with Spark?

As shown in the figure above, changing the execution engine from MapReduce to Spark is what Shark (another famous product from AMPLab) did. The main developer of Shark is also a core developer of Spark.

Spark is an ambitious project, and the limitations of Hive increasingly hindered Shark's development. So, the Shark team stopped developing Shark and started afresh, building Spark SQL entirely on top of Spark while retaining compatibility with Hive.

The Hive community did not stop the evolution of Hive either. Inspired by Shark, it began to promote the Hive on XX project, which supports different execution engines at the bottom layer, including MapReduce, Spark, and Tez. However, Hive on Spark has not developed as well as Spark SQL.

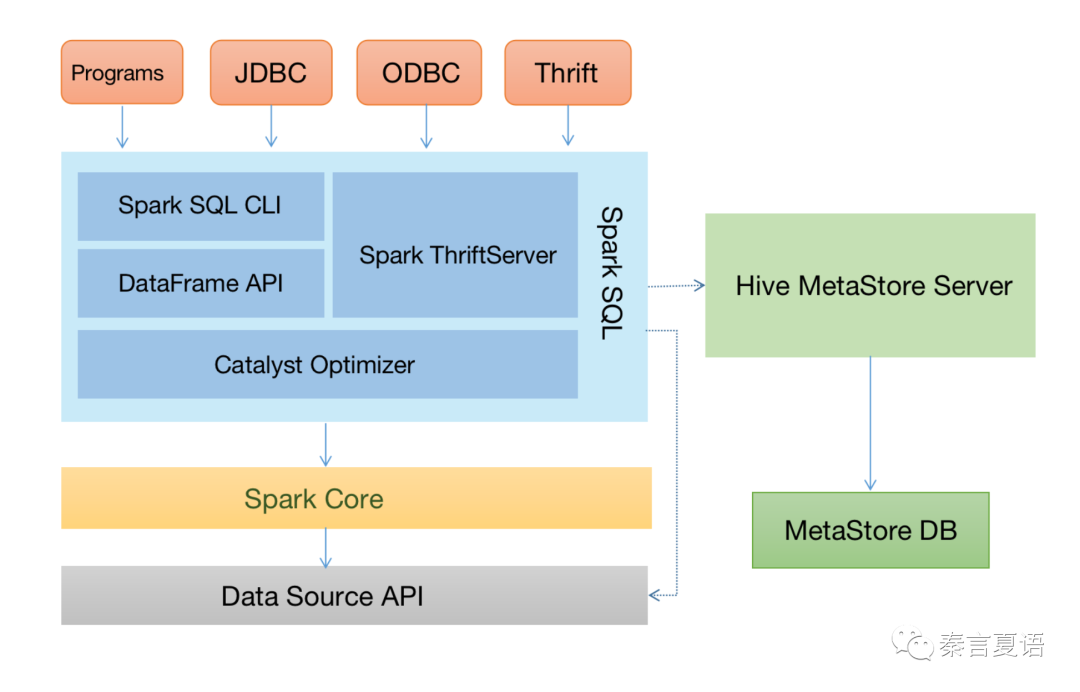

The preceding figure shows the architecture of Spark SQL. The key points are listed below:

It is worth mentioning that SQL in Spark SQL plays the same role as HQL, so you can write SQL statements directly for interactive queries, while the DataFrame/Dataset API is roughly equivalent to the Lambda + Stream style shown earlier:

    df.groupBy('word').count()

Also, you can write it as a SQL statement, issued through the SparkSession (named `spark` here):

    spark.sql("select word, count(*) from tmp.word_count group by word")

You may notice that SQL can only process data that has a schema. This kind of data is structured data.

For unstructured data, Spark's RDD or Hadoop MapReduce is required for processing.

With Spark SQL for processing structured data and Spark Core (RDD) for processing unstructured data, Spark can replace Hive and MapReduce, respectively. This is also the choice we recommend.

Moreover, the schema information brought by structured data makes it easy for us to process data through SQL and provides the possibility for performance optimization.

In the next article, let's learn about SQL performance optimization.

This is a carefully conceived series of 20-30 articles. I hope to give everyone a core grasp of the distributed system in a storytelling way. Stay tuned for the next one!

Learning about Distributed Systems – Part 19: Performance-Impacting Operations in SQL
