The authors of this article are Zhang Ying and Duan Xuehao of the Jingdong Algorithm Service Department. Li Rui, an Alibaba Technical Expert in Apache Hive PMC, helped proofread it. The main contents are listed below:

Visit the GitHub page. You are welcome to give it a like and stars!

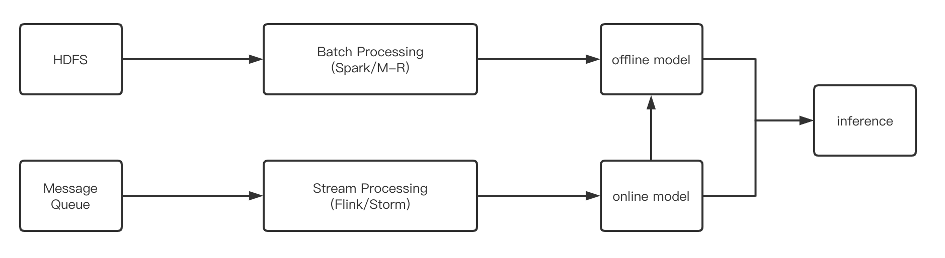

The current data processing process recommended by Jingdong Search is shown in the figure above. We can see that real-time and offline data are separated. Most of the offline data processing uses Hive/Spark, while most of the real-time data processing uses Flink/Storm.

As a result, users need to maintain two sets of environments and two sets of codes in a business engine. Many common characters cannot be reused, and it is difficult to guarantee the quality and consistency of data. Moreover, since the underlying data models of the stream batch are inconsistent, it needs a large amount of patchwork logic. For data consistency, a large amount of data comparison, such as year-on-year, link relative ratio, and secondary processing needs to be carried out, which is extremely inefficient and prone to errors.

Flink SQL, which supports batch and stream integration, can solve this pain point. Therefore, we decided to introduce Flink to solve this problem.

In most jobs, especially Flink jobs, the optimization of execution efficiency has been the key to Flink task optimization, which is especially important in the case of daily data increment of Jingdong reaching petabytes.

Developers that have written some SQL work know that for Flink SQL, the same UDF will be called repeatedly in some cases, which is very unfriendly to some resource-consuming tasks. In addition, the impact on execution efficiency can be measured by shuffle, join, and failover strategy perspectives. The Flink task debugging process is also very complicated, especially for some enterprises with isolated online machines.

For this purpose, we have implemented embedded Derby as a metadata storage database of Hive (allowEmbedded). In terms of task recovery, batch jobs do not have a checkpoint mechanism to implement failover, but the unique region strategy of Flink can enable batch jobs to recover quickly. This article also introduces relevant optimization measures such as object reuse.

The following situation occurs in Flink SQL tasks: If the same UDF appears in both LogicalProject and Where conditions, the UDF will be called multiple times (please see this link). However, if the UDF consumes a lot of CPU or memory, this redundant calculation will affect the performance significantly. Therefore, we hope to cache the UDF results for direct use next time. It is important to consider when designing. (Very Important Note: Please ensure that LogicalProject and subtask chain of Where conditions are joined together.)

taskmanager, so this cache is either thread (THREAD LOCAL) level or tm level.According to the considerations above, we use guava cache to cache the UDF results and then go to the cache directly to get the data when calling, which may reduce the task consumption as much as possible. The following is a simple use case (the maximum use size and timeout are set at the same time, but there is no write lock):

public class RandomFunction extends ScalarFunction {

private static Cache<String, Integer> cache = CacheBuilder.newBuilder()

.maximumSize(2)

.expireAfterWrite(3, TimeUnit.SECONDS)

.build();

public int eval(String pvid) {

profileLog.error("RandomFunction invoked:" + atomicInteger.incrementAndGet());

Integer result = cache.getIfPresent(pvid);

if (null == result) {

int tmp = (int)(Math.random() * 1000);

cache.put("pvid", tmp);

return tmp;

}

return result;

}

@Override

public void close() throws Exception {

super.close();

cache.cleanUp();

}

}You may wonder why unit testing is also included in optimization. We know the Flink task debugging process is very complicated, especially for some enterprises with isolated online machines. Jingdong's local environment cannot access the task server, so we spent a lot of time uploading jar packages, checking logs, and doing other behaviors in the initial stage of debugging tasks.

The embedded Derby is implemented as a metadata storage database of Hive (allowEmbedded) to reduce the debugging time of the task and increase the development efficiency of the code developer, which is a method to optimize the development time. The logic includes the following steps:

First, create a Hive Conf:

public static HiveConf createHiveConf() {

ClassLoader classLoader = new HiveOperatorTest().getClass().getClassLoader();

HiveConf.setHiveSiteLocation(classLoader.getResource(HIVE_SITE_XML));

try {

TEMPORARY_FOLDER.create();

String warehouseDir = TEMPORARY_FOLDER.newFolder().getAbsolutePath() + "/metastore_db";

String warehouseUri = String.format(HIVE_WAREHOUSE_URI_FORMAT, warehouseDir);

HiveConf hiveConf = new HiveConf();

hiveConf.setVar(

HiveConf.ConfVars.METASTOREWAREHOUSE,

TEMPORARY_FOLDER.newFolder("hive_warehouse").getAbsolutePath());

hiveConf.setVar(HiveConf.ConfVars.METASTORECONNECTURLKEY, warehouseUri);

hiveConf.set("datanucleus.connectionPoolingType", "None");

hiveConf.set("hive.metastore.schema.verification", "false");

hiveConf.set("datanucleus.schema.autoCreateTables", "true");

return hiveConf;

} catch (IOException e) {

throw new CatalogException("Failed to create test HiveConf to HiveCatalog.", e);

}

}Next, create Hive Catalog (call the embedded interface by reflection):

public static void createCatalog() throws Exception{

Class clazz = HiveCatalog.class;

Constructor c1 = clazz.getDeclaredConstructor(new Class[]{String.class, String.class, HiveConf.class, String.class, boolean.class});

c1.setAccessible(true);

hiveCatalog = (HiveCatalog)c1.newInstance(new Object[]{"test-catalog", null, createHiveConf(), "2.3.4", true});

hiveCatalog.open();

}Create tableEnvironment: (It is the same as the official website.)

EnvironmentSettings settings = EnvironmentSettings.newInstance().useBlinkPlanner().inBatchMode().build();

TableEnvironment tableEnv = TableEnvironment.create(settings);

TableConfig tableConfig = tableEnv.getConfig();

Configuration configuration = new Configuration();

configuration.setInteger("table.exec.resource.default-parallelism", 1);

tableEnv.registerCatalog(hiveCatalog.getName(), hiveCatalog);

tableEnv.useCatalog(hiveCatalog.getName());Finally, close the Hive Catalog:

public static void closeCatalog() {

if (hiveCatalog != null) {

hiveCatalog.close();

}

}In addition, building a suitable data set is also an important function for unit testing. We have implemented CollectionTableFactory to build a suitable data set using the following methods:

CollectionTableFactory.reset();

CollectionTableFactory.initData(Arrays.asList(Row.of("this is a test"), Row.of("zhangying480"), Row.of("just for test"), Row.of("a test case")));

StringBuilder sbFilesSource = new StringBuilder();

sbFilesSource.append("CREATE temporary TABLE db1.`search_realtime_table_dump_p13`(" + " `pvid` string) with ('connector.type'='COLLECTION','is-bounded' = 'true')");

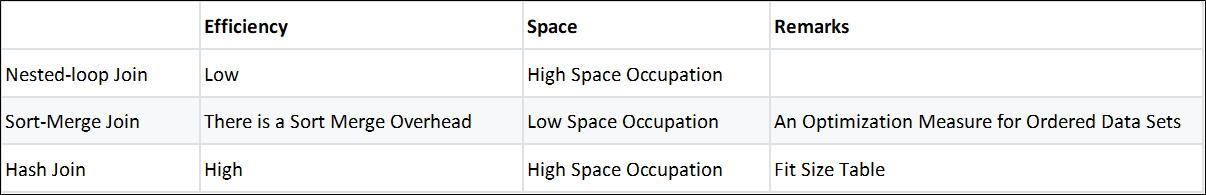

tableEnv.executeSql(sbFilesSource.toString());Traditional offline Batch SQL (SQL for bounded data sets) has three basic implementations, Nested-loop Join, Sort-Merge Join, and Hash Join.

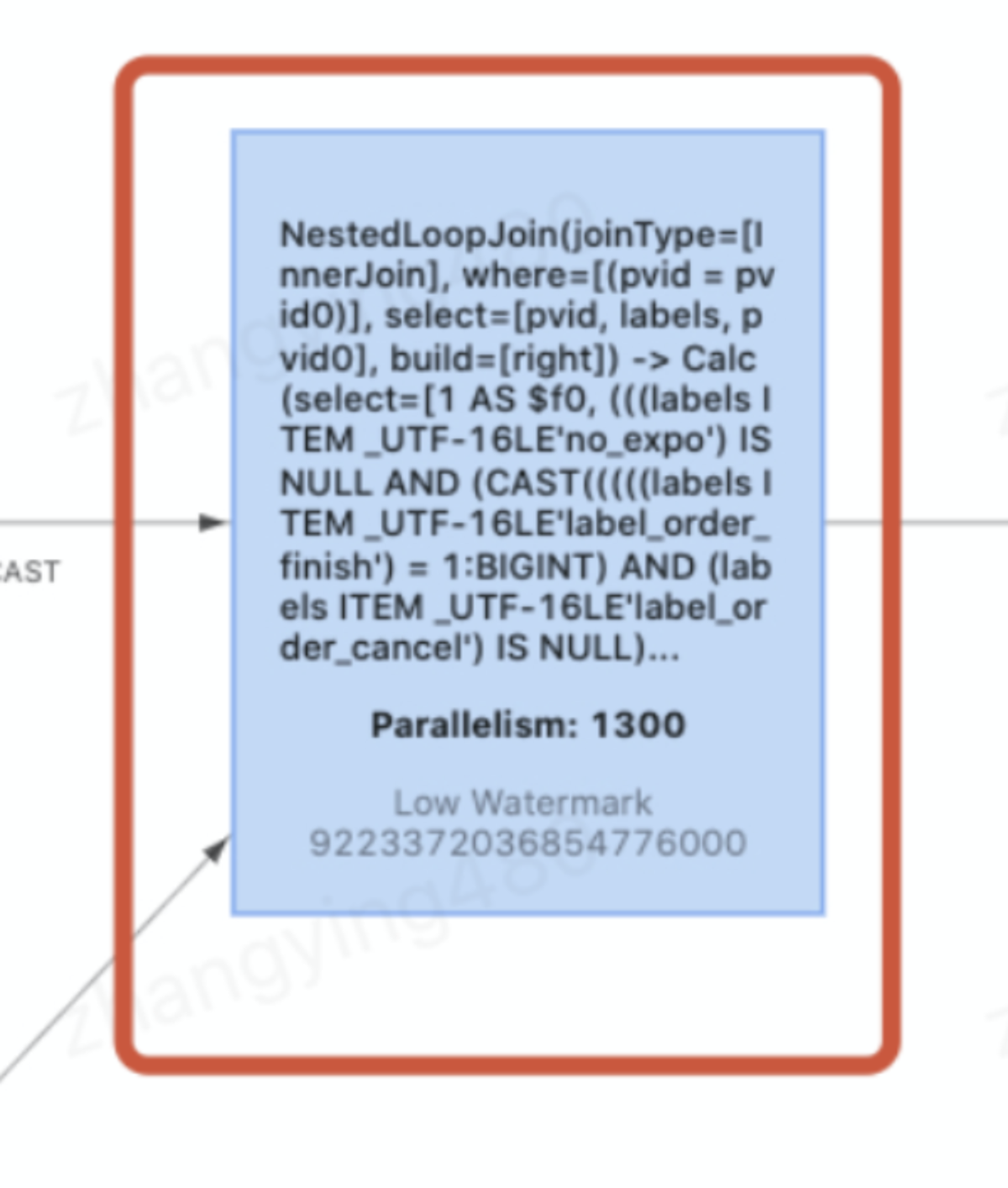

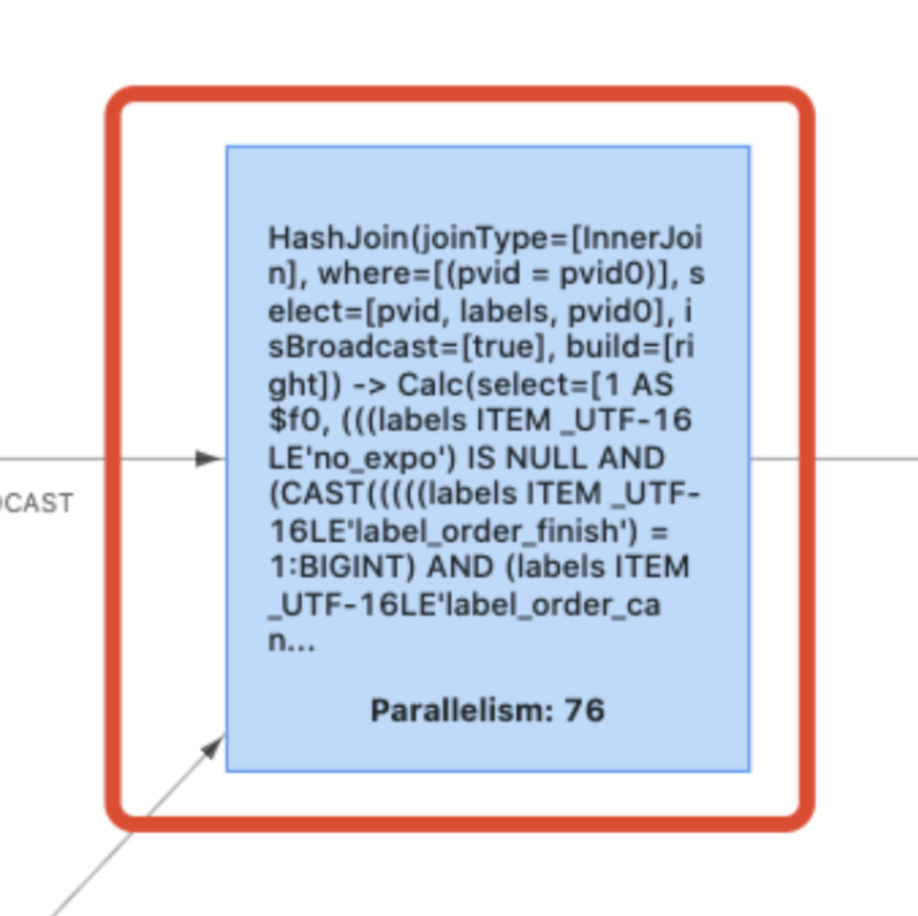

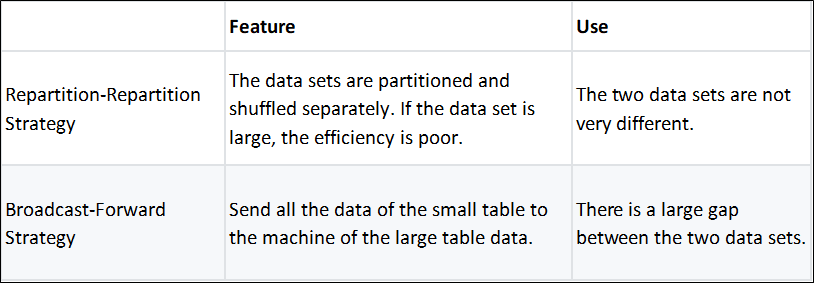

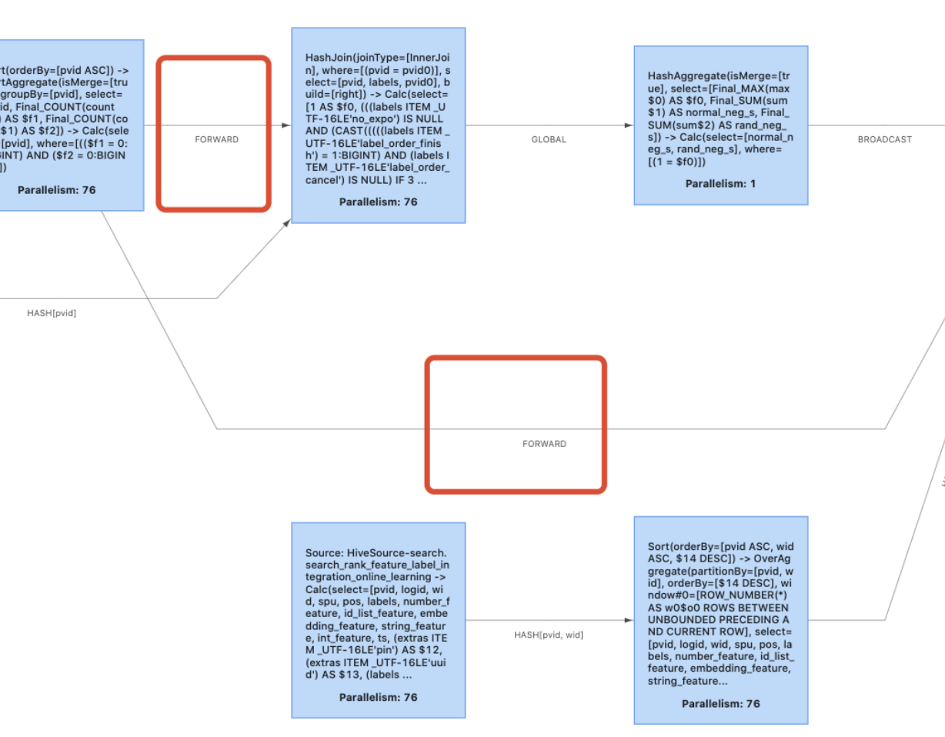

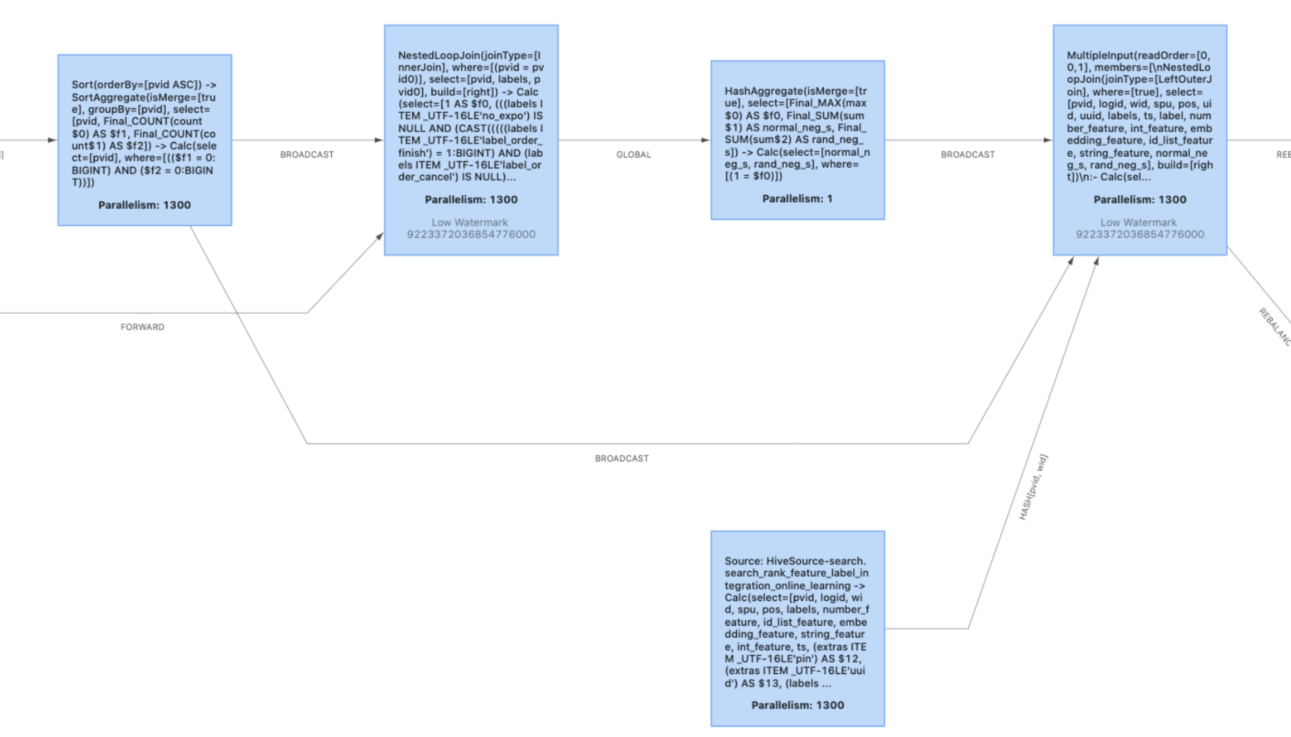

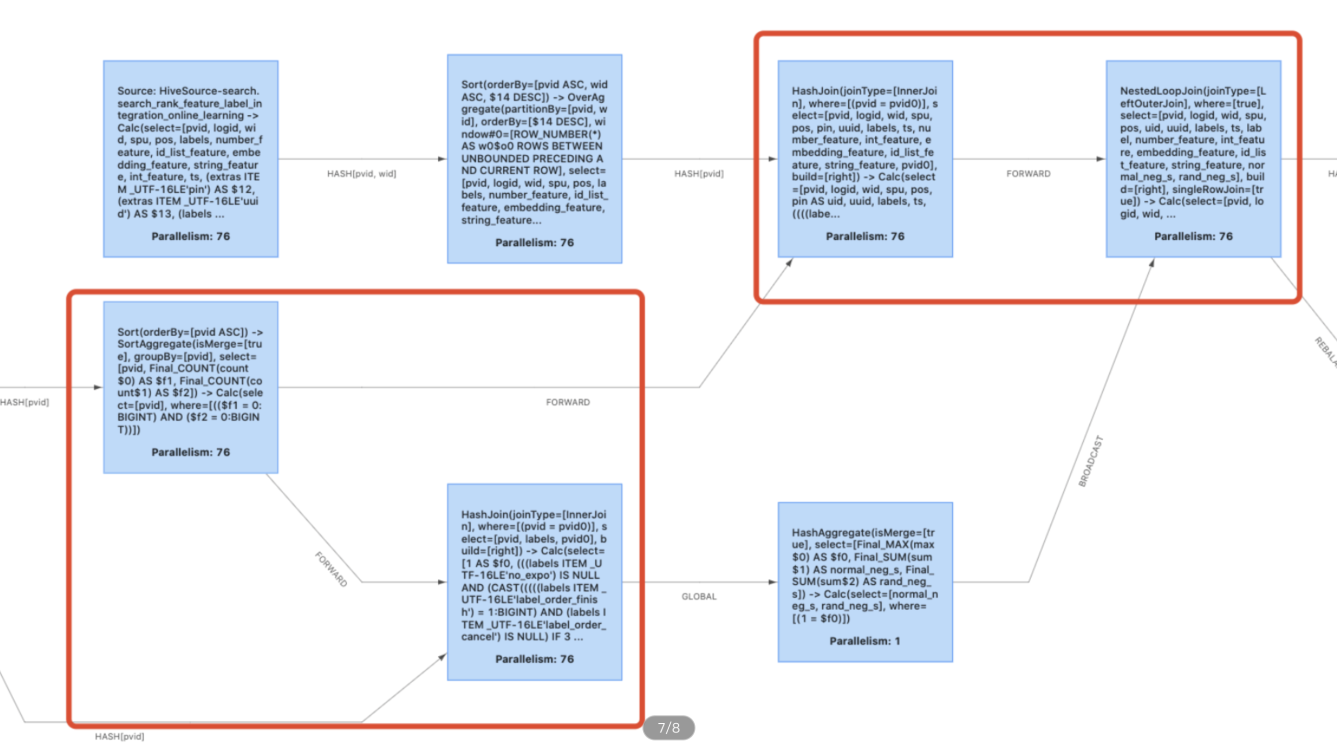

NestedLoopJoin has the lowest time and space efficiency, and it can be disabled using: table.exec.disabled-operators:NestedLoopJoin.The following two pictures show the results before and after disabling (if the disabling does not take effect, check if it is Equi-Join first):

Hash Join is also divided into two phases: First, one dataset is converted to a Hash Table, and then another dataset element is traversed and matched with the elements in the Hash Table.

Hash Join is more efficient but requires a large space. It is usually used as an optimization scheme when one of the join tables is a small one suitable for putting into memory (Disk overflow is allowed.)

Note: Sort-Merge Join and Hash Join apply to Equi-Join. (They both use equals as comparison operators for Join conditions.)

Flink has made some subdivisions on top of join, including:

As we all know, batch shuffle is time-consuming:

We can use the: table.optimizer.join.broadcast-threshold to set the table size that uses broadcast. If it is set to "-1," broadcast is disabled.

The following figure shows the results before and after disabling:

In Flink SQL tasks, reducing shuffle can improve the throughput of SQL tasks. In real-world business scenarios, we often encounter situations that the upstream data have met the data distribution requirements. (For example, multiple join operators are in succession, where the keys are the same.) In this case, a forward shuffle of Flink is a redundant shuffle, and we hope to chain these operators together. Flink 1.12 introduces the features of multiple input, which can eliminate most of the unnecessary forward shuffles and chain the source operators together.

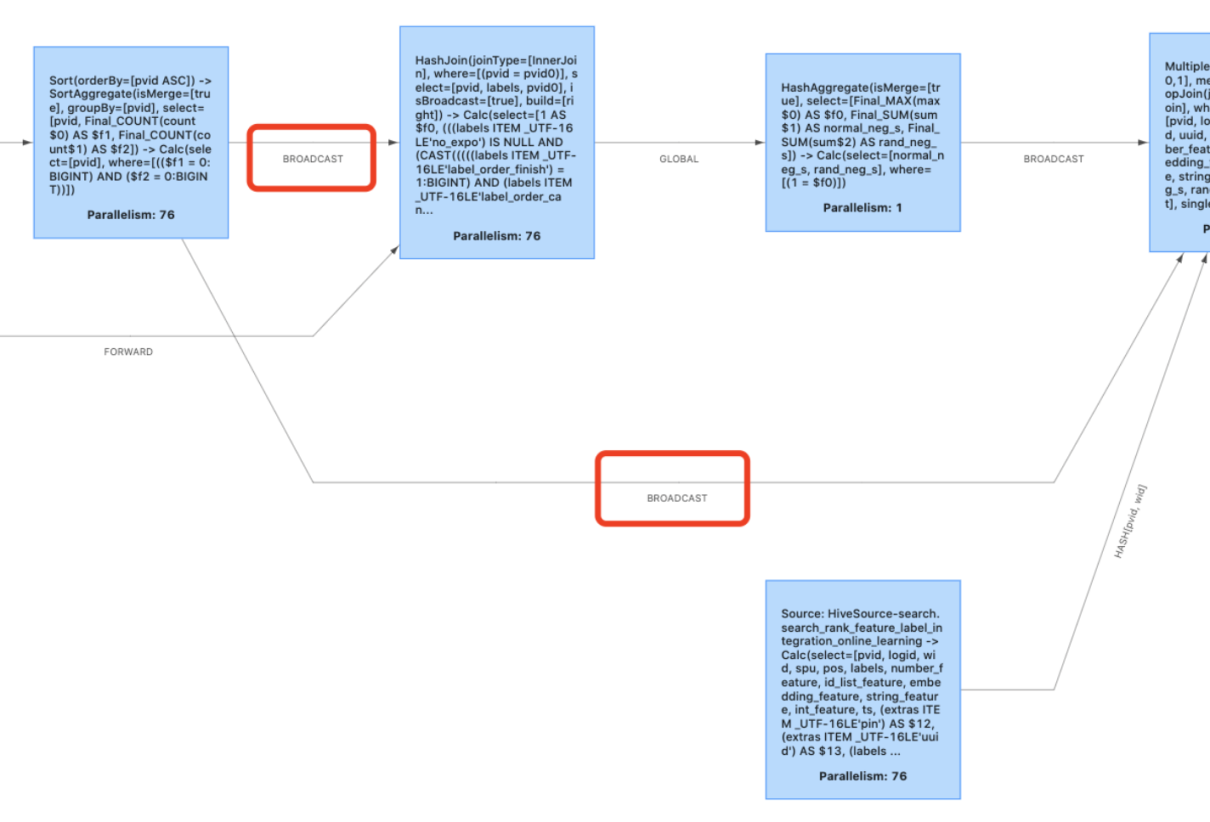

table.optimizer.multiple-input-enabled: trueThe following figure shows the topology graph with multiple input and without it. (The operator chain function has been turned on.)

The upstream and downstream operators go through the serialization/deserialization/copy stages for data transmission. This behavior greatly affects the performance of Flink SQL programs. We can improve the performance by enabling object reuse. However, it is very dangerous in DataStream because modifying the object in the next operator could accidentally affect the object in the operator above.

However, the Table/SQL API of Flink is very secure and can be enabled in the following ways:

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.getConfig().enableObjectReuse();It can also be enabled by setting: pipeline-object-reuse:true

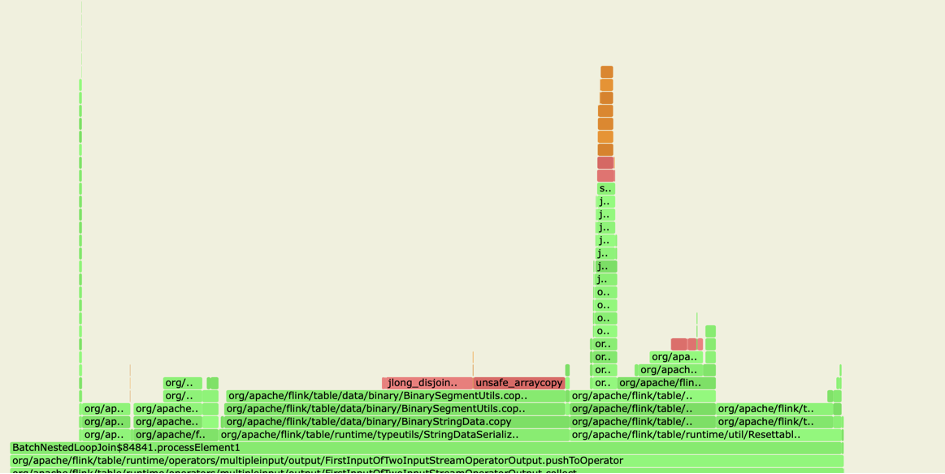

Why does enabling object reuse bring such a big performance boost? In Blink planner, data exchange between two operators of the same task will eventually call BinaryString#copy. Looking at the implementation code, we can find that BinaryString#copy needs to copy the bytes of the underlying MemorySegment. It can improve efficiency by enabling object reuse to avoid replication.

The following figure shows the corresponding flame graph when there is no open object reuse:

In batch task mode, checkpoint and its related features are all unavailable. Therefore, the checkpoint-based failover policies for real-time tasks cannot be used in batch tasks. However, batch tasks allow Tasks to communicate through Blocking Shuffle. When a Task fails due to unknown reasons, Blocking Shuffle stores all the data required by this Task. Therefore, we only need to restart this Task and all downstream tasks connected to it through Pipeline Shuffle:

jobmanager.execution.failover-strategy:region (The finished operator can be restored directly.)

table.exec.shuffle-mode:ALL_EDGES_BLOCKING (shuffle policy)

The shuffle in Flink is divided into pipeline shuffle and blocking shuffle.

Blocking shuffle is the traditional batch shuffle, which will put data on a disk. This shuffle has good fault tolerance, but it will generate a large number of disks and network IO. (It is suggested to use blocking shuffle to reduce the concerns.) Blocking shuffle is divided into hash shuffle and sort shuffle.

Corresponding Control Parameters:

For table.exec.shuffle-mode, this parameter contains multiple parameters. The default is ALL_EDGES_BLOCKING, which means all edges will use blocking shuffle. However, we can try the POINTWISE_EDGES_PIPELINED, which means forward and rescale edges will start pipeline mode automatically.

For taskmanager.network.sort-shuffle.min-parallelism, we set this parameter to less than the parallelism, so we can open the sort-merge shuffle. The setting of this parameter requires you to consider some other situations, which can be set according to the official website.

This article focuses on the optimization measures of Jingdong in Flink SQL tasks, focusing on the aspects of shuffle, join mode selection, object reuse, and UDF reuse. I would like to thank all my colleagues (especially Fu Haitao of the Jingdong Real-Time Computing Research and Development Department) for their support and help.

Zeppelin Notebook: An Important Tool for PyFlink Development Environment

Kwai Builds Real-Time Data Warehouse Scenario-Based Practice on Flink

206 posts | 56 followers

FollowApache Flink Community China - January 11, 2021

ApsaraDB - May 25, 2023

Apache Flink Community China - August 11, 2021

Alibaba Cloud MaxCompute - November 15, 2021

Apache Flink Community China - March 17, 2023

Alibaba Clouder - December 2, 2020

206 posts | 56 followers

Follow Realtime Compute for Apache Flink

Realtime Compute for Apache Flink

Realtime Compute for Apache Flink offers a highly integrated platform for real-time data processing, which optimizes the computing of Apache Flink.

Learn More Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn MoreMore Posts by Apache Flink Community