By Alibaba Cloud Realtime Compute Team

The year 2020 was an unusual one because of the global pandemic. Nevertheless, Flink ushered in a new era in many respects.

From December 13 to 15, the 2020 Flink Forward Asia (FFA) Conference was successfully held. FFA is a conference officially authorized by Apache and supported by Apache Flink Community China. After two years of continuous upgrades and improvements, FFA has become the largest Apache project conference in China and a top-tier annual event for Flink developers and users.

This year, because of pandemic-related travel restrictions, FFA was held online and offline simultaneously, which attracted more participants. The event recorded more than 92,000 unique visitors (UV) over the three days, and the single-day peak exceeded 40,000 unique visitors.

In this article, we will briefly summarize the key topics presented at the 2020 FFA conference. This blog aims to give a general introduction to FFA; please visit the official website to watch the playback of the live broadcast (in Chinese).

The 2020 FFA Conference summarized Flink's achievements in the past year from three aspects: community development, industry influence, and improvements to the engine and its ecosystem.

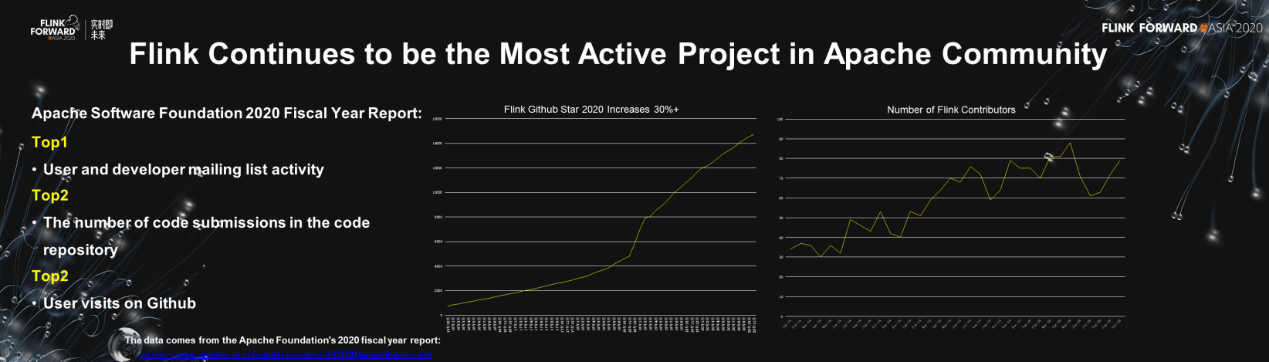

In terms of community, the results are illustrated in the figure above. According to the Apache Software Foundation's fiscal year report, Flink was again the most active project in the Apache community in 2020. In addition, the number of GitHub stars (an indicator of popularity) and the number of code contributors in the Flink community have grown at an average annual rate of over 30% during the past few years.

The growth of the Chinese Flink community deserves particular mention. Currently, Flink is the only top Apache project with a Chinese mailing list, and in the past year the Chinese mailing list became more active than the English one. Moreover, the official Flink account has more than 30,000 subscribers, and more than 200 articles about Flink technology, ecosystem, and practices were published throughout the year.

The official Flink Chinese learning website was also launched last year. This educational website contains Flink-related learning materials, scenario cases, and activity information, and is ideal for anyone interested in Flink.

In terms of industry influence, after several years of development Flink has become the de facto standard for real-time computing, both in China and internationally. Most mainstream technology companies have adopted Flink as their real-time computing solution. This year's FFA featured more than 40 leading domestic and foreign companies sharing their practical experience with Flink. The figure above displays the logos of some of these companies, showing how widely Flink has been applied across industries and in our daily lives: from knowledge sharing to online education, from financial services to financial investment, from long and short videos to live streaming, and from real-time recommendation and search to e-commerce services.

In terms of improvements to the engine and ecosystem in 2020, Flink achieved great results in four main directions: streaming engine kernel, stream-batch unification, Flink-AI integration, and cloud native. In particular, with three major releases published this year (Flink 1.10, 1.11, and 1.12), Flink further improved stream-batch unification. For the first time, stream-batch unification was formally used in the Tmall marketing activity analysis [1] during Double 11. Having been proven at Double 11 scale, stream-batch unification is now at the starting point of wide adoption in the industry, signaling a new era of stream-batch unification!

Before diving into the keynote speech, I'd like to discuss a couple of interesting topics at the conference. The first is Jia Yangqing's talk. He is the vice president of Alibaba Group, leader of the Alibaba Cloud Intelligent Computing platform, and the creator of the AI computing framework Caffe. He shared his thoughts on open source and the cloud, pointing out that open source makes the cloud more standardized and that the unification of big data and AI is an inevitable trend. As a top open-source project and the real-time computing standard, Flink plays an extremely important role in this process. He also set out expectations for Flink in computing generalization and data intelligence, so that Flink's pine cones can take root and sprout in all walks of life.

The second is the award ceremony for the second Apache Flink "geek challenge", jointly organized by Alibaba Cloud Tianchi and Intel. The challenge focused on epidemic prevention and on supporting deep learning applications on the Apache Flink platform. It attracted 3,840 players from 705 universities and 1,327 enterprises in 14 countries and regions. Awards were presented by Yang Qing, Li Wen, and Xiangwen.

Now let's get back to the keynote.

The first topic was presented by Mowen, initiator of the Apache Flink Community China and head of Alibaba Cloud's intelligent real-time computing and open platform. He introduced the achievements of the Flink community in 2020 and its future development directions: streaming engine kernel, stream-batch unification, Flink + AI integration, and Cloud Native. He also shared Alibaba's experience and insights from launching stream-batch unification in core business scenarios during Double 11. Alibaba is both the largest user and a major promoter of Flink, and its experience may be useful to readers with similar needs.

Technological innovation is at the core of the continuous development of open-source projects. Therefore, the first part focused on the innovations made by the Flink community in its stream computing engine kernel.

1) Unaligned Checkpoint

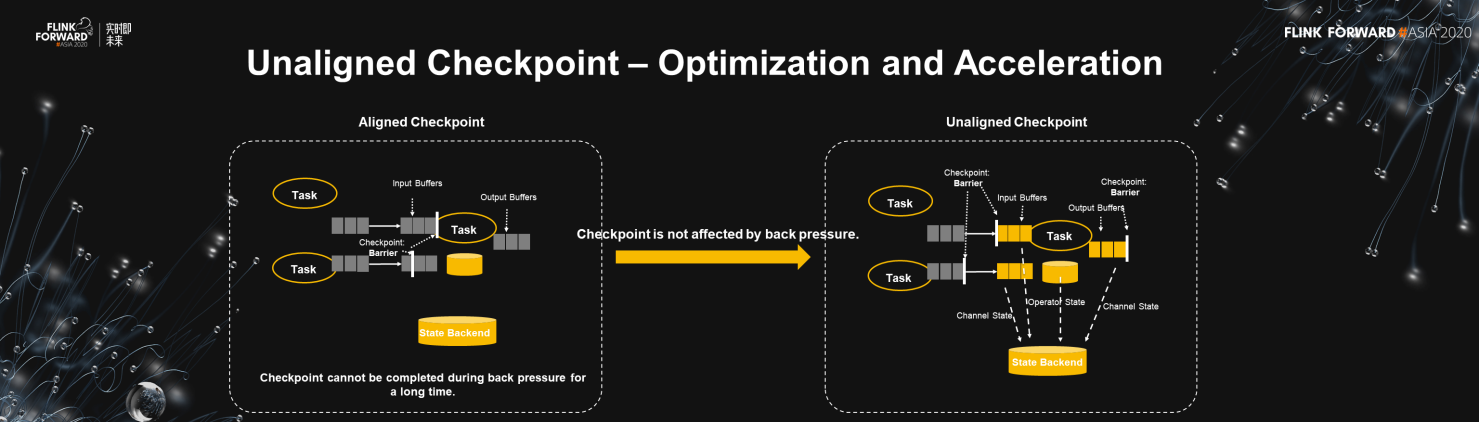

One core part of Flink is checkpointing based on the lightweight distributed snapshot algorithm [2], which allows Flink to guarantee "exactly once" semantics. In this algorithm, each task takes a snapshot of its own state after receiving barriers from all of its upstream tasks, and then passes the barriers on to its downstream tasks. When the barriers finally reach the sink, a complete global snapshot (checkpoint) is obtained. However, when backpressure occurs, barriers cannot flow to the sink, and as a result checkpoints fail to complete. Unaligned Checkpoint is designed to solve this problem. In the unaligned checkpoint mode, Flink snapshots the channel state and output buffers of each task in addition to the task state, so that barriers can be pushed forward to the sink quickly. Hence, checkpoints are no longer affected by backpressure. Flink can switch automatically between unaligned checkpoints and aligned checkpoints (the existing checkpoint mode) according to an alignment timeout, as shown in the figure.
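The difference between the two modes can be illustrated with a toy model. The sketch below is not Flink code: the function names, the integer "state", and the list-based channels are all assumptions made for illustration, and output buffers are left out. It shows that an aligned snapshot must first consume every record ahead of the barriers, while an unaligned snapshot is taken immediately and persists those in-flight records instead, so that both restore to the same state.

```python
BARRIER = object()  # hypothetical marker for a checkpoint barrier in a channel


def records_before_barrier(channel):
    """Return the buffered records that sit ahead of the barrier."""
    return channel[:channel.index(BARRIER)]


def aligned_snapshot(state, channels):
    """Consume every pre-barrier record first, then snapshot task state only."""
    processed = 0
    for channel in channels:
        for record in records_before_barrier(channel):
            state += record
            processed += 1
    return {"task_state": state, "channel_state": []}, processed


def unaligned_snapshot(state, channels):
    """Snapshot at once: task state plus the in-flight pre-barrier records."""
    in_flight = [records_before_barrier(c) for c in channels]
    return {"task_state": state, "channel_state": in_flight}, 0


def restore(snapshot):
    """Rebuild task state by replaying the persisted in-flight records."""
    state = snapshot["task_state"]
    for records in snapshot["channel_state"]:
        state += sum(records)
    return state


# Two input channels with buffered records; the task state is a running sum.
channels = [[1, 2, BARRIER, 9], [3, 4, 5, BARRIER]]
aligned, waited_aligned = aligned_snapshot(10, channels)
unaligned, waited_unaligned = unaligned_snapshot(10, channels)
```

In this model the aligned snapshot has to wait for five records to be processed, which is exactly the work that stalls under backpressure, while the unaligned snapshot waits for none and pays for it with a larger snapshot.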

2) Approximate Failover - More flexible fault tolerance

Another improvement is Approximate Single-Node Failover. Under "exactly once" semantics, the failure of a single node causes all nodes in the execution graph to restart and roll back. However, in some scenarios, AI training in particular, strict exactly-once guarantees matter much less than availability. Therefore, the community introduced Approximate Failover. In this failover mode, the failure of a single node only causes the failed node to restart and recover, while the rest of the pipeline keeps running. Both Kuaishou and ByteDance mentioned in their talks that Approximate Failover is in strong demand in AI training and recommendation scenarios.
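The trade-off described above can be sketched with a toy model. This is not Flink's implementation: the `recover` function, the per-task counters, and the assumption that the failed task restarts from its last checkpointed value are all hypothetical and serve only to show why the result is "approximate".

```python
def recover(task_states, checkpoint, failed, approximate):
    """Return the task states after a single-task failure (toy model)."""
    if approximate:
        # Only the failed task is reset; the other tasks keep their progress.
        recovered = dict(task_states)
        recovered[failed] = checkpoint[failed]
        return recovered
    # Exactly-once: every task rolls back to the same consistent checkpoint.
    return dict(checkpoint)


# Hypothetical per-task progress counters and the last completed checkpoint.
running = {"source": 120, "map": 118, "sink": 115}
checkpoint = {"source": 100, "map": 100, "sink": 100}

exact = recover(running, checkpoint, failed="map", approximate=False)
approx = recover(running, checkpoint, failed="map", approximate=True)
```

With full rollback all three tasks return to 100, so the pipeline is consistent but everything restarts. In the approximate case only `map` is reset, so the pipeline stays available at the cost of the tasks no longer agreeing on a single consistent point.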

3) Nexmark - Streaming Benchmark

Currently, real-time stream computing does not have a standard benchmark that is recognized in the industry. To fill this gap, Flink released the first benchmark tool, Nexmark, based on NEXMark [3]. Nexmark contains 16 standard ANSI SQL queries and is easy to use, with no dependency on external systems. Nexmark is now open source on GitHub and can be used to compare the performance of different stream computing engines.

The second part focused on stream-batch unification. We mentioned at the beginning of this article that 2020 marks a new era of stream-batch unification. Why? Mowen answered this question from three aspects: architecture evolution of stream-batch unification, Flink batch processing performance, and the data ecosystem of stream-batch unification.

1) Architecture Evolution of Stream-Batch Unification

Flink 1.10 and 1.11 improved stream-batch unification at the SQL and Table layers and made it production-ready. The recently released Flink 1.12 brings stream-batch unification to the DataStream layer. Starting from Apache Flink 1.13, the DataSet APIs will be phased out gradually. In the new stream-batch unification architecture, Flink realizes unified stream-batch expression, unified stream-batch execution, and unified pluggable runtime support.

2) Batch processing performance

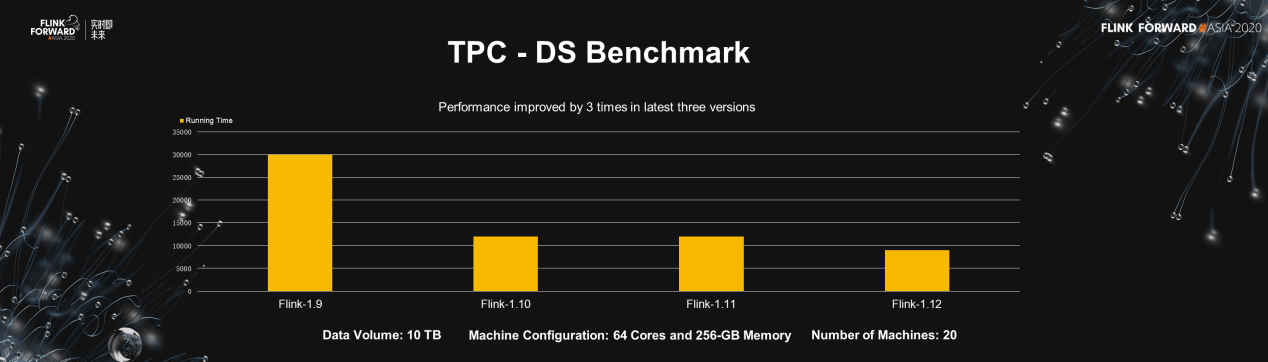

Based on TPC-DS, the batch processing performance of Flink 1.12 is three times faster than that of Flink 1.9 (last year's version)! With a data volume of 10 TB and 20 instances of 64-core CPUs, the execution time of TPC-DS is reduced to less than 10,000 seconds. This means that the performance of Flink batch processing is equivalent to, if not better than, that of any mainstream batch engine in the industry.

3) Stream-batch unification data ecosystem

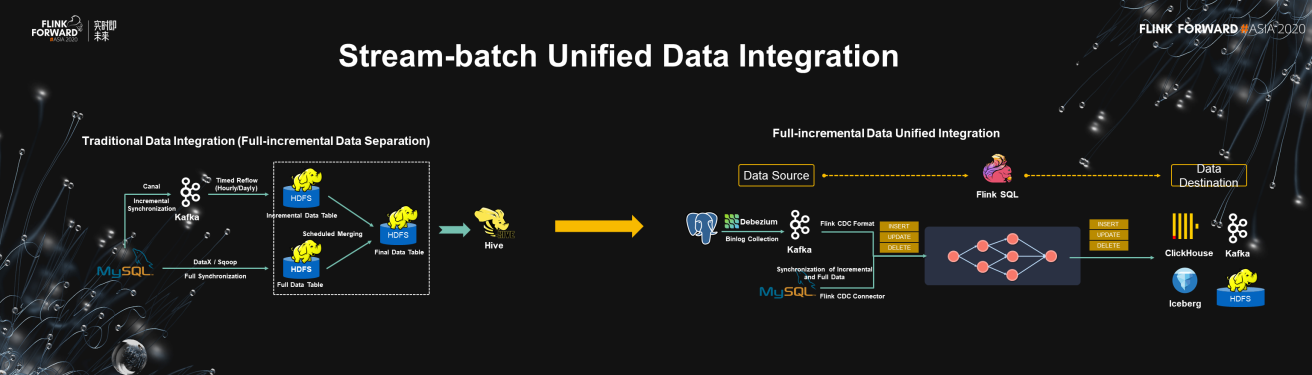

Mowen pointed out that stream-batch unification is not only a technical issue, but also plays a profound part in the evolution of the data ecosystem in the industry. Typical scenarios include data synchronization (from databases to data warehouses) and stream-batch unified data warehouse/Data Lake architectures. Traditional data synchronization periodically merges the full data set with its incremental part, while Flink provides hybrid connectors that support unified synchronization of both full and incremental data. After reading the full data set from a database, a hybrid connector automatically switches to incremental mode and reads binlogs through CDC for incremental synchronization. The switch between full and incremental mode is seamless and automatic.

A traditional data warehouse architecture maintains separate data workflows for real-time and offline processing. This causes three problems: redundant development processes (one real-time and one offline), redundant data pipelines (data cleansing, filling, and filtering are done twice), and inconsistent computation results (real-time and offline results differ). A Flink-based architecture unifies the real-time and offline workflows and can solve all three problems.
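The full-then-incremental switch can be sketched in a few lines. The code below is a simplified illustration, not the Flink connector API: `hybrid_sync`, the `(offset, op, key, value)` event layout, and the use of a dictionary as the target table are all assumptions. The point it shows is that the snapshot records the binlog offset it was taken at, so the incremental phase can skip changes the snapshot already contains.

```python
def hybrid_sync(snapshot_rows, snapshot_offset, binlog):
    """Load a full snapshot, then apply only the binlog events after it."""
    target = dict(snapshot_rows)  # full phase: copy the snapshot
    for offset, op, key, value in binlog:  # incremental phase
        if offset <= snapshot_offset:
            continue  # change is already reflected in the snapshot
        if op == "delete":
            target.pop(key, None)
        else:  # "insert" and "update" both overwrite the row
            target[key] = value
    return target


# Hypothetical table state at binlog offset 2, followed by later changes.
snapshot = {1: "a", 2: "b"}
binlog = [
    (1, "insert", 1, "a"),
    (2, "insert", 2, "b"),
    (3, "update", 2, "B"),
    (4, "delete", 1, None),
    (5, "insert", 3, "c"),
]
synced = hybrid_sync(snapshot, snapshot_offset=2, binlog=binlog)
```

Because both phases run in one job against one target, no periodic merge of full and incremental data is needed.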

In addition, Flink's unified stream-batch computation perfectly solves the problem that Data Lake faces: unified stream-batch storage. Currently, the mainstream Data Lake solutions, such as Iceberg and Hudi, have been integrating with Flink. Among them, Flink has completely integrated with Iceberg. The integration of Flink + Hudi is also actively being implemented.

The third major part is integration with AI. Mowen summarized the integration progress of Flink with AI in 2020 from three aspects: language, algorithm, and workflow management of big data and AI. For languages, PyFlink, Flink's Python interface, is gradually maturing.

Python is the main development language for AI data scientists, so it is important that Flink supports Python in many dimensions. Flink's DataStream API and Table API can both be used from Python, so users can develop Flink programs in pure Python. Moreover, Flink SQL supports Python UDFs/UDTFs, and PyFlink integrates with many commonly used Python libraries, such as Pandas. Pandas UDFs/UDAFs can be called directly in PyFlink.

In terms of algorithms, Alink (see https://github.com/alibaba/alink ) was made open source last year. Alink is a traditional machine learning algorithm library based on Flink. In 2020, dozens of new algorithms were open-sourced and added to Alink. In addition, Alink provides large-scale distributed training based on parameter servers, and makes the path from training to prediction services smoother.

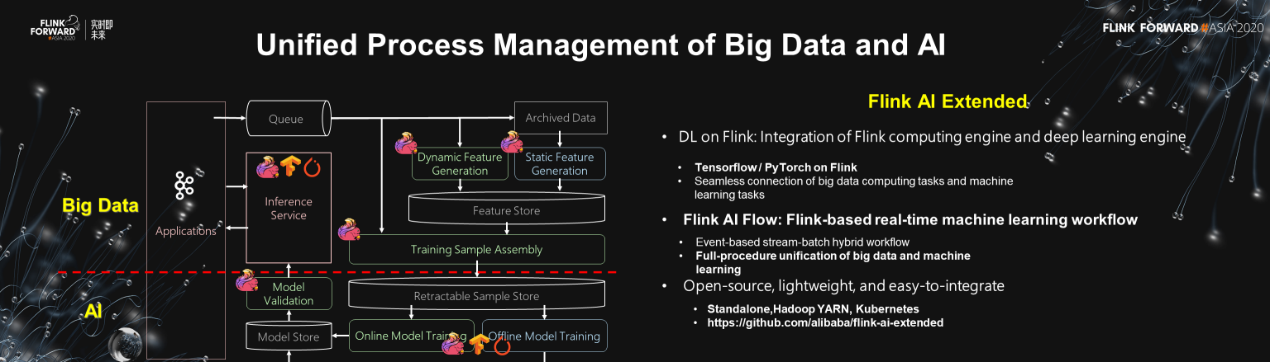

The workflow management of big data and AI is also worth exploring. The essence of this issue is how to design a management architecture for unified online and offline machine learning, and a unified workflow management for big data and machine learning. The complete solution, Flink AI Extended, supports the integration of deep learning engines with the Flink computing engine (TensorFlow and PyTorch on Flink). Flink AI Extended is now open source.

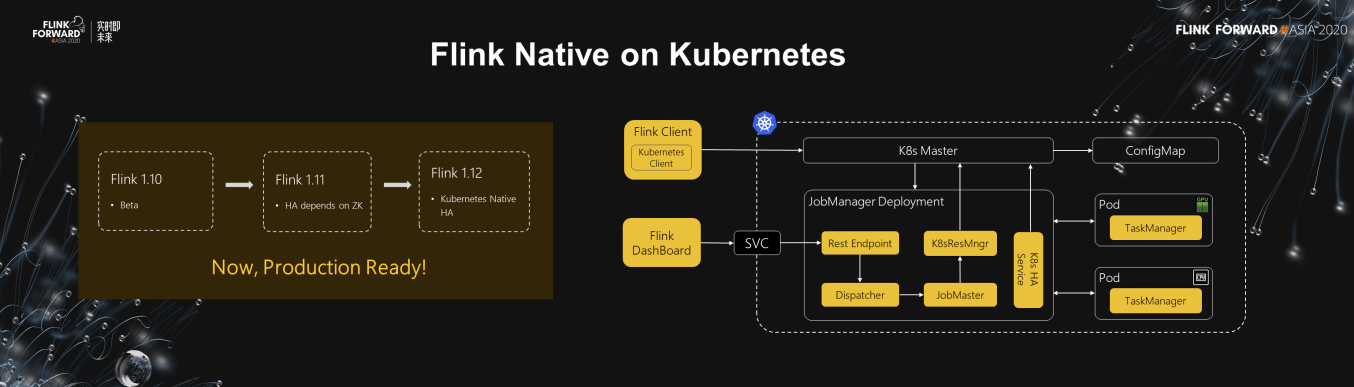

Another important direction is the deep integration of Flink with cloud-native Kubernetes. Kubernetes is widely used in a variety of online services, and its ecosystem is developing rapidly. It can provide Flink with better O&M in production. Over three releases, from Flink 1.10 to Flink 1.12, Flink has become able to run natively on Kubernetes. Flink can now use the Kubernetes native HA solution and no longer depends on ZooKeeper. Flink on Kubernetes is production-ready! In addition, Flink's JobManager can communicate directly with the Kubernetes master, supporting dynamic scaling and GPU resource management.

Next, Mowen shared the development of Flink in Alibaba, which is also the largest user and promoter of Flink. In 2016, Flink made its debut in the Double 11 search and recommendation scenario, where it was used for real-time search, recommendation, and online learning. In 2017, Flink became the standard solution for real-time computing in Alibaba Group. In 2018, Flink became officially available on Alibaba Cloud to better serve small and medium-sized enterprises.

In 2019, Alibaba acquired Ververica, the start-up founded by Flink's creators, and donated Blink back to the community in the same year. By 2020, Flink had become the global de facto standard for real-time computing. Major cloud providers such as Alibaba Cloud and AWS, and big data providers such as Cloudera, have adopted Flink as a standard cloud product. As of this year's Double 11, Flink is the standard real-time solution for all Alibaba business units, including Ant, DingTalk, and Cainiao, running on millions of CPU cores in total. This year, Flink doubled its business capacity without adding any resources. The peak of real-time data processing during this year's Double 11 reached 4 billion records per second.

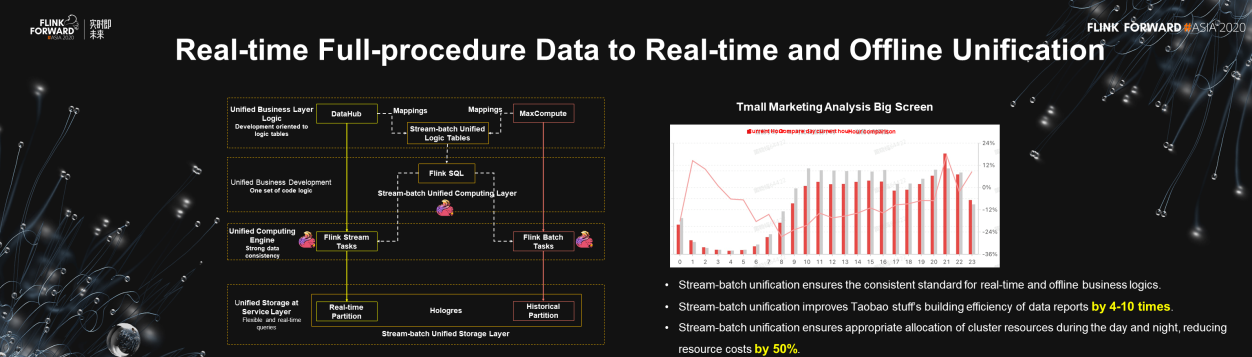

Mowen stressed that "Flink as the real-time computation standard" is just the beginning. Alibaba's goal is "real-time and offline unification". In 2020, for the first time, stream-batch unification was formally used in Tmall's big-screen marketing analysis, a core Double 11 scenario, and it brought huge benefits. The unified real-time and offline data logic ensured consistent data results, which helped improve business development efficiency by 4-10 times. In addition, resource costs were reduced by 50% thanks to the off-peak scheduling of stream and batch tasks, as shown in the above figure. Currently, many companies, such as ByteDance, Meituan, Kuaishou, Zhihu, and NetEase, are exploring how to apply Flink stream-batch unification to their businesses.

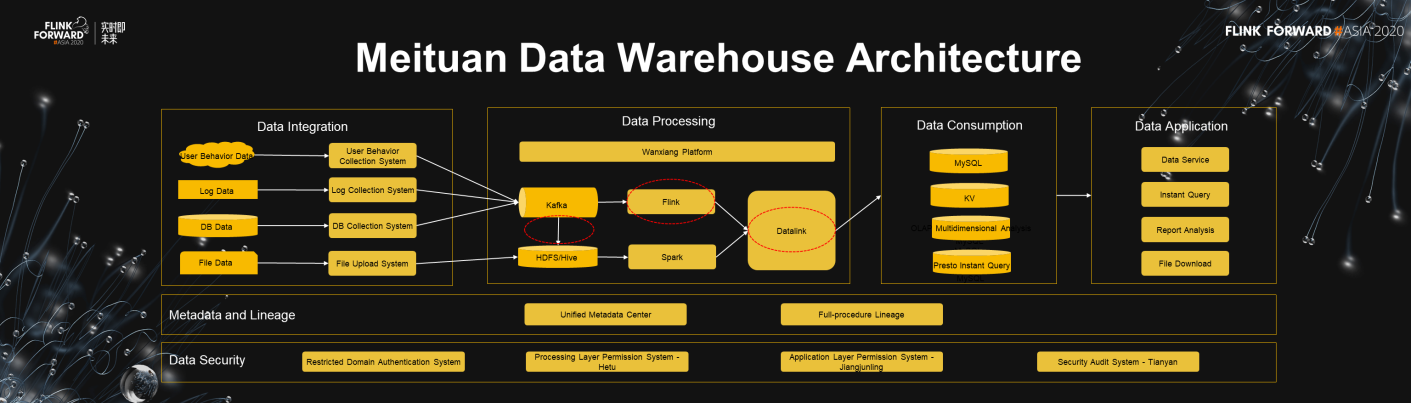

The second topic came from Ju Dasheng, head of Meituan real-time computing, who shared how Flink is applied in Meituan. Ju first presented the overall structure of the Meituan data warehouse, as shown in the figure. The data architecture of Meituan includes four parts: data integration system, data processing system, data consumption, and data application. Flink is mainly used in Kafka2Hive, real-time data processing, and Datalink (the red circles in the figure). The main scenarios in which Meituan uses Flink include real-time data warehouses, real-time analysis, search and recommendation, risk monitoring and control, and security and audit. These are typical scenarios for Flink applications today. In Meituan, the daily peak data processed by Flink reaches 180 million records per second.

There were two interesting parts in Meituan's talk. First, Ju proposed the concept of "incremental data production". This is similar to the idea of unifying full-set and incremental data processing mentioned by Mowen. However, the concept differs slightly in that it includes the tradeoff among processing latency, data quality, and production costs. In other words, it asks how to control costs and improve data quality in a data warehouse once timeliness requirements are met.

Second, Meituan shared how they solved the synchronization problem of distributed heterogeneous data sources with a Flink-based system (Datalink). The Flink-based synchronization system can distribute synchronization tasks across clusters through the Task Manager, improving the extensibility of the overall architecture. In addition, offline and real-time synchronization tasks can be unified in the Flink framework, so all offline and real-time synchronization components can be shared. At present, Meituan has not fully realized stream-batch unification at the data processing layer. Therefore, Ju said that the future goal was to achieve stream-batch unification in both data processing and data storage.

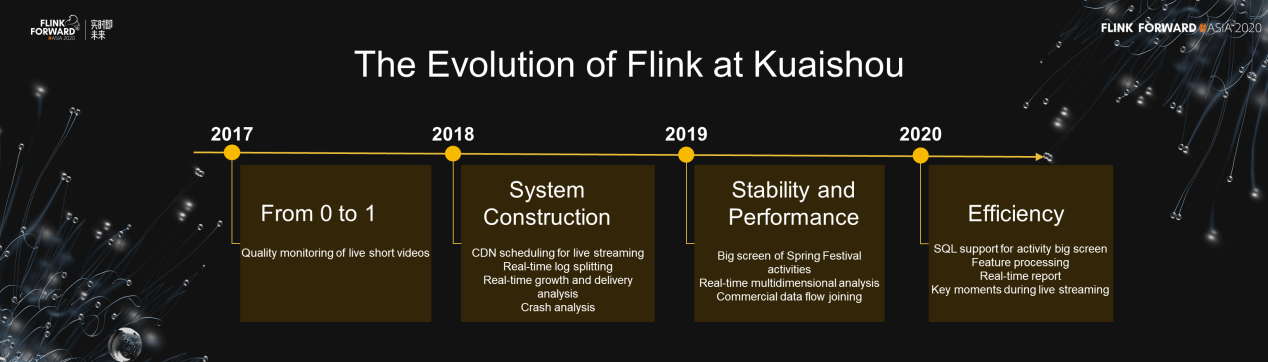

The third topic was presented by Zhao Jianbo, head of the Kuaishou big data architecture team. He mainly shared the reasons for choosing Flink for Kuaishou's real-time computing, the internal application scenarios of Flink in Kuaishou, and the related technical improvements in these application scenarios.

The reasons why Kuaishou selected Flink as its standard real-time computing engine also explain why Flink has become the standard for real-time computing in the entire industry. 1) Sub-second processing latency is a strict requirement for Kuaishou's internal real-time applications. 2) Various window computing modes, built-in standard state storage, and the exactly-once consistency guarantee greatly simplify business development and debugging. 3) The evolution of the stream-batch unification architecture further simplifies data and business architectures.

Kuaishou expressed great expectations for applying Flink's stream-batch unification to all of its data scenarios.

Kuaishou has been using Flink since 2017, and this year is the fourth year. The development process of Flink in Kuaishou is shown in the above figure. The main scenarios in which Kuaishou uses Flink include real-time ETL data integration, real-time reports, real-time monitoring, and real-time feature processing/engineering (AI). Currently, the daily peak reaches 600 million records. Kuaishou shared very detailed examples for each of the preceding scenarios, especially feature processing/engineering, which is representative of AI scenarios.

Zhao also presented SlimBase, a state storage developed and used internally within Kuaishou. SlimBase has three layers: State Interface layer, KV Cache layer, and Distributed File System layer. The KV Cache is the key to accelerating read operations. When the KV Cache layer is hit, SlimBase improves read/write efficiency by 3-9 times compared with RocksDB. When a request misses the KV Cache layer and the file system has to be accessed, read performance decreases.

In addition to SlimBase, Zhao shared some solutions used in Kuaishou to improve Flink's stability in cases of hardware faults, dependent service exceptions, and task overload. For future planning, Zhao said they would definitely promote Flink's stream-batch unification within Kuaishou. Kuaishou would also complete its real-time AI data flow with Flink's stream-batch unification to speed up the iteration of model training. As Flink is used in more and more business scenarios, Kuaishou places higher requirements on Flink's stability, for example, the capability of fast failover. Therefore, Kuaishou will invest more in this aspect as well.
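The layering described above resembles a read-through cache in front of slower storage. The sketch below is only an illustration of that general pattern, not SlimBase's design: the class name, the LRU eviction policy, the write-through `put`, and the dictionary standing in for the distributed file system are all assumptions. It counts file system reads to make the cost of a cache miss visible.

```python
from collections import OrderedDict


class LayeredStateStore:
    """Toy state store: a small LRU cache in front of slower file storage."""

    def __init__(self, cache_capacity):
        self.cache = OrderedDict()  # stands in for the KV Cache layer
        self.files = {}             # stands in for the distributed file system
        self.capacity = cache_capacity
        self.file_reads = 0         # counts slow-path reads

    def put(self, key, value):
        # Write-through (an assumption): persist first, then cache.
        self.files[key] = value
        self._cache_put(key, value)

    def get(self, key):
        if key in self.cache:  # cache hit: fast path
            self.cache.move_to_end(key)
            return self.cache[key]
        self.file_reads += 1   # cache miss: fall back to the file layer
        value = self.files[key]
        self._cache_put(key, value)
        return value

    def _cache_put(self, key, value):
        self.cache[key] = value
        self.cache.move_to_end(key)
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict least recently used


store = LayeredStateStore(cache_capacity=2)
for k, v in [("a", 1), ("b", 2), ("c", 3)]:
    store.put(k, v)

hit = store.get("c")            # still cached, no file read
reads_after_hit = store.file_reads
miss = store.get("a")           # evicted earlier, served from the file layer
reads_after_miss = store.file_reads
```

When the working set fits in the cache, reads never touch the file layer. Once it no longer fits, every miss adds a file system access, which is the performance drop described in the text.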

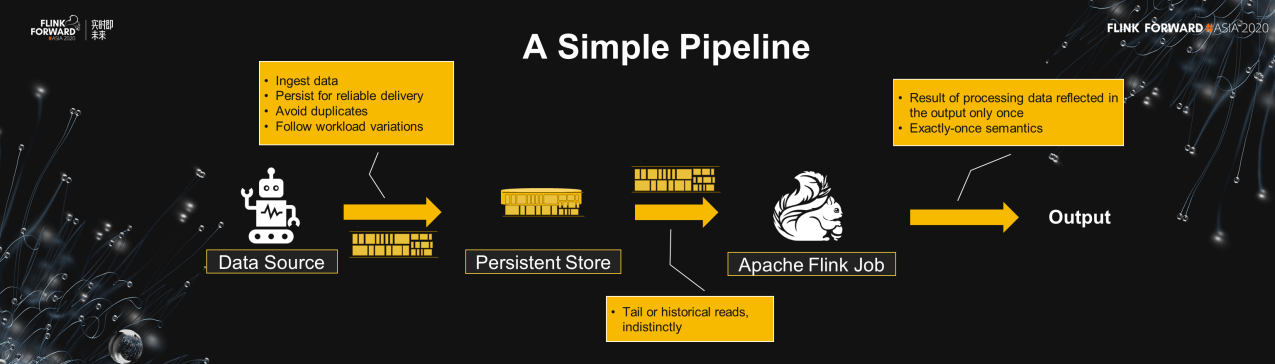

The last topic was about the streaming storage system Pravega, presented by Teng Yu, the software development director of Dell Technology Group. The talk discussed an abstraction for streaming storage called the Stream Abstraction. The traditional file system is not an ideal abstraction for streaming storage, for the following reasons: 1) The size of a file is limited, but streaming data is injected continuously and without bound. 2) The parallelism needs to be adjusted dynamically during continuous data injection, which involves the O&M of multiple files. 3) File system interfaces cannot efficiently support addressing of ordered streaming data. 4) The hybrid abstraction of Kafka + file systems that is commonly used today does not reduce the difficulty of development and maintenance.

To meet these requirements, Dell Technology Group designed the streaming storage system Pravega, based on the Stream Abstraction. Under this abstraction, Pravega can scale streaming storage dynamically and preserve the logical order of stream data after scaling, which makes the addressing problem easier to solve. Because these problems are encapsulated under the Stream Abstraction, streaming storage can integrate seamlessly with the stream computing engine, and stream computing is freed from the complexity of streaming storage. As a result, end-to-end exactly-once data processing is greatly simplified, as shown in the preceding figure. Pravega is currently a CNCF open-source project. In the latest post on the official Pravega blog, Pravega published performance results based on the OpenMessaging Benchmark, compared with Kafka and Pulsar.

In addition to the above four talks from Alibaba, Meituan, Kuaishou, and Dell Technology Group, more than 40 enterprises and organizations gave talks on industry practice, core technology, the open-source ecosystem, the financial industry, machine learning, and real-time data warehouses. Tmall, ByteDance, Amazon, LinkedIn, iQiyi, Ant, TAL, Weibo, Tencent, Zhihu, Jingdong, PingCAP, NetEase, and 360 were among the presenters. These sub-topic talks will be discussed further in future articles.

The year 2020 was an unusual year, but "every cloud has a silver lining." Despite the widespread epidemic, the Flink community continued to prosper in 2020. Flink has been one of the most active Apache projects for several years. Flink has also become the de facto real-time computing standard globally. In the past year, Flink has achieved good results in stream engine kernel, stream-batch unification, AI integration, and Cloud Native. More efforts will be made in these four directions in the future.

The year 2020 marked a new era for Flink. The stream-batch unification was first applied to the core business scenarios of Alibaba's Double 11, making it the starting point for the large-scale application of stream-batch unification in the industry. Let's work together to seize the opportunity, face the challenges, and create a better 2021 for Flink!
