Based on the Flink Forward Asia 2025 talk by Calvin Tran and Yuanzhe Liu, Senior Software Engineers at Grab.

In today's data-driven world, the speed of insight is a critical competitive advantage. For a company operating at the scale of Grab—Southeast Asia's leading super-app—this isn't just a goal; it's a necessity. With millions of transactions generating a torrent of data every minute, the pressure on Grab's internal data platform is immense. This platform must support over 600 data pipelines running on 200+ Kafka streams, posing a clear challenge: how do you transform this raw data into timely, accurate, and actionable insights for everyone, from data scientists to business analysts?

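At Flink Forward Asia 2025, engineers from Grab detailed their journey of building a robust real-time analytics ecosystem. Their solution rests on two pillars built with Apache Flink: a self-service, SQL-based platform that lets internal users build their own real-time pipelines, and a real-time data quality monitoring system built around data contracts.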

Let's dive into how Grab tackled these challenges to turn their data streams into a strategic asset.

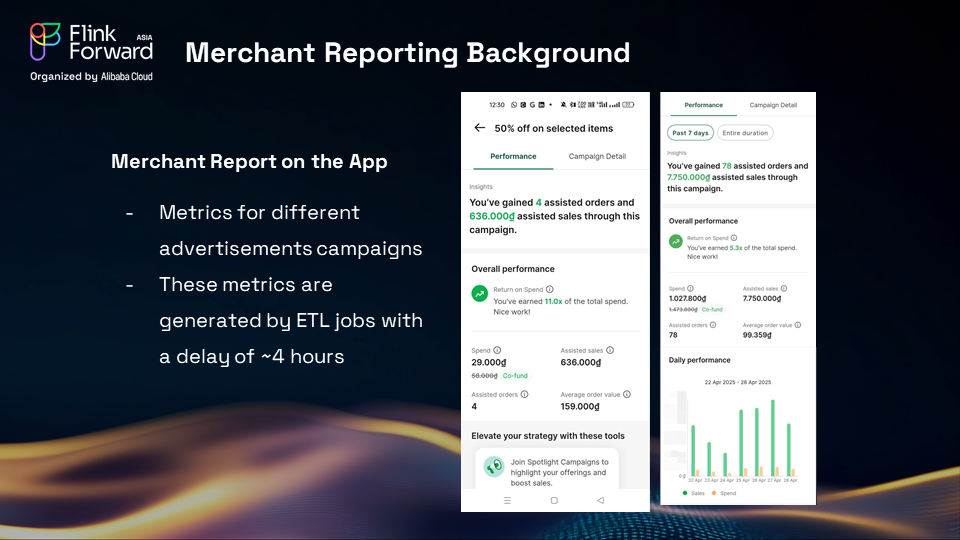

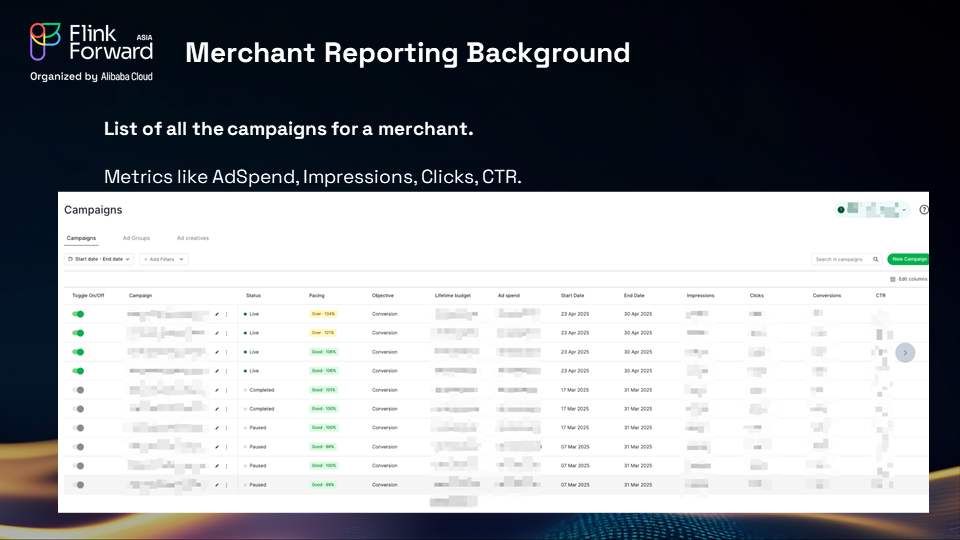

One of Grab's key services is providing merchants—such as restaurant owners using GrabFood—with tools to manage their business. This includes an advertising platform where they can launch campaigns to attract more customers. To succeed, these merchants need to understand campaign performance in near real-time.

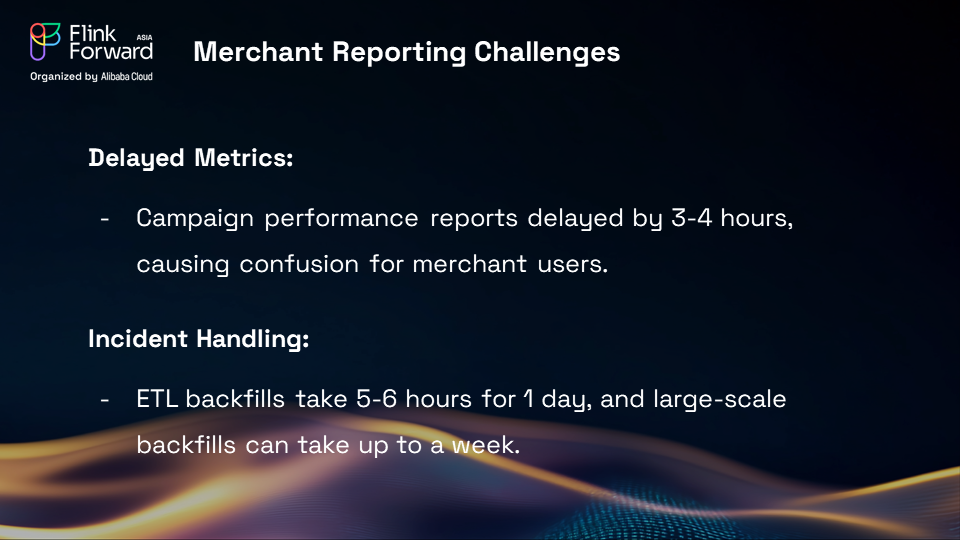

However, their initial reporting pipeline was built on a data lake, which introduced a delay of three to four hours. This lag created significant problems:

To solve this, Grab built a self-service analytics platform with a simple but powerful philosophy. The motivation came from asking "who is our user who can use our platform?" The answer included both data scientists and business users. Since "everyone inside Grab knows SQL," the team concluded that they just needed to pick the right platform, Apache Flink, to provide a self-service tool that "everyone can use." By abstracting the underlying complexity of stream processing, they could enable a wider audience to build their own real-time pipelines.

The platform's workflow is elegantly simple:

This "set and forget" model removes the engineering team as a dependency for creating reports, freeing them to focus on improving the platform itself.

With the new platform, the merchant reporting system was transformed. Now, merchants and advertisers have access to dashboards with metrics like ad spending, impressions, and clicks, all updated in real-time. This allows them to:

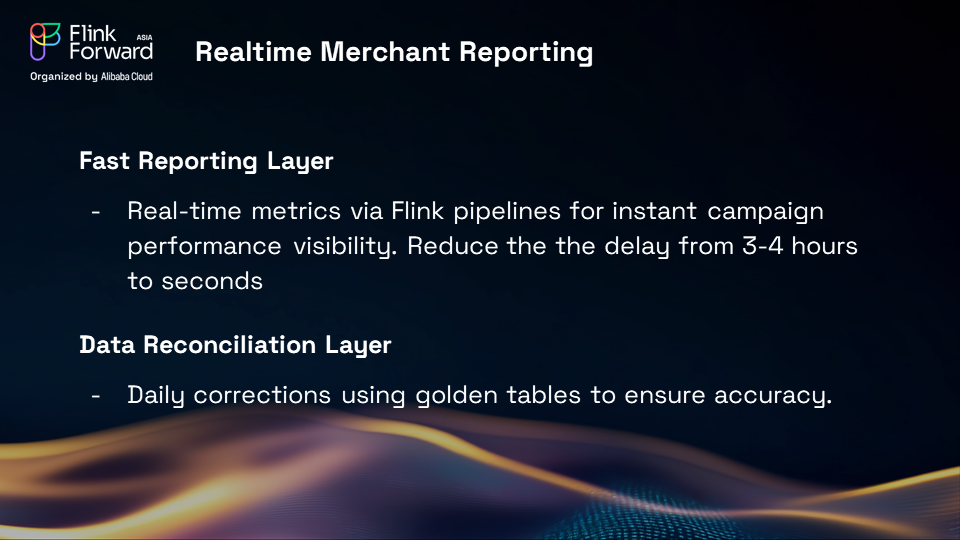

The core issue was latency: ETL processes delayed critical metrics by 3-4 hours, severely impacting business decisions.

"If a food staller looks at the app four hours later and says, 'Why don't I have any sales even though I'm running campaigns?' — it doesn't feel right," Tran illustrates.

When metrics calculations needed adjustments, backfill operations could take:

This delay frustrated merchants and hindered campaign optimization efforts.

Grab implemented a fast reporting layer using Flink, transforming reporting latency from hours to milliseconds. The architecture includes:

While reporting might appear straightforward, the underlying logic can be highly complex:

"All of the logic comes from the user," Tran emphasizes. "Without SQL knowledge, users couldn't create these reports, and we couldn't handle all the requests manually."

Real-time data is only useful if it's correct. As Grab's platform empowered more users to build real-time pipelines, a new question emerged: how can we guarantee the accuracy and quality of the data flowing through these systems?

Yuanzhe Liu identified three core challenges in monitoring streaming data quality:

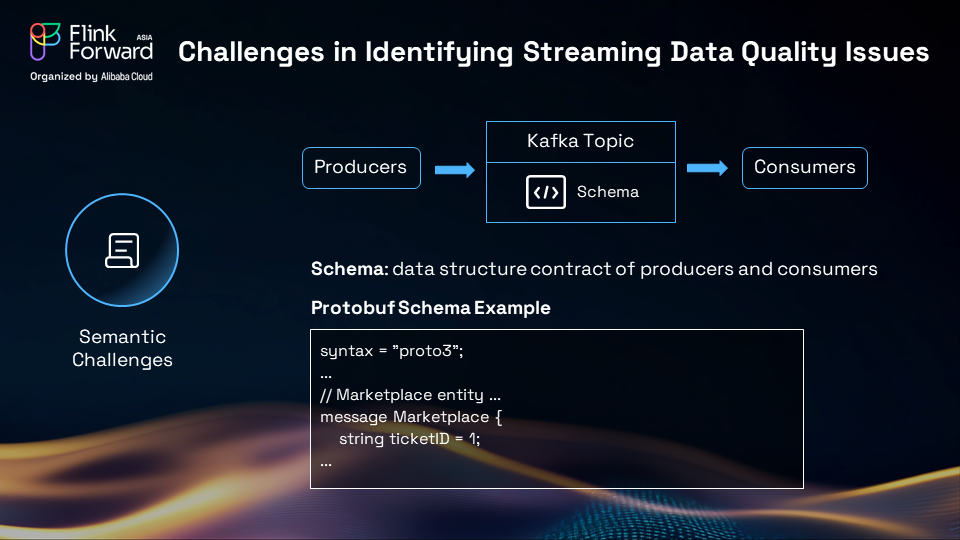

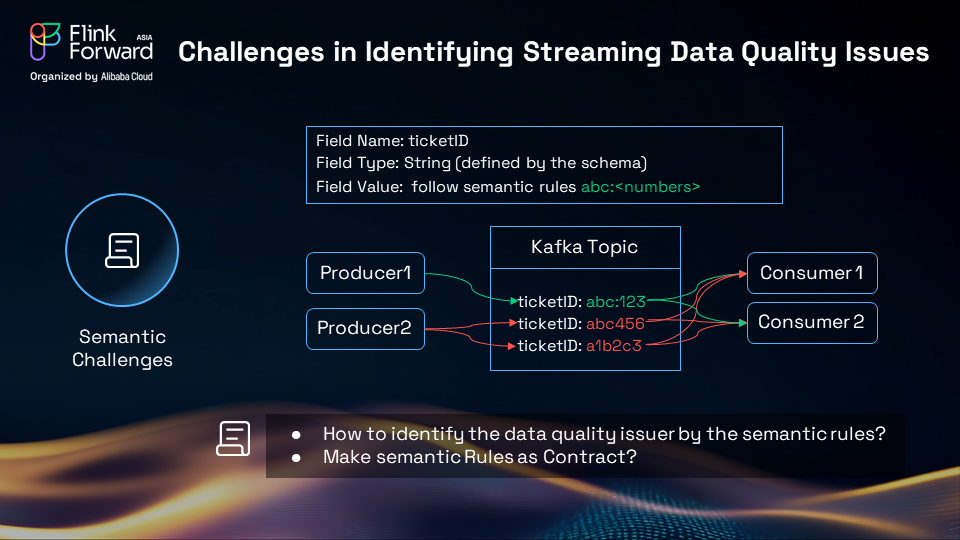

Semantic Correctness

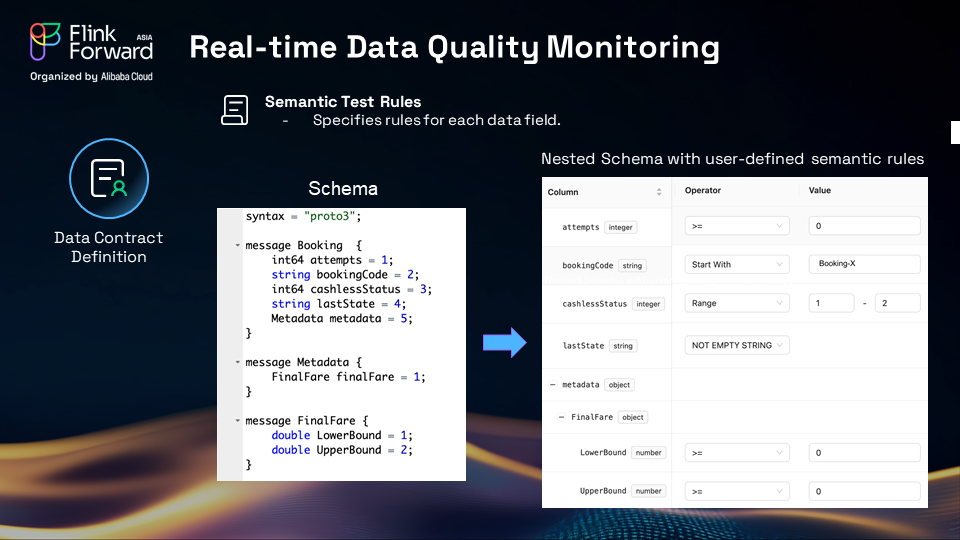

The first challenge lies in differentiating schema correctness from semantic correctness. As Liu explained, a schema is a structural contract. For instance, a schema might define a ticket_id field as a string. However, the business logic might require a specific semantic rule, such as the ticket_id must follow a pattern like ABC:[numbers]. The problem arises when multiple producers use the same, correct schema, but one of them sends semantically invalid data. Liu describes this scenario: "the other producers using the same correct schema but instead... trying to produce something that violates the rules... both of them... so during the execution there won't be any issue but the result is there are some... wrong data that violates the rules exist on the same topic which might affect the downstream users." This creates a situation where structurally valid "poison data" flows undetected into the system.
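The gap between the two kinds of correctness can be illustrated with a minimal Python sketch. The regular expression is an assumption based on the "ABC:[numbers]" example above, and the function names are hypothetical; nothing here is taken from Grab's implementation.

```python
import re

# Assumed semantic rule: the text only says "a pattern like ABC:[numbers]".
TICKET_ID_PATTERN = re.compile(r"ABC:[0-9]+")


def is_schema_valid(record: dict) -> bool:
    """Structural check only: ticket_id is present and is a string."""
    return isinstance(record.get("ticket_id"), str)


def is_semantically_valid(record: dict) -> bool:
    """Structural check plus the business rule on the value itself."""
    return (
        is_schema_valid(record)
        and TICKET_ID_PATTERN.fullmatch(record["ticket_id"]) is not None
    )


good = {"ticket_id": "ABC:12345"}
poison = {"ticket_id": "XYZ-12345"}  # still a string, so the schema accepts it
```

Both records pass the schema check, but only `good` passes the semantic check, which is why this kind of poison data raises no error at produce or consume time.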

Timeliness

The second challenge is the slow, reactive cycle of issue detection and resolution. Liu painted a clear picture of the typical workflow: "producer... produce some poison data into our Kafka... after some time our... consumer... identify this poison data then they report this kind of data to the producer side then after the producer... receive this kind of complaints then they try to fix this kind of data issue." The critical problem is the delay. As Liu emphasized, "the entire flow... may take a few hours or even days from the poison data producing to the... producer... fix[ing] their logic." This latency means bad data can corrupt analytics and downstream systems for an extended period before a fix is even attempted.

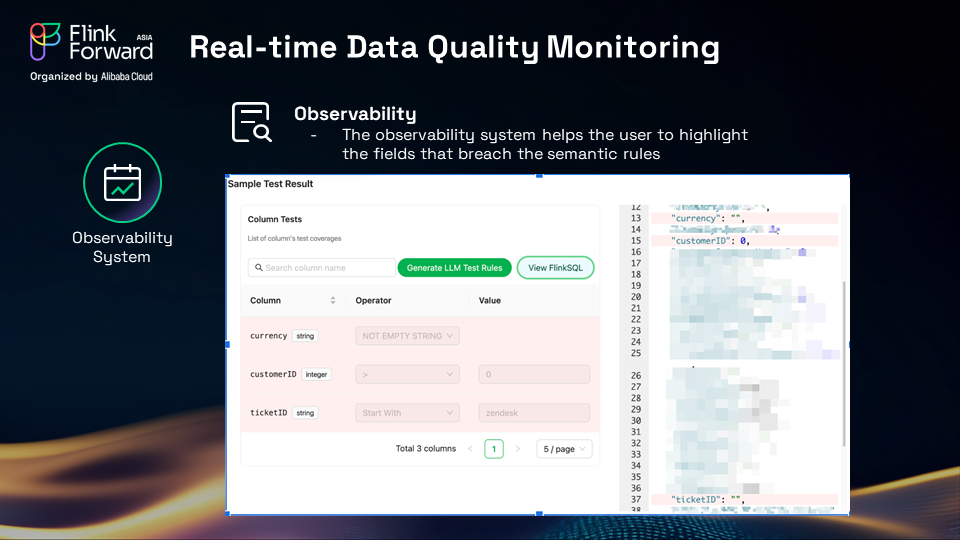

Observability and Root Cause Analysis

Finally, even after an issue is flagged, the challenge is not over. The third critical hurdle, as Liu put it, is "once we identified there is a data quality issue, how fast can we figure out what's the cause of the issue?" Simply knowing that bad data exists is insufficient. The true bottleneck is the manual, time-consuming process of debugging to find the root cause. Without immediate context and clear examples of the failing data, developers are left to sift through logs and samples, delaying the fix and prolonging the impact of the data quality problem.

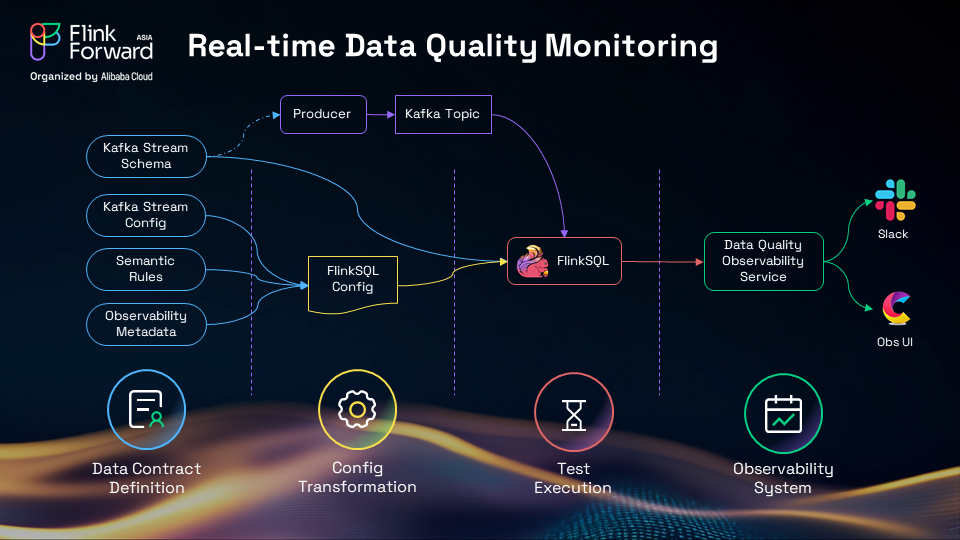

To address these challenges, Grab developed a sophisticated real-time data quality monitoring system built around the concept of Data Contracts. A data contract is a formal agreement, defined as code, that specifies the semantic rules and quality expectations for a data stream.

Their solution consists of four key components:

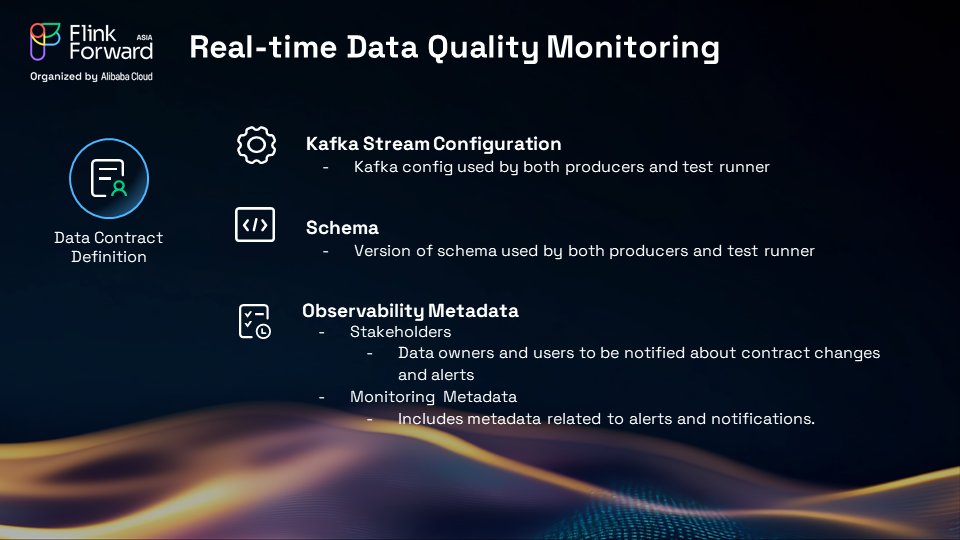

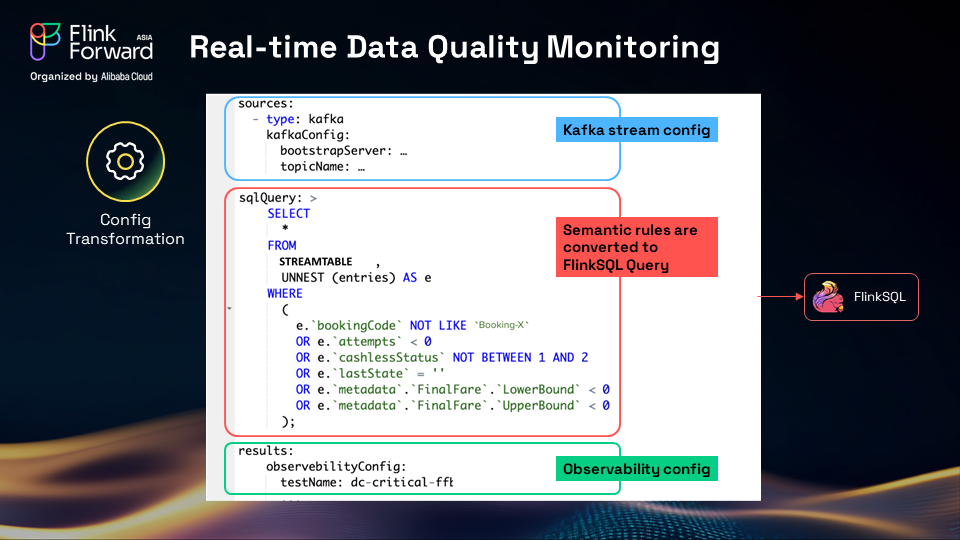

Users define their data contract in a simple configuration file. This includes:

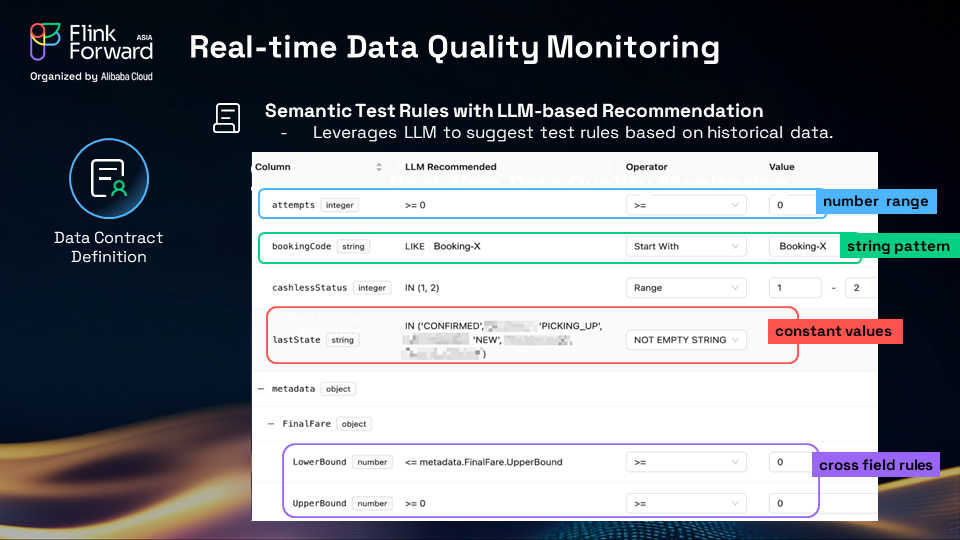

To make this even easier, the system can recommend validation rules by analyzing historical data for common patterns, constant values, and even cross-field relationships (e.g., end_time must be after start_time).
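One way such recommendations could work is sketched below in plain Python. This is an illustrative heuristic, not the algorithm Grab described: it proposes a rule only when it holds for every row of a historical sample, and it assumes all rows share the same keys. The function name, field names, and sample values are hypothetical.

```python
from typing import Any


def recommend_rules(rows: list[dict[str, Any]]) -> list[str]:
    """Suggest candidate rules that hold for every row in the sample."""
    if not rows:
        return []
    rules = []
    fields = sorted(rows[0].keys())

    # Constant values: a field that never changes in the sample.
    for field in fields:
        values = {row[field] for row in rows}
        if len(values) == 1:
            rules.append(f"{field} = {next(iter(values))!r}")

    # Cross-field relationships: numeric field a is always below field b.
    numeric = [
        f for f in fields
        if all(isinstance(r[f], (int, float)) and not isinstance(r[f], bool)
               for r in rows)
    ]
    for a in numeric:
        for b in numeric:
            if a != b and all(r[a] < r[b] for r in rows):
                rules.append(f"{a} < {b}")
    return rules


sample = [
    {"country": "SG", "start_time": 100, "end_time": 160},
    {"country": "SG", "start_time": 200, "end_time": 230},
    {"country": "SG", "start_time": 300, "end_time": 420},
]
suggested = recommend_rules(sample)
```

For this sample, `suggested` contains a constant-value rule for `country` and the ordering rule `start_time < end_time`. A real system would presumably let the user review the suggestions before adding them to a contract, since a rule that holds on a sample need not hold in general.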

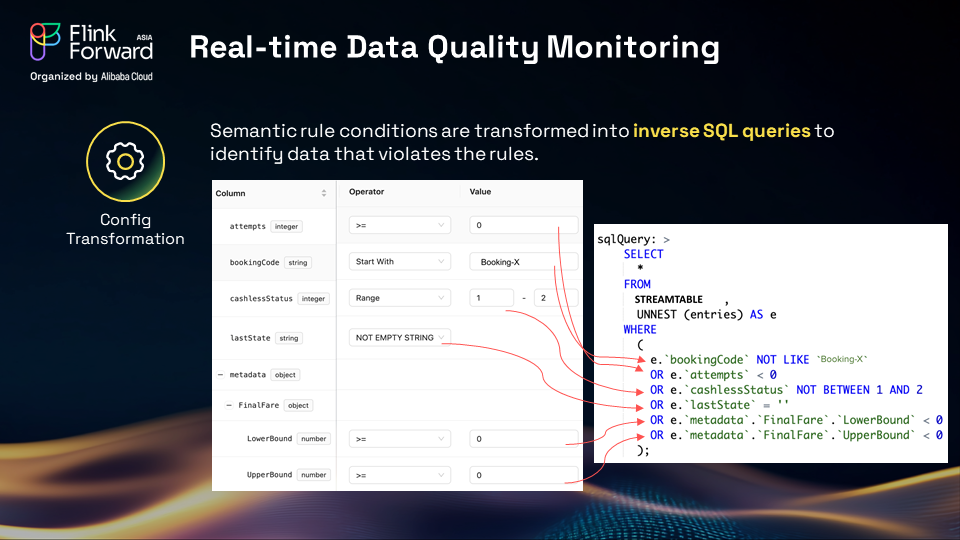

This is the most ingenious part of the system. Instead of writing queries to find valid data, the platform automatically transforms the user-defined rules into inverse Flink SQL queries. These queries are designed specifically to find the bad data that violates the contract.

This approach is highly efficient, as Flink only needs to process and flag the exceptions.
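The inversion idea can be sketched as a small Python generator that wraps each rule in a violation-finding query. The rule format, table name, and function name are assumptions for illustration; the talk does not show Grab's actual generator. `REGEXP` and `IS NOT TRUE` are standard Flink SQL constructs.

```python
def to_inverse_sql(table: str, rules: dict[str, str]) -> dict[str, str]:
    """Build one violation-finding query per rule.

    `rules` maps a rule name to a SQL boolean expression that valid rows
    satisfy. Wrapping it in `IS NOT TRUE` selects rows where the expression
    is FALSE or NULL, so missing values are flagged as well.
    """
    return {
        name: f"SELECT * FROM {table} WHERE ({condition}) IS NOT TRUE"
        for name, condition in rules.items()
    }


# Hypothetical contract rules, written as conditions that good data meets.
rules = {
    "ticket_id_format": "REGEXP(ticket_id, '^ABC:[0-9]+$')",
    "end_after_start": "end_time > start_time",
}
queries = to_inverse_sql("tickets", rules)
```

Using `IS NOT TRUE` rather than a plain `NOT (...)` is a deliberate choice in this sketch: in SQL, `NOT` applied to a NULL result is still NULL, so a row with a missing field would otherwise slip past the check.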

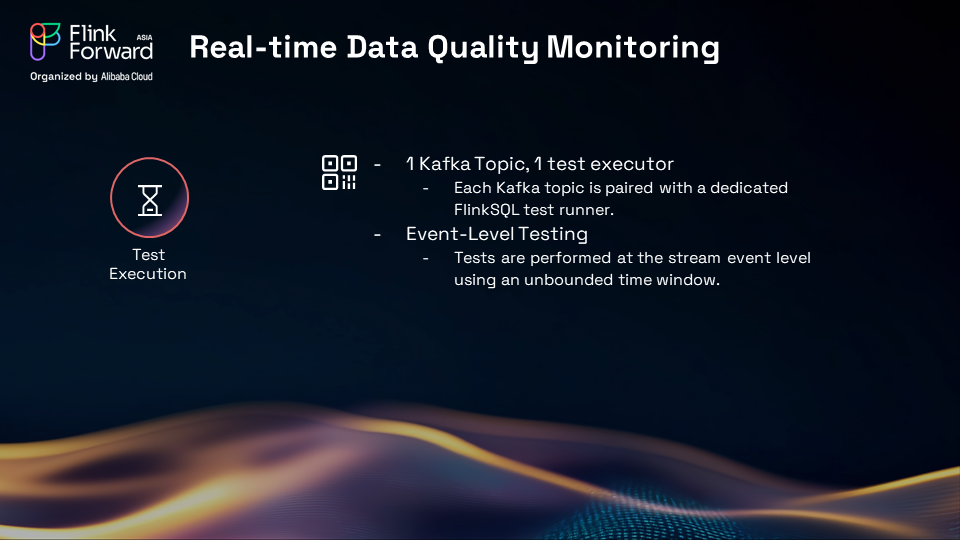

The generated inverse SQL queries are executed as a standard Flink job. This "Test Runner" job consumes data from the live production stream and, if it finds any records matching the inverse query (i.e., bad data), it immediately passes them to the observability system.
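The runner's behavior can be approximated in plain Python as a filter over a stream that emits only the offending records. This is a stand-in for the idea, not a Flink job: the in-memory list plays the role of the production stream, and the rule name and record fields are hypothetical.

```python
from typing import Callable, Iterable, Iterator

Record = dict
Violation = tuple[str, Record]  # (rule name, offending record)


def run_tests(
    stream: Iterable[Record],
    inverse_checks: dict[str, Callable[[Record], bool]],
) -> Iterator[Violation]:
    """Yield (rule, record) for every record that matches an inverse check."""
    for record in stream:
        for rule, is_bad in inverse_checks.items():
            if is_bad(record):
                yield rule, record


# Each check returns True for BAD data, mirroring an inverse query.
inverse_checks = {
    "end_after_start": lambda r: not (r["end_time"] > r["start_time"]),
}
stream = [
    {"id": 1, "start_time": 10, "end_time": 20},
    {"id": 2, "start_time": 30, "end_time": 25},  # violates the rule
]
violations = list(run_tests(stream, inverse_checks))
```

Only the record with `id` 2 is emitted, tagged with the rule it broke. Carrying the rule name alongside the record is what would let a downstream alert or dashboard show which expectation failed and on which data.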

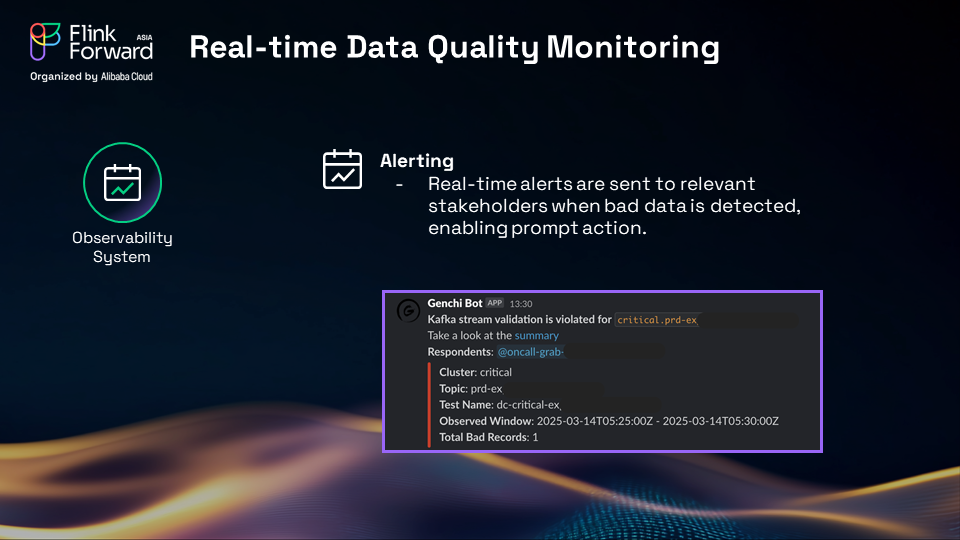

When bad data is detected, two things happen instantly:

Actionable Dashboard: The alert includes a link to an observability dashboard that provides all the context needed for debugging. The dashboard shows:

This complete feedback loop reduces debugging time from hours or days to just a few minutes, enabling teams to fix issues before they have a significant downstream impact.

Grab's journey offers a powerful blueprint for any organization looking to build a robust and user-friendly real-time analytics ecosystem with Apache Flink. The key principles are:

By combining the raw power of Apache Flink with thoughtful platform design, Grab has successfully transformed its massive data streams from a complex challenge into a source of clear, reliable, and actionable insights.
