In some data processing scenarios, data must be processed multiple times to reach the final result. For example, machine learning training iterates repeatedly over the same batch of data to obtain an optimal model, and graph computation needs multiple rounds of propagation to produce its final result. This cyclic processing pattern is also common in Flink's stream processing. However, if a system failure occurs during an iteration, how do we ensure that data isn't lost or duplicated? This is the problem FLIP-16 attempts to solve.

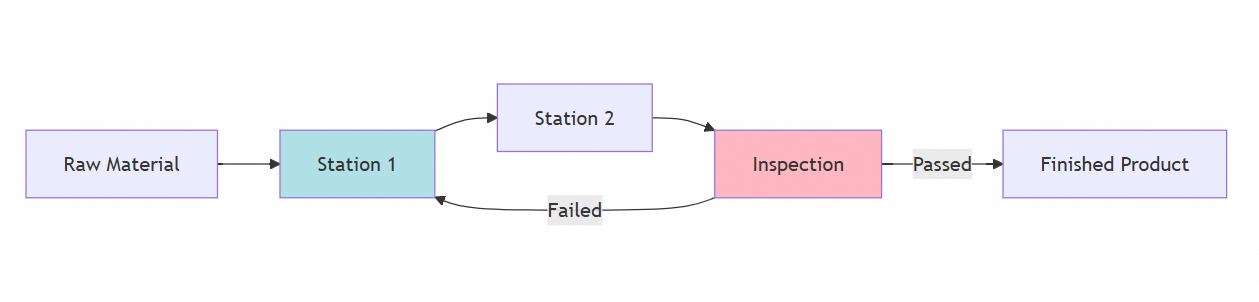

Let's understand this problem with a simple analogy. Imagine a factory production line. Ordinary assembly lines are linear: raw materials enter from one end and emerge as finished products from the other end. But some special products might need repeated processing at certain stages, forming a circular production line.

In this circular production line, if the system needs to create a backup (called a checkpoint in Flink), it faces a tricky problem: how should it handle the products that are currently inside the loop?

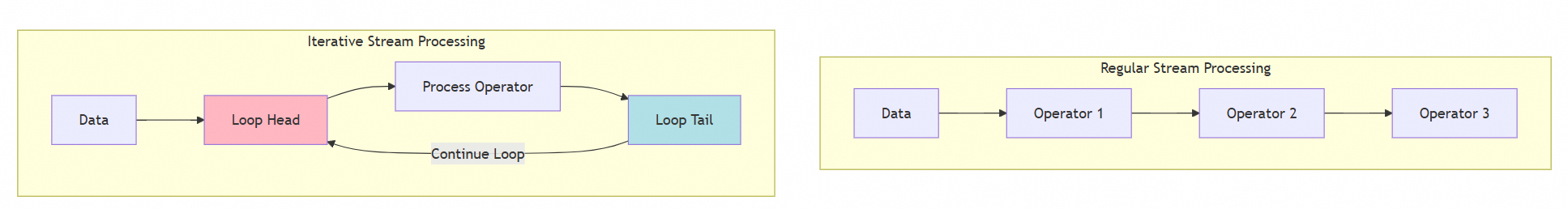

In regular stream processing, Flink uses a method called "asynchronous barrier snapshots" to create checkpoints. It's like placing a marker on the assembly line and only backing up after all products before the marker are processed. But this method faces challenges in iterative processes:

Waiting for all data in the loop to finish processing before creating a checkpoint could take a very long time, possibly forever. It's like waiting for a never-ending game to finish.
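To make the "never-ending game" concrete, here is a minimal toy sketch in plain Python. It is not Flink code: the function `steps_to_drain`, the `needs_another_pass` predicate, and the `max_steps` cap are all hypothetical names chosen for illustration. It counts how many processing steps it takes for a loop to empty out when some records are fed back for another pass.

```python
from collections import deque


def steps_to_drain(loop_records, needs_another_pass, max_steps=1000):
    """Count processing steps until the loop is empty.

    Returns None if the loop still holds records after max_steps,
    which stands in for "the checkpoint would wait indefinitely".
    """
    queue = deque(loop_records)
    steps = 0
    while queue:
        if steps == max_steps:
            return None  # gave up: records are still circulating
        record = queue.popleft()
        steps += 1
        if needs_another_pass(record):
            # Feed the updated record back into the loop.
            queue.append(record + 1)
    return steps


# A loop whose records eventually stop recirculating drains in finite time.
finite = steps_to_drain([1, 2, 3], lambda r: r < 5)

# A loop whose records always recirculate never drains.
endless = steps_to_drain([1, 2, 3], lambda r: True)
```

In the first call the loop empties after a bounded number of steps, so a checkpoint that waits for it would eventually complete. In the second call the cap is hit, which mirrors a streaming iteration that has no natural end.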

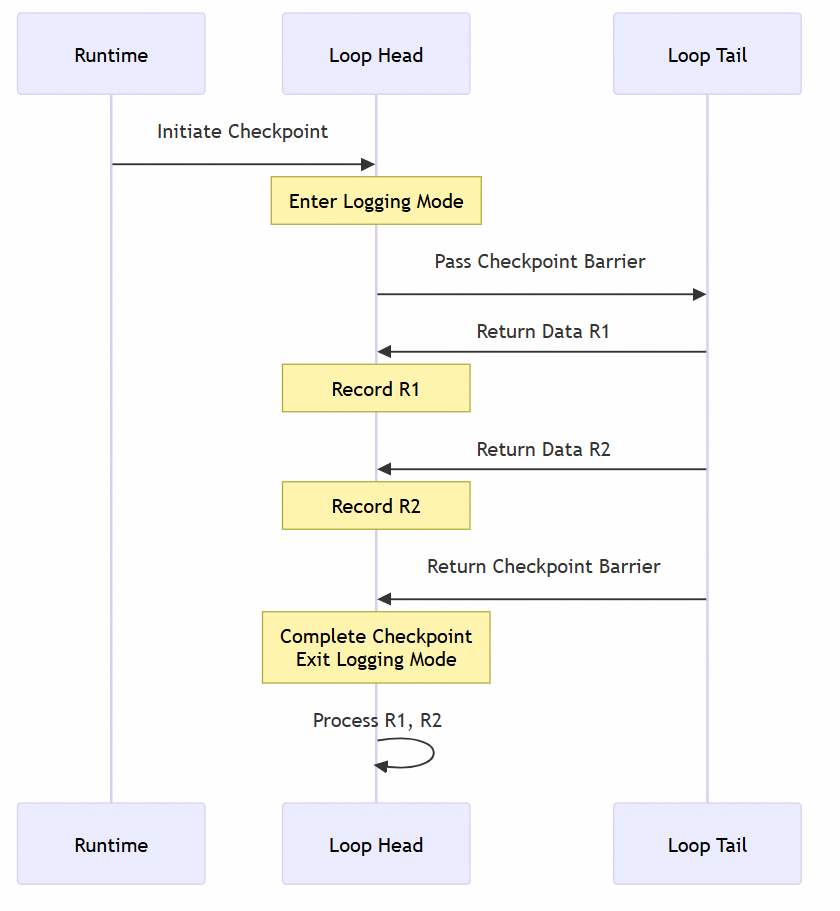

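FLIP-16 proposed a clever solution. The core idea is that, rather than waiting for all data in the iteration to finish processing, the system temporarily stores the data that is still circulating in the loop, so the checkpoint can complete without waiting for the loop to drain.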
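The idea of temporarily storing in-loop data can be pictured with a small toy model in plain Python. This is a sketch under stated assumptions, not Flink's API or the FLIP's actual implementation: the class `LoopHead`, the `BARRIER` marker, and the `restore` helper are hypothetical, and the sketch assumes that records are logged from the moment the checkpoint starts until the marker has travelled once around the loop and returned.

```python
BARRIER = object()  # hypothetical checkpoint marker travelling around the loop


class LoopHead:
    """Toy entry point of a loop that sums the records fed back to it."""

    def __init__(self):
        self.total = 0          # operator state: sum of records seen so far
        self.logging = False    # True while a checkpoint is in progress
        self.in_flight = []     # records logged during the checkpoint
        self.pending_state = None
        self.snapshot = None

    def start_checkpoint(self):
        # Capture the operator state immediately instead of draining the loop,
        # then start logging whatever is still circulating.
        self.pending_state = self.total
        self.in_flight = []
        self.logging = True

    def on_feedback(self, item):
        if item is BARRIER:
            # The marker came back: everything that was in the loop has been logged.
            self.snapshot = {
                "state": self.pending_state,
                "in_flight": list(self.in_flight),
            }
            self.logging = False
            return
        if self.logging:
            self.in_flight.append(item)
        self.total += item  # normal processing continues during the checkpoint


def restore(snapshot):
    """Rebuild a LoopHead from a snapshot by replaying the logged records."""
    head = LoopHead()
    head.total = snapshot["state"]
    for record in snapshot["in_flight"]:
        head.on_feedback(record)
    return head


head = LoopHead()
head.on_feedback(1)
head.on_feedback(2)
head.start_checkpoint()      # state captured here is 3
head.on_feedback(3)          # still in the loop: logged and processed
head.on_feedback(4)
head.on_feedback(BARRIER)    # checkpoint completes without draining the loop
head.on_feedback(5)          # arrives after the checkpoint, not logged

recovered = restore(head.snapshot)
```

After recovery, the restored state plus the replayed records equals the state the operator had when the marker returned, so nothing that was inside the loop at checkpoint time is lost or counted twice in this toy model.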

This process can be shown in the following sequence diagram:

Although this solution looked promising, it was ultimately abandoned for several reasons:

Although this FLIP wasn't adopted, its exploration process provides valuable insights:

It highlights that data consistency in iterative stream processing remains a challenging technical problem.

The exploration process demonstrates the complexity of distributed system design: an apparently elegant solution may face unexpected challenges in practical application.

The case also shows that system design must consider not only functional correctness but also implementation feasibility and operational efficiency.

For scenarios requiring iterations in stream processing, current recommendations are:

FLIP-16 attempted to solve a challenging problem in distributed stream processing: ensuring reliability in iterative data processing. Although its proposed solution was ultimately abandoned due to implementation difficulties and performance issues, the exploration provides a valuable reference for future research.
