This article was compiled from a speech by Wu Chong (Yunxie) (Apache Flink PMC & Committer) at the Apache Flink Meetup on September 24. The main contents include:

- The Motivation for Hive SQL Migration

- The Challenges of Hive SQL Migration

- The Practice of Hive SQL Migration

- The Demonstration of Hive SQL Migration

- Planning

Flink is the de facto standard for stream computing. Currently, Flink and Flink SQL are selected for real-time computing or stream computing domestically and abroad. Flink is also a well-known big data computing engine that integrates stream and batch processing.

However, Flink is facing challenges at the moment. For example, large-scale applications are mainly based on stream computing, but Flink batch computing is not widely used. In order to further promote the real integration of stream and batch, we need to promote the industry to implement Flink batch computing and embrace the existing offline ecosystem actively. Currently, the offline ecosystem of the industry is based on Hive. We have made many Hive-related integrations in the past versions, including Hive Catalog, Hive syntax compatibility, Hive UDF compatibility, and streaming writing to Hive. In Flink 1.16, we improved the compatibility of HiveSQL and supported the protocol compatibility of HiveServer2.

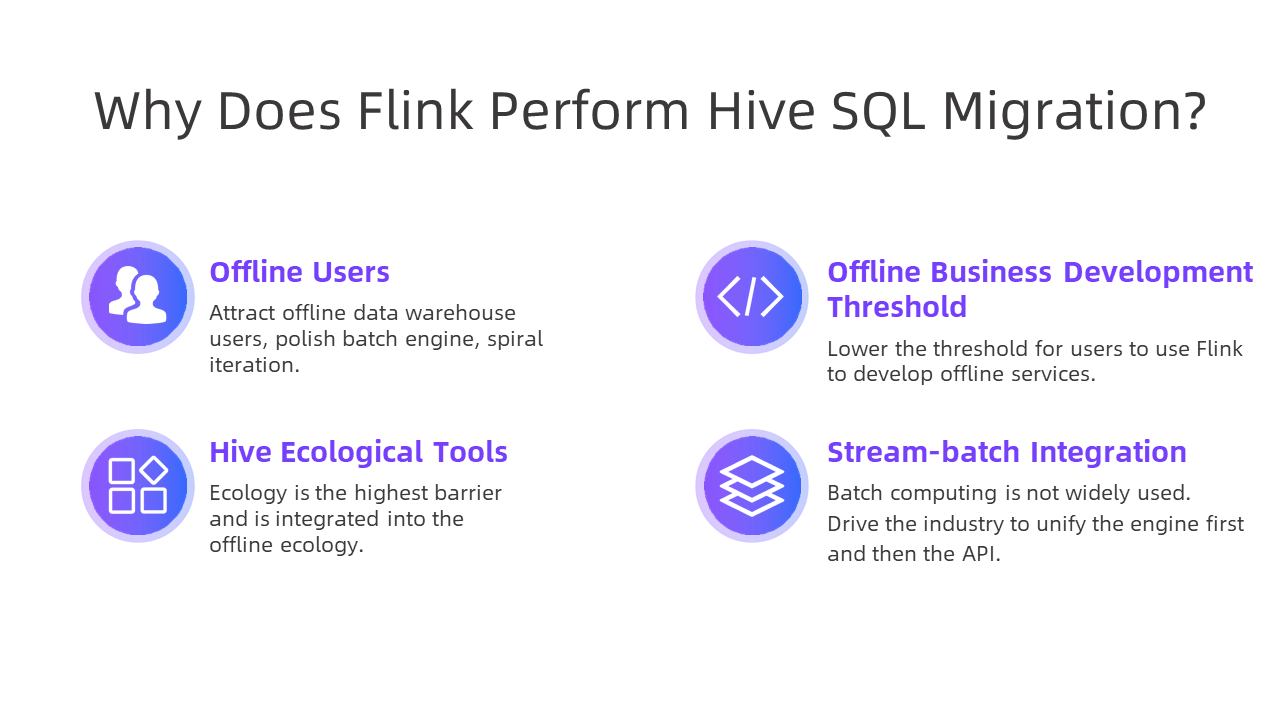

Why does Flink support Hive SQL migration? On the one hand, we hope to attract more Hive offline data warehouse users and use users to continuously polish the batch computing engine and align it to the mainstream batch computing engine. On the other hand, it is compatible with Hive SQL to lower the threshold for existing offline users to use Flink to develop offline services. In addition, ecology is the biggest threshold for open-source products. Flink already has a wealth of real-time ecosystem tools but still lacks an offline ecosystem. The Hive-compatible ecosystem can be quickly integrated into Hive offline ecosystem tools and platforms, reducing user access costs. Finally, this is an important part of realizing the integration of stream and batch. We hope to promote the industry to try unified stream computing and batch computing engines, unified stream computing, and batch computing SQL.

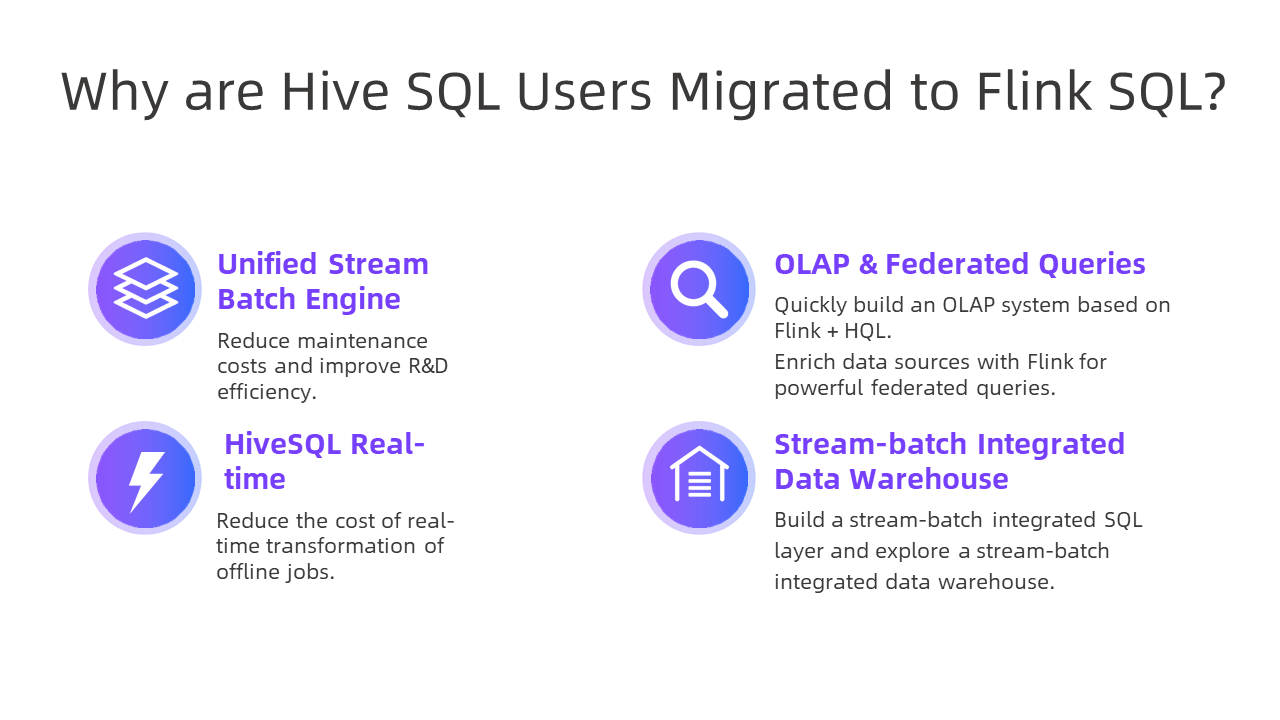

From the users' perspective, why should Hive SQL be migrated to Flink SQL?

From the platform side, the unified stream and batch computing engine only needs to maintain one set of Flink engines, which can reduce maintenance costs and improve team R&D efficiency. You can use Flink + Gateway + HiveSQL to build an OLAP system quickly. Another advantage of Flink is that it has a rich connector ecosystem and can use Flink's rich data sources to implement powerful federated queries. For example, you can perform ad-hoc queries in Hive data warehouses and federated queries between Hive table data and data sources (such as MySQL, HBase, Iceberg, and Hudi).

You can use Hive SQL to write stream computing jobs for offline data warehouse users, which significantly reduces the cost of real-time transformation. The HiveSQL syntax is still used, but it can be run in streaming mode. On this basis, you can explore the construction of the stream-batch integrated SQL layer and the stream-batch integrated data warehouse layer.

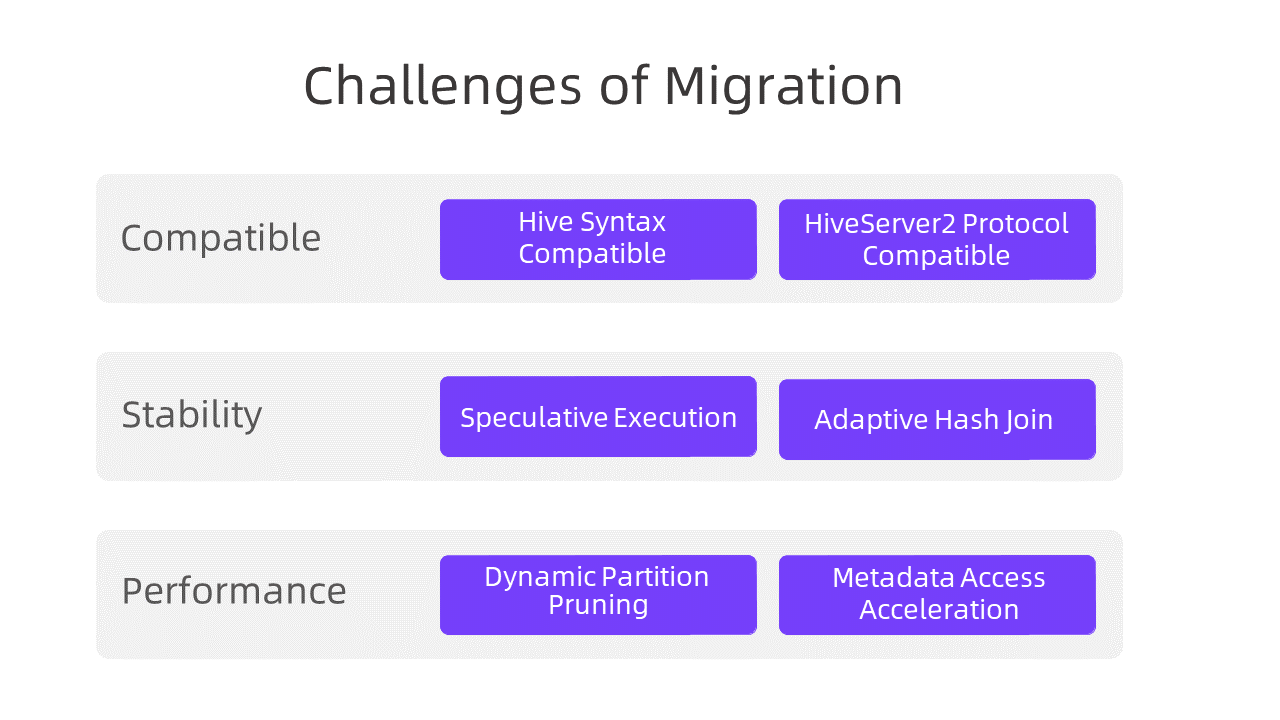

However, Flink's support for HiveSQL migration faces many challenges, mainly in the following three aspects:

Next, we will focus on the work related to Hive compatibility.

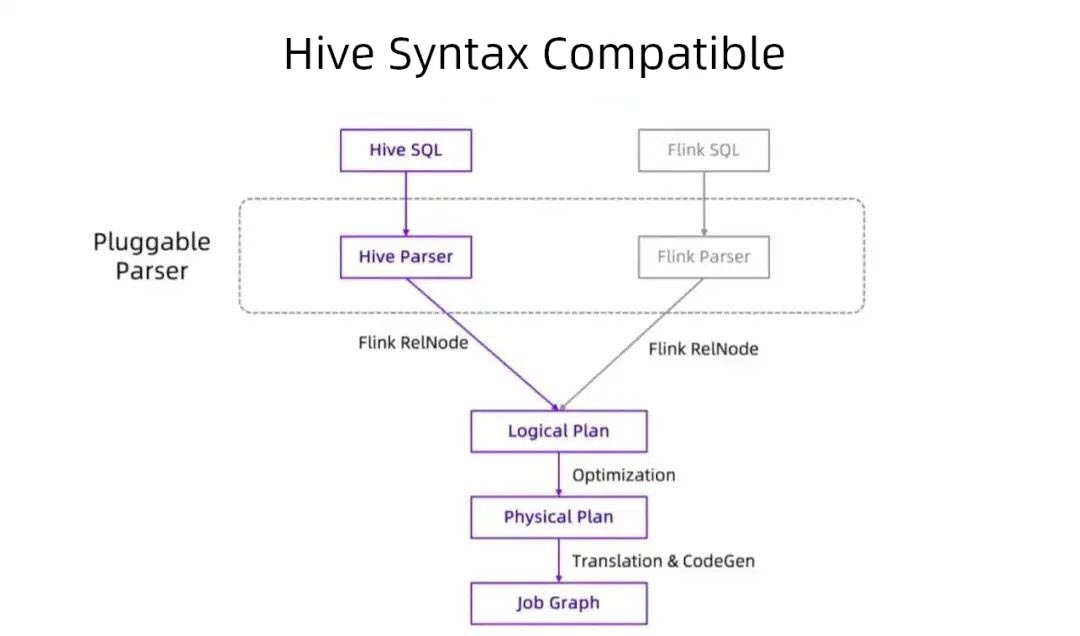

The compatibility of Hive syntax does not completely create a new set of SQL engines but reuses many core processes and codes of Flink SQL. We have abstracted the pluggable parser layer to support and extend different syntaxes. Flink SQL is converted into a Flink RelNode using Flink Parser and optimized to a Physical Plan through Logical Plan. Finally, it is converted into a Job Graph for submission and execution. In order to support Hive syntax compatibility, we have introduced the Hive Parser component to convert Hive SQL into Flink RelNode. Most of Hive's existing SQL parsing logic is reused in this process to ensure the compatibility of the syntax layer (all based on Calcite). Then, RelNode reuses the same process and code to convert it into LogicalPlan, Physical Plan, and JobGraph. Then, submit for execution.

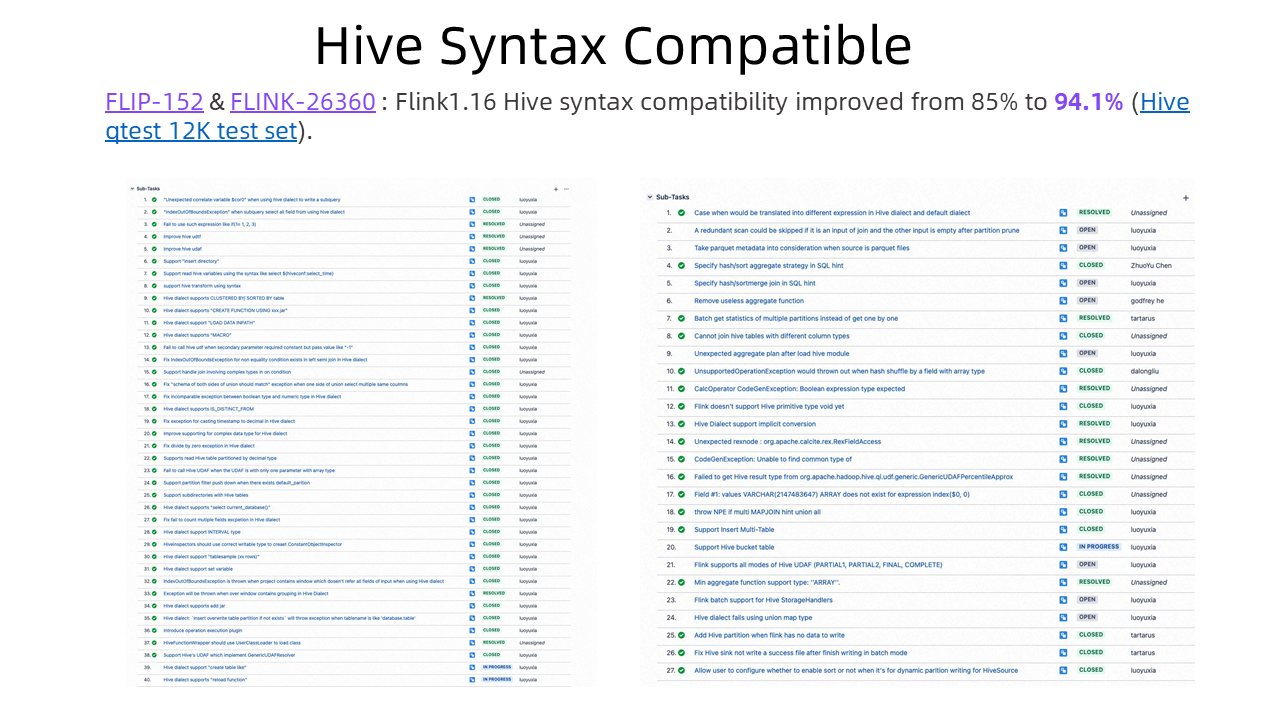

From an architectural point of view, Hive syntax compatibility is not complicated, but there are many details. The preceding figure shows some issues related to Flink Hive compatibility in Flink 1.16, including query compatibility, type system, semantics, behavior, DDL, DML, and auxiliary query commands. The cumulative number of issues completed has reached nearly 100.

Flink 1.16 improves Hive compatibility from 85% to 94.1%. Compatibility testing relies on the Hive qtest set, which contains more than 12,000 test cases and covers all current mainstream syntax functions of Hive. The part that is incompatible includes ACID functions (which are rarely used in the industry). If ACID functions are removed, the compatibility can be more than 97%.

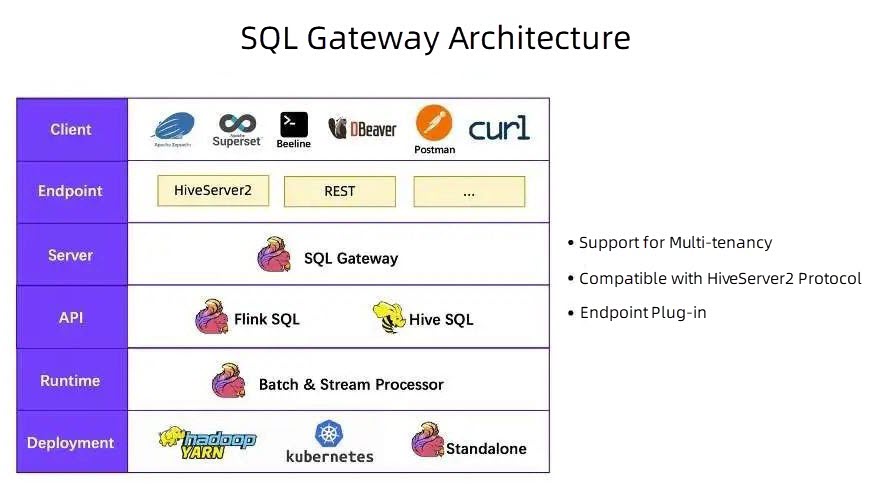

SQLGateway is a server-layer component of Flink SQL. It is a separate process aimed at the HiveServer2 component. In terms of the overall architecture of Flink, SQLGateway is in the middle.

Looking down, Flink SQL and Hive SQL of the user API are encapsulated. Both Flink SQL and Hive SQL are executed by Flink integrated stream-batch Runtime. It can be run in batch mode or stream mode. Flink resources can be deployed and run on YARN, Kubernetes, and Flink standalone clusters.

Looking up, SQLGateway provides pluggable protocol layer endpoints. Currently, HiveServer2 and REST are implemented. Users can connect many tools and components of the Hive ecosystem using HiveServer2 endpoints (such as Zeppelin, Superset, Beeline, and DBeaver) to the SQL Gateway to provide unified streaming and batch SQL services, which are compatible with Hive SQL. Through REST protocol, users can use Postman, curl command, or Python and Java programming to access, providing perfect and flexible stream computing services. Endpoint capabilities will continue to expand in the future. For example, higher-performance gRPC protocols or PG-compatible protocols can be provided.

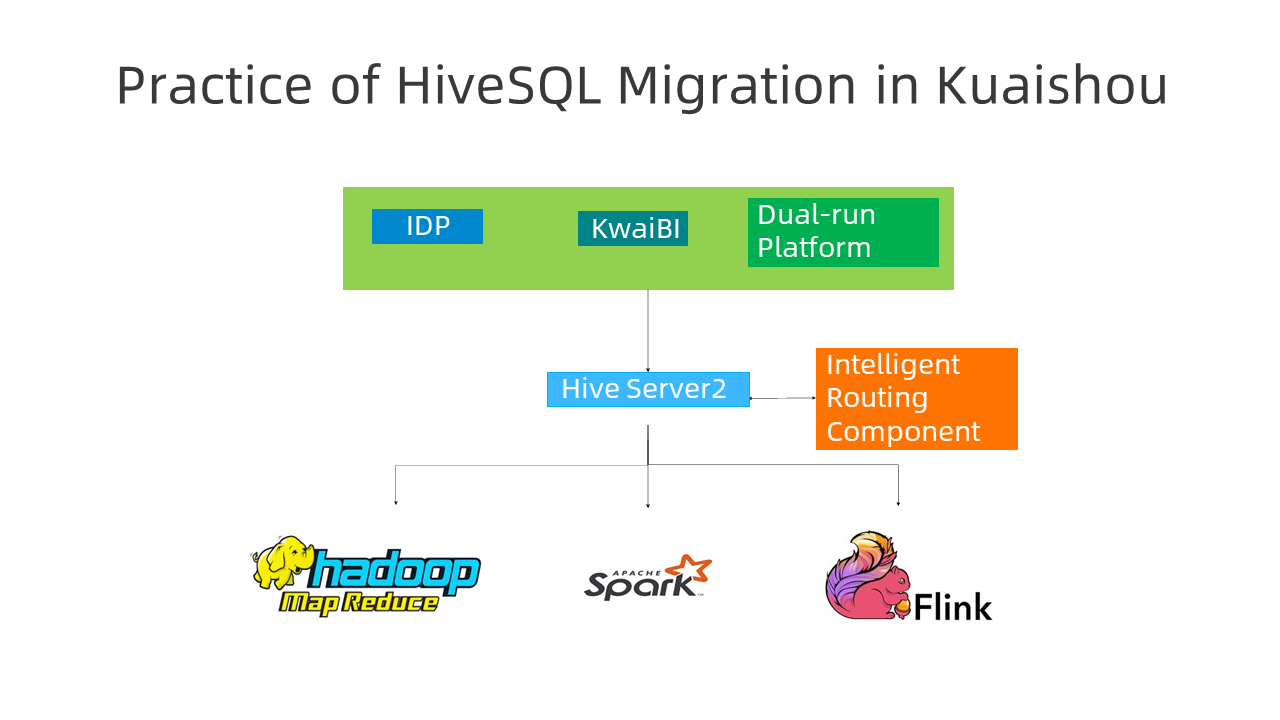

Kuaishou is working closely with the Flink community to promote the integration of stream and batch. Kuaishou has made initial progress in migrating Hive SQL jobs to Flink SQL jobs. Thousands of jobs have been migrated. The main migration strategy of Kuaishou is running on two platforms and existing services. The dual-run platform has intelligent routing components. You can identify jobs by specifying rules or patterns and delivering them to MapReduce, Spark, or Flink for running. The initial operation is cautious. You will use the whitelist mechanism to specify that certain jobs run in Flink first, observe their stability and performance, compare their result consistency, and then gradually use rules to increase the volume.

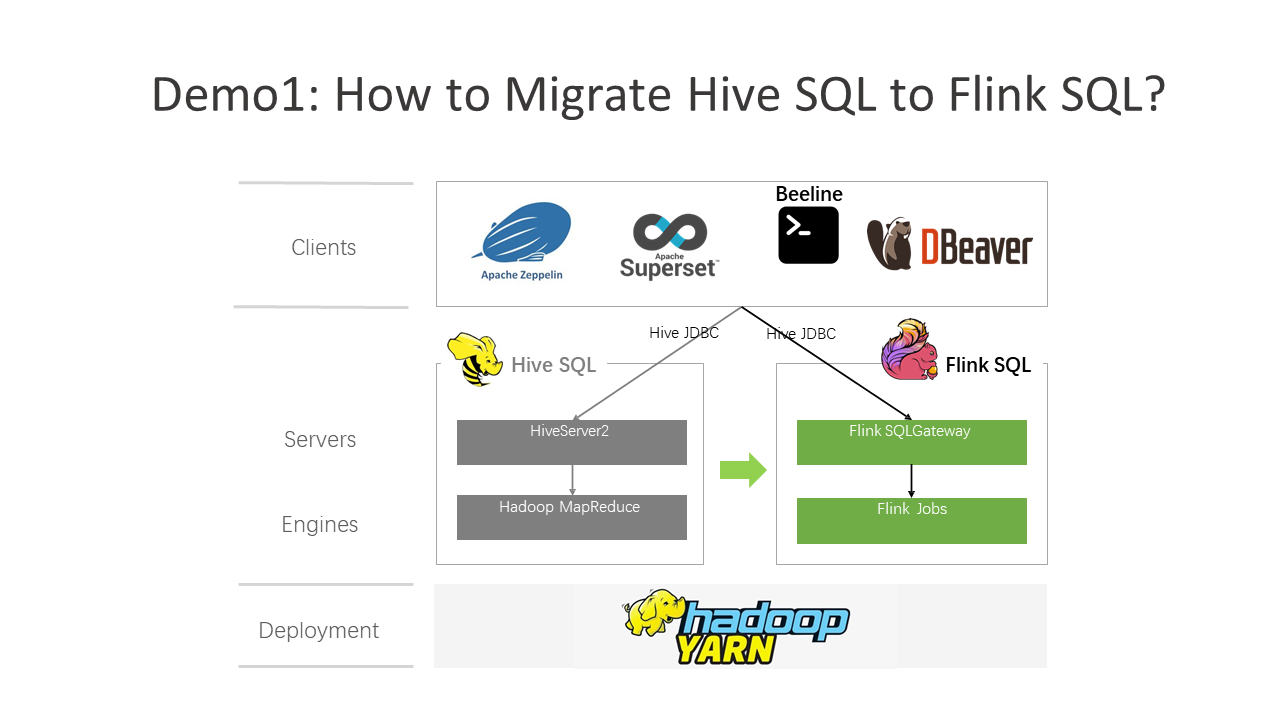

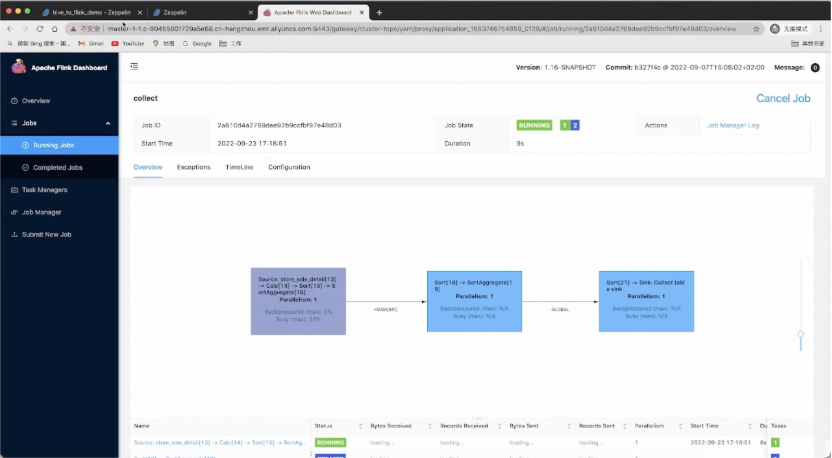

Next, Let's demonstrate how to migrate Hive SQL to Flink SQL. We have built a YARN cluster and Hive-related components, including HiveServer2 services. We use Zeppelin for data visualization and SQL query. We will demonstrate how to migrate Hive SQL to Flink SQL only by changing one row of addresses. The Zeppelin experience is the same, and SQL does not need to be modified.

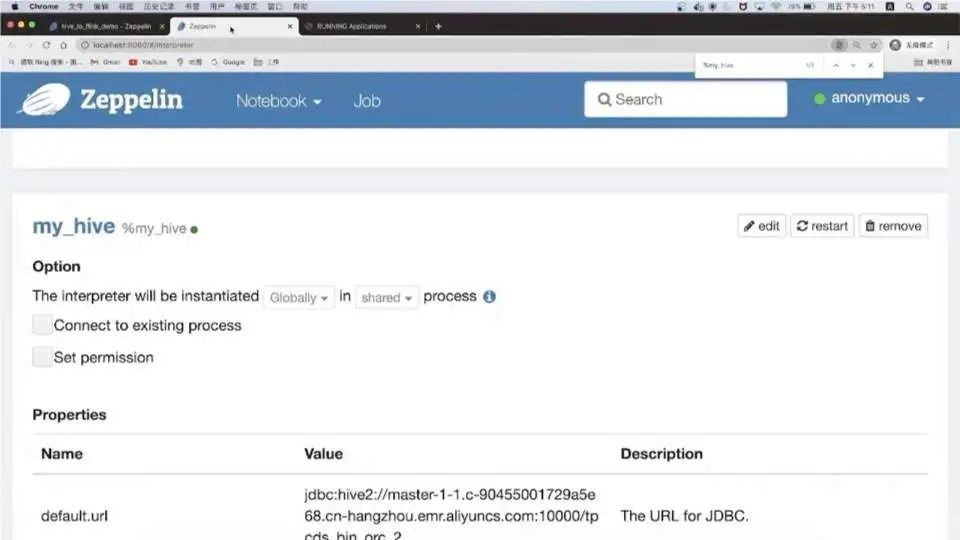

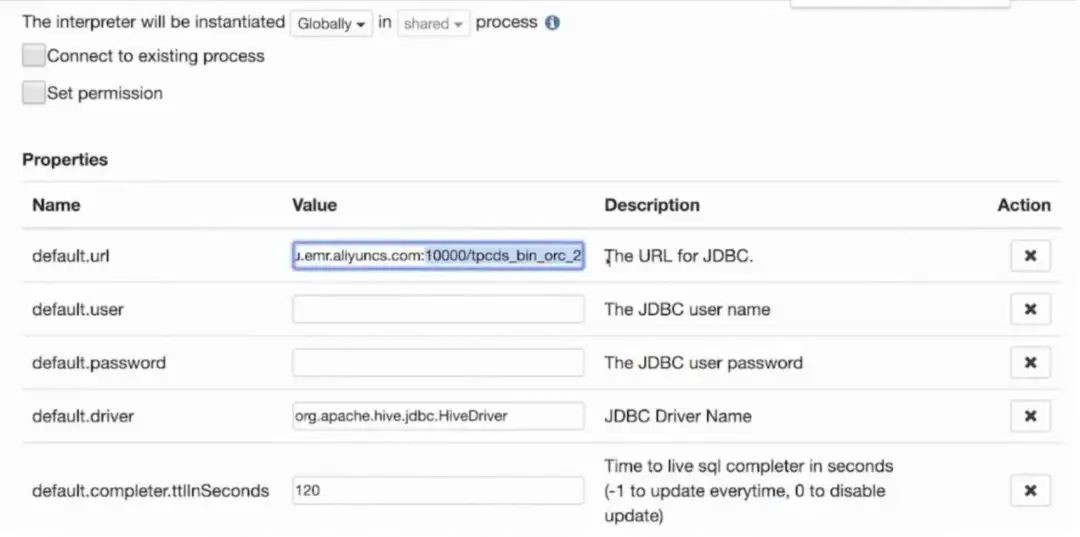

First, configure Hive Interpreter in Zeppelin and enter the JDBC address and port, username and password, and driver of HiveServer2.

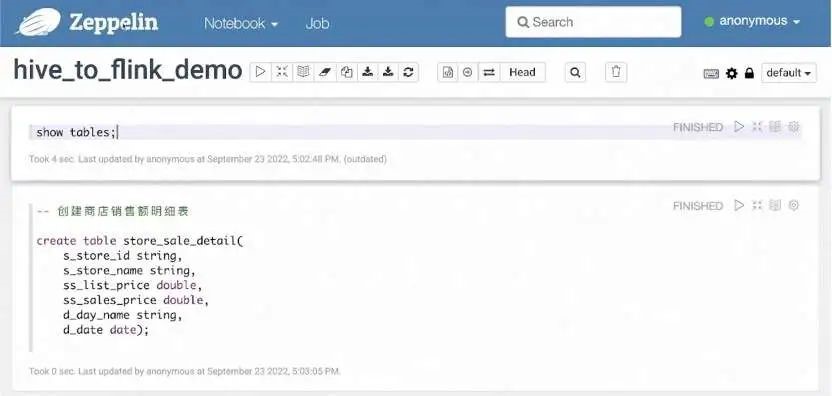

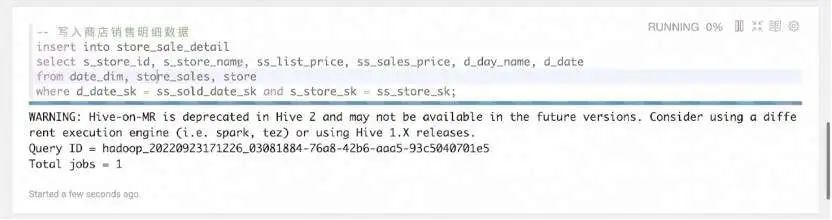

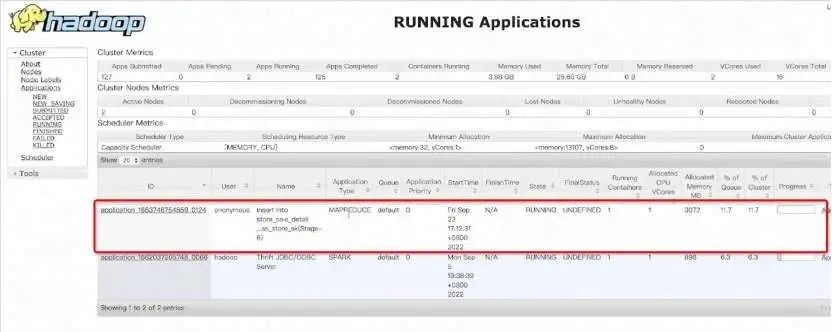

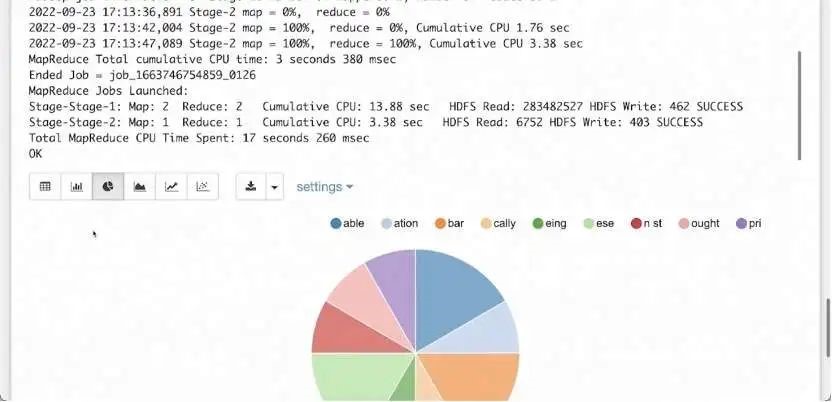

Using the current Hive Interpreter, we can run the Hive DDL command to create a widened store_sale_detail table. Use the Hive SQL syntax to associate three tables of store_sales, date_dim, and store, and write them into a wide table to store_sale_detail. After executing the INSERT INTO statement, you can view the running MapReduce tasks on the Hadoop platform.

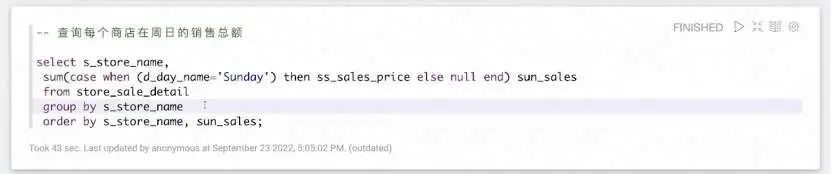

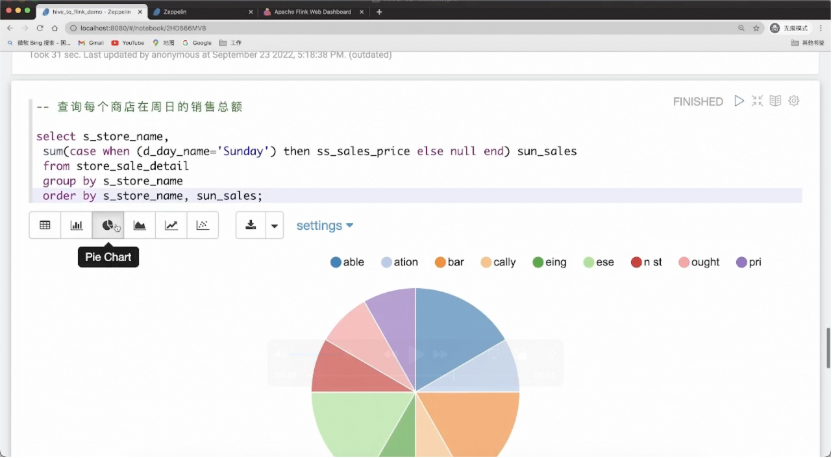

After the store_sale_detail wide table is produced, we can perform queries and analysis, such as checking the sales volume of each store on Sunday. After running, you can display the results in various forms (such as pie charts).

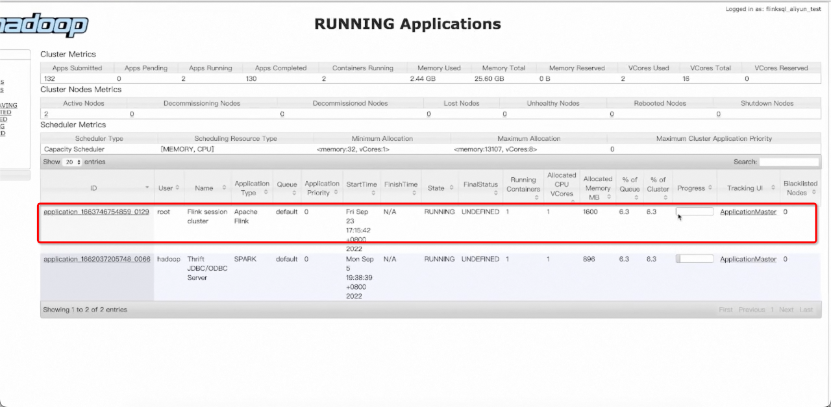

The preceding briefly demonstrates using Hive for data production and data analysis. The computing engine uses Hive native Hadoop MapReduce jobs, which run on YARN clusters. Next, we start migrating to Flink SQL. The jobs are still running on the YARN cluster.

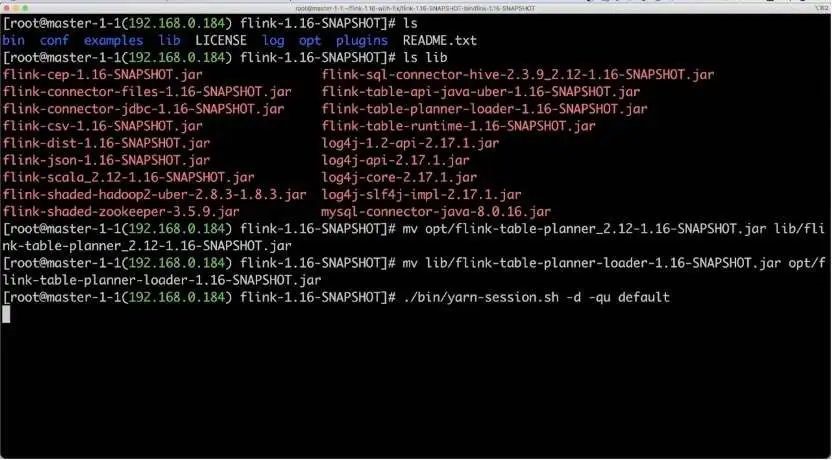

First, build a Flink SQL cluster and start SQLGateway. We have downloaded and decompressed Flink 1.16. The Hive connector, JDBC connector, and MySQL driver have been prepared in advance under the lib folder. You need to replace the flink-table-planner-loader with the flink-table-planner JAR package in the opt/directory and then start the YARN session cluster. After the session cluster is started, you can see the session application of Flink on YARN.

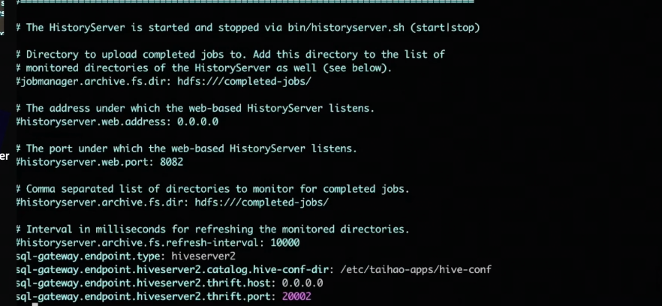

Before you start SQLGateway, you must modify the configurations to configure the HiveServer2 endpoints.

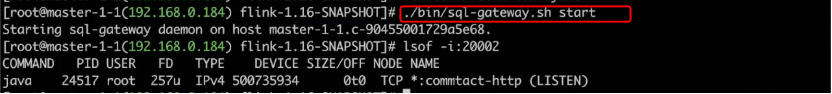

In this example, the endpoint type of SQLGateway is HiveServer2. Three additional configurations are required: HiveServer2 hive-conf-dir, thrift.host, and thrift.port. Note: The port number we started is 20002. Then, run the sql-gateway.sh start command to start the SQL Gateway service.

You can migrate after startup. Since HiveServer2 runs on the same machine, you only need to modify the port number. Change the port number 10000 to 20002 that you just started, which is the port of Flink SQLGateway. No other changes are required. Now, restart the interpreter, and the migration is complete!

Then, we can re-execute the Hive SQL statement in Zeppelin. We can see that the results are all consistent.

As shown in the preceding figure, it is the result of querying the total sales of each store on Sunday. The pie chart result is the same as the result of using the Hive engine query. The difference is that this query is run on the Flink engine.

After Hive SQL is migrated to Flink SQL, you can achieve better performance and obtain additional capabilities provided by Flink SQL, including richer federated query and stream-batch integrated capabilities.

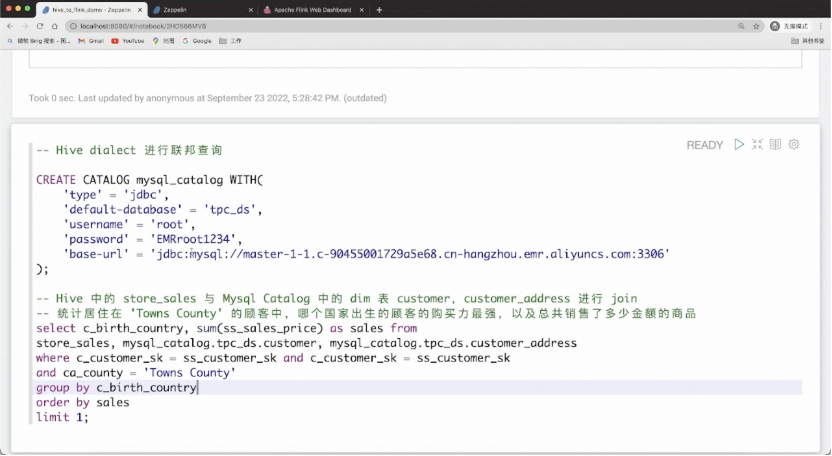

We can use Flink DDL to create a new catalog. For example, there is additional dimension information in the MySQL table, which is not in Hive. We hope to associate it with new data exploration. You only need to use the CREATE CATALOG statement of Flink to create a MySQL catalog to implement federated queries. At the same time, Flink pushes down the projections and filters that can be pushed down to MySQL for cropping.

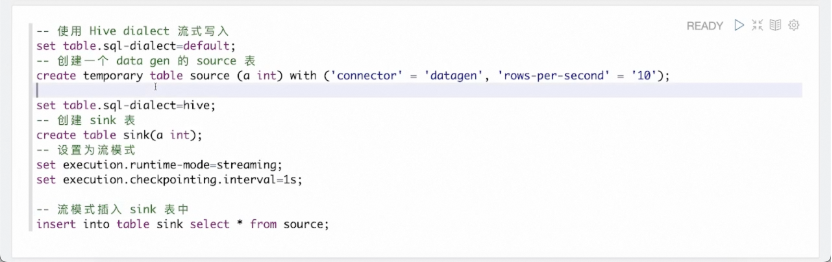

In addition, you can use Hive SQL to experience stream computing capabilities. Use the Flink syntax to create a datagen table that can continuously generate random data. Switch back to the Hive syntax to create a Hive result table sink. Change the running mode to streaming and execute the INSERT INTO statement to submit a streaming job. The job continuously writes data generated in datagen to Hive.

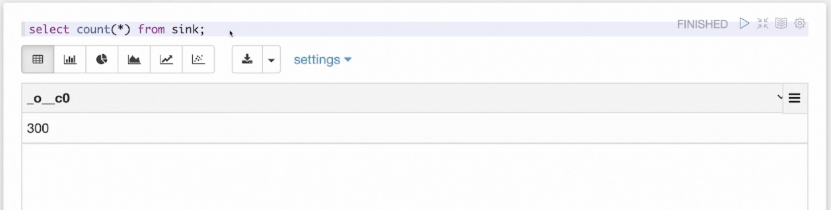

We can use the Hive syntax to query the written table to verify that the Hive result table has been written data by streaming jobs. As shown in the preceding figure, by continuously executing the count(*) statement, you can see that the table is writing data. Therefore, the query results are changing.

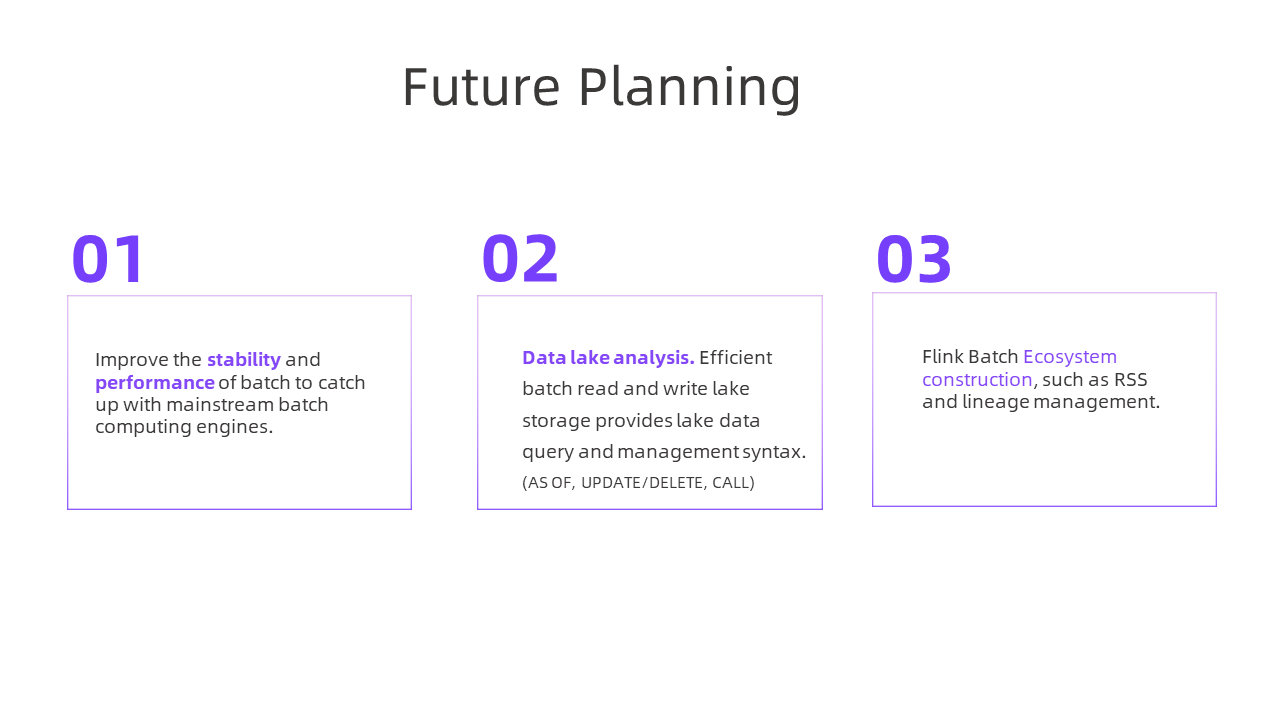

In the future, Flink will continue to evolve in the following three aspects.

Q: Hive writes are executed by Flink. If Hive has a large amount of data, are there errors, such as insufficient memory and OOM?

A: Currently, all operators have a memory management mechanism in batch mode. Data does not exist in Flink as Java objects but opens up a separate memory space in Java memory for use. If the memory is full, disk and spill are performed. The speed may decrease slightly, but memory OOM is not generated.

Q: Does Flink support Hive UDF functions? What about the migration cost?

A: It supports them. You can migrate data directly.

Q: Is there a risk in moving an existing offline data warehouse from Hive to Flink? What are the precautions for smooth migration?

A: Currently, smooth migration uses the dual-run platform. Some jobs are selected through the mechanism to be migrated first. The migrated jobs run on both platforms at the same time. It is necessary to verify whether the behaviors and results are consistent. Then, the jobs on the old platform are gradually offline and become single-run. The whole process needs to be gradual and usually takes six months to one year.

Q: In the demo, one SQL query uses the Hive on the MR engine. After the migration, does it use Flink SQLGateway or Hive in MR mode?

A: After the migration, the SQL request is Flink SQL Gateway since the configured port is the Flink SQL Gateway port. Gateway compiles Hive SQL into a Flink job and submits it to the YARN cluster for running.

Q: When Flink runs batch tasks, do we specify the number of TaskManagers? Are they automatically generated?

A: For standalone mode, including standalone mode running on Kubernetes, the number of TM is specified by the user. In other modes, Flink determines and pulls the number of TM, including yarn/kubernetes application mode, yarn session mode, yarn per-job mode, and native kubernetes session mode. The number of TMs pulled up is related to the number of slots requested by the job. The taskmanager.numberOfTaskSlots parameter determines the mapping between the number of slots and the number of TMs. The number of slots is related to the concurrency of the scheduled job nodes.

Q: When Flink is running on Kubernetes, if dynamic resource allocation is enabled, will shuffle data be stored on the POD disk?

A: You can store it on TM or RemoteShuffleService.

Q: Does it support with as syntax and the partition by syntax after offline jobs are migrated?

A: The WITH AS syntax is still supported. The PARTITIONED BY syntax in CREATE TABLE is also supported.

You are welcome to experience the Hive SQL migration capabilities.

Streaming Data Warehouse Storage: Requirements and Architecture

206 posts | 56 followers

FollowApache Flink Community China - April 17, 2023

Apache Flink Community - July 18, 2024

Apache Flink Community China - November 8, 2023

Apache Flink Community - June 28, 2024

Apache Flink Community China - July 28, 2020

Apache Flink Community - May 27, 2024

206 posts | 56 followers

Follow Realtime Compute for Apache Flink

Realtime Compute for Apache Flink

Realtime Compute for Apache Flink offers a highly integrated platform for real-time data processing, which optimizes the computing of Apache Flink.

Learn More ApsaraDB RDS for SQL Server

ApsaraDB RDS for SQL Server

An on-demand database hosting service for SQL Server with automated monitoring, backup and disaster recovery capabilities

Learn More Cloud Migration Solution

Cloud Migration Solution

Secure and easy solutions for moving you workloads to the cloud

Learn More Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn MoreMore Posts by Apache Flink Community