Modern data environments require architectures that seamlessly blend the flexibility of data lakes with the performance characteristics of traditional data warehouses. As enterprises increasingly adopt real-time analytics to drive business decisions, the combination of Apache Flink as a stream processing engine with Apache Paimon as a lake storage format has emerged as a compelling solution for building powerful real-time lakehouse platforms.

At the recent Apache CommunityOverCode Asia 2025, Xuannan Su, Alibaba Cloud Technical Expert and Apache Flink Committer, shared insights into the continuous evolution of Flink real-time lakehouse solutions built on Paimon. This technical deep dive explores key optimizations and architectural improvements developed to address real-world challenges in implementing large-scale streaming analytics platforms.

As the volume of structured and semi-structured data grows, traditional data processing approaches often struggle with performance, cost-efficiency, and operational complexity. The discussed enhancements represent production-tested, practical solutions for organizations seeking to modernize their data infrastructure, providing a clear implementation path for scalable, real-time data pipelines.

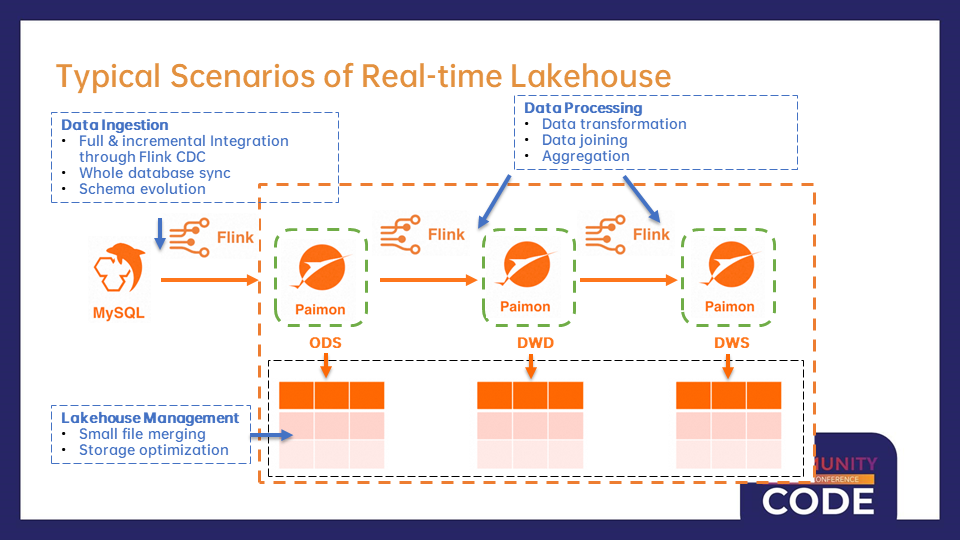

Before diving into technical optimizations, it’s essential to understand the typical architecture patterns formed around the integration of Flink and Paimon. The real-time lakehouse approach represents a fundamental shift from traditional batch-oriented data warehouse models, embracing a paradigm where data is processed continuously as it arrives.

Modern real-time lakehouses built on Flink and Paimon typically consist of interconnected processing layers, each serving a distinct purpose while data flows seamlessly across the system. At the foundational layer, Flink CDC (Change Data Capture) provides unified full and incremental data synchronization.

Flink CDC has proven particularly valuable in bridging operational databases and analytical systems. Unlike complex ETL pipelines that run on fixed schedules, Flink CDC captures changes from source systems such as MySQL in real time and streams them directly into Paimon’s ODS (Operational Data Store) layer. This approach reduces latency and simplifies the architecture by eliminating intermediate staging areas and complex coordination mechanisms.
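To make the full-plus-incremental model concrete, the following is a minimal Python sketch of a primary-key ODS table being populated from a snapshot and then kept current from a change stream. The event tuple format and the `apply_changelog` and `sync` helpers are hypothetical; only the row-kind notation (`+I`, `-U`, `+U`, `-D`) mirrors Flink’s changelog conventions. It also assumes the change stream starts exactly where the snapshot ends, a hand-off that Flink CDC coordinates internally.

```python
from typing import Dict, Iterable, Tuple

Row = Dict[str, object]
# Hypothetical change event: (row_kind, row). Row kinds follow Flink's notation:
# +I insert, -U update-before, +U update-after, -D delete.
Event = Tuple[str, Row]


def apply_changelog(table: Dict[object, Row], events: Iterable[Event], pk: str) -> None:
    """Apply change events, in order, to an in-memory primary-key table."""
    for kind, row in events:
        key = row[pk]
        if kind in ("+I", "+U"):
            table[key] = dict(row)  # upsert the latest image of the row
        elif kind == "-D":
            table.pop(key, None)  # delete the row if present
        elif kind == "-U":
            continue  # the following +U carries the new image
        else:
            raise ValueError(f"unknown row kind: {kind}")


def sync(snapshot: Iterable[Row], changes: Iterable[Event], pk: str) -> Dict[object, Row]:
    """Full phase (snapshot rows treated as inserts), then the incremental phase."""
    ods: Dict[object, Row] = {}
    apply_changelog(ods, (("+I", r) for r in snapshot), pk)
    apply_changelog(ods, changes, pk)
    return ods
```

Because every event is keyed by the primary key, replaying an update or delete simply overwrites or removes the current row, which is what lets a primary-key table absorb the change stream directly.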

These capabilities extend beyond simple data replication. Modern implementations support whole-database synchronization, in which entire database schemas are migrated to lakehouse formats with automatic schema evolution. When a source schema changes, for example when a column is added or a column’s data type is changed, the downstream Paimon tables adapt without manual intervention or pipeline reconstruction.
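Automatic schema evolution boils down to reconciling the table schema with each new source schema. The sketch below, assuming a simplified rule set in which columns may be added and integer or floating-point types may only be widened, shows that reconciliation. The widening chains and function names are hypothetical and do not reproduce Paimon’s exact list of supported type changes.

```python
from typing import Dict, List

# Hypothetical widening rules: a column may move to a type later in its chain.
WIDENING_CHAINS: List[List[str]] = [
    ["TINYINT", "SMALLINT", "INT", "BIGINT"],
    ["FLOAT", "DOUBLE"],
]


def can_widen(old: str, new: str) -> bool:
    """True if `new` is the same type as `old` or a wider one in the same chain."""
    if old == new:
        return True
    for chain in WIDENING_CHAINS:
        if old in chain and new in chain:
            return chain.index(new) > chain.index(old)
    return False


def evolve_schema(table_schema: Dict[str, str], source_schema: Dict[str, str]) -> Dict[str, str]:
    """Return the table schema after absorbing changes from the source schema.

    New columns are added; type changes are applied only when they widen.
    Columns missing from the source are kept (not dropped) in this sketch.
    """
    evolved = dict(table_schema)
    for column, new_type in source_schema.items():
        old_type = evolved.get(column)
        if old_type is None:
            evolved[column] = new_type  # add a new column
        elif can_widen(old_type, new_type):
            evolved[column] = new_type  # keep or widen the type in place
        else:
            raise TypeError(f"unsupported change for {column}: {old_type} -> {new_type}")
    return evolved
```

Narrowing is rejected because values already stored with the wider type might not fit the narrower one.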

Once data enters the lakehouse via ingestion layers, it undergoes a series of processing stages to refine and enrich information. The Data Warehouse Detail (DWD) layer represents the first major transformation phase, where raw operational data is cleaned, normalized, and enriched.

These transformations often involve complex data joins to create “wide tables” by combining information from multiple source systems. For example, an e-commerce organization might merge customer profiles with transaction history, product catalogs, and marketing campaign data to build a comprehensive view of customer behavior. The real-time nature of this processing ensures these enriched views remain up-to-date as source data evolves, providing fresh insights to analysts and applications without the delays inherent in batch processing.

Processing progresses to the Data Warehouse Summary (DWS) layer, where aggregated computations generate business metrics and key performance indicators (KPIs). Unlike traditional data warehouses that calculate these aggregates daily or hourly, real-time lakehouse approaches enable continuous computation of business metrics as events occur. This capability is transformative for organizations requiring real-time monitoring of performance, rapid response to operational issues, or automated actions triggered by analytical insights.
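Continuous computation means each change arriving from the DWD layer updates the affected metric immediately, including retractions when an upstream row is updated or deleted. A minimal sketch for a hypothetical per-region revenue KPI follows; in a real Flink job this state would live in managed keyed state rather than Python dictionaries, and the class and event shape are assumptions.

```python
from collections import defaultdict
from typing import Dict, Tuple


class RevenueByRegion:
    """Incrementally maintained SUM(amount) and COUNT(*) per region (hypothetical KPI)."""

    def __init__(self) -> None:
        self.total: Dict[str, int] = defaultdict(int)
        self.orders: Dict[str, int] = defaultdict(int)

    def on_event(self, kind: str, region: str, amount: int) -> None:
        # +I / +U add a contribution; -U / -D retract a previously counted one.
        if kind in ("+I", "+U"):
            sign = 1
        elif kind in ("-U", "-D"):
            sign = -1
        else:
            raise ValueError(f"unknown row kind: {kind}")
        self.total[region] += sign * amount
        self.orders[region] += sign

    def snapshot(self) -> Dict[str, Tuple[int, int]]:
        # Regions whose orders were all retracted drop out of the result.
        return {r: (self.total[r], self.orders[r]) for r in self.total if self.orders[r] > 0}
```

Each event touches only one region’s counters, so the cost of keeping the KPI fresh is proportional to the change volume rather than to the size of the history.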

Data management within a lakehouse introduces unique challenges distinct from traditional data warehouse management. Paimon addresses these challenges with a comprehensive suite of lakehouse management tools and optimization techniques that operate transparently to maintain system performance and efficiency.

Small file management is one of the most critical operational challenges in any lake-based storage system. Because streaming data arrives continuously, it naturally produces numerous small files, which degrade read performance and increase metadata overhead. Paimon addresses this with automatic compaction, which merges small files according to configurable policies so that storage stays optimized without manual intervention.
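The following sketch illustrates one simple size-based merge policy: wait until enough small files accumulate, then pack them into merge tasks no larger than a target size. The thresholds and the `plan_compaction` function are assumptions for illustration; Paimon’s actual compaction strategy and configuration options are more elaborate.

```python
from typing import List


def plan_compaction(file_sizes_mb: List[int], small_threshold_mb: int = 32,
                    target_size_mb: int = 128, trigger_count: int = 5) -> List[List[int]]:
    """Group small files into merge tasks of at most target_size_mb each.

    Returns an empty plan until at least trigger_count small files exist,
    so the cost of compaction is amortized over several commits.
    """
    small = sorted(s for s in file_sizes_mb if s < small_threshold_mb)
    if len(small) < trigger_count:
        return []
    plan: List[List[int]] = []
    current: List[int] = []
    for size in small:
        if current and sum(current) + size > target_size_mb:
            plan.append(current)  # this task is full; start a new one
            current = []
        current.append(size)
    if len(current) > 1:  # merging a single leftover file would be pointless
        plan.append(current)
    return plan
```

Large files are left alone, and a lone leftover small file simply waits for the next round.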

Together, these management capabilities maximize query performance while minimizing storage costs, a critical consideration for organizations handling large volumes of historical data and real-time streams.

The maturation of the Flink and Paimon ecosystem has driven increasingly sophisticated optimizations that address specific performance bottlenecks and operational challenges in production deployments. Two particularly significant advancements are enhanced handling of semi-structured data and optimized Lookup Join operations.

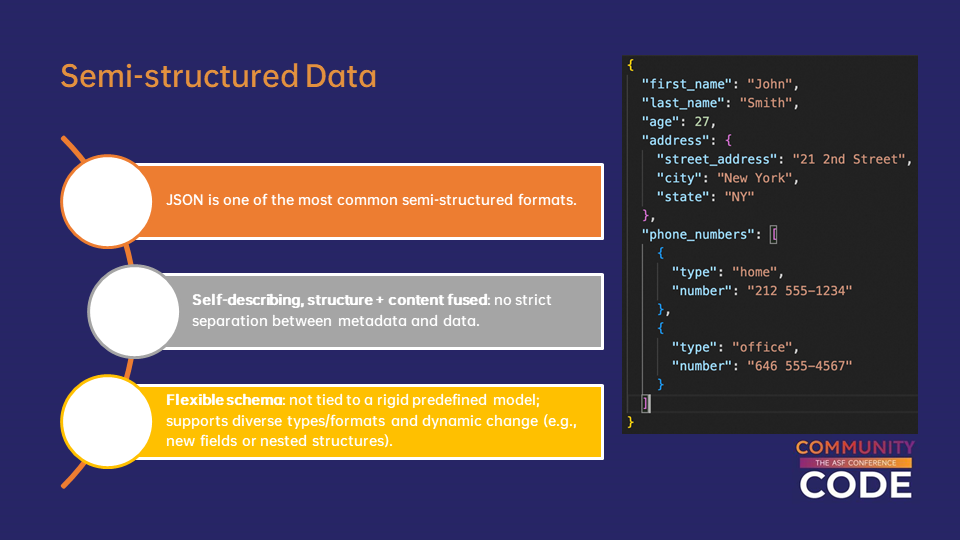

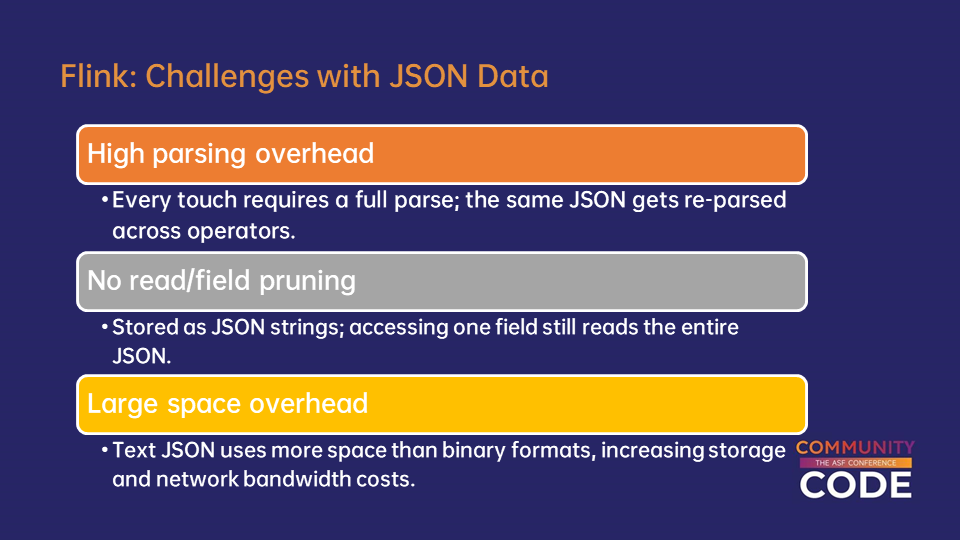

Modern data environments are characterized by the proliferation of semi-structured data formats, with JSON being the most prevalent. The rise of web applications, mobile devices, IoT sensors, and API-driven integrations has made JSON ubiquitous in enterprise data pipelines. However, its widespread use introduces notable performance challenges when processed with traditional stream processing approaches.

The core issue lies in JSON’s self-descriptive nature. Unlike structured data, where schema information is decoupled from the data itself, JSON embeds type and structure information directly within the data payload. While this flexibility enables dynamic schema evolution, it creates substantial computational overhead when processing large volumes of JSON data in streaming environments.

Flink’s traditional JSON handling treats semi-structured data as plain string values, so every field access requires a full parse. This design is simple to implement but performs poorly: even accessing the first field in a JSON object requires parsing the entire document, and because data flows between operators as strings, every downstream operator must repeat the parsing.

Storage faces similar issues. JSON’s text-based format, while human-readable and widely supported, consumes significantly more space than equivalent binary representations. The larger footprint translates directly into higher storage costs and more network bandwidth during shuffle operations, when data is transferred between operators in the streaming pipeline.

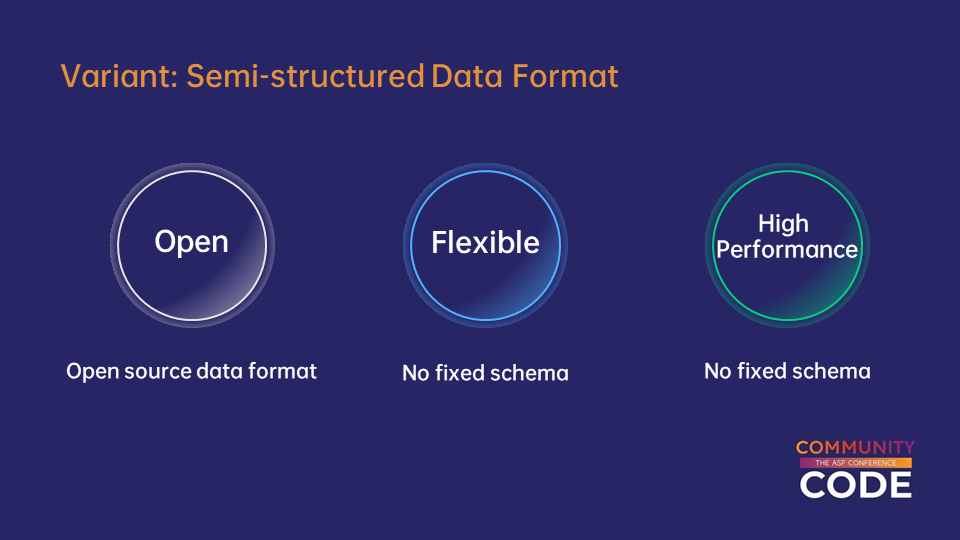

The introduction of the Variant data type marks a fundamental shift in Flink’s approach to semi-structured data processing. Instead of treating JSON as opaque text, Variant provides a native binary representation that retains the flexibility of semi-structured data while delivering performance closer to structured data processing.

Inspired by similar efforts in the broader data processing ecosystem (e.g., the Variant encoding proposed for Parquet), the Variant type aims for compatibility with other processing engines through open standards.

Variant’s binary encoding improves performance through several mechanisms. Field names are encoded once in a metadata section, drastically reducing storage overhead. Field access uses this metadata, together with per-object offsets, to navigate directly to a specific field without parsing unrelated parts of the structure, which significantly speeds up queries that read only a few fields.
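To show why metadata plus offsets avoids full parsing, here is a deliberately simplified binary layout for flat objects holding integers and strings. It is inspired by, but not identical to, the Variant encoding; the layout, type tags, and function names are all assumptions.

```python
import bisect
import struct
from typing import Dict, List, Optional, Tuple, Union

Scalar = Union[int, str]

# Simplified layout, an assumption for illustration (NOT the real Variant spec):
#   metadata: u16 count, then per field name (sorted): u16 length + UTF-8 bytes
#   value:    u16 n, then n entries of (u16 field_id, u32 offset), then data
#   data:     per field, 1-byte tag (0 = int64, 1 = string) + payload
ENTRY = struct.Struct("<HI")  # 6 bytes per header entry


def encode(record: Dict[str, Scalar]) -> Tuple[bytes, bytes]:
    names = sorted(record)
    meta = struct.pack("<H", len(names))
    for name in names:
        raw = name.encode()
        meta += struct.pack("<H", len(raw)) + raw
    header, data = struct.pack("<H", len(names)), b""
    for field_id, name in enumerate(names):
        header += ENTRY.pack(field_id, len(data))  # offset into the data region
        v = record[name]
        if isinstance(v, int):
            data += struct.pack("<Bq", 0, v)
        else:
            raw = v.encode()
            data += struct.pack("<BI", 1, len(raw)) + raw
    return meta, header + data


def decode_names(meta: bytes) -> List[str]:
    (count,) = struct.unpack_from("<H", meta, 0)
    pos, names = 2, []
    for _ in range(count):
        (n,) = struct.unpack_from("<H", meta, pos)
        names.append(meta[pos + 2:pos + 2 + n].decode())
        pos += 2 + n
    return names


def get_field(meta: bytes, value: bytes, name: str) -> Optional[Scalar]:
    """Read one field by jumping to its offset; other payloads are skipped."""
    names = decode_names(meta)
    field_id = bisect.bisect_left(names, name)  # names are stored sorted
    if field_id == len(names) or names[field_id] != name:
        return None
    (n,) = struct.unpack_from("<H", value, 0)
    # Every field is present in this sketch, so entry index == field_id.
    _, offset = ENTRY.unpack_from(value, 2 + field_id * ENTRY.size)
    pos = 2 + n * ENTRY.size + offset
    if value[pos] == 0:
        return struct.unpack_from("<q", value, pos + 1)[0]
    (length,) = struct.unpack_from("<I", value, pos + 1)
    return value[pos + 5:pos + 5 + length].decode()
```

`decode_names` still walks the whole name dictionary; a production encoding would typically keep an offset table for the names as well, so a lookup can binary-search without scanning them.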

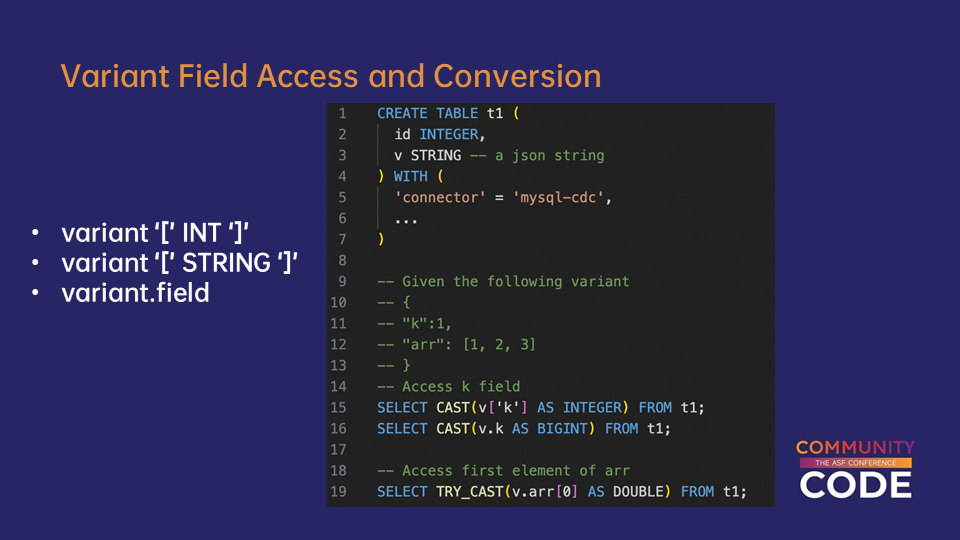

Beyond performance gains, Variant introduces significant improvements to the developer experience when handling semi-structured data. Traditional methods require developers to use complex SQL functions for field access, resulting in verbose and error-prone queries. Variant enables a more intuitive syntax model aligned with expectations from other programming environments.

Direct field access using familiar bracket notation and dot syntax simplifies query development and improves code maintainability. Array element access follows a similar pattern, letting developers navigate nested structures naturally. Type conversion lets Variant fields be cast to specific data types as needed, integrating cleanly with downstream strongly typed processing.
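The access semantics can be sketched in Python over already decoded values: a path mixes dot steps, quoted bracket keys, and array indexes, a missing step yields `None` (standing in for SQL NULL), and casts fail softly. The path grammar and the `access` and `try_cast` helpers are hypothetical and are not Flink’s SQL grammar.

```python
import re
from typing import Any, Callable, Optional, TypeVar

T = TypeVar("T")

# Hypothetical path grammar: .field | ['field'] | [index]
_STEP = re.compile(r"\.?([A-Za-z_]\w*)|\[(\d+)\]|\['([^']*)'\]")


def access(value: Any, path: str) -> Any:
    """Resolve e.g. "items[0].sku" or "user['tags'][1]" against nested
    dicts/lists; returns None (the analogue of SQL NULL) if any step is missing."""
    pos = 0
    while pos < len(path):
        m = _STEP.match(path, pos)
        if m is None:
            raise ValueError(f"invalid path at position {pos}: {path!r}")
        key = m.group(1) if m.group(1) is not None else m.group(3)
        if key is not None:
            value = value.get(key) if isinstance(value, dict) else None
        else:
            i = int(m.group(2))
            value = value[i] if isinstance(value, list) and i < len(value) else None
        if value is None:
            return None
        pos = m.end()
    return value


def try_cast(value: Any, to: Callable[[Any], T]) -> Optional[T]:
    """Cast to a concrete type, yielding None instead of failing."""
    if value is None:
        return None
    try:
        return to(value)
    except (TypeError, ValueError):
        return None
```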

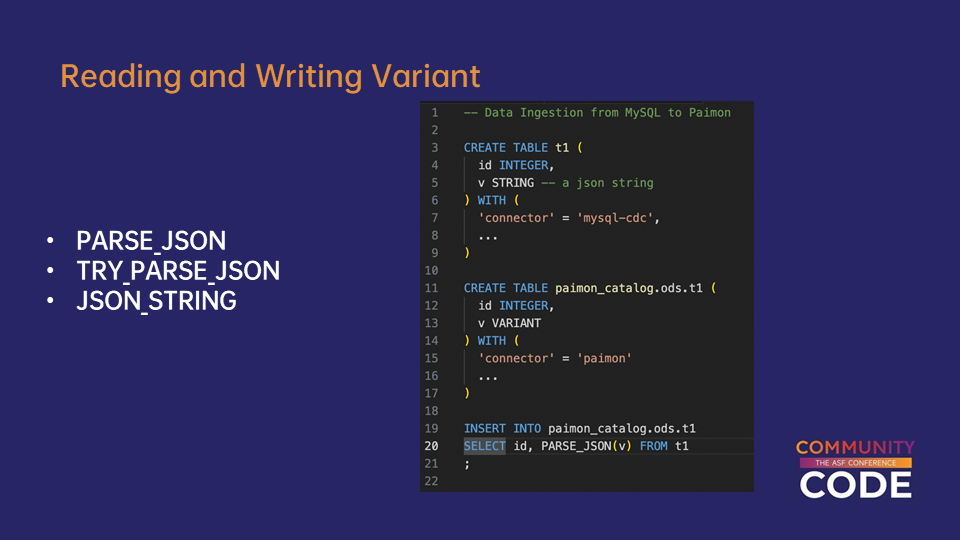

Conversion functions between JSON strings and Variant values provide migration paths for existing systems and enable gradual adoption of the new format. PARSE_JSON and TRY_PARSE_JSON convert text-based JSON into binary Variant values; the latter returns NULL instead of failing on malformed input. JSON_STRING converts a Variant back to text when interfacing with systems that do not yet support Variant.
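The difference between the two parse functions is essentially strict versus lenient error handling. The Python analogues below use the standard `json` module to illustrate those semantics only; they are not Flink’s implementation, and the function names simply echo the SQL functions described above.

```python
import json
from typing import Any, Optional


def parse_json(text: Optional[str]) -> Any:
    """PARSE_JSON analogue: malformed input raises and would fail the job."""
    return None if text is None else json.loads(text)


def try_parse_json(text: Optional[str]) -> Any:
    """TRY_PARSE_JSON analogue: malformed input becomes None (SQL NULL)."""
    if text is None:
        return None
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        return None


def json_string(value: Any) -> str:
    """JSON_STRING analogue: serialize a parsed value back to compact text."""
    return json.dumps(value, separators=(",", ":"))
```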

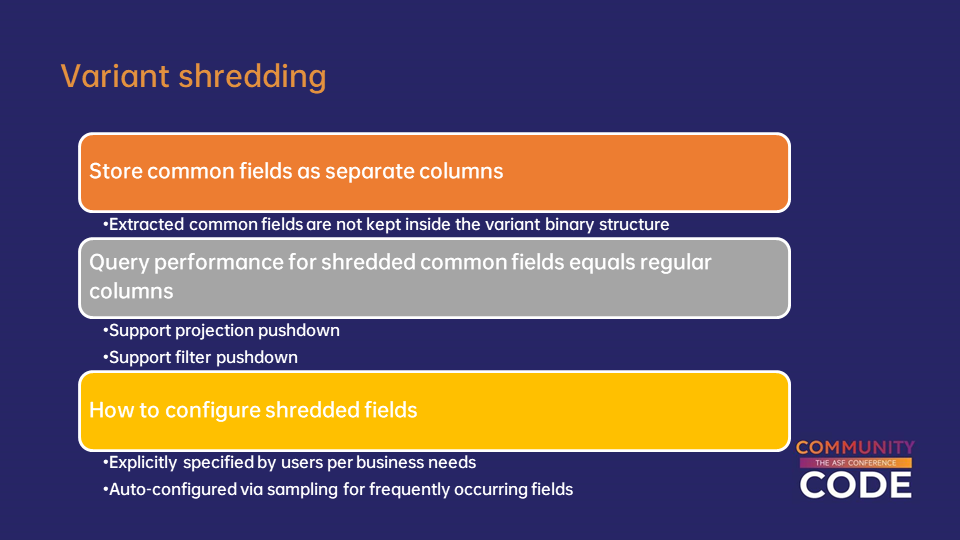

One of the most complex optimizations in the Variant implementation addresses a common pattern in semi-structured data: the coexistence of frequently accessed static fields and truly dynamic parts. While JSON’s flexibility allows arbitrary structures, production systems often exhibit consistent patterns where certain fields appear repeatedly across records.

Variant Shredding leverages this observation by storing frequently accessed fields as separate physical columns outside the main Variant binary structure. This hybrid approach combines the flexibility of semi-structured data with the columnar performance characteristics of frequently accessed fields. Shredded fields can be accessed with nearly the same performance as conventional structured columns.
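Shredding can be illustrated as a split of each record into typed columns plus a residual. The sketch below assumes a user-declared shredding schema and, similar in spirit to the Variant shredding design, keeps any value whose type does not match the declared type in the residual so that no data is lost. All names are hypothetical.

```python
from typing import Any, Dict, List, Tuple


def shred(records: List[Dict[str, Any]], schema: Dict[str, type]
          ) -> Tuple[Dict[str, List[Any]], List[Dict[str, Any]]]:
    """Move fields listed in `schema` into typed columns.

    A value lands in its column only if it has exactly the declared type;
    missing or differently typed values leave None in the column and stay
    in the per-record residual, which would remain Variant-encoded."""
    columns: Dict[str, List[Any]] = {f: [] for f in schema}
    residual: List[Dict[str, Any]] = []
    for rec in records:
        rest = dict(rec)
        for field, typ in schema.items():
            value = rec.get(field)
            if type(value) is typ:
                columns[field].append(value)
                del rest[field]
            else:
                columns[field].append(None)
        residual.append(rest)
    return columns, residual


def unshred(columns: Dict[str, List[Any]], residual: List[Dict[str, Any]], i: int) -> Dict[str, Any]:
    """Reassemble record i from the shredded columns and its residual."""
    rec = dict(residual[i])
    for field, col in columns.items():
        if col[i] is not None:
            rec[field] = col[i]
    return rec
```

Because each shredded field is now an ordinary column, a reader that only needs `uid` can scan that column alone without touching the residual.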

The impact of this optimization extends beyond field access performance. Shredded fields fully participate in Flink’s query optimizations, including projection pushdown (reading only required columns from storage) and filter pushdown (evaluating predicates as close to the data source as possible). These optimizations drastically reduce I/O requirements, a critical benefit when processing large historical datasets and real-time streams.
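Filter pushdown pays off on shredded fields because a real column carries per-file statistics, such as the min/max values that columnar formats like Parquet record. The sketch below, with hypothetical names, shows file skipping for a range predicate on a shredded integer field; a field buried inside an opaque JSON string offers no such statistics.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FileStats:
    path: str
    min_value: int  # min/max of one shredded column, kept in file metadata
    max_value: int


def prune_files(files: List[FileStats], lower: int, upper: int) -> List[str]:
    """Keep only files whose [min, max] range can overlap [lower, upper];
    every other file is skipped without being opened."""
    return [f.path for f in files if f.max_value >= lower and f.min_value <= upper]
```

For a predicate such as `uid BETWEEN 150 AND 160` over three files covering 0 to 99, 100 to 199, and 200 to 299, only the middle file needs to be read.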

Shredded field identification can occur via two methods: manual configuration, where developers explicitly specify shredding fields based on data access patterns and business needs, or automated discovery mechanisms that analyze incoming data samples to identify fields with sufficient frequency to benefit from shredding.
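Automated discovery can be approximated by a frequency heuristic over sampled records. The sketch below, assuming hypothetical thresholds (`min_ratio`, `max_fields`), selects top-level fields that appear often enough and always with the same type; it is one plausible heuristic, not the mechanism Flink or Paimon uses.

```python
from collections import Counter
from typing import Dict, Iterable, List, Set


def discover_shredding_fields(samples: Iterable[Dict[str, object]],
                              min_ratio: float = 0.8, max_fields: int = 3) -> List[str]:
    """Pick top-level fields present in at least min_ratio of the sampled
    records with one consistent type, keeping at most max_fields of them."""
    presence: Counter = Counter()
    types: Dict[str, Set[type]] = {}
    total = 0
    for rec in samples:
        total += 1
        for key, value in rec.items():
            presence[key] += 1
            types.setdefault(key, set()).add(type(value))
    if total == 0:
        return []
    candidates = [k for k, n in presence.items()
                  if n / total >= min_ratio and len(types[k]) == 1]
    candidates.sort(key=lambda k: (-presence[k], k))  # most frequent first, then by name
    return candidates[:max_fields]
```

Fields with mixed types are skipped because they would mostly end up in the residual anyway.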

The second major optimization tackles a common architectural pattern in real-time analytics: enriching stream data with dimension information stored in Paimon tables.

Lookup Joins represent a critical operation in stream analytics, where real-time event data must be enriched with relatively static dimension information. Common use cases include enriching transaction events with customer profiles, adding product details to purchase events, or augmenting log entries with configuration data. The challenge lies in efficiently accessing dimension data potentially spread across multiple storage partitions while maintaining the low-latency characteristics required for real-time processing.

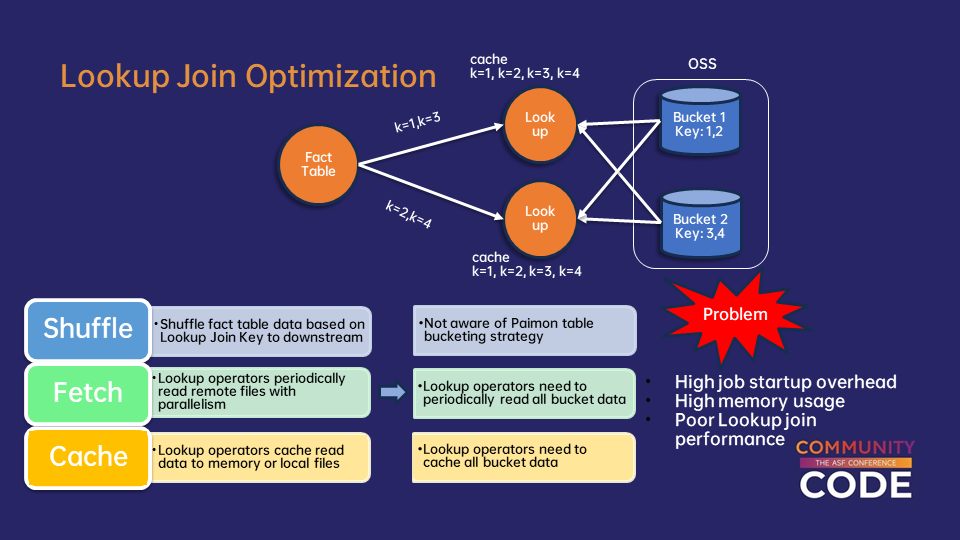

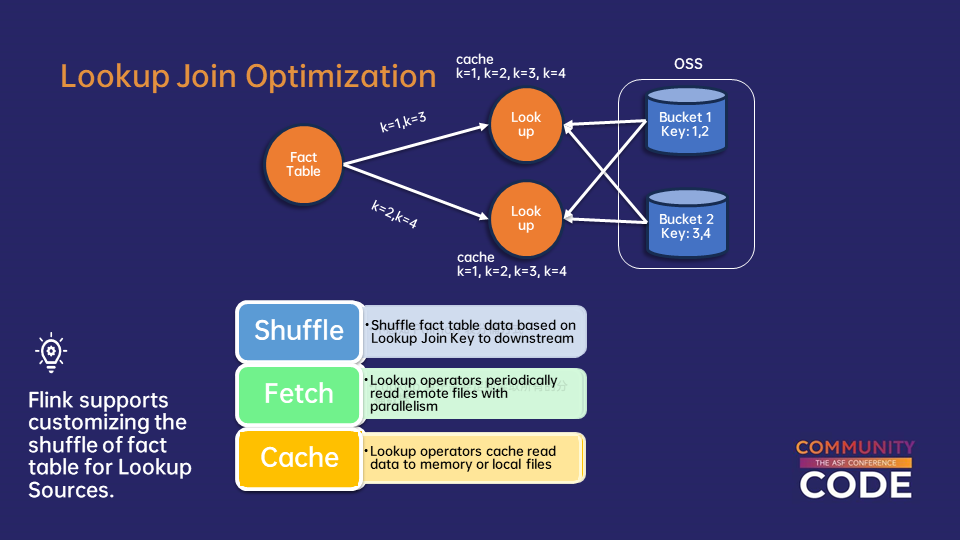

Flink’s Lookup Join typically involves three stages: shuffling fact table records based on join keys, retrieving dimension data from remote storage, and maintaining local caches of dimension data for fast access.

This approach works well with traditional dimension storage systems like Redis, where data is inherently unpartitioned and all operators must read the full dataset. However, Paimon’s bucketed storage strategy creates a fundamental mismatch, leading to significant performance inefficiencies.

Paimon organizes data using a bucketing strategy, where records are distributed across buckets based on hash functions of keys. While this enables scalability and efficient data organization, it introduces inefficiencies in traditional Lookup Join implementations.
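Fixed bucketing can be sketched as hashing the bucket key and taking the remainder modulo the bucket count. The Python version below uses `zlib.crc32` as a stand-in for Paimon’s own hash function (Python’s built-in `hash` is randomized per process for strings, so it is unsuitable here), so the concrete bucket numbers will not match a real Paimon table. The function names are hypothetical.

```python
import zlib
from collections import defaultdict
from typing import Dict, List


def bucket_of(key: str, num_buckets: int) -> int:
    """Deterministic bucket assignment from a hash of the bucket key.

    zlib.crc32 stands in for Paimon's own hash function in this sketch."""
    return zlib.crc32(key.encode()) % num_buckets


def bucketize(keys: List[str], num_buckets: int) -> Dict[int, List[str]]:
    """Group keys by the bucket they would be written to."""
    buckets: Dict[int, List[str]] = defaultdict(list)
    for key in keys:
        buckets[bucket_of(key, num_buckets)].append(key)
    return dict(buckets)
```

The important property is determinism: any component that knows the hash function and the bucket count can compute which bucket holds a given key without reading the table.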

The core issue is that Flink’s Lookup operators are unaware of Paimon’s bucketing strategy. Each concurrent Lookup operator assumes it may need to join with any record in the dimension table, forcing every operator to maintain a full local copy of all dimension data. This results in redundant data management, with each operator reading and caching the entire Paimon table regardless of parallelism levels.

In large-scale deployments, the impact becomes severe. Job startup times can extend to tens of minutes as each operator pulls the full dimension dataset. Memory consumption scales with operator parallelism, as each operator maintains duplicate copies of all dimension data. The overhead of managing large local caches and the computational cost of searching through full datasets significantly degrade overall Lookup Join performance.

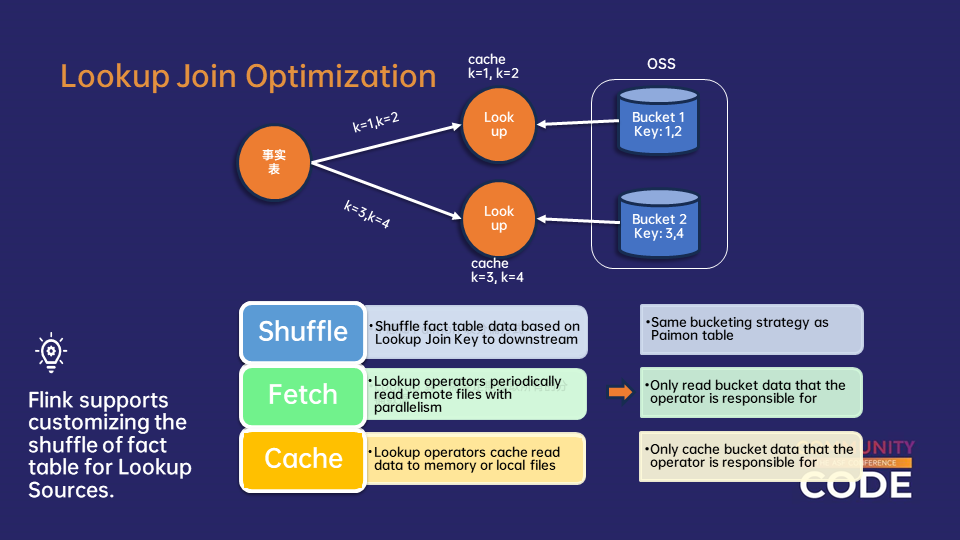

The solution extends Flink’s Lookup Join architecture to support custom shuffle strategies, so that the fact stream can be partitioned the same way Paimon buckets the dimension table. Records that will join with a given Paimon bucket are then routed to the Lookup operator responsible for that bucket’s dimension data.

With this alignment, each Lookup operator focuses exclusively on its assigned bucket data, maintaining only a local copy of its allocated bucket subset instead of the entire dimension table. This drastically reduces the data volume each operator must manage and eliminates redundant storage across operators.
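The effect of the aligned shuffle on cache size can be sketched as follows. Fact records are routed to the subtask that owns their key’s bucket, and each subtask caches only the dimension rows in its buckets. The round-robin bucket-to-subtask assignment and all names are assumptions for illustration, not Flink’s internal interfaces; the only hard requirement is that routing uses the same hash as the table’s bucketing.

```python
import zlib
from typing import Dict, List


def bucket_of(key: str, num_buckets: int) -> int:
    # Stand-in hash; must match the function used to bucket the dimension table.
    return zlib.crc32(key.encode()) % num_buckets


def subtask_for_bucket(bucket: int, parallelism: int) -> int:
    # Hypothetical assignment: buckets are spread round-robin over subtasks.
    return bucket % parallelism


def route(fact_key: str, num_buckets: int, parallelism: int) -> int:
    """Send a fact record to the subtask that owns its key's bucket."""
    return subtask_for_bucket(bucket_of(fact_key, num_buckets), parallelism)


def build_caches(dim: Dict[str, str], num_buckets: int, parallelism: int,
                 aligned: bool) -> List[Dict[str, str]]:
    """Per-subtask dimension caches with and without bucket alignment."""
    caches: List[Dict[str, str]] = [{} for _ in range(parallelism)]
    for key, value in dim.items():
        if aligned:
            caches[route(key, num_buckets, parallelism)][key] = value
        else:
            for cache in caches:  # unaligned: every subtask loads everything
                cache[key] = value
    return caches
```

With 1,000 dimension rows and 4 subtasks, the unaligned layout caches 4,000 rows in total while the aligned layout caches exactly 1,000, which is the per-operator memory reduction described above. In this scheme, choosing a bucket count that is a multiple of the parallelism keeps the per-subtask load balanced.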

Performance improvements are substantial: in high-parallelism scenarios, job startup times can drop from minutes to seconds. Per-operator memory consumption decreases significantly, enabling more efficient resource utilization. Overall Lookup Join performance improves due to smaller local caches and more focused data access patterns.

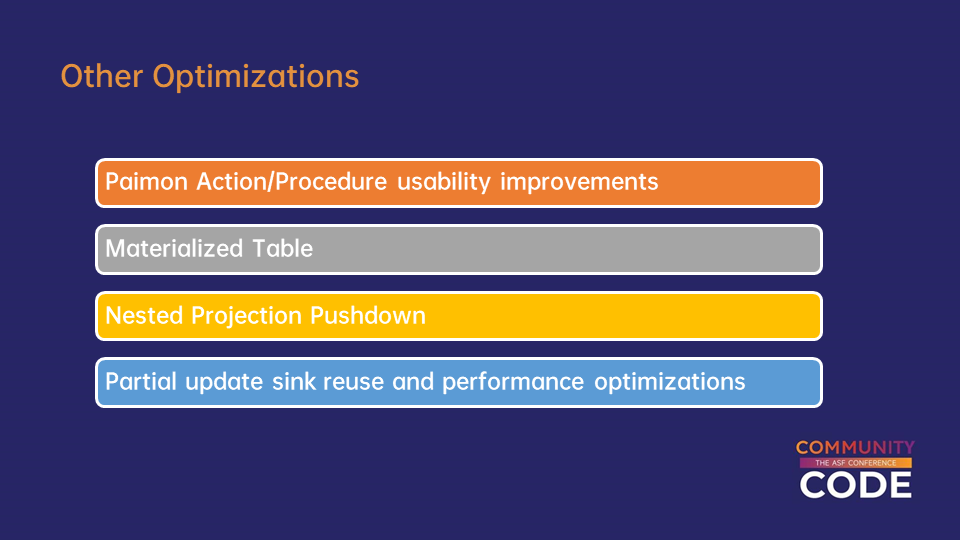

Beyond the core advancements of Variant data types and Lookup Join optimizations, the Flink-Paimon integration includes additional critical features that collectively form a comprehensive real-time lakehouse optimization ecosystem.

Improvements to Paimon Action and Procedure usability represent a major UX upgrade. Traditional lakehouse management operations often require complex configurations and deep technical expertise, creating a high barrier for developers and operations teams.

New usability enhancements simplify common lakehouse management tasks, including table creation, data compaction, snapshot management, and metadata maintenance. Users can now perform these tasks with straightforward SQL statements.

Flink’s newly introduced Materialized Tables let users write business logic in Flink SQL and specify a freshness requirement; Flink then automatically decides whether to run the pipeline as a streaming job or as batch jobs. This eliminates the need for manual job configuration and maintenance.
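The decision rule can be modeled in a few lines. This sketch assumes a single freshness threshold (30 minutes here, an assumed value): targets tighter than the threshold get a continuously running streaming job, looser ones get scheduled batch refreshes. The `RefreshPlan` type and `plan_refresh` function are hypothetical.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass(frozen=True)
class RefreshPlan:
    mode: str  # "streaming" or "batch"
    # Checkpoint interval (streaming) or schedule period (batch); assumed in
    # this sketch to equal the freshness target.
    interval: timedelta


def plan_refresh(freshness: timedelta,
                 threshold: timedelta = timedelta(minutes=30)) -> RefreshPlan:
    """Choose how to keep a materialized table within its freshness target."""
    if freshness <= timedelta(0):
        raise ValueError("freshness must be positive")
    mode = "streaming" if freshness < threshold else "batch"
    return RefreshPlan(mode, freshness)
```

The trade-off is cost: a streaming job holds resources continuously to meet tight targets, while a periodic batch refresh only consumes resources when it runs.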

Nested Projection Pushdown targets query performance for complex nested data structures. In modern data environments, formats like JSON, Avro, and Parquet often contain deeply nested structures, and traditional query processing reads entire nested objects even when only a few fields are needed.

The optimization analyzes field access patterns in queries and pushes field selection down to the earliest stage of data retrieval, so only the required nested fields are extracted during storage reads rather than the entire structure being parsed.

For datasets with hundreds of fields (e.g., user behavior event data), this optimization can reduce I/O overhead by an order of magnitude. It also minimizes network data transfer and memory usage, enhancing overall query pipeline efficiency.
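The core of nested projection pushdown is turning the referenced paths into a projection tree and reading only the leaves it names. The dict-based sketch below illustrates that planning step with hypothetical helpers; in a columnar format such as Parquet each nested leaf is stored as its own column, so the same tree would decide which column chunks are read at all.

```python
from typing import Any, Dict, List


def build_projection(paths: List[str]) -> Dict[str, Any]:
    """Turn dotted paths such as "device.os" into a nested projection tree.

    A leaf is True; selecting a whole struct overrides narrower paths below it."""
    tree: Dict[str, Any] = {}
    for path in paths:
        node = tree
        *parents, leaf = path.split(".")
        for part in parents:
            if node.get(part) is True:  # an ancestor is already fully selected
                break
            node = node.setdefault(part, {})
        else:
            node[leaf] = True
    return tree


def prune(record: Dict[str, Any], tree: Dict[str, Any]) -> Dict[str, Any]:
    """Copy only the selected, possibly nested, fields out of a record."""
    out: Dict[str, Any] = {}
    for name, sub in tree.items():
        if name not in record:
            continue
        value = record[name]
        if sub is True:
            out[name] = value
        elif isinstance(value, dict):
            out[name] = prune(value, sub)
    return out
```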

Partial Update Sink Reuse and Performance Optimizations

Paimon’s partial update functionality is widely used, but when multiple data sources write to the same Paimon table within one Flink job, each write path gets its own sink, and their concurrent compactions during checkpoints can conflict and cause job failures. To address this, Flink’s SQL planner has been modified to identify and reuse identical sinks, so the table is written by a single sink.
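With Paimon’s partial-update merge engine, rows sharing a primary key are merged so that each column keeps its latest non-null value; by default, null values do not overwrite existing ones. The sketch below models that merge and, in miniature, sink reuse: both sources are unioned into one write path instead of each getting its own writer. Function names are hypothetical.

```python
from typing import Dict, Iterable, List, Optional

Row = Dict[str, Optional[object]]


def partial_update(rows: Iterable[Row], pk: str) -> Dict[object, Row]:
    """Merge rows by primary key; later non-null values overwrite, nulls don't."""
    table: Dict[object, Row] = {}
    for row in rows:
        merged = table.setdefault(row[pk], {})
        for col, val in row.items():
            if val is not None:
                merged[col] = val
    return table


def reused_sink(*sources: List[Row]) -> List[Row]:
    """Sink reuse in miniature: union all sources that feed the same table so a
    single writer (and a single compaction) handles them."""
    out: List[Row] = []
    for src in sources:
        out.extend(src)
    return out
```

Each source contributes only the columns it owns, so an order stream and a logistics stream can jointly build one wide row per order.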

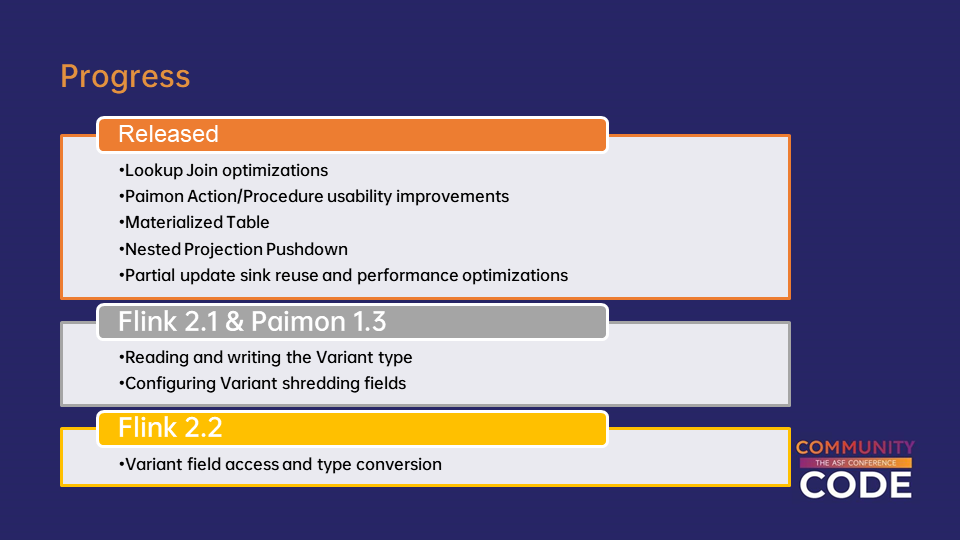

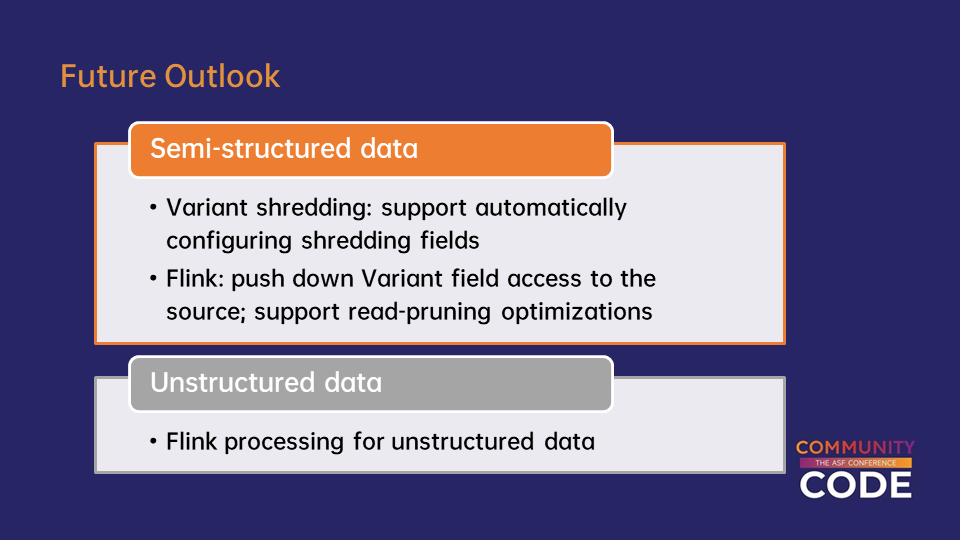

The Flink-Paimon integration follows a clear technical roadmap, with optimization features prioritized and scheduled across different release cycles based on maturity and importance.

Current released versions include core optimizations already in production, such as Lookup Join improvements, Paimon Action/Procedure usability enhancements, Materialized Table support, Nested Projection Pushdown, and Partial Update Sink Reuse. These features have been validated in large-scale production environments, providing stable, high-performance capabilities for enterprise real-time lakehouse deployments.

Flink 2.1 and Paimon 1.3 focus on foundational Variant data type support. These releases deliver complete Variant read/write capabilities, enabling native semi-structured data processing in Flink and Paimon without relying on complex string parsing.

They also introduce configurable shredding for Variant fields, allowing users to manually specify which fields to shred based on data access patterns. This gives granular control to enterprises with clear business requirements.

Flink 2.2 further refines Variant data types, emphasizing flexible and powerful field access. Users will gain intuitive syntax for accessing nested fields in Variant types, along with robust type conversion capabilities for seamless integration with existing strong-type workflows.

This version aims to bring semi-structured data processing experiences closer to structured data while retaining the flexibility of semi-structured formats.

Future advancements will focus on automation and intelligence. Variant shredding will support automatic field identification, where systems analyze historical query patterns and access frequencies to determine optimal shredding fields without manual configuration.

Flink will also push Variant field access optimizations down to the data source, combined with read pruning techniques so that field selection and filtering happen during data retrieval. This source-side optimization will drastically reduce data transfer and processing overhead, particularly for large-scale semi-structured data.

Flink’s roadmap includes significant extensions to unstructured data processing, supporting text, images, audio, and other formats. This will enable broader applications such as content analysis, multimedia processing, and document parsing, solidifying Flink’s role as a unified data processing platform.

These innovations will further enhance Flink’s position as a comprehensive solution for modern data architectures, empowering developers to build scalable, real-time systems with unprecedented flexibility and performance.
