By Tian Jie

High concurrency applications are not unusual for databases in daily usage. The iconic forms of such high concurrency include a high rate of connection creation (CPS, such as short-lived PHP connections) and a massive number of requests sent to the database layer.

Like a tsunami, a large number of new connections and requests challenge the database layer's processing capacity, making the database prone to hanging or extremely slow responses. Imagine a situation where your workload suddenly multiplies in a short period of time without any forecast or buffer. Wouldn't that be overwhelming for you?

A database usually serves as the data access and aggregation unit at the bottom of the architecture. Its performance and robustness determine the final performance and user experience of applications. It's not possible to overly stress the importance of databases to businesses.

Here are the solutions that can keep your businesses "alive" in a tsunami-like scenario.

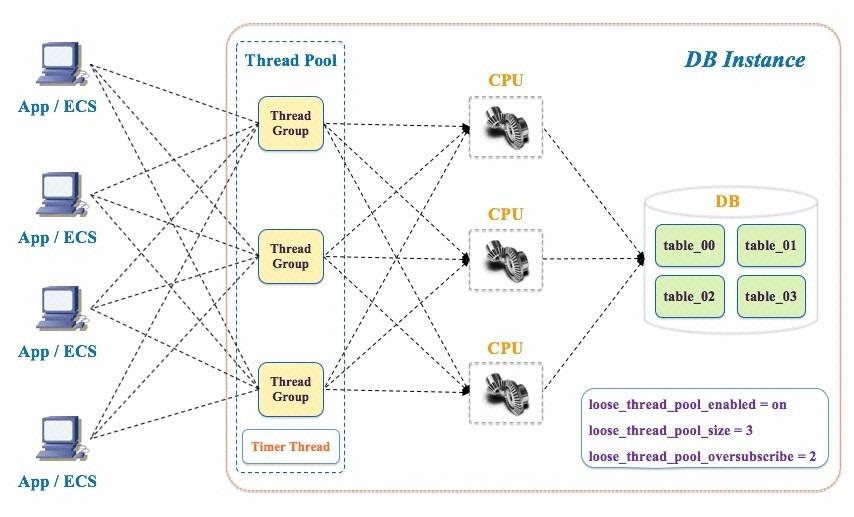

Here is a real-life example of a thread pool to facilitate understanding.

For example, a bank has 10 windows (10 CPUs of the instance specification), and the bank is officially claimed to be capable of accommodating 10 customers (10 client threads). The client flow is low at ordinary times, and all businesses operate smoothly. Occasionally when the number of customers grows slightly, everyone squeezes in a little, and then things get done. The 10 windows can accommodate 50 customers, as not all the customers handle their businesses all the time. The efficiency is not low.

However, when it comes to the end of the year, people flood into the bank to settle company payments or purchase commemorative coins. They are all in urgent need and unwilling to give way to others. The bank is so crowded that people cannot move. If some people squabble, nobody will be able to handle their business.

Then a security guard comes (the timer thread), who sets up a queuing mechanism (10 queues, loose_thread_pool_size=10), with only 10 people allowed in at a time according to the rule. Then the efficiency remains high.

Of course, if 1,000 people crowded in all at once, the queue waiting at the door will be very long. Although the bank may not break down due to the long queue, those at the end part of the queue will have to wait for a very long time, and some will file complaints (errors returned to the application upon wait timeout).

Then a problem arises that some customers don't know which commemorative coins to buy and they look around, unable to make a quick decision. The window tellers are not very busy as well. In view of this, the security guard sets up a new rule called stall_limit.

According to the rule, if some clients hesitate for longer than the time defined in stall_limit, they are deemed stalled, and another new client will be allowed in (oversubscribing). However, there is an upper limit on the number of people handling businesses at the windows, that is, 50 people (10 windows, 5 people per window, loose_thread_pool_oversubscribe=4) at most.

After this limit is reached, one client can enter only after another client finishes their transactions. If no one ends his or her transactions, the process is then hung. At this time, at least the security guard should be able to go into the bank and remove those who have been too slow in their business handling so that the queue can be unfrozen and get moving again.

Moreover, some customers may forget to bring their certificates or documentation and have to wait until the documentation is sent over. So, these people find a place and wait there (lock wait). These waiting people do not count as oversubscribing, so the security guard can allow more people in until the number of people reaches thread_pool_max_threads.

But if the documentation takes too long to arrive, the bank will be taken up by those waiting for their documentation (that is, the bank is hung). By contrast, if all the documentation arrives at the same time, the bank will become busy all of a sudden, approaching its breaking point (which is equivalent to hotspot updates).

In other words, if the bank won't see the scenario where a large number of customers handle businesses at the same time, no security guard will be needed, and no queue is necessary (loose_thread_pool_enabled=OFF). Moreover, the bank can accommodate up to 50 people at the same time. But if the security guard only allows 10 people in, the efficiency will be low.

When the queue at the door gets long, there may be three reasons:

The preceding example shows that the thread pool restricts the client thread concurrency of databases through the queuing mechanism (controlling the number of running threads), to avoid high competition and client thread creation overhead to improve the CPU usage and throughput (ensure the database's processing capability when applications' access to the database increases).

The preceding example can help summarize the applicable scenarios of the thread pool as follows:

The following table summarizes the overall concepts.

| # | Parameter | Allow Modification? | Default Value | Description |

|---|---|---|---|---|

| 1 | loose_thread_pool_enabled | Yes | on | Whether the thread pool is enabled? |

| 2 | loose_thread_pool_oversubscribe | Yes | 3 | The number of threads that each thread group can execute concurrently (active) when the thread group has a stalled thread. The maximum number of threads that can be concurrently executed (active) in a thread pool = (Thread_pool_oversubscribe +1) x Thread_pool_size. The value is recommended to be equal to or greater than 3. |

| 3 | loose_thread_pool_size | Yes (RDS) | Number of CPUs of the current instance specification (PolarDB) | Number of thread groups in the thread pool. It is recommended that you set this value to the number of CPUs of the current instance specification. |

| 4 | thread_pool_max_threads | No | 10000 | Maximum number of threads in the thread pool. No new threads can be created after this number is reached. |

| 5 | thread_pool_idle_timeout | No | 60 seconds | The idle time before the idle thread in the thread group exits. |

| 6 | thread_pool_stall_limit | No | 10 ms | The interval for the timer thread to check the "stall" status. This prevents a thread from occupying a thread group for a long time. |

Official Documentation: Thread Pool

Kernel Document: Thread Pool (article in Chinese)

When some queries are executed for a long time, are there other methods available to reduce the execution cost except for optimizing SQL queries? (Sometimes, optimizing SQL queries to reduce the execution cost is not feasible. If a query is not time-sensitive, consider moving it to a read-only instance for execution.) The answer is "Yes". The next method is "throttling".

If the "tsunami" is exceptionally violent, and you can identify a number of queries with the same characteristics in the "tsunami", such as in the case that the Redis cache was broken down and a large number of similar or duplicate queries hit the database layer, or such as the long-running queries in the thread pool as in the preceding example, you can apply the throttling method to these requests — if the transactions support or allow degradation — to maintain the normal operation of databases.

Compared with the thread pool mechanism, which adopts an all-encompassing approach, flow throttling is more like a targeted blow, gathering all strength, to a specific point.

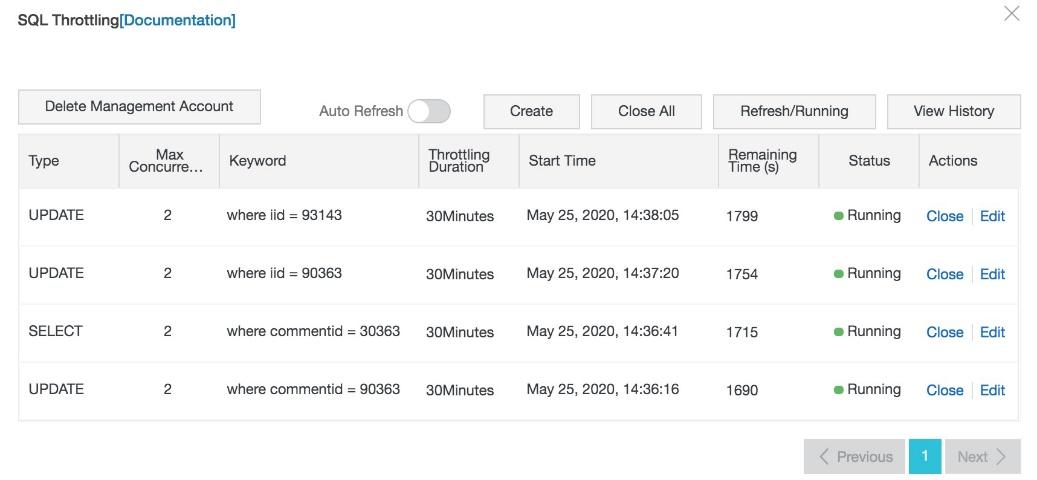

For RDS for MySQL 8.0 and PolarDB for MySQL, use the statement concurrency control feature to implement throttling on specified queries.

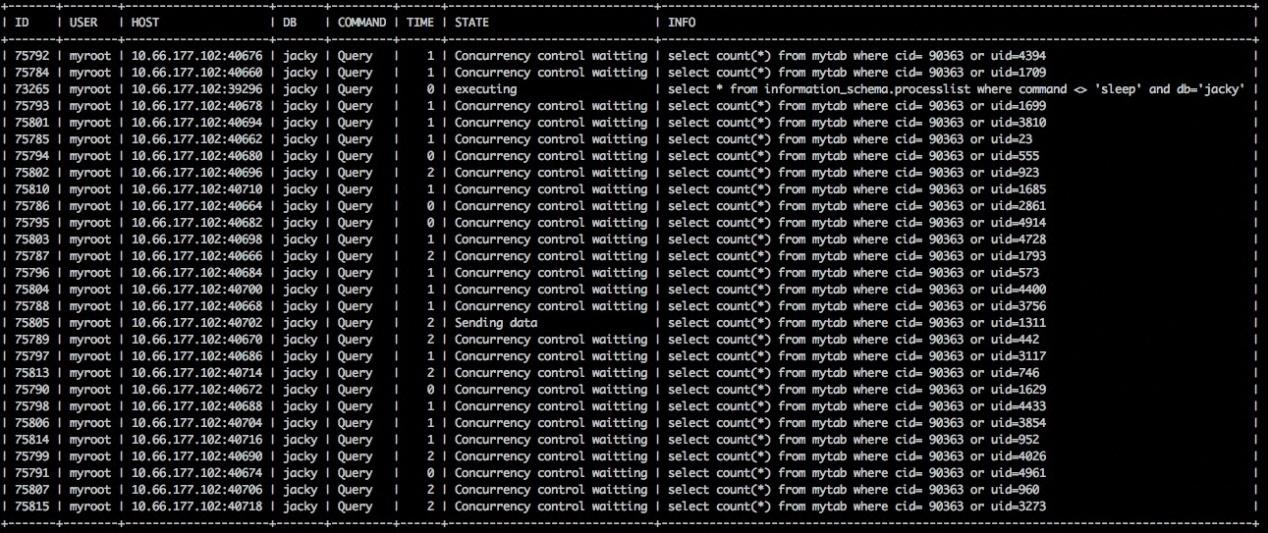

For example, you find that the following queries degrade the performance of the entire instance in a high-concurrency scenario, and the business side agrees that the query can be throttled.

# High-cost slow queries

select count(*)

from jacky.mytab

where cid = 90363

or uid = ???After identifying the SQL statement, call the dbms_ccl toolkit to create a throttling rule based on the SQL statement characteristics.

# Add a throttling rule to allow a maximum of one concurrent execution

call dbms_ccl.add_ccl_rule('select','jacky','mytab',1,'cid=;uid=');

# Display the current throttling rule

call dbms_ccl.show_ccl_rule();

+------+--------+--------+-------+-------+-------+-------------------+---------+---------+----------+-----------+

| ID | TYPE | SCHEMA | TABLE | STATE | ORDER | CONCURRENCY_COUNT | MATCHED | RUNNING | WAITTING | KEYWORDS |

+------+--------+--------+-------+-------+-------+-------------------+---------+---------+----------+-----------+

| 2 | SELECT | jacky | mytab | Y | N | 1 | 116 | 1 | 26 | cid=;uid= |

+------+--------+--------+-------+-------+-------+-------------------+---------+---------+----------+-----------+After a throttling rule is added, all SQL requests that exceed the defined concurrency will be held in the "Concurrency control waiting" state.

The CPU usage changes from 100% before the throttling to around 50% and service availability is ensured.

Official Documentation: Statement Concurrency Control

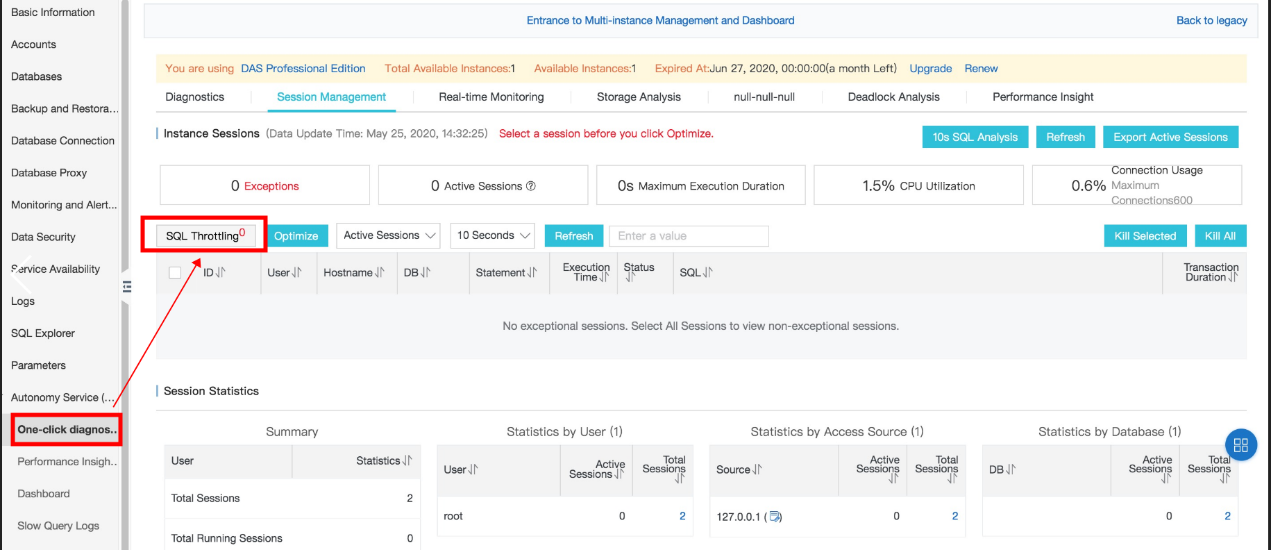

The CloudDBA in the console of RDS for MySQL 5.6 and RDS for MySQL 5.7 integrates the SQL throttling feature.

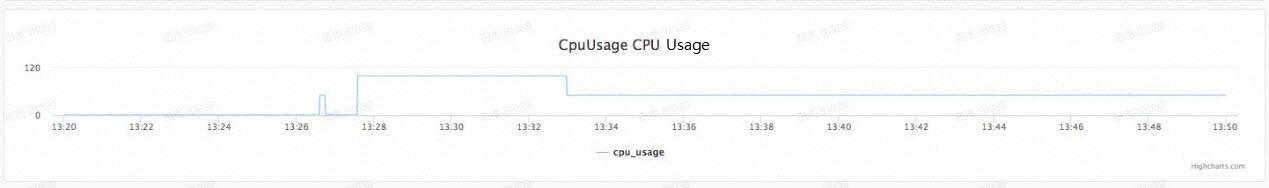

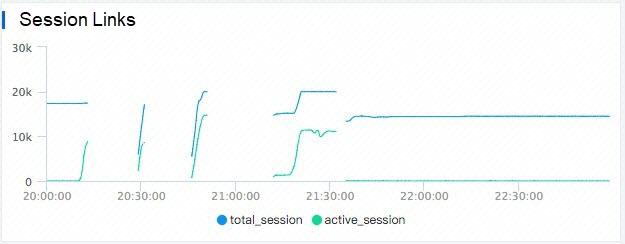

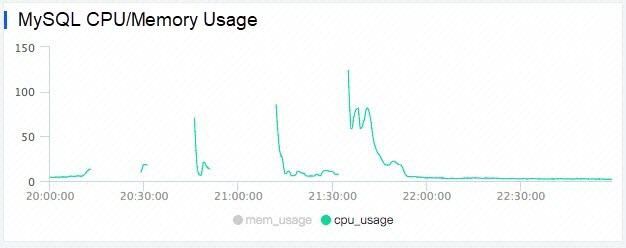

Here is a real-life example. During peak hours, a large number of concurrent requests flooded into a customer's instances with advanced configurations, and the CPUs of the instances were fully occupied, resulting in very slow responses. According to the monitoring data, the number of active sessions at that time surpassed 14,700.

After repeated failures to restore services through adjustments at the business layer, services are restored when an SQL throttling rule is applied at 2020.3.24 21:35.

Sessions on ApsaraDB RDS instances:

CPU usage on ApsaraDB RDS instances:

Official Documentation: SQL Throttling

The preceding part discusses the countermeasures at the database layer. Do we have to passively defend against the problems at the database level? Is there a way to keep the enemy outside?

| # | Name | Description |

|---|---|---|

| 1 | Short-lived connection | A TCP connection is established when data interaction is required between two parties and disconnected after data is sent. PHP-based applications usually use short-lived connections for database access. |

| 2 | Persistent connection | An idle TCP connection is reused when data interaction is required between two parties. If no idle TCP connection is available, a new TCP connection is established. For future reuse, the TCP connection is not disconnected after data is sent. Java-based applications usually use persistent connections for database access. |

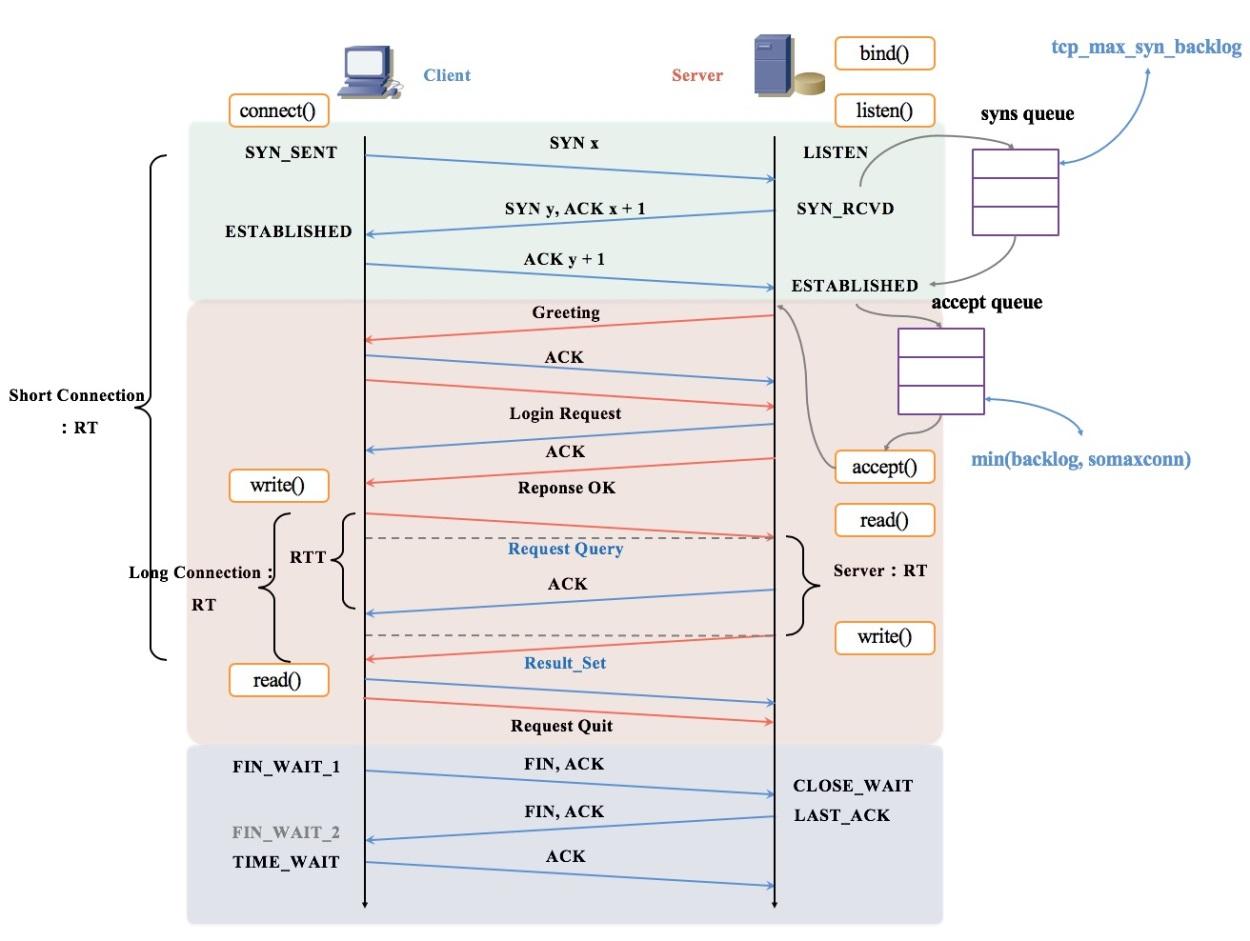

| 3 | syn queue | A SYN queue serves as the connection socket queue that stores received SYN requests. The system kernel automatically responds with an SYN-ACK after the TCP protocol stack receives an SYN request, puts the connection represented by the SNY into the SNY queue, and determines whether to retransmit the SYN-ACK. The length of an SYN queue is determined by the Linux kernel parameter of tcp_max_syn_backlog or somaxconn. |

| 4 | accept queue | An accept queue stores the connection sockets for completing TCP three-way handshakes. When MySQL calls accept(), a socket is retrieved from the queue for processing. The length of an accept queue is determined by the smaller one of the backlog parameter set by the application and the kernel parameter somaxconn. |

| 5 | ListenOverFlow | The number of times when newly received SYN requests are discarded without being processed because the SYN queue is full. |

| 6 | ListenDrops | The number of times when the connections for completing TCP three-way handshakes are discarded without being processed because the accept queue is full. |

First, let's take a look at how a common SQL request is sent from an application to a database over the network and then processed.

As shown in the preceding time-series graph, if no network connection is available between the application and the database, a MySQL network connection based on TCP/IP must be established before the application can send SQL requests to the database instance and obtain the results.

If an application (such as a PHP-based application) uses a short-lived connection mechanism, a TCP network connection to the database instance needs to be created for each SQL query. This will consume the CPU resources of the database instance and of the physical server where the database instance runs.

In a tsunami-like scenario, applications using short-lived connections will feature a high rate of connection creation (CPS, greater than or equal to the QPS in ListenOverFlow and ListenDrops scenarios). This will further consume the CPU resources of the database instance in addition to the already high load (QPS), increasing the CPU usage and reducing the CPU efficiency. As a result, the database may crash.

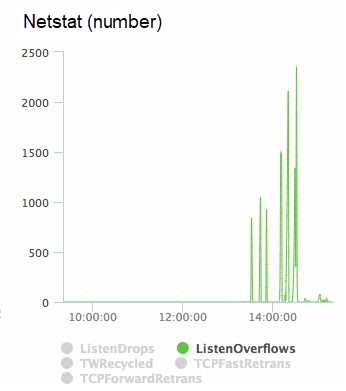

When CPU resources are exhausted, a large number of connection requests may be backlogged and cannot be processed, triggering ListenOverFlow and ListenDrops.

Here is a real-life example.

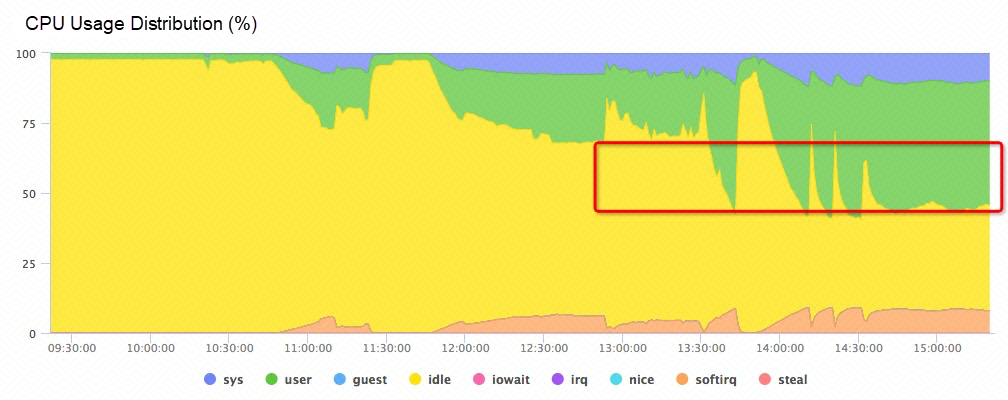

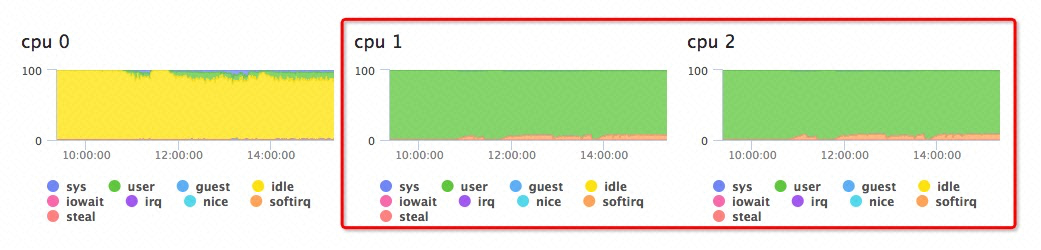

The customer changed the application from the persistent connection mode to the short-lived connection mode at 13:30. Due to the high concurrency of connection creation requests in short-lived connection mode (CPS - New connections per second), the instance's CPU usage went up by more than 25% after the change, a large number of connection failures or errors occurred on the service side, and the slow responses of the RDS instance were perceivable.

Some CPU resources were used up, and ListenOverFlow or ListenDrops errors occurred due to the failures to handle high-concurrency connection requests.

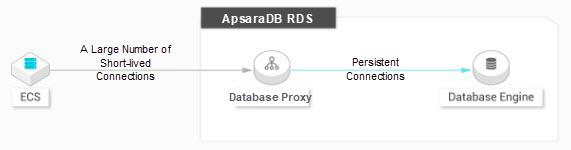

Thread pool is a good solution to such problems at the database layer. The database instances enabled with the dedicated proxy function (ApsaraDB RDS for MySQL read/write splitting addresses and PolarDB for MySQL cluster addresses) can also enable the link-layer solution with short-lived connection optimization.

After an application is disconnected, the dedicated proxy of the database checks whether the previous connection is idle. If the connection is idle, the proxy retains the connection between the proxy and the database in the connection pool for a period of time and only releases the connection between the application and the proxy.

If the application initiates a new connection within the period, the dedicated proxy uses the reserved connection from the connection pool to reduce connection creation overhead.

Official Documentation: Short-lived Connection Optimization

Lonch Group Cuts O&M Costs by 50% by Migrating to Alibaba Cloud

Fast and Cost-effective MySQL Analysis Solution Based on Alibaba Cloud DLA

ApsaraDB - April 15, 2019

Alibaba Clouder - June 24, 2020

Alibaba Cloud Community - September 1, 2022

Alibaba Cloud Community - January 5, 2023

Alibaba Cloud Experts Column - September 20, 2022

Alibaba Clouder - September 30, 2019

PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More ApsaraDB RDS for MySQL

ApsaraDB RDS for MySQL

An on-demand database hosting service for MySQL with automated monitoring, backup and disaster recovery capabilities

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn MoreMore Posts by ApsaraDB