By Wuzhe and Zanye

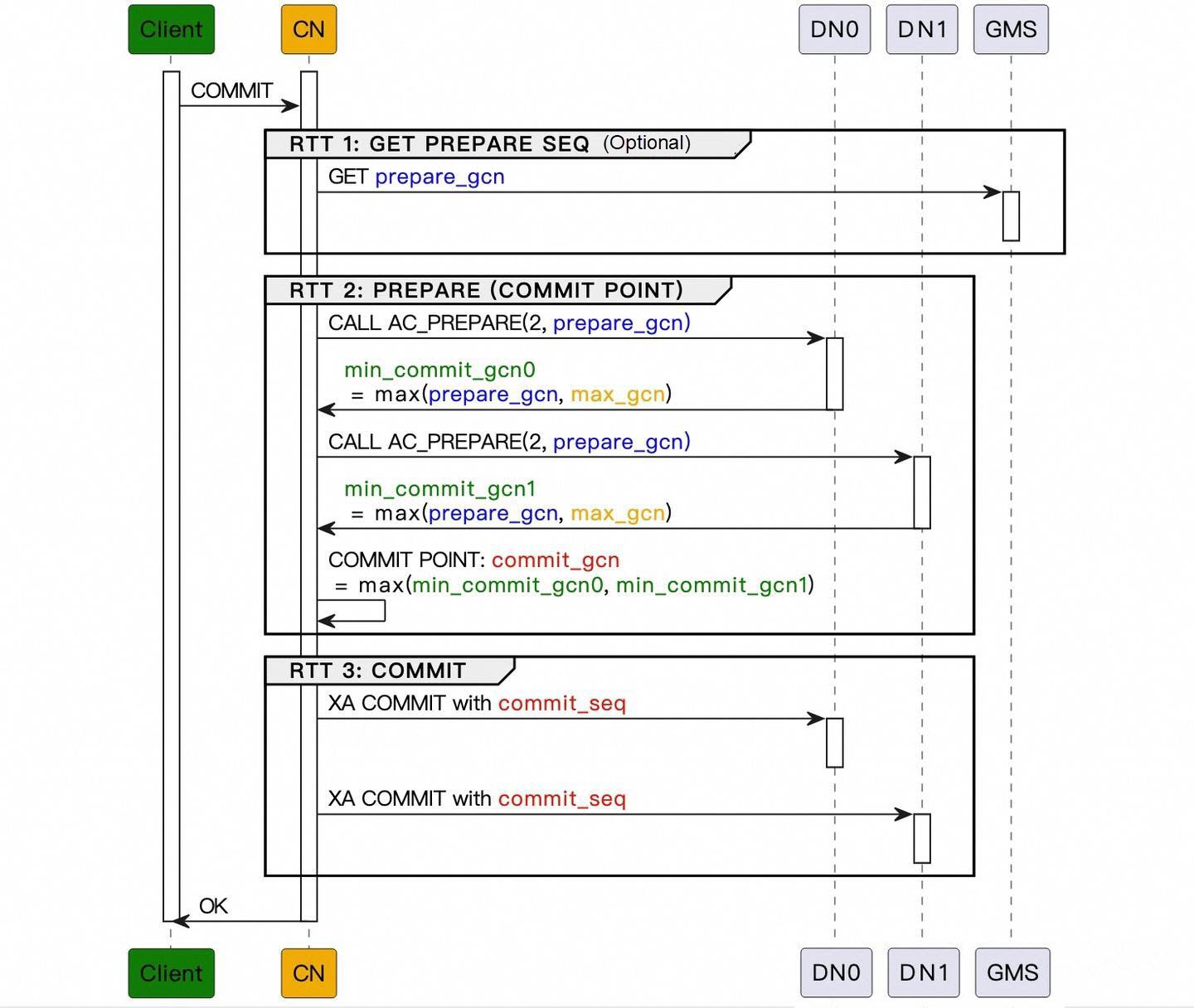

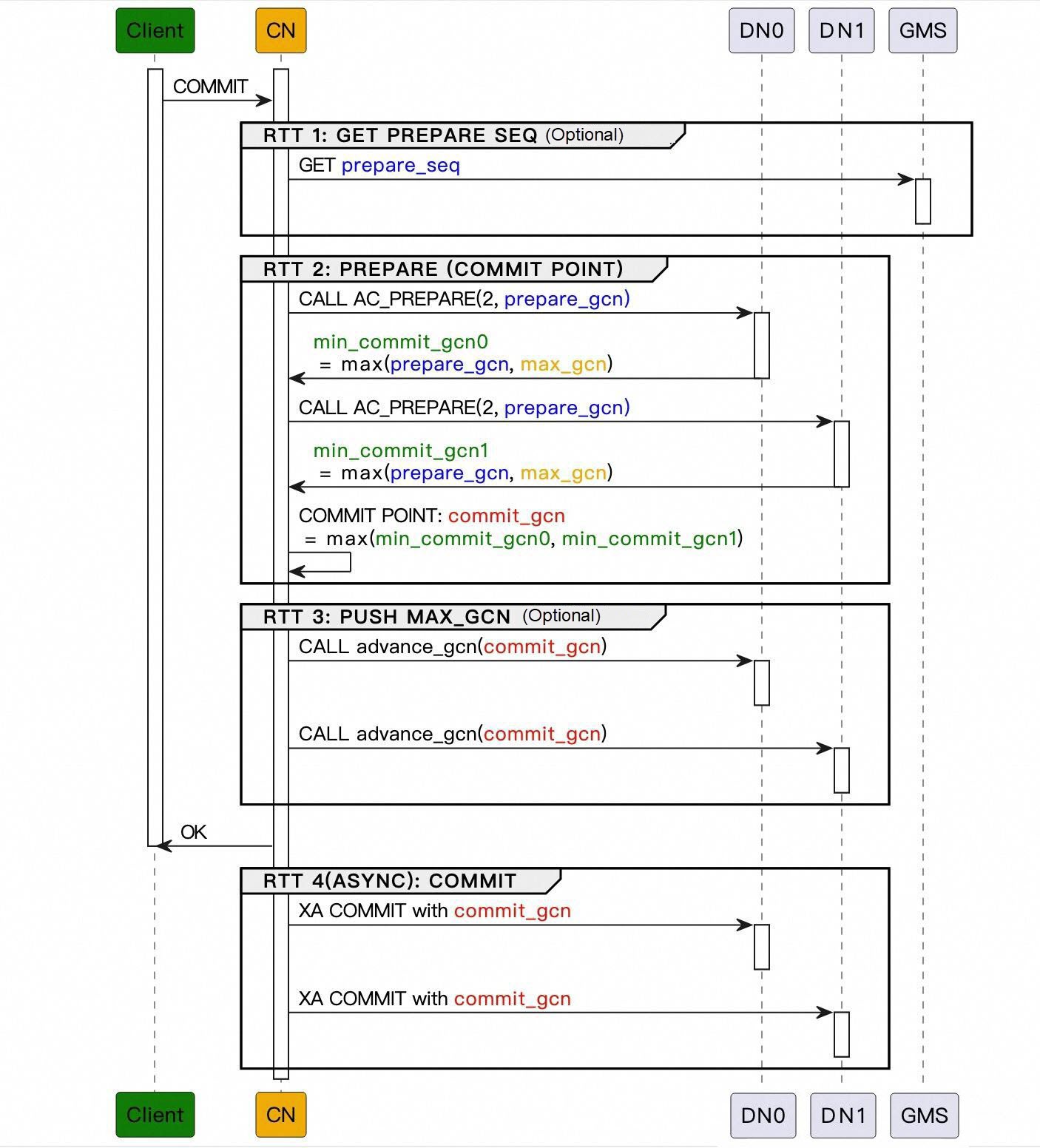

In the previous article, "Implementation of PolarDB-X Distributed Transactions: Commit Optimization Based on the Lizard Transaction System (Part 1)", we eliminated the transaction log step, reducing the commit latency of distributed transactions to three round-trip times (RTTs) and two data persistence operations, with the first RTT (obtaining the prepare_gcn) being optional. In this article, we continue with the implementation details of the commit optimization, focusing on the challenges encountered during its deployment. Let's first recap what we've done so far.

As discussed earlier, once all participants complete the PREPARE phase, the transaction reaches the COMMIT POINT. Even if the subsequent XA COMMIT fails, a (possibly new) coordinator can determine from the participants' states that the transaction is committed and continue the commit process. This raises the question: Can we return an acknowledgment to the client immediately after PREPARE, while performing the final COMMIT asynchronously, thereby saving one RTT? If asynchronous commit is feasible, the client-perceived commit latency would be reduced to just one RTT and one data persistence operation. Before delving into this optimization, let's first discuss single-shard read optimization.

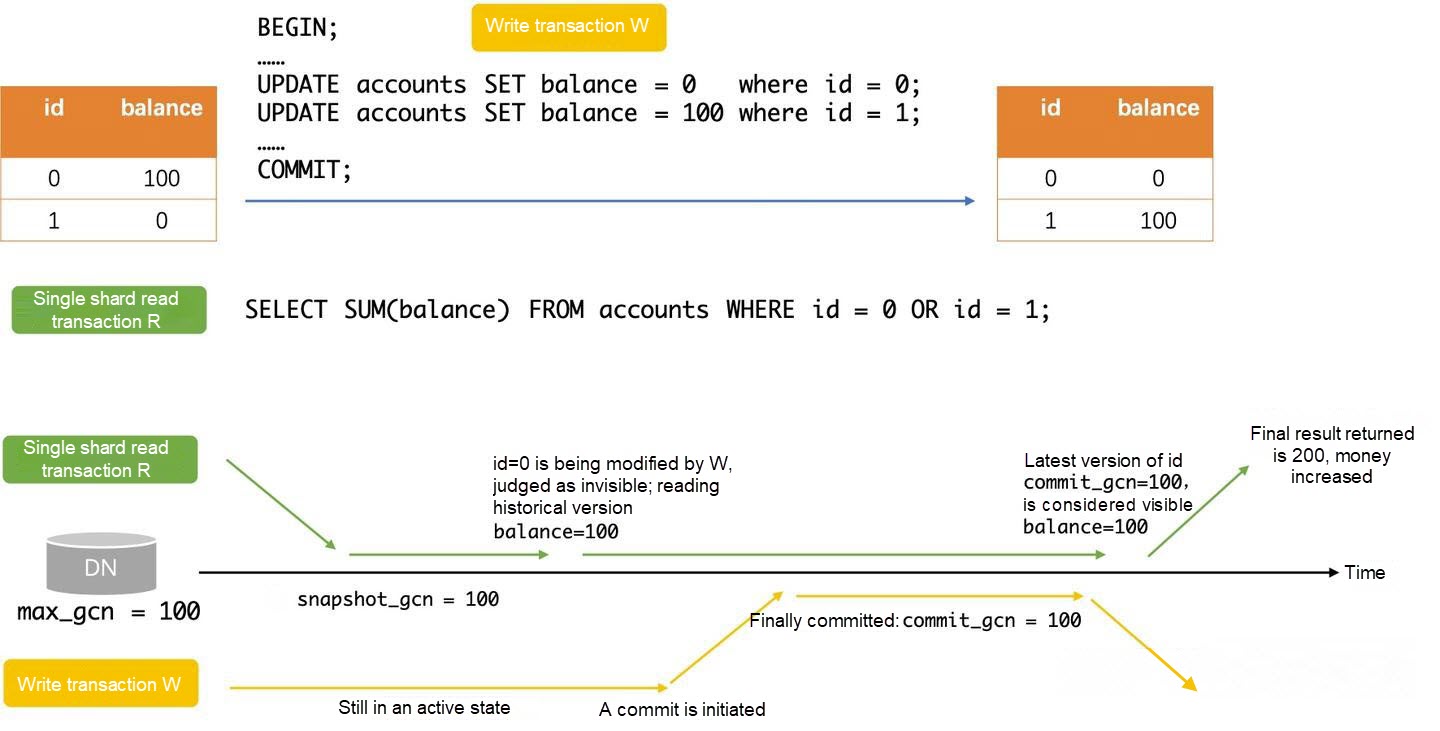

During the implementation of the commit optimization, the most challenging issue we encountered was the single-shard read optimization. In PolarDB for Xscale (PolarDB-X), if an autocommit read statement touches only one data shard, we skip obtaining a snapshot_gcn from the global meta service (GMS) and instead use that shard's max_gcn as the read's snapshot_gcn. The max_gcn is the largest global commit number (GCN) the data node (DN) has observed, and can be elevated by other transactions' snapshot_gcn and commit_gcn values. This optimization eliminates one RTT to the GMS and significantly improves single-shard read performance. Before the commit optimization, the visibility determination rules for single-shard reads were:

These rules ensured that single-shard reads preserved both snapshot isolation and external consistency.
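The way a DN maintains max_gcn and uses it as the snapshot for a single-shard read can be sketched as follows. This is a minimal illustration, not PolarDB-X code: the class and method names (`DataNode`, `observe_gcn`, `single_shard_snapshot`) are hypothetical, and the lock only stands in for whatever synchronization the real DN uses.

```python
import threading


class DataNode:
    """Toy model of a DN that tracks the largest GCN it has observed."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.max_gcn = 0

    def observe_gcn(self, gcn: int) -> None:
        # Any snapshot_gcn or commit_gcn reaching this DN can only raise max_gcn.
        with self._lock:
            self.max_gcn = max(self.max_gcn, gcn)

    def single_shard_snapshot(self) -> int:
        # An autocommit single-shard read skips the GMS round trip
        # and reads at the DN's current max_gcn.
        with self._lock:
            return self.max_gcn


dn = DataNode()
dn.observe_gcn(100)  # e.g. the commit_gcn of an earlier write
dn.observe_gcn(90)   # an older snapshot_gcn does not lower max_gcn
snapshot_gcn = dn.single_shard_snapshot()
```

Because max_gcn never decreases, a single-shard read issued after a commit has advanced it will use a snapshot_gcn at least as large as that commit_gcn.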

After the commit optimization is introduced, however, visibility determination for single-shard reads becomes more complex. Under the new flow, a transaction's final commit_gcn may itself be derived from a DN's max_gcn, just like a single-shard read's snapshot_gcn. When a single-shard read's snapshot_gcn equals a write transaction's commit_gcn, the guarantee that "the single-shard read occurred after the commit" no longer necessarily holds. Consequently, we can no longer unconditionally treat equality as visibility. A single-shard read might first see the write as active and thus treat it as invisible, and later observe it as committed with the same commit_gcn and treat it as visible. The following diagram illustrates this scenario.

Can we simply treat cases where the snapshot_gcn equals the commit_gcn as invisible? No. Doing so would violate read-after-write consistency. After a write transaction commits (the client has received the acknowledgment), a subsequent single-shard read may still obtain a snapshot_gcn equal to the write's commit_gcn. In that case the read must be able to see the write's updates and cannot simply treat them as invisible. To solve this, after the commit optimization is introduced, when a single-shard read encounters a commit-optimized transaction and finds that the snapshot_gcn equals the commit_gcn, the read falls back to the standard distributed read path: it obtains a snapshot_gcn from the timestamp oracle (TSO) and then performs the read.

This also answers the question from the previous article: Why can a normal distributed read safely treat a commit-optimized transaction as invisible when the snapshot_gcn equals the commit_gcn? According to the TSO's GCN generation rules, that equality can only occur if the distributed read started before the write began committing, so the read precedes the commit and should indeed consider the write invisible.
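The equality handling described above can be condensed into a small decision function. This is a sketch under stated assumptions, not the actual DN logic: the names `Visibility` and `check_visibility` are hypothetical, the function only considers writes that already carry a commit_gcn, and the branch for transactions committed without the optimization (equality treated as visible) is inferred from the text rather than quoted from it.

```python
from enum import Enum


class Visibility(Enum):
    VISIBLE = "visible"
    INVISIBLE = "invisible"
    FALLBACK_TO_TSO = "fallback_to_tso"


def check_visibility(snapshot_gcn: int, commit_gcn: int,
                     commit_optimized: bool, single_shard_read: bool) -> Visibility:
    """Decide whether a committed write is visible to a read (illustrative only)."""
    if snapshot_gcn > commit_gcn:
        return Visibility.VISIBLE
    if snapshot_gcn < commit_gcn:
        return Visibility.INVISIBLE
    # From here on, snapshot_gcn == commit_gcn.
    if not commit_optimized:
        # Assumption: without the commit optimization, equality means visible.
        return Visibility.VISIBLE
    if single_shard_read:
        # The read cannot tell whether it ran before or after the commit,
        # so it retries with a snapshot_gcn obtained from the TSO.
        return Visibility.FALLBACK_TO_TSO
    # Distributed read: equality implies the read started before the commit began.
    return Visibility.INVISIBLE


decision = check_visibility(100, 100, commit_optimized=True, single_shard_read=True)
```

Only the equality case needs special treatment; strictly larger or smaller snapshot_gcn values are decided the same way for every kind of read.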

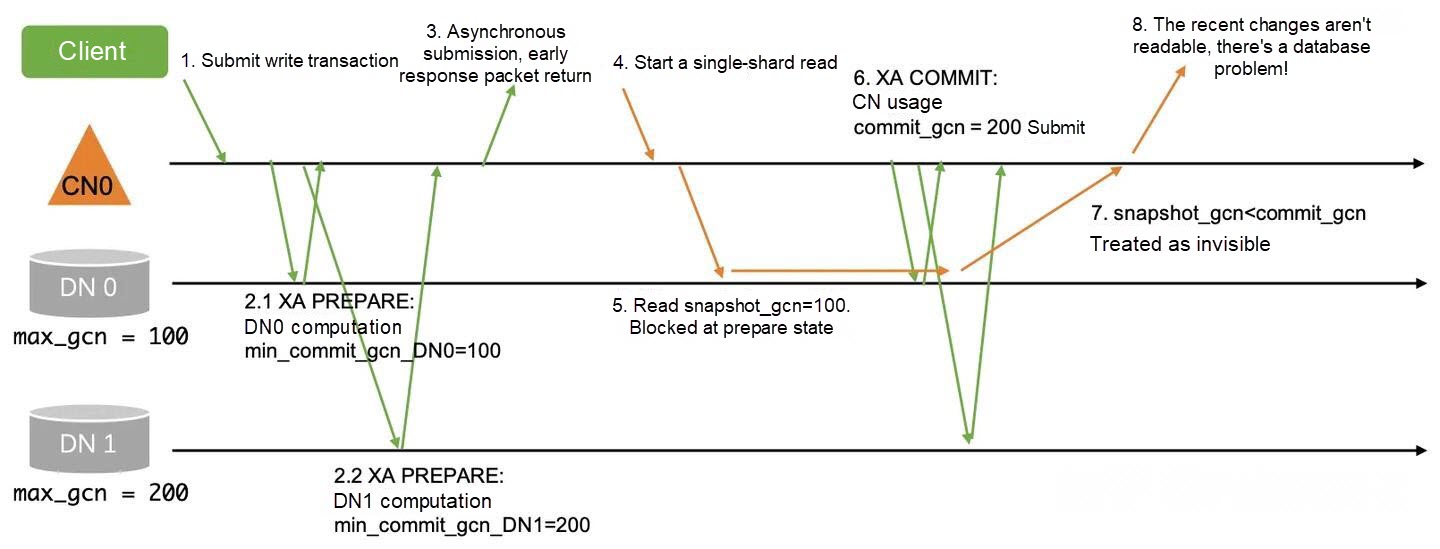

Now, if we were to make the final commit step asynchronous as well, what problem would arise? The answer is that it would break read-after-write consistency. The specific scenario is illustrated in the diagram below.

The situation arises because the final commit_gcn may be derived from max_gcn values on other DNs. The DN serving the single-shard read has no visibility into those remote max_gcn values, so it cannot guarantee that it will use a sufficiently large snapshot_gcn when issuing the read.

To address this, before returning the acknowledgment to the client, we propagate the finalized commit_gcn to each participating DN to advance its local max_gcn. This adds one network RTT but only updates an in-memory value on each DN, so it incurs no data persistence operation. In our implementation, the compute node (CN) tracks the highest max_gcn it has previously pushed to each DN. If a propagation would push a max_gcn that is less than or equal to the recorded value, the CN skips the propagation and returns the acknowledgment immediately. Additionally, if single-shard reads are rare for a workload, we provide an option to disable single-shard reads so that all reads follow the standard distributed read path. In that mode, the max_gcn propagation can be omitted, enabling transaction commit with just one RTT and one data persistence operation.
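The CN-side bookkeeping described above might look roughly like the sketch below. It is a simplification with hypothetical names (`ComputeNode`, `propagate_commit_gcn`, and the `push_max_gcn` callback that stands in for the network call), it decides per DN whether a push is needed, and it runs the pushes sequentially, whereas a real CN would presumably issue them in parallel so that they cost a single RTT.

```python
from typing import Callable, Dict, Iterable, List


class ComputeNode:
    """Toy CN that remembers the highest max_gcn it has pushed to each DN."""

    def __init__(self, push_max_gcn: Callable[[str, int], None]) -> None:
        self._push_max_gcn = push_max_gcn          # hypothetical RPC to a DN
        self._highest_pushed: Dict[str, int] = {}  # DN name -> highest pushed GCN

    def propagate_commit_gcn(self, participants: Iterable[str], commit_gcn: int) -> List[str]:
        """Push commit_gcn to participants; return the DNs actually contacted."""
        contacted = []
        for dn in participants:
            if commit_gcn <= self._highest_pushed.get(dn, 0):
                continue  # this DN's max_gcn is already at least commit_gcn
            self._push_max_gcn(dn, commit_gcn)
            self._highest_pushed[dn] = commit_gcn
            contacted.append(dn)
        return contacted


dn_max_gcn = {"dn1": 0, "dn2": 0}


def push(dn: str, gcn: int) -> None:
    # In-memory update only; no persistence is involved.
    dn_max_gcn[dn] = max(dn_max_gcn[dn], gcn)


cn = ComputeNode(push)
first = cn.propagate_commit_gcn(["dn1", "dn2"], 100)  # both DNs are contacted
second = cn.propagate_commit_gcn(["dn1"], 95)         # skipped: 95 <= 100
```

When every participant can be skipped, the acknowledgment can be returned without the extra RTT.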

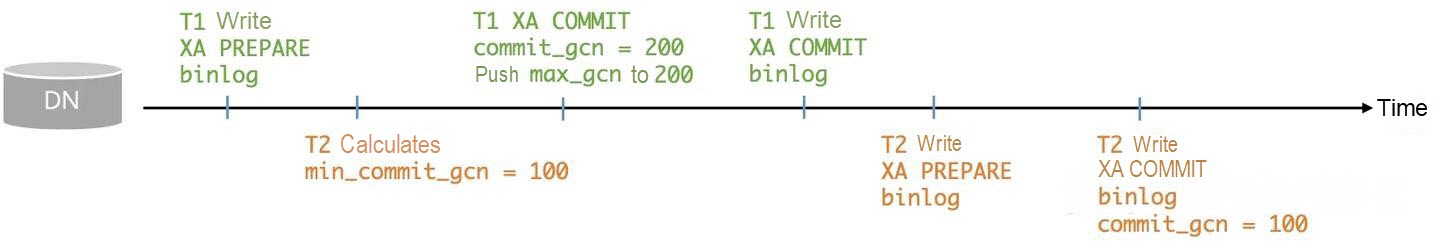

Since we have modified how the commit_gcn is generated, we must also ensure compatibility with the Change Data Capture (CDC) component. The CDC relies on the following property to correctly merge and order distributed transactions:

• For two branch transactions T1 and T2 on the same DN, if the physical binlog (the DN's local binlog) records them in the order P1 C1 P2 C2 (where P represents an XA PREPARE event and C represents an XA COMMIT event), then it must hold that T1's commit_gcn ≤ T2's commit_gcn.

Before the commit optimization, this property naturally held because the GCNs assigned by the TSO were strictly monotonically increasing. Since the commit_gcn was obtained between XA PREPARE and XA COMMIT, T1's commit_gcn was always smaller than T2's commit_gcn.

However, after the commit optimization is introduced, the prepare_gcn is obtained before the XA PREPARE phase, so the TSO's ordering alone can no longer guarantee the property above. Fortunately, we can still rely on the max_gcn. In the initial implementation, the computation of min_commit_gcn = max(prepare_gcn, max_gcn) and the writing of the XA PREPARE binlog were two separate operations that were not performed atomically. In concurrent commit scenarios, this could lead to the problem shown in the following diagram.

As a result, the DN's physical binlog could show the sequence P1 C1 P2 C2 even though T1's commit_gcn is actually greater than T2's, which violates the property that CDC relies on.

Binlog persistence is a serial operation: all PREPARE/COMMIT transactions enter a global queue and are written to disk in batches, so the physical binlog order follows the queue order. To fix the issue, we moved the computation of min_commit_gcn to the moment a transaction enters the global queue. At that point, the min_commit_gcn computed for any PREPARE transaction cannot be smaller than the commit_gcn of any COMMIT transaction that has already been persisted to disk or has already entered the queue, because those earlier COMMIT transactions will have used their commit_gcn to advance the DN's max_gcn.
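The fix can be illustrated with a toy queue in which min_commit_gcn is computed under the same lock that fixes a transaction's position in the queue. This is a sketch under assumptions, not the DN's binlog code: `BinlogQueue` and its methods are hypothetical names, the sketch advances max_gcn at the moment a COMMIT is enqueued, and it assumes a branch's final commit_gcn is never smaller than its min_commit_gcn.

```python
import threading
from typing import List, Tuple


class BinlogQueue:
    """Toy global queue that fixes the physical binlog order on one DN."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.max_gcn = 0
        self.events: List[Tuple[str, str, int]] = []  # (kind, txn, gcn)

    def enqueue_prepare(self, txn: str, prepare_gcn: int) -> int:
        # Compute min_commit_gcn atomically with taking a queue position.
        with self._lock:
            min_commit_gcn = max(prepare_gcn, self.max_gcn)
            self.events.append(("P", txn, min_commit_gcn))
            return min_commit_gcn

    def enqueue_commit(self, txn: str, commit_gcn: int) -> None:
        # A queued COMMIT raises max_gcn before any later PREPARE is queued.
        with self._lock:
            self.max_gcn = max(self.max_gcn, commit_gcn)
            self.events.append(("C", txn, commit_gcn))


q = BinlogQueue()
t1_min = q.enqueue_prepare("T1", prepare_gcn=100)
q.enqueue_commit("T1", commit_gcn=t1_min)
# T2 obtained its prepare_gcn earlier (90) but enters the queue after C1.
t2_min = q.enqueue_prepare("T2", prepare_gcn=90)
q.enqueue_commit("T2", commit_gcn=t2_min)
```

In this run the queue order is P1 C1 P2 C2, and T2's min_commit_gcn is lifted to T1's commit_gcn, so T1's commit_gcn ≤ T2's commit_gcn holds.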

Finally, after resolving the single-shard read optimization and ensuring compatibility with CDC ordering, the results of the transaction commit optimization are shown in the diagram below.

In the default scenario, a transaction commit still requires three RTTs and one data persistence operation. However, the first RTT for obtaining the prepare_gcn and the third RTT for pushing the max_gcn are both lightweight operations with minimal latency. In cases where strict external consistency is not required, and with the single-shard read optimization disabled, a distributed transaction commit can even be completed with just one RTT and one data persistence operation.

We conducted performance tests of the commit optimization using sysbench, focusing on two scenarios: a write-only scenario and an update_index scenario with a global secondary index (GSI).

• sysbench write only

| Number of threads | Original commit TPS | Original commit RT (ms) | Optimized commit TPS | Optimized commit RT (ms) | TPS improvement |
|---|---|---|---|---|---|
| 1 | 104.69 | 9.55 | 129.32 | 7.73 | 23.53% |
| 50 | 5076.35 | 9.85 | 6293.05 | 7.94 | 23.97% |
| 100 | 8787.60 | 11.38 | 10918.16 | 9.16 | 24.25% |
| 200 | 13896.21 | 14.39 | 17212.93 | 11.62 | 23.87% |
| 400 | 16185.88 | 24.71 | 19754.65 | 20.25 | 22.05% |

• sysbench update GSI

We created a GSI on column k. In this scenario, each update modifies the partition key, resulting in a distributed transaction.

| Number of threads | Original commit TPS | Original commit RT (ms) | Optimized commit TPS | Optimized commit RT (ms) | TPS improvement |
|---|---|---|---|---|---|
| 1 | 119.22 | 8.39 | 160.06 | 6.25 | 34.26% |
| 50 | 5723.69 | 8.74 | 7397.83 | 6.76 | 29.25% |
| 100 | 9682.37 | 10.33 | 12342.79 | 8.10 | 27.48% |
| 200 | 14640.35 | 13.66 | 18127.87 | 11.03 | 23.82% |
| 400 | 16180.69 | 24.72 | 19629.86 | 20.38 | 21.32% |
| 1000 | 17617.62 | 56.75 | 21005.05 | 47.60 | 19.23% |

In both scenarios, the commit optimization improves TPS by roughly 19% to 34% and reduces response time (RT) as well. The gains tend to be more pronounced when concurrency is relatively low, meaning database resources are not fully utilized, as is typical in real production environments.
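The last column of the tables appears to be the relative TPS gain, that is, (optimized TPS - original TPS) / original TPS. This reading is inferred from the numbers rather than stated explicitly, and the helper name `tps_improvement` below is only illustrative.

```python
def tps_improvement(original_tps: float, optimized_tps: float) -> float:
    """Relative TPS gain in percent."""
    return (optimized_tps / original_tps - 1.0) * 100.0


# Inputs taken from the tables above.
write_only_1_thread = tps_improvement(104.69, 129.32)
gsi_1000_threads = tps_improvement(17617.62, 21005.05)
```

Rounded to two decimal places, these two values agree with the 23.53% and 19.23% reported in the corresponding rows.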
