By Hongchao Deng, Cloud Native Application Team, Alibaba

This is an excerpted transcript of a talk that Hongchao Deng gave at DeveloperWeek 2020 in San Francisco.

Nowadays, Alibaba runs Kubernetes (abbr. k8s) at cloud scale. Alibaba not only manages tens of thousands of k8s clusters on the cloud, but also runs clusters of tens of thousands of nodes to support its e-commerce business operations.

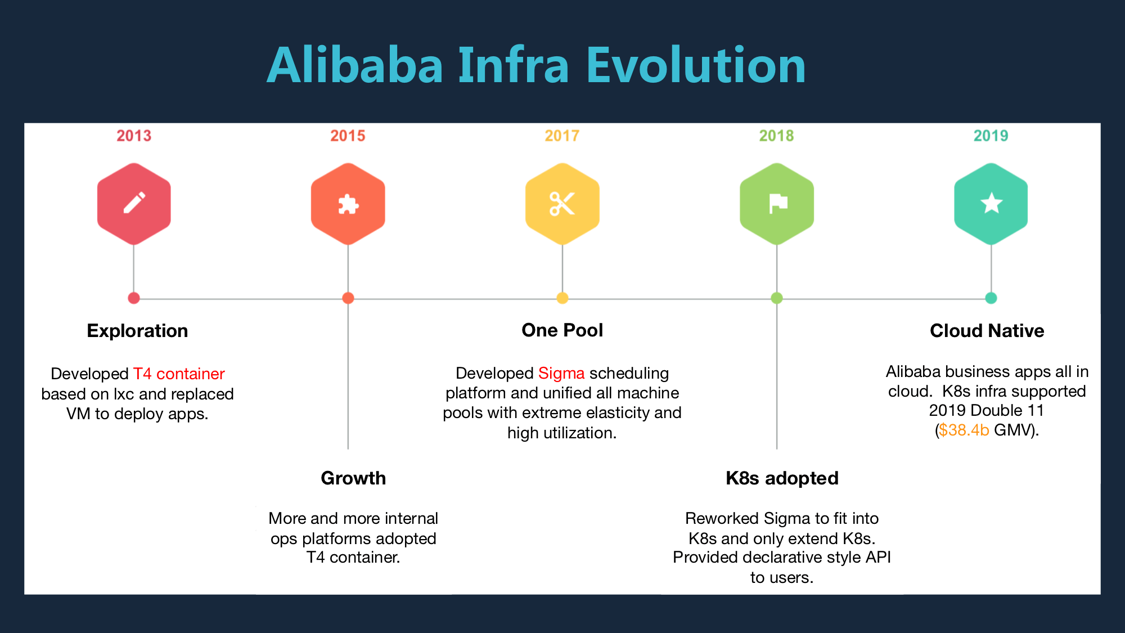

Our containerization journey began in 2013. Before Docker was born, there was T4, a container engine based on LXC and developed at Alibaba to deploy colocated apps on bare metal machines. In 2017, Sigma, a container orchestration engine similar to k8s, was developed as a unified layer to manage internal pools of machines with an average utilization of 40%. In 2018, Sigma was reworked and migrated to be k8s compatible. Our engineers wrote custom controllers and scheduling algorithms, and exposed a declarative-style API to users. By the end of 2019, most applications at Alibaba were running on k8s, and dozens of frameworks had been built on top of the k8s ecosystem. The 2019 Double 11 event was not only a business success but also technical proof that k8s can support infrastructure at such a scale.

After we resolved the scalability and stability issues in k8s, we faced another big challenge: the k8s API was too complicated and hard for developers to learn. Why? Let me explain the three major reasons.

First, the k8s resource model is not application centric. There is no "application" concept in k8s, only infra-level resources. Deploying an app requires writing infra resources such as Pods, networking, and storage, and these resources are loosely coupled because nothing ties them together as one application. Developers don't want to specify such low-level details; they want to specify a high-level application spec, such as a stateless service component with auto-scaling, monitoring, VPC, etc. We need to provide a high-level application spec and an application-centric resource model to bridge the gap between deploying applications and configuring infra.

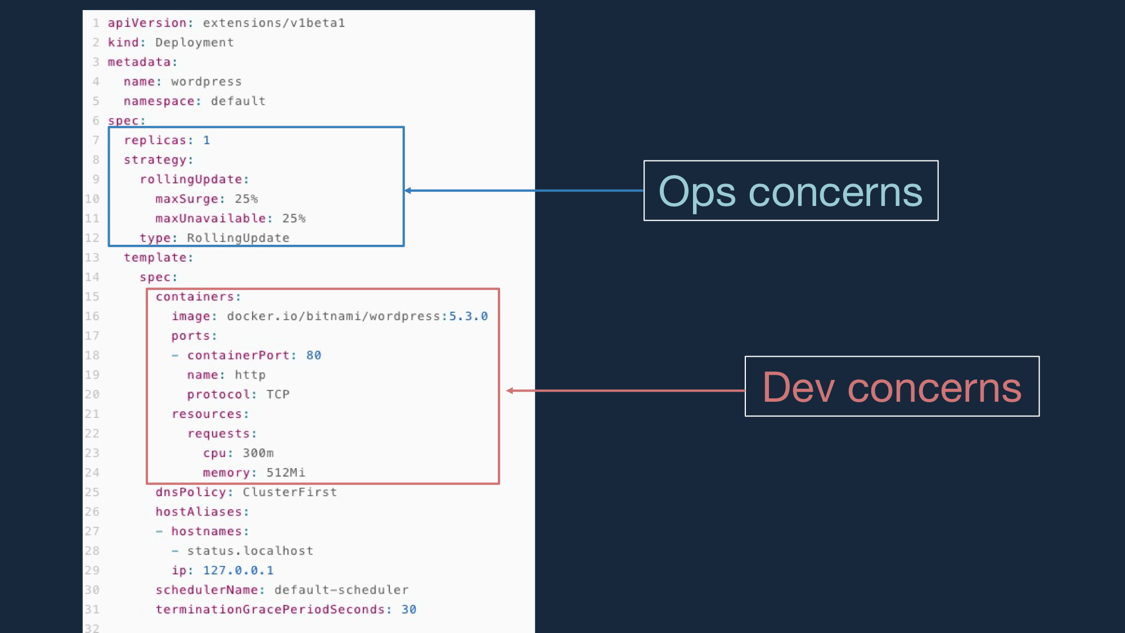

Second, there is no separation of concerns in the k8s API. From the picture above, we can tell that k8s packs fields that belong to different roles into one API. For example, developers would only specify the container image, ports, and health checks, while operators are responsible for specifying replica size, rolling update strategy, volume access mode, etc. There is nothing wrong with the k8s API. K8s is designed to expose infrastructure capabilities and to be used for building other platforms, so it needs to include everything and provide all-in-one APIs. But we found that all-in-one APIs are not suitable for end-user applications. On top of the k8s API, we need to decouple roles and separate the concerns of developers and operators.
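To make the role split concrete, here is a minimal sketch in Python. It assumes a flat, hypothetical spec whose field names are chosen for illustration only (they are not the real k8s schema), and it assigns each field to the role named in the paragraph above.

```python
# Hypothetical all-in-one spec. Field names are illustrative assumptions,
# not the actual Kubernetes Deployment schema.
ALL_IN_ONE = {
    "image": "example/web:1.0",
    "ports": [8080],
    "health_check": "/healthz",
    "replicas": 3,
    "rolling_update": {"max_unavailable": 1},
    "volume_access_mode": "ReadWriteOnce",
}

# Ownership follows the example in the text: developers own image, ports,
# and health checks; operators own replica size, rollout, and volume mode.
DEVELOPER_FIELDS = {"image", "ports", "health_check"}
OPERATOR_FIELDS = {"replicas", "rolling_update", "volume_access_mode"}


def split_by_role(spec):
    """Split one flat spec into a developer view and an operator view."""
    unknown = set(spec) - DEVELOPER_FIELDS - OPERATOR_FIELDS
    if unknown:
        # Refuse to guess who owns a field that neither role has claimed.
        raise ValueError(f"fields with no owner: {sorted(unknown)}")
    dev = {k: v for k, v in spec.items() if k in DEVELOPER_FIELDS}
    ops = {k: v for k, v in spec.items() if k in OPERATOR_FIELDS}
    return dev, ops


dev_view, ops_view = split_by_role(ALL_IN_ONE)
```

With the spec split this way, each role edits only its own view, which is the kind of decoupling the all-in-one API does not offer by itself.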

Third, there is no portable application abstraction on k8s. K8s defines a standard way to consume infra resources. But as mentioned above, deploying an app also needs ingress, monitoring, etc. We need to standardize those app-operation interfaces and have a portable application abstraction across platforms.

This brings us to what I will introduce today: the Open Application Model (OAM). It is a standard specification that defines a modular, extensible, and portable application model for building and delivering cloud native applications. Let's take a deeper look at OAM and see how it solves the problems above.

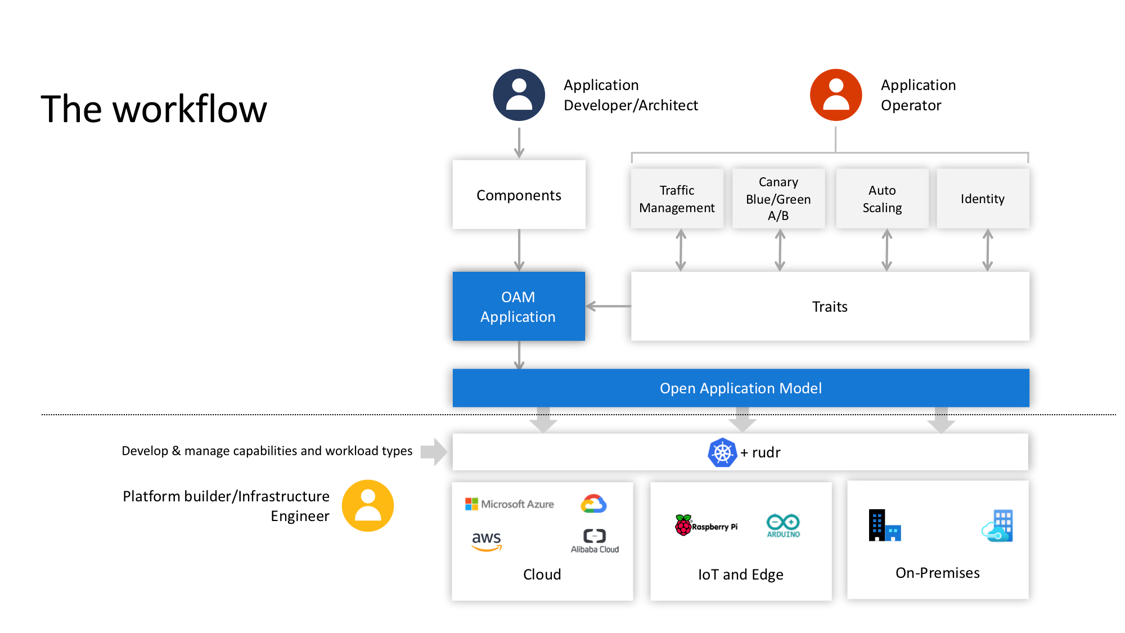

From the picture above, we can see that developers define Components to describe service units. Operators then define operational Traits and attach them to Components in an ApplicationConfiguration, which is the final OAM deliverable. The OAM platform then renders the underlying infra resources. OAM hides low-level details, such as whether the infra is cloud or IoT, and standardizes the deployment model from an application-centric point of view.
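The flow described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the OAM spec or any real implementation: the class fields, the trait kinds `manual-scaler` and `ingress`, and the `render` function are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    """Developer-owned description of a service unit (hypothetical fields)."""
    name: str
    image: str
    port: int


@dataclass
class Trait:
    """Operator-owned operational behavior attached to a component."""
    kind: str
    properties: dict


@dataclass
class ApplicationConfiguration:
    """Binds components to traits; this is the unit that gets deployed."""
    name: str
    bindings: list = field(default_factory=list)

    def attach(self, component, *traits):
        self.bindings.append((component, list(traits)))


def render(app_config):
    """Turn an ApplicationConfiguration into plain infra resource dicts."""
    resources = []
    for component, traits in app_config.bindings:
        workload = {"kind": "Workload", "name": component.name,
                    "image": component.image, "port": component.port,
                    "replicas": 1}
        extras = []
        for trait in traits:
            if trait.kind == "manual-scaler":
                # A scaling trait modifies the workload itself.
                workload["replicas"] = trait.properties["replicas"]
            elif trait.kind == "ingress":
                # An ingress trait produces an additional resource.
                extras.append({"kind": "Ingress",
                               "name": f"{component.name}-ingress",
                               "host": trait.properties["host"],
                               "target_port": component.port})
            else:
                raise ValueError(f"unsupported trait kind: {trait.kind}")
        resources.append(workload)
        resources.extend(extras)
    return resources


web = Component(name="web", image="example/web:1.0", port=8080)
app = ApplicationConfiguration(name="shop")
app.attach(web,
           Trait("manual-scaler", {"replicas": 3}),
           Trait("ingress", {"host": "shop.example.com"}))
rendered = render(app)
```

The developer only writes the `Component`; the operator only writes the `Trait` objects and the binding. Neither touches the rendered resources directly.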

OAM defines a core containing a set of Workload/Trait/Scope types as the cornerstone of an application delivery platform. They are the minimum set of functionality needed to build deployment pipelines. An open source implementation called Rudr implements the core spec.

Additionally, Rudr provides mechanisms that let users extend its functionality. For example, the Rudr core provides a Server Workload to run applications in containers and manage the application lifecycle. I can add more Workloads, such as FaaS to run serverless functions, or Traits, such as CronHPA to define CronJob-style HPA policies. OAM manages those capabilities and their relationships in a single platform in a standard declarative manner.
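One common way to support this kind of extension is a registry that maps a trait kind to a handler. The Python sketch below illustrates that general pattern only; it is not how Rudr implements extensions, and `register_trait`, `apply_traits`, and the `cron-hpa` property names are hypothetical.

```python
# Registry of trait handlers, keyed by trait kind (hypothetical design).
TRAIT_HANDLERS = {}


def register_trait(kind):
    """Decorator that registers a handler for a new trait kind."""
    def decorator(fn):
        if kind in TRAIT_HANDLERS:
            raise ValueError(f"trait kind already registered: {kind}")
        TRAIT_HANDLERS[kind] = fn
        return fn
    return decorator


@register_trait("manual-scaler")
def apply_manual_scaler(workload, props):
    # Stand-in for a core trait: set a fixed replica count.
    return {**workload, "replicas": props["replicas"]}


@register_trait("cron-hpa")
def apply_cron_hpa(workload, props):
    # Stand-in for an extension trait: attach a cron-style scaling schedule.
    return {**workload, "scaling_schedule": list(props["schedule"])}


def apply_traits(workload, traits):
    """Apply (kind, properties) pairs in order, returning a new workload."""
    for kind, props in traits:
        handler = TRAIT_HANDLERS.get(kind)
        if handler is None:
            raise KeyError(f"no handler registered for trait kind: {kind}")
        workload = handler(workload, props)
    return workload


base = {"name": "web", "replicas": 1}
result = apply_traits(base, [
    ("manual-scaler", {"replicas": 2}),
    ("cron-hpa", {"schedule": [{"cron": "0 9 * * *", "replicas": 10}]}),
])
```

Adding a new capability means registering one more handler; the code that applies traits does not change.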

So far, we have discussed the motivation and architecture of OAM. It is worth noting that the OAM project is vendor neutral and community driven. The spec and implementation are supported by an open community that includes Alibaba, Microsoft, and Upbound. More people are welcome to get involved and build the future of application delivery together. You can:

Contribute to repos:

Join the mailing list: https://groups.google.com/forum/#!forum/oam-dev

Join the community call: Bi-weekly, Tuesdays 10:30AM PST
