ACID transactions are a crucial feature of relational databases and pose one of the biggest challenges for NewSQL databases. In the PolarDB-X architecture, the data node (DN) synchronizes logs through Paxos to ensure transaction durability, while atomicity, consistency, and isolation are ensured through appropriate transaction strategies. Additionally, in a distributed environment where data is distributed across different nodes, linearizability is also an important characteristic of the transaction strategy.

Currently, most distributed databases use a strategy based on two-phase commit (2PC), such as the Percolator algorithm or the XA protocol.

Percolator is a distributed system that Google built on BigTable. Its key design is the Percolator algorithm, which supports distributed transactions. In this algorithm, the client is the most critical component: it caches updates locally and submits them to the server through 2PC at commit time. A major advantage is that the main state is managed by the client, so the server only needs to support a simple compare-and-swap (CAS) operation and does not need to maintain transaction state or introduce an additional transaction manager.

However, Percolator has known drawbacks: higher latency during the commit phase, support for optimistic locking only, and conflict errors that are reported only at commit time.

The XA protocol, formally the X/Open XA protocol, is a generic standard for transaction interfaces. It is also based on two-phase commit.

PolarDB-X uses a distributed transaction strategy based on the XA protocol, which can be further divided into multiple implementations:

• BestEffort transactions for MySQL 5.6

• XA transactions based on InnoDB

• TSO transactions implemented by PolarDB-X 2.0

This article focuses on XA transactions and TSO transactions.

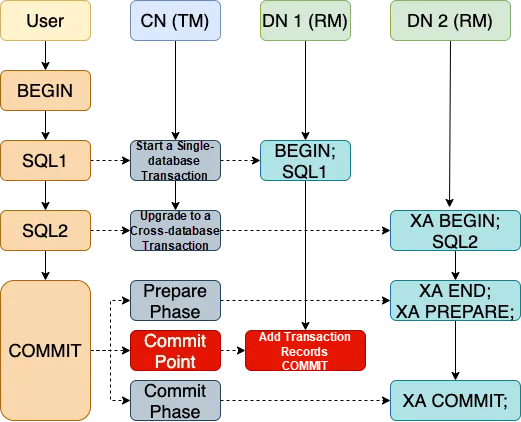

There are two roles designed in the XA protocol:

• Transaction Manager (TM): initiates transaction commits and handles exceptions when transactions fail. In PolarDB-X, this role is performed by the compute node (CN).

• Resource Manager (RM): a participant in the transaction, such as a MySQL database. In PolarDB-X, this role is performed by the data node (DN).

The XA protocol completes the two-phase commit through the XA PREPARE and XA COMMIT commands that the TM sends to multiple RMs. Common criticisms of two-phase commit are that the TM is a single point of failure and that data may become inconsistent if an exception occurs during the commit phase. To address these issues, PolarDB-X introduces a transaction log table and the concept of a COMMIT POINT. After confirming that all participating nodes have prepared successfully, we add a transaction commit record to the global transaction log; this record is the COMMIT POINT. If the TM fails, a new TM can be selected to continue the two-phase commit. The new TM either resumes the commit or rolls back the transaction, depending on whether a COMMIT POINT record exists in the primary database.

• If the COMMIT POINT does not exist, no RM will enter the commit phase. In this case, you can safely roll back all RMs.

• If the COMMIT POINT exists, all RMs have completed the prepare phase. In this case, you can proceed with the commit phase.
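The recovery decision described in the two cases above can be sketched as follows. This is a minimal in-memory illustration, not the PolarDB-X implementation: `ResourceManager`, `recover`, and the `commit_log` set standing in for the global transaction log are all hypothetical names.

```python
from dataclasses import dataclass, field
from enum import Enum


class State(Enum):
    PREPARED = "prepared"
    COMMITTED = "committed"
    ROLLED_BACK = "rolled_back"


@dataclass
class ResourceManager:
    """Hypothetical stand-in for a data node holding one XA branch per xid."""
    name: str
    branches: dict = field(default_factory=dict)  # xid -> State

    def prepare(self, xid):
        self.branches[xid] = State.PREPARED

    def commit(self, xid):
        # Idempotent: committing an already committed branch changes nothing.
        if self.branches.get(xid) in (State.PREPARED, State.COMMITTED):
            self.branches[xid] = State.COMMITTED

    def rollback(self, xid):
        if self.branches.get(xid) == State.PREPARED:
            self.branches[xid] = State.ROLLED_BACK


def recover(xid, commit_log, rms):
    """Decision a replacement TM makes for an in-doubt transaction."""
    if xid in commit_log:
        # COMMIT POINT exists: every RM prepared, so finish the commit.
        for rm in rms:
            rm.commit(xid)
        return "committed"
    # No COMMIT POINT: no RM entered the commit phase, so roll back.
    for rm in rms:
        rm.rollback(xid)
    return "rolled_back"


rms = [ResourceManager("dn1"), ResourceManager("dn2")]
commit_log = set()  # hypothetical stand-in for the global transaction log

# tx1: COMMIT POINT written, TM crashed after committing only dn1.
for rm in rms:
    rm.prepare("tx1")
commit_log.add("tx1")
rms[0].commit("tx1")

# tx2: all branches prepared, TM crashed before writing the COMMIT POINT.
for rm in rms:
    rm.prepare("tx2")

outcome_tx1 = recover("tx1", commit_log, rms)
outcome_tx2 = recover("tx2", commit_log, rms)
```

Because the decision depends only on the durable COMMIT POINT record, any node that takes over as TM reaches the same outcome for the same transaction.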

XA transactions have certain limitations in terms of concurrency. During execution, we must use a transaction strategy similar to Spanner's locking read-write transactions: every SELECT specifies LOCK IN SHARE MODE, so reads and writes block each other. Lock-based distributed transactions perform relatively poorly when concurrent requests conflict on a single record. The common solution in the database industry is multi-version concurrency control (MVCC). Although InnoDB on each data node supports MVCC-based snapshot reads, XA transactions alone cannot provide an efficient snapshot read strategy, because different data nodes execute XA COMMIT at different times and a snapshot read reaches different shards at different times. As a result, a cross-DN snapshot read may see only part of a transaction's committed data and fail to obtain a consistent global snapshot.

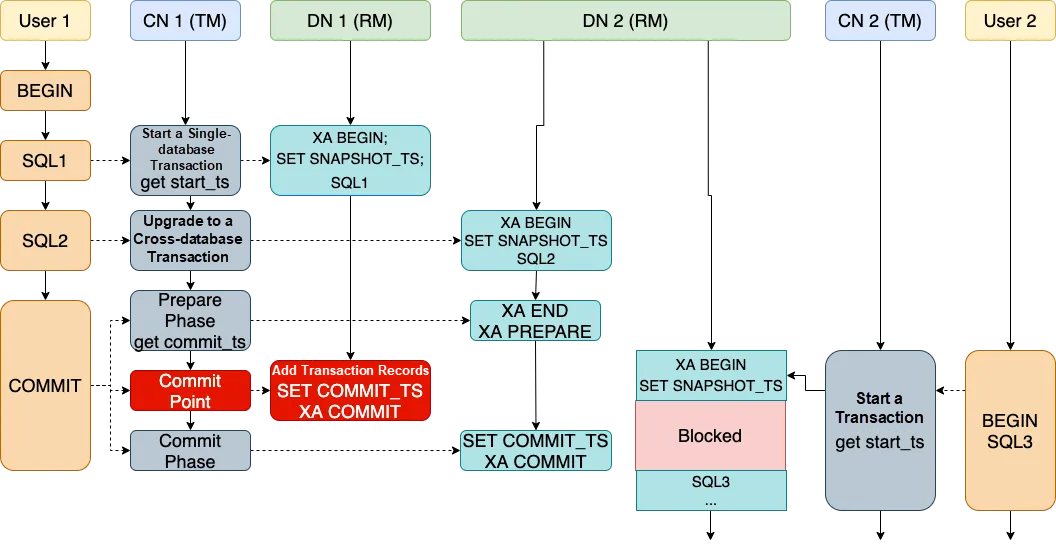
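The anomaly can be reproduced with a toy timeline. The sketch below is purely illustrative: the two dictionaries stand in for the committed state of two data nodes, and the helper names are hypothetical.

```python
# Committed balances as each data node would expose them to a local snapshot read.
shards = {"dn1": {"account_0": 100}, "dn2": {"account_1": 100}}


def xa_commit_debit(amount):
    """XA COMMIT of the debit branch arrives at dn1."""
    shards["dn1"]["account_0"] -= amount


def xa_commit_credit(amount):
    """XA COMMIT of the credit branch arrives at dn2."""
    shards["dn2"]["account_1"] += amount


def local_snapshot_read(dn, key):
    """Each node answers from its own committed state, with no global timestamp."""
    return shards[dn][key]


# Timeline: both branches are prepared, then XA COMMIT reaches dn1 before dn2.
xa_commit_debit(10)                                # t1: dn1 commits
seen_0 = local_snapshot_read("dn1", "account_0")   # t2: reader visits dn1
seen_1 = local_snapshot_read("dn2", "account_1")   # t2: reader visits dn2
xa_commit_credit(10)                               # t3: dn2 commits

observed_total = seen_0 + seen_1
final_total = (local_snapshot_read("dn1", "account_0")
               + local_snapshot_read("dn2", "account_1"))
```

The reader observes a total of 190 even though the total is 200 both before and after the transfer, which is exactly the partial view that a global snapshot is meant to rule out.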

The core reason why XA transactions cannot provide snapshot reads is the lack of a global timestamp to order transactions: without one, transactions and read views on different data nodes may be ordered differently. Therefore, starting with PolarDB-X 2.0, we have introduced TSO transactions as an optimization built on XA transactions. TSO transactions require a strategy that generates globally monotonically increasing timestamps. Common strategies include TrueTime (Google Spanner), HLC (CockroachDB), and TSO (TiDB). Our current implementation uses TSO, with GMS (Global Meta Service) acting as a highly available single-point service that generates timestamps. TSO guarantees linearizability and performs well, but it may introduce relatively high latency when the deployment spans data centers around the globe.
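To make the idea of a timestamp oracle concrete, here is a minimal single-process sketch. It is not the GMS implementation: the class name, the injectable clock, and the layout that packs physical milliseconds above 18 logical bits are assumptions borrowed from common TSO designs.

```python
import threading
import time


class TimestampOracle:
    """Hypothetical single-process oracle handing out strictly increasing timestamps."""

    LOGICAL_BITS = 18  # assumption: low bits reserved for a logical counter

    def __init__(self, clock=time.time):
        self._clock = clock
        self._lock = threading.Lock()
        self._last = 0

    def next_timestamp(self):
        with self._lock:
            physical = int(self._clock() * 1000) << self.LOGICAL_BITS
            # Never go backwards, even if the wall clock does or if many
            # requests arrive within the same millisecond.
            self._last = max(physical, self._last + 1)
            return self._last


now = [1000.0]  # controllable clock for the demonstration
tso = TimestampOracle(clock=lambda: now[0])
first = tso.next_timestamp()
second = tso.next_timestamp()   # same millisecond: logical part advances
now[0] = 999.0                  # simulated clock regression
third = tso.next_timestamp()    # still larger than everything issued before
```

A real service also has to persist its high-water mark and fail over without reissuing timestamps; the sketch leaves that out.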

The native InnoDB engine does not support TSO transactions. Therefore, we modify its commit logic and visibility judgment logic and set two custom variables, SNAPSHOT_TS and COMMIT_TS, before XA BEGIN and XA COMMIT respectively.

• SNAPSHOT_TS is used to determine whether the committed data from other transactions is visible to the current transaction. It unifies the time at which reads occur within a distributed transaction across each shard. SNAPSHOT_TS determines the snapshot of the current transaction.

• COMMIT_TS unifies the time at which a distributed transaction commits data across each shard and records it in the InnoDB engine. COMMIT_TS determines the position of the current transaction in the global transaction order.

In our customized InnoDB, we determine visibility based on the SNAPSHOT_TS of the reading transaction. In addition, a read request from a new transaction waits if it encounters data in the prepare state. This avoids the case where a transaction in the prepare state ends up with a COMMIT_TS smaller than the reader's SNAPSHOT_TS, which would make the reader see different data before and after that commit.
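The visibility rule and the wait on prepared data can be sketched as below. This is a simplified model under stated assumptions, not the modified InnoDB code: `Version`, `read`, the newest-first version chain, and the `<=` comparison are all illustrative choices.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Version:
    value: str
    commit_ts: Optional[int]  # None until the writing transaction commits
    prepared: bool = False    # True between XA PREPARE and XA COMMIT


def read(versions, snapshot_ts):
    """Scan a newest-first version chain for a reader with the given SNAPSHOT_TS.

    Returns ("value", v), ("wait", None), or ("none", None).
    """
    for version in versions:
        if version.commit_ts is None:
            if version.prepared:
                # Its eventual COMMIT_TS may turn out to be below snapshot_ts,
                # so answering now could contradict a later read.
                return ("wait", None)
            continue  # not yet prepared: it will commit after this snapshot
        if version.commit_ts <= snapshot_ts:
            return ("value", version.value)
    return ("none", None)


chain = [Version("v3", None, prepared=True), Version("v2", 20), Version("v1", 10)]
while_prepared = read(chain, snapshot_ts=25)

# Outcome A: the prepared transaction commits with COMMIT_TS 22 (< 25).
chain_a = [Version("v3", 22), Version("v2", 20), Version("v1", 10)]
after_commit_a = read(chain_a, snapshot_ts=25)

# Outcome B: it commits with COMMIT_TS 30 (> 25), so the reader falls back to v2.
chain_b = [Version("v3", 30), Version("v2", 20), Version("v1", 10)]
after_commit_b = read(chain_b, snapshot_ts=25)
```

Had the reader skipped the prepared version and returned v2 immediately, outcome A would later expose v3 to the same snapshot, which is the inconsistency the wait prevents.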

Based on TSO transactions, we have also provided a series of new features and optimizations:

Because TSO provides a global snapshot capability, we can forward any part of a query to any backup node of any shard for reading without breaking transaction semantics. This feature is essential to the hybrid executor for HTAP: TP/AP resource separation and the MPP execution framework both rely on it to ensure correctness.

If we find during the commit phase that the transaction involves only one shard, we optimize it into a one-phase commit and use the XA COMMIT ONE PHASE statement to commit the transaction. Normal TSO transactions use both the SNAPSHOT_TS and COMMIT_TS timestamps. A one-phase commit transaction, however, behaves like a standalone transaction, so we do not need to obtain COMMIT_TS from TSO. Instead, InnoDB can directly calculate an appropriate COMMIT_TS to commit the transaction. The calculation rule is COMMIT_TS = MAX_SEQUENCE + 1, where MAX_SEQUENCE is the largest SNAPSHOT_TS that the local InnoDB instance has ever recorded.
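A small sketch of the rule COMMIT_TS = MAX_SEQUENCE + 1 follows. The `LocalSequence` class is a hypothetical helper, and how MAX_SEQUENCE evolves after such a commit is not described here, so the sketch only computes the value.

```python
class LocalSequence:
    """Hypothetical per-node tracker of the largest SNAPSHOT_TS seen so far."""

    def __init__(self):
        self.max_sequence = 0

    def observe_snapshot(self, snapshot_ts):
        # Called whenever a transaction with this SNAPSHOT_TS touches the node.
        self.max_sequence = max(self.max_sequence, snapshot_ts)

    def one_phase_commit_ts(self):
        # COMMIT_TS = MAX_SEQUENCE + 1, computed locally without a TSO request.
        return self.max_sequence + 1


node = LocalSequence()
seen_snapshots = [100, 140, 120]
for ts in seen_snapshots:
    node.observe_snapshot(ts)

commit_ts = node.one_phase_commit_ts()
# Under the usual MVCC rule (visible if COMMIT_TS <= SNAPSHOT_TS), none of the
# snapshots this node has already served can see the new commit.
visible_to_old_readers = [ts for ts in seen_snapshots if commit_ts <= ts]
```

The point of the rule is that the locally chosen timestamp is larger than every snapshot the node has already served, so no existing reader has its view changed by the commit.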

If a transaction is started with START TRANSACTION READ ONLY, we mark it as a read-only transaction. We then fetch the required data through multiple single-statement autocommit reads, which avoids the overhead of holding connections and transactions for a long time. With TSO, using the same timestamp is enough to guarantee consistent reads. Therefore, the PolarDB-X proprietary protocol supports embedding a SNAPSHOT_TS in each statement, which ensures that the individual statements within the same transaction read the same data.

In general, users rarely start transactions with START TRANSACTION READ ONLY. Therefore, for regular transactions, we delay the start of the XA transaction on each connection. Every connection starts in read-only form by default, and the normal XA transaction is not started until the first write request or FOR UPDATE read request occurs.
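The delayed start can be pictured with the sketch below. It is only an illustration: `ShardConnection`, the tuple format of `sent`, and the keyword-based statement classification are hypothetical, and the real system carries SNAPSHOT_TS through its proprietary protocol rather than through tuples like these.

```python
class ShardConnection:
    """Hypothetical per-shard connection that starts XA only when needed."""

    WRITE_KEYWORDS = ("INSERT", "UPDATE", "DELETE", "REPLACE")

    def __init__(self, shard):
        self.shard = shard
        self.in_xa = False
        self.sent = []  # what would be sent to the data node, in order

    @classmethod
    def needs_xa(cls, sql):
        # Crude classification, good enough for the illustration only.
        stmt = sql.strip().rstrip(";").upper()
        return stmt.startswith(cls.WRITE_KEYWORDS) or stmt.endswith("FOR UPDATE")

    def execute(self, sql, snapshot_ts, xid):
        if not self.in_xa and self.needs_xa(sql):
            # First write or locking read: upgrade to a real XA branch.
            self.sent.append(("xa_begin", xid))
            self.in_xa = True
        if self.in_xa:
            self.sent.append(("in_xa", sql))
        else:
            # Read-only so far: autocommit read tagged with SNAPSHOT_TS.
            self.sent.append(("snapshot_read", snapshot_ts, sql))


conn = ShardConnection("shard_0")
conn.execute("SELECT balance FROM accounts WHERE id = 0", snapshot_ts=100, xid="tx1")
started_after_read = conn.in_xa
conn.execute("UPDATE accounts SET balance = balance - 1 WHERE id = 0",
             snapshot_ts=100, xid="tx1")
started_after_write = conn.in_xa
```

A connection that only ever serves snapshot reads never opens an XA branch, so it does not need to take part in PREPARE or COMMIT.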

Besides read-only transactions, this optimization also benefits transactions that read from multiple shards but write to a single shard. With this scheme, such a transaction becomes a one-phase commit transaction, which yields a 14% performance improvement in the TPC-C test result.

In the read-only connection optimization above, we reduce the cost of COMMIT_TS acquisition by removing connections that only perform snapshot reads from the transaction. Conversely, if all connections perform writes or locking current reads, no snapshot read is needed. Therefore, we have also made the following optimization: SNAPSHOT_TS is obtained only at the first snapshot read. This optimization targets scenarios that require serializable behavior, such as:

BEGIN;

SELECT balance FROM accounts WHERE id = 0 FOR UPDATE; # Check the balance and lock it

UPDATE accounts SET balance = balance - 1 WHERE id = 0;

UPDATE accounts SET balance = balance + 1 WHERE id = 1;

COMMIT;

The SQL executed here represents a typical transfer scenario that transfers 1 yuan from account ID 0 to account ID 1. The entire transaction involves no snapshot reads, so we can skip the acquisition of SNAPSHOT_TS in such scenarios.
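The saving can be illustrated by counting timestamp requests. In this sketch, `CountingTso` and `Transaction` are hypothetical helpers and the statement classification is deliberately crude; it only shows that a transaction without snapshot reads needs one TSO request instead of two.

```python
import itertools


class CountingTso:
    """Hypothetical oracle that counts how many timestamps were requested."""

    def __init__(self):
        self._counter = itertools.count(1)
        self.requests = 0

    def next_timestamp(self):
        self.requests += 1
        return next(self._counter)


class Transaction:
    LOCKING_SUFFIXES = ("FOR UPDATE", "LOCK IN SHARE MODE")

    def __init__(self, tso):
        self._tso = tso
        self._snapshot_ts = None  # fetched lazily

    def run(self, sql):
        stmt = sql.strip().rstrip(";").upper()
        is_snapshot_read = (stmt.startswith("SELECT")
                            and not stmt.endswith(self.LOCKING_SUFFIXES))
        if is_snapshot_read and self._snapshot_ts is None:
            # Only the first snapshot read pays for a SNAPSHOT_TS.
            self._snapshot_ts = self._tso.next_timestamp()

    def commit(self):
        return self._tso.next_timestamp()  # COMMIT_TS


transfer_tso = CountingTso()
transfer = Transaction(transfer_tso)
transfer.run("SELECT balance FROM accounts WHERE id = 0 FOR UPDATE;")
transfer.run("UPDATE accounts SET balance = balance - 1 WHERE id = 0;")
transfer.run("UPDATE accounts SET balance = balance + 1 WHERE id = 1;")
transfer.commit()

mixed_tso = CountingTso()
mixed = Transaction(mixed_tso)
mixed.run("SELECT balance FROM accounts WHERE id = 0;")
mixed.run("SELECT balance FROM accounts WHERE id = 1;")
mixed.run("UPDATE accounts SET balance = balance + 1 WHERE id = 1;")
mixed.commit()
```

The transfer transaction issues a single request (for COMMIT_TS), while the transaction with plain SELECT statements issues two, regardless of how many snapshot reads it performs.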

If multiple partitions touched by a distributed transaction are located on the same data node, they can be treated as one standalone transaction on that node. In this case, multiple RPCs can be merged so that PREPARE and COMMIT for several shards complete in one round. If all shards are located on the same DN, the transaction can even be committed with a one-phase commit (1PC).
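The grouping step behind this optimization can be sketched as follows. The function name, the shard and DN identifiers, and the returned plan format are hypothetical; the sketch only shows how touched shards collapse into one request per data node and when 1PC becomes possible.

```python
from collections import defaultdict


def plan_commit(shard_to_dn, touched_shards):
    """Group the shards a transaction touched by data node.

    Returns (mode, groups): mode is "1PC" when a single DN holds every touched
    shard and "2PC" otherwise; groups maps each DN to the shards it handles in
    one merged request.
    """
    groups = defaultdict(list)
    for shard in touched_shards:
        groups[shard_to_dn[shard]].append(shard)
    mode = "1PC" if len(groups) == 1 else "2PC"
    return mode, dict(groups)


shard_to_dn = {"p0": "dn1", "p1": "dn1", "p2": "dn2", "p3": "dn2"}

# Three shards on two DNs: two merged PREPARE/COMMIT rounds instead of three.
mode_cross, groups_cross = plan_commit(shard_to_dn, ["p0", "p1", "p2"])

# Two shards on the same DN: the whole transaction can use one-phase commit.
mode_local, groups_local = plan_commit(shard_to_dn, ["p2", "p3"])
```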

The preceding optimizations still target specific scenarios. For general multi-shard distributed transactions, latency is often higher than that of standalone transactions. Therefore, we have designed an asynchronous commit solution: any distributed transaction can return success as soon as the PREPARE phase is completed. This achieves a commit latency close to that of a standalone transaction (one RPC across data centers) without affecting data reliability or linearizability. We will detail this solution in a later article.

This article introduces the implementation of TSO distributed transactions in PolarDB-X 2.0. Compared with the default XA transactions in PolarDB-X 1.0 (DRDS), TSO transactions offer better performance by avoiding read locks through MVCC and providing various optimizations such as asynchronous commit. In addition, based on TSO transactions, PolarDB-X 2.0 enables consistent reads in secondary databases.
