By Ziyang

The development of things generally follows a pattern: from simple to complex, from micro to macro, from local to global, and from specific to general. Databases are no different. PolarDB-X, a distributed database, has followed the same pattern over more than a decade of evolution and is now a significant player in the distributed database field.

In this article, we will explore the dimension of space and discuss how PolarDB-X has continuously developed and expanded its cross-space deployment capabilities. We will also cover its high availability and disaster recovery capabilities across different spatial scopes. Additionally, we will introduce GDN (Global Database Network), the latest product feature of PolarDB-X.

Before delving into the high availability and disaster recovery capabilities, let's first discuss the space philosophy of PolarDB-X. In database systems, many benefits can be achieved by increasing space usage, transforming space forms, adjusting the spatial distribution, or extending the spatial scope.

When it comes to high availability and disaster recovery, we are largely discussing data replication technologies. Whether they use standard asynchronous master-slave replication, semi-synchronous master-slave replication, or strong synchronous replication based on Paxos or Raft, these methods extend the spatial distribution of data to achieve high availability and disaster recovery. Each method therefore covers a particular spatial scope, and different disaster recovery solutions apply across racks, data centers (referred to as AZs in this article), regions, and clouds. Generally, the larger the spatial coverage, the stronger the disaster recovery capability, but also the higher the complexity.

The following table summarizes and compares the high availability and disaster recovery architectures commonly used in the database industry:

| Architecture | Failure Scope Tolerated | Minimum Data Centers | Data Replication | Advantages and Disadvantages |
|---|---|---|---|---|
| Single Data Center | Rack / single replica failure | One | Asynchronous | Asynchronous replication with good performance but RPO>0 |
| Single Data Center | Rack / single replica failure | One | Synchronous | Synchronous replication with slight performance loss but RPO=0 (in case of minority failure) |
| Multiple Data Centers in the Same Region | Single data center | Two | Asynchronous | Asynchronous replication with good performance but RPO>0 |
| Multiple Data Centers in the Same Region | Single data center | Three | Synchronous | More widely used; average response time (RT) increase of approximately 1 ms |
| Three Data Centers across Two Regions | Data center, region | Three data centers in two regions | Synchronous | More widely used; average RT increase of approximately 1 ms |
| Five Data Centers across Three Regions | Data center, region | Five data centers in three regions | Synchronous | Higher data center construction costs; average RT increase of approximately 5 to 10 ms, depending on the physical distance between regions |
| Geo-Partitioning | Data center, region | Three data centers in three regions | Synchronous | Adaptation cost for the service (a region attribute must be added to table partitions); average RT increase of approximately 5 to 10 ms, depending on the physical distance between regions |
| Global Database | Data center, region | Two data centers in two regions | Asynchronous | More widely used; near-read and remote-write; suitable for disaster recovery scenarios with more reads than writes in different regions |

The data nodes of PolarDB-X use a multi-replica architecture, such as three or five replicas. To ensure strong consistency among replicas (RPO=0), PolarDB-X uses the majority-based Paxos replication protocol: each write must be acknowledged by a majority of the replicas, so the cluster keeps serving normally as long as a majority remains available (for example, after one of three replicas fails). In addition, based on CDC Binlog, PolarDB-X offers data replication that is highly compatible with the MySQL master-slave replication protocol, which allows data to be synchronized between two independent PolarDB-X instances. Building on these fundamental capabilities, PolarDB-X supports various disaster recovery architectures, including:

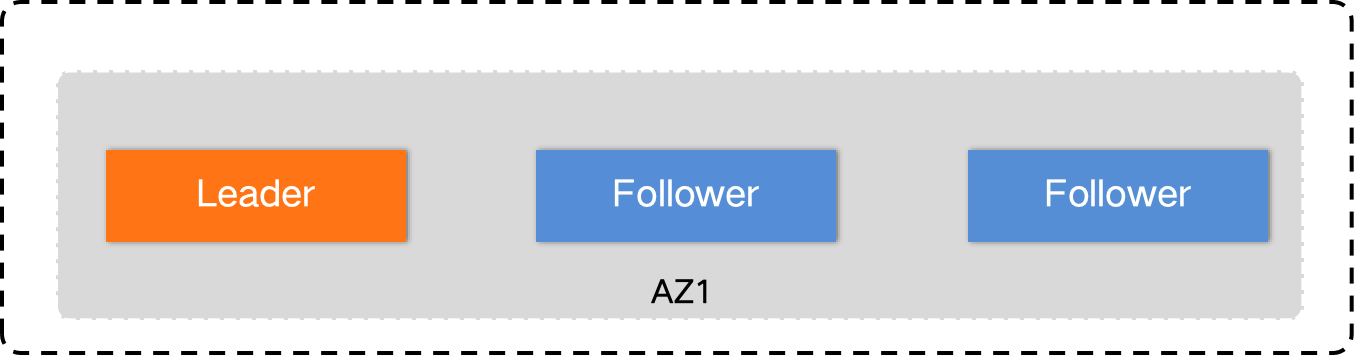

• Single data center (Paxos 3 Replicas): Can handle the failure of a single minority node.

• Three data centers in the same region (Paxos 3 Replicas): Can handle the failure of a single data center.

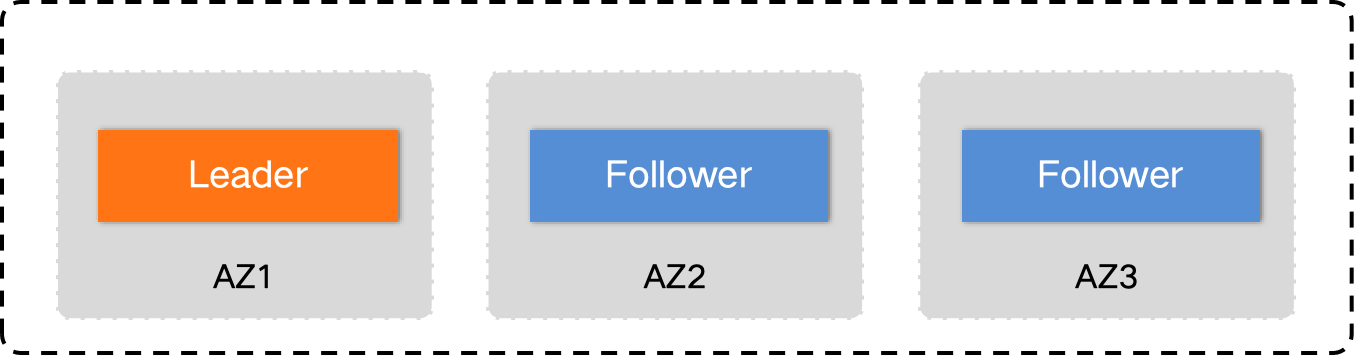

• Three data centers across two regions (Paxos 5 Replicas): Can handle regional failures.

• GDN multi-active (asynchronous master-slave replication): Can handle regional failures and provide local read capabilities.
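The failure tolerance in the list above follows from majority-quorum arithmetic. The Python sketch below is illustrative only; the function names and the zone-to-replica-count layout are hypothetical and are not PolarDB-X APIs.

```python
def quorum_size(replicas: int) -> int:
    """Smallest majority of a Paxos group with `replicas` members."""
    return replicas // 2 + 1


def tolerated_failures(replicas: int) -> int:
    """How many members can fail while a majority is still available."""
    return replicas - quorum_size(replicas)


def survives_zone_loss(layout: dict[str, int]) -> bool:
    """Return True if losing any single zone still leaves a majority.

    `layout` maps a hypothetical zone name to the number of replicas placed there.
    """
    total = sum(layout.values())
    need = quorum_size(total)
    return all(total - count >= need for count in layout.values())


if __name__ == "__main__":
    for n in (3, 5):
        # Prints "3 2 1" and then "5 3 2": replicas, quorum, tolerated failures.
        print(n, quorum_size(n), tolerated_failures(n))
    print(survives_zone_loss({"az1": 3}))                      # False: all replicas in one data center
    print(survives_zone_loss({"az1": 1, "az2": 1, "az3": 1}))  # True: one replica per data center
```

Placing one replica in each of three data centers is what lets a three-replica group ride out the loss of a whole data center, while the same three replicas in one data center only survive a single-node failure.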

The disaster recovery solutions of PolarDB-X based on Paxos have been detailed in our previous articles.

Now, let's unveil the details of PolarDB-X GDN.

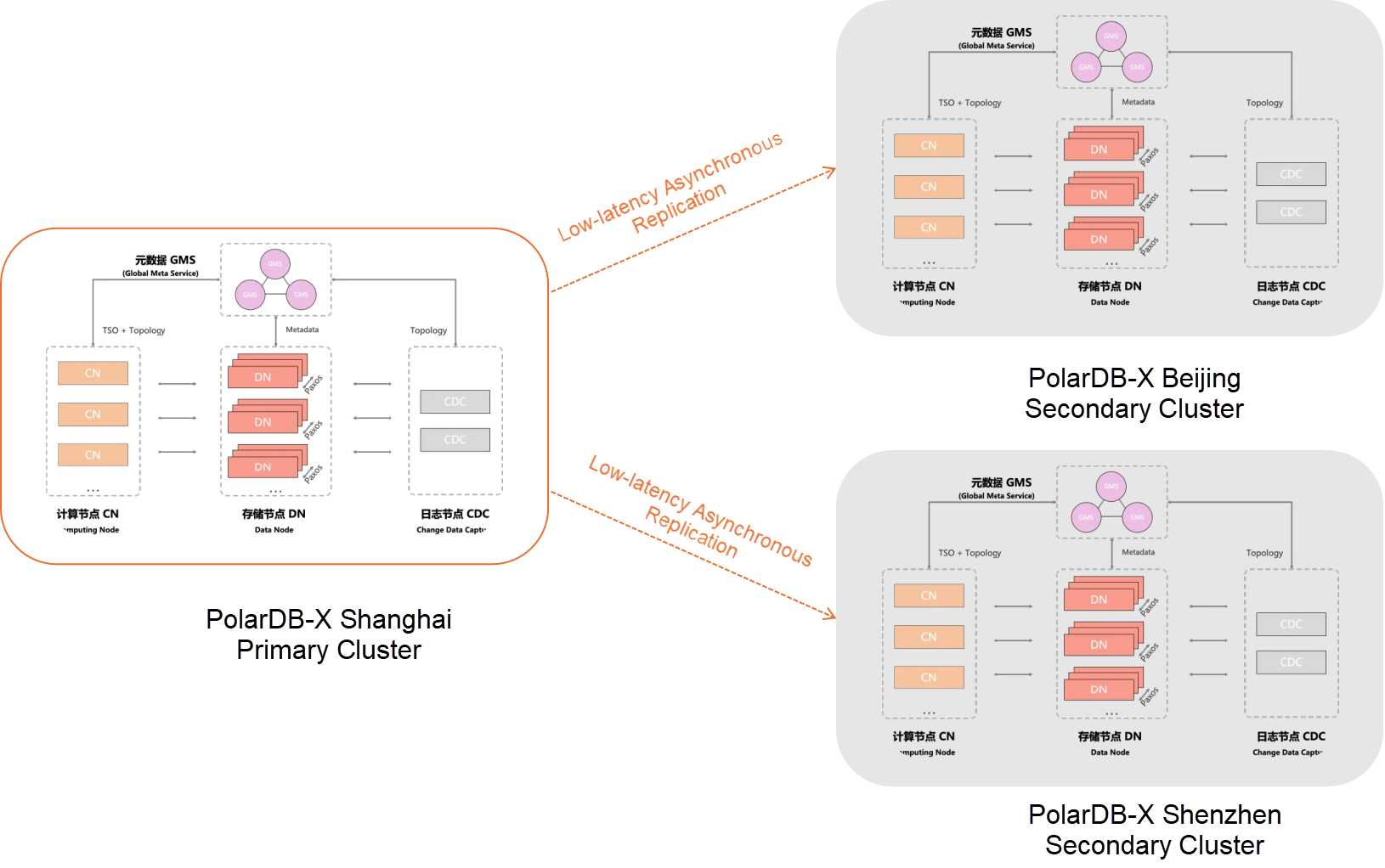

Global Database Network (GDN) is a network of multiple PolarDB-X instances distributed across multiple regions within the same country. In its basic form, illustrated in the figure, multiple PolarDB-X instances form a cross-regional high-availability disaster recovery cluster with one primary instance and multiple secondary instances.

With GDN, two core objectives can be achieved:

• Active Geo-redundancy

If you deploy applications in multiple regions but deploy databases only in the primary region, applications outside the primary region must access databases in a geographically distant region, which results in high latency and poor performance. GDN replicates data across regions with low latency and provides cross-region read/write splitting, so applications can read data from the nearest database, and changes written to the primary become readable there within seconds.

• Geo-disaster Recovery

GDN supports geo-disaster recovery regardless of whether applications are deployed in the same region. If a fault occurs in the region where the primary instance is deployed, you only need to manually switch the service over to a secondary instance. The switchover between the primary and secondary instances can be completed within 120 seconds.
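The near-read, remote-write pattern behind active geo-redundancy can be pictured as a routing rule. This is a hypothetical Python sketch rather than a GDN API: the `Instance` type, instance names, regions, and the keyword-based read detection are assumptions, and real applications would normally configure this in their driver or proxy layer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instance:
    name: str
    region: str
    is_primary: bool


def is_read_only(sql: str) -> bool:
    """Naive classifier: SELECT/SHOW are reads unless they take row locks."""
    text = sql.strip().upper()
    locking = "FOR UPDATE" in text or "LOCK IN SHARE MODE" in text
    return text.startswith(("SELECT", "SHOW")) and not locking


def route(sql: str, app_region: str, instances: list[Instance]) -> Instance:
    """Writes go to the primary; reads go to an instance in the caller's region if one exists."""
    primary = next(i for i in instances if i.is_primary)
    if not is_read_only(sql):
        return primary
    local = [i for i in instances if i.region == app_region]
    return local[0] if local else primary


# Hypothetical two-region GDN: primary in Hangzhou, secondary in Shanghai.
EXAMPLE_GDN = [
    Instance("pxc-hz", "hangzhou", is_primary=True),
    Instance("pxc-sh", "shanghai", is_primary=False),
]
```

Because secondary instances apply changes asynchronously, reads routed to them can lag the primary, so a read that must see the caller's own write should still go to the primary.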

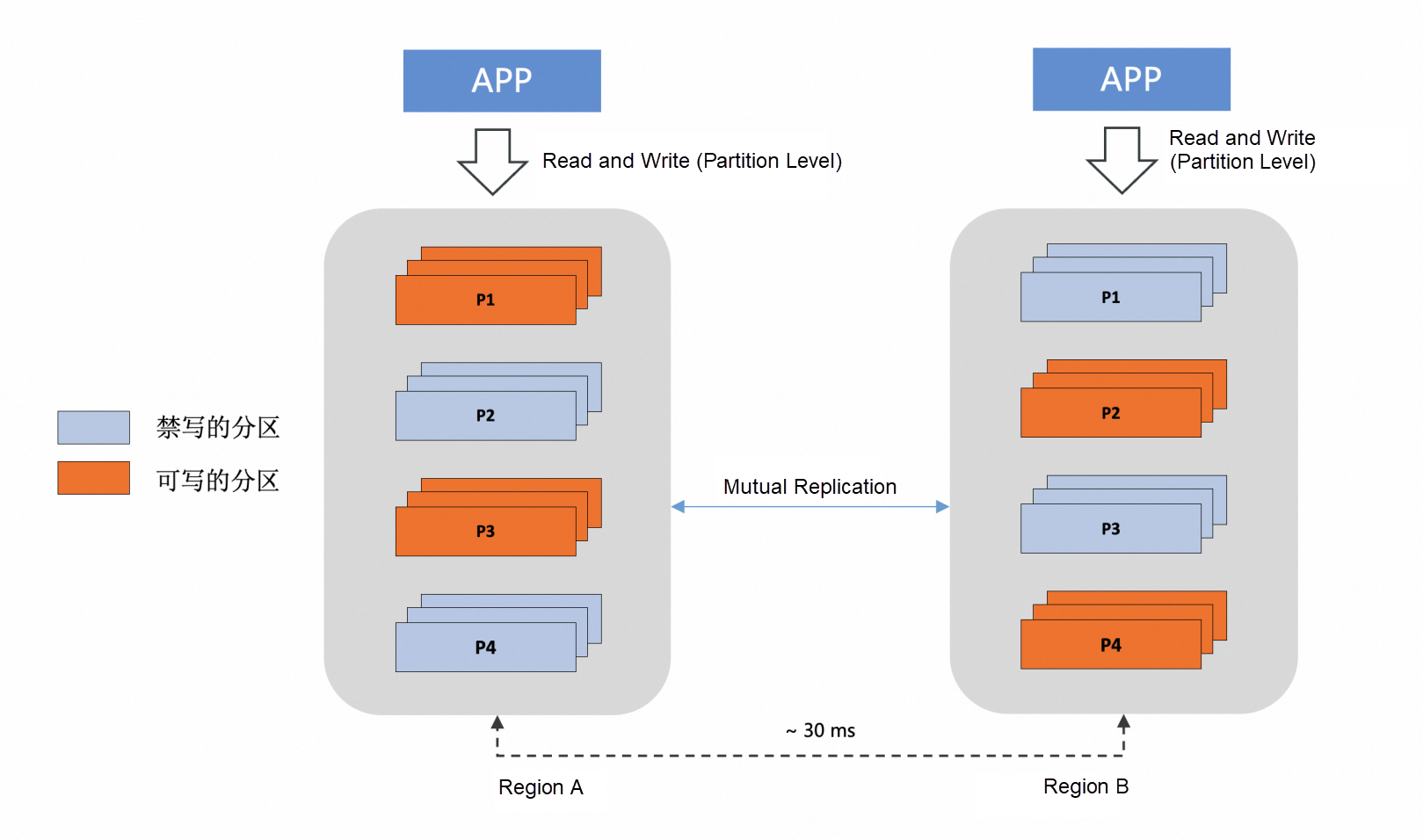

The primary-secondary mode is only the basic form of GDN and requires minimal application-side changes to integrate with GDN. More advanced forms of GDN are the dual-primary mode and even the multi-primary mode. When an application needs a multi-region, multi-active, multi-write disaster recovery architecture, the common approach is to first make cellular modifications on the business side. Then, on the database side, you set up bidirectional synchronization links, configure loop prevention for synchronization, and define conflict resolution policies for write conflicts. The advanced forms of GDN, combined with the partitioning capabilities of PolarDB-X, make multi-active, multi-write disaster recovery simpler. For example:

1. PolarDB-X offers a variety of partition types, including Key, List, and Range partitions, and supports subpartitioning that combines these types. It even allows users to submit custom UDFs as partition functions. Associating cellular routing rules with the partitioning rules of PolarDB-X partitioned tables makes the multi-write architecture simpler, more flexible, and safer. In each region (cell), the aligned partitions can be set to read-write and the unaligned partitions to read-only (write-prohibited). This makes traffic switching more flexible: you can switch a whole cell at once or move only specific partitions to another cell. Furthermore, because writes are prohibited on the database side, PolarDB-X acts as the final gatekeeper and rejects illegal writes even if the application routes them incorrectly, which avoids write conflicts. This is difficult to achieve with non-distributed databases and non-partitioned tables. That said, PolarDB-X also supports the traditional dual-write architecture; the two approaches are not mutually exclusive.

2. In a multi-active and multi-write architecture, bidirectional replication links need to be configured between different regions. The biggest challenge with bidirectional replication links is preventing replication loops. PolarDB-X includes a lightweight loop prevention mechanism based on server_id, which is simple and easy to use. More details will be provided in the following section.
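Item 1 can be illustrated with a small model of partition-level write prohibition. In this hypothetical Python sketch, a LIST-style rule maps a routing key to a partition, each partition has one home cell that may write it, and moving a partition to another cell is a single metadata change. The class, partition, and cell names are illustrative and do not reflect PolarDB-X syntax.

```python
class WriteProhibited(Exception):
    """Raised when a write reaches a cell that does not own the target partition."""


class CellWriteGate:
    def __init__(self, list_rule: dict[str, str], home_cell: dict[str, str]):
        self.list_rule = list_rule        # routing key value -> partition name
        self.home_cell = dict(home_cell)  # partition name -> cell allowed to write it

    def partition_of(self, key: str) -> str:
        return self.list_rule[key]

    def check_write(self, key: str, local_cell: str) -> str:
        """Return the target partition, or raise if this cell is read-only for it."""
        partition = self.partition_of(key)
        owner = self.home_cell[partition]
        if owner != local_cell:
            raise WriteProhibited(f"{partition} is writable only in {owner}, not {local_cell}")
        return partition

    def move_partition(self, partition: str, new_cell: str) -> None:
        """Partial traffic switch: hand one partition to another cell."""
        self.home_cell[partition] = new_cell


gate = CellWriteGate(
    list_rule={"zhejiang": "p_east", "beijing": "p_north"},
    home_cell={"p_east": "cell_hz", "p_north": "cell_bj"},
)
```

A write for a `zhejiang` key that is misrouted to `cell_bj` raises `WriteProhibited` instead of creating a conflicting row; after `gate.move_partition("p_east", "cell_bj")`, the same write is accepted in `cell_bj`, which corresponds to switching a single partition rather than a whole cell.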

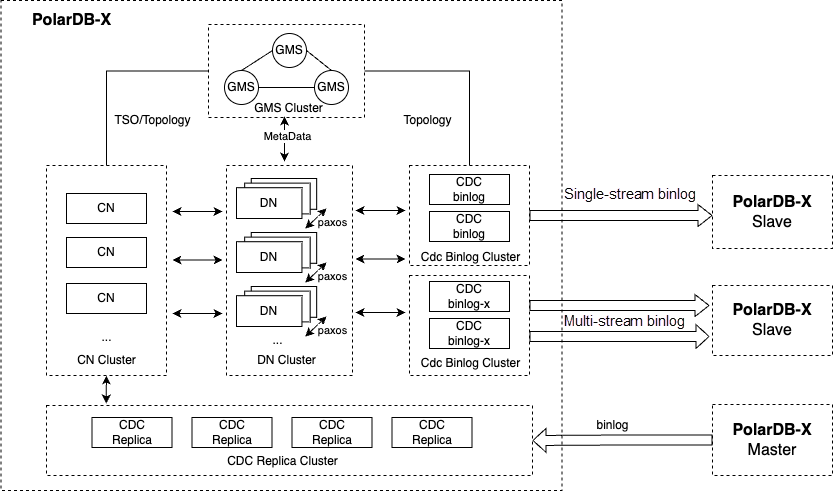

The technical foundation of GDN is real-time data replication. PolarDB-X not only supports CDC Binlog, which is fully compatible with MySQL binlog, but also offers a data replication product called CDC Replica, which is highly compatible with MySQL Replication. Based on these two core capabilities, the GDN replication network can be quickly established.

GDN Technical Architecture

Note:

DML operations change data, and the details of each change are recorded in the binlog as events. Replaying these change events replicates the modified data downstream. For DML replication, GDN offers several optional policies that trade off performance against consistency to meet different replication needs.

| Replication Policy | Performance | Transaction Consistency | Description |
|---|---|---|---|
| TRANSACTION | Moderate | High | 1. Serial replication that preserves transactional integrity. Suitable for scenarios with strict transactional consistency requirements, such as the financial sector, where an RPO > 0 can be tolerated but the integrity of transactions cannot be compromised. 2. Transactional integrity can be guaranteed only with single-stream binlog replication, because multi-stream binlog, by design, breaks transactional integrity to achieve higher parallelism. 3. Transactions without data conflicts are not replicated in parallel. |
| SERIAL | Moderate | Low | 1. Serial replication without transactional integrity. Suitable for scenarios where transactional consistency is not a priority but ordering must be preserved, for example when tables have foreign key constraints or business sequence dependencies. 2. Each change is applied in auto-commit mode, so every transaction is a single-node transaction and does not trigger a distributed transaction. As a result, this policy generally performs better than TRANSACTION; the difference is minimal only when each transaction in the binlog contains a single data change. |
| SPLIT | Good | Low | 1. Parallel replication without transactional integrity. Suitable for scenarios with no dependencies between data, as long as eventual consistency is maintained. 2. Parallelism is at the row level: changes to different tables and different primary keys are dispatched to separate threads for fully parallel writes. 3. This is the default policy for GDN. |
| MERGE | Excellent | Low | 1. Parallel replication that uses change compression and batch writes; it preserves neither the original change types nor transactional integrity. Suitable for scenarios with no dependencies between data and no concern for change types, as long as eventual consistency is maintained. 2. Parallelism is at the row level, and changes are batched per table for fully parallel batch writes. |
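The difference between SPLIT and MERGE can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not GDN's implementation: change events are reduced to a hypothetical `RowChange` record, SPLIT hashes each (table, primary key) pair to a worker so that changes to the same row stay in order, and MERGE keeps only the final image of each row, grouped by table for batch writes.

```python
import zlib
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class RowChange:
    table: str
    pk: int
    op: str                # "INSERT", "UPDATE" or "DELETE"
    after: Optional[dict]  # row image after the change; None for DELETE


def split_by_row(changes: list[RowChange], workers: int) -> list[list[RowChange]]:
    """SPLIT-style dispatch: a stable hash of (table, pk) selects the worker queue,
    so all changes to one row stay in one queue in their original order."""
    queues: list[list[RowChange]] = [[] for _ in range(workers)]
    for change in changes:
        key = f"{change.table}:{change.pk}".encode()
        queues[zlib.crc32(key) % workers].append(change)
    return queues


def merge_by_row(changes: list[RowChange]) -> dict[str, dict[int, Optional[dict]]]:
    """MERGE-style compression: a later change to a row replaces earlier ones.
    Returns table -> pk -> final row image (None means "delete"), ready to be
    written as one batch per table; the original change types are not kept."""
    final: dict[str, dict[int, Optional[dict]]] = defaultdict(dict)
    for change in changes:
        final[change.table][change.pk] = None if change.op == "DELETE" else change.after
    return dict(final)


EVENTS = [
    RowChange("orders", 1, "INSERT", {"id": 1, "status": "new"}),
    RowChange("orders", 1, "UPDATE", {"id": 1, "status": "paid"}),
    RowChange("items", 7, "INSERT", {"id": 7, "qty": 3}),
    RowChange("items", 7, "DELETE", None),
]
```

MERGE turns the INSERT-then-UPDATE on `orders` into a single final image and the INSERT-then-DELETE on `items` into a delete, which is why the table describes MERGE as not preserving change types. `zlib.crc32` is used instead of Python's `hash()` because string hashing is randomized per process.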

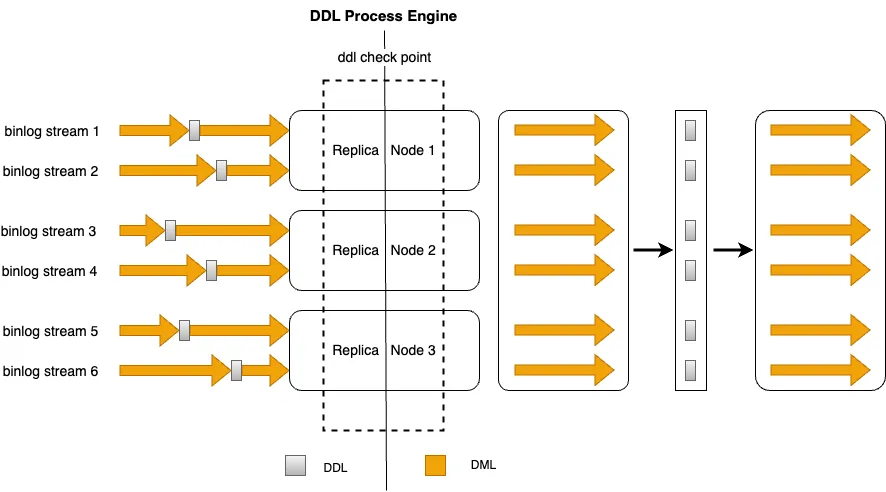

Efficient DML replication can ensure the consistency of table content between upstream and downstream clusters in GDN. On the other hand, DDL replication is the core capability that ensures schema consistency between the primary and secondary clusters in GDN. Schema consistency between GDN's primary and secondary clusters is not limited to table structure consistency but extends to all database objects. This ensures that the secondary cluster can handle application traffic during a failover. Otherwise, even the loss of a single index could lead to severe performance issues.

PolarDB-X supports various types of database objects, including common MySQL-compatible tables, columns, indexes, views, and functions. Additionally, it supports custom object types such as TableGroup, Sequence, GSI, CCI, and Java Function, along with many custom extension syntaxes such as TTL, Locality, OMC, Local Index, Move Partition, and Alter Index.
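The schema-consistency requirement can be thought of as a diff over object catalogs. This Python sketch assumes each cluster's objects (tables, indexes, sequences, and so on) have already been exported into a hypothetical `{object_type: {name: definition}}` mapping with normalized definitions; it does not show how PolarDB-X replicates DDL, only a way to check the outcome.

```python
Catalog = dict[str, dict[str, str]]  # object type -> object name -> normalized definition


def diff_catalogs(primary: Catalog, secondary: Catalog) -> dict[str, list[tuple[str, str]]]:
    """List (object_type, name) pairs that are missing, extra, or different on the secondary."""
    report: dict[str, list[tuple[str, str]]] = {"missing": [], "extra": [], "different": []}
    for obj_type in sorted(set(primary) | set(secondary)):
        src = primary.get(obj_type, {})
        dst = secondary.get(obj_type, {})
        for name in sorted(set(src) | set(dst)):
            if name not in dst:
                report["missing"].append((obj_type, name))
            elif name not in src:
                report["extra"].append((obj_type, name))
            elif src[name] != dst[name]:
                report["different"].append((obj_type, name))
    return report


# Hypothetical exported catalogs.
PRIMARY = {
    "table": {"orders": "id BIGINT PRIMARY KEY, buyer_id BIGINT"},
    "index": {"orders.idx_buyer": "(buyer_id)"},
    "sequence": {"seq_order": "START WITH 1"},
}
SECONDARY = {
    "table": {"orders": "id BIGINT PRIMARY KEY, buyer_id BIGINT"},
    "sequence": {"seq_order": "START WITH 1000"},
}
```

Here the missing `orders.idx_buyer` index is the kind of drift that would leave a failed-over secondary serving traffic with worse query plans.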

DDL replication is a challenging capability. It is not simply a matter of extracting DDL SQL from the primary cluster's binlog and executing it on the secondary cluster. It involves multiple challenges related to compatibility, consistency, stability, and availability. PolarDB-X's built-in native replication capability encapsulates these complexities at the kernel level, providing an efficient and easy-to-use DDL replication solution. This can be summarized in the following aspects:

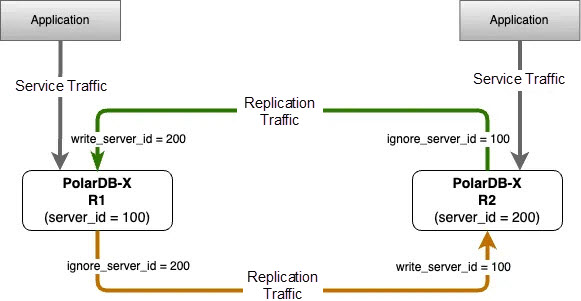

PolarDB-X provides a lightweight, MySQL-compatible bidirectional replication capability that filters loop traffic based on server_id, as shown in the figure.
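The loop-filtering idea can be simulated in a few lines. This Python sketch is a toy model, not PolarDB-X internals: it assumes, as in MySQL replication, that an applied event keeps its original server_id when it is written to the downstream binlog, and that each replication channel drops events whose server_id is in its ignore list, similar in spirit to IGNORE_SERVER_IDS. The `Node`, `Event`, and `replicate` names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Event:
    server_id: int  # id of the instance where the change was first written
    payload: str


@dataclass
class Node:
    server_id: int
    binlog: list[Event] = field(default_factory=list)

    def local_write(self, payload: str) -> None:
        self.binlog.append(Event(self.server_id, payload))


def replicate(src: Node, dst: Node, ignore_server_ids: set[int], start: int) -> int:
    """Apply src.binlog[start:] to dst, skipping events from ignored origins.

    Applied events keep their origin server_id in dst's binlog.
    Returns the new read position in src.binlog.
    """
    for event in src.binlog[start:]:
        if event.server_id in ignore_server_ids:
            continue  # loop traffic: this change originated on an ignored instance
        dst.binlog.append(Event(event.server_id, event.payload))
    return len(src.binlog)


a, b = Node(100), Node(200)
a.local_write("UPDATE t SET v = 1 WHERE id = 1")
replicate(a, b, ignore_server_ids={200}, start=0)  # channel on B, pulling from A
replicate(b, a, ignore_server_ids={100}, start=0)  # channel on A, pulling from B
print(len(a.binlog))  # 1: the change did not bounce back to A
```

Without the ignore lists, the change written on A would be copied to B and then copied back to A as a second event, which is the replication loop the feature prevents. The check is a single comparison on an event header field, which is why no transaction table is needed.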

The advantages of this capability can be summarized in two aspects:

• Lightweight: Filtering traffic directly on the server_id in the binlog event header is lighter than the approach of external synchronization tools, which typically filter traffic through a transaction table. It adds no extra performance overhead and scales better, whereas a transaction table is intrusive to the user's database and requires a DML operation on that table for every synchronized transaction, causing write amplification.

• Easy to use: To set up a bidirectional replication architecture with loop traffic filtering, simply execute two CHANGE MASTER statements, one on each of the two PolarDB-X instances.

Syntax:

CHANGE MASTER TO option [, option] ... [ channel_option ]

option: {

IGNORE_SERVER_IDS = (server_id_list)

}

Example:

R1: CHANGE MASTER ... , IGNORE_SERVER_IDS = (100) , ...

R2: CHANGE MASTER ... , IGNORE_SERVER_IDS = (200) , ...

In data replication paths, especially in traditional multi-active, multi-write architectures where cellular rules and table partitioning rules are not aligned, conflicts may occur due to bugs or unexpected situations, such as both sides modifying the same primary key or unique key. A conflict detection mechanism is needed to mitigate these risks. PolarDB-X's data replication includes a built-in conflict detection mechanism that supports the following types of conflicts:

• Uniqueness conflicts caused by INSERT operations

When an INSERT statement violates a uniqueness constraint during synchronization, such as when two PolarDB-X instances simultaneously or nearly simultaneously insert a record with the same primary key value, the INSERT will fail on the other end because a record with the same primary key already exists.

• Inconsistent records caused by UPDATE operations

If the records to be updated do not exist in the destination instance, PolarDB-X can automatically convert the UPDATE operation into an INSERT operation (not enabled by default). However, the resulting INSERT may cause a uniqueness conflict if the primary key or a unique key of the record conflicts with an existing record in the destination instance.

• Non-existent records to be deleted

The records to be deleted do not exist in the destination instance. In this case, PolarDB-X ignores the DELETE operation regardless of the conflict resolution policy you specify.

For data conflicts, PolarDB-X provides configuration parameters to control the resolution strategy. These can be adjusted using the CHANGE MASTER command during the creation or maintenance of the replication path.

CHANGE MASTER TO option [, option] ... [ channel_option ]

option: {

CONFLICT_STRATEGY = {OVERWRITE|INTERRUPT|IGNORE}

}

• OVERWRITE: Replaces the conflicting data with a REPLACE INTO statement. This is the default resolution strategy.

• INTERRUPT: Interrupts the replication path.

• IGNORE: Ignores the conflict.
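The conflict rules and strategies above can be modeled with a dictionary standing in for the destination table. This Python sketch is an illustration under assumptions, not GDN's code: rows are keyed by primary key only (unique keys are omitted for brevity), `update_to_insert` mirrors the optional UPDATE-to-INSERT conversion, the three strategy names follow the CONFLICT_STRATEGY values, and skipping an UPDATE of a missing row when conversion is off is a choice made by this sketch, since the behavior in that case is not described here.

```python
class ReplicationInterrupted(Exception):
    """Raised under INTERRUPT to stop the replication path."""


def apply_event(table: dict, op: str, pk, row=None, strategy: str = "OVERWRITE",
                update_to_insert: bool = False) -> str:
    """Apply one replicated change to `table` (pk -> row) and report the outcome."""
    if op == "DELETE":
        if pk in table:
            del table[pk]
            return "deleted"
        return "skipped"  # missing row: ignored regardless of strategy
    if op == "UPDATE":
        if pk in table:
            table[pk] = row
            return "updated"
        if not update_to_insert:
            return "skipped"  # assumption of this sketch, see the lead-in
        op = "INSERT"  # converted; with unique keys modeled, this could still collide
    # INSERT path: an existing primary key is a uniqueness conflict.
    if pk not in table:
        table[pk] = row
        return "inserted"
    if strategy == "OVERWRITE":
        table[pk] = row  # same effect on this key as REPLACE INTO
        return "overwritten"
    if strategy == "IGNORE":
        return "ignored"
    if strategy == "INTERRUPT":
        raise ReplicationInterrupted(f"duplicate key {pk!r}")
    raise ValueError(f"unknown strategy {strategy!r}")
```

Even in this toy model, OVERWRITE silently discards whichever write arrived first, which is why the next paragraph stresses avoiding conflicts at the business level rather than relying on resolution.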

It is important to note that conflict resolution is a best-effort mechanism. In a multi-active, multi-write architecture, it is challenging to completely prevent data conflicts due to factors such as differences in system time between the two ends of the synchronization and the presence of synchronization delays. Therefore, it is crucial to make corresponding modifications at the business level to ensure that records with the same primary key, business key, or unique key are only updated on one of the bidirectionally synchronized PolarDB-X instances. Aligning cellular rules with table partitioning rules and relying on the database kernel to enforce partition write prohibitions is the simplest and most effective way to avoid data conflicts. In addition, native GDN capabilities make this even simpler.

GDN provides native data verification (reconciliation), and its built-in implementation has the following advantages:

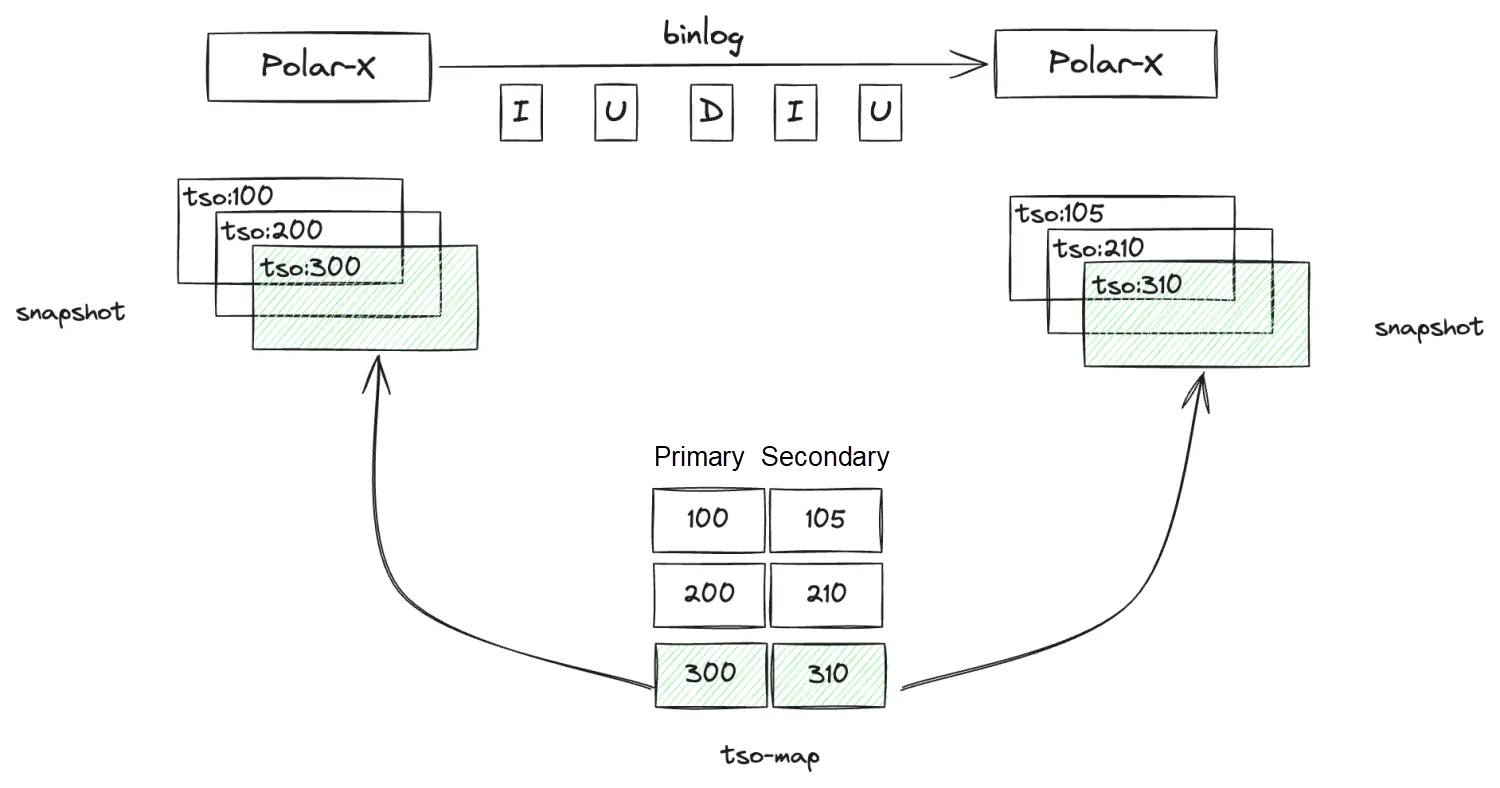

1. Support for multiple verification modes:

• Direct Verification: This mode compares the latest data in the primary and secondary instances. When incremental changes are frequent, rows that are still being replicated can be reported as mismatches, so multiple rounds of rechecking or manual verification may be required.

• Snapshot Verification: PolarDB-X supports TSO-based snapshot queries, and the global binlog provides linearly consistent synchronization points. GDN uses these capabilities to build a TSO mapping between the primary and secondary clusters and then verifies data against consistent snapshots on both sides, which avoids such errors and is more user-friendly.

2. Low resource consumption and fast verification:

GDN's data verification combines checksum verification with detailed row-by-row comparison. It first divides the data into segments using a sampling algorithm and compares the checksum of each segment. Only when a segment's checksums do not match does it fall back to row-by-row comparison for that segment. Compared with traditional row-by-row comparison of all data, this method can deliver up to 10 times better performance with lower resource consumption.
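The two-phase comparison can be sketched as follows. Assumptions: both sides are modeled as dictionaries keyed by an integer primary key, the segment boundaries are given (standing in for the sampling step), and CRC32 over a canonical JSON encoding of each row stands in for whatever checksum GDN actually uses; all names are hypothetical.

```python
import bisect
import json
import zlib


def segment_of(pk: int, boundaries: list[int]) -> int:
    """Index of the segment containing pk; boundaries are sorted split points."""
    return bisect.bisect_right(boundaries, pk)


def checksums(rows: dict[int, dict], boundaries: list[int]) -> list[int]:
    """One running CRC32 per segment over the rows in primary-key order."""
    sums = [0] * (len(boundaries) + 1)
    for pk in sorted(rows):
        encoded = json.dumps([pk, rows[pk]], sort_keys=True).encode()
        seg = segment_of(pk, boundaries)
        sums[seg] = zlib.crc32(encoded, sums[seg])
    return sums


def verify(primary: dict[int, dict], secondary: dict[int, dict], boundaries: list[int]) -> list[int]:
    """Return differing primary keys, comparing rows only inside mismatched segments."""
    src, dst = checksums(primary, boundaries), checksums(secondary, boundaries)
    bad = {i for i, (a, b) in enumerate(zip(src, dst)) if a != b}
    candidates = set(primary) | set(secondary)
    return sorted(pk for pk in candidates
                  if segment_of(pk, boundaries) in bad and primary.get(pk) != secondary.get(pk))
```

Only segments whose checksums disagree pay for the row-level comparison; in a real system the matching segments would never be fetched row by row, which is where the savings come from.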

For detailed usage instructions, please refer to the official documentation: Verify Data in the Primary and Secondary Instances - Cloud-Native Database PolarDB (PolarDB) - Alibaba Cloud Help Center.

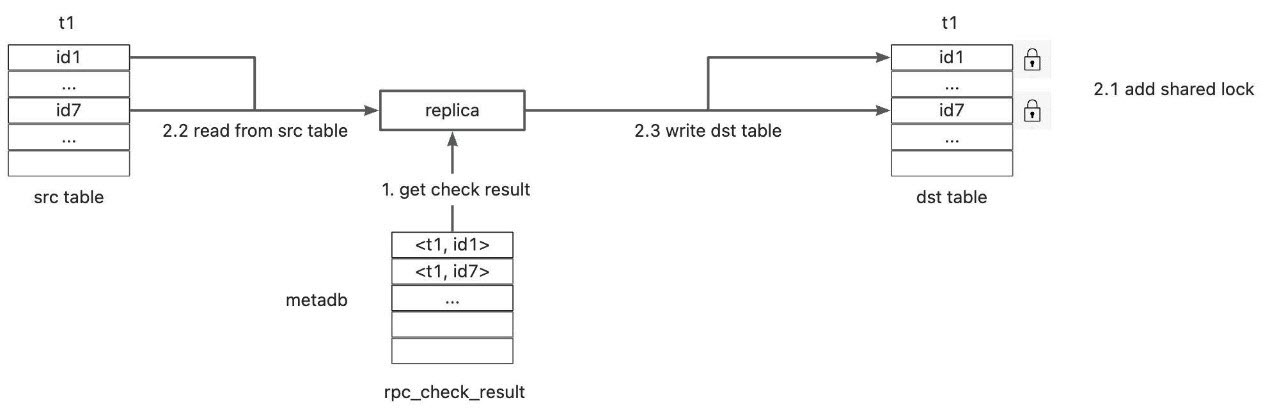

Data correction applies in two scenarios: regular online data correction and data correction after a failover. For the former, GDN provides a data correction solution based on shared locks, mainly for unidirectional primary-secondary replication. The implementation is straightforward: obtain the diff data from the data verification results and group it into batches; for each batch, acquire a shared lock on the data in the secondary instance, retrieve the latest values from the primary instance, and write them to the secondary instance. This capability is currently in beta.
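The correction steps can be written as a loop. In this Python sketch, the tables are in-memory dictionaries, `lock_shared` is a hypothetical callback standing in for taking a shared lock on the secondary's rows, and the batch size is arbitrary; it shows only the order of operations, not GDN's locking protocol.

```python
from contextlib import contextmanager
from typing import Callable, ContextManager, Iterable, Iterator


def batched(keys: list, size: int) -> Iterator[list]:
    for i in range(0, len(keys), size):
        yield keys[i:i + size]


def correct(diff_keys: Iterable, primary: dict, secondary: dict,
            lock_shared: Callable[[list], ContextManager], batch_size: int = 100) -> int:
    """Copy the primary's latest values for each differing key onto the secondary."""
    fixed = 0
    for batch in batched(sorted(diff_keys), batch_size):
        with lock_shared(batch):               # 1. lock this batch on the secondary
            for key in batch:
                latest = primary.get(key)      # 2. read the latest value on the primary
                if latest is None:
                    secondary.pop(key, None)   # 3a. row no longer exists upstream
                else:
                    secondary[key] = latest    # 3b. overwrite with the primary's value
                fixed += 1
    return fixed


def make_recording_lock(log: list):
    """Test double: records lock/unlock calls instead of locking anything."""
    @contextmanager
    def lock_shared(keys):
        log.append(("lock", list(keys)))
        try:
            yield
        finally:
            log.append(("unlock", list(keys)))
    return lock_shared
```

Batching keeps each lock short-lived, so the replication applier on the secondary is only blocked on a small set of rows at a time.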

For the latter, if a failover is performed through an emergency forced switch, some binlogs from the primary instance may not have been replicated to the secondary instance, so the new primary instance may be missing some data. If the original primary instance cannot be recovered, that data is ultimately lost. However, if the original primary instance is restored after the disaster, the missing binlog data can still be replayed onto the new primary instance.

How do you compensate? Do you need to identify and handle each entry manually? You could, but it does not have to be that cumbersome. PolarDB-X provides a full mirror matching capability that makes compensation easier: enable full mirror matching on the original replication link before the switch, and then consume the remaining binlog data for automatic compensation:

• For Insert events in the binlog, replay them on the new primary instance using the INSERT IGNORE strategy.

• For Delete events in the binlog, append all column values of the deleted row to the WHERE condition when replaying them on the new primary instance. If the row does not match, the event is automatically skipped.

• For Update events in the binlog, append all column values of the row's before image to the WHERE condition when replaying them on the new primary instance. If the row does not match, the event is automatically skipped.
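The three replay rules can be expressed directly. This Python sketch models the new primary as a dictionary of full row images keyed by primary key and the leftover binlog as (op, before, after) row events; the event format and the `compensate` function are simplifications assumed for illustration.

```python
from typing import Optional

RowEvent = tuple[str, Optional[dict], Optional[dict]]  # (op, before image, after image)


def compensate(table: dict, events: list[RowEvent], pk: str = "id") -> dict[str, int]:
    """Replay leftover row events on the new primary using full-image matching."""
    stats = {"applied": 0, "skipped": 0}
    for op, before, after in events:
        if op == "INSERT":
            key = after[pk]
            if key in table:               # INSERT IGNORE: keep the existing row
                stats["skipped"] += 1
                continue
            table[key] = dict(after)
        else:
            key = before[pk]
            if table.get(key) != before:   # WHERE on every column found no match
                stats["skipped"] += 1
                continue
            del table[key]
            if op == "UPDATE":
                table[after[pk]] = dict(after)
        stats["applied"] += 1
    return stats
```

A row that was changed on the new primary after the failover no longer matches the old before image, so its UPDATE is skipped rather than overwriting newer data. The caveat in the next paragraph shows up here as a before image that happens to match again after a column was changed back.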

Of course, this method is not foolproof. If certain columns of a record have been changed back and forth, the compensated data might not meet expectations. However, such cases are rare, and in most scenarios, the full mirror matching capability can achieve automatic compensation.

GDN plans to offer more advanced automatic compensation in future versions. Building on full mirror matching, it will also use the binlogs of the new primary instance to accurately determine whether a row has been modified since the failover, which helps precisely identify double-write conflicts between the data to be compensated and the target data.

You can enable or disable the full mirror matching feature with a single SQL command.

CHANGE MASTER TO option [, option] ... [ channel_option ]

option: {

COMPARE_ALL = {true|false}

}

For the public cloud offering, the PolarDB-X console provides a visual interface for switching GDN primary and secondary instances. Two switching methods are available:

• Regular Switch (RPO=0): This is a planned switch used when all instances within the cluster and the data replication links between them are operating normally. When performing a planned switch, all instances in the cluster will be set to read-only, and the duration of this read-only period depends on the time it takes to eliminate data replication lag. A planned switch does not result in data loss.

• Forced Switch (RPO>=0): When the primary instance fails and cannot be recovered within a short period, an emergency forced switch can be used to prioritize business continuity. During a forced switch, the selected secondary instance is immediately promoted to primary. Any transactions that were committed on the previous primary instance but not yet replicated to the secondary instance are lost; the amount of data lost depends on the replication lag.

PolarDB-X also provides convenient atomic APIs. In scenarios where you are building your own GDN based on PolarDB-X, you can combine and call these atomic APIs to create your own switch task flow.
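As an illustration, a self-built planned (RPO=0) switch could chain the atomic operations listed below roughly as in this Python sketch. The four callables are hypothetical hooks you would implement with your own client code. The sketch assumes that the primary's LASTTSO stops advancing once the instance is read-only and that TSO strings of equal length compare correctly as integers; the timeout value is arbitrary.

```python
import time
from typing import Callable


def planned_switch(set_read_only: Callable[[str, bool], None],
                   primary_last_tso: Callable[[], str],
                   secondary_applied_tso: Callable[[], str],
                   promote: Callable[[], None],
                   old_primary: str, new_primary: str,
                   timeout_s: float = 120.0, poll_s: float = 0.5) -> None:
    """Planned switch: freeze writes, wait until replication lag is zero, then promote."""
    set_read_only(old_primary, True)              # alter instance set read_only = true
    target = int(primary_last_tso())              # LASTTSO from show full master status
    deadline = time.monotonic() + timeout_s
    while int(secondary_applied_tso()) < target:  # Exec_Master_Log_Tso from show slave status
        if time.monotonic() > deadline:
            set_read_only(old_primary, False)     # give up and restore writes on the old primary
            raise TimeoutError("secondary did not catch up in time")
        time.sleep(poll_s)
    promote()                                     # hypothetical: stop replication, repoint traffic
    set_read_only(new_primary, False)             # alter instance set read_only = false
```

A forced switch would skip the wait loop and call `promote` immediately, accepting whatever lag remains, which is where its RPO>=0 comes from.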

To disable or enable writes for the entire PolarDB-X instance:

alter instance set read_only = {true|false}

SHOW FULL MASTER STATUS returns the latest binlog TSO on the primary instance:

mysql> show full master status ;

+---------------+-----------+--------------------------------------------------------+-------------+-----------+-----------+-------------+-------------+-------------+--------------+------------+---------+

| FILE | POSITION | LASTTSO | DELAYTIMEMS | AVGREVEPS | AVGREVBPS | AVGWRITEEPS | AVGWRITEBPS | AVGWRITETPS | AVGUPLOADBPS | AVGDUMPBPS | EXTINFO |

+---------------+-----------+--------------------------------------------------------+-------------+-----------+-----------+-------------+-------------+-------------+--------------+------------+---------+

| binlog.006026 | 221137311 | 721745002099847993617485152365471498250000000000000000 | 211 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

+---------------+-----------+--------------------------------------------------------+-------------+-----------+-----------+-------------+-------------+-------------+--------------+------------+---------+

SHOW SLAVE STATUS returns the latest TSO consumed by the secondary instance:

mysql> show slave status\G

*************************** 1. row ***************************

...

Exec_Master_Log_Tso: 721745002099847993617485152365471498250000000000000000

...

PolarDB-X GDN supports building a distributed data replication pipeline with multi-stream parallel replication based on CDC multi-stream binlog. It scales well, allowing you to adjust the level of parallelism based on actual load. This keeps replication latency low even in cross-region scenarios with high network latency, keeping the RPO close to zero. Achieving the best possible single-stream replication performance is also our goal. Below is the single-stream and multi-stream replication performance data for TPCC and Sysbench scenarios. We are continuing to optimize these results.

Test environment: PolarDB-X instance configuration (Dedicated type)

• CN: 16 cores, 128 GB × 4

• DN: 16 cores, 128 GB × 4 (version 5.7)

• CDC: 32 cores, 64 GB × 2

• Number of binlog streams (multi-stream): 6

Test data:

| Test case | Test metrics | Single-stream RPS | Multi-stream RPS | Latency |
|---|---|---|---|---|
| Sysbench oltp_write_only (32 tables, 256 threads, 10,000,000 rows per table) | TPS: 30,000/s; QPS: 120,000/s | 40,000/s | 120,000/s | Multi-stream: < 2 s |
| TPCC transaction stress test (1,000 warehouses, 500 concurrency) | tpmC: 300,000 | 100,000/s | 200,000/s | Multi-stream: < 2 s |
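To see why multi-stream replication matters for RPO, here is a back-of-the-envelope Python calculation on the Sysbench row. It assumes, purely for illustration, that the upstream produces about 120,000 row changes per second (reading the QPS figure as one row change per write statement) and that the single-stream RPS is a hard ceiling on the apply rate; the test report itself does not state either assumption.

```python
def backlog_rows(produce_rps: int, apply_rps: int, seconds: int) -> int:
    """Row changes waiting to be applied after `seconds`, starting from zero lag."""
    return max(0, produce_rps - apply_rps) * seconds


def drain_seconds(backlog: int, apply_rps: int) -> float:
    """Time to apply an existing backlog once the upstream stops writing."""
    return backlog / apply_rps


# Illustrative numbers taken from the Sysbench row, under the assumptions above.
backlog = backlog_rows(produce_rps=120_000, apply_rps=40_000, seconds=60)
print(backlog)                         # 4800000 rows behind after one minute
print(drain_seconds(backlog, 40_000))  # 120.0 seconds to catch up afterwards
```

With multi-stream replication applying the full 120,000 RPS, the same calculation gives no backlog, which is consistent with the sub-2-second latency reported.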

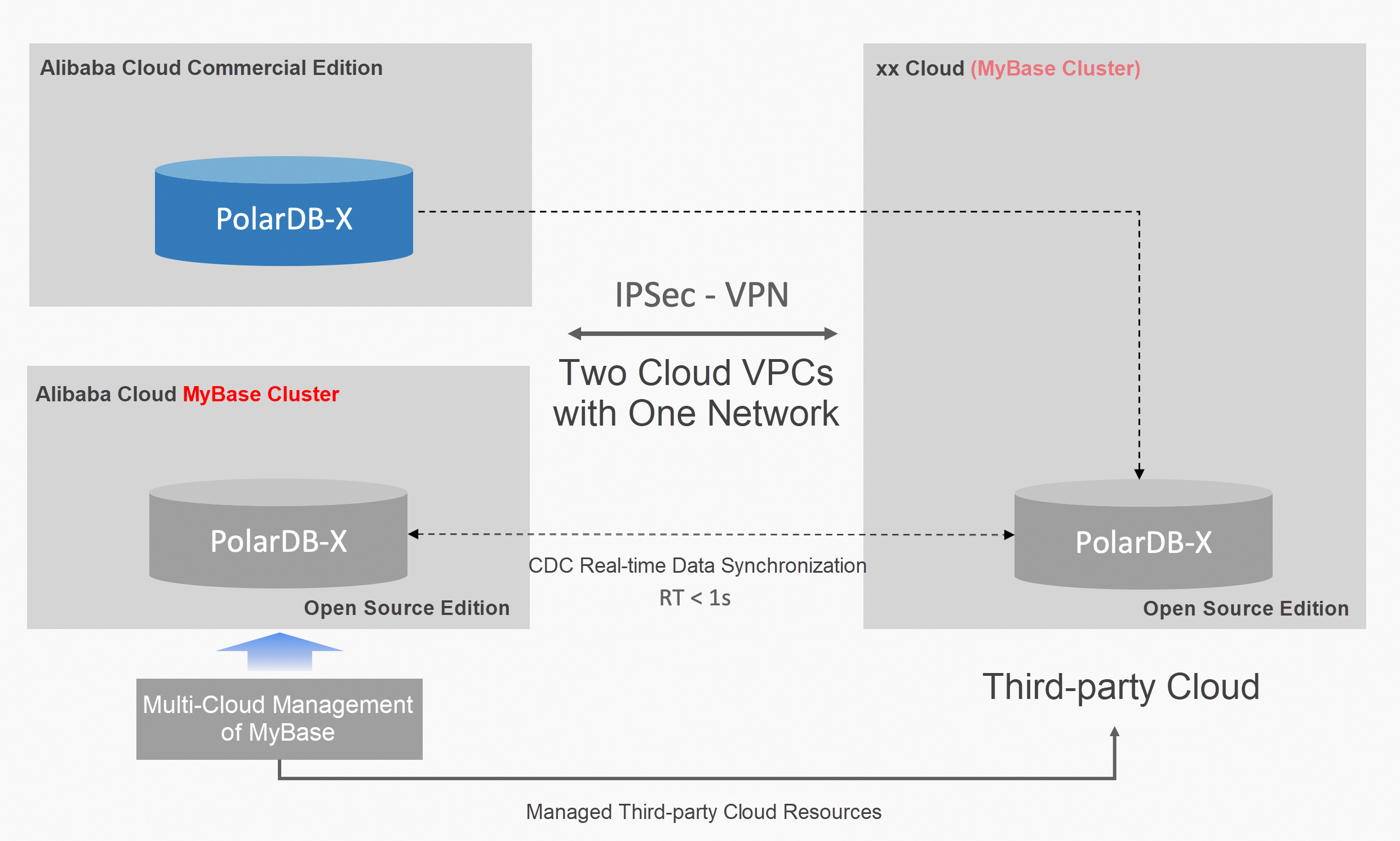

Alibaba Cloud provides MyBase, which lets you manage database instances deployed across multiple cloud providers and IDCs within a single MyBase space. With the multi-cloud management capabilities of MyBase, you can deploy databases for cross-cloud disaster recovery. Cross-cloud GDN for PolarDB-X based on MyBase is on the way, so readers interested in multi-cloud disaster recovery should stay tuned.

PolarDB-X's disaster recovery capabilities are always a work in progress. Going from cross-AZ to cross-region, and now to cross-cloud, is a significant step forward, but it is only one step on a continuous journey of improvement. We believe PolarDB-X's disaster recovery capabilities will keep improving and that it will evolve into a more native global database with global disaster recovery capabilities.
