By Shuashua

Open source PolarDB-X 2.4.1 has been released to enhance enterprise-level O&M capabilities. To meet the database O&M and data management requirements of database administrators (DBAs), PolarDB-X 2.4.1 provides new features such as dump and restoration of cloud backup, online DDL, database scaling, and TTL. This version comprehensively enhances the maintainability of PolarDB-X in multi-cloud and large-scale distributed deployment.

• In October 2021, Alibaba Cloud announced the open source release of PolarDB-X at the Apsara Conference. The core components of PolarDB-X such as the compute engine, storage engine, and log engine are open source. In addition, this version allows you to deploy PolarDB-X by using Kubernetes.

• In January 2022, PolarDB-X 2.0.0 was officially released to fix multiple issues and provide new features such as cluster scaling and compatibility with the binary log ecosystem. Maxwell and Debezium are supported for incremental log subscriptions.

• In March 2022, PolarDB-X 2.1.0 was officially released to enhance stability and improve compatibility within the cloud-native ecosystem. The new version is deployed in a three-node architecture based on the Paxos consensus algorithm.

• In May 2022, PolarDB-X 2.1.1 was officially released to support new features such as tiered storage for hot and cold data. This feature allows you to store business table data in different storage media based on data characteristics.

• In October 2022, PolarDB-X 2.2.0 was officially released. This version complies with distributed database financial standards and focuses on enterprise-level features and compatibility with domestic ARM platforms. The eight core features of PolarDB-X 2.2.0 improve universal applicability across multiple sectors such as finance, communications, and government administration.

• In March 2023, PolarDB-X 2.2.1 was officially released. This version further strengthens key capabilities at the production level based on the distributed database financial standards. This way, the database-oriented production environment is more accessible and secure.

• In October 2023, PolarDB-X 2.3.0 was officially released. PolarDB-X Standard Edition (centralized architecture) provides independent services for data nodes and supports the multi-Paxos protocol. PolarDB-X 2.3.0 includes a distributed transaction engine called Lizard that is fully compatible with MySQL.

• In April 2024, PolarDB-X 2.4.0 was officially released to support columnar storage. The Clustered Columnar Index (CCI) feature allows you to store the data of a table in both rows and columns. This meets the requirements of query acceleration in distributed systems and implements hybrid transaction/analytical processing (HTAP) based on the vectorization performed on compute nodes (CNs).

Open source PolarDB-X fully inherits the production-level stability of PolarDB-X Enterprise Edition. The physical formats of data files of the two editions are compatible. Therefore, you can use open source PolarDB-X to create backup databases for PolarDB-X Enterprise Edition.

PolarDB-X Operator V1.7.0 was released to restore PolarDB-X clusters from backup sets.

This feature can be used in the following scenarios:

• Multi-cloud disaster recovery: If your production instance is on Alibaba Cloud, you can use this feature to create a secondary instance of the production instance.

• Offline testing: If your PolarDB-X instance is on Alibaba Cloud, you can use this feature to perform testing on offline self-managed machines.

To restore data from a backup set, you need to import the backup set and create a restoration task. To run the tool used to import the backup set, you must add the required configuration files to the configuration directory of the tool. The following table describes the configuration files.

| Category | File name | Required | Description |
|---|---|---|---|
| Backup set metadata file | backupset_info.json | Yes | A JSON file that stores the metadata of the backup set. The information includes the instance topology and download URLs of backup files. |
| Configuration file of the open source storage media of the backup set | sink.json | Yes | A JSON file that stores the configurations of the storage media of the backup set. The information includes the type, address, and authentication key. |
| Configuration file of the tool used to import the backup set | filestream.json | No | A JSON file that stores the configurations of the tool used to import the backup set. You can specify the parallelism parameter to specify the import concurrency. Type: INT. Default value: 5. |

• To obtain the backup set metadata, call the DescribeOpenBackupSet operation and specify the following required parameters: RegionId, DBInstanceName, and RestoreTime. The complete backup set metadata file includes the physical files of the backup set and download URLs of incremental files.

• You can store backup sets by using SSH File Transfer Protocol (SFTP), MinIO, Amazon Simple Storage Service (Amazon S3), and Alibaba Cloud Object Storage Service (OSS). For more information about the metadata configurations of each storage method, see related documentation.
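The configuration directory described above can be prepared with a small helper. The following Python sketch is illustrative only: the file names and the `parallelism` parameter come from the table above, but the assumption that `parallelism` is a top-level key in filestream.json is not confirmed by this article, and the function name `prepare_config_dir` is hypothetical. The schemas of backupset_info.json and sink.json are not shown here, so the sketch only checks that those files are present.

```python
import json
from pathlib import Path

# Files that the import tool requires, according to the table above.
REQUIRED_FILES = ("backupset_info.json", "sink.json")


def prepare_config_dir(config_dir, parallelism=5):
    """Write filestream.json and return the required files that are still missing.

    Assumption: `parallelism` is a top-level key in filestream.json.
    """
    if not isinstance(parallelism, int) or parallelism < 1:
        raise ValueError("parallelism must be a positive integer")
    config_dir = Path(config_dir)
    config_dir.mkdir(parents=True, exist_ok=True)
    # filestream.json is optional; it is written here only to set the concurrency.
    (config_dir / "filestream.json").write_text(
        json.dumps({"parallelism": parallelism}, indent=2)
    )
    return [name for name in REQUIRED_FILES if not (config_dir / name).is_file()]
```

Calling `prepare_config_dir("/root/config", parallelism=8)` returns the names of the required files that you still need to place in the directory before you run the import tool.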

To dump a backup set, run the following command:

docker run -d -v /root/config:/config --network=host \
    --name=polardbx-backupset-importer \
    --entrypoint="/backupset-importer" \
    polardbx-opensource-registry.cn-beijing.cr.aliyuncs.com/polardbx/backupset-importer:v1.7.0 \
    -conf=/config

The command creates a backup dump task that automatically downloads the backup set based on its metadata and uploads the downloaded backup set to the specified backup storage media.

Alternatively, you can use PolarDB-X Operator to create an instance based on an imported backup set by using Kubernetes.

You can efficiently create a PolarDB-X backup disaster recovery environment in self-managed data centers and multi-cloud Elastic Compute Service (ECS) environments by using the feature to dump and restore cloud backup sets.

Changing column types during database O&M is troublesome. In traditional MySQL databases, external tools such as pt-osc and gh-ost are generally required to perform lock-free DDL operations.

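A great number of open source tools are available to perform lock-free DDL operations. You can use these tools to change column types smoothly without locking tables. The tools include gh-ost from GitHub and pt-osc from Percona Toolkit. Both tools follow the same general approach: create a shadow table with the new schema, copy the existing data into it, synchronize the incremental changes made during the copy, and then swap the shadow table with the original table.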

gh-ost and pt-osc use different methods to synchronize incremental data: gh-ost reads and replays binary logs and pt-osc double writes incremental data by using triggers. However, these solutions still have limits.

For example, gh-ost uses the same thread to replay incremental data and copy existing data. This is slow but less intrusive. During peak hours, gh-ost cannot replay binary logs fast enough to keep up with the incoming writes. As a result, the DDL operation cannot be completed.

Trigger-based pt-osc may incur deadlocks. If a DDL operation is paused, the corresponding triggers may not be canceled.
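The trigger-based approach can be illustrated with a toy example. The following Python sketch uses the standard `sqlite3` module, not MySQL or PolarDB-X, and is not the code of pt-osc or gh-ost. It only shows the general shadow-table pattern: double write new rows through a trigger, copy existing rows in chunks, and then swap the tables. The table names, the function name, and the `between_chunks` hook (which simulates concurrent business writes) are hypothetical. A real tool also installs UPDATE and DELETE triggers.

```python
import sqlite3


def change_column_type_online(conn, chunk_size=2, between_chunks=None):
    """Change column b of table t1 to BIGINT by using a shadow table."""
    cur = conn.cursor()
    # 1. Create the shadow table with the new column type.
    cur.execute("CREATE TABLE _t1_new (a INTEGER PRIMARY KEY, b BIGINT)")
    # 2. Double write rows that are inserted while the copy is running.
    cur.execute(
        "CREATE TRIGGER _t1_sync AFTER INSERT ON t1 BEGIN "
        "INSERT OR REPLACE INTO _t1_new (a, b) VALUES (NEW.a, NEW.b); END"
    )
    # 3. Copy existing rows in primary key order, one chunk at a time.
    last_key = -(2 ** 63)  # smallest SQLite integer
    while True:
        rows = cur.execute(
            "SELECT a, b FROM t1 WHERE a > ? ORDER BY a LIMIT ?",
            (last_key, chunk_size),
        ).fetchall()
        if not rows:
            break
        # Rows that the trigger already wrote are newer, so keep them.
        cur.executemany("INSERT OR IGNORE INTO _t1_new (a, b) VALUES (?, ?)", rows)
        last_key = rows[-1][0]
        if between_chunks is not None:
            between_chunks(conn)  # simulated concurrent writes
    # 4. Remove the trigger and swap the tables.
    cur.execute("DROP TRIGGER _t1_sync")
    cur.execute("ALTER TABLE t1 RENAME TO _t1_old")
    cur.execute("ALTER TABLE _t1_new RENAME TO t1")
    conn.commit()
```

Because every business write also fires the trigger, the extra write load grows with traffic, which is one reason why trigger-based tools slow down and may contend for locks under high concurrency.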

PolarDB-X 2.4.1 provides the kernel-native lock-free DDL feature that better supports column type changes. This feature focuses on incremental data synchronization, intelligent throttling, and multi-version metadata switching.

PolarDB-X supports a new DDL algorithm named Online Modify Column (OMC).

Syntax:

ALTER TABLE tbl_name
    alter_option [, alter_option] ...
    ALGORITHM = OMC

Examples:

# Create a test table.

CREATE TABLE t1(a int primary key, b tinyint, c varchar(10)) partition by key(a);

# Modify the types of column b and column c in the t1 table.

ALTER TABLE t1 MODIFY COLUMN b int, MODIFY COLUMN c varchar(30), ALGORITHM=OMC;

# Modify the name and type of column b in the t1 table and add column e of the BIGINT type.

ALTER TABLE t1 CHANGE COLUMN b d int, ADD COLUMN e bigint AFTER d, ALGORITHM=OMC;In this example, a test is run to compare the complete performance of changing column types online between OMC, gh-ost, and pt-osc based on the oltp_read_write benchmark in Sysbench by running the following command:

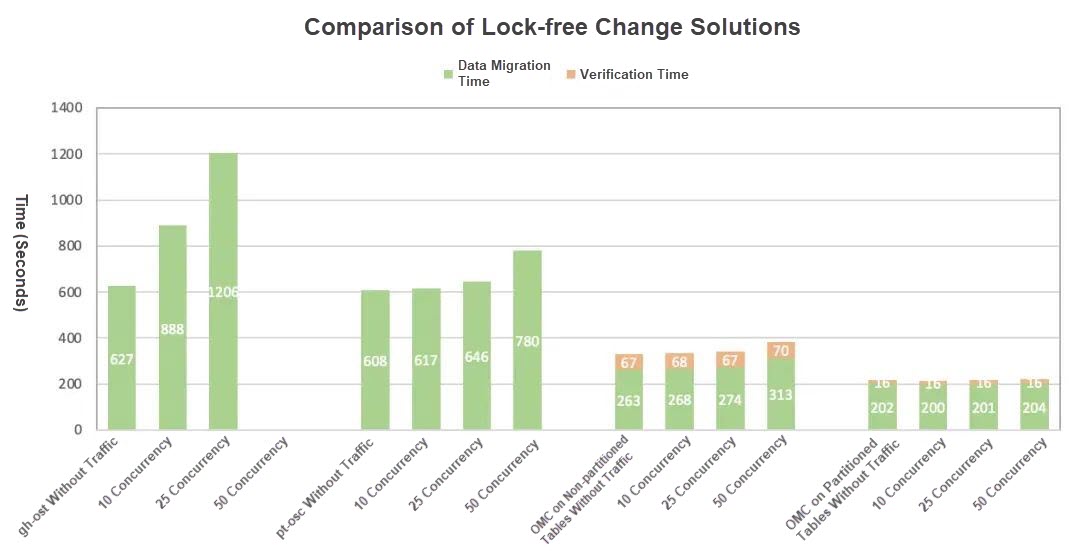

ALTER TABLE MODIFY COLUMN `sbtest1` MODIFY COLUMN k bigint;The following figure shows the test result.

• The time required by gh-ost to perform online DDL operations increases significantly as the number of concurrent operations rises. When concurrency reaches 50, gh-ost fails to replay incremental changes in sync with the operations. As a result, online DDL operations cannot be completed.

• When concurrency reaches 50, pt-osc can still complete online DDL operations within an extended period of time.

• PolarDB-X shows high stability and efficiency in performing lock-free column type changes on partition tables. The time required by OMC is less affected by traffic and is one-third of the time required by pt-osc.

• Compared to pt-osc and gh-ost, PolarDB-X shows significantly improved efficiency with a substantial time reduction in lock-free column type changes on non-partitioned tables, even though the time required increases as the traffic rises.

During the change operations, the transactions per second (TPS) performance measured by the oltp_read_write benchmark is affected due to resource competition. The following table lists the decrease ratio of TPS.

| Concurrency | gh-ost | pt-osc | OMC on non-partitioned tables | OMC on partition tables |
|---|---|---|---|---|
| 10 | 3% | 11% | 15% | 4% |
| 25 | 3% | 3% | 7% | 3% |
| 50 | N/A | 5% | 11% | 6% |

In scenarios of managing non-partitioned tables, PolarDB-X shows a slightly more significant temporary impact on traffic although PolarDB-X requires less time than gh-ost and pt-osc to perform lock-free column type changes. This reflects the limits of PolarDB-X under high-concurrency operations on a single data node (DN).

In scenarios of managing partition tables, PolarDB-X shows excellent performance in performing lock-free column type changes. The process requires less time and the impact on the traffic is comparable to that of gh-ost. This highlights the benefits of PolarDB-X on both speed and stability.

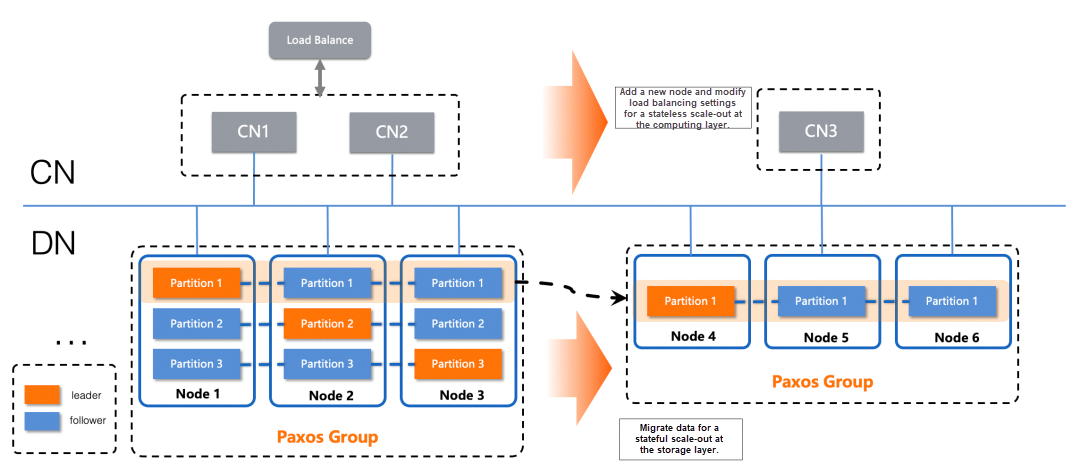

An important capability of distributed databases is horizontal linear scaling, which enhances overall performance by adding distributed nodes. This involves scaling databases out or in.

Scaling a distributed database is essentially a matter of migrating data shards. The migration process combines the migration of full and incremental data. PolarDB-X 2.4.1 upgrades the scaling capability with a new full migration method that moves from the previous default, logical data migration, to physical file-based migration.

For example, the full migration of logical data is implemented by using a TableScan operator to read all rows of the data shards that need to be migrated. The data is then written into the specified node by using an Insert operator. This method has the following limits:

• The leader needs to be read to ensure data migration consistency. Although only TableScan-based read operations are performed, they still incur CPU overhead on the leader.

• Although batch processing optimization can be applied when data is written into the specified node by using an Insert operator, logical iteration is still required. This causes significant CPU overheads and lowers migration efficiency.

• Logical migration has a low degree of parallelism and therefore does not make full use of the multiple nodes in a distributed system. For example, if you want to scale out a database from 50 to 75 nodes, the migration process may take an extended period of time.

PolarDB-X 2.4.1 provides a new full migration method by using physical files. This feature has the following benefits:

• Data is read by accessing the followers to ensure that online business is not affected. Binary-level reading is implemented by directly accessing physical files without logical parsing.

• The data writing process is also based on physical files. During this process, the binary stream of data read from the source is directly written to the destination node. This is similar to copying a binary physical file. The CPU only handles network forwarding and disk I/O without the need for logical iteration.

• A higher degree of parallelism is possible because the copying of physical files can be split into finer-grained tasks. A distributed scaling task can be divided into multiple subtasks to copy physical files. The subtasks are then scheduled to multiple nodes based on massively parallel processing (MPP) to implement distributed scaling.
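The idea of dividing a physical file copy into independent subtasks can be sketched on a single machine. The following Python example is a simplified illustration under stated assumptions, not the PolarDB-X implementation: it splits one file into byte ranges and copies the ranges in parallel threads without parsing the content. The function names, the chunk size, and the use of threads in place of MPP scheduling across nodes are all hypothetical.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def split_ranges(size, chunk):
    """Divide a file of `size` bytes into (offset, length) subtasks."""
    return [(off, min(chunk, size - off)) for off in range(0, size, chunk)]


def copy_range(src, dst, offset, length):
    """Copy one byte range as an opaque binary stream, with no logical parsing."""
    with open(src, "rb") as fin, open(dst, "r+b") as fout:
        fin.seek(offset)
        fout.seek(offset)
        fout.write(fin.read(length))


def parallel_copy(src, dst, chunk=1 << 20, workers=4):
    """Copy src to dst by running the range subtasks in parallel."""
    size = os.path.getsize(src)
    # Pre-allocate the destination so that each subtask can write at its own offset.
    with open(dst, "wb") as fout:
        fout.truncate(size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [
            pool.submit(copy_range, src, dst, off, length)
            for off, length in split_ranges(size, chunk)
        ]
        for future in futures:
            future.result()  # re-raise any error from a subtask
```

Each subtask touches only its own byte range, so the subtasks need no coordination with each other. This independence is what makes it possible to schedule them across many nodes.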

In various testing scenarios, physical file copy and migration speed is approximately 5 to 10 times faster than logical migration. The testing scenarios include scenarios that involve binary large objects (BLOBs), wide tables, Sysbench benchmark, and TPC Benchmark C (TPC-C). Sample code:

# Enable physical replication.

set global physical_backfill_enable=true;

# Disable physical replication.

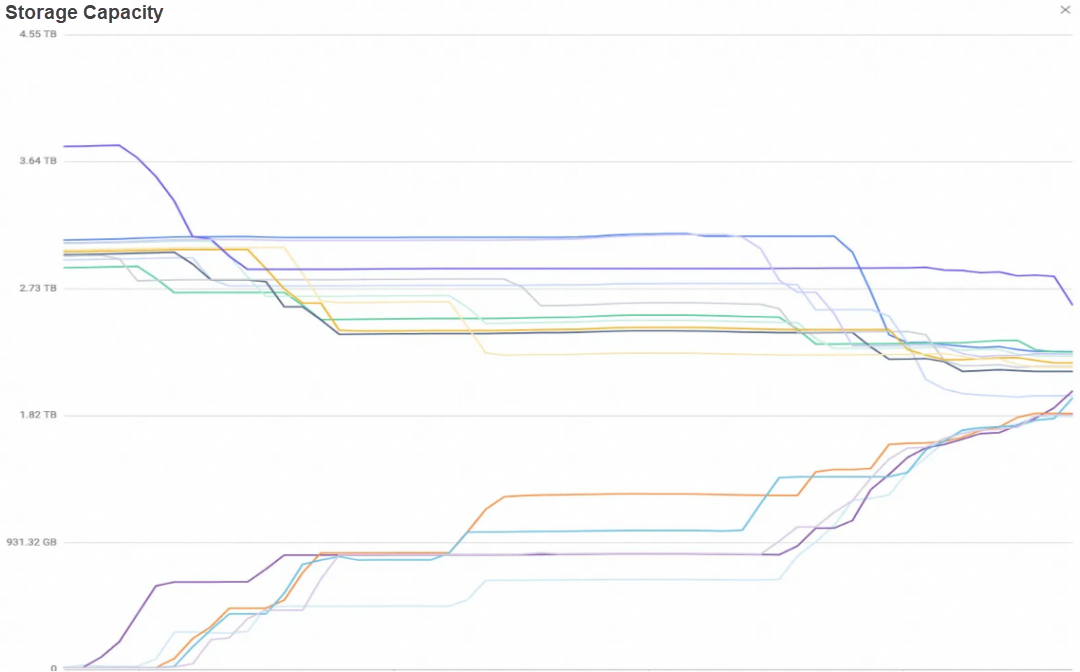

set global physical_backfill_enable=false;In addition, a large-scale distributed scaling experiment was conducted. The experiment involves the following instance specifications: 35 CNs, each of which has 8 CPU cores and 32 GB of memory, 70 DNs, each of which has 8 CPU cores and 32 GB of memory, and TPC-C with 500,000 warehouses that have approximately 45 TB of data. The following results are returned:

• When 70 DNs are scaled in to 40 DNs, 19.53 TB of data is migrated in 80 minutes and 5 seconds. The overall migration speed is 4,096 MB/s, with an average of 135.6 MB/s per node.

• When 40 DNs are scaled out to 70 DNs, 17.51 TB of data is migrated in 68 minutes and 6 seconds. The overall migration speed is 4,439.4 MB/s, with an average of 149.8 MB/s per node.

During the scaling process, the resources that core CN and DN components consume are below the specified threshold. The CPU, memory, and IOPS are used as expected.

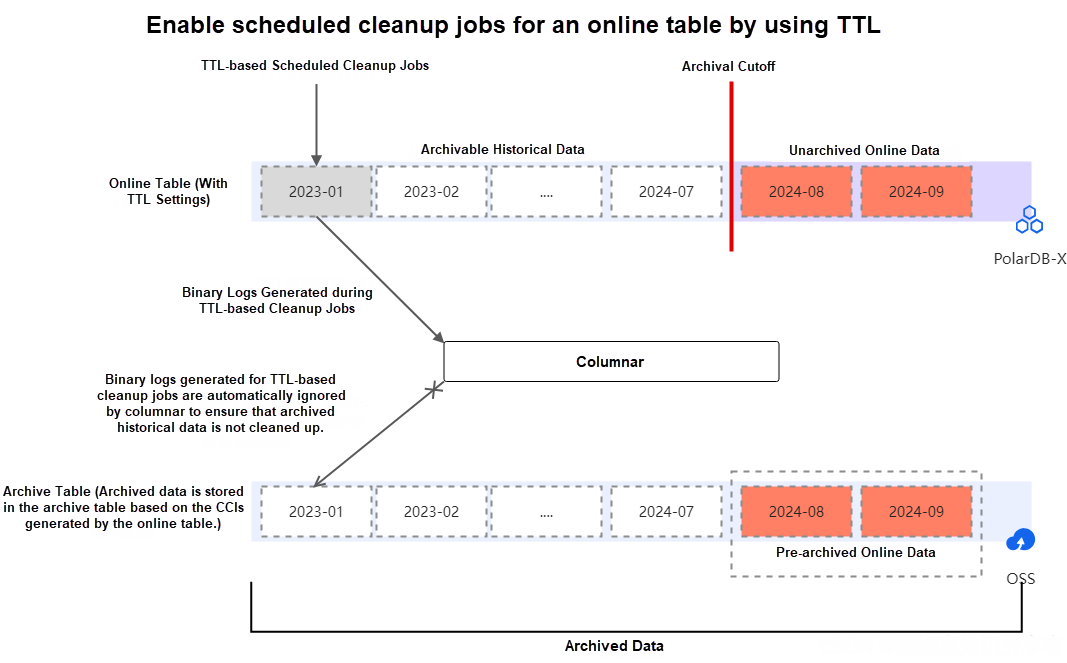

Many enterprises want to retain only the most recent and frequently accessed data (hot data). For old data (cold data) that is infrequently accessed and continuously accumulates, enterprises require a solution that can meet the following requirements:

• Clear cold data at regular intervals.

• Store cold data at lower costs.

• Allow the use of archived cold data for analytical and statistical purposes.

PolarDB-X provides a new version of the TTL feature that can be used to clear and archive expired data at the row and partition levels. The archived data can be stored in OSS by using CCIs to implement more flexible TTL solutions. You can perform the following operations to implement a TTL solution:

• Configure a TTL strategy for an existing data table. For example, you can specify the time column on which the TTL is based and the data retention period.

• Define rules to perform cleanup jobs. For example, you can specify that cleanup jobs are manually run or automatically run as scheduled and the status of cleanup jobs is reported.

• Define rules to archive data. For example, you can specify whether to only clear expired data or archive expired data for storage.

Configure a TTL strategy. Example:

# Configure a dynamic TTL for an existing data table.
ALTER TABLE `orders_test`
MODIFY TTL
SET
TTL_EXPR = `date_field` EXPIRE AFTER 2 MONTH TIMEZONE '+08:00';

In this example, the date_field column in the orders_test table is the time column, the data retention period is two months, and the time zone of the scheduled cleanup job is UTC+8.
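To make the retention rule concrete, the following Python sketch computes which rows would count as expired under a two-month retention period evaluated in UTC+8. It is a simplified model, not the PolarDB-X implementation: the function names are hypothetical, and the exact boundary semantics (for example, whether the cutoff is rounded to a day or partition boundary) are an assumption.

```python
import calendar
from datetime import datetime, timedelta, timezone

UTC_PLUS_8 = timezone(timedelta(hours=8))


def subtract_months(ts, months):
    """Return ts moved back by `months` calendar months, clamping the day."""
    index = ts.year * 12 + (ts.month - 1) - months
    year, month0 = divmod(index, 12)
    month = month0 + 1
    day = min(ts.day, calendar.monthrange(year, month)[1])
    return ts.replace(year=year, month=month, day=day)


def is_expired(date_field, now, months=2, tz=UTC_PLUS_8):
    """Assumed rule: a row is expired if date_field is older than the cutoff.

    Both arguments must be timezone-aware datetimes.
    """
    cutoff = subtract_months(now.astimezone(tz), months)
    return date_field.astimezone(tz) < cutoff
```

For example, with `now` set to 12:00 on April 30, 2024 (UTC+8), the cutoff in this model is 12:00 on February 29, 2024, because February has no 30th day.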

Define rules to run cleanup jobs. Examples:

• Manually run cleanup jobs:

# Manually run a cleanup job.
ALTER TABLE `orders_test` CLEANUP EXPIRED DATA;

• Automatically run cleanup jobs as scheduled:

# Specify that the cleanup jobs are scheduled to run at 02:00 every day.
ALTER TABLE `orders_test` MODIFY TTL
SET TTL_JOB = CRON '0 0 2 */1 * ? *' TIMEZONE '+08:00';

Define rules to archive data. Example:

# Create a table to store archived data.
CREATE TABLE `orders_test_archive`
LIKE `orders_test`
ENGINE = 'Columnar' ARCHIVE_MODE = 'TTL';

Note:

• The ENGINE parameter must be set to Columnar, which indicates that columnar storage is used.

• All DDL operations performed on the primary table are captured. For example, if a column is added to the primary table, the column is automatically added to the table that stores the archived data. This ensures that subsequent archiving tasks are not interrupted.

For more information about how TTL works, see the following articles:

• Overview: https://www.alibabacloud.com/help/en/polardb/polardb-for-xscale/principle-overview

• Definitions of TTL tables: https://www.alibabacloud.com/help/en/polardb/polardb-for-xscale/definition-and-creation-of-ttl-table

• Clean up expired data of TTL tables: https://www.alibabacloud.com/help/en/polardb/polardb-for-xscale/ttl-table-expired-data-cleansing

• Archive table statements: https://www.alibabacloud.com/help/en/polardb/polardb-for-xscale/archive-table-syntax-description

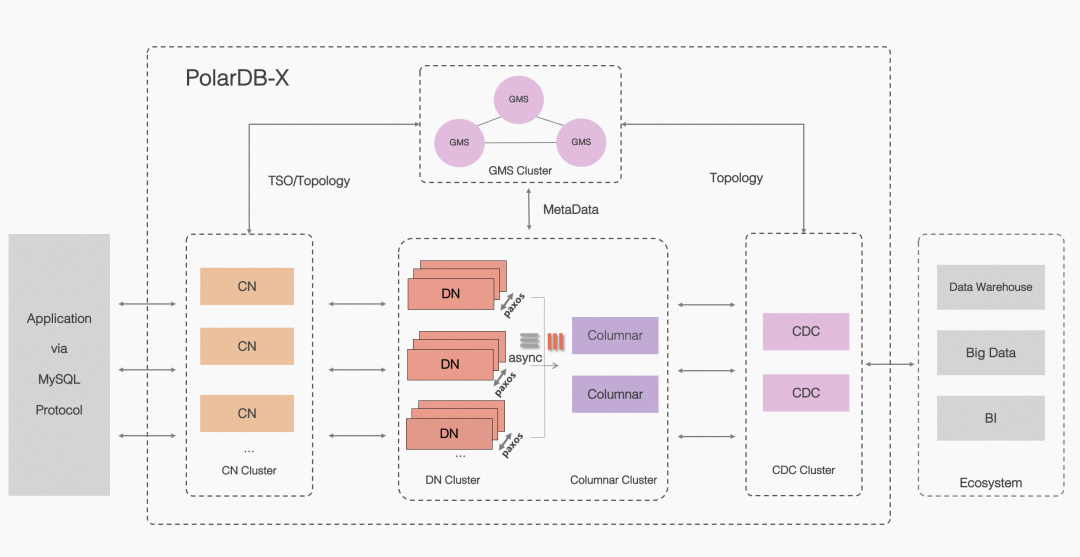

PolarDB-X uses a shared-nothing system architecture to decouple storage and computing resources. PolarDB-X consists of the following core components to support financial-grade high availability, transparent distribution, HTAP, and integration of centralized and distributed architectures.

CNs are the entry point of the system. These nodes are stateless and include modules such as the SQL parser, optimizer, and executor. CNs are responsible for distributed data routing, computation, dynamic scheduling, distributed transaction coordination based on the Two-Phase Commit (2PC) protocol, and global secondary index maintenance. CNs provide enterprise-level features such as SQL throttling and the three-role mode.

DNs are responsible for data persistence. DNs ensure data durability and provide strong consistency guarantees based on the majority-based Paxos protocol. DNs use multiversion concurrency control (MVCC) to maintain the visibility of distributed transactions.

Global Meta Service (GMS) is responsible for maintaining globally consistent system metadata such as table metadata, schema metadata, and statistics metadata. GMS manages security-related information such as user accounts and permissions. GMS provides the Timestamp Oracle (TSO) service.

Change data capture (CDC) allows you to subscribe to incremental binary logs and provides the primary/secondary replication feature for MySQL databases.

Columnar provides persistent CCIs, consumes the binary logs of distributed transactions in real time, and builds CCIs based on OSS to meet the requirements of real-time updates. When combined with CNs, columnar can provide snapshot-consistent query capabilities for CCIs.
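The 2PC-based coordination that CNs perform can be illustrated with a toy model. The following Python sketch shows only the textbook protocol: every participant must vote yes in the prepare phase before any participant commits. It is not the PolarDB-X implementation, and the class and function names are hypothetical. Timestamps from TSO, durable logging, and failure recovery are omitted.

```python
class Participant:
    """A stand-in for a DN that takes part in a distributed transaction."""

    def __init__(self, name, can_commit=True):
        self.name = name
        self.can_commit = can_commit
        self.state = "init"

    def prepare(self):
        # Phase 1: vote on whether the local changes can be committed.
        self.state = "prepared" if self.can_commit else "aborted"
        return self.can_commit

    def commit(self):
        self.state = "committed"

    def rollback(self):
        self.state = "aborted"


def two_phase_commit(participants):
    """Coordinator logic: commit only if every participant prepares."""
    prepared = []
    for participant in participants:
        if participant.prepare():
            prepared.append(participant)
        else:
            # One "no" vote aborts the transaction on all prepared participants.
            for other in prepared:
                other.rollback()
            return False
    # Phase 2: all votes are "yes", so the decision is to commit everywhere.
    for participant in participants:
        participant.commit()
    return True
```

In this model the coordinator holds no data of its own, which mirrors the stateless role of CNs described above.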

PolarDB-X provides multiple deployment modes. You can select a deployment mode based on your business requirements. The following table describes the deployment modes. For more information, visit https://github.com/polardb/polardbx-sql

| Deployment mode | Description | Prerequisites | Required tool |
|---|---|---|---|
| RPM Package Manager (RPM)-based deployment | You can manually deploy a PolarDB-X database by installing an RPM package without the need to install other components. | The RPM package is downloaded and installed. | RPM |
| PXD-based deployment | PXD is a tool developed by Alibaba Cloud that allows you to deploy a PolarDB-X database by configuring a YAML file. | PXD is downloaded. | Python 3 and Docker |
| Kubernetes-based deployment | You can deploy a PolarDB-X database based on Kubernetes Operator. | A Kubernetes cluster is created. | Kubernetes and Docker |

PolarDB-X Operator is developed based on Kubernetes Operator. PolarDB-X Operator V1.7.0 is officially released to provide deployment and O&M capabilities for PolarDB-X databases. We recommend that you use this deployment mode in the production environment. For more information, visit https://doc.polardbx.com/zh/operator/

PolarDB-X Operator V1.7.0 adapts to multi-cloud deployment capabilities to provide new features such as restoration from backup sets that can be stored by using Amazon S3. PolarDB-X Operator V1.7.0 also integrates commercial, open source, and multi-cloud systems. For more information about the release notes, visit https://github.com/polardb/polardbx-operator/releases/tag/v1.7.0

PolarDB-X 2.4.1 enhances enterprise-level O&M capabilities and provides more valuable features on database O&M and data management for DBAs. For more information about the release notes, visit https://github.com/polardb/polardbx-operator/releases/tag/v1.7.0

On September 30, 2024, China Information Technology Security Evaluation Center (CNITSEC) issued Notice on the Safety and Reliability Assessment Results (2024 No.2), which indicates that PolarDB-X 2.0 is one of the first distributed databases to pass the safety and reliability assessment. For more information, visit http://www.itsec.gov.cn/aqkkcp/cpgg/202409/t20240930_194299.html

PolarDB-X uses a shared-nothing system architecture to decouple storage and computing resources and integrates centralized and distributed architectures. PolarDB-X instances are available in two editions: Standard Edition (centralized architecture) and Enterprise Edition (distributed architecture). PolarDB-X provides features such as financial-grade high availability, distributed horizontal scaling, hybrid loads, low-cost storage, and high elasticity. PolarDB-X is designed to be compatible with the open source MySQL ecosystem and to provide high-throughput, large-storage, low-latency, scalable, and high-availability database services in the cloud era.
