By Sun Lili, senior technical expert at Alibaba Cloud

This article is a translation of a speech on how to quickly implement a data warehouse and lake house based on MaxCompute.

This article consists of the following four parts:

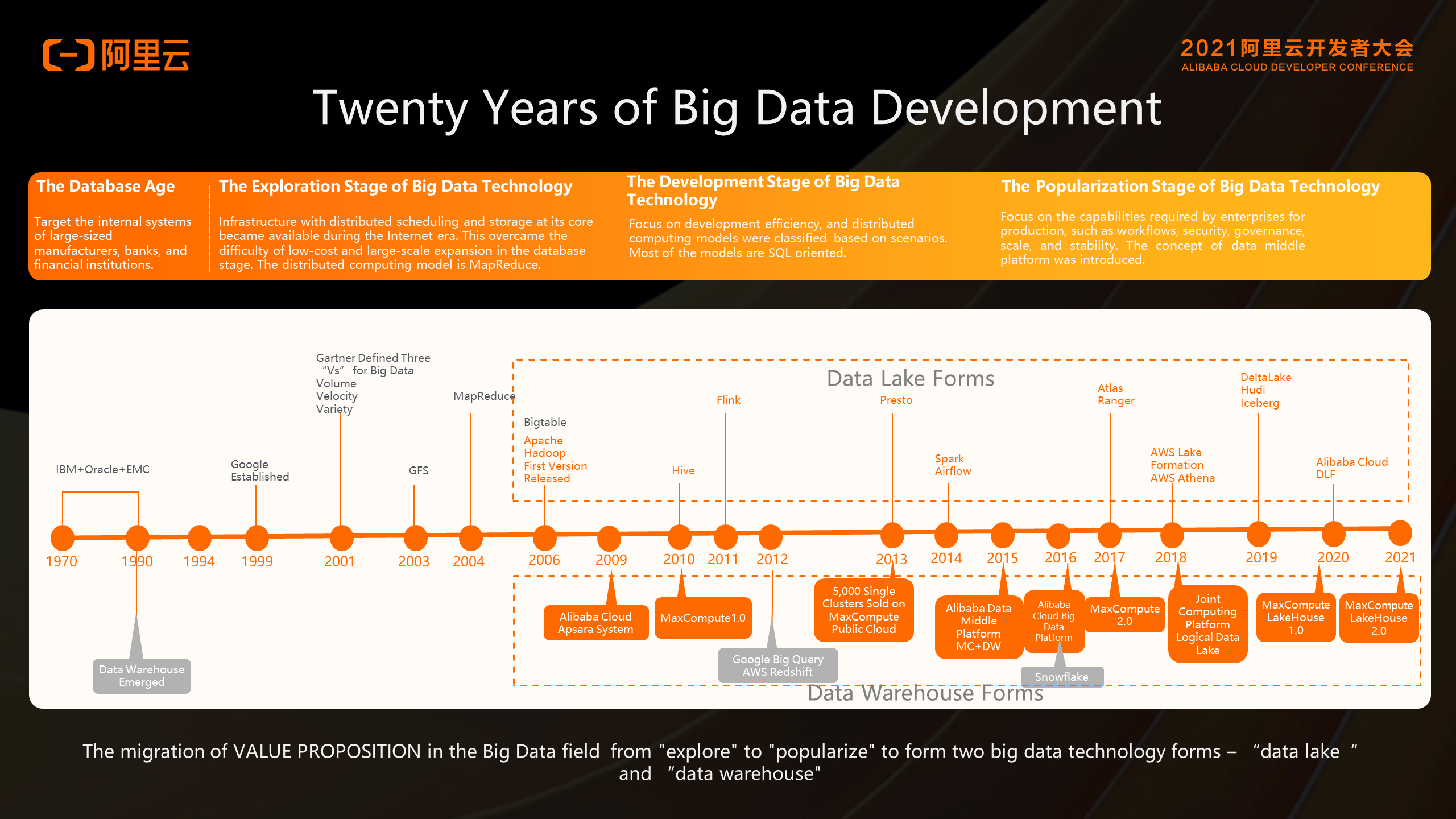

At present, many enterprises do not know whether their existing big data system is a data lake or a data warehouse. So let's review the past 20 years of big data technology development. Through this analysis, we hope to clarify what data lakes and data warehouses are, why they came into being, and the background of the lake house.

Across the computer science and technology field, the development of data processing technology can be divided into four stages: the database stage, the exploration stage of big data technology, the development stage of big data technology, and the big data popularization stage.

The database stage lasted from the 1970s to the 1990s. This stage was the golden age of single-machine databases. The database system was oriented to operations, transactions, and online business systems. In fact, the concept of the data warehouse had already emerged in the 1990s. The data warehouse was oriented towards the analysis and exploration of full historical data. However, the overall amount of data at that time was not large, so extensions of database technology could meet the needs of the data warehouse.

Around 2000, with the explosion of Internet technology, the big data era began. At this stage, traditional database technology could no longer meet the requirements of massive data processing. As we all know, Google's three papers on distributed storage, scheduling, and computing laid the foundation for big data technology as a whole. In 2006, the Hadoop system emerged. Alibaba developed the Apsara system in 2009, and leading companies such as Microsoft also developed excellent distributed systems. In this phase, big data technology was all about making it possible to process large volumes of data.

Around 2010, big data entered a booming stage, in which the goal was for big data technology to move from usable to easy to use. A series of SQL-based engines appeared, including engines derived from the Hadoop system, such as Hive, Flink, and Presto. HDFS gradually became the unified storage, while ORC and Parquet became the open file formats. The result was a system with many open engines, similar to the data lake systems we discuss today. At this stage, Hadoop was in essence a data lake system. What, then, is the essence of a data lake? It is unified storage that can hold raw data and support multiple computing paradigms.

During the same period, Alibaba Cloud released MaxCompute, based on the Apsara system, Google released BigQuery, and AWS released Redshift. These systems are called the cloud data warehouses of the big data era. How does a cloud data warehouse differ from the Hadoop system described above? A cloud data warehouse does not expose the file system. Instead, it exposes a description of the data in the form of tables or views. The storage engine and the computing engine are encapsulated inside the system, so they can be deeply optimized without users noticing. From this stage on, big data technology began to split into lakes and warehouses.

Since around 2015, we have been in the big data popularization stage. There are two trends at this stage. The first is that big data technology focuses not only on scale and performance, but even more on enterprise-level capabilities, such as data security, data governance, stability, and low cost. Alibaba Cloud has built a data middle platform with many Alibaba characteristics based on MaxCompute. In the open-source ecosystem, projects such as Atlas and Ranger were developed, focusing on lineage, governance, and security.

The second trend is that, with the development of AI, IoT, and cloud-native technologies, the demand for unstructured data processing is growing, and cloud-based object storage is increasingly used as unified storage. The Hadoop system is gradually changing from using HDFS as the unified storage to using cloud storage, such as S3 and OSS, as the unified storage of the data lake. At the same time, many data lake formation products, such as AWS Lake Formation and Alibaba Cloud DLF, have emerged. On the warehouse side, we are also pushing forward integration with the data lake through external tables, so that federated computing can be performed on data in the lake.

Over these 20 years, both lake and warehouse systems have emerged and matured as big data technologies evolved.

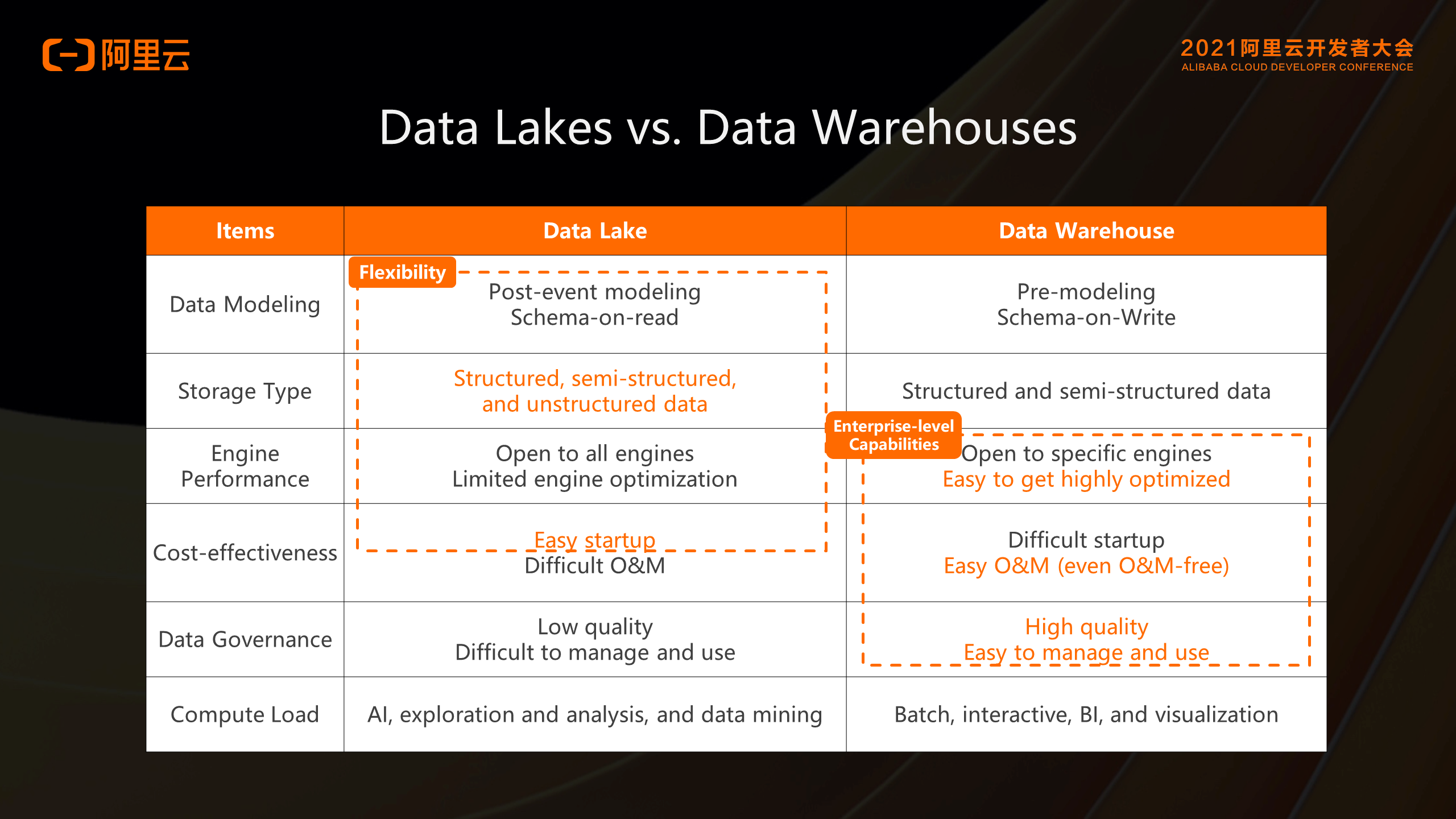

The following figure shows the differences between a data lake and a data warehouse.

Generally, a data lake is a loosely coupled system that is lenient about what goes in and what comes out, whereas a data warehouse is a tightly coupled system with strict input and output rules. In a data lake, data is produced first and used later. Structured, semi-structured, and unstructured data are all stored, and modeling happens after the fact. A data lake provides a standard set of open interfaces so that engines can be attached like plug-ins, which makes it open to all engines. Note that because engines are plugged in, computing and storage are actually two independent systems. They do not understand each other's internals, so deep optimization is not possible and engines can only be optimized in a limited way.

A data lake is easy to start. However, as data grows, a series of governance problems emerge, making O&M difficult in later stages. This is because the data lake does not enforce strong schema consistency, which leads to weak data governance and makes the data hard to manage. Since data in the lake is produced first and used later, a data lake is better suited to open-ended problems, such as exploratory analysis, scientific computing, and data mining.

A data warehouse sits at the opposite end of the spectrum. It is a strict system, so data must be modeled in advance. Data is then transformed and cleansed before it enters the warehouse, and the stored data is structured or semi-structured. The data warehouse is a relatively closed, self-contained system that is open only to specific engines. Precisely because of this, its computing engine, storage engine, and metadata can be deeply and vertically optimized to achieve excellent performance.

A data warehouse is difficult and costly to start because data can only be loaded after it has been modeled. However, once the data is in the warehouse, it is of high quality and easy to govern. The overall cost then falls, and the data may even become O&M-free. The data warehouse enforces strong schema consistency, so data quality is high and the data is easy to use. The data warehouse is therefore naturally suited to workloads such as offline computing, interactive computing, BI, and visualization.
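The contrast between modeling in advance and modeling after the fact can be illustrated with a small sketch. This is a hypothetical illustration, not MaxCompute or Hadoop code: `WAREHOUSE_SCHEMA`, `write_to_warehouse`, `write_to_lake`, and `read_from_lake` are invented names. The warehouse path rejects a non-conforming record at write time, while the lake path stores raw text and applies a schema only when the data is read.

```python
import json

# Hypothetical schema for illustration: column name -> expected Python type.
WAREHOUSE_SCHEMA = {"user_id": int, "amount": float}


def write_to_warehouse(table, record):
    """Schema-on-write: validate before the record is stored."""
    if set(record) != set(WAREHOUSE_SCHEMA):
        raise ValueError(f"columns {sorted(record)} do not match the schema")
    for column, expected in WAREHOUSE_SCHEMA.items():
        if not isinstance(record[column], expected):
            raise ValueError(f"column {column!r} must be {expected.__name__}")
    table.append(record)


def write_to_lake(store, raw_line):
    """Schema-on-read: store the raw line as-is, with no checks."""
    store.append(raw_line)


def read_from_lake(store):
    """Apply the schema at read time; return parsed rows and rejected lines."""
    rows, rejected = [], []
    for line in store:
        try:
            record = json.loads(line)
            rows.append({"user_id": int(record["user_id"]),
                         "amount": float(record["amount"])})
        except (ValueError, KeyError, TypeError):
            rejected.append(line)
    return rows, rejected


warehouse, lake = [], []
write_to_warehouse(warehouse, {"user_id": 1, "amount": 9.5})
try:
    # user_id is a string here, so the write is refused up front.
    write_to_warehouse(warehouse, {"user_id": "2", "amount": 3.0})
    bad_write_rejected = False
except ValueError:
    bad_write_rejected = True

write_to_lake(lake, '{"user_id": 1, "amount": "9.5"}')
write_to_lake(lake, "not json at all")  # accepted now, discovered later
rows, rejected = read_from_lake(lake)
```

In this sketch the bad record never reaches the warehouse, whereas the lake accepts everything and the malformed line only surfaces when someone reads it. That mirrors the trade-off described above: a cheap start on the lake side, and governance effort deferred to later.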

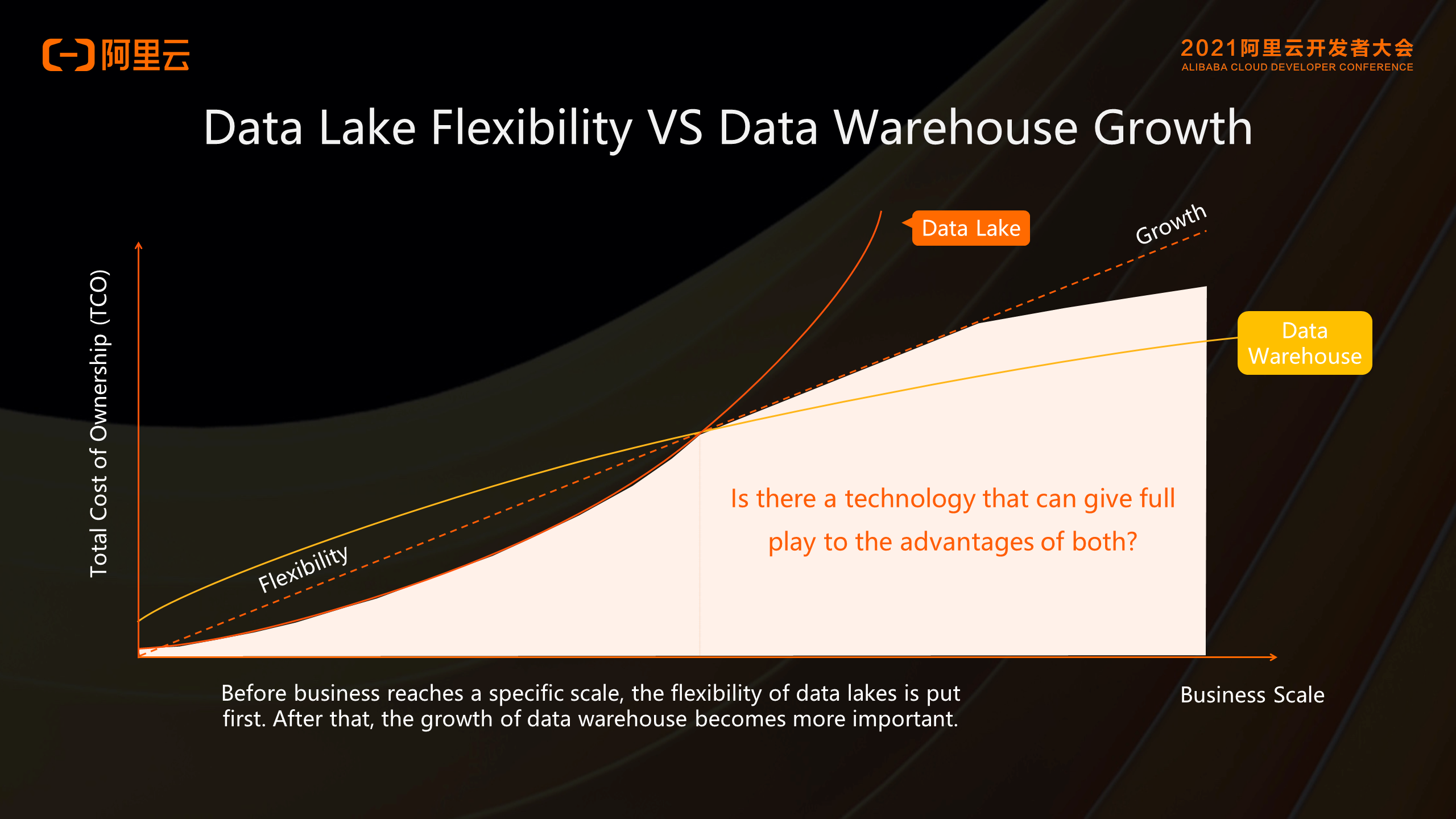

In general, a data lake is more flexible, and a data warehouse offers more enterprise-level capabilities. What does this mean for enterprises? The following figure illustrates the total cost of ownership (TCO) of data lakes vs. data warehouses based on the scale of a business.

The x-axis represents the business scale of an enterprise, and the y-axis represents the cost for an enterprise to build a big data system. During the startup period, the business scale is relatively small. The entire process from data generation to consumption is a stage of exploration and innovation. At this stage, a data lake is easy to start and relatively cheap. However, as the business grows, it involves more and more employees and departments.

Therefore, the requirements for data quality management, permission control, and cost control become higher and higher. With a data lake, the cost increases exponentially. In this case, you can use a data warehouse to control costs and manage data quality. As shown in the preceding figure, data lakes and data warehouses play critical roles in different phases of an enterprise. Is there any technology or architecture that can combine the advantages of both?
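The shape of the two cost curves described above can be mimicked with a toy model. This is only a sketch: the functional forms (exponential for the lake, fixed cost plus linear growth for the warehouse) and every constant are arbitrary assumptions chosen to reproduce the shapes, not measurements from any real system.

```python
import math


def lake_cost(scale):
    """Low entry cost, but governance overhead compounds with scale (assumed)."""
    return 1.0 * math.exp(0.5 * scale)


def warehouse_cost(scale):
    """High up-front modeling cost, then roughly linear growth (assumed)."""
    return 10.0 + 1.0 * scale


def crossover(max_scale=20):
    """Return the first integer scale at which the lake costs more, if any."""
    for scale in range(max_scale + 1):
        if lake_cost(scale) > warehouse_cost(scale):
            return scale
    return None


crossover_scale = crossover()
```

With these made-up constants the lake is cheaper at small scale and the warehouse becomes cheaper past the crossover point. Where that point falls in practice depends entirely on the enterprise.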

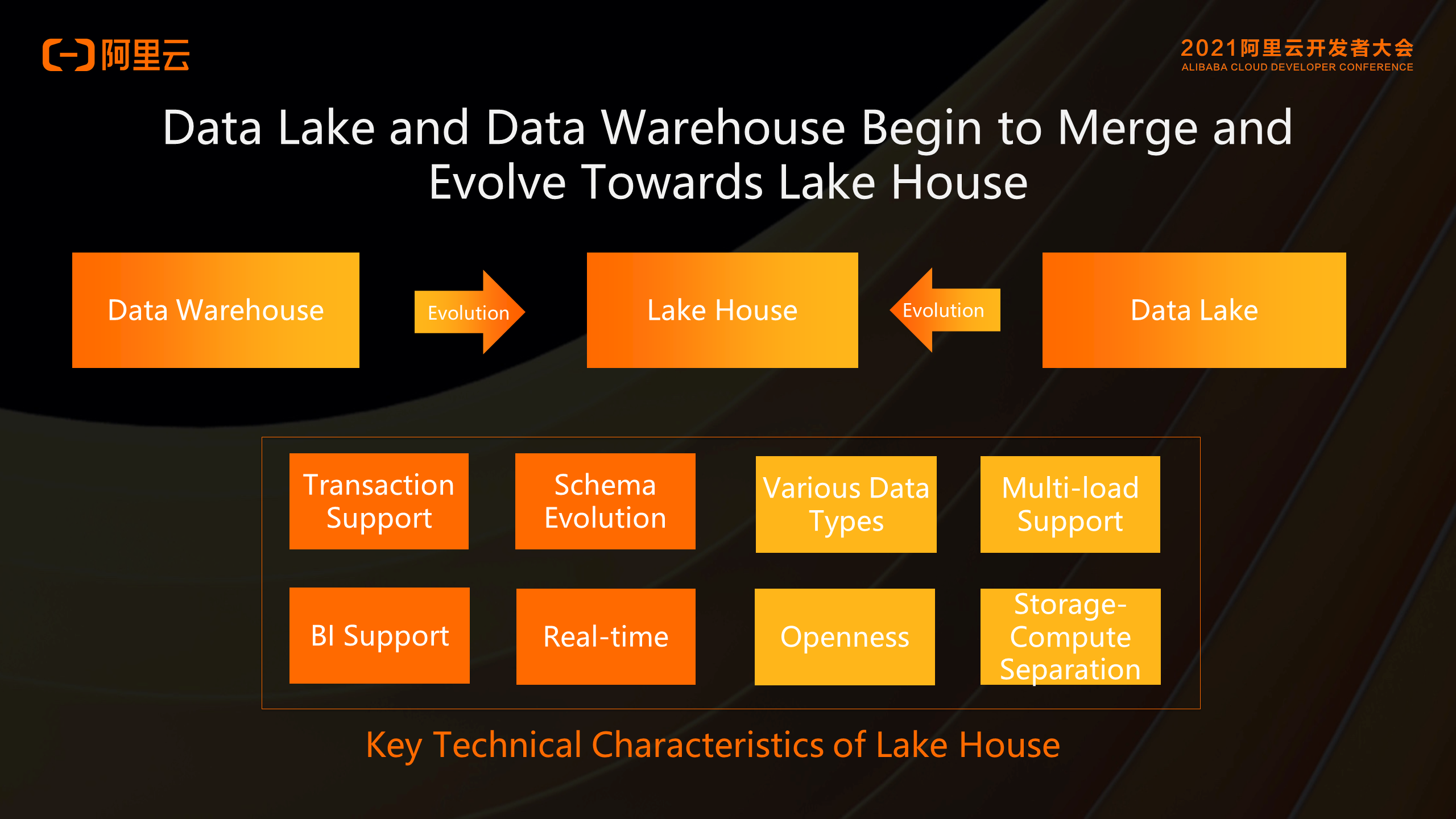

Based on Alibaba Cloud's observations of the industry and its own practice, we believe that data lakes and data warehouses are converging, each evolving in its own way toward the lake house. As shown in the preceding figure, they approach it from opposite directions. So what does each need to do in its evolution?

As a strict system, a data warehouse is well suited to transactions, thanks to strong consistency checks and schema evolution. It supports BI naturally and makes real-time features easier to implement. A data lake, with its storage-compute separation architecture, has the advantages of rich data types, multiple computing models, and open file systems and file formats.

Therefore, for a data warehouse to evolve into a lake house, it must develop data lake features on top of its own. In practice, this means integrating with systems such as HDFS and OSS, so the resulting architecture is a left-to-right structure. For a data lake to evolve into a lake house, more effort is needed to build strong warehouse features on the foundation of HDFS and OSS. The data lake architecture is therefore more of a top-to-bottom structure. Delta Lake and Hudi add a layer within this top-to-bottom structure, creating a file format on top of the lake that supports strong warehouse features.
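The idea of adding a layer on top of lake storage can be pictured as a commit log kept next to immutable data files. The following is a deliberately simplified sketch inspired by that general idea. It is not the actual Delta Lake or Hudi format, and `ToyTable`, `write_data_file`, `commit`, and `snapshot` are hypothetical names. Readers see only files listed in committed log entries, so a file that has been written but not committed never becomes visible.

```python
import json
import os
import tempfile


class ToyTable:
    """Immutable data files plus an ordered commit log (toy example)."""

    def __init__(self, root):
        self.root = root
        self.log_dir = os.path.join(root, "_log")
        os.makedirs(self.log_dir, exist_ok=True)

    def _versions(self):
        return sorted(int(name.split(".")[0]) for name in os.listdir(self.log_dir))

    def write_data_file(self, name, rows):
        """Write a data file; it stays invisible until it is committed."""
        with open(os.path.join(self.root, name), "w") as handle:
            json.dump(rows, handle)

    def commit(self, add=(), remove=()):
        """Record added and removed files as the next log version."""
        versions = self._versions()
        version = versions[-1] + 1 if versions else 0
        path = os.path.join(self.log_dir, f"{version:08d}.json")
        # Mode "x" fails if the file already exists, so two writers
        # cannot both claim the same version number.
        with open(path, "x") as handle:
            json.dump({"add": list(add), "remove": list(remove)}, handle)
        return version

    def snapshot(self, version=None):
        """Replay the log up to `version` and return the visible rows."""
        live = []
        for v in self._versions():
            if version is not None and v > version:
                break
            with open(os.path.join(self.log_dir, f"{v:08d}.json")) as handle:
                entry = json.load(handle)
            live = [f for f in live if f not in entry["remove"]] + entry["add"]
        rows = []
        for name in live:
            with open(os.path.join(self.root, name)) as handle:
                rows.extend(json.load(handle))
        return rows


with tempfile.TemporaryDirectory() as root:
    table = ToyTable(root)
    table.write_data_file("part-0.json", [{"id": 1}])
    v0 = table.commit(add=["part-0.json"])
    table.write_data_file("part-1.json", [{"id": 2}])  # written, not committed
    uncommitted_view = table.snapshot()
    v1 = table.commit(add=["part-1.json"], remove=["part-0.json"])
    latest_view = table.snapshot()
    old_view = table.snapshot(version=v0)
```

Because the log is the source of truth, the same files support atomic replacement of data and reading an older version. These are warehouse-style guarantees layered over plain file storage.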

However, both the data lake and the data warehouse are heading in the same direction, toward the lake house. The characteristics of the lake house are the same in either case: four data warehouse features plus four data lake features.

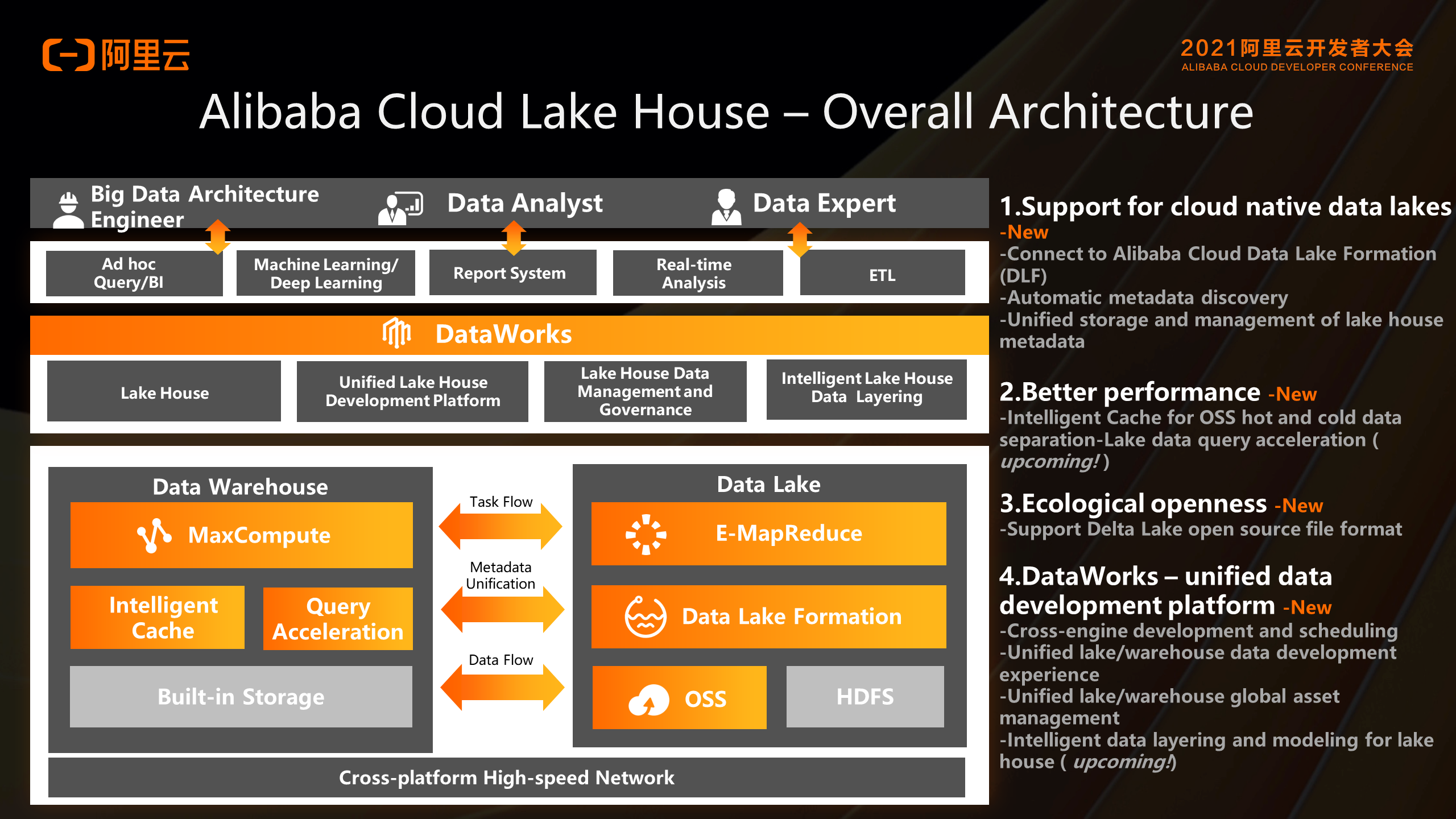

The preceding figure shows the overall architecture of the Alibaba Cloud lake house. From bottom to top, the layers are the network layer, the lake house engine layer, DataWorks (the data development layer for the data lake and the data warehouse), and the business application layer. Let's focus on the engine layer. The Alibaba Cloud lake house adopts a left-to-right structure. On the left is MaxCompute, the Alibaba Cloud data warehouse, and on the right is EMR, the Alibaba Cloud data lake. In the middle, metadata is unified, and with open format compatibility, data and tasks can flow freely between the data warehouse and the data lake. We announced support for the Hadoop data lake at the Apsara Conference 2020. More recently, we added lake house support for OSS.

The right side of the figure above lists some specific features that we have released recently.

MaxCompute is connected to DLF, the Alibaba Cloud data lake formation product, enabling automatic metadata discovery as well as storage and management of lake and warehouse metadata.

Alibaba Cloud has recently been working on an intelligent cache that provides intelligent data tiering from OSS to the warehouse. The query acceleration feature was implemented in MaxCompute in 2020. In a future version, the query acceleration engine will be extended to the data lake to support query acceleration there.

The Delta Lake open source file format has been supported recently.

DataWorks supports multiple engines to provide a unified lake house development experience.

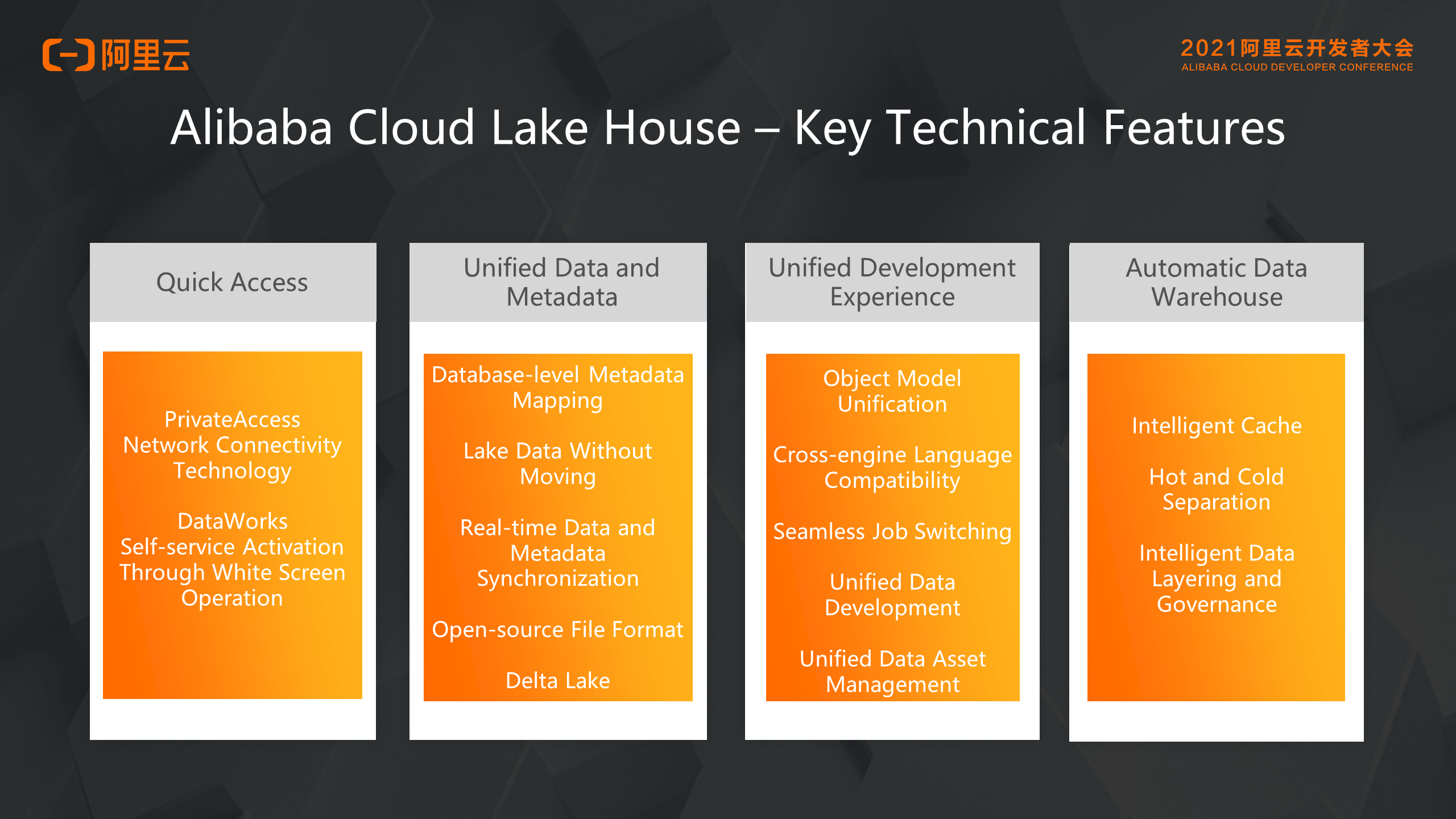

Whether it uses a left-to-right structure or a top-to-bottom structure, a lake house should ultimately be simple and easy to use. The Alibaba Cloud lake house has four key features, all of which focus on making data lakes and data warehouses easier to use.

It mainly involves two layers: the network layer and the lake house activation layer. First, MaxCompute can connect to the Hadoop ecosystem in any environment, on or off the cloud. Because MaxCompute is a multi-tenant system, connecting it to a specific user environment is technically challenging, so we developed the PrivateAccess network connection technology for this purpose. Second, for self-service lake house activation through the DataWorks GUI, we will soon release a version that lets users quickly activate the lake house in the console. At present, activation still requires submitting a ticket.

The key technology is database-level metadata mapping. That is, a database in the data lake can be mapped to a project in MaxCompute. Data in the data lake can then be consumed as if it belonged to an ordinary project, without being moved. In addition, the data and metadata of the data lake and the data warehouse can be synchronized in real time: if a table or schema in the data lake changes, the change is promptly reflected in the MaxCompute data warehouse. MaxCompute also has built-in storage and file formats, and we are continuously adding support for file formats from the open-source ecosystem, including the Delta Lake format mentioned earlier.
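One way to picture database-level mapping is a warehouse-side project that holds no table definitions of its own and resolves every lookup against the lake catalog. The sketch below is not the MaxCompute API: `LakeCatalog`, `MappedProject`, and all their methods are hypothetical names used only to illustrate the idea.

```python
class LakeCatalog:
    """Stand-in for a lake metastore: database -> table -> column list."""

    def __init__(self):
        self._databases = {}

    def create_table(self, database, table, columns):
        self._databases.setdefault(database, {})[table] = list(columns)

    def add_column(self, database, table, column):
        self._databases[database][table].append(column)

    def tables(self, database):
        return dict(self._databases.get(database, {}))


class MappedProject:
    """A warehouse-side project that is a live view of one lake database."""

    def __init__(self, name, catalog, database):
        self.name = name
        self._catalog = catalog
        self._database = database

    def list_tables(self):
        return sorted(self._catalog.tables(self._database))

    def describe(self, table):
        # Looked up on every call, so lake-side changes are visible at once.
        return list(self._catalog.tables(self._database)[table])


catalog = LakeCatalog()
catalog.create_table("sales_db", "orders", ["order_id", "amount"])
project = MappedProject("ext_sales", catalog, "sales_db")

before = project.describe("orders")
catalog.add_column("sales_db", "orders", "currency")  # change made in the lake
after = project.describe("orders")
```

In this sketch, nothing is copied when the project is created, and the new `currency` column appears on the warehouse side without any explicit sync step. A real system would also need to handle permissions, type mapping, and caching of metadata, which are omitted here.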

The data lake and the data warehouse are two different systems, with different definitions of the database model and the object model. On the MaxCompute side, the object model of the data lake is unified with that of the data warehouse, and MaxCompute SQL and Spark are highly compatible with the community versions. Jobs can therefore be migrated seamlessly between the two systems.

This is an interesting and important area that we have invested in recently. Last year, we built a version of the cache that is mainly based on historical data. This year, we built a new version: an intelligent cache that adjusts dynamically to business scenarios, with the ultimate goal of intelligent hot and cold data separation between the data lake and the data warehouse. A cache requires deep coupling between storage and computing, so the data warehouse can exploit caching to the fullest. In addition, we try to label and identify data in the data lake to determine, from a data modeling perspective, which data belongs in the warehouse and which in the lake. For example, structured data that is repeatedly accessed and table data that is frequently accessed can be stored in the data warehouse. Unstructured or semi-structured data that is infrequently accessed can stay in the data lake. The ultimate goal is the best balance among performance, cost, and business results.
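The placement rule described above can be sketched as a simple classifier. This is a hypothetical illustration, not the MaxCompute cache: the `Dataset` fields, the `place` function, and the threshold of 10 accesses per week are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Dataset:
    name: str
    structured: bool        # True for table-like data
    accesses_per_week: int  # observed access frequency


def place(dataset, hot_threshold=10):
    """Return "warehouse" for frequently accessed structured data, else "lake"."""
    if dataset.structured and dataset.accesses_per_week >= hot_threshold:
        return "warehouse"
    return "lake"


datasets = [
    Dataset("orders", structured=True, accesses_per_week=120),
    Dataset("orders_2015_archive", structured=True, accesses_per_week=1),
    Dataset("product_images", structured=False, accesses_per_week=40),
]
placement = {d.name: place(d) for d in datasets}
```

The text does not say where frequently accessed unstructured data should go. This sketch keeps it in the lake, which is an assumption. An intelligent cache as described would presumably adjust such decisions dynamically rather than rely on a fixed threshold.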

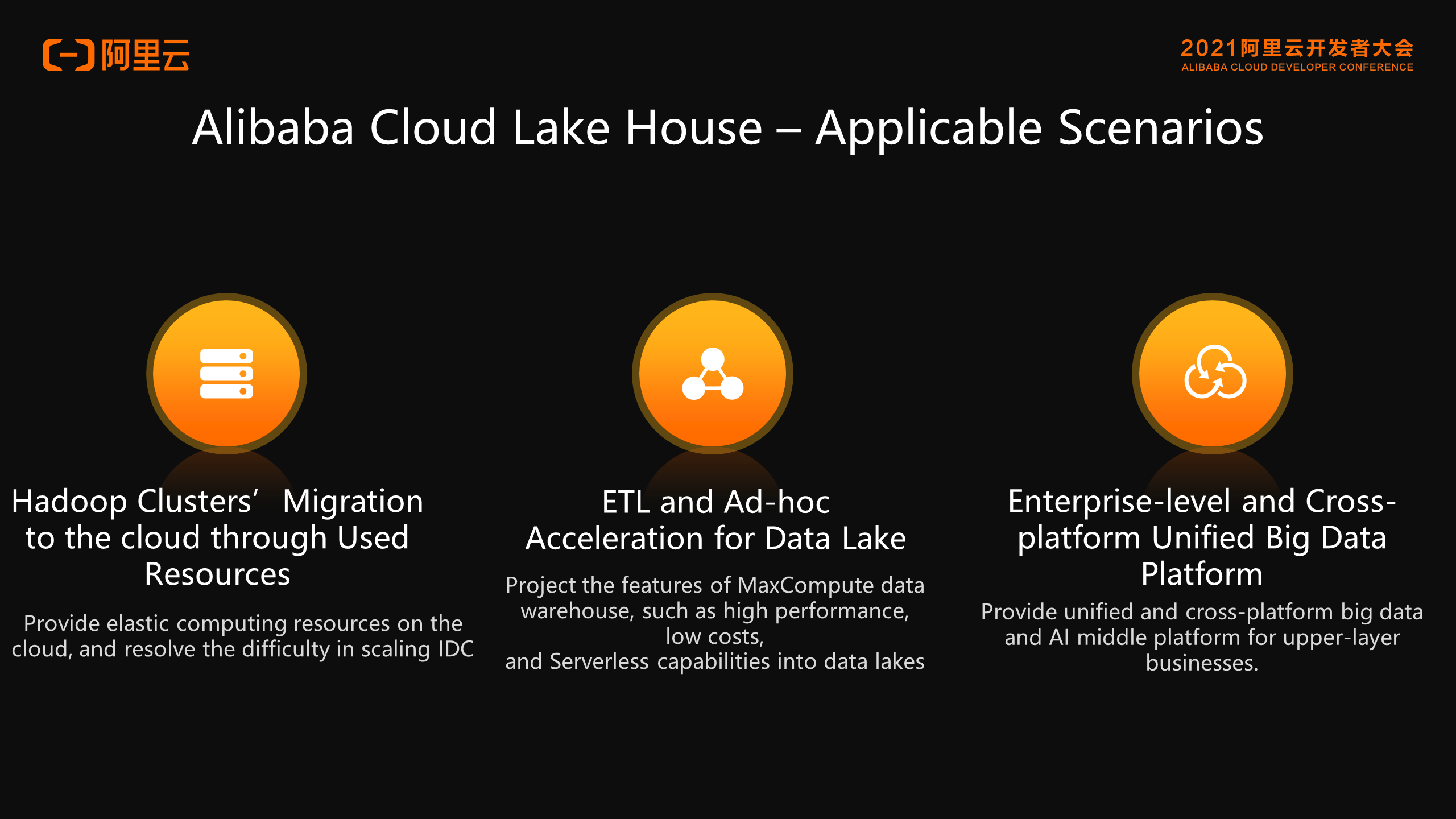

What scenarios is the Alibaba Cloud lake house suited to? In general, there are three categories.

Migrating on-premises Hadoop to the cloud requires a great deal of data and task migration, and even modification. In this case, the lake house can quickly connect the on-premises Hadoop to Alibaba Cloud MaxCompute. Existing jobs do not need to be modified or relocated and can run directly on MaxCompute.

As a SaaS-based cloud data warehouse, MaxCompute features high performance, low cost, and serverless capabilities. Through lake house integration, the capabilities of the warehouse can be extended to the lake.

Based on the lake house technology, enterprises can connect the existing data in one or more Hadoop clusters, or even OSS lakes, with the MaxCompute data warehouse, and ultimately build a unified platform for data development and for centralized data management, governance, and scheduling. In addition, unified and transparent middle platform capabilities are provided for upper-layer businesses.

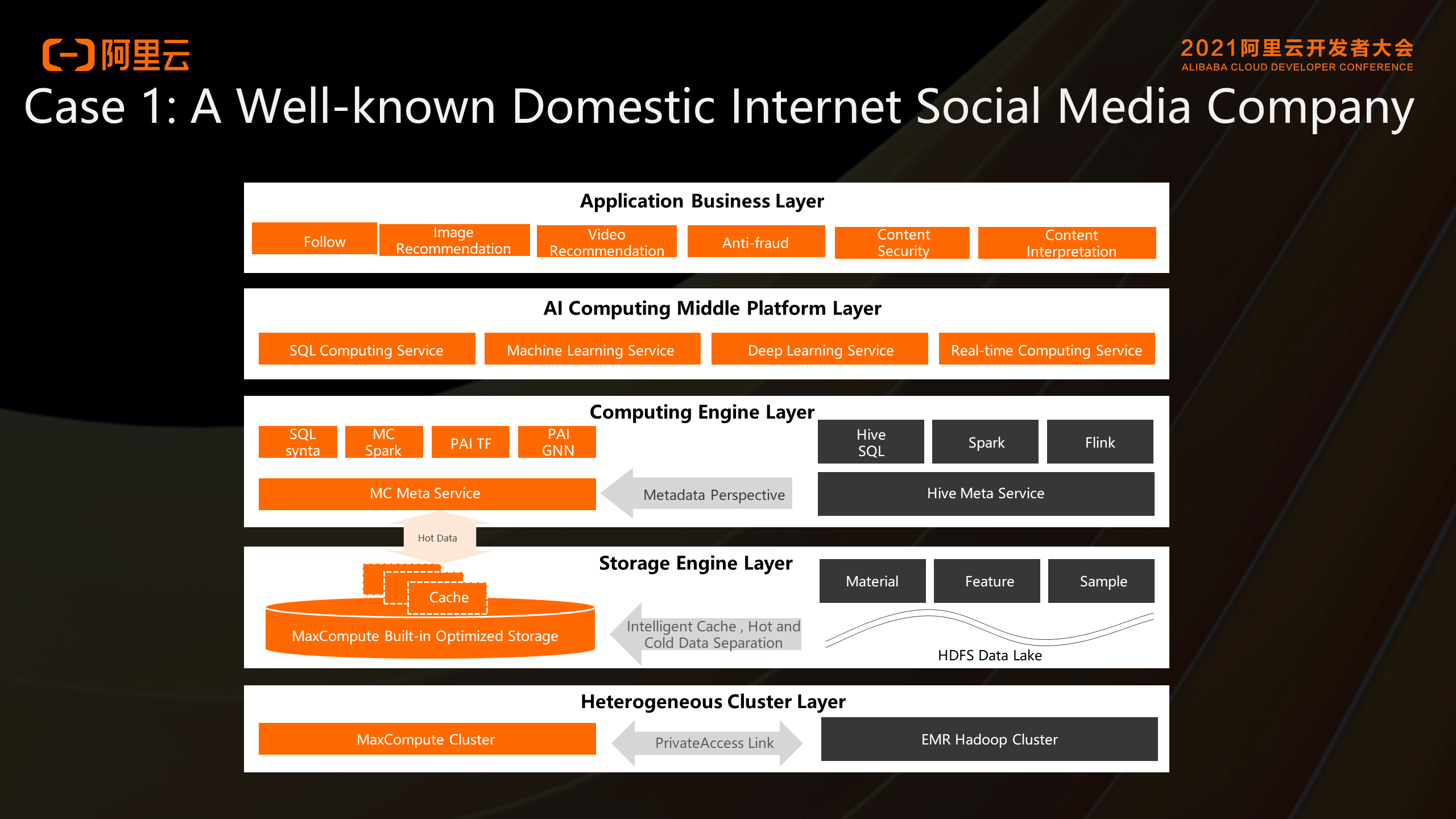

The customer is engaged in recommendation/ranking, text/image classification, and anti-spam/anti-fraud in the social media field. On top of its open-source Hadoop data lake, Alibaba Cloud's MaxCompute and Machine Learning Platform for AI solution enables feature engineering and model training for ultra-large-scale scenarios, so that the MaxCompute data warehouse and the Hadoop data lake coexist.

Pain points:

Benefits:

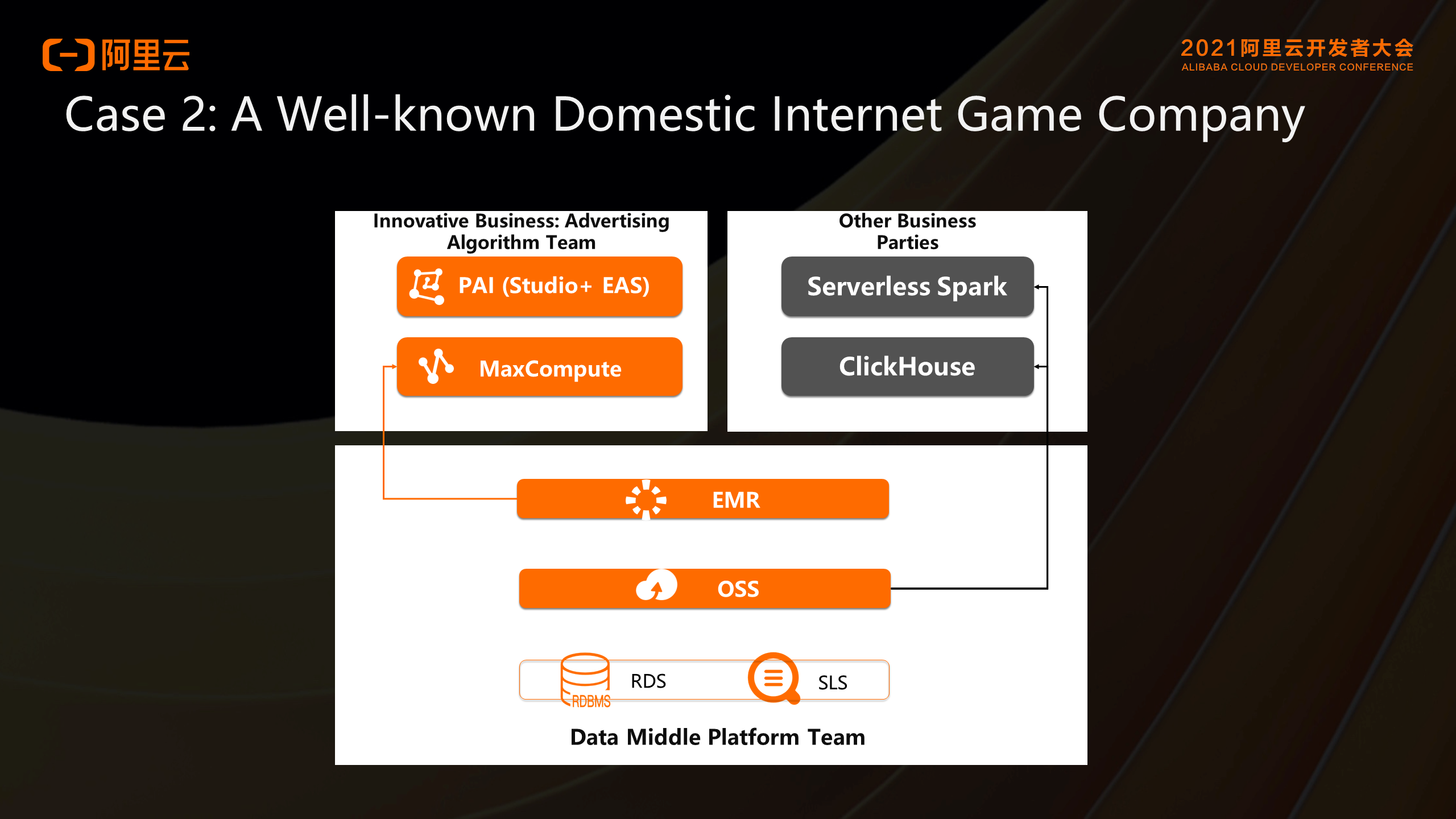

The customer's advertising algorithm team is the main user of the lake house. The main application is machine learning: DW + MC + PAI + EAS online model service.

Pain points:

Benefits:

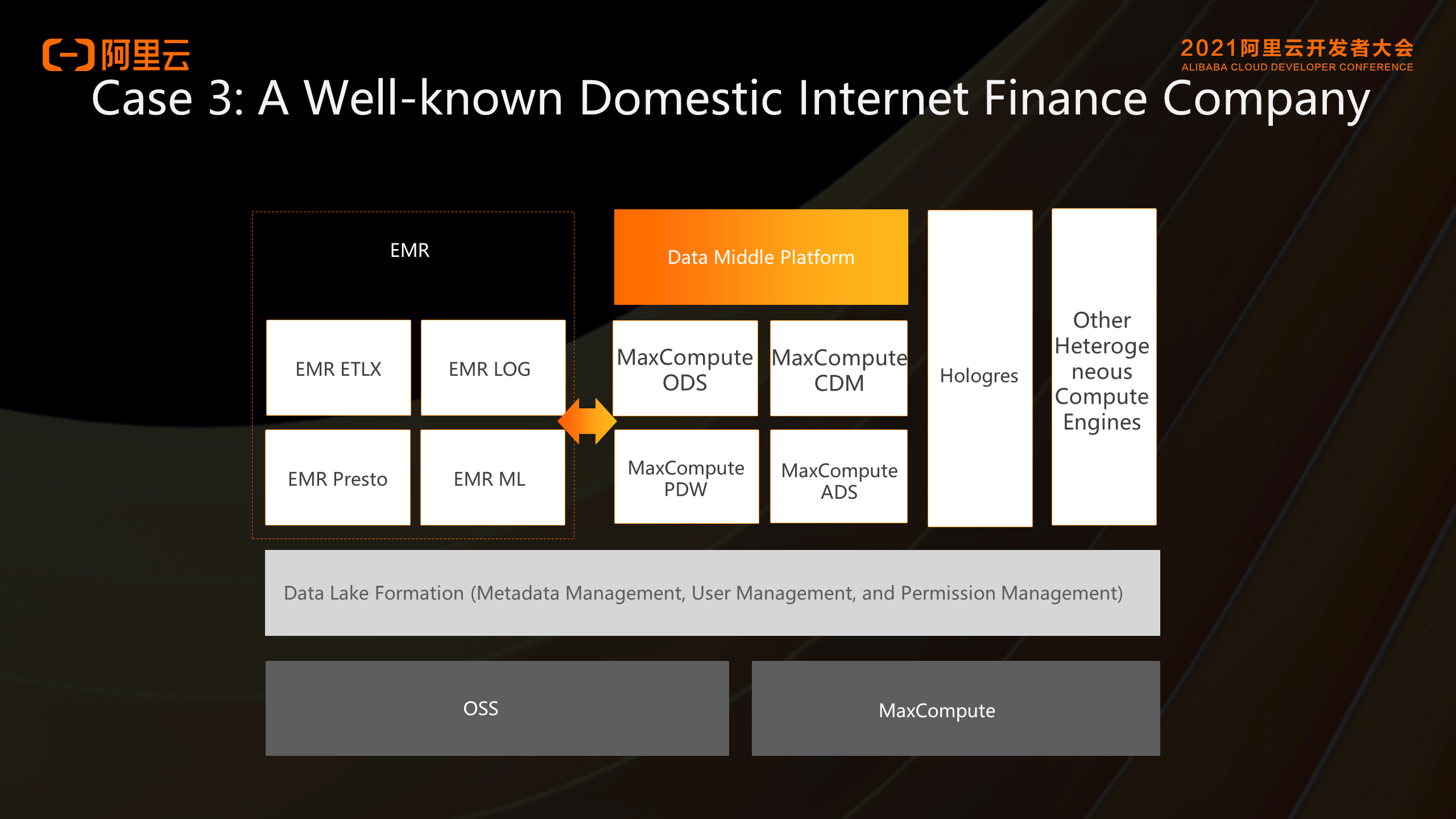

The customer has abundant experience in big data platform construction and continuously iterates and upgrades its platform to meet evolving business needs. After migrating to Alibaba Cloud from a foreign vendor, it has been actively building and transforming its data lake architecture.

Pain points:

The first-generation data lake was EMR + OSS. The execution engine and storage of the data middle platform introduced by the company is MaxCompute. The two heterogeneous execution engines bring about storage redundancy, inconsistent metadata, inconsistent permissions, and inflexible movement of computing between the lake and the warehouse.

Benefits:

1. Demo on the unification of MaxCompute data warehouse and OSS Data Lake (in Chinese language)

2. Demo on the unification of MaxCompute data warehouse and Hadoop data warehouse

Click to watch the practice video

MaxCompute is an enterprise-level cloud data warehouse in SaaS mode for analysis. It provides fast and fully-hosted online data warehouse services based on the Serverless architecture. It also minimizes the O&M costs for users to efficiently and economically process and analyze large amounts of data without the restrictions of resource scalability and elasticity. Tens of thousands of enterprises are performing data computing and analysis based on MaxCompute, thus efficiently converting data into business insights.
