By Zheng Kai, Senior Technical Specialist of Alibaba Group

In the open-source big data field, separating storage from computing has become standard practice, and the data lake architecture has become the primary choice for big data platforms. Big data architects therefore need to consider three issues.

Data lake storage is a repository that holds large amounts of raw data in its native format until it is needed. It can be built on Hadoop Distributed File System (HDFS) or on public cloud object storage, such as Alibaba Cloud Object Storage Service (OSS). Currently, building a data lake on object storage in the public cloud is the most popular practice. This article focuses on the second issue and draws on the optimizations and practice of Alibaba Cloud EMR JindoFS to show how data lake acceleration can be achieved.

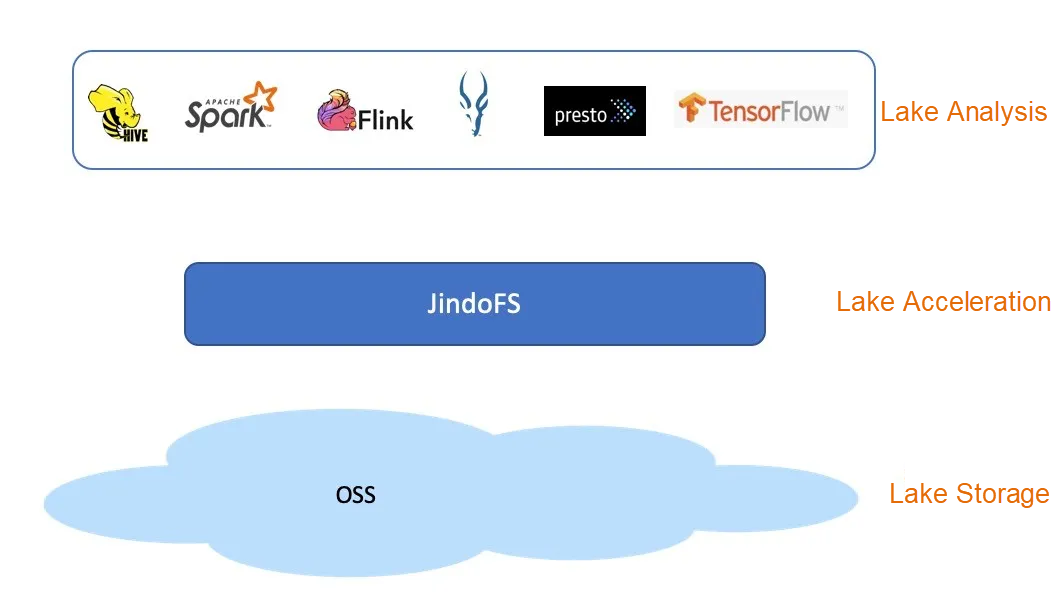

In data lake architectures, lake storage (such as HDFS and Alibaba Cloud OSS) and lake computing (such as Spark and Presto) are already well known. So what is data lake acceleration? A search turns up no precise definition. The term was proposed by members of the Alibaba Cloud Elastic MapReduce (EMR) team. As the name implies, lake acceleration means acceleration for data lakes. More precisely, it refers to the middle tier of the data lake architecture that provides optimization and cache acceleration, giving the various compute engines unified support while adapting to the underlying data lake storage. An earlier community solution is Alluxio. S3A with S3Guard from the Hadoop community and the EMR File System (EMRFS) from Amazon Web Services (AWS) both adapt to and support AWS S3. The compute-side solid-state drive (SSD) cache of Snowflake, DBIO/DBFS of Databricks, and EMR JindoFS of Alibaba Cloud can all be classified as data lake acceleration.

Why is data lake acceleration needed? The answer is closely related to the layered data lake architecture and the evolution of the corresponding technologies. The rest of this article answers the question from three aspects: the basic edition for adaptation, the standard edition for caching, and the advanced edition for deep customization. JindoFS covers all three, and therefore covers the full range of data lake acceleration scenarios.

Hadoop-based big data and cloud computing, represented by EC2 and S3 on AWS, had little to do with each other in their early development. After EMR emerged, combining big data computing (originally MapReduce) with S3 became a real technical proposition. To integrate with S3 or OSS, big data engines must first adapt to the object interface. Open-source big data engines in the Hadoop ecosystem, such as Hive and Spark, were built for HDFS and reach other storage systems through the Hadoop Compatible File System (HCFS) interfaces. Machine learning tools in the Python ecosystem focus on Portable Operating System Interface (POSIX) interfaces and local file systems, although deep learning frameworks such as TensorFlow also support HDFS interfaces. OSS provides a REST API and SDKs for major development languages, all of which expose object storage semantics. Therefore, to use these popular computing frameworks, the object interface must be adapted to the HCFS interfaces, or POSIX must be supported. This is why, as cloud computing has become prevalent, adapting to and supporting cloud object storage products has become a hot topic in the Hadoop community, with S3A FileSystem as one example. JindoFS is developed by the Alibaba Cloud EMR team to fully support Alibaba Cloud OSS and to accelerate access to it. Efficient adaptation is not as simple as adding an interface conversion in the sense of the adapter design pattern. Doing it well requires understanding the major differences between object storage systems and file systems, which are explained below.

Object storage provides low-cost storage for large amounts of data. Compared with file systems such as HDFS, Alibaba Cloud OSS is considered more scalable. With the popularity of business intelligence (BI) and artificial intelligence (AI), mining value from data has become feasible, and users tend to store more and more types of data in Alibaba Cloud OSS, such as images, audio, and logs. The challenge at the adaptation layer is to handle far more data and files than traditional file systems do. This usually includes large directories with tens of millions of files, often many of them small. In such cases, generic adaptation code either fails with out-of-memory (OOM) errors or stops making progress. JindoFS has accumulated a lot of experience here and deeply optimizes listing and du/count statistics operations for large directories. Currently, on massive directories, listing in the basic edition is twice as fast as in the community edition, and du/count is 21% faster, with more stable and reliable performance overall.
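One way to keep memory bounded when listing or counting a very large directory is to stream the listing page by page instead of materializing it. The following is a minimal sketch of that idea and not the JindoFS implementation: `FakeObjectStore`, `list_page`, `iter_objects`, and `du` are hypothetical names, and the in-memory store only imitates the marker-based pagination that object storage listing APIs typically offer.

```python
from typing import Dict, Iterator, List, Optional, Tuple


class FakeObjectStore:
    """In-memory stand-in for an object store (hypothetical, not a real OSS client)."""

    def __init__(self, objects: Dict[str, int]) -> None:
        self._objects = dict(objects)  # key -> size in bytes

    def list_page(
        self, prefix: str, marker: Optional[str], max_keys: int
    ) -> Tuple[List[Tuple[str, int]], Optional[str]]:
        """Return up to max_keys (key, size) pairs after marker, plus the next marker."""
        keys = sorted(k for k in self._objects if k.startswith(prefix))
        if marker is not None:
            keys = [k for k in keys if k > marker]
        page = keys[:max_keys]
        # A next marker is returned only if more keys remain after this page.
        next_marker = page[-1] if len(keys) > max_keys else None
        return [(k, self._objects[k]) for k in page], next_marker


def iter_objects(
    store: FakeObjectStore, prefix: str, page_size: int = 1000
) -> Iterator[Tuple[str, int]]:
    """Yield (key, size) pairs one page at a time; only one page is held in memory."""
    marker: Optional[str] = None
    while True:
        page, marker = store.list_page(prefix, marker, page_size)
        yield from page
        if marker is None:
            break


def du(store: FakeObjectStore, prefix: str, page_size: int = 1000) -> Tuple[int, int]:
    """Return (object count, total bytes) under prefix without building a full list."""
    count = total = 0
    for _key, size in iter_objects(store, prefix, page_size):
        count += 1
        total += size
    return count, total
```

Because `du` consumes a generator, the caller's memory use depends on the page size rather than on the number of objects in the directory.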

An object storage system provides a mapping from keys to binary large objects (blobs). The key space is flat and has no hierarchical structure as a file system does. Therefore, the hierarchy of files and directories can only be emulated at the adaptation layer. Some critical file and directory operations are costly precisely because they rely on emulation rather than native support, and the best known of these is rename. In a file system, renaming or moving a file only requires moving the inode of the file within the directory tree, which is an atomic operation. In object storage, however, limited by the internal implementation and the standard interfaces provided, the adapter generally has to copy the object to the new location and then delete the old object. This involves two independent steps and API calls. Renaming a directory is more complicated because it involves every file under the directory, each of which goes through the copy-and-delete process. If the directory has many levels, the rename must also recurse through them, which results in a large number of client calls. The time to copy an object usually depends on its size, and copying is still slow in many products, so there is room for improvement. Alibaba Cloud OSS has made many optimizations for fast copy. By combining these optimizations with client-side concurrency, JindoFS renames large directories containing millions of files nearly three times faster than the community version.
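The copy-then-delete emulation of rename, and the use of client-side concurrency for a directory rename, can be illustrated with a short sketch. It is an assumption-laden toy: a plain `dict` stands in for the object store, and `rename_object` and `rename_directory` are hypothetical helpers, not JindoFS or OSS SDK functions.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict


def rename_object(store: Dict[str, bytes], src: str, dst: str) -> None:
    """Emulate rename on a flat key space: two independent steps, not atomic."""
    store[dst] = store[src]  # step 1: copy the object to the new key
    del store[src]           # step 2: delete the old key


def rename_directory(
    store: Dict[str, bytes], src_dir: str, dst_dir: str, workers: int = 8
) -> int:
    """Rename every object under src_dir, issuing the per-object renames concurrently."""
    src_prefix = src_dir.rstrip("/") + "/"
    dst_prefix = dst_dir.rstrip("/") + "/"
    # Snapshot the matching keys first so the dict is not iterated while it changes.
    keys = [k for k in store if k.startswith(src_prefix)]

    def move(key: str) -> None:
        rename_object(store, key, dst_prefix + key[len(src_prefix):])

    with ThreadPoolExecutor(max_workers=workers) as pool:
        list(pool.map(move, keys))  # consume the iterator to surface any exception
    return len(keys)
```

The number of calls grows with the number of objects under the directory, which is why concurrency helps and why a failure halfway through leaves the directory split between the old and new prefixes.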

To pursue very high concurrency, many object storage products, such as S3, provide eventual consistency rather than the strong consistency semantics common in file systems. The impact is that, for example, a program writes 10 files into a directory, but a subsequent listing shows only some of them. This is a correctness problem rather than a performance problem. The Hadoop community has therefore made great efforts in the S3A adaptation layer to address this issue and meet the requirements of big data computing. Similarly, AWS provides EMRFS, which supports ConsistentView. Alibaba Cloud OSS provides strong consistency, which greatly simplifies JindoFS in this respect, so users do not have to worry about consistency and correctness issues in computing frameworks.

Object storage has no directories. Directories are emulated by the adaptation layer, and an operation on a directory is converted into multiple client calls on all the subdirectories and files in it. Therefore, even though each call on a single object is atomic, the operation on the directory as a whole is not. For example, if deleting any subdirectory or file in a directory fails, even after retries, the directory deletion fails as a whole. What happens then? Usually the directory is simply left in an intermediate, partially deleted state. When adapting directory operations such as rename, copy, and delete, JindoFS uses the extensions and optimizations of Alibaba Cloud OSS to retry or roll back on the client as far as possible. This allows JindoFS to connect the compute engines of a data lake so that data is handled correctly between the upstream and downstream components of a pipeline.
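The non-atomic nature of an emulated directory deletion can be sketched as follows. This is only an illustration under stated assumptions: the store is a `dict`, the per-object delete is passed in as a callable so that failures can be simulated, and `delete_directory` is a hypothetical helper rather than a JindoFS API. Rollback is not shown.

```python
from typing import Callable, Dict, List


def delete_directory(
    store: Dict[str, bytes],
    dir_path: str,
    delete_one: Callable[[Dict[str, bytes], str], None],
    max_attempts: int = 3,
) -> List[str]:
    """Delete every object under dir_path, retrying each one up to max_attempts times.

    Returns the keys that could not be deleted. A non-empty result means the
    directory is left in an intermediate, partially deleted state.
    """
    prefix = dir_path.rstrip("/") + "/"
    failed: List[str] = []
    for key in sorted(k for k in store if k.startswith(prefix)):
        for _attempt in range(max_attempts):
            try:
                delete_one(store, key)
                break  # this object is gone, move on to the next key
            except IOError:
                continue  # transient failure assumed, try again
        else:
            # The retry loop finished without a successful delete.
            failed.append(key)
    return failed
```

A caller can treat an empty return value as success and anything else as a directory that needs a later retry or cleanup pass.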

Object storage products have evolved independently and have their own advantages. Making full use of these advantages may require going beyond the limitations of the HCFS abstract interfaces. One advanced object storage feature deserves particular attention: Concurrent MultiPartUpload (CMPU). It allows a program to write a large object efficiently through concurrent uploads, which brings two advantages. First, a large object can be written concurrently, or even in a distributed manner, which achieves high throughput and makes full use of the strengths of object storage. Second, all parts are written to a staging area first, and the complete object does not appear at the target location until the complete instruction is executed. Using this advanced feature of Alibaba Cloud OSS, JindoFS provides a Job Committer for the MapReduce commit model used by Hadoop, Spark, and similar frameworks. In this mechanism, each task writes its results to the staging area as parts, and when the task is committed, the results are completed into their final location without a rename. The same feature also supports the Flink file sink connector, for which additional interfaces are exposed to the computing layer, and Exactly-Once semantics are implemented on top of it.
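The property that makes multipart upload useful for job commit (parts are staged and the object becomes visible only on completion) can be shown with a small in-memory model. This is a sketch, not the JindoFS Job Committer: `FakeMultipartStore`, `run_task`, and `commit_job` are hypothetical names, and the method names only mirror the initiate, upload part, complete, and abort steps that multipart upload APIs generally provide.

```python
import uuid
from typing import Dict, List


class FakeMultipartStore:
    """In-memory model of an object store with multipart upload (hypothetical)."""

    def __init__(self) -> None:
        self.objects: Dict[str, bytes] = {}   # visible objects
        self._uploads: Dict[str, dict] = {}   # staged, invisible uploads

    def initiate(self, key: str) -> str:
        upload_id = uuid.uuid4().hex
        self._uploads[upload_id] = {"key": key, "parts": {}}
        return upload_id

    def upload_part(self, upload_id: str, part_number: int, data: bytes) -> None:
        # Parts may arrive in any order and from different writers.
        self._uploads[upload_id]["parts"][part_number] = data

    def complete(self, upload_id: str) -> None:
        upload = self._uploads.pop(upload_id)
        parts = upload["parts"]
        # Only now does the object appear at its target key.
        self.objects[upload["key"]] = b"".join(parts[n] for n in sorted(parts))

    def abort(self, upload_id: str) -> None:
        self._uploads.pop(upload_id, None)


def run_task(store: FakeMultipartStore, key: str, chunks: List[bytes]) -> str:
    """A task stages its output as parts and returns the pending upload id."""
    upload_id = store.initiate(key)
    for number, chunk in enumerate(chunks, start=1):
        store.upload_part(upload_id, number, chunk)
    return upload_id


def commit_job(store: FakeMultipartStore, pending: List[str]) -> None:
    """Job commit completes the pending uploads; no copy or rename is needed."""
    for upload_id in pending:
        store.complete(upload_id)
```

If a task fails, its upload can be aborted and nothing ever becomes visible at the target key, which is the behavior a rename-based committer has to emulate with temporary directories.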

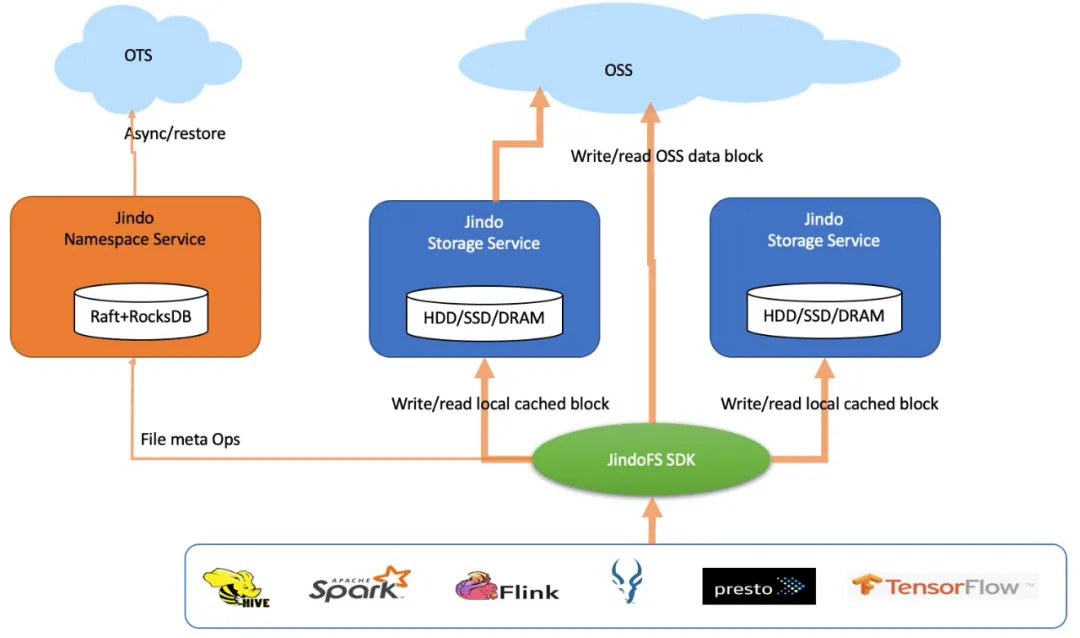

Another impact of the data lake architecture on big data computing is the separation of storage and computing, which decouples the two in the architecture. Storage develops toward large capacity, low cost, and large scale, while computing develops toward auto scaling, richness, and diversity. On the whole, this favors specialization and deeper technical development, which maximizes customer value. However, after the separation, the storage bandwidth supplied may in some cases fail to match the storage bandwidth that computing demands. Compute nodes must reach storage across the network. Data locality disappears, and access bandwidth as a whole is limited by that network. More importantly, under the data lake concept, multiple compute engines and a growing number of workloads access the same data at the same time and compete for bandwidth, which eventually causes an imbalance between bandwidth supply and demand. In many cases, on the same OSS bucket, Hive and Spark run extract-transform-load (ETL) jobs for the data warehouse, Presto runs interactive analysis, and machine learning jobs extract training data. This was unimaginable before the data lake era, when MapReduce was perhaps the most common workload. The requirements that these diverse workloads place on data access performance and throughput keep increasing. Users want to complete more computing tasks on resident clusters, while on auto scaling clusters, tasks should finish as soon as possible so that large numbers of nodes can be released to reduce costs. Interactive analysis engines such as Presto are expected to deliver stable sub-second responses without being affected by other workloads. GPU training applications expect data to be fully local to achieve maximum throughput.

How can this situation be resolved? Increasing storage throughput without limit is unrealistic, because the whole path is limited by the network between storage and the computing clusters. To effectively guarantee the storage bandwidth that rich computing requires, the industry's answer is a cache on the computing side. Alluxio has been doing this for a long time. The core of JindoFS is the data lake acceleration layer, which follows the same idea as Alluxio. The following figure shows the cache architecture.

JindoFS provides distributed caching and computing acceleration on top of its optimized adaptation for Alibaba Cloud OSS. Data that has just been written and data that is accessed repeatedly can be cached on local devices such as HDDs, SSDs, and memory, each with its own optimizations. Cache-based acceleration is transparent to users: they do not need to be aware of it or modify their jobs. In practice, a single configuration switch enables data caching. Built on the optimized adaptation, JindoFS shows a significant performance lead over some open-source caching solutions in several computing scenarios. The disk cache balances load well across multiple disks and manages cache blocks efficiently at a fine granularity. In comparative tests with 1 TB of TPC-DS data, JindoFS leads by 27% with Spark SQL, by 93% with Presto, and by 42% in the Hive ETL scenario. The FUSE support in JindoFS is developed entirely in native code, without the burden of the Java Virtual Machine (JVM). With SSD caching, a TensorFlow program that trains on OSS data cached in JindoFS runs 40% faster than with open-source solutions.
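The general idea of a compute-side cache that manages fine-grained blocks can be sketched as a read-through cache with least recently used (LRU) eviction. This is a single-process toy under stated assumptions, not the JindoFS cache: `BlockCache` and the `fetch_block` callable (which stands in for a remote read from object storage) are hypothetical, and distribution across nodes and disks is not modeled.

```python
from collections import OrderedDict
from typing import Callable, Tuple


class BlockCache:
    """Read-through cache of fixed-size blocks with LRU eviction (illustrative only)."""

    def __init__(
        self,
        fetch_block: Callable[[str, int], bytes],
        block_size: int,
        capacity_blocks: int,
    ) -> None:
        self._fetch = fetch_block          # remote read: (object key, block index) -> bytes
        self.block_size = block_size
        self._capacity = capacity_blocks
        self._blocks: "OrderedDict[Tuple[str, int], bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def _get_block(self, key: str, index: int) -> bytes:
        block_id = (key, index)
        if block_id in self._blocks:
            self.hits += 1
            self._blocks.move_to_end(block_id)  # mark as most recently used
            return self._blocks[block_id]
        self.misses += 1
        data = self._fetch(key, index)
        self._blocks[block_id] = data
        if len(self._blocks) > self._capacity:
            self._blocks.popitem(last=False)    # evict the least recently used block
        return data

    def read(self, key: str, offset: int, length: int) -> bytes:
        """Read a byte range, serving each covered block from cache when possible."""
        out = bytearray()
        end = offset + length
        while offset < end:
            index = offset // self.block_size
            block = self._get_block(key, index)
            start = offset - index * self.block_size
            piece = block[start:start + (end - offset)]
            if not piece:
                break  # reached the end of the object
            out += piece
            offset += len(piece)
        return bytes(out)
```

Callers use `read` exactly as they would use a remote range read, which is what makes this kind of cache transparent to the computing job.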

Under the data lake architecture, deploying cache devices on the computing side and introducing a cache brings the benefits of computing acceleration. Higher computing efficiency means that fewer elastic computing resources are used and costs are lower. However, the cache itself also adds cost and burden for users. Deciding whether to introduce a cache, and how to weigh its costs against its benefits, requires testing and evaluation with the actual computing scenarios.

With the OSS adaptation optimized and the Jindo distributed cache performing at its best, JindoFS can satisfy most large-scale analysis and machine learning training workloads. A large number of JindoFS deployments already exist, covering data warehouses on Hive, Spark, and Impala, interactive analysis with Presto, and training with TensorFlow. These deployments show that customers can combine the various compute engines with cache disks on Alibaba Cloud to access the OSS data lake efficiently. However, the data lake architecture implies openness and diversity in computing. The workloads above may be the main ones, but they are not the whole picture. JindoFS was designed from the start to support a wide range of application scenarios. A typical case is that quite a few users want JindoFS to completely replace HDFS, and not only for Hive or Spark. Moreover, users do not want to mix in other storage systems once they adopt the data lake architecture. The following cases should therefore be considered.

First, as mentioned in the discussion of OSS adaptation above, some requirements for atomic file and directory operations cannot be met by adaptation alone, such as file rename and directory copy, rename, and deletion. The complete solution is to manage file metadata, as the HDFS NameNode does, so that file system semantics are fully satisfied.

Second, HDFS supports many advanced features and interfaces, including truncate, append, concat, hsync, snapshots, and extended attributes (xattrs). For example, HBase relies on hsync and snapshots, while Flink relies on truncate. The openness of the data lake architecture also means that more engines will be connected, which brings more requirements for these advanced interfaces.

Third, heavy users of HDFS want to migrate smoothly to the cloud or adjust their choice of storage solution while continuing to use their existing HDFS-based applications, O&M practices, and governance. They also want functional support for extended attributes (xattrs), file permissions, Ranger integration, and even audit logs. In terms of performance, they expect results no worse than HDFS, and preferably better, ideally without having to tune a NameNode. How can these users be helped to upgrade to an OSS-based architecture so that they also enjoy the benefits of the data lake architecture?

Fourth, to break through the limitations of object storage products such as S3, the big data industry is also deeply customizing new data storage formats for data lakes, such as Delta, Hudi, and Iceberg. How to support and effectively optimize these formats also requires further consideration.

Based on these factors, the JindoFS block mode was developed and launched. This mode is deeply customized for big data computing on top of OSS object storage, while still exposing the standard HCFS interfaces. We firmly believe that, even when taking the route of deep customization, following existing standards and habits makes adoption easier for users and compute engines. This is also more in line with the positioning and mission of lake acceleration. JindoFS in block mode is benchmarked against HDFS, but unlike HDFS it adopts a cloud-native architecture. Relying on the cloud platform allows many simplifications, which make the entire system flexible, lightweight, and easy to operate and maintain.

As shown in the preceding figure, the system architecture of JindoFS in block mode reuses the cache system of JindoFS. In this architecture, files are stored in OSS as blocks, which guarantees data reliability and availability, while cached copies in the local cluster provide acceleration. File metadata is written asynchronously to Alibaba's Open Table Service (OTS) database, which guards against local misoperation and facilitates reconstruction and recovery of a JindoFS cluster. During normal reads and writes, metadata is kept in a local RocksDB database with a least recently used (LRU) cache in memory, so JindoFS can support hundreds of millions of files. Combined with fine-grained locking on files and directories in the metadata service, JindoFS is more stable and delivers higher throughput than HDFS during peak hours of large-scale, highly concurrent jobs. In concurrent tests with HDFS NNBench, JindoFS achieves 60% higher IOPS than HDFS for the most critical operations, open and create. In tests on large directories with tens of millions of files, file listing is 130% faster than in HDFS, and the du/count file statistics operations are twice as fast. With the distributed Raft protocol, JindoFS supports high availability (HA) and multiple namespaces, and its overall deployment and maintenance are much simpler than those of HDFS. In terms of I/O throughput, reads can draw on both the local disks and the bandwidth of OSS. As a result, in DFSIO tests on the same cluster configuration, read throughput in JindoFS is 33% higher than in HDFS.
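Why a separate metadata service restores file system semantics can be seen in a small model: file data lives in the object store as blocks, while a metadata map records which blocks make up each path, so a directory rename only touches metadata. The sketch below is a simplification under stated assumptions, not the JindoFS design: `BlockModeNamespace` is a hypothetical class, a `dict` stands in for OSS, a single lock replaces the fine-grained file and directory locks described above, and persistence (RocksDB, OTS) and Raft are not modeled.

```python
import threading
import uuid
from typing import Dict, List


class BlockModeNamespace:
    """Toy namespace: data blocks in an object store, paths in a metadata map."""

    def __init__(self, block_size: int) -> None:
        self.block_size = block_size
        self.object_store: Dict[str, bytes] = {}    # stands in for OSS: block id -> bytes
        self._metadata: Dict[str, List[str]] = {}   # file path -> ordered block ids
        self._lock = threading.Lock()               # one coarse lock for simplicity

    def write(self, path: str, data: bytes) -> None:
        block_ids: List[str] = []
        for start in range(0, len(data), self.block_size):
            block_id = uuid.uuid4().hex
            self.object_store[block_id] = data[start:start + self.block_size]
            block_ids.append(block_id)
        with self._lock:
            # The file becomes visible only when its metadata entry is published.
            # Cleanup of blocks from an overwritten file is omitted in this sketch.
            self._metadata[path] = block_ids

    def read(self, path: str) -> bytes:
        with self._lock:
            block_ids = list(self._metadata[path])
        return b"".join(self.object_store[b] for b in block_ids)

    def rename_dir(self, src: str, dst: str) -> int:
        """Rename a directory by rewriting metadata keys; no block is copied."""
        src_prefix = src.rstrip("/") + "/"
        dst_prefix = dst.rstrip("/") + "/"
        with self._lock:
            moved = {p: ids for p, ids in self._metadata.items()
                     if p.startswith(src_prefix)}
            for path, ids in moved.items():
                del self._metadata[path]
                self._metadata[dst_prefix + path[len(src_prefix):]] = ids
            return len(moved)
```

Because the rename happens entirely under the metadata lock, readers see either the old paths or the new ones, in contrast to the copy-and-delete emulation on a flat key space.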

By supporting block mode in the lake acceleration solution, JindoFS extends data lakes to more application scenarios and supports more engines. Many customers already use it to run HBase. More open-source engines are expected to be connected so that they can also benefit from the separation of storage and computing while using locally managed storage devices for cache acceleration. For example, engines such as Kafka, Kudu, and the OLAP engine ClickHouse could be freed from handling bad disks and scaling, and could focus on their own scenarios. Some customers who originally insisted on using HDFS have been attracted by the light O&M, flexibility, low cost, and high performance of block mode, and have also switched to the data lake architecture. Like the cache mode that JindoFS supports for OSS, block mode remains compatible with HCFS and FUSE, so the many data lake engines incur no extra burden in using it.

In conclusion, upgrading the big data platform to a data lake architecture is a clear trend in the industry. The data lake architecture includes lake storage, lake acceleration, and lake analysis. With JindoFS, Alibaba Cloud provides a variety of data lake acceleration solutions for different scenarios. Alibaba Cloud has also launched Data Lake Formation for data lake management.

Drawing on years of experience in the cloud, Alibaba Cloud has gathered the scenarios, challenges, and technical solutions for data lake acceleration into EMR JindoFS. This article aims to give a comprehensive explanation of the Alibaba Cloud data lake solution by describing its optimization methods and the advantages of JindoFS over existing community solutions. We also hope that understanding how Alibaba Cloud data lakes combine JindoFS with OSS, Data Lake Formation, and EMR will bring more value to your big data exploration.

Zheng Kai, also known as Tiejie, is a senior technical specialist at Alibaba Group. He is a member of the Apache Hadoop Project Management Committee (PMC) and the founder of Apache Kerby. Zheng Kai has been deeply engaged in the development of distributed systems and open-source big data systems for many years. Currently, he works on Hadoop and Spark at Alibaba Cloud, aiming to improve the usability and elasticity of these big data platforms.
