By Zheng Kai – Alibaba Cloud's Senior Technical Expert and Zhang Chenhui – Alibaba Cloud's Product Expert

What is a data lake? How is a data lake analyzed, developed, and governed? This article introduces best practices and cases for building, analyzing, developing, and governing cloud-native data lakes.

The data lake concept has become very popular in recent years and is a hot topic among big data practitioners. Some may say, "We are building a Hudi data lake. Do you use Delta or Iceberg?" Others may say, "I have an OSS data lake on Alibaba Cloud." "What? You use HDFS for your data lake?" "We are using JindoFS on Alibaba Cloud to optimize our data lake." "We have recently been discussing lake-warehouse unification."

It is fair to say that opinions on data lakes vary. Each engineer may understand data lakes differently depending on their background. So, what exactly is a data lake? First, let's look at its three core elements.

Three core elements of a data lake are listed below:

1. All-Inclusive Data

2. Ideal Storage

3. Open Computing

What is a cloud-native data lake?

A cloud-native data lake is a data lake storage system built on cloud-native principles. It can be built quickly with Data Lake Formation on top of Object Storage Service (OSS), and it serves as the foundation for BI and AI analysis. Let's take the cloud-native data lake on Alibaba Cloud as an example to describe how to migrate to the cloud.

As you can see, we can use Alibaba Cloud Data Lake Formation and a unified OSS to build a data lake quickly.

With this data lake in place, we can migrate various data sources into the lake using DataHub, data integration, and the ingestion methods provided by Data Lake Formation. The main business goal is then to analyze the data with the open computing methods mentioned above.

For BI analysis, we provide MaxCompute, an analytics product developed by Alibaba Cloud, and E-MapReduce (EMR), an open-source big data analytics product. For AI analysis, we can use Alibaba Cloud PAI and EMR DataScience.

As mentioned earlier, computing is open and diverse. If you have a self-built Hadoop cluster or a CDH cluster on Alibaba Cloud, you can also connect it to the data lake for analysis. We have also partnered with many third-party products, such as Databricks, which can analyze the data lake as well.

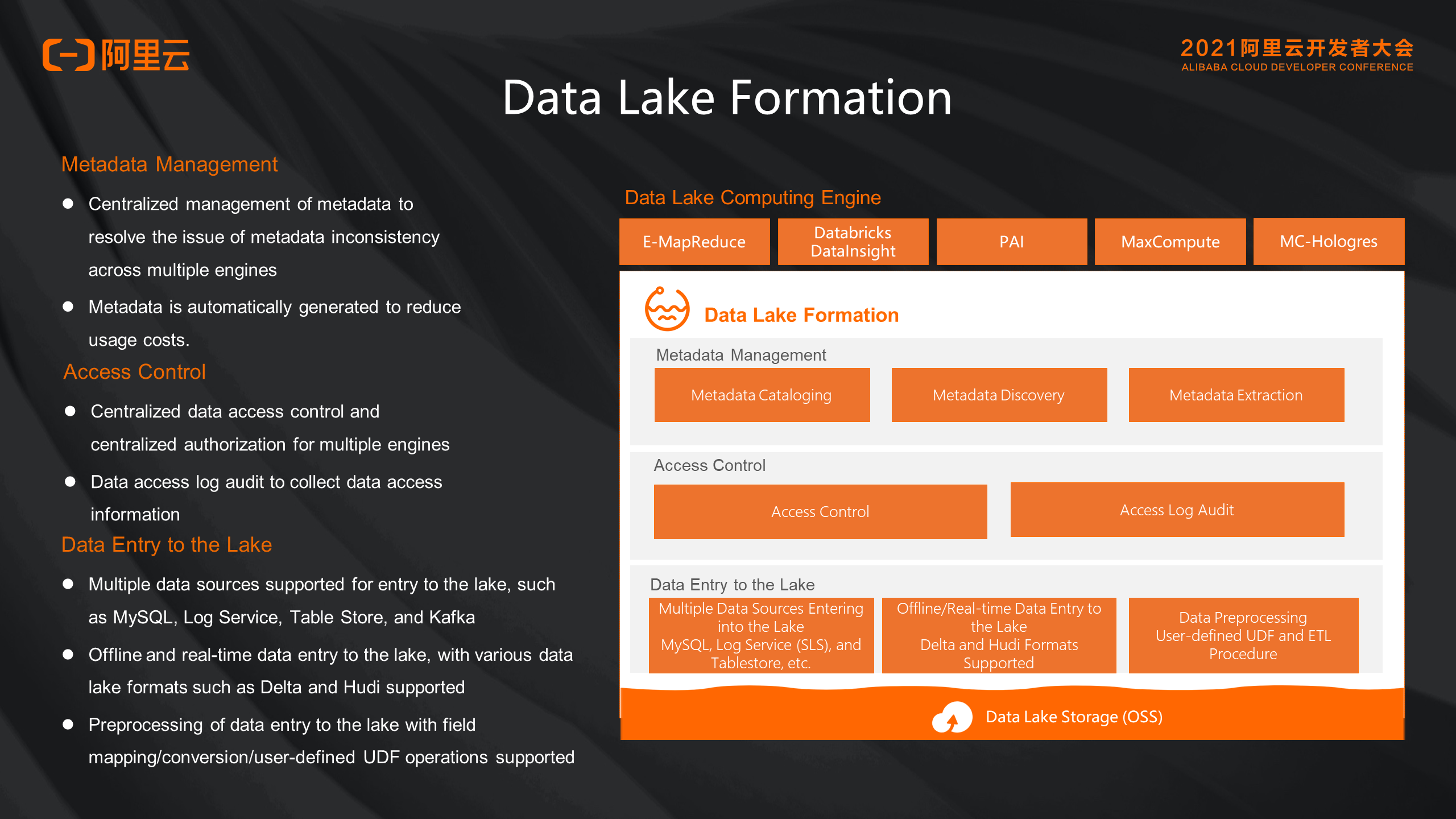

Next, let's look at how a data lake is built and analyzed, and how we support each step. For building a data lake, we provide a product called Data Lake Formation.

At its core, Data Lake Formation maintains the metadata of the data lake. A data lake consists of the data and the metadata that describes it. Metadata is centrally managed and stored in Data Lake Formation, which avoids the inconsistencies that arise when different computing products maintain file metadata separately. Centralized management also enables centralized permission control and log auditing. Data Lake Formation supports both offline and real-time ingestion from various data sources, such as MySQL and Kafka, and currently supports the Delta and Hudi data lake formats.
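To make the benefit of a single metadata store concrete, here is a minimal sketch, assuming a toy in-memory catalog. `TableMeta`, `CentralCatalog`, the grant model, and the `oss://example-bucket/...` path are hypothetical illustrations, not the Data Lake Formation API. Every engine resolves tables through one catalog, so permission checks and audit entries happen in one place and engines cannot disagree about a table's location, format, or schema.

```python
from dataclasses import dataclass, field


@dataclass
class TableMeta:
    """Hypothetical metadata record for one table in the lake."""
    name: str
    location: str      # illustrative object-storage path
    table_format: str  # e.g. "delta" or "hudi"
    schema: dict[str, str] = field(default_factory=dict)


class CentralCatalog:
    """Toy catalog shared by all engines, with grants and an audit log."""

    def __init__(self) -> None:
        self._tables: dict[str, TableMeta] = {}
        self._grants: set[tuple[str, str]] = set()
        self.audit_log: list[str] = []

    def register(self, meta: TableMeta) -> None:
        self._tables[meta.name] = meta
        self.audit_log.append(f"register {meta.name}")

    def grant(self, principal: str, table: str) -> None:
        self._grants.add((principal, table))

    def lookup(self, principal: str, table: str) -> TableMeta:
        # Permission check and auditing happen here once, not in each engine.
        if (principal, table) not in self._grants:
            self.audit_log.append(f"DENY {principal} -> {table}")
            raise PermissionError(f"{principal} may not read {table}")
        self.audit_log.append(f"ALLOW {principal} -> {table}")
        return self._tables[table]


catalog = CentralCatalog()
catalog.register(TableMeta("sales", "oss://example-bucket/warehouse/sales/", "delta",
                           {"id": "bigint", "amount": "double"}))
catalog.grant("spark", "sales")
catalog.grant("presto", "sales")

# Both engines get the very same metadata record.
print(catalog.lookup("spark", "sales") is catalog.lookup("presto", "sales"))  # True
```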

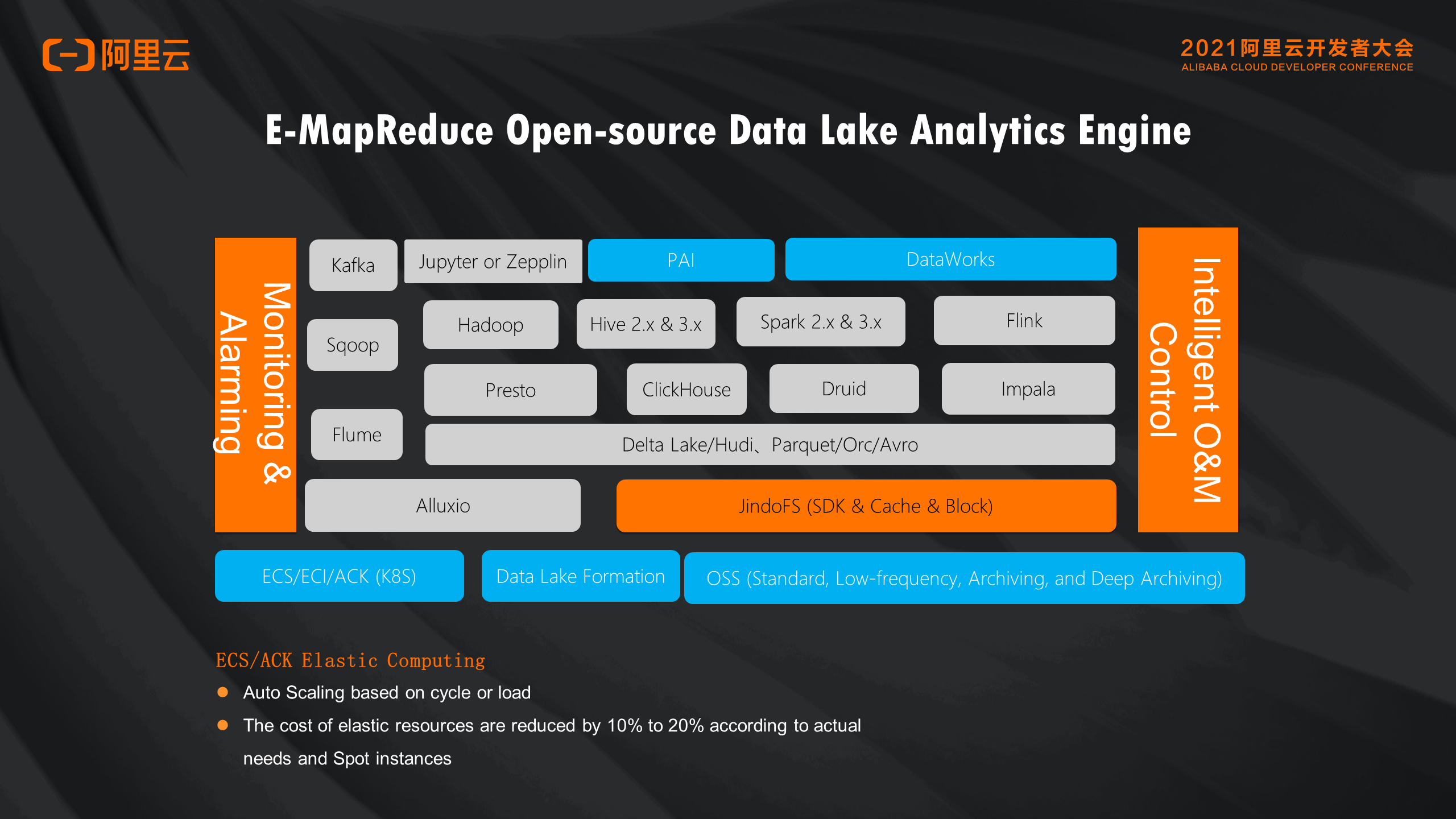

On the analysis side, we use E-MapReduce (EMR), an open-source big data analytics suite, for data lake analytics.

E-MapReduce is an open-source big data platform; here we list only the capabilities relevant to data lake analysis. EMR also accelerates data access between the analysis engines and the OSS data lake. It offers Alluxio, an open-source accelerator, and JindoFS, Alibaba Cloud's proprietary accelerator. JindoFS gives open-source analytics engines accelerated access to the OSS data lake.

EMR can run on either Container Service for Kubernetes (ACK) or Elastic Compute Service (ECS), and the auto scaling capabilities of ECS and ACK make data lake analysis cost-effective.

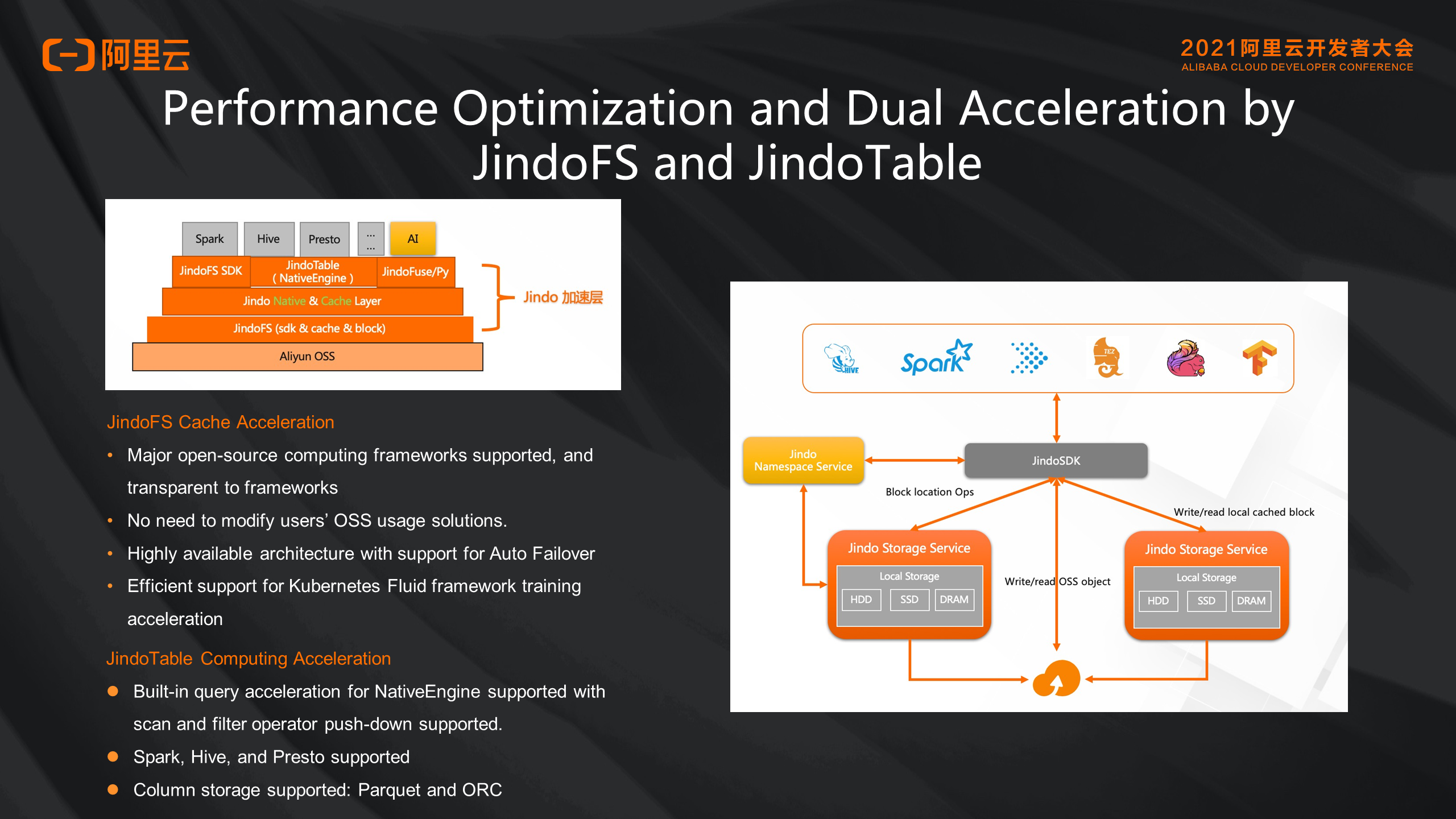

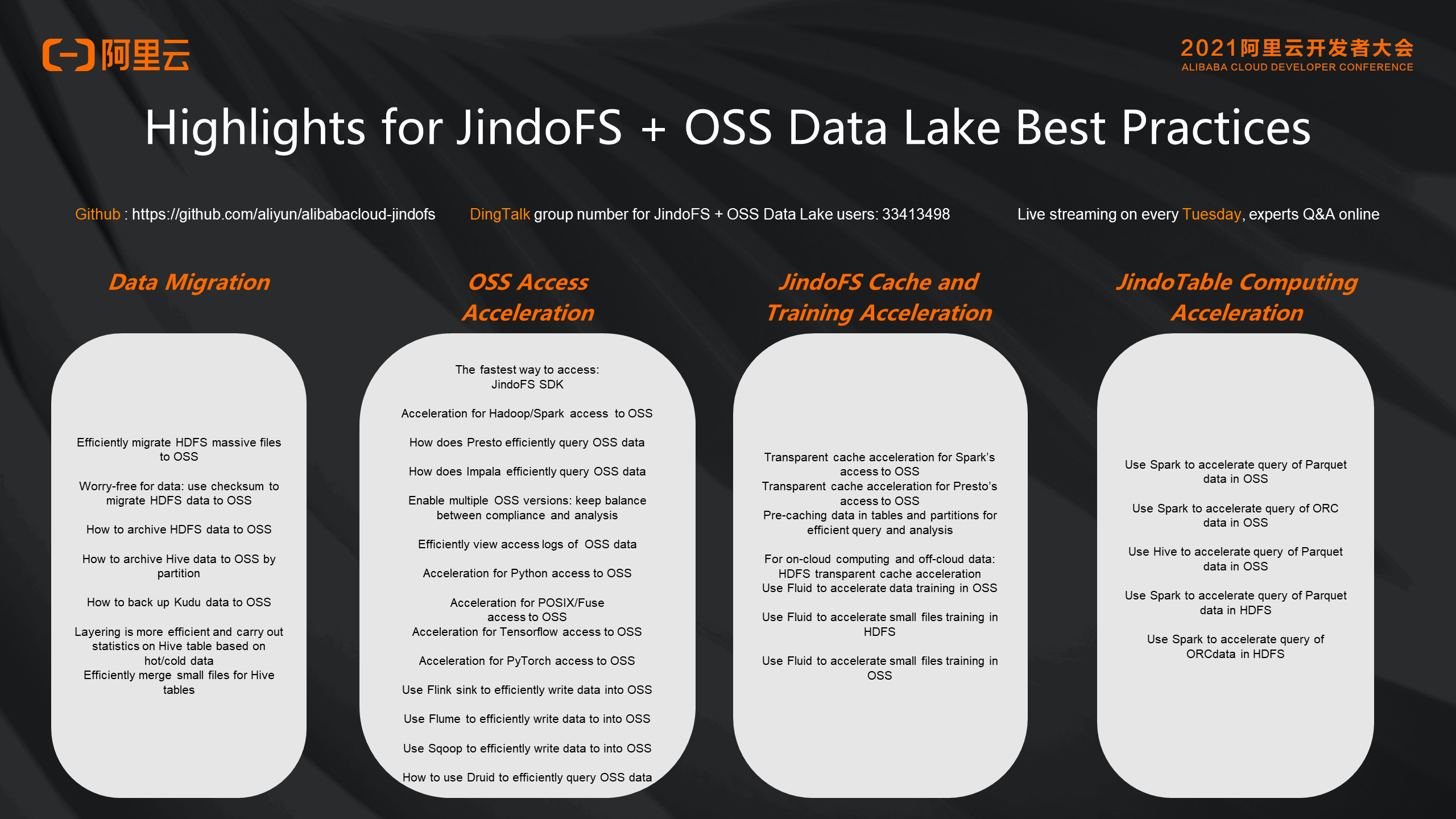

As mentioned earlier, the EMR product suite includes data lake acceleration. Now, let's focus on the dual-acceleration capabilities of JindoFS and JindoTable.

JindoFS mainly uses disk resources on the compute side to cache remote OSS data at the file system level, which significantly improves the performance of Hive, Spark, and Presto. JindoTable works with JindoFS to cache and accelerate data at the table and partition level, and it also optimizes the Parquet and ORC formats natively, further improving the processing performance of these engines.
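The idea behind compute-side caching can be illustrated with a generic read-through LRU cache. This is a minimal sketch, assuming an in-memory dictionary stands in for local disk and a plain function stands in for a slow OSS read; it is not how JindoFS is implemented, and `ReadThroughCache` and the paths are hypothetical. Repeated reads of the same object are served locally, and the least recently used objects are evicted when the byte budget is exceeded.

```python
from collections import OrderedDict
from typing import Callable


class ReadThroughCache:
    """Generic read-through LRU cache keyed by object path (illustrative only)."""

    def __init__(self, fetch_remote: Callable[[str], bytes], capacity_bytes: int) -> None:
        self._fetch = fetch_remote          # stands in for a remote object-storage read
        self._capacity = capacity_bytes     # stands in for the local disk budget
        self._used = 0
        self._entries: OrderedDict[str, bytes] = OrderedDict()
        self.hits = 0
        self.misses = 0

    def read(self, path: str) -> bytes:
        if path in self._entries:
            self.hits += 1
            self._entries.move_to_end(path)  # mark as most recently used
            return self._entries[path]
        self.misses += 1
        data = self._fetch(path)
        if len(data) <= self._capacity:      # objects larger than the budget are not cached
            self._entries[path] = data
            self._used += len(data)
            # Evict least recently used objects until usage fits the budget.
            while self._used > self._capacity:
                _, evicted = self._entries.popitem(last=False)
                self._used -= len(evicted)
        return data


# Hypothetical objects standing in for files in an OSS bucket.
p0, p1, p2 = (f"oss://example-bucket/table/part-{i}.parquet" for i in range(3))
remote = {p0: b"x" * 40, p1: b"y" * 40, p2: b"z" * 40}

cache = ReadThroughCache(remote.__getitem__, capacity_bytes=100)
for path in [p0, p0, p1, p2]:   # reading p2 pushes usage to 120 bytes, evicting p0
    cache.read(path)
print(cache.hits, cache.misses)  # 1 3
```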

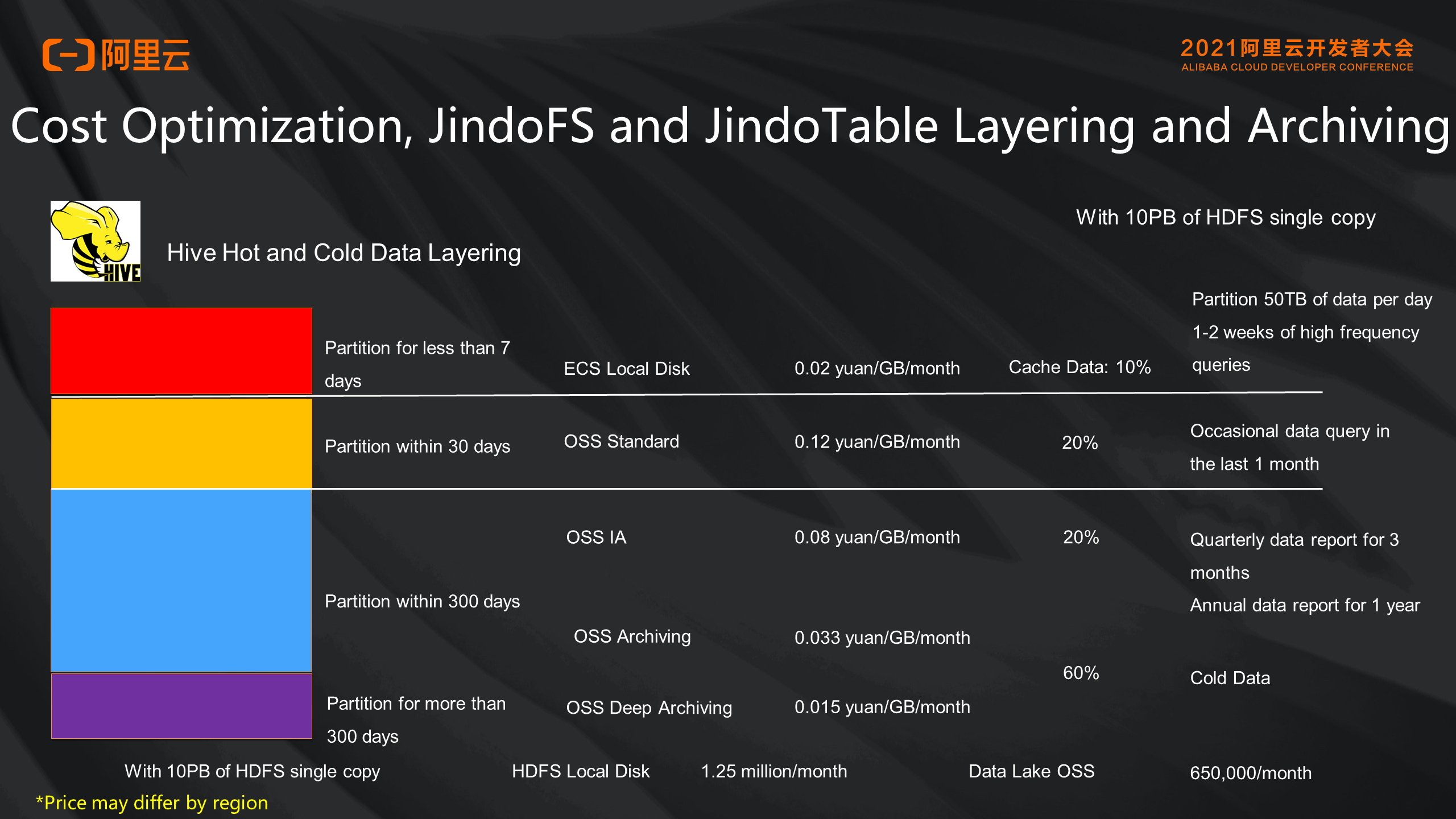

In addition to performance optimization, a data lake requires cost optimization because it stores a large amount of data. JindoTable and JindoFS also work together on tiering and archiving. We can track how hot the data is (how frequently it is accessed) using the basic capabilities of OSS, and then use the relevant Jindo commands to cache, archive, and tier the data. For example, if a user has 10 PB of data, storing it on HDFS costs millions of RMB. If we switch to the OSS data lake solution instead, we can archive a large amount of cold data and reduce storage costs significantly.
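The savings from tiering come down to a weighted sum: the capacity in each storage tier multiplied by that tier's unit price. The sketch below uses hypothetical relative prices (standard = 1.0) and an assumed 20% hot, 20% warm, 60% cold access profile purely for illustration; these are not real OSS prices or measured data, and the comparison is between keeping everything in a standard tier and tiering it, not the HDFS comparison above.

```python
def monthly_storage_cost(tb_by_tier: dict[str, float], price_per_tb: dict[str, float]) -> float:
    """Weighted sum: capacity in each tier times that tier's unit price."""
    return sum(tb * price_per_tb[tier] for tier, tb in tb_by_tier.items())


# Hypothetical RELATIVE prices per TB-month (standard = 1.0); not real OSS list prices.
RELATIVE_PRICE = {"standard": 1.0, "infrequent_access": 0.5, "archive": 0.2}

TOTAL_TB = 10_000  # 10 PB in decimal units

all_standard = monthly_storage_cost({"standard": TOTAL_TB}, RELATIVE_PRICE)
# Assumed access profile: 2,000 TB hot, 2,000 TB warm, 6,000 TB cold.
tiered = monthly_storage_cost(
    {"standard": 2_000, "infrequent_access": 2_000, "archive": 6_000}, RELATIVE_PRICE)
print(f"tiered cost is {tiered / all_standard:.0%} of all-standard")  # 42%
```

With real prices substituted, the same function shows how much the archived cold fraction drives the total.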

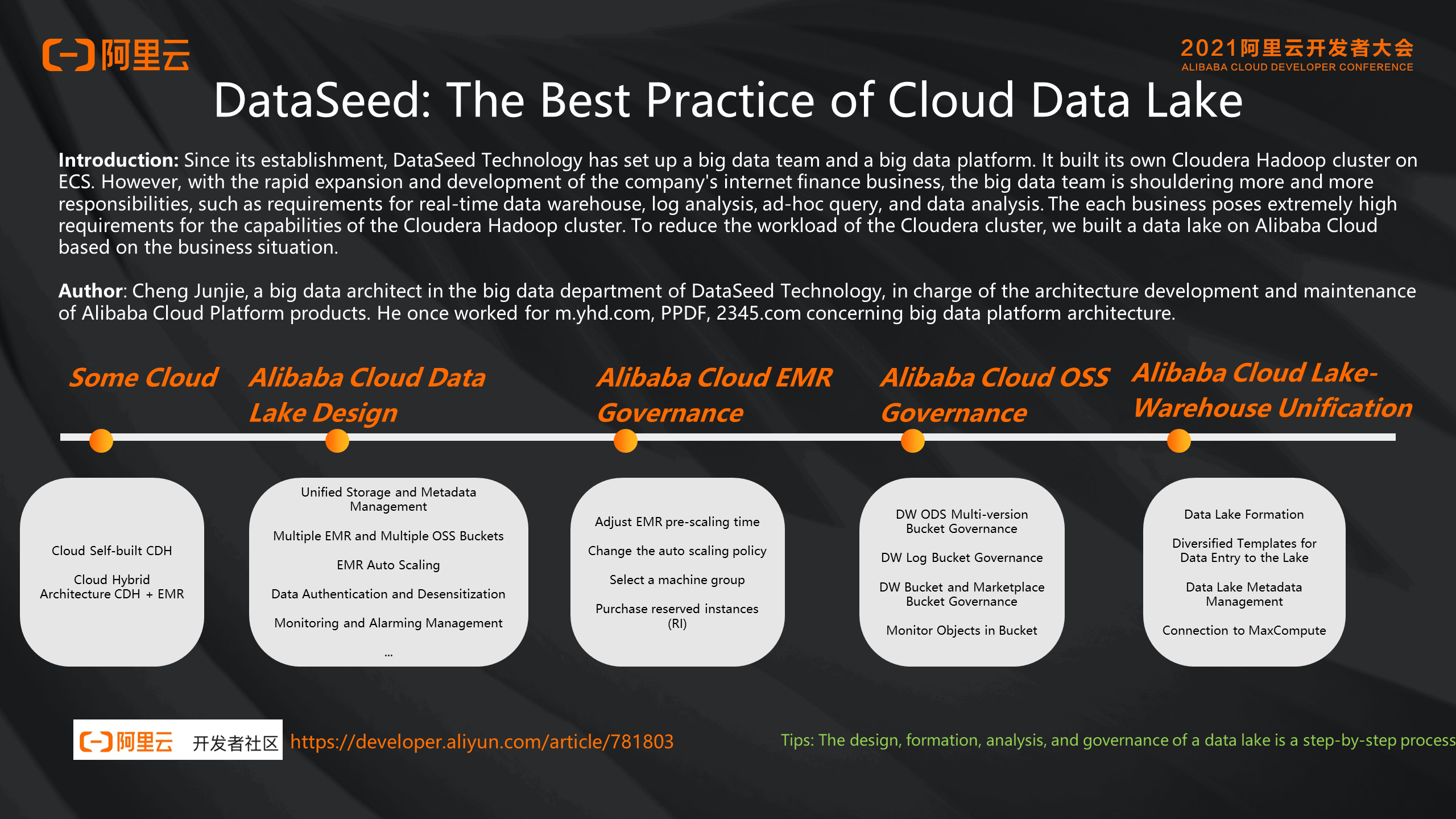

Next, let's look at a data lake best practice from a case study shared in the Alibaba Cloud community by Mr. Cheng Junjie, a big data architect at Shanghai DataSeed Information Technology. We have summarized and edited his article, with his consent, below:

Shanghai DataSeed Information Technology runs CDH and EMR on the cloud in a hybrid cloud architecture. After the migration to Alibaba Cloud, the architecture was designed around the data lake philosophy, taking into account different business requirements as well as permission control and data masking.

Over the past year, after successfully migrating to the Alibaba Cloud data lake architecture, they also implemented EMR governance and OSS governance, and the article shares much of their governance experience. Recently, they upgraded to a lake-warehouse unification architecture, which uses Data Lake Formation to manage metadata centrally and multiple Alibaba Cloud computing products, such as EMR and MaxCompute, to analyze the data lake.

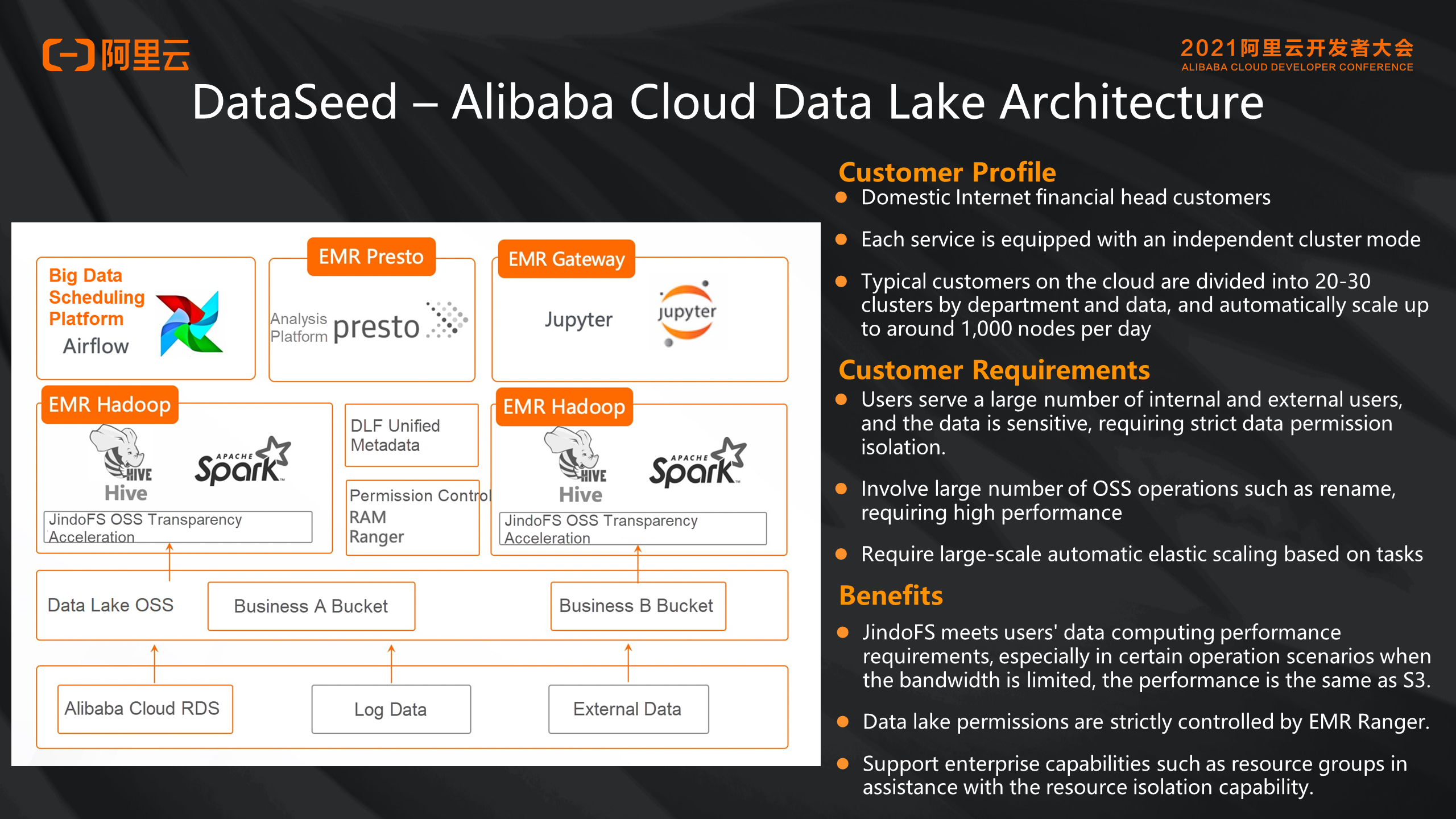

The diagram above shows the data lake architecture DataSeed Information Technology deployed on Alibaba Cloud. The architecture uses multiple buckets in the OSS data lake. Multiple EMR clusters perform analysis for different business requirements, using the transparent OSS cache acceleration provided by JindoFS. To schedule jobs across this large number of clusters, they use Airflow, a popular big data scheduling platform.

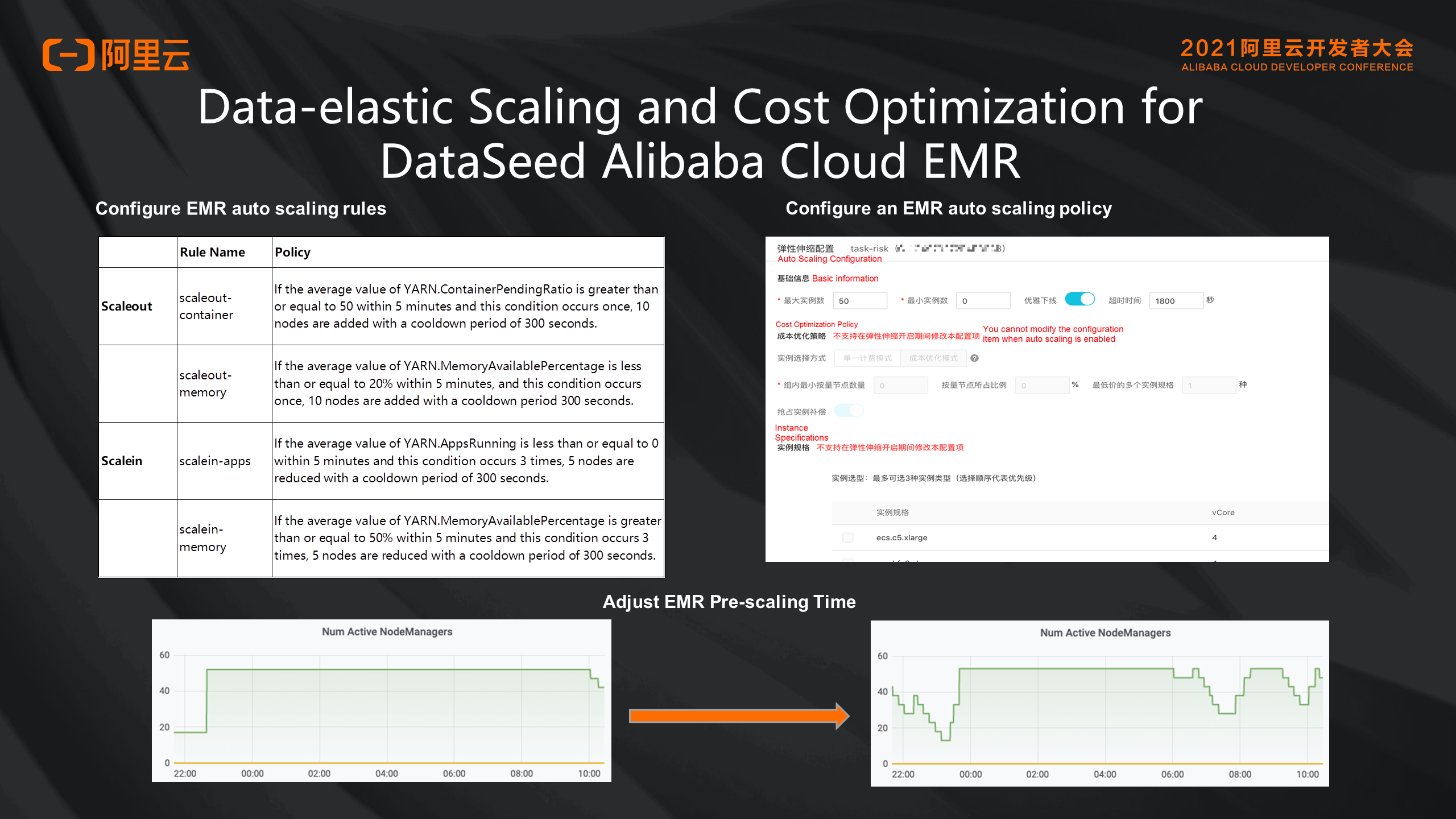

As mentioned earlier, one of the great values of a data lake is reducing storage and computing costs. Computing costs are reduced mainly through elastic scaling. In EMR, you can configure auto scaling policies and rules, and based on these rules and YARN scheduling metrics, the cluster scales out and in as needed. You can also schedule scaling in advance (pre-scaling) so resources are used only when necessary, minimizing computing costs. This is completely different from how Hadoop clusters were deployed several years ago.
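The decision logic of load-based scaling plus time-based pre-scaling can be sketched as a pure function. This is a minimal sketch under stated assumptions: `ScalingPolicy`, its field names, and the thresholds are hypothetical and are not EMR's configuration format; the inputs are loosely modeled on YARN queue metrics such as pending and available memory. Load thresholds move the cluster up or down by a fixed step, a pre-scaling window holds extra capacity ahead of a known peak, and the result is clamped to the allowed node range.

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class ScalingPolicy:
    """Hypothetical auto scaling policy; field names are illustrative only."""
    min_nodes: int
    max_nodes: int
    step: int                # nodes added or removed per decision
    scale_out_ratio: float   # pending/available memory above this -> scale out
    scale_in_ratio: float    # pending/available memory below this -> scale in
    prescale_start: time     # start of a window with a known load peak
    prescale_end: time
    prescale_nodes: int      # minimum capacity to hold during that window


def desired_nodes(policy: ScalingPolicy, current: int, pending_mb: float,
                  available_mb: float, now: time) -> int:
    """Return the target node count given YARN-style memory metrics."""
    ratio = pending_mb / max(available_mb, 1.0)
    target = current
    if ratio > policy.scale_out_ratio:
        target = current + policy.step
    elif ratio < policy.scale_in_ratio:
        target = current - policy.step
    # Time-based pre-scaling: keep extra capacity ready before the known peak.
    if policy.prescale_start <= now < policy.prescale_end:
        target = max(target, policy.prescale_nodes)
    return max(policy.min_nodes, min(policy.max_nodes, target))


policy = ScalingPolicy(min_nodes=2, max_nodes=20, step=2, scale_out_ratio=1.0,
                       scale_in_ratio=0.1, prescale_start=time(1, 0),
                       prescale_end=time(5, 0), prescale_nodes=10)
print(desired_nodes(policy, 4, pending_mb=8192, available_mb=4096, now=time(14, 0)))  # 6
```

Keeping the decision a pure function makes rules easy to test before attaching them to a real cluster.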

The preceding section describes how to build a cloud-native data lake. Enterprises store large volumes of structured, semi-structured, and unstructured data in their databases and data warehouses. How should they manage it? First, let's look at the problems enterprises face in data management.

1. Data Silos

2. High Costs of Data Development and O&M

3. Difficulty in Data Sharing and Application

4. Difficulty in Governance of Large-Scale Data

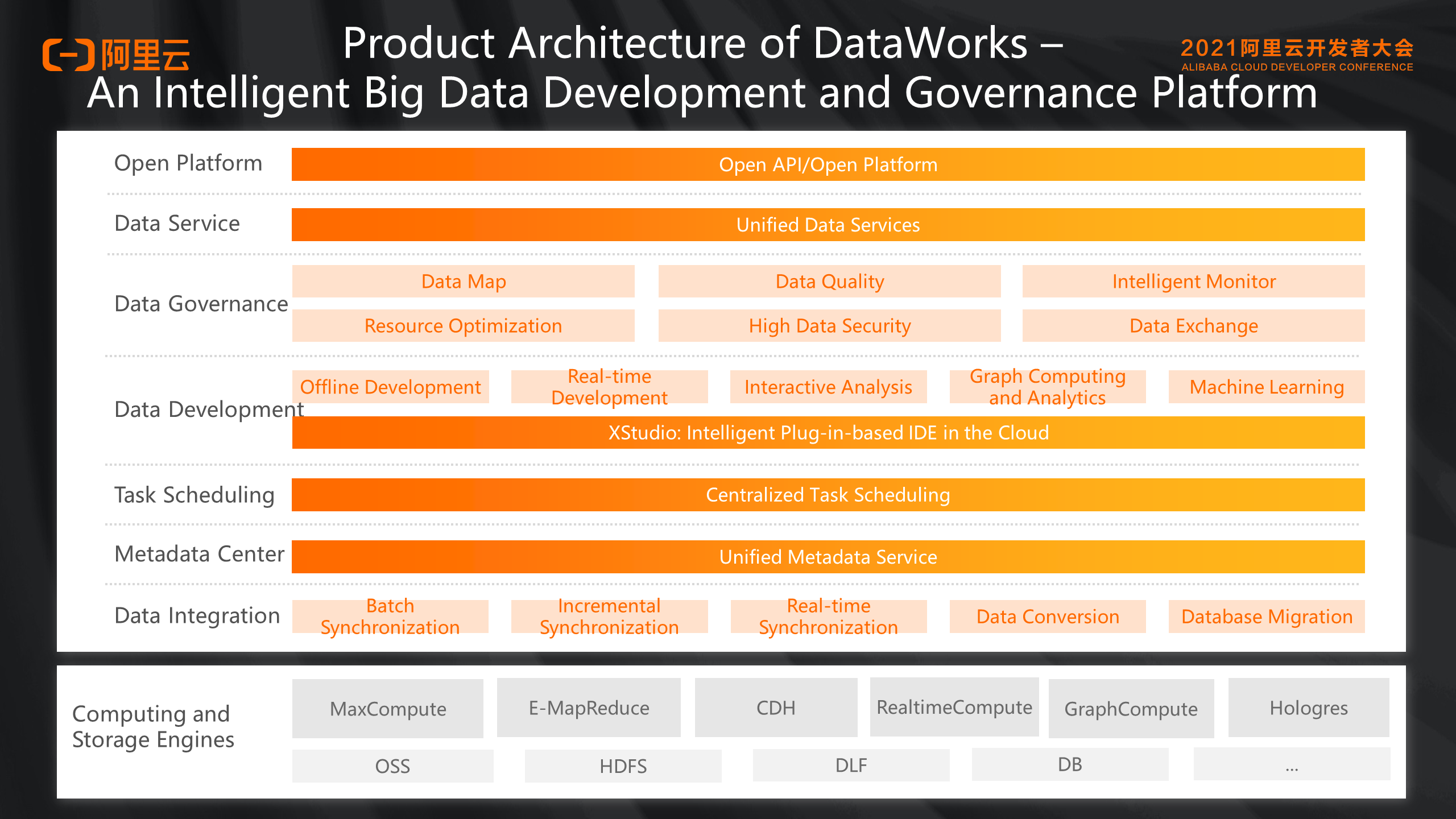

Alibaba Cloud DataWorks provides an all-in-one data development and governance solution to address these problems.

DataWorks is built on different computing and storage engines, including MaxCompute, the big data service developed by Alibaba Cloud, and the open-source big data platforms EMR and CDH. It also supports real-time computing, graph computing, and interactive analysis. On top of lake-warehouse unification systems based on OSS, HDFS, and DLF (Data Lake Formation), it provides real-time and offline data integration and development. Using unified task scheduling and metadata services, it provides a wide range of data governance capabilities, such as data quality, intelligent monitoring, data map, data security, and resource optimization. Its unified services help enterprises cover the last mile from the data platform to their business systems.

Finally, the entire platform is open to customers through open APIs, so you can integrate DataWorks deeply into your own systems without using the DataWorks interface.

What are the core capabilities of such a product? They can be summarized as follows:

First, data integration brings data into the warehouse and the lake. Second, once the data is in the system, data development refines and processes it with multi-engine support. Third, data governance provides a unified metadata service on top of the multi-engine lake-warehouse unification system, making data easier to use and more available. Finally, data service delivers the data to your business systems.

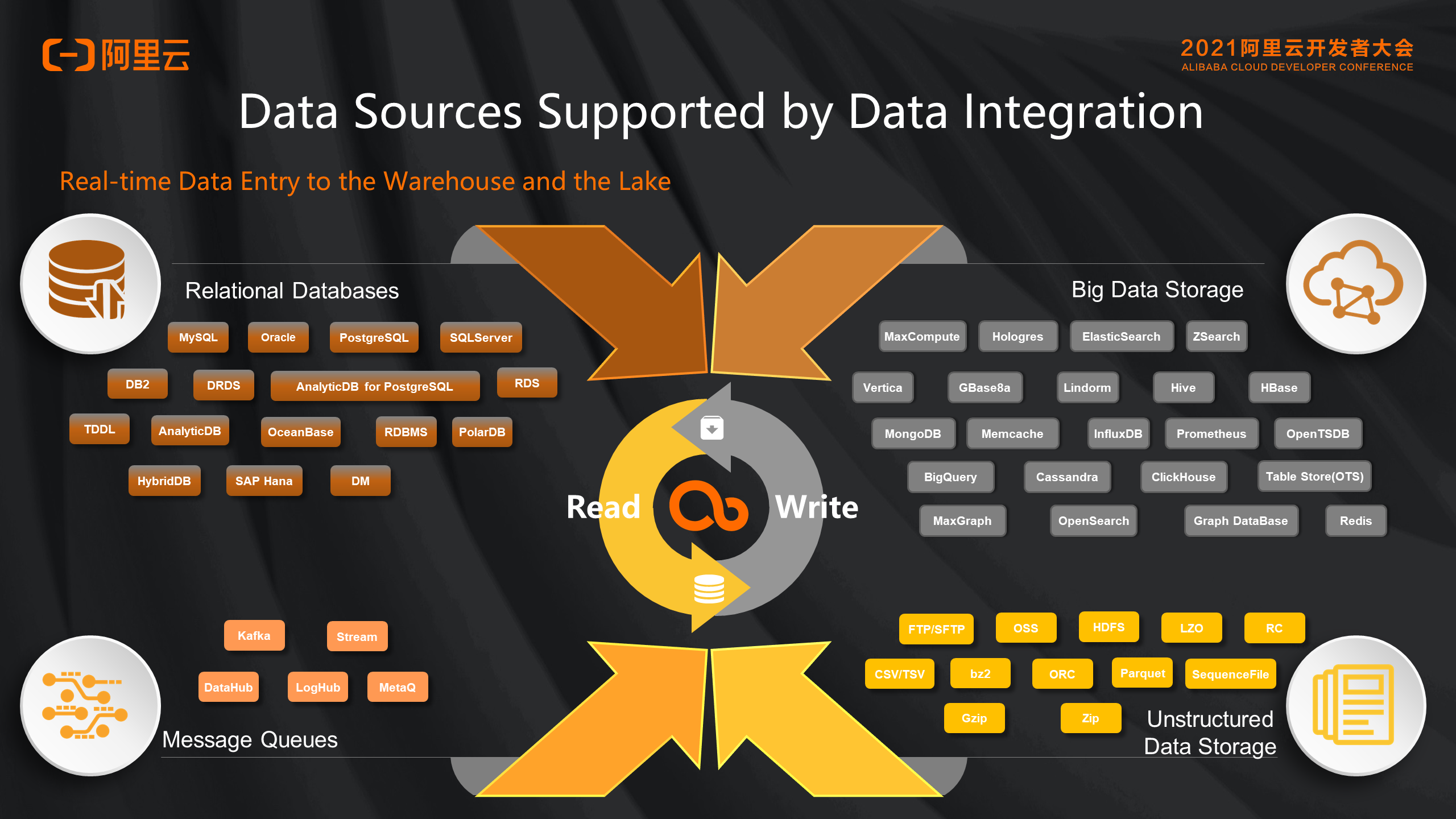

Data integration supports synchronization across more than 50 types of data sources, including relational databases, big data storage, message queues, and unstructured data. It supports both offline and real-time ingestion into data warehouses and data lakes.

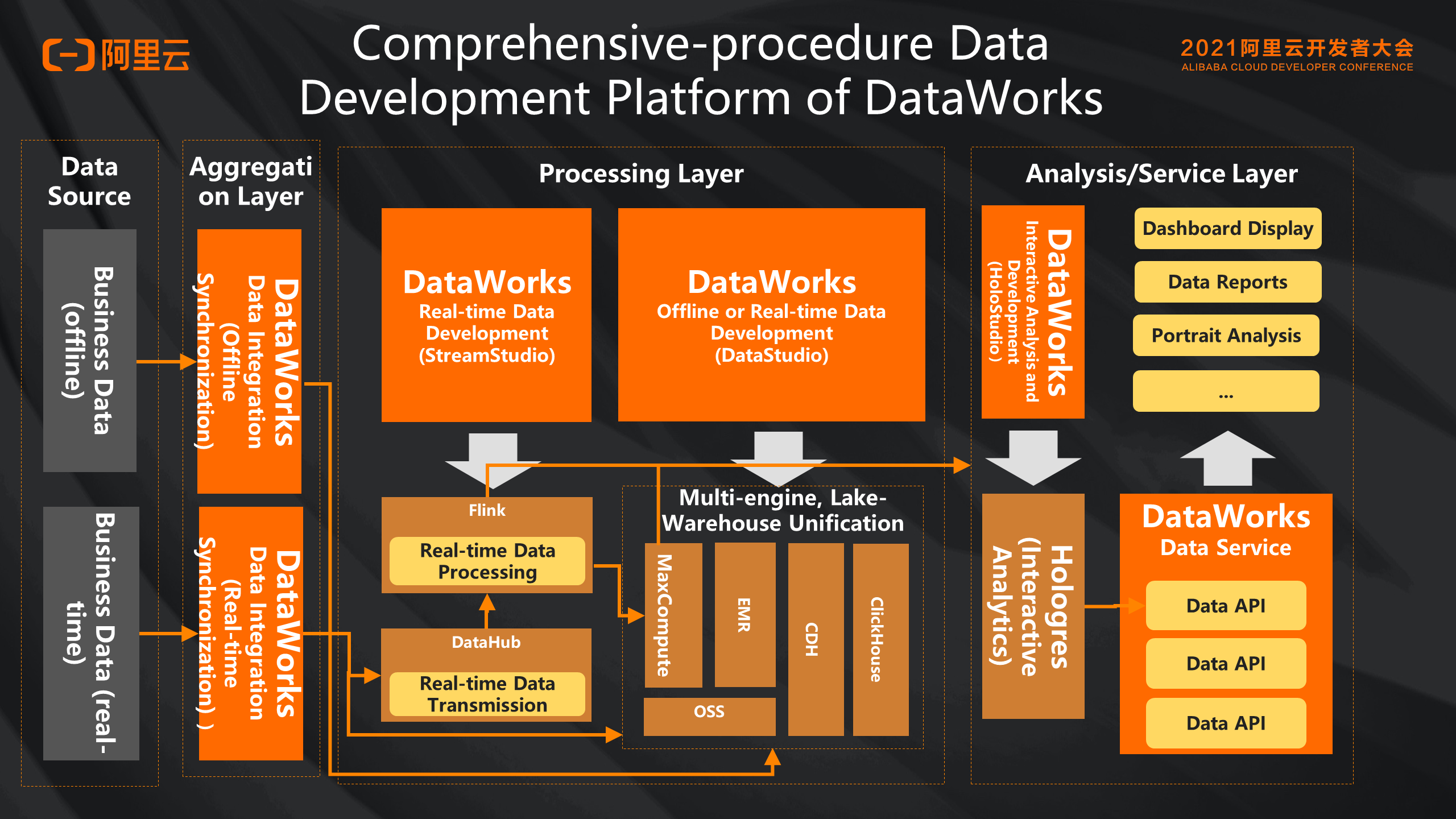

Once business data enters the computing and storage engines through data integration, DataWorks can perform real-time and offline development on it. With multi-engine support and cross-engine scheduling, you can choose the most suitable engine for each task based on its performance characteristics and orchestrate all of them as one data processing pipeline. Finally, the data is exposed through data service to BI tools for reports and visual analysis.

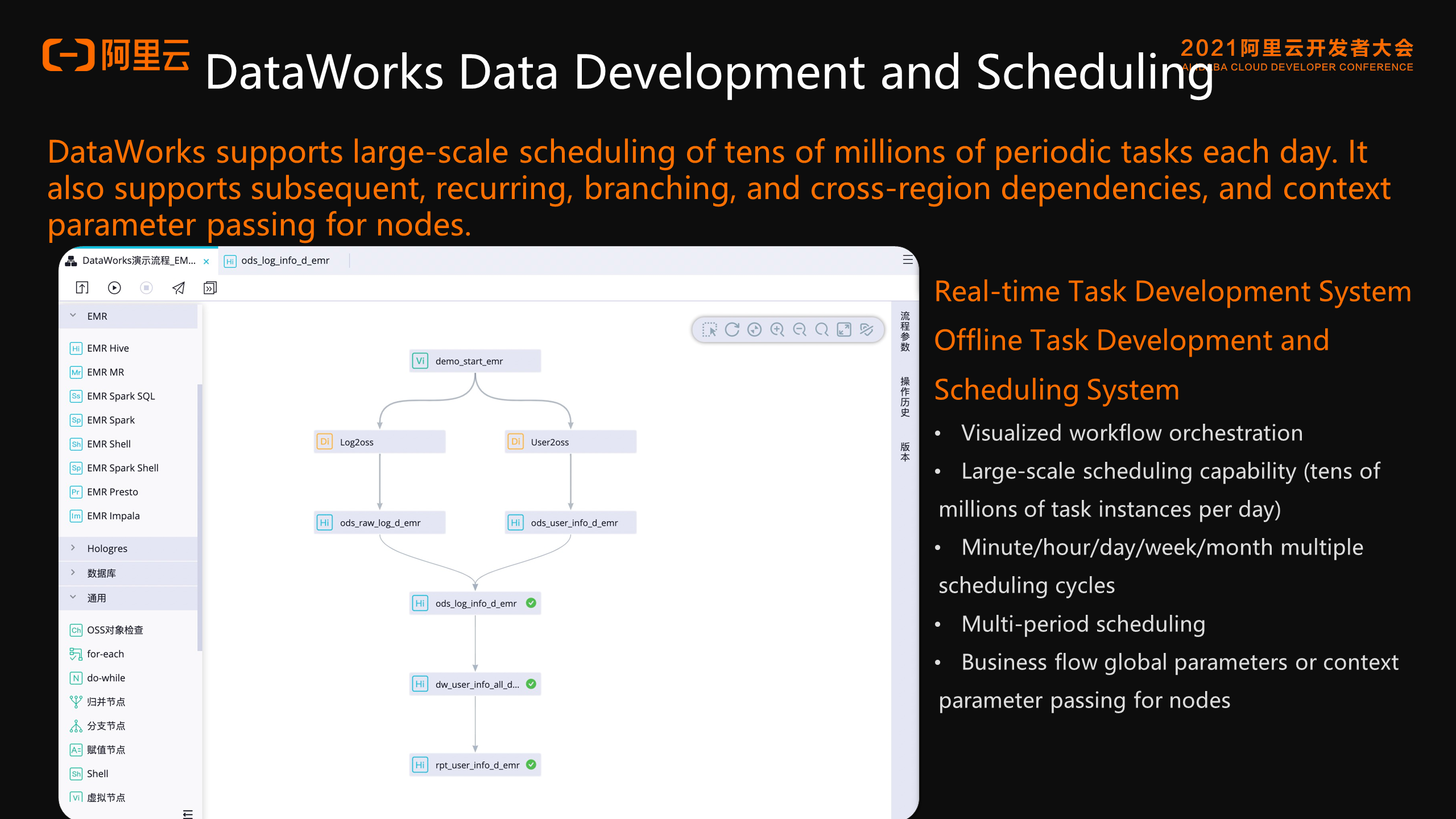

The preceding figure shows a simple example of scheduling EMR-based data development. It supports different types of EMR jobs, as well as a series of logical business nodes, including loops, sequences, branches, cross-region nodes, and dependencies. It provides large-scale scheduling that handles tens of millions of data items per day, matching the complex business logic of an enterprise.
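Dependency-based scheduling rests on topological ordering: a job can run only after all of its upstream jobs finish, and jobs with no mutual dependency can run in parallel. The sketch below uses Python's standard `graphlib.TopologicalSorter` to group a small, hypothetical job graph into parallel "waves"; the job names are illustrative, and this is the generic technique, not DataWorks' scheduler.

```python
from graphlib import CycleError, TopologicalSorter


def execution_waves(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group jobs into waves; every job in a wave depends only on earlier waves.

    `deps` maps each job to the set of jobs it depends on.
    Raises CycleError if the dependencies contain a cycle.
    """
    sorter = TopologicalSorter(deps)
    sorter.prepare()                      # validates the graph (detects cycles)
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all jobs whose dependencies are done
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Hypothetical EMR-style jobs: ingest -> clean -> two aggregations -> report.
deps = {
    "clean": {"ingest"},
    "spark_agg": {"clean"},
    "hive_agg": {"clean"},
    "report": {"spark_agg", "hive_agg"},
}
print(execution_waves(deps))
# [['ingest'], ['clean'], ['hive_agg', 'spark_agg'], ['report']]

try:
    execution_waves({"a": {"b"}, "b": {"a"}})
except CycleError as err:
    print("cycle detected:", err.args[1])
```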

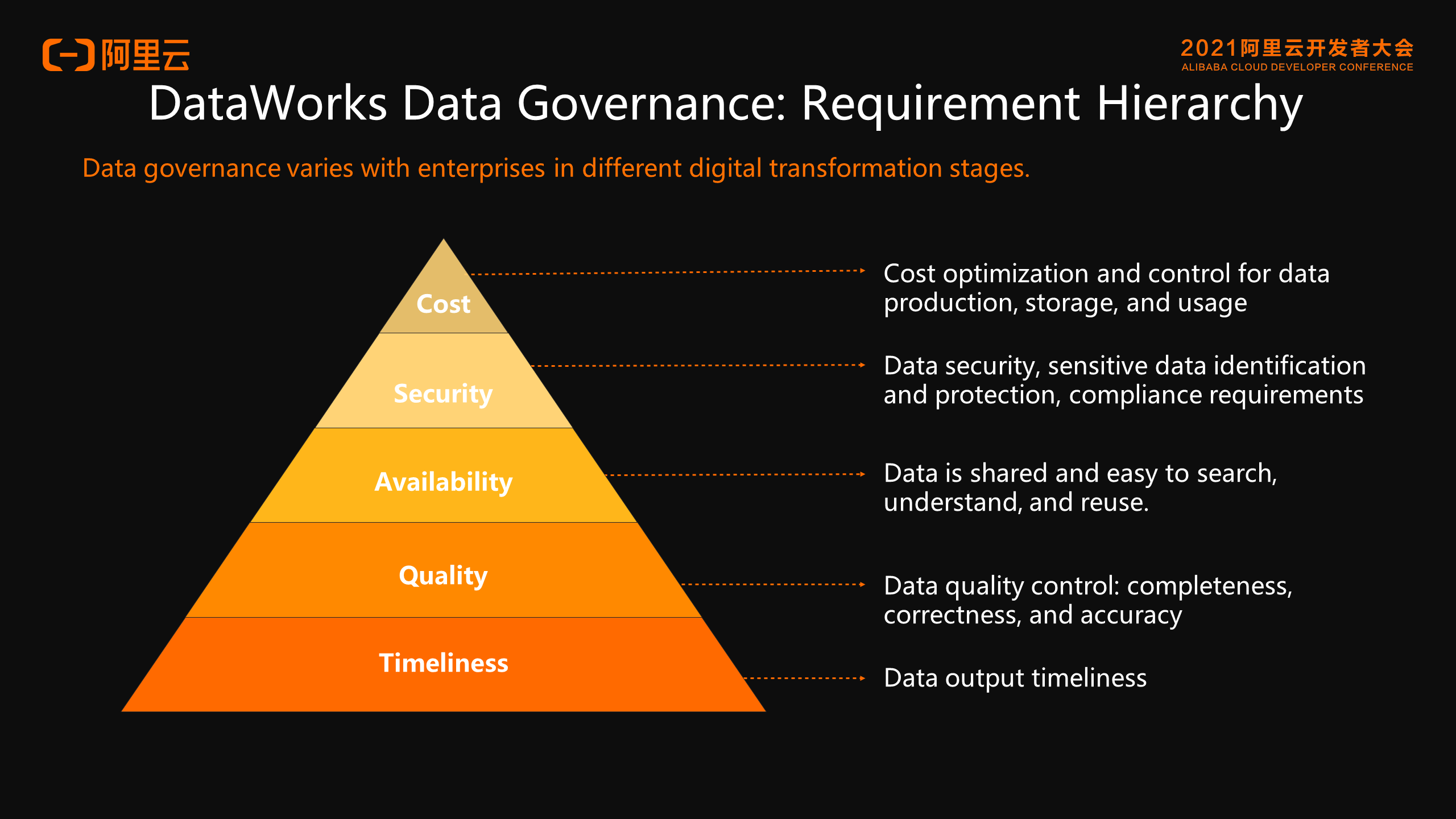

After data has gone through careful development, governance comes into focus. As enterprises' businesses grow and they move through different stages of digital transformation, their data governance requirements change. Beyond producing correct data on time, they place higher demands on data sharing, usability, understandability, data security, sensitive data identification, and cost optimization.

Given these data governance requirements, what capabilities does DataWorks provide to help enterprises manage and govern their data?

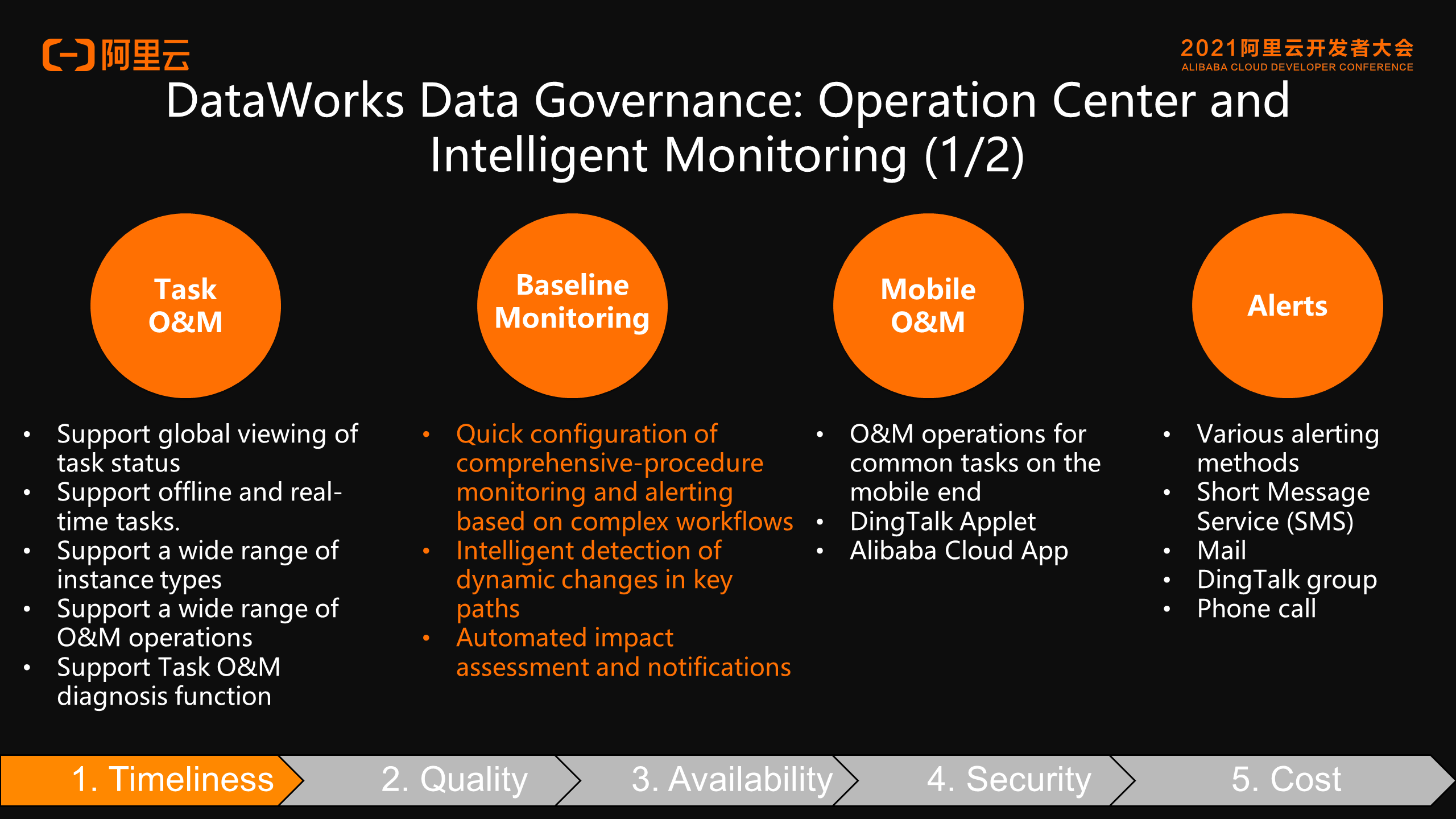

First, for timeliness, DataWorks provides comprehensive O&M and intelligent monitoring. Alerts are sent promptly through SMS, email, DingTalk, phone calls, and mobile O&M, so you can handle online tasks in time from your mobile phone.

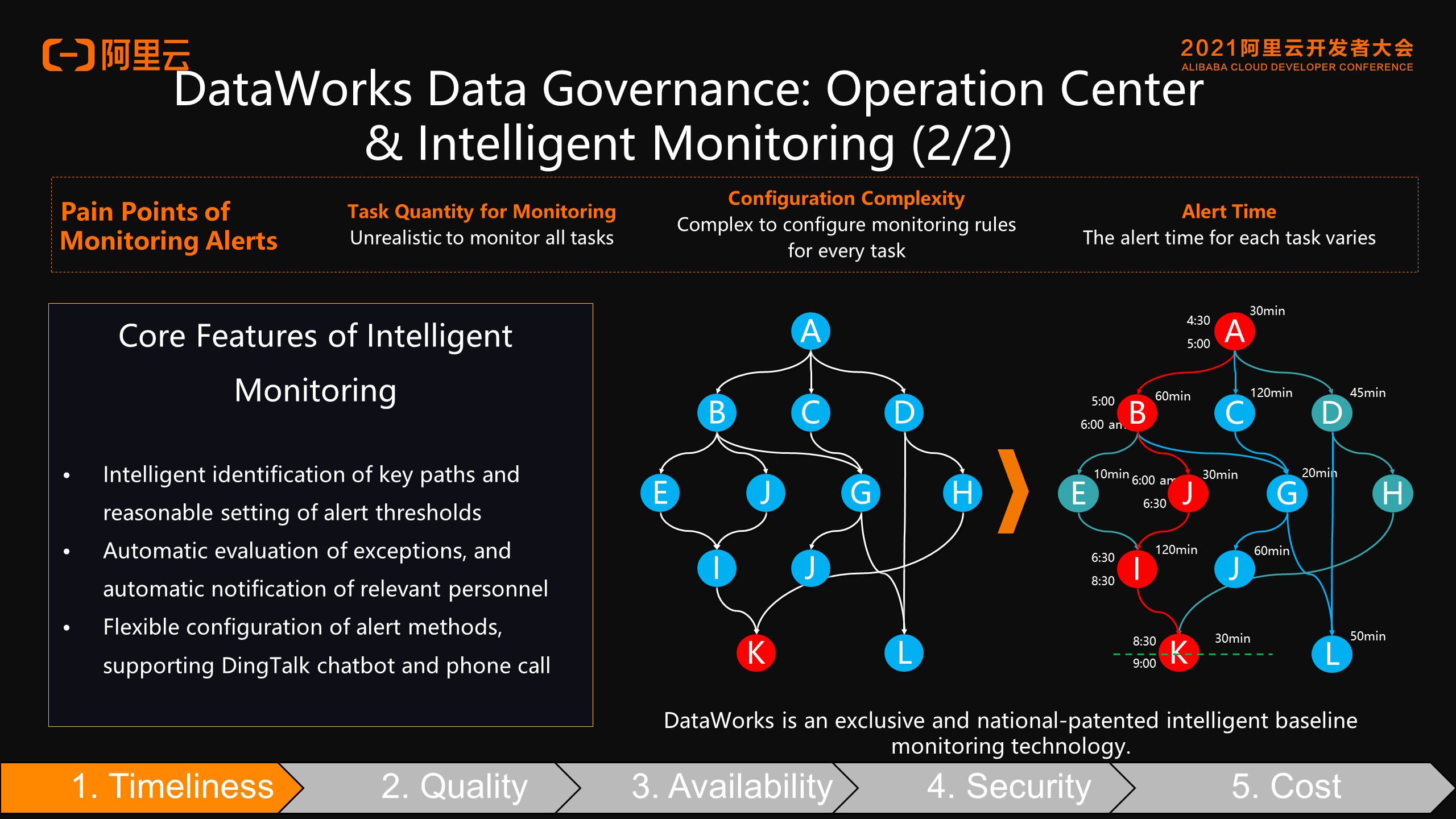

The figure shows intelligent baseline monitoring, a nationally patented technology developed by DataWorks. For node K in the figure, you only need to monitor the final node rather than each of its upstream nodes. DataWorks automatically searches and traverses the upstream nodes, identifies the critical path, and sets up intelligent monitoring and alerts at each node on that path, so problems can be detected early and handled in time.
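The core idea of watching only the final node can be approximated with classic critical path analysis on the upstream dependency graph. The sketch below is a generic illustration, not DataWorks' patented algorithm: node names follow the figure's style, but the graph, the durations (in minutes), and the 60-minute baseline are hypothetical. It finds the longest upstream chain ending at K and derives a per-node "must finish by" time so a slow node can be flagged before K misses its deadline.

```python
from functools import lru_cache


def critical_path(upstream: dict[str, tuple[str, ...]], duration: dict[str, int],
                  target: str) -> tuple[int, list[str]]:
    """Return (earliest finish of target, longest upstream chain ending at target)."""

    @lru_cache(maxsize=None)
    def finish(node: str) -> int:
        # A node starts after its slowest parent finishes.
        parents = upstream.get(node, ())
        return max((finish(p) for p in parents), default=0) + duration[node]

    path = [target]
    node = target
    while upstream.get(node):
        node = max(upstream[node], key=finish)  # follow the parent that finishes last
        path.append(node)
    return finish(target), path[::-1]


def alert_deadlines(path: list[str], duration: dict[str, int], deadline: int) -> dict[str, int]:
    """Latest finish time for each critical-path node that still lets the target meet the deadline."""
    deadlines, remaining = {}, 0
    for node in reversed(path):
        deadlines[node] = deadline - remaining
        remaining += duration[node]
    return deadlines


# Hypothetical DAG: each node maps to its upstream nodes.
UPSTREAM = {"K": ("H", "I"), "H": ("D", "E"), "I": ("F",),
            "D": ("A",), "E": ("B",), "F": ("C",)}
DURATION = {"A": 10, "B": 5, "C": 30, "D": 20, "E": 15, "F": 10, "H": 5, "I": 5, "K": 5}

total, path = critical_path(UPSTREAM, DURATION, "K")
print(total, path)  # 50 ['C', 'F', 'I', 'K']
print(alert_deadlines(path, DURATION, deadline=60))
```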

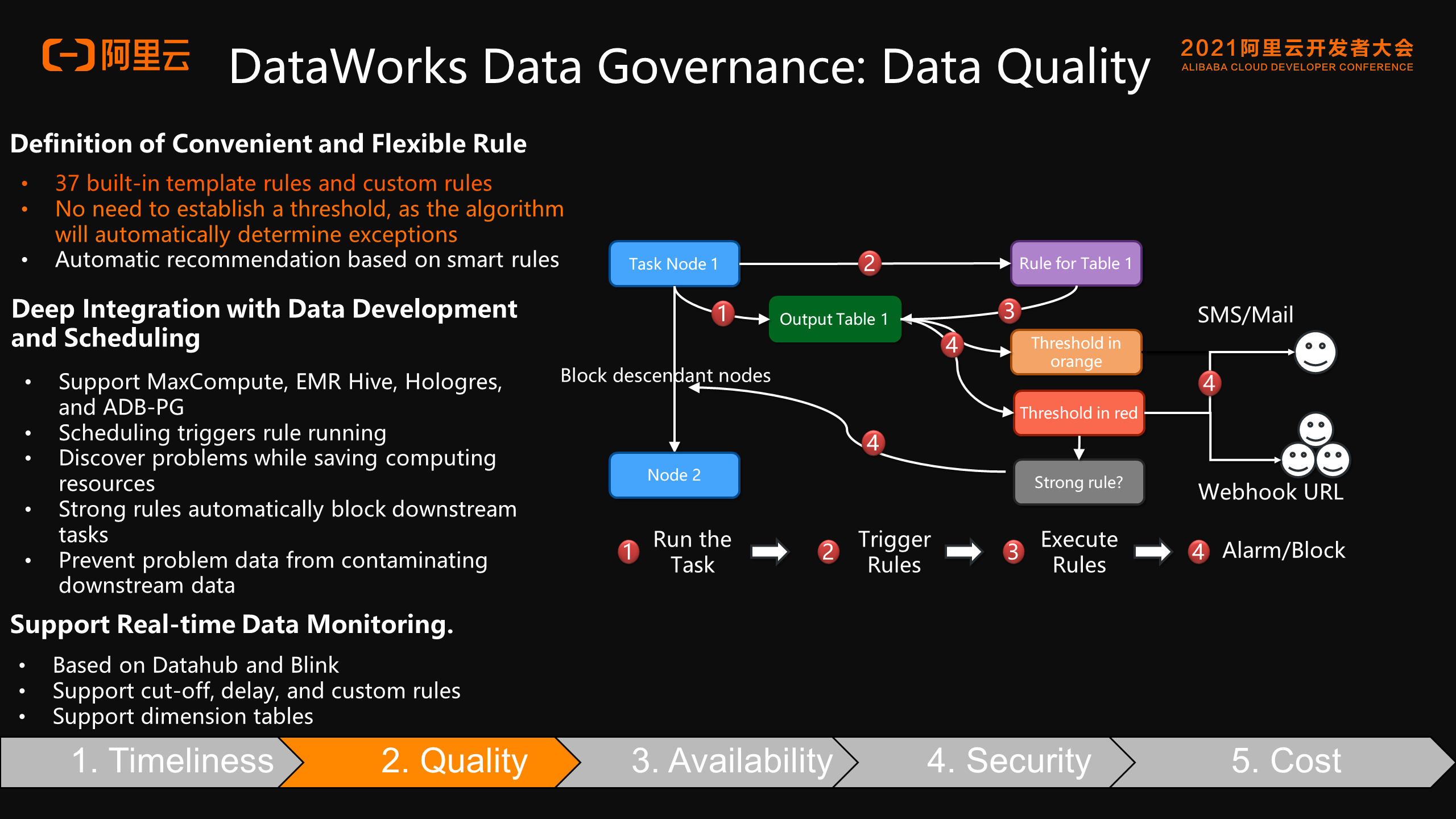

For data quality, DataWorks provides over 30 built-in rule templates as well as custom templates, so you can set up verification rules for any table based on your needs. Data quality is also closely integrated with the data development process: you can attach rules to any table in any business node, and the rules are triggered whenever that node's task is scheduled. You can also configure alerts by severity level or block downstream tasks to prevent dirty data from propagating.
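The warn-or-block behavior can be sketched as a quality gate that runs after a node produces its output. This is a minimal sketch, assuming rows arrive as Python dictionaries; `Rule`, `run_quality_gate`, the rule names, and the two severity levels are hypothetical stand-ins, not DataWorks' templates. Failed "warn" rules only raise alerts, while any failed "block" rule stops downstream tasks from running.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class Rule:
    """Hypothetical quality rule: a predicate over the table plus a severity."""
    name: str
    check: Callable[[Sequence[dict]], bool]
    severity: str  # "warn" (alert only) or "block" (stop downstream tasks)


def run_quality_gate(rows: Sequence[dict], rules: Sequence[Rule]) -> dict:
    """Evaluate every rule and decide whether downstream tasks may run."""
    warnings, blocked_by = [], []
    for rule in rules:
        if not rule.check(rows):
            (blocked_by if rule.severity == "block" else warnings).append(rule.name)
    return {"warnings": warnings, "blocked_by": blocked_by,
            "run_downstream": not blocked_by}


RULES = [
    Rule("row_count_min_1", lambda rows: len(rows) >= 1, "block"),
    Rule("user_id_not_null", lambda rows: all(r.get("user_id") is not None for r in rows), "block"),
    Rule("amount_non_negative", lambda rows: all(r.get("amount", 0) >= 0 for r in rows), "warn"),
]

rows = [{"user_id": 1, "amount": 10.0}, {"user_id": 2, "amount": -3.0}]
print(run_quality_gate(rows, RULES))
# {'warnings': ['amount_non_negative'], 'blocked_by': [], 'run_downstream': True}
```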

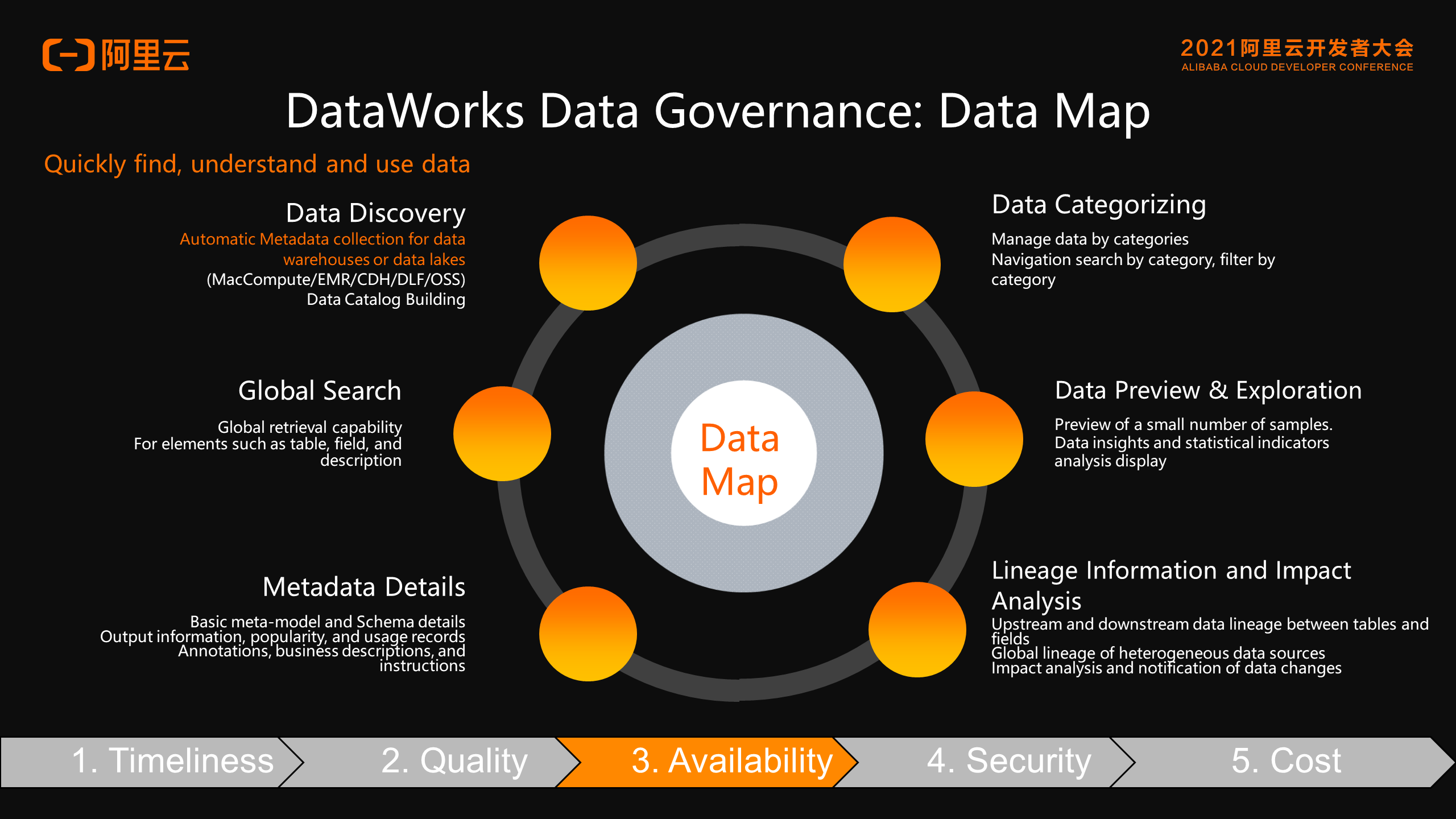

Building on high-quality, efficient data production, DataWorks provides unified metadata collection and management across the different engines in a lake-warehouse unification architecture. The collected metadata supports global data search, detailed analysis, data popularity, data output information, and accurate data lineage. You can trace data back to its source and perform various analyses based on this lineage, which makes data easier to understand, use, and find.
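Tracing data back to its source is a graph traversal over lineage edges. The sketch below is a generic breadth-first search, assuming lineage is available as a mapping from each table to the tables it is derived from; the layered table names are hypothetical and this is not the DataWorks metadata API.

```python
from collections import deque


def upstream_tables(lineage: dict[str, list[str]], table: str) -> set[str]:
    """All tables that `table` is derived from, directly or indirectly (BFS)."""
    seen: set[str] = set()
    queue = deque(lineage.get(table, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue  # shared ancestors are visited only once
        seen.add(current)
        queue.extend(lineage.get(current, []))
    return seen


def root_sources(lineage: dict[str, list[str]], table: str) -> set[str]:
    """Upstream tables that have no parents of their own, i.e. the original sources."""
    return {t for t in upstream_tables(lineage, table) if not lineage.get(t)}


# Hypothetical lineage: each table maps to the tables it is built from.
LINEAGE = {
    "ads_revenue_report": ["dws_daily_revenue"],
    "dws_daily_revenue": ["dwd_orders", "dwd_refunds"],
    "dwd_orders": ["ods_mysql_orders"],
    "dwd_refunds": ["ods_kafka_refunds"],
}
print(sorted(root_sources(LINEAGE, "ads_revenue_report")))
# ['ods_kafka_refunds', 'ods_mysql_orders']
```

Reversing the edges turns the same traversal into downstream impact analysis.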

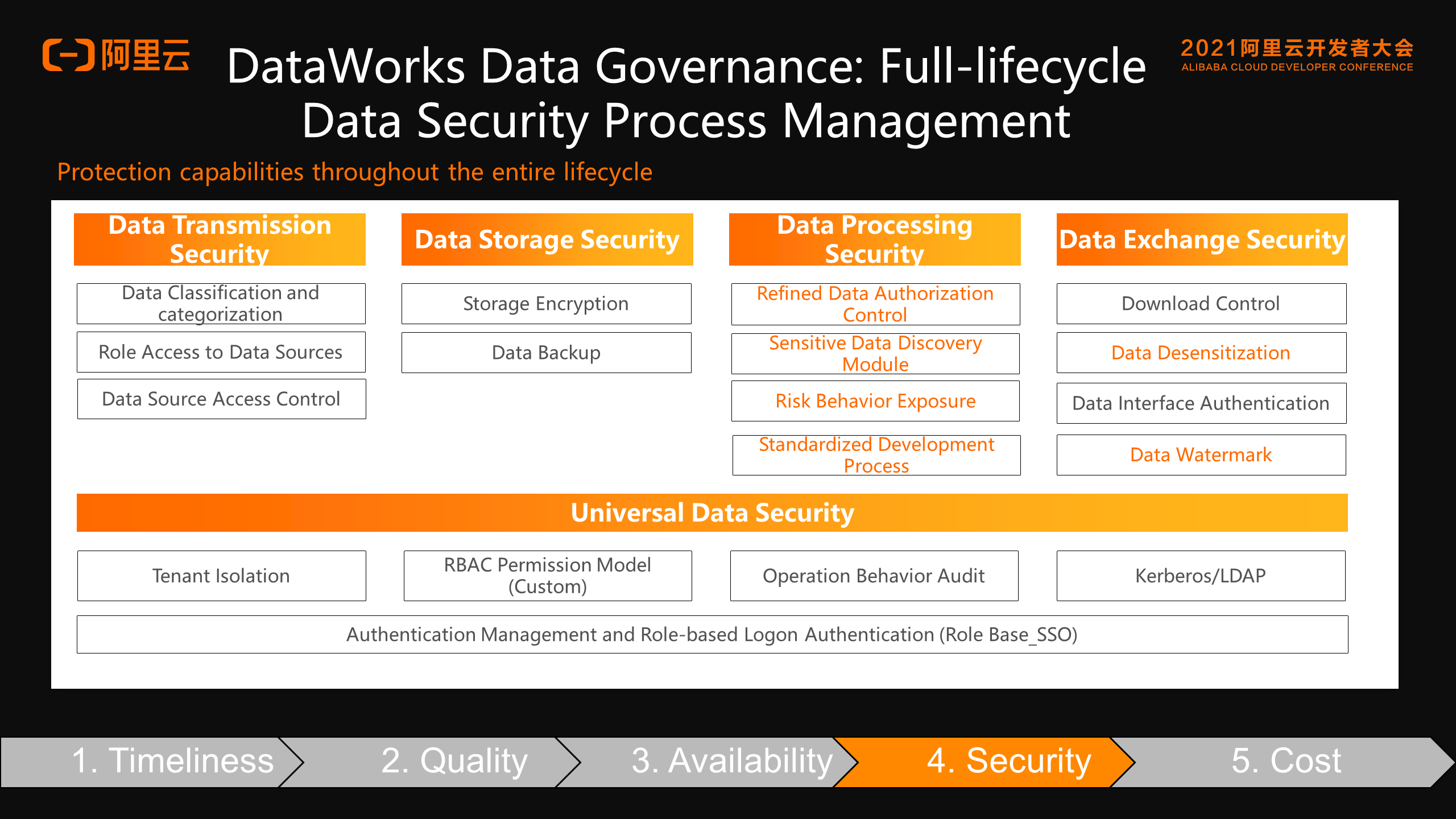

In addition, DataWorks provides data security protection across the full process. It offers tenant isolation and role-based access control, together with management and operation logs, and integrates with open-source Kerberos/LDAP. Protection covers the entire data development process, starting from data transmission: access to data sources is controlled during transmission; storage encryption, data backup, and recovery protect data in storage; finer-grained security controls apply during data processing; and data exchange, including data downloads and APIs, is protected by authentication and data masking.
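Data masking (desensitization) replaces sensitive values with partially hidden versions before data leaves a controlled environment. The sketch below shows two common masking patterns applied column by column; the keep-first-3-and-last-4 phone rule, the email rule, and the column names are hypothetical choices for illustration, not DataWorks' built-in masking rules.

```python
import re


def mask_phone(phone: str) -> str:
    """Keep the first 3 and last 4 digits; hide the rest."""
    digits = re.sub(r"\D", "", phone)  # drop separators such as '-' or spaces
    if len(digits) <= 7:
        return "*" * len(digits)
    return digits[:3] + "*" * (len(digits) - 7) + digits[-4:]


def mask_email(email: str) -> str:
    """Keep the first character of the local part and the full domain."""
    local, sep, domain = email.partition("@")
    if not sep:
        return "*" * len(email)
    return local[:1] + "*" * max(len(local) - 1, 0) + "@" + domain


MASKERS = {"phone": mask_phone, "email": mask_email}


def mask_row(row: dict, sensitive: dict[str, str]) -> dict:
    """Apply the masker named in `sensitive` to each sensitive column."""
    return {col: MASKERS[sensitive[col]](val) if col in sensitive else val
            for col, val in row.items()}


row = {"user_id": 7, "phone": "13812345678", "email": "alice@example.com"}
print(mask_row(row, {"phone": "phone", "email": "email"}))
# {'user_id': 7, 'phone': '138****5678', 'email': 'a****@example.com'}
```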

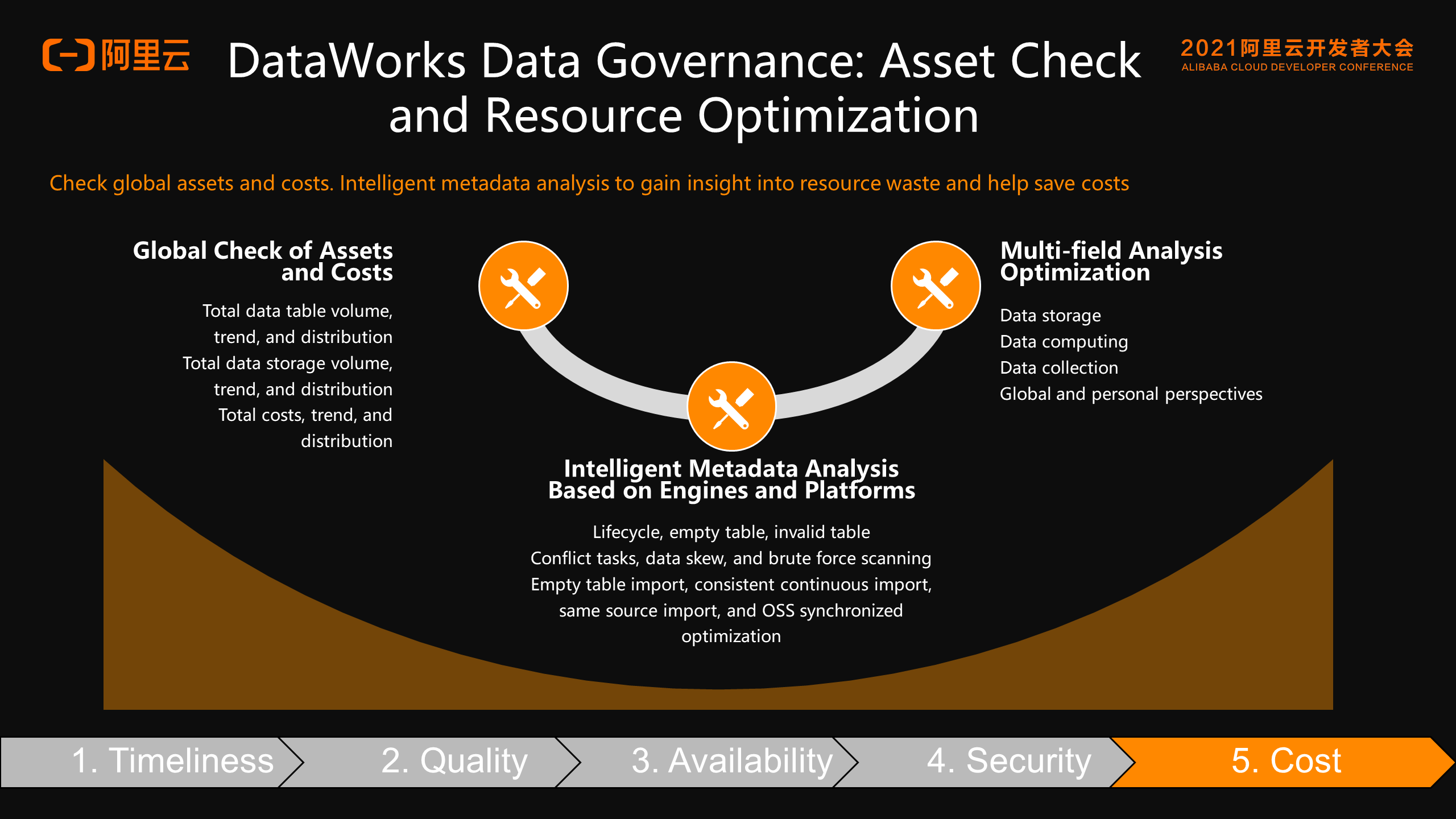

As data volumes grow, so do enterprises' data assets and their need to manage and control them. DataWorks has gradually extended its coverage from data warehouses to the data lake scenarios discussed above. Based on lake-warehouse unification, it inventories and plans resources across the entire enterprise and identifies optimizations for customers to help control costs.

Let's take EMR as an example to see how it is deeply integrated with DataWorks for unified data development and governance:

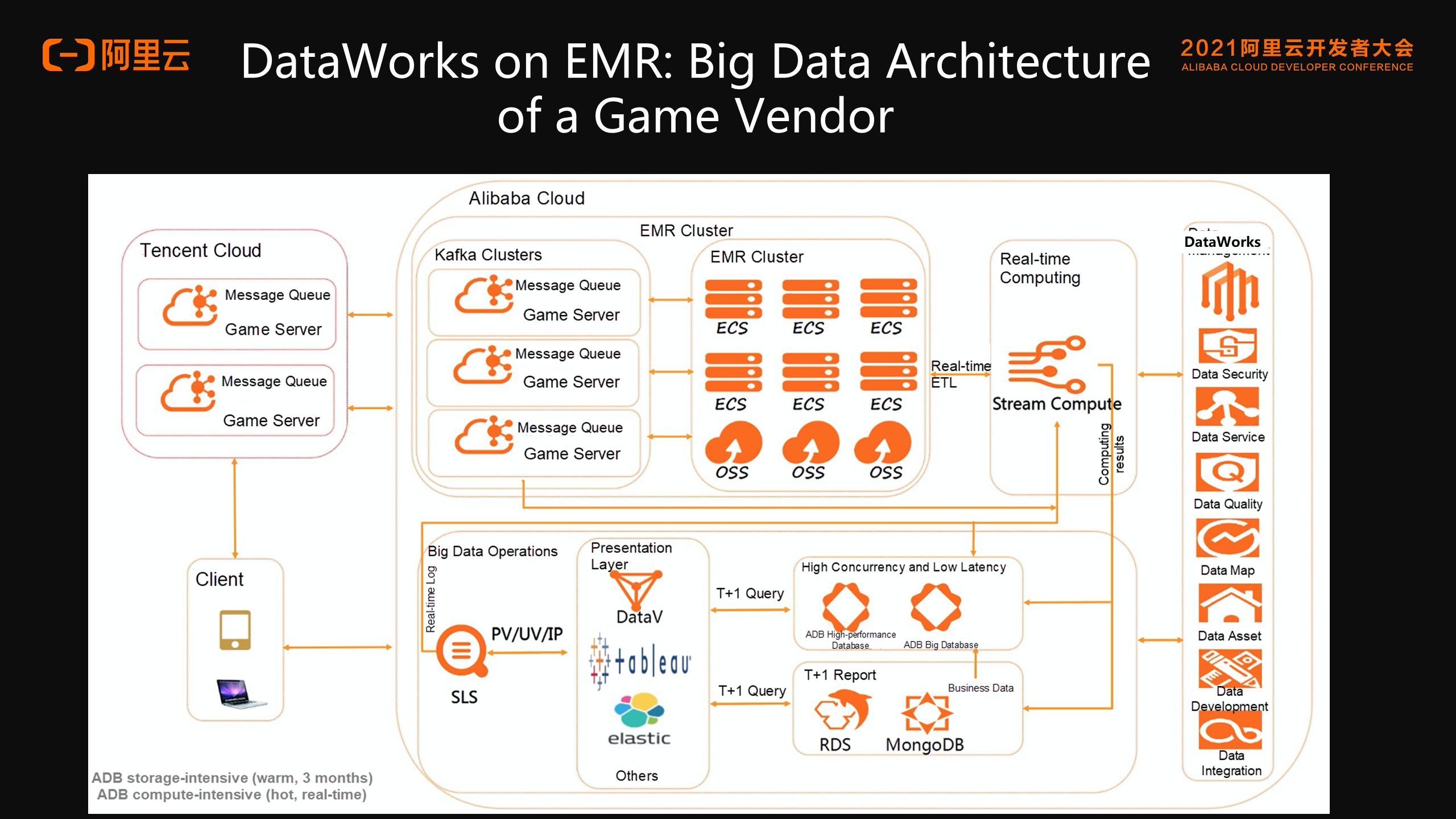

In this example, after a game company's data enters Alibaba Cloud, it first flows into an EMR cluster that handles message queuing. It then flows into EMR clusters for real-time computing and interactive analysis, which support big data BI dashboards.

Throughout this flow, DataWorks is deeply integrated with a wide variety of data storage and computing engines and covers the entire data development and governance process.

To sum up, DataWorks supports multiple engines and provides a global data development and governance platform based on lake-warehouse unification. It helps enterprises both turn their business activities into data and put that data to work for the business.
