With the recent rise of the data lake architecture, many enterprises have become interested in building data lakes. Compared with traditional big data platforms, a data lake places much higher demands on data governance, which challenges "traditional" big data tools in many areas. JindoTable addresses the major problems of managing structured and semi-structured data in a data lake, including data governance and query acceleration.

A data lake stores data from all types of sources. In both HDFS clusters and data lakes that separate compute from storage, small files cause a range of problems. For example, listing a directory that contains many files takes longer, and small files also affect the concurrency of computing engines. Because OSS is an object store, an excessive number of small files also increases the number of requests and the total request time, which degrades metadata operation performance and raises usage costs for enterprises. At the other extreme, if a file is very large and its format cannot be split into multiple parts for parallel reading, subsequent computations run with too little concurrency and cannot make full use of the cluster's computing capacity. Therefore, governing and optimizing data files is crucial in the data lake architecture.
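The small-file problem above is what file-size adjustment targets. The following Python sketch shows one simple way to plan compaction: greedily group small files into batches of roughly a target size so each batch can be rewritten as one larger file. It is a hypothetical illustration only; `DataFile`, `plan_compaction`, the 32 MB small-file threshold, and the 128 MB target are assumptions, not JindoTable's actual algorithm or defaults, and splitting oversized files is not shown.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataFile:
    path: str
    size_bytes: int


def plan_compaction(files, target_bytes, small_threshold):
    """Group small files into batches whose combined size stays near target_bytes.

    Files at or above small_threshold are left untouched. Small files are
    packed greedily (largest first) into the current batch until adding the
    next file would exceed target_bytes, at which point a new batch starts.
    """
    small = sorted(
        (f for f in files if f.size_bytes < small_threshold),
        key=lambda f: f.size_bytes,
        reverse=True,
    )
    batches, current, current_size = [], [], 0
    for f in small:
        if current and current_size + f.size_bytes > target_bytes:
            batches.append(current)
            current, current_size = [], 0
        current.append(f)
        current_size += f.size_bytes
    if current:
        batches.append(current)
    # Rewriting a single file on its own does not reduce the file count.
    return [batch for batch in batches if len(batch) > 1]


MB = 1024 * 1024
# Hypothetical partition: 40 files of 8 MB plus one 512 MB file.
files = [DataFile(f"part-{i:05d}", 8 * MB) for i in range(40)]
files.append(DataFile("part-big", 512 * MB))

plan = plan_compaction(files, target_bytes=128 * MB, small_threshold=32 * MB)
for i, batch in enumerate(plan):
    print(i, len(batch), sum(f.size_bytes for f in batch) // MB, "MB")
```

Here the 40 small files collapse into three rewrite batches, while the large file is left alone. A greedy next-fit strategy is used only because it is easy to follow; a real optimizer would also weigh sort order, partition boundaries, and file format.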

JindoTable provides a one-click optimization function based on the metadata managed by the data lake. Once users issue an optimization command and enough spare system resources are available, JindoTable automatically optimizes the data: it adjusts file sizes, sorts and precomputes the data, and generates appropriate index information and statistics. Combined with modifications to the computing engine, this information allows the engine to generate a more efficient plan and reduce query execution time. Because the optimization is transparent, the data remains consistent before and after the operation. This makes data optimization an indispensable part of data governance in a data lake.
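To illustrate how sorting plus statistics can lead to a more efficient plan, here is a minimal Python sketch of min/max-based file skipping. It assumes a single integer column and a range predicate; `FileStats`, `collect_stats`, and `prune_files` are hypothetical names, and JindoTable's actual index and statistics formats are not modeled here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileStats:
    path: str
    min_value: int
    max_value: int
    row_count: int


def collect_stats(path, values):
    """Record per-file min/max statistics for one column (computed at optimize time)."""
    return FileStats(path, min(values), max(values), len(values))


def prune_files(stats, low, high):
    """Keep only files whose [min, max] range can contain rows with low <= col <= high."""
    return [s.path for s in stats if s.max_value >= low and s.min_value <= high]


data = list(range(1000))  # hypothetical values of one column

# Layout A: data sorted on the column, then split into 4 files of 250 rows.
sorted_stats = [collect_stats(f"sorted-{i}", data[i * 250:(i + 1) * 250]) for i in range(4)]
# Layout B: the same rows spread round-robin across 4 files (no sorting).
unsorted_stats = [collect_stats(f"unsorted-{i}", data[i::4]) for i in range(4)]

# Query predicate: 300 <= col <= 400
print(prune_files(sorted_stats, 300, 400))    # only one file must be read
print(prune_files(unsorted_stats, 300, 400))  # every file overlaps the range
```

With sorted data the planner reads one file out of four; without sorting, the statistics cannot rule out any file. This is why sorting and statistics are generated together during optimization.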

Query acceleration is another notable feature of JindoTable. In a data warehouse, faster data analysis is always beneficial, and ad hoc queries are particularly sensitive to latency. The concept of "Lake-warehouse Integration" is currently very popular. Because a data lake typically uses an architecture that separates compute from storage, reducing I/O overhead is critical for shortening query time.

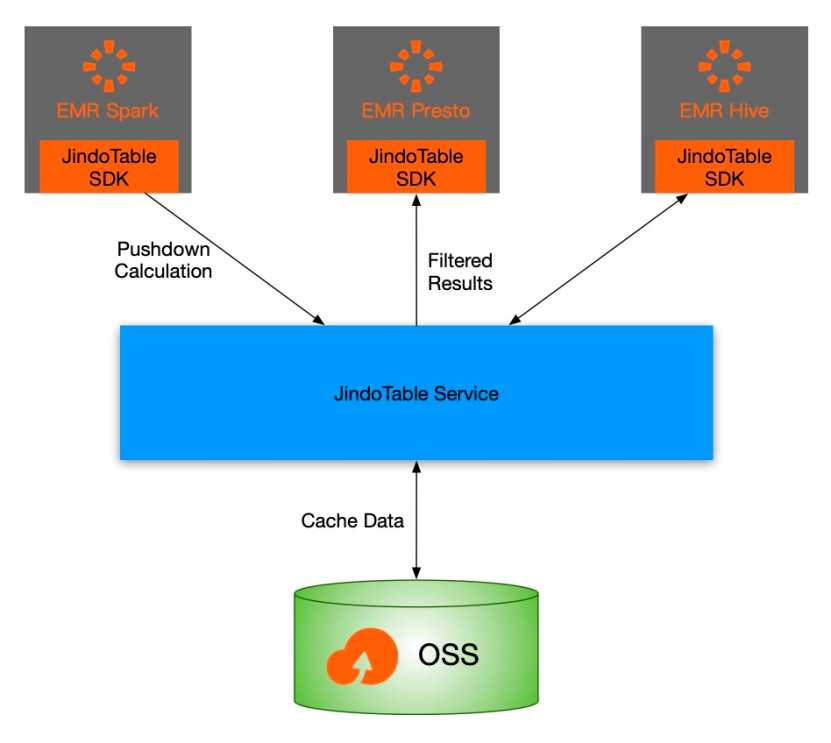

The data optimization function described above reduces storage-side overhead and, through precomputation, lays a solid foundation for runtime optimization. The query acceleration function pushes computations down to the storage side during query execution, which reduces the overall I/O pressure of the computation. At the same time, it uses spare computing resources on the storage side to perform the pushed-down work efficiently and reduce overall query time. Working with various modified computing engines, the acceleration function can push suitable operators down to the cache side, where efficient native code filters out a large amount of raw data before the remainder is transferred to the computing engine. This reduces the volume of data the engine has to process, and some computations no longer need to be executed in the engine at all, which saves time in subsequent stages.
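A minimal Python sketch of the pushdown idea: the same filter and projection are evaluated first in the engine after transfer, and then on the storage side before transfer, counting how many rows cross the network in each case. The function names and the sample table are hypothetical; the sketch models only the data-volume effect, not JindoTable's native execution or its engine integration.

```python
def scan_without_pushdown(rows, predicate, columns):
    """Storage returns every full row; the engine filters and projects afterwards."""
    transferred = list(rows)
    result = [{c: r[c] for c in columns} for r in transferred if predicate(r)]
    return result, len(transferred)


def scan_with_pushdown(rows, predicate, columns):
    """Filter and projection run on the storage/cache side; only matches are sent."""
    transferred = [{c: r[c] for c in columns} for r in rows if predicate(r)]
    return transferred, len(transferred)


def is_east(row):
    return row["region"] == "east"


# Hypothetical table: 1,000 rows, of which 10% match the query predicate.
rows = [
    {"id": i, "region": "east" if i % 10 == 0 else "west", "payload": "x" * 100}
    for i in range(1000)
]

plain_result, plain_sent = scan_without_pushdown(rows, is_east, ["id"])
pushed_result, pushed_sent = scan_with_pushdown(rows, is_east, ["id"])

print("rows transferred without pushdown:", plain_sent)
print("rows transferred with pushdown:   ", pushed_sent)
```

Both paths return the same result, but with pushdown only the matching rows, already stripped of the unused `payload` column, are transferred, so the engine receives a tenth of the rows.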

The data volume in a data lake generally grows rapidly. In a traditional Hadoop cluster, storage and compute scale together, so adding storage for growing data also adds computing resources, and the cluster soon ends up with more computing capacity than it needs. Besides the problems caused by a growing cluster, the operating cost of the cluster alone is a significant burden. Fortunately, the public cloud platform provides OSS (Object Storage Service), which charges users according to the volume of data stored, so users can reduce operating costs by moving data to OSS. Offloading data to OSS also avoids the HDFS stability problems that arise when cluster resources and data volume grow quickly. However, because OSS charges scale with stored volume, rapid data growth still increases the overall cost proportionally.

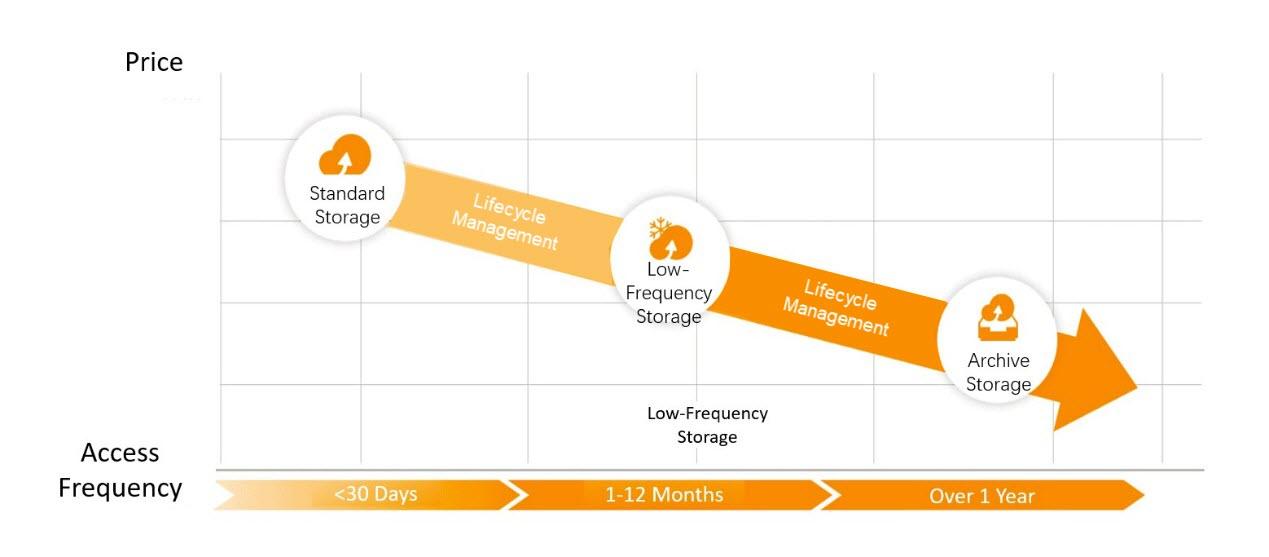

Alibaba Cloud OSS provides Infrequent Access (low-frequency) and Archive storage classes, and storing rarely accessed data in these classes can reduce costs. However, data that is accessed frequently but stored in OSS, away from the computing resources, suffers from limited I/O performance during computation.

Through its integration with the various computing engines in a data lake, JindoTable collects access frequency statistics using a table or partition as the minimum unit. Based on rules set by users, JindoTable identifies frequently accessed tables or partitions, and users can then run JindoTable commands to cache the corresponding data in the computing cluster, with support from the underlying storage layer, to accelerate query execution. Conversely, users can move the data of infrequently accessed tables or partitions to the Infrequent Access or Archive storage class, or set a data lifecycle policy. When archived data needs to be used again, users can restore it with JindoTable. JindoTable also provides metadata management for viewing the current storage status of tables and partitions. With these features, users can manage data efficiently and save costs without sacrificing computing performance.
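The rule-based tiering described above can be sketched as a function that maps per-partition access counts to a storage decision. This is a hypothetical Python illustration: the thresholds, the `Tier` names, and `classify_partitions` are assumptions standing in for user-defined rules, not JindoTable commands. The actual caching, storage-class changes, and lifecycle rules are carried out through JindoTable and OSS.

```python
from collections import Counter
from enum import Enum


class Tier(Enum):
    CACHE = "cache in the compute cluster"
    STANDARD = "keep in OSS Standard"
    INFREQUENT_ACCESS = "move to OSS Infrequent Access"
    ARCHIVE = "move to OSS Archive"


def classify_partitions(access_log, partitions, hot_min, ia_max, archive_max):
    """Map each partition to a storage tier from its access count.

    Hypothetical user rules: at least hot_min accesses -> cache;
    at most archive_max -> archive; at most ia_max -> Infrequent Access;
    anything in between stays in Standard. Requires archive_max < ia_max < hot_min.
    """
    if not archive_max < ia_max < hot_min:
        raise ValueError("expected archive_max < ia_max < hot_min")
    counts = Counter(access_log)  # partitions never accessed count as 0
    plan = {}
    for p in partitions:
        n = counts[p]
        if n >= hot_min:
            plan[p] = Tier.CACHE
        elif n <= archive_max:
            plan[p] = Tier.ARCHIVE
        elif n <= ia_max:
            plan[p] = Tier.INFREQUENT_ACCESS
        else:
            plan[p] = Tier.STANDARD
    return plan


partitions = ["dt=2024-06", "dt=2024-05", "dt=2024-01", "dt=2023-01"]
# Hypothetical access log collected from the computing engines over one period.
access_log = ["dt=2024-06"] * 50 + ["dt=2024-05"] * 5 + ["dt=2024-01"] * 1

plan = classify_partitions(access_log, partitions, hot_min=20, ia_max=2, archive_max=0)
for partition, tier in plan.items():
    print(partition, "->", tier.value)
```

Keeping the rules as explicit thresholds makes the decision easy to audit: a partition that was never accessed is archived, a rarely accessed one moves to Infrequent Access, and a hot one is cached close to compute.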

A data lake integrates data from various sources for enterprises. With the support of multiple cloud products, the data lake architecture helps enterprises explore the value of their data and realize their corporate vision. As part of the data lake solution, JindoTable provides value-added data governance and query acceleration functions. It also lowers the barrier to migrating data into a data lake and helps enterprises extract more value from their data at a lower cost.
