This article is based on a talk from the Apsara Conference 2021, a replay of which is available online.

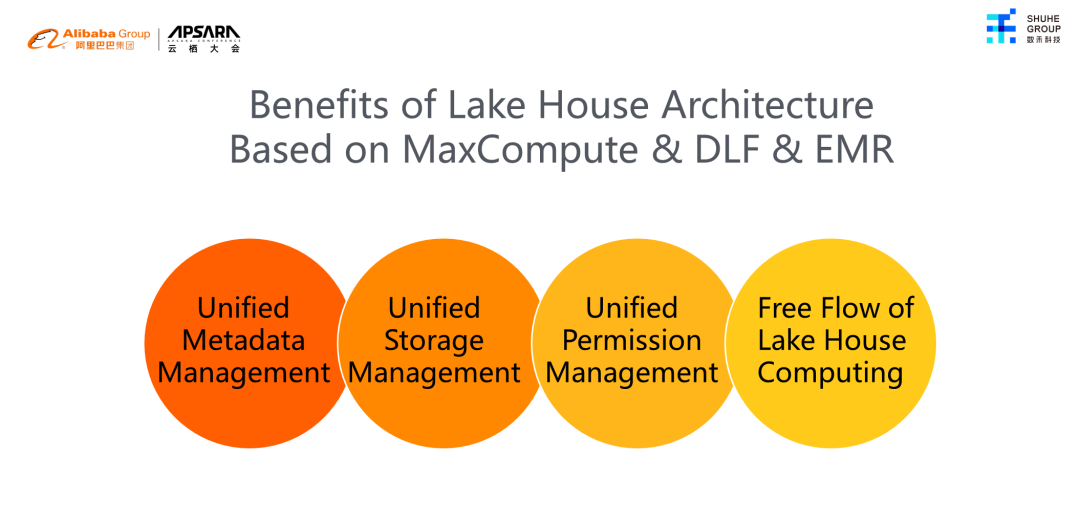

"The lake house architecture of MaxCompute & DLF & EMR realizes unified metadata management, storage management, and permission management. It truly lets computing flow freely across the lake house and supports the rapid growth of enterprise business," said Cheng Junjie, Head of the Big Data Platform of Shanghai Shuhe Group.

Cheng Junjie, Head of the Big Data Platform of Shanghai Shuhe Group

Today, I want to explain the four stages of lake house evolution at Shanghai Shuhe Group, including the architecture of the big data platform at each stage and the reasons behind each change. I will focus on the lake house architecture based on MaxCompute & Data Lake Formation (DLF) & E-MapReduce (EMR), covering the big data platform built on it, the challenges we faced when implementing it, and the benefits after it went live. Finally, I will introduce our future plans for the lake house. I hope this article helps other enterprises build and grow their own big data platforms.

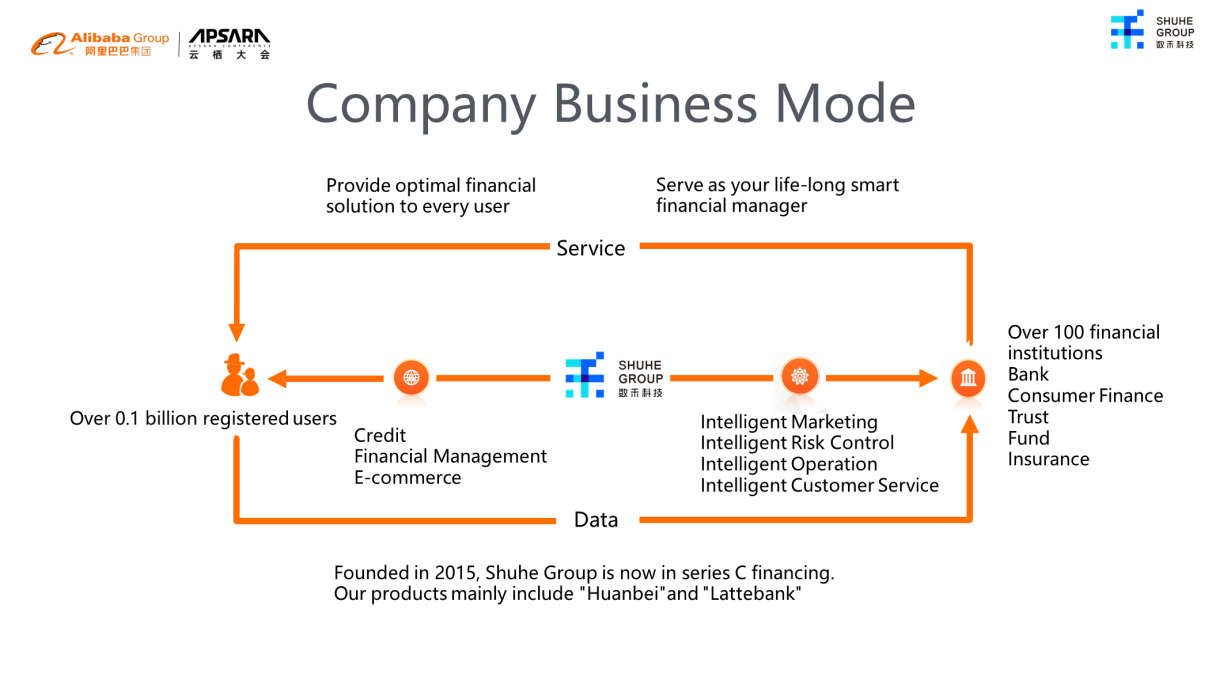

Shanghai Shuhe Group was established in 2015. We are now in the Series C round, and our main products are Huanbei and Lattebank.

We currently have more than 100 million users. Our businesses cover credit, wealth management, and e-commerce, and our products include intelligent marketing, risk control, operation, and customer service. We cooperate with more than 100 financial institutions, including banks, consumer finance companies, trust companies, fund companies, and insurance companies.

Our mission is to provide everyone with optimal financial service solutions, and our vision is to be a smart finance manager that accompanies our clients for a lifetime.

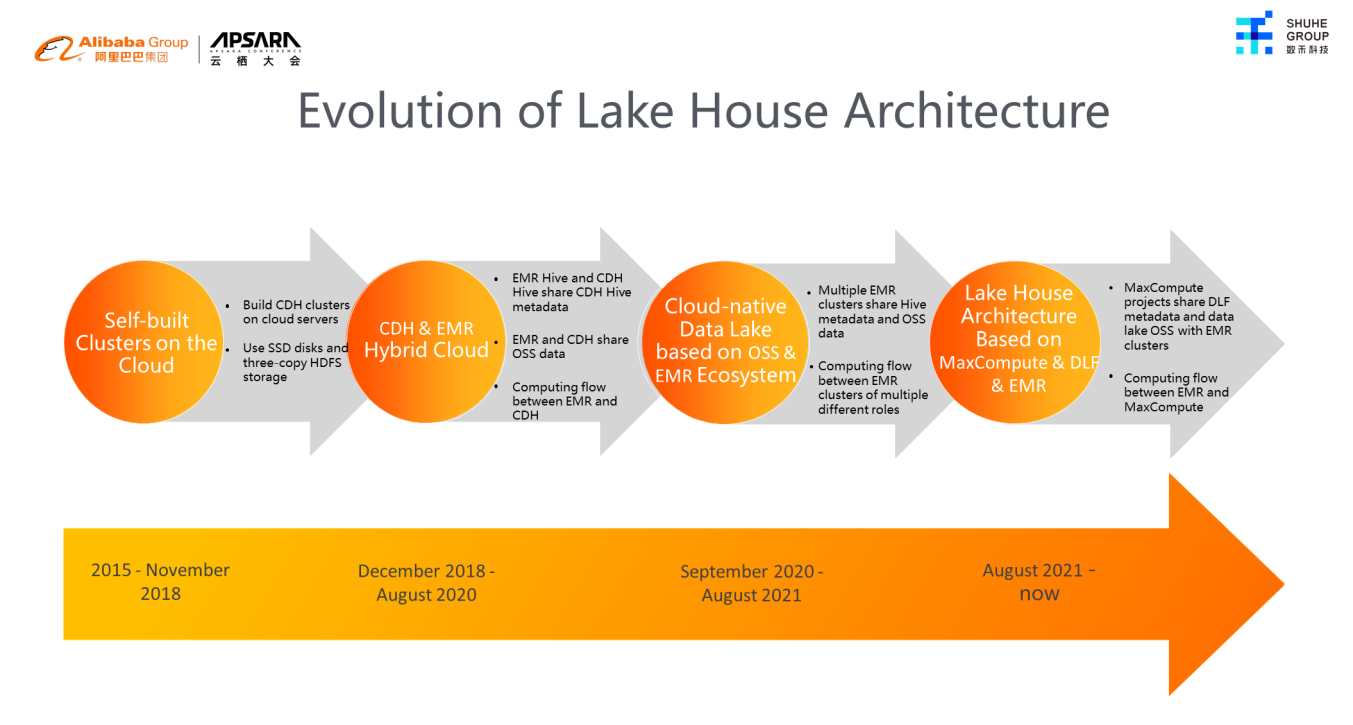

The evolution of the lake house architecture of Shuhe Group's big data platform is divided into the following four stages:

2015 - November 2018: Self-Built CDH Cluster on the Cloud

When the company was founded, Shuhe purchased cloud servers and built CDH clusters on them, with Hive as our primary data warehouse. Because the data volume was small at first, we chose local SSD disks and stored data in HDFS with three replicas.

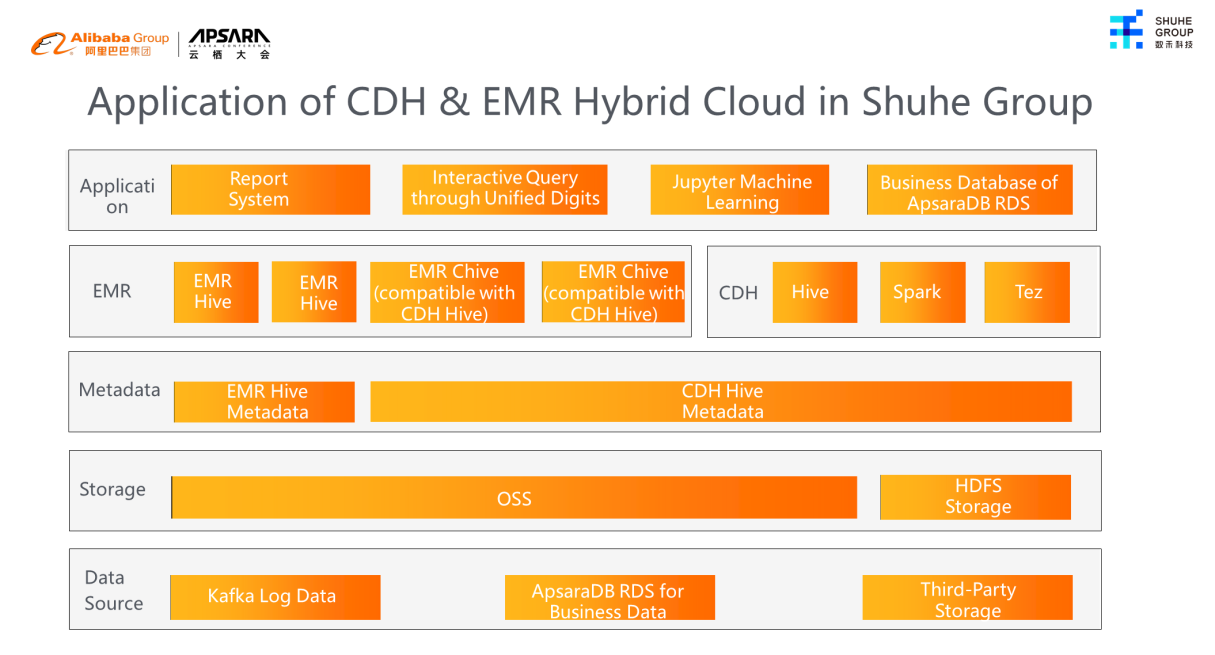

December 2018 - August 2020: CDH & EMR Hybrid Cloud Architecture

As our business grew rapidly, we modified the Hive Metastore source code of EMR Hive so that EMR Hive and CDH Hive, despite running different versions, could share the CDH Hive metadata. This extended the computing power of the CDH clusters: computation could flow between EMR and CDH, and the pressure on CDH computing resources was reduced. Some cold data on HDFS, such as logs and backup data, was moved to OSS, where CDH and EMR could share it through external tables, reducing the storage cost pressure on CDH.

September 2020 - August 2021: The Cloud-Native Data Lake Based on OSS & EMR Ecosystem

Under this architecture, we built EMR clusters for every department. In terms of computing, multiple EMR clusters shared the same Hive metadata while each performed its own tasks, so computation could flow freely between EMR clusters with different roles. In terms of storage, multiple EMR clusters shared OSS, and each EMR cluster was bound to its own RAM role that controlled which OSS data it could access.
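Binding each cluster to its own RAM role amounts to giving each cluster a least-privilege OSS policy. The Python sketch below builds such a policy per cluster and checks requests against it with a deliberately simplified matcher (Allow statements only, shell-style wildcards); it is not RAM's actual evaluation engine. The cluster names, bucket names, region, and account ID are hypothetical.

```python
import fnmatch
import json

# Hypothetical EMR cluster roles and the OSS buckets each one may use.
CLUSTER_BUCKETS = {
    "emr-sync": ["ods-raw"],
    "emr-core-dw": ["ods-raw", "dw"],
    "emr-risk-mart": ["dw", "mart-risk"],
}

OSS_ACTIONS = ["oss:ListObjects", "oss:GetObject", "oss:PutObject", "oss:DeleteObject"]


def build_policy(buckets):
    """Build a RAM-style policy that allows OSS access to the given buckets only."""
    resources = []
    for bucket in buckets:
        resources.append(f"acs:oss:*:*:{bucket}")    # the bucket itself (listing)
        resources.append(f"acs:oss:*:*:{bucket}/*")  # every object in the bucket
    return {
        "Version": "1",
        "Statement": [
            {"Effect": "Allow", "Action": list(OSS_ACTIONS), "Resource": resources}
        ],
    }


def is_allowed(policy, action, resource):
    """Simplified check: Allow statements only, shell-style wildcard matching.

    Real RAM evaluation also handles Deny statements, conditions, and
    action wildcards; this sketch ignores all of that.
    """
    for statement in policy["Statement"]:
        if statement["Effect"] != "Allow" or action not in statement["Action"]:
            continue
        if any(fnmatch.fnmatchcase(resource, pattern) for pattern in statement["Resource"]):
            return True
    return False


if __name__ == "__main__":
    policy = build_policy(CLUSTER_BUCKETS["emr-risk-mart"])
    print(json.dumps(policy, indent=2))
```

With this policy, the hypothetical risk mart cluster can read `dw` objects but is refused access to the raw ODS bucket, which is the isolation the per-cluster RAM roles provide.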

August 2021 - Now: Lake House Architecture Based on MaxCompute & DLF & EMR

When Shuhe purchased Alibaba Cloud's data middle platform, which uses MaxCompute as its computing engine, the purchase posed compatibility challenges for the cloud-native data lake architecture of the previous stage. After several discussions with Alibaba Cloud's expert team, we implemented the lake house architecture based on MaxCompute & DLF & EMR. MaxCompute projects and EMR clusters share DLF metadata and OSS data lake storage, which enables computation to flow between EMR and MaxCompute.

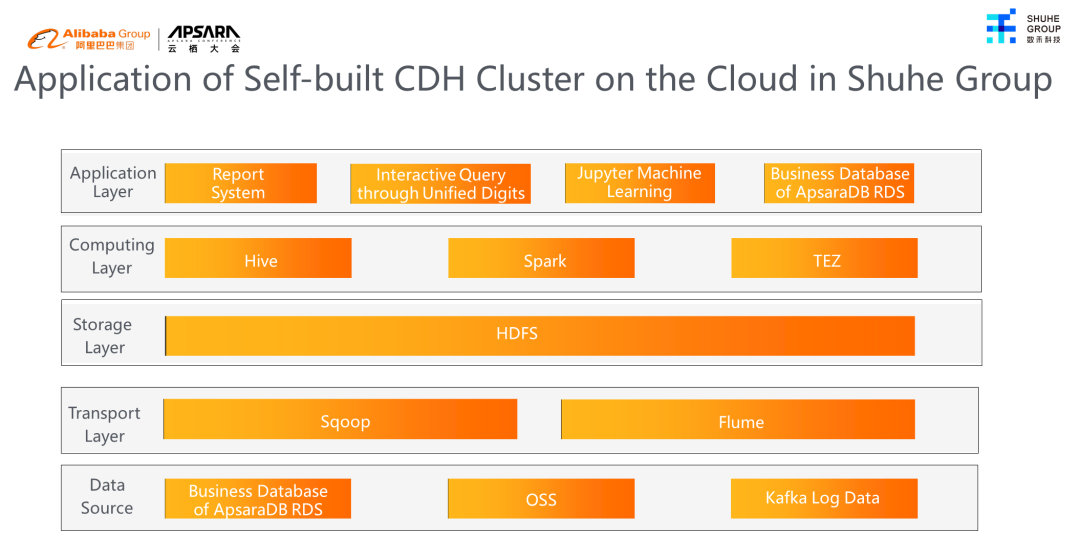

Six years ago, like most start-up companies, Shuhe chose the big data solution of purchasing servers on the cloud and building CDH clusters.

Data sources included offline and real-time sources. Offline data was stored in ApsaraDB RDS and OSS, and real-time data consisted of tracking-point logs in Kafka. Offline data was extracted with Sqoop and real-time data with Flume, and both were stored in the HDFS storage of CDH.

The computing layer used Hive at first and later adopted the Spark and Tez computing engines, which executed more efficiently.

The application layer consisted of a reporting system, interactive query through unified digits, Jupyter for machine learning, and business databases in ApsaraDB RDS. Interactive query through unified digits is an in-house ad hoc query system at Shuhe that integrates work order approval, permission control, data sampling, and lineage display. The ApsaraDB RDS business databases receive the application-layer data that data warehouse processing produces in the early morning, and business systems query that data during the day.

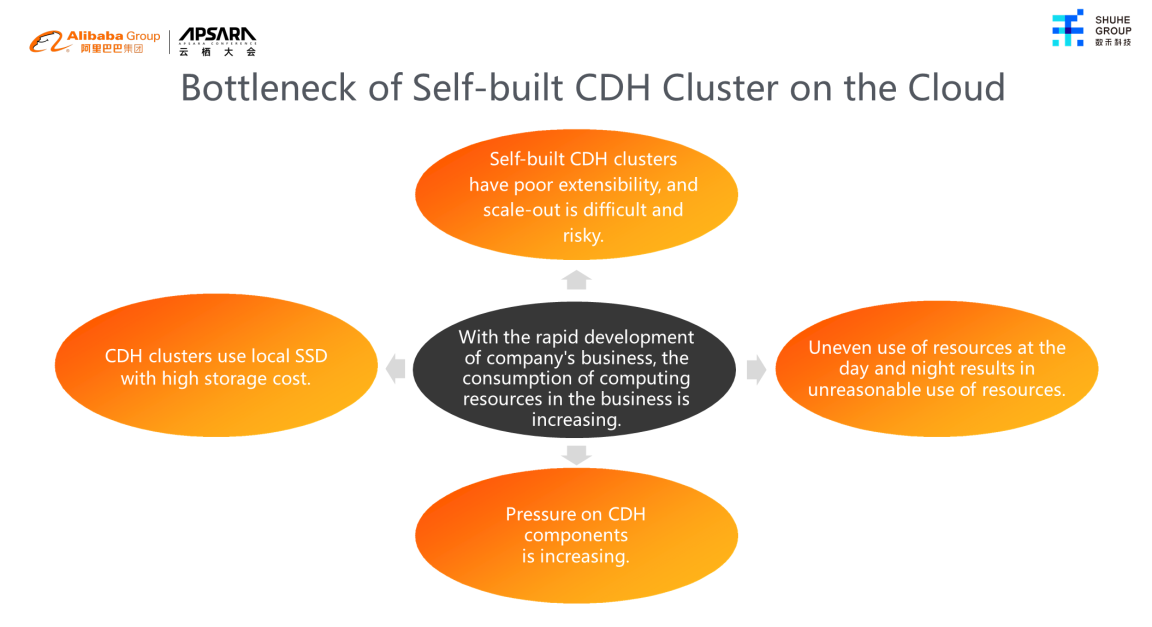

As the company's business grew rapidly, so did its consumption of computing resources, and a series of bottlenecks emerged in the self-built CDH clusters on the cloud. These bottlenecks are listed below:

Poor Scalability of Self-Built CDH Clusters: Scaling out is difficult and carries some risk. The process is also long: purchasing machines, adding nodes to the cluster, assigning roles, and distributing configurations, and sometimes restarting dependent components or the whole cluster at a suitable time. The whole process takes from half a day to one day.

Uneven Resource Usage between Day and Night Wastes Resources: After the business system completes its daily cutoff around midnight, the data warehouse starts extracting and processing data, which consumes a large amount of computing resources. The data warehouse must deliver some core data on time every morning. If an incident delays tasks at midnight, the data warehouse has to speed up processing to still deliver the data on time, which requires reserving additional resources. During the day, however, the cluster is mainly used by business personnel for ad hoc queries, analysis, and modeling, which need far fewer computing resources. Because the cluster cannot scale in or out elastically, its resources must stay at the peak level all day, which wastes resources.

CDH Clusters Use Local SSDs, so Storage Costs Are High: At first, the data volume was small, so we chose SSD disks to improve the I/O performance of CDH and stored data in HDFS with three replicas. As the data volume grew, the cost of SSD storage rose accordingly. In addition, computing and storage were not separated, so every increase in computing resources brought a corresponding increase in storage resources.

CDH Components Are under Increasing Pressure: As the number of cluster tasks grew, the ResourceManager and HiveServer2 components of CDH came under increasing pressure. Our only remedies were to upgrade the Master node configuration, tune JVM settings, restart components, and strengthen component monitoring and alerting, which still left a high risk of incidents.
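The cost of the day/night imbalance can be made concrete with a little arithmetic. The sketch below assumes a hypothetical load profile (800 cores for eight night hours, 200 cores for the rest of the day) and a hypothetical 25% reserve, and compares a fixed-size cluster with one that scales hourly. These numbers are illustrative and are not Shuhe's actual figures.

```python
# Hypothetical load profile, not Shuhe's real figures.
NIGHT_CORES = 800   # batch extraction and processing, 00:00-08:00
DAY_CORES = 200     # ad hoc queries, analysis, and modeling for the rest of the day
RESERVE = 1.25      # 25% headroom for catching up after a delayed night run

demand = [NIGHT_CORES if hour < 8 else DAY_CORES for hour in range(24)]


def fixed_core_hours(demand, reserve):
    """A cluster that cannot scale stays sized for peak demand plus reserve all day."""
    return max(demand) * reserve * len(demand)


def elastic_core_hours(demand, reserve):
    """A cluster that scales hourly keeps the reserve only on top of actual demand."""
    return sum(d * reserve for d in demand)


def average_utilization(demand, reserve):
    """Fraction of the fixed cluster's provisioned core-hours that does real work."""
    return sum(demand) / fixed_core_hours(demand, reserve)


if __name__ == "__main__":
    print(fixed_core_hours(demand, RESERVE))      # 24000.0
    print(elastic_core_hours(demand, RESERVE))    # 12000.0
    print(average_utilization(demand, RESERVE))   # 0.4
```

Under these made-up numbers, the fixed cluster provisions 24,000 core-hours a day against 12,000 for the elastic one, and its average utilization is only 40%.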

To extend the computing capability of the CDH clusters, we proposed a CDH & EMR hybrid cloud big data architecture.

In terms of computing, we built multiple independent EMR clusters for different business scenarios. We modified the Hive Metastore source code of EMR's newer Hive version to make it compatible with the metadata of CDH's older Hive version. As a result, CDH Hive and EMR Hive use a single set of CDH Hive metadata, and the auto scaling capability of EMR extends CDH computing resources. This relieves the pressure on CDH computing resources, and auto scaling keeps computing resources efficiently utilized.

In terms of storage, some cold data on CDH disks, such as logs and backup data, is stored in OSS as Hive external tables so that CDH and EMR can share it. Because OSS costs much less than SSD storage, the cost pressure on CDH disks is significantly reduced.
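The storage effect of moving cold data off three-replica, SSD-backed HDFS can be estimated the same way. This sketch classifies tables as cold by days since last access and computes the raw SSD capacity that HDFS consumes. The 90-day threshold, table names, and sizes are hypothetical; the article does not say how Shuhe chose which data to move.

```python
from dataclasses import dataclass

HDFS_REPLICATION = 3  # three-copy HDFS storage, as described in the article


@dataclass
class Table:
    name: str
    logical_tb: float        # size of one copy of the data, in TB
    days_since_access: int


def plan_tiering(tables, cold_after_days=90):
    """Split tables into hot (stay on HDFS/SSD) and cold (move to OSS as external tables).

    The 90-day default threshold is a hypothetical policy.
    """
    hot = [t for t in tables if t.days_since_access < cold_after_days]
    cold = [t for t in tables if t.days_since_access >= cold_after_days]
    return hot, cold


def ssd_raw_tb(tables):
    """Raw SSD capacity HDFS consumes: each logical TB is stored three times."""
    return sum(t.logical_tb for t in tables) * HDFS_REPLICATION


if __name__ == "__main__":
    tables = [
        Table("ods_orders", 10, 1),       # hypothetical hot table
        Table("app_logs", 30, 200),       # hypothetical cold logs
        Table("backup_2019", 20, 400),    # hypothetical cold backup
    ]
    hot, cold = plan_tiering(tables)
    print(ssd_raw_tb(tables), "->", ssd_raw_tb(hot))  # 180 -> 30
```

In this example, moving the two cold tables to OSS cuts raw SSD usage from 180 TB to 30 TB, because every logical terabyte removed from HDFS frees three terabytes of SSD.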

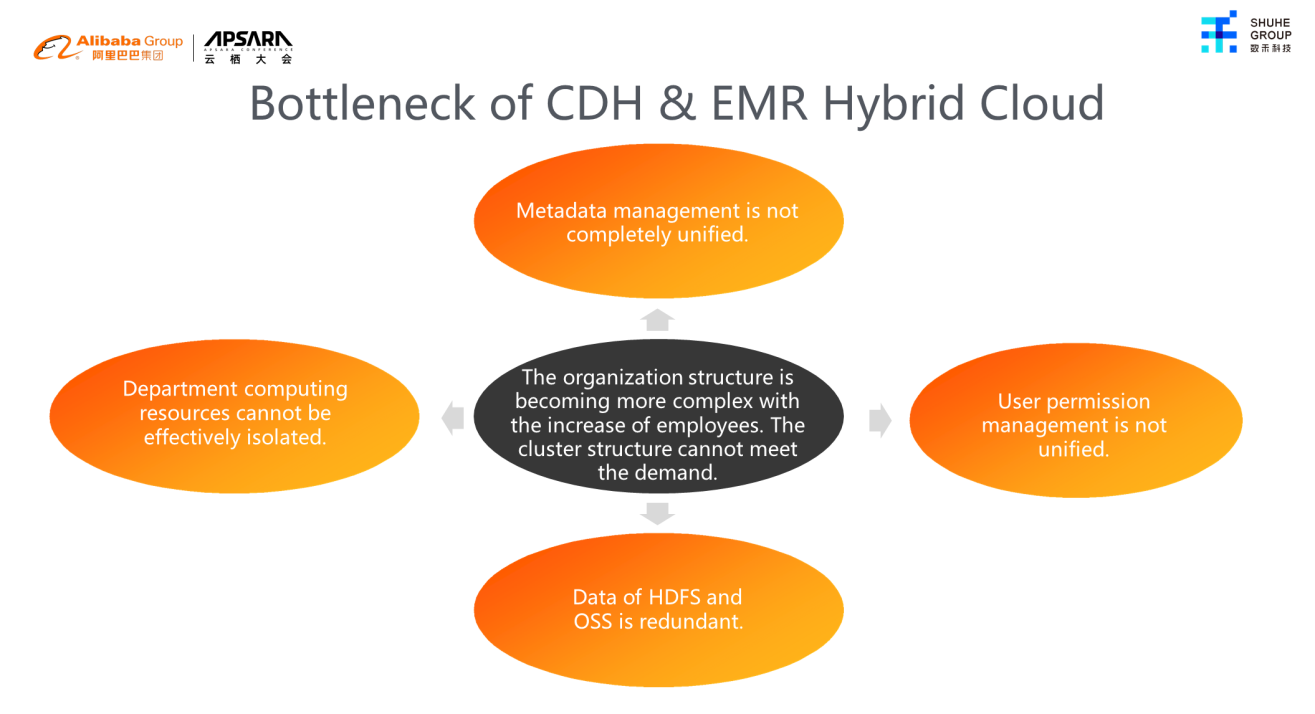

As the number of employees grew and the organizational structure became more complex, the CDH & EMR hybrid cloud architecture could no longer meet the following requirements:

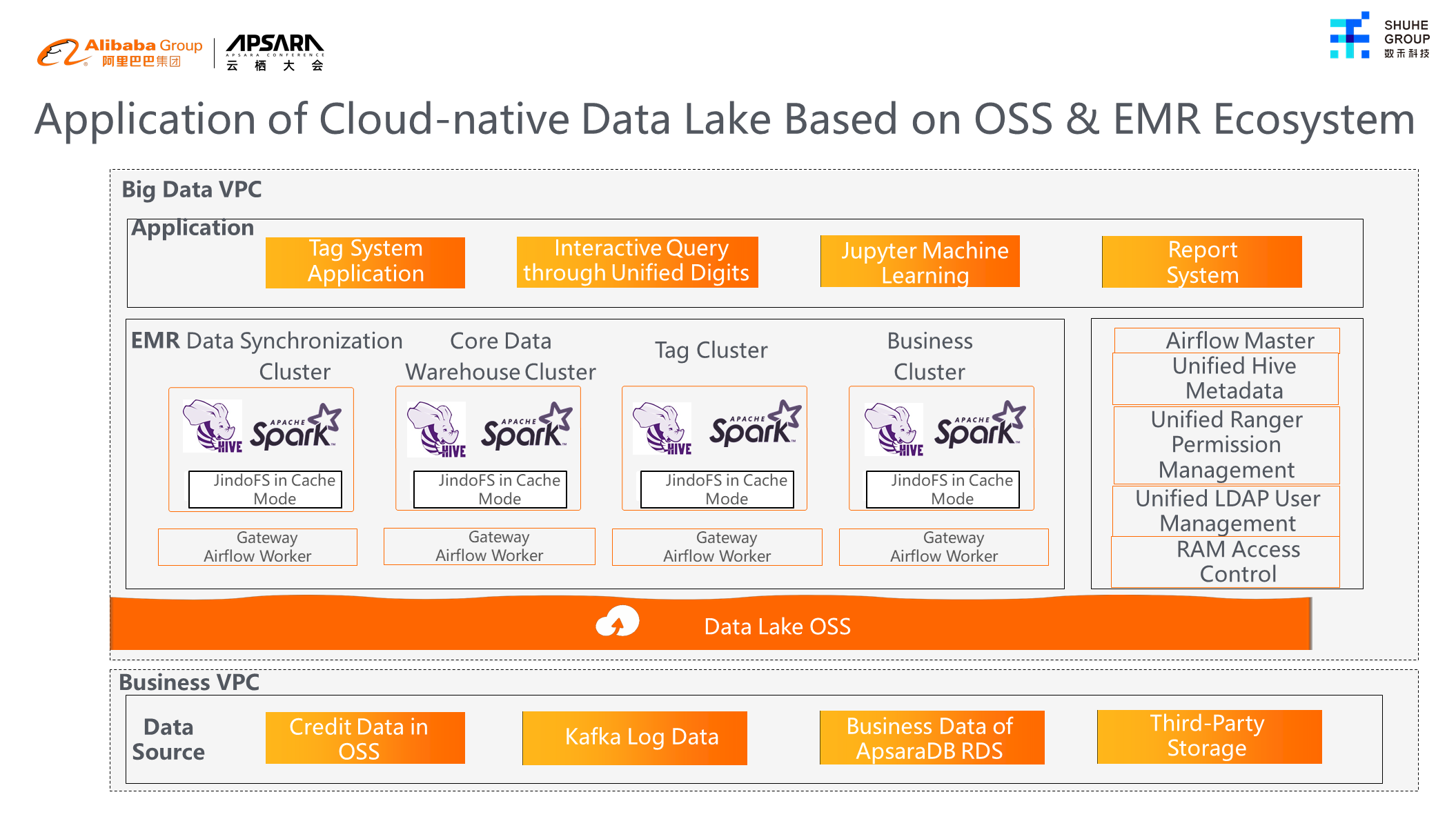

We proposed a cloud-native data lake architecture based on the OSS & EMR ecosystem to solve these problems.

To simplify VPC resource management, Shuhe divides its network into multiple VPCs by business unit, including business VPCs and big data VPCs.

Business data resides in the business VPCs. For example, credit data is stored in OSS, tracking-point log data in Kafka, and other business data in ApsaraDB RDS databases. Some third-party data stored in MongoDB and Oracle also resides in the business VPCs.

Based on their functions, the EMR clusters in the big data VPCs are divided into a data synchronization cluster, a core data warehouse cluster, a tag cluster, and business clusters for each department. The data synchronization cluster uses Sqoop to extract business data from ApsaraDB RDS and OSS in the business VPCs and store it in OSS. The core data warehouse cluster processes ODS-layer data into the data warehouse layer. The tag cluster processes data-warehouse-layer data to produce offline tags. Each department's business cluster processes data-warehouse-layer data to generate its own mart data. Together, these EMR clusters, each with its own duties, implement the entire layered data warehouse.
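The division of labor among the clusters forms a dependency graph: synchronization feeds ODS, ODS feeds the data warehouse layer, and the data warehouse layer feeds tags and departmental marts. The sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) to derive which stages can run in parallel and on which cluster. The stage and cluster names, including the two example departments, are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical stage names, each owned by one EMR cluster.
STAGE_OWNER = {
    "sync_rds_oss_to_ods": "data-sync-cluster",
    "build_dw": "core-dw-cluster",
    "build_offline_tags": "tag-cluster",
    "build_risk_mart": "risk-dept-cluster",
    "build_marketing_mart": "marketing-dept-cluster",
}

# Each stage maps to the set of stages it depends on.
DEPENDENCIES = {
    "sync_rds_oss_to_ods": set(),
    "build_dw": {"sync_rds_oss_to_ods"},
    "build_offline_tags": {"build_dw"},
    "build_risk_mart": {"build_dw"},
    "build_marketing_mart": {"build_dw"},
}


def schedule(dependencies, owners):
    """Group stages into batches; stages in one batch can run in parallel on their own clusters."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for deterministic output
        batches.append([(stage, owners[stage]) for stage in ready])
        sorter.done(*ready)
    return batches


if __name__ == "__main__":
    for i, batch in enumerate(schedule(DEPENDENCIES, STAGE_OWNER), start=1):
        print(i, batch)
```

The result has three batches: synchronization, then the core data warehouse, then the tag and mart clusters side by side, which is why separating clusters by function lets the later stages run concurrently.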

For EMR access rights, each EMR cluster is bound to a RAM role that matches its function and accesses only its own OSS bucket data. For user management, a separate EMR LDAP cluster stores all EMR Hive user accounts. This LDAP cluster is synchronized with the company's Active Directory on a T+1 basis to add accounts of new employees and delete accounts of former employees. For permission management, a unified Ranger system keeps the Ranger metadata of all EMR clusters in one metadata database, and all EMR Hive metadata is likewise kept in a unified metadata database.

For scheduling, we built a highly available Airflow Master architecture and deployed Airflow Workers on the Gateway node of each EMR cluster so that scheduled tasks are submitted directly to that cluster. JindoFS, in its cache mode, accelerates storage and computing on the data lake.
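The T+1 synchronization between the LDAP cluster and Active Directory reduces to a set difference. This minimal sketch assumes both directories have already been read into lists of account names (the directory access itself is omitted) and adds a hypothetical allow-list so that service accounts that exist only in LDAP are never deleted.

```python
def plan_ldap_sync(ad_accounts, ldap_accounts, service_accounts=frozenset()):
    """Compute the daily (T+1) changes needed to align EMR LDAP with Active Directory.

    Returns (to_add, to_delete):
      to_add    - accounts in AD but not yet in LDAP (new employees)
      to_delete - accounts in LDAP but no longer in AD (former employees),
                  excluding hypothetical service accounts that exist only in LDAP
    """
    ad = set(ad_accounts)
    ldap = set(ldap_accounts)
    to_add = sorted(ad - ldap)
    to_delete = sorted(ldap - ad - set(service_accounts))
    return to_add, to_delete


if __name__ == "__main__":
    # Hypothetical account snapshots.
    ad = ["alice", "bob", "carol"]
    ldap = ["alice", "dave", "airflow"]
    print(plan_ldap_sync(ad, ldap, service_accounts={"airflow"}))
    # (['bob', 'carol'], ['dave'])
```

Computing the plan separately from applying it makes it easy to review or cap the number of deletions before the nightly job changes the directory.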

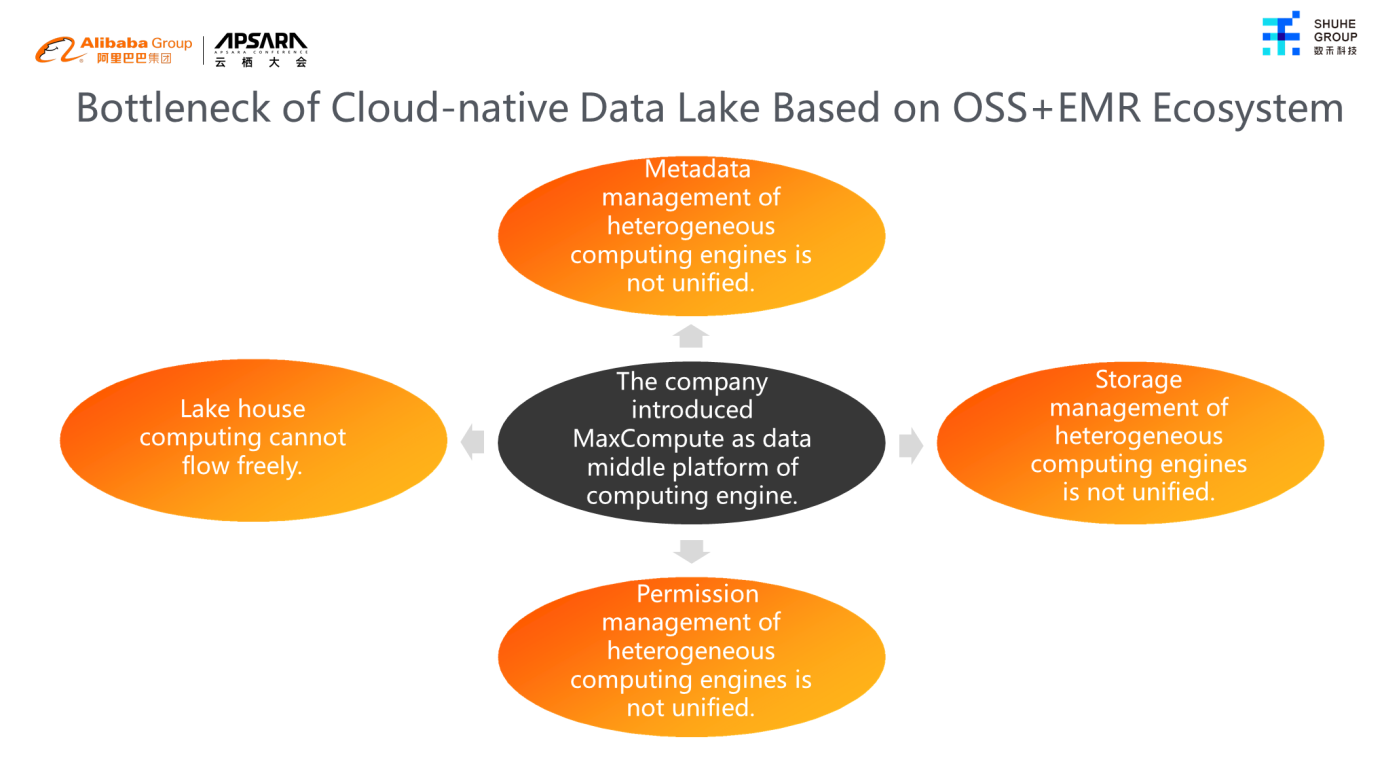

Shuhe introduced a data middle platform that uses MaxCompute as its computing engine, which brought challenges to the original cloud-native data lake architecture based on the OSS & EMR ecosystem.

After several discussions with Alibaba Cloud's expert team, the lake house architecture based on MaxCompute & DLF & EMR was proposed.

DLF is a fully managed service for building data lakes on the cloud. It provides unified permission management, metadata management, and automatic metadata extraction for cloud data lakes.

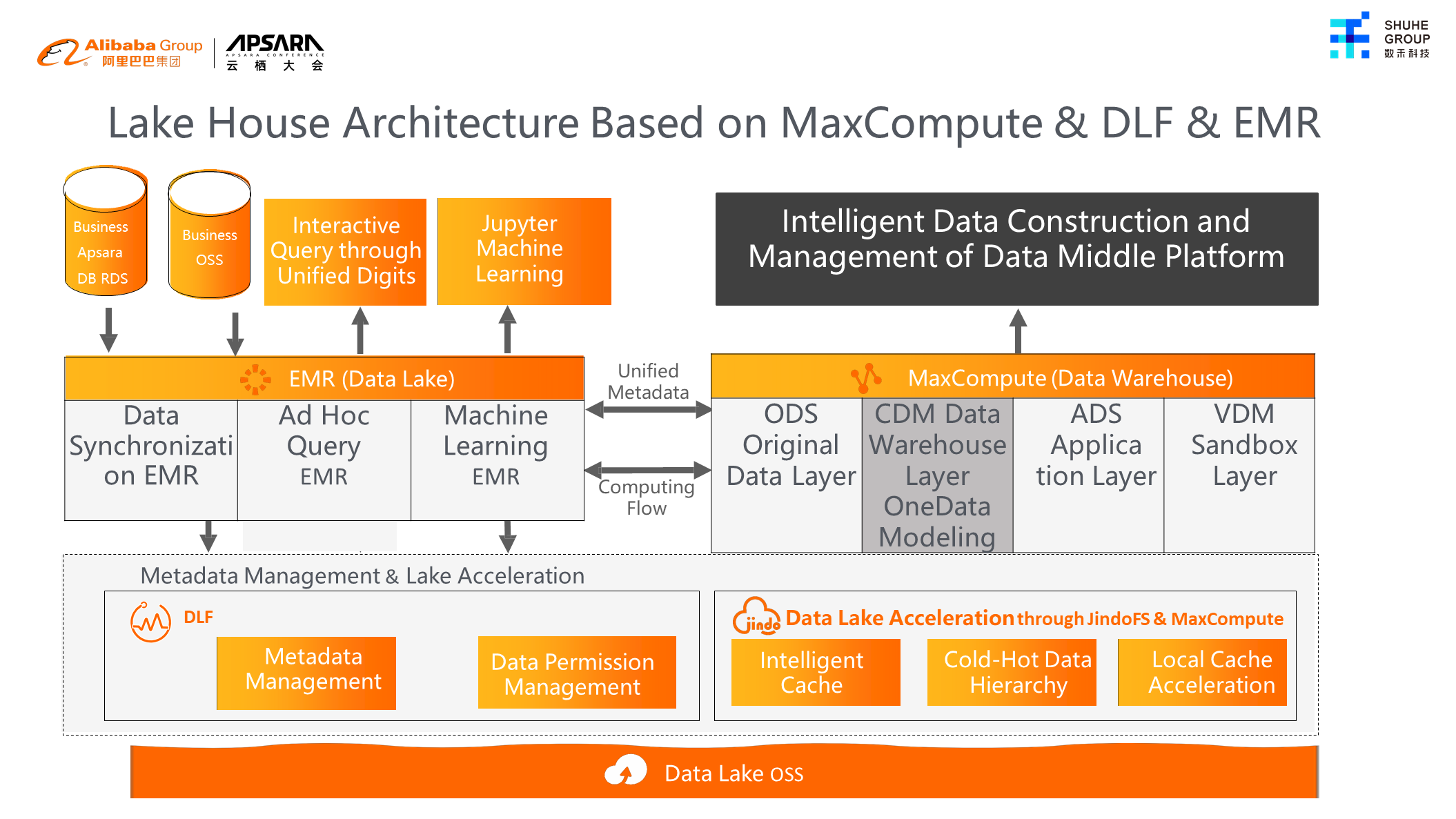

Architecturally, the bottom layer still uses OSS, and the middle layer handles metadata management and data lake acceleration. Metadata management is built on DLF and covers metadata, data lineage, and data permissions. Data lake acceleration relies on JindoFS and MaxCompute acceleration, intelligent caching, hot/cold data tiering, and local cache acceleration to speed up computing and storage. At the computing engine layer, EMR clusters on the data lake side and MaxCompute projects on the data warehouse side achieve unified metadata and free computing flow through this unified metadata management and data lake acceleration.
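Cache-based acceleration rests on a simple idea: keep recently read data close to the compute engine so repeated reads skip the remote object store. The sketch below is a generic least-recently-used (LRU) read-through cache built on `collections.OrderedDict`; it illustrates the caching principle only and is not how JindoFS or MaxCompute intelligent caching is implemented. The `fetch` function stands in for a hypothetical remote OSS read.

```python
from collections import OrderedDict


class ReadThroughCache:
    """Generic LRU read-through cache in front of a slow object store (illustration only)."""

    def __init__(self, fetch, capacity):
        self._fetch = fetch          # callable that reads one object from remote storage
        self._capacity = capacity    # maximum number of cached objects
        self._entries = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self._entries:
            self._entries.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._entries[key]
        self.misses += 1
        value = self._fetch(key)            # slow path: go to remote storage
        self._entries[key] = value
        if len(self._entries) > self._capacity:
            self._entries.popitem(last=False)  # evict the least recently used object
        return value


if __name__ == "__main__":
    cache = ReadThroughCache(lambda key: f"data:{key}", capacity=2)
    for key in ["a", "b", "a", "c", "b"]:
        cache.get(key)
    print(cache.hits, cache.misses)  # 1 4
```

Real data lake caches add block-level granularity, persistence on local disks, and consistency checks against the object store, none of which this sketch attempts.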

In terms of data flow, EMR extracts business data from ApsaraDB RDS and OSS into the data lake. The ODS project of MaxCompute, bound to the Alibaba Cloud data middle platform, then performs data cleaning, masking, unified naming, and precision cleaning on the ODS data in the data lake. CDM projects mainly provide OneData standard modeling. Note that, because of how the Alibaba Cloud data middle platform is designed, data in this layer is stored mainly as MaxCompute internal tables rather than in the data lake, which is why it is marked in gray. ADS projects let each business party build its own data marts from CDM-layer data, reading from and writing to the data lake directly. VDM projects let end users query and analyze ADS and CDM data.
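Because CDM data lives in MaxCompute internal tables while ODS and ADS data lives in the lake, the layers are not equally visible to every engine. The sketch below encodes the placement described above and assumes, as a simplification, that an EMR cluster reads only lake tables (OSS data with DLF metadata), whereas MaxCompute reads both. VDM is omitted because the article does not say where its data is stored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    name: str
    storage: str  # "lake": OSS data with DLF metadata; "internal": MaxCompute internal tables


# Placement described in the article; VDM is omitted because its storage is not stated.
LAYERS = [
    Layer("ODS", "lake"),
    Layer("CDM", "internal"),
    Layer("ADS", "lake"),
]


def readable_by(engine, layers):
    """Return layer names an engine can read, under the simplifying assumption that
    EMR reads only lake tables while MaxCompute reads both lake and internal tables."""
    if engine == "emr":
        return [layer.name for layer in layers if layer.storage == "lake"]
    if engine == "maxcompute":
        return [layer.name for layer in layers]
    raise ValueError(f"unknown engine: {engine}")


if __name__ == "__main__":
    print(readable_by("emr", LAYERS))         # ['ODS', 'ADS']
    print(readable_by("maxcompute", LAYERS))  # ['ODS', 'CDM', 'ADS']
```

Under this assumption, the gray CDM layer is the one piece of the pipeline that lake-side tools such as the ad hoc query EMR cluster cannot reach directly.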

As a result, interactive query through unified digits can query and analyze data in the data lake through an ad hoc query EMR cluster, and Jupyter can use a machine learning EMR cluster to train models and run inference on data in the data lake.

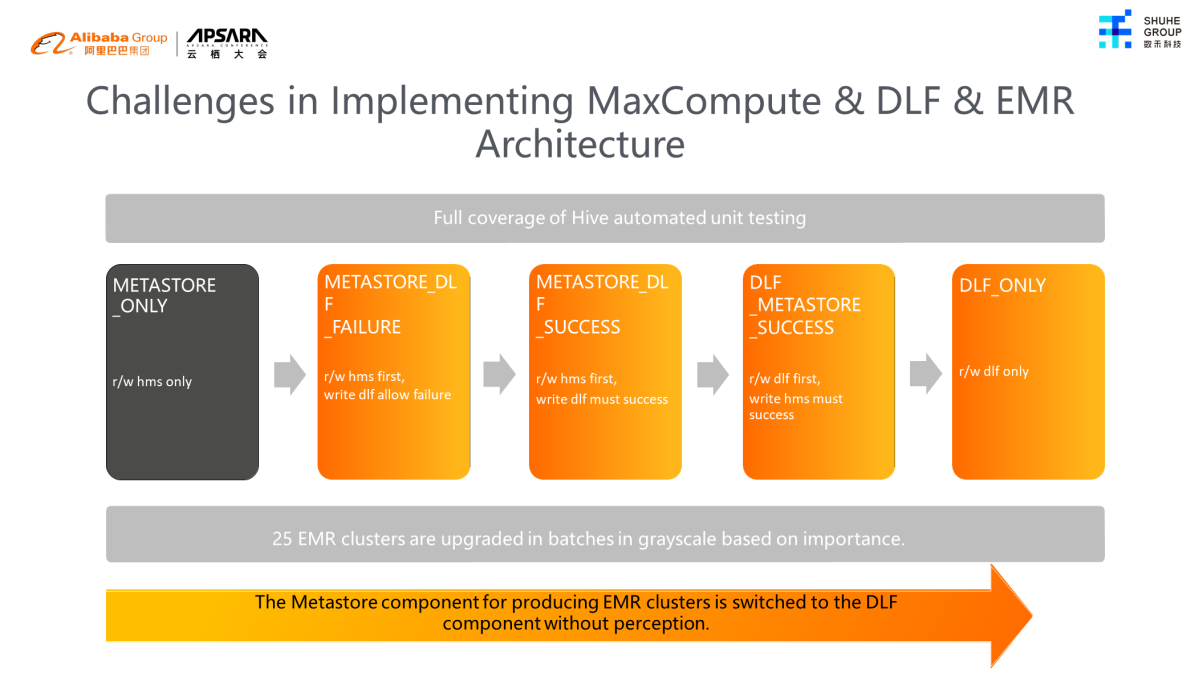

Shuhe has 25 EMR clusters in operation, and any incident would delay EMR output and cause financial losses. The DLF team of Alibaba Cloud designed a complete solution to help Shuhe switch from Hive Metastore to DLF. The solution is divided into five stages and contains four switching processes.

In each stage, the 25 EMR clusters are grouped into unimportant clusters, non-critical business clusters, critical business clusters, and core clusters, and the groups are switched over in that order as a gray release. After each EMR cluster is switched, an automated full-scenario Hive unit test script verifies that the switch is correct.
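The group-by-group switchover with per-cluster verification follows a familiar canary-rollout pattern. This sketch assumes hypothetical `switch`, `verify`, and `rollback` callables (`verify` standing in for the automated Hive test script) and stops at the first failed cluster so that more critical groups are never touched. The rollback step and the cluster IDs are assumptions; the article does not describe how failures were handled.

```python
# Switch order from the article: least critical groups first, core clusters last.
GROUP_ORDER = ["unimportant", "non-critical business", "critical business", "core"]


def gray_switch(clusters, switch, verify, rollback):
    """Switch clusters group by group, verifying each one before moving on.

    clusters: mapping of group name -> list of (hypothetical) cluster IDs.
    Returns (switched, failed): clusters switched successfully, and the ID of the
    cluster that failed verification (None if every cluster passed).
    """
    switched = []
    for group in GROUP_ORDER:
        for cluster in clusters.get(group, []):
            switch(cluster)
            if not verify(cluster):
                rollback(cluster)          # hypothetical: point the cluster back at Hive Metastore
                return switched, cluster   # halt so later, more critical groups stay untouched
            switched.append(cluster)
    return switched, None


if __name__ == "__main__":
    clusters = {
        "core": ["emr-core-1"],
        "unimportant": ["emr-test-1", "emr-test-2"],
        "critical business": ["emr-risk-1"],
    }
    print(gray_switch(clusters, print, lambda c: True, print))
```

Ordering by criticality means any defect in the new metadata service surfaces first on clusters whose delays cost the least.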

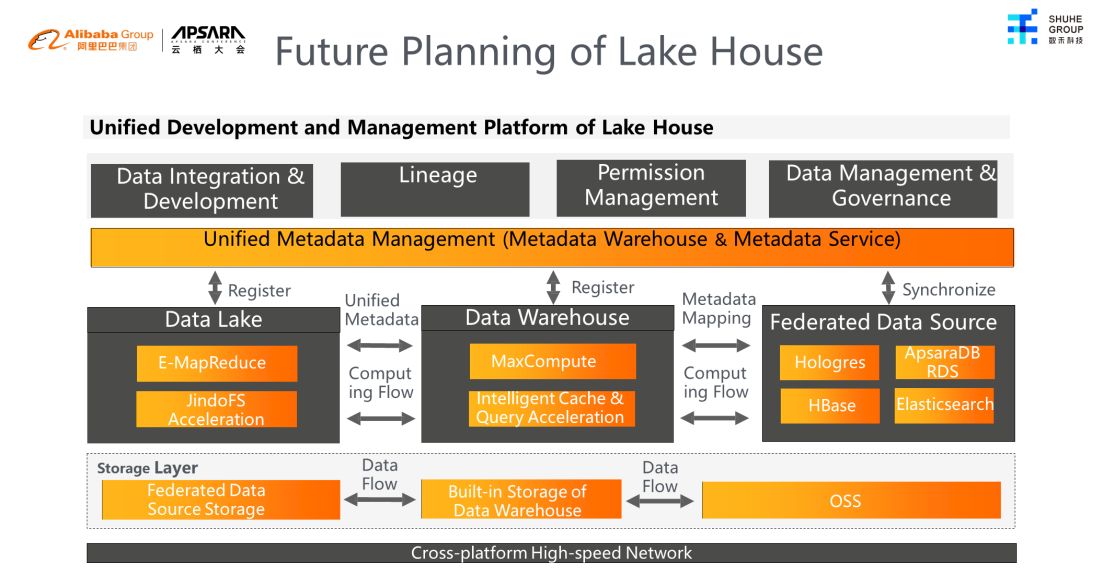

In the future, we plan to upgrade the metadata management function of DLF into a unified metadata management platform for the lake house, consisting of a unified metadata warehouse and unified metadata services.

We can register the metadata of the EMR data lake with the metadata management platform. The data lake uses EMR as its execution engine and JindoFS as the acceleration module for computing and storage, and data is stored as OSS objects. We can also register the metadata of the MaxCompute data warehouse, including both internal and external tables. The data warehouse uses MaxCompute as its computing engine and intelligent caching to accelerate computing and storage, and data can be stored either in OSS or within MaxCompute. Other federated data sources, including Hologres, ApsaraDB RDS, Elasticsearch, and HBase, can also be synchronized to the metadata management platform. This unifies metadata across multiple data sources and lets computing and data flow freely.
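A unified metadata platform is, at its core, a registry that keys tables from many engines under one namespace. The sketch below is a hypothetical in-memory version: the `source.database.table` key format, the field names, and the example sources are assumptions for illustration, not DLF's data model.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class TableMeta:
    source: str    # hypothetical engine label, e.g. "emr", "maxcompute", "hologres"
    database: str
    table: str
    storage: str   # hypothetical: "oss" or "internal"


@dataclass
class UnifiedCatalog:
    """Hypothetical in-memory registry keyed by source.database.table."""
    entries: dict = field(default_factory=dict)

    def register(self, meta):
        key = f"{meta.source}.{meta.database}.{meta.table}"
        self.entries[key] = meta  # re-registering a table overwrites its old entry
        return key

    def find(self, table):
        """Find every registered table with this name, across all sources."""
        return sorted(key for key, meta in self.entries.items() if meta.table == table)


if __name__ == "__main__":
    catalog = UnifiedCatalog()
    catalog.register(TableMeta("emr", "dw", "orders", "oss"))
    catalog.register(TableMeta("maxcompute", "ads", "orders", "oss"))
    catalog.register(TableMeta("hologres", "serving", "users", "internal"))
    print(catalog.find("orders"))  # ['emr.dw.orders', 'maxcompute.ads.orders']
```

A single lookup across sources is what lets lineage, permission, and governance modules work against one catalog instead of querying each engine separately.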

At the application layer, we will use a lake house development and management platform to develop and manage all cloud products connected to the unified metadata management platform. It contains multiple modules. The lake house data integration and development module integrates multiple data sources and runs federated queries across them without moving data. The lake house lineage module manages lineage across all data sources. The lake house permission management module manages users' access permissions on all data sources. The lake house data management and governance module provides naming conventions, data validity verification, and other functions for table fields across data sources.
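Lineage management across sources amounts to maintaining a graph of table dependencies and walking it. This sketch stores hypothetical upstream edges spanning RDS, MaxCompute, and EMR tables and performs a breadth-first search to find every transitive upstream table, the kind of question a lineage module answers when tracing where a mart's data comes from. All table names are illustrative.

```python
from collections import deque

# Hypothetical lineage edges across sources: downstream table -> its direct upstream tables.
UPSTREAM = {
    "maxcompute.ads.risk_mart": ["maxcompute.cdm.dwd_loan", "emr.tag.user_tags"],
    "maxcompute.cdm.dwd_loan": ["maxcompute.ods.loan"],
    "emr.tag.user_tags": ["maxcompute.cdm.dwd_loan"],
    "maxcompute.ods.loan": ["rds.credit.loan"],
}


def all_upstream(table, upstream=UPSTREAM):
    """Breadth-first walk of the lineage graph, returning every transitive upstream table."""
    seen = set()
    queue = deque([table])
    while queue:
        current = queue.popleft()
        for parent in upstream.get(current, []):
            if parent not in seen:   # each table is visited once, even on shared paths
                seen.add(parent)
                queue.append(parent)
    return seen


if __name__ == "__main__":
    print(sorted(all_upstream("maxcompute.ads.risk_mart")))
```

Reversing the edges and running the same walk gives downstream impact analysis, for example finding every mart affected when an RDS source table changes.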
