Apache HDFS was the preferred solution for building data warehouses with massive storage capacity in the early days of the big data era. With the development of cloud computing, big data, AI, and other technologies, cloud vendors have been steadily improving their object storage services to better support Apache Hadoop/Spark and various AI ecosystems. Object storage offers massive capacity, high security, low cost, high reliability, and easy integration, so IoT devices and websites store raw files in many different formats in object storage. The industry also broadly agrees that big data and AI capabilities should be enhanced and extended through object storage. Following this trend, the Apache Hadoop community has launched its own native object store, Ozone. Moving from HDFS to object storage, and from data warehouses to data lakes, keeps data in unified storage for more efficient analysis and processing.

For cloud customers, early technology choices matter a lot when forming a data lake. As data volume grows, so do the costs of later architecture upgrades and of migrating data to the cloud. Enterprises that have built large-scale storage systems on the cloud with HDFS have run into many problems. As the native storage system of Hadoop, HDFS has set the storage standard in the big data ecosystem over 10 years of development. Despite continuous optimization, the NameNode single point of failure (SPOF) and the JVM memory bottleneck limit how far an HDFS cluster can expand. As data volume grows from 1 PB to more than 100 PB, HDFS requires continuous tuning and cluster splitting. Even though HDFS can scale to EB-level data, solving problems such as slow startup, signaling storms, node scaling, node migration, and data balancing incurs high O&M costs.
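To make the JVM bottleneck concrete, the sketch below applies a commonly cited rule of thumb: every file, directory, and block in HDFS is an object in the NameNode heap that takes roughly 150 bytes. The 150-byte figure is approximate and the workload is hypothetical; actual heap usage depends on the Hadoop version and configuration.

```python
# Commonly cited rule of thumb (approximate): every file, directory, and block
# is an object in NameNode heap memory taking about 150 bytes.
BYTES_PER_OBJECT = 150


def namenode_heap_bytes(num_files: int, blocks_per_file: int = 1,
                        num_dirs: int = 0) -> int:
    """Estimate the NameNode heap needed to keep the namespace in memory."""
    objects = num_files                      # one inode object per file
    objects += num_files * blocks_per_file   # one object per block
    objects += num_dirs                      # one inode object per directory
    return objects * BYTES_PER_OBJECT


# Hypothetical workload: 1 billion single-block files, directories ignored.
heap = namenode_heap_bytes(1_000_000_000)
print(f"~{heap / 1e9:.0f} GB of heap")  # ~300 GB of heap
```

Because the whole namespace must fit in a single JVM heap, the number of files rather than raw capacity becomes the limit, which is one reason large HDFS deployments end up splitting clusters.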

Building a data lake on Alibaba Cloud OSS is the best choice among cloud-native big data storage solutions. As the object storage service of Alibaba Cloud, OSS offers high performance, unlimited capacity, high security, high availability, and low cost. JindoFS adapts OSS to the big data ecosystem and adds cache acceleration. It also provides dedicated file metadata services to meet the diverse analysis and computing requirements of cloud customers. As a result, the combination of JindoFS and OSS on Alibaba Cloud has become an ideal choice for customers migrating their data lake architecture to the cloud.

Jindo is a distributed computing and storage engine for the cloud, customized by Alibaba Cloud based on Apache Spark and Hadoop. Jindo was originally an internal code name used by the Alibaba Cloud open-source big data team; it sounds like "somersault cloud" in Chinese. Jindo adds many optimizations and extensions on top of the open-source projects, and it is deeply integrated with many basic Alibaba Cloud services.

JindoFS is a big data cache acceleration service developed by Alibaba Cloud for on-cloud storage, designed to be elastic, efficient, stable, and cost-effective. JindoFS is fully compatible with the Hadoop file system, which makes data lake acceleration more flexible and efficient. JindoFS supports all computing services and engines in EMR, including Spark, Flink, Hive, MapReduce, Presto, and Impala. It offers two usage modes: Block and Cache. The following sections describe how to configure and use JindoFS in EMR and which scenarios suit each mode:

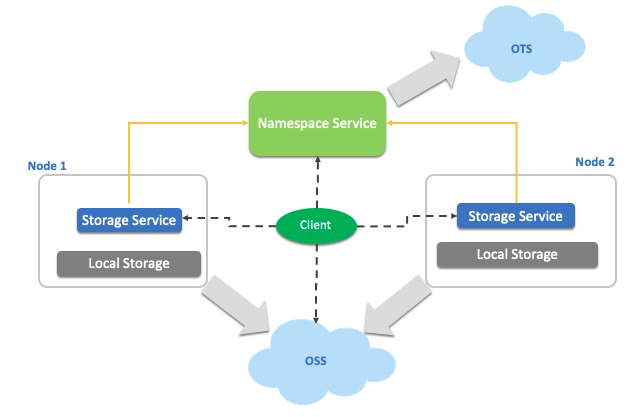

JindoFS consists of two service components: the namespace service and the storage service.

As shown in the following JindoFS architecture diagram, the namespace service is deployed on independent nodes. For high availability in production environments, we recommend deploying it as a three-node Raft group. The storage service is deployed on the computing nodes of the cluster, where it manages idle storage resources such as local disks, SSDs, and memory, and provides the distributed cache capability of JindoFS.
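Why three Raft nodes? Raft commits a change only after a strict majority of the group acknowledges it, so a group keeps working as long as a majority survives. The sketch below works through that arithmetic; it is generic Raft reasoning, not JindoFS code.

```python
def raft_quorum(nodes: int) -> int:
    """Smallest strict majority of a Raft group."""
    return nodes // 2 + 1


def raft_fault_tolerance(nodes: int) -> int:
    """Node failures a Raft group survives while a majority can still commit."""
    return nodes - raft_quorum(nodes)


for n in (1, 3, 4, 5):
    print(f"{n} nodes: quorum {raft_quorum(n)}, tolerates {raft_fault_tolerance(n)} failure(s)")
```

A four-node group tolerates no more failures than a three-node group, which is why Raft groups are usually given an odd number of members; three is the smallest size that survives the loss of one node.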

The JindoFS namespace service stores metadata internally in a key-value (K-V) structure. Compared with traditional in-memory metadata storage, this approach offers efficient operations, easier management, and easier recovery.
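The sketch below shows one common way to keep a file-system namespace in a key-value store: each directory entry is a key of (parent inode ID, name), and each inode's attributes live under their own key. This layout is an assumption for illustration, since the text does not describe the actual JindoFS key schema, and plain Python dicts stand in for a persistent K-V engine.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Inode:
    inode_id: int
    is_dir: bool
    size: int = 0


class KVNamespace:
    """Toy namespace that flattens a directory tree into key-value pairs.

    Hypothetical layout, for illustration only:
      dentries: (parent_inode_id, name) -> child inode id
      inodes:   inode_id -> Inode attributes
    Plain dicts stand in for a persistent K-V store.
    """

    ROOT_ID = 1

    def __init__(self) -> None:
        self._ids = count(self.ROOT_ID + 1)
        self.dentries: dict[tuple[int, str], int] = {}
        self.inodes: dict[int, Inode] = {self.ROOT_ID: Inode(self.ROOT_ID, True)}

    def _resolve(self, path: str) -> int:
        """Walk the path with one point lookup per component."""
        inode_id = self.ROOT_ID
        for name in filter(None, path.split("/")):
            try:
                inode_id = self.dentries[(inode_id, name)]
            except KeyError:
                raise FileNotFoundError(path) from None
        return inode_id

    def _add(self, path: str, is_dir: bool, size: int = 0) -> int:
        parent, _, name = path.rstrip("/").rpartition("/")
        if not name:
            raise ValueError(f"invalid path: {path!r}")
        parent_id = self._resolve(parent)
        if not self.inodes[parent_id].is_dir:
            raise NotADirectoryError(parent)
        if (parent_id, name) in self.dentries:
            raise FileExistsError(path)
        new_id = next(self._ids)
        # Creating an entry is just two key writes; no in-memory tree to rebuild.
        self.inodes[new_id] = Inode(new_id, is_dir, size)
        self.dentries[(parent_id, name)] = new_id
        return new_id

    def mkdir(self, path: str) -> int:
        return self._add(path, is_dir=True)

    def create(self, path: str, size: int) -> int:
        return self._add(path, is_dir=False, size=size)

    def stat(self, path: str) -> Inode:
        return self.inodes[self._resolve(path)]

    def listdir(self, path: str) -> list[str]:
        dir_id = self._resolve(path)
        # An ordered K-V store would answer this with a prefix scan on dir_id.
        return sorted(name for (pid, name) in self.dentries if pid == dir_id)


ns = KVNamespace()
ns.mkdir("/warehouse")
ns.create("/warehouse/part-0000", size=128)
print(ns.listdir("/warehouse"))              # ['part-0000']
print(ns.stat("/warehouse/part-0000").size)  # 128
```

Because each entry is an independent key, a store laid out this way can persist and recover entries individually instead of reloading one large in-memory image, which illustrates the kind of management and recovery benefit described above.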

The JindoFS storage service mainly provides high-performance local cache acceleration, which greatly simplifies O&M.

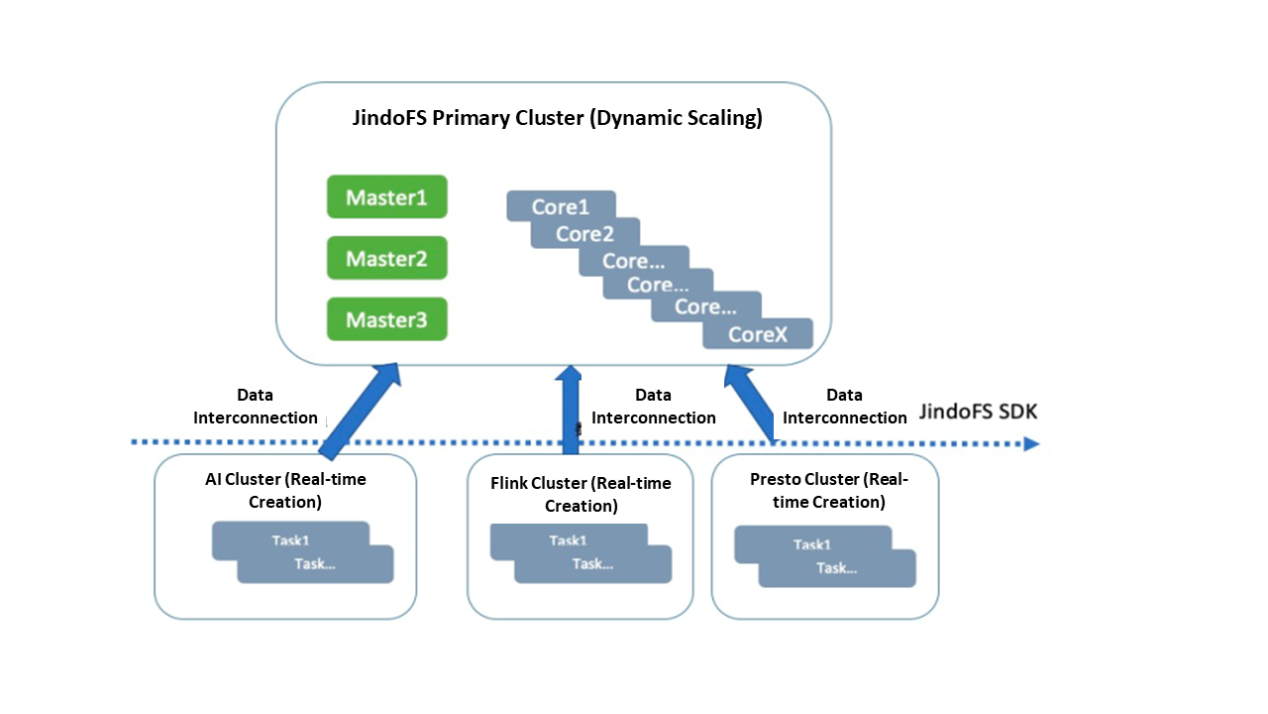

JindoFS metadata is stored in the namespace service on the Master nodes (deployed for high availability), delivering performance and user experience on par with HDFS. The storage service on the Core nodes stores one copy of the data blocks in OSS, so local data blocks can scale elastically with node resources. Multiple clusters can also be interconnected.
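The arrangement above (metadata in the namespace service, one durable copy of each block in OSS, local copies that come and go with node resources) can be modeled in a few lines. This is a hypothetical sketch: dicts stand in for the namespace service, OSS, and local disks, and the tiny block size and block ID format are illustrative choices, not JindoFS internals.

```python
from itertools import count


class BlockModeSketch:
    """Hypothetical model: metadata in a namespace, one durable copy of every
    block in OSS, and optional local copies on Core nodes."""

    def __init__(self, block_size: int = 4) -> None:
        self.block_size = block_size               # tiny, for illustration only
        self.namespace: dict[str, list[str]] = {}  # file path -> ordered block IDs
        self.oss: dict[str, bytes] = {}            # stand-in for OSS objects
        self.local: dict[str, bytes] = {}          # stand-in for node-local disks
        self._next_id = count()

    def write(self, path: str, data: bytes) -> None:
        block_ids = []
        for offset in range(0, len(data), self.block_size):
            block_id = f"blk_{next(self._next_id)}"
            chunk = data[offset:offset + self.block_size]
            self.oss[block_id] = chunk    # the durable copy always goes to OSS
            self.local[block_id] = chunk  # a local copy serves fast reads
            block_ids.append(block_id)
        self.namespace[path] = block_ids

    def read(self, path: str) -> bytes:
        parts = []
        for block_id in self.namespace[path]:
            chunk = self.local.get(block_id)
            if chunk is None:             # local copy gone, e.g. after scale-in
                chunk = self.oss[block_id]
                self.local[block_id] = chunk
            parts.append(chunk)
        return b"".join(parts)

    def drop_local_copies(self) -> None:
        """Simulate removing nodes: local blocks vanish, OSS copies remain."""
        self.local.clear()


fs = BlockModeSketch()
fs.write("/logs/a", b"hello world")
print(len(fs.namespace["/logs/a"]))  # 3
fs.drop_local_copies()
print(fs.read("/logs/a"))            # b'hello world'
```

Since OSS always holds a copy, losing local blocks costs only a slower first read, not data loss; that is what lets the local tier shrink or grow with the cluster.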

To support multiple data lake usage scenarios, a single JindoFS deployment provides two ways of using OSS: Block and Cache.

With Cache, all files are stored in OSS as objects (for example, oss://bucket1/file1), and the cache can be used elastically at the cluster level.

Compared with other solutions, a data lake solution based on JindoFS and OSS has advantages in both performance and cost.
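In Cache mode, OSS remains the source of truth and each file is addressed by its OSS path, while local disks on the cluster hold recently read data. A read-through LRU cache is one simple way to model that behavior. The class below is a hypothetical sketch: a dict stands in for OSS, capacity is counted in bytes, and the LRU eviction policy is an assumption, since the text does not say which policy JindoFS uses.

```python
from collections import OrderedDict


class CacheModeSketch:
    """Hypothetical model: OSS holds every file under its OSS path, and a
    bounded local cache keeps the most recently read objects."""

    def __init__(self, oss: dict[str, bytes], capacity_bytes: int) -> None:
        self.oss = oss                          # stand-in for OSS objects
        self.capacity = capacity_bytes
        self.cache: OrderedDict[str, bytes] = OrderedDict()
        self.used = 0
        self.hits = 0
        self.misses = 0

    def read(self, path: str) -> bytes:
        if path in self.cache:
            self.cache.move_to_end(path)        # mark as most recently used
            self.hits += 1
            return self.cache[path]
        self.misses += 1
        data = self.oss[path]                   # read through to OSS
        if len(data) <= self.capacity:
            self.cache[path] = data
            self.used += len(data)
            while self.used > self.capacity:    # evict least recently used first
                _, evicted = self.cache.popitem(last=False)
                self.used -= len(evicted)
        return data

    def write(self, path: str, data: bytes) -> None:
        self.oss[path] = data                   # writes land in OSS directly
        old = self.cache.pop(path, None)        # drop any stale cached copy
        if old is not None:
            self.used -= len(old)


oss = {"oss://bucket1/file1": b"x" * 60, "oss://bucket1/file2": b"y" * 60}
fs = CacheModeSketch(oss, capacity_bytes=100)
fs.read("oss://bucket1/file1")  # miss: fetched from OSS, then cached
fs.read("oss://bucket1/file1")  # hit: served from the local cache
fs.read("oss://bucket1/file2")  # miss: caching it evicts file1
print(fs.hits, fs.misses, list(fs.cache))  # 1 2 ['oss://bucket1/file2']
```

Because every object stays in OSS, the cache can be resized or dropped without affecting data durability, which is what makes cluster-level elasticity possible in this mode.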

The following part describes the storage-cost advantages. Storage cost is the ongoing cost of keeping data stored. OSS charges in pay-as-you-go mode, which costs less than an HDFS cluster built on local disks. For pricing details, refer to the following pages:

ECS: https://www.alibabacloud.com/product/ecs

OSS pricing: https://www.alibabacloud.com/product/oss/pricing
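One structural reason for the gap is replication: local-disk HDFS stores three replicas by default and needs free disk headroom, while pay-as-you-go object storage bills for the data actually stored. The sketch below models only that difference. The per-GB price is a placeholder (not an Alibaba Cloud list price), the same price is used for both sides on purpose, and the 80% maximum disk utilization is a hypothetical assumption.

```python
def hdfs_monthly_cost(data_gb: float, disk_price_per_gb: float,
                      replication: int = 3, max_utilization: float = 0.8) -> float:
    """Local-disk HDFS: provision raw disk for every replica, plus free headroom."""
    raw_gb = data_gb * replication / max_utilization
    return raw_gb * disk_price_per_gb


def oss_monthly_cost(data_gb: float, oss_price_per_gb: float) -> float:
    """Pay-as-you-go object storage: billed on the data actually stored."""
    return data_gb * oss_price_per_gb


# Placeholder price per GB-month, NOT a real Alibaba Cloud price.
PRICE_PER_GB = 0.02
data_gb = 100_000  # hypothetical data set size

hdfs = hdfs_monthly_cost(data_gb, PRICE_PER_GB)
oss = oss_monthly_cost(data_gb, PRICE_PER_GB)
print(f"HDFS on local disks: {hdfs:,.0f}  OSS: {oss:,.0f}")  # HDFS on local disks: 7,500  OSS: 2,000
```

With identical per-GB prices, replication and headroom alone make the local-disk cluster 3.75 times as expensive in this model. A real comparison should use the figures on the pricing pages and also account for the ECS instances that host the disks.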

Cache acceleration for OSS data requires additional disk space on the computing nodes, which increases cost. The added cost depends mainly on the volume of hot data, that is, the data to be cached, and has little to do with the total amount of data stored. In return, caching can improve computing efficiency and save computing resources. Evaluate the overall effect based on the actual scenario.
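The claim that cache cost tracks hot data rather than total data can be shown with a small cost model. The sketch assumes the cache is sized to the hot data set plus a hypothetical 20% headroom, and all prices and sizes are placeholders. It does not model the compute savings a cache may bring, which the text says must be evaluated per scenario.

```python
def cached_lake_monthly_cost(total_gb: float, hot_gb: float,
                             oss_price_per_gb: float, cache_price_per_gb: float,
                             cache_headroom: float = 1.2) -> tuple[float, float]:
    """Return (OSS storage cost, local cache disk cost) for one month."""
    storage = total_gb * oss_price_per_gb                 # grows with all data
    cache = hot_gb * cache_headroom * cache_price_per_gb  # grows with hot data only
    return storage, cache


# Placeholder prices and sizes, for illustration only.
for total_gb in (100_000, 1_000_000):
    storage, cache = cached_lake_monthly_cost(total_gb, hot_gb=5_000,
                                              oss_price_per_gb=0.02,
                                              cache_price_per_gb=0.05)
    print(f"total {total_gb:,} GB -> storage {storage:,.0f}, cache {cache:,.0f}")
```

Growing the lake tenfold multiplies the storage term by ten while the cache term stays at the same value, as long as the hot data set does not change.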

A data lake is open and must connect to various computing engines. JindoFS currently supports Spark, Flink, Hive, MapReduce, Presto, and Impala. To make better use of a data lake, JindoFS also provides JindoTable to optimize structured data and accelerate queries, JindoDistCp to migrate data offline from HDFS to OSS, and JindoFuse to accelerate machine learning training in a data lake.
