By Wu Junyin, senior technical expert on intelligent Elastic Compute Service (ECS) at Alibaba Cloud

Cloud computing has been developing for more than ten years, and the priority has shifted from simple "cloud migration" to a more sophisticated "cloud optimization" mindset. The former is essentially a decision-making problem: once an enterprise decides to migrate to the cloud, the question is settled. The latter, however, is an ongoing topic. There is no silver bullet for resource optimization, and working toward a fully optimized system is a never-ending process.

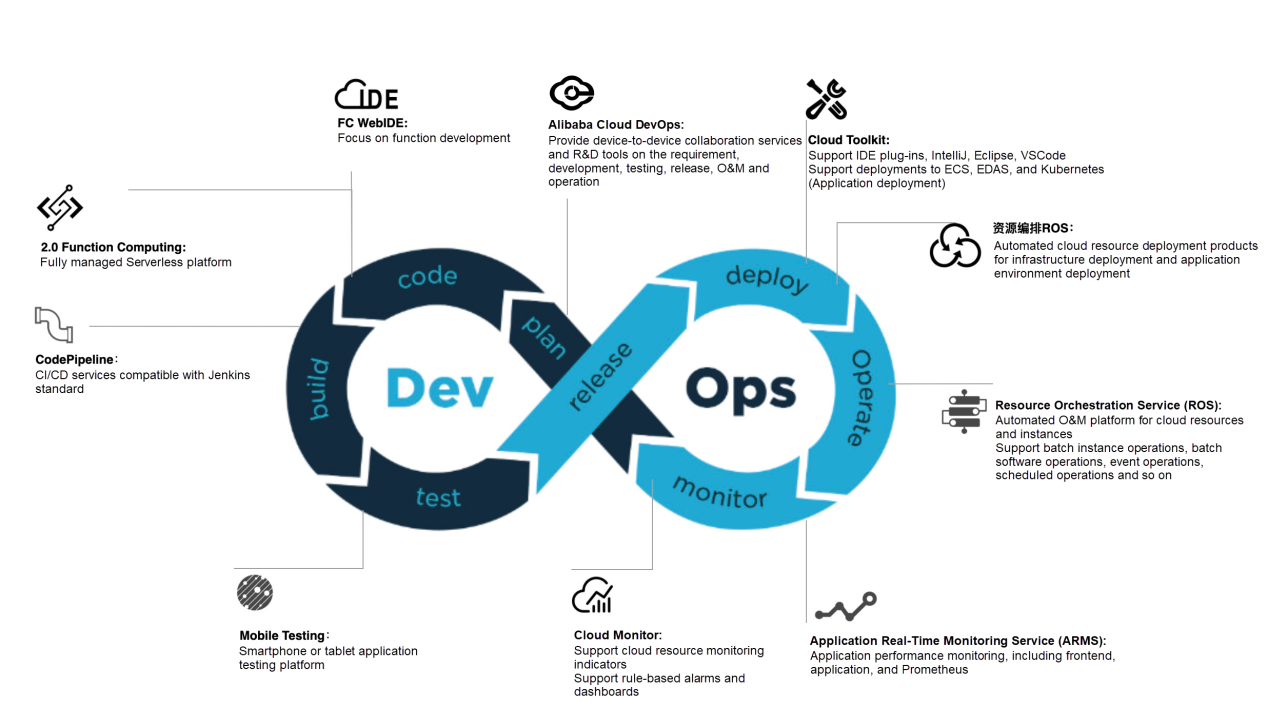

In general, enterprises begin to think about "how to use the cloud well" at the start of the planning stage, and the question stays with them throughout their use of the cloud. From the perspective of work type, everything except the development (Dev) of business code can be classified as operations (Ops). This includes resource creation, environment deployment, application deployment, resource management, resource monitoring, alerting, and troubleshooting.

I have been engaged in cloud computing for more than five years and have been involved in the development of several cloud products, so I am both a consumer and a producer of cloud computing products. In this blog, I would like to share the thoughts and conclusions on the cloud DevOps capability system that I have accumulated over the years, in the hope that they will be helpful to O&M personnel who are preparing to migrate to the cloud or have already done so.

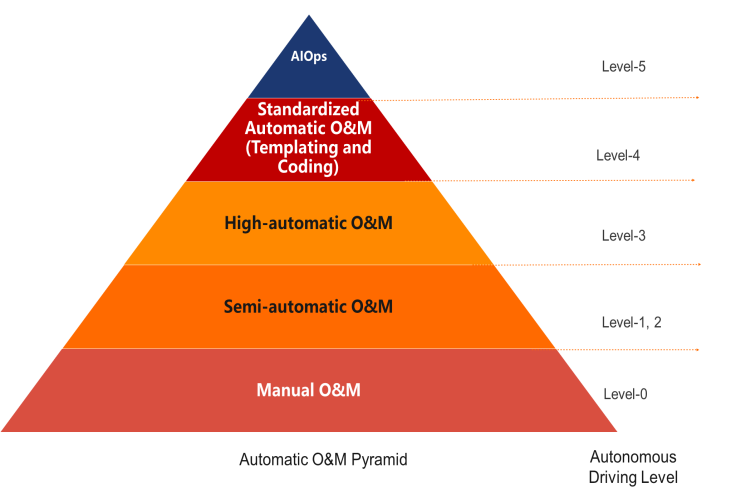

In terms of O&M automation levels, DevOps is advanced and clearly defined. The hierarchical relationship between O&M automation and DevOps can be understood by analogy with the autonomous driving standards. A comparison chart is shown in Figure 1.

Figure 1: Automated O&M Pyramid

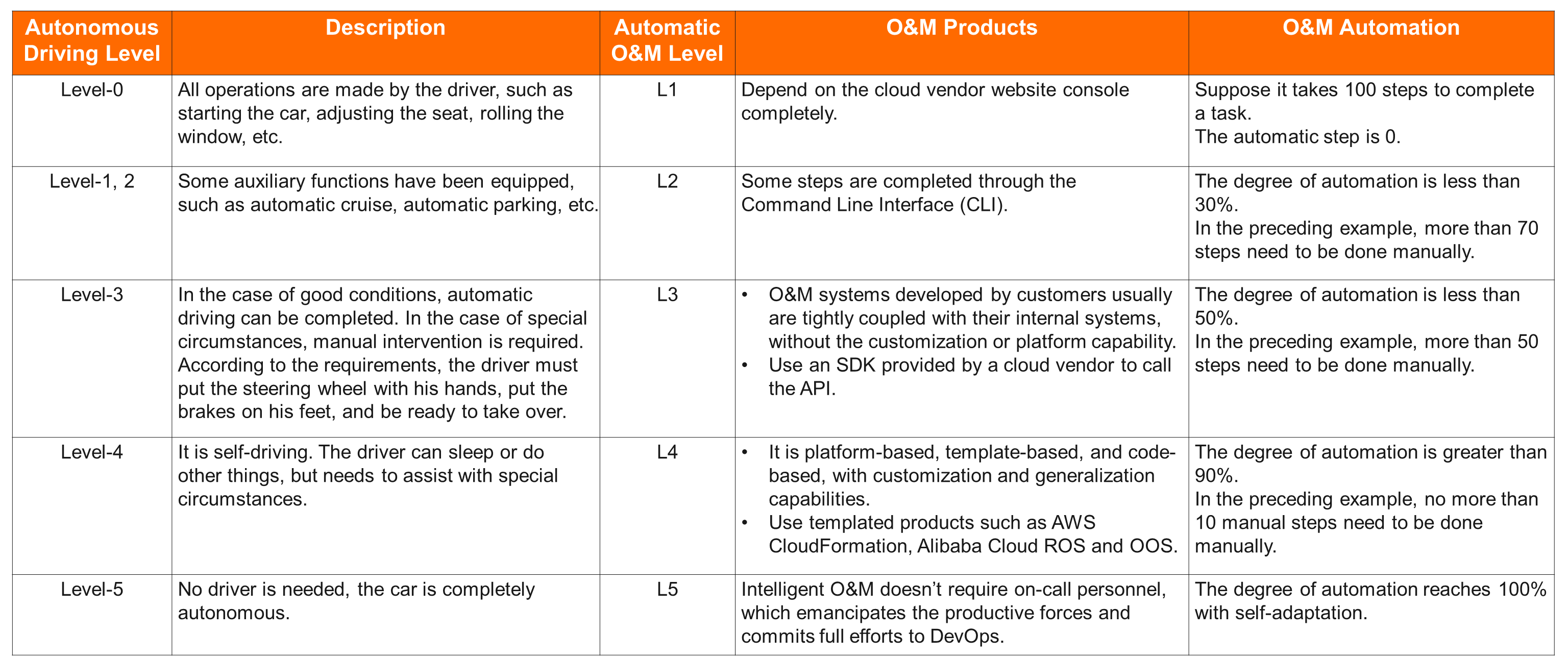

As shown in Figure 1, automated O&M can be divided into five levels: manual O&M, semi-automated O&M, highly automated O&M, standardized automated O&M, and AIOps. These five levels correspond to the six levels of autonomous driving, with semi-automated O&M corresponding to Levels 1 and 2 of autonomous driving. The following table compares the five levels of O&M automation.

Table 1: A Reference for Comparison of Autonomous Driving Level and Automated O&M Level

Here, I would like to focus on the differences between highly automated O&M (Level 3) and standardized automated O&M (Level 4). Most in-house O&M systems are highly automated. For example, a function is developed on the internal O&M system that can immediately create 10 cloud servers. The next time other resources are needed, for example three message queues, an additional function has to be developed for message queues. Highly automated O&M can therefore be regarded as a single "system" focused on specific scenarios.

Standardized automated O&M, however, is more universal and works at a higher level of abstraction. Most cloud platforms provide automated deployment services of this kind, such as AWS CloudFormation and Resource Orchestration Service (ROS) of Alibaba Cloud. A standardized automated O&M system is therefore more like a platform.

When using a Level 4 product to create resources, users only need to write templates. To create new resources, they apply a template without any additional development. Note that the templates mentioned here are usually files in YAML or JSON format. Recently, general-purpose code has started to be used again in place of YAML and JSON, and the ideas behind it are the same as those behind templates. DevOps pioneers may want to look at products such as AWS CDK and Pulumi.
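The contrast between Level 3 and Level 4 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `create_server`, `create_queue`, and the template layout are hypothetical stand-ins, not the API of ROS, CloudFormation, or any real SDK.

```python
from typing import Callable, Dict, List

# Hypothetical stand-ins for cloud API calls; a real system would call a provider SDK.
def create_server(name: str, spec: dict) -> str:
    return f"server:{name}:{spec.get('instance_type', 'default')}"

def create_queue(name: str, spec: dict) -> str:
    return f"queue:{name}"

# Level 3 style: one purpose-built function per scenario.
def create_ten_servers() -> List[str]:
    return [create_server(f"web-{i}", {"instance_type": "small"}) for i in range(10)]

# Level 4 style: one generic engine driven by a declarative template.
CREATORS: Dict[str, Callable[[str, dict], str]] = {
    "server": create_server,
    "queue": create_queue,
}

def apply_template(template: dict) -> List[str]:
    created = []
    for name, resource in template["resources"].items():
        creator = CREATORS[resource["type"]]
        for i in range(resource.get("count", 1)):
            created.append(creator(f"{name}-{i}", resource.get("properties", {})))
    return created

# Asking for three message queues is a template change, not new development.
template = {
    "resources": {
        "web": {"type": "server", "count": 10, "properties": {"instance_type": "small"}},
        "jobs": {"type": "queue", "count": 3},
    }
}
resources = apply_template(template)
```

In this sketch, supporting a new kind of resource means registering one creator in `CREATORS`; every later request for that resource is expressed purely as template data.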

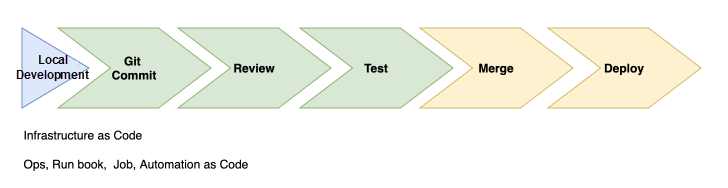

Once templates are in place, users can manage them in the same way as code, using version control software such as Git. In addition, the process of modifying and publishing templates gains the same benefits as code, such as review, build, and continuous release. This is how automated DevOps works.
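One code-like benefit is that a template can be checked automatically before a change is merged. The following is a minimal sketch of such a check, assuming a hypothetical template layout with a `resources` mapping and a hypothetical list of allowed types; real template formats define their own schemas.

```python
# Hypothetical set of resource types this pipeline accepts.
ALLOWED_TYPES = {"server", "queue", "database"}

def validate_template(template: dict) -> list:
    """Return a list of human-readable errors; an empty list means the template passes."""
    resources = template.get("resources")
    if not isinstance(resources, dict) or not resources:
        return ["template must define a non-empty 'resources' mapping"]
    errors = []
    for name, resource in resources.items():
        rtype = resource.get("type")
        if rtype not in ALLOWED_TYPES:
            errors.append(f"{name}: unknown type {rtype!r}")
        count = resource.get("count", 1)
        if not isinstance(count, int) or count < 1:
            errors.append(f"{name}: count must be a positive integer")
    return errors

good = {"resources": {"web": {"type": "server", "count": 2}}}
bad = {"resources": {"web": {"type": "server", "count": 0}, "cache": {"type": "cache"}}}
```

A build step could run `validate_template` on every changed template and fail the pipeline when the returned list is not empty.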

Although AIOps may be the common goal for everyone, there is still a long way to go. At this stage, AIOps is only available in a few specific scenarios. For example, in elastic scaling, historical data can be learned from so that capacity is scaled in advance, or AI can be used to detect whether a certain metric is abnormal. AIOps is therefore still far from full automation. For now, it is advisable to carry out AIOps research within specific scenarios so that the work stays focused.
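To make the metric-anomaly scenario concrete, here is a deliberately simple statistical sketch. It uses a z-score against recent history, which is an assumption made for illustration and a stand-in for the learned models an AIOps product would use; the threshold and sample values are also invented.

```python
from statistics import mean, stdev
from typing import List

def is_anomalous(history: List[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > threshold

# Invented CPU utilization samples (percent).
cpu_history = [41.0, 39.5, 40.2, 42.1, 40.8, 39.9, 41.3]
spike_detected = is_anomalous(cpu_history, 95.0)
normal_detected = is_anomalous(cpu_history, 41.5)
```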

Even so, I am still willing to express my view: templating or coding of automated O&M (Level 4) should be the foundation of AIOps. Only when all O&M work is automated and standardized can AI have the opportunity to learn, and only then can AIOps become a reality.

Usually, the first step of an automated O&M system on the cloud is environment deployment, which is a fundamental and important step. It is costly to make changes after the deployment is completed, and once the system is online, a change becomes an environment migration project in its own right. That is why environment deployment needs to be designed at the very beginning.

Figure 2: System Operating Environment for CI/CD Pipelines

Take the Infrastructure as Code (IaC) setup of an application with a CI/CD pipeline as an example to illustrate the entire process of automated deployment.

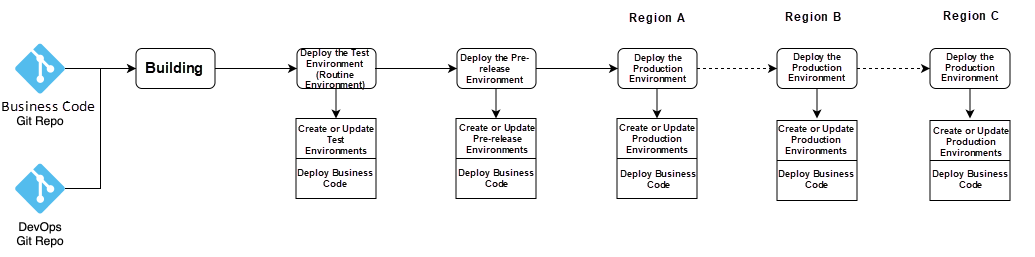

Figure 3: CI/CD Pipeline

In general, the pipeline starts from Git, so some people call this mode GitOps. Git can of course be replaced by other version control systems, such as SVN, because the ideas are the same. This article continues to call all of them DevOps.

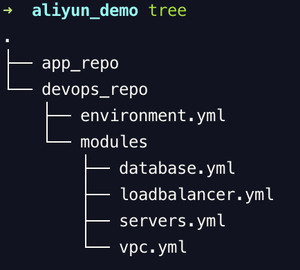

As shown in Figure 3, the pipeline includes not only the business code repository (Repo) but also the DevOps Repo. What should the DevOps Repo store? The focus here is on the configuration of the operating environment on which the system depends. This operating environment is usually called "infrastructure", that is, the system environment that the business applications rely on, including cloud servers, networks, databases, Server Load Balancer (SLB), and middleware. The directory is shown in Figure 4.

Figure 4: System Environment Directory

In Figure 4, the environment.yml file is the complete template required by the environment. In the modules section, you can specify resource templates such as ECS, database, SLB, and virtual private cloud (VPC). The file contains only the basic cloud resources. Experienced DevOps engineers can add more resources, including middleware such as message queues and Kafka, NoSQL databases, and monitoring and alerting rules.
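As a rough illustration of how an environment template built from modules might be processed, the sketch below models the environment as a Python dictionary and computes a deployment order. The `depends_on` field and the dependencies between modules are assumptions added for the example; the actual contents of environment.yml are not shown in this article.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical in-memory form of an environment template with four modules.
environment = {
    "name": "demo-env",
    "modules": {
        "vpc": {"depends_on": []},
        "ecs": {"depends_on": ["vpc"]},
        "database": {"depends_on": ["vpc"]},
        "slb": {"depends_on": ["ecs"]},
    },
}

def deployment_order(env: dict) -> list:
    """Return module names ordered so that each module follows its dependencies."""
    graph = {name: set(module["depends_on"]) for name, module in env["modules"].items()}
    return list(TopologicalSorter(graph).static_order())

order = deployment_order(environment)
```

Adding a module such as a message queue would then only require a new entry under `modules` with its own dependencies.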

The configuration information of the environment can be stored on the pipeline so that the environment is deployed first and the business application afterwards. When deploying the environment, users can choose to create a new environment or update an existing one based on the actual situation. An environment configuration typically includes the following information.

The biggest difference between a cloud environment and a traditional data center is that all cloud services are API-centric. Users can create, modify, and delete resources through APIs. Deployment on the cloud is therefore naturally standardized, which improves deployment efficiency and makes efficient, unified, standardized deployment possible. Repeat deployment is required in the following four typical scenarios.

Generally, efficient and standard deployment on the cloud provides three major capabilities. They are the elimination of environmental differences, rapid replication of environments, and the capability to rebuild environments.

Minor environment differences can cause major differences in business behavior, and troubleshooting them is much harder than debugging code. Most code logic can be reproduced and debugged locally, whereas differences caused by the environment are more complicated to track down. Standardized deployment on the cloud can eliminate environmental differences and keep environments consistent, which greatly simplifies O&M.
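When environments are described as data, differences between them can be found mechanically. The following sketch compares two hypothetical environment configurations and reports every setting that differs; the configuration keys and values are invented for illustration.

```python
MISSING = "<missing>"  # marker for a setting that exists in only one environment

def flatten(config: dict, prefix: str = "") -> dict:
    """Turn nested settings into dotted paths, e.g. {'ecs': {'count': 2}} -> {'ecs.count': 2}."""
    flat = {}
    for key, value in config.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat

def environment_diff(a: dict, b: dict) -> dict:
    """Map each differing path to the pair (value in a, value in b)."""
    fa, fb = flatten(a), flatten(b)
    return {
        path: (fa.get(path, MISSING), fb.get(path, MISSING))
        for path in sorted(fa.keys() | fb.keys())
        if fa.get(path, MISSING) != fb.get(path, MISSING)
    }

staging = {"ecs": {"instance_type": "small", "count": 2}, "database": {"version": "8.0"}}
production = {
    "ecs": {"instance_type": "large", "count": 2},
    "database": {"version": "8.0"},
    "slb": {"listeners": 1},
}
diff = environment_diff(staging, production)
```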

Moving from the routine environment to the production environment, from region A to region B, or from a single cluster to multiple clusters all requires efficient replication. In each case, replicating the environment is the first and most critical step. Standardized deployment can reduce this workload from several days to several hours, or even to a single click, which greatly improves O&M efficiency.
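Replication of this kind usually comes down to reusing one template with different parameters. Here is a minimal sketch, assuming a hypothetical template whose string values contain `{env}` and `{region}` placeholders; real orchestration services have their own parameter syntax.

```python
def render(template, params: dict):
    """Recursively substitute {placeholders} in string values, leaving the template untouched."""
    if isinstance(template, dict):
        return {key: render(value, params) for key, value in template.items()}
    if isinstance(template, list):
        return [render(value, params) for value in template]
    if isinstance(template, str):
        return template.format(**params)
    return template

# Hypothetical environment template shared by every copy of the environment.
template = {
    "vpc": {"name": "{env}-vpc", "region": "{region}"},
    "ecs": {"name": "{env}-web", "count": 2},
}

test_env = render(template, {"env": "test", "region": "region-a"})
prod_env = render(template, {"env": "prod", "region": "region-b"})
```

Each call returns a new structure, so one template can yield any number of environments that differ only in their parameters.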

Many environmental problems are historical. Nonstandard operations accumulated over a long time eventually leave the environment in a confused state. It is therefore necessary to rebuild the routine test environment regularly. The reasoning is similar to that of automated testing: the cleaner the environment, the easier it is to verify, reproduce, and debug business problems. When a test environment has a problem, it should be possible to release it at any time and rebuild it with one click.

Therefore, if automated deployment is considered in the project planning stage, subsequent deployments will undoubtedly be much smoother. Can this model also be used for existing projects? The answer is yes. To achieve this, the corresponding service must be able to convert existing cloud resources into deployment templates, which can then be modified as needed for repeated use.
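The idea of converting existing resources into a template can be sketched as follows. The resource descriptions, field names, and the set of runtime-only fields are all hypothetical; a real service would read this information through the cloud provider's APIs.

```python
# Fields that describe a running instance rather than its desired configuration (assumed).
RUNTIME_ONLY_FIELDS = {"id", "status", "created_at"}

def resources_to_template(resources: list) -> dict:
    """Build a reusable template from descriptions of resources that already exist."""
    template = {"resources": {}}
    for resource in resources:
        properties = {
            key: value
            for key, value in resource.items()
            if key not in RUNTIME_ONLY_FIELDS and key not in ("type", "name")
        }
        template["resources"][resource["name"]] = {
            "type": resource["type"],
            "properties": properties,
        }
    return template

# Invented descriptions of two resources in an existing project.
existing = [
    {"type": "server", "name": "web-1", "id": "i-abc123", "status": "Running",
     "instance_type": "small"},
    {"type": "database", "name": "orders-db", "id": "db-xyz", "status": "Running",
     "engine": "mysql", "version": "8.0"},
]
generated = resources_to_template(existing)
```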

If 100% automation is the ideal form of DevOps, a gap in any part of the process may become an obstacle to practicing it. Generally, the DevOps cycle involves eight stages: planning, coding, building, testing, publishing, deploying, O&M, and monitoring. The cycle then returns to planning and starts a new round of iteration.

Figure 5: DevOps Flowchart

It is worth emphasizing that the deployment templates described above can also include settings such as monitoring and alerting. Because everything involved is a cloud product and all cloud products provide APIs, deployment templates can be unified and centralized.

For example, the DevOps system of Alibaba Cloud enables both template-based environment deployment and template-based O&M orchestration, the latter being automated O&M through OOS of Alibaba Cloud. The industry also calls this mode Ops as Code, Automation as Code, or Runbook as Code. Its product design principles and ideas are consistent with those of deployment templates, so the detailed steps are not repeated here.
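A toy version of the Runbook as Code idea is sketched below: the O&M task is data, and a small executor runs its steps in order and stops at the first failure. The runbook layout, action names, and stub actions are hypothetical and do not represent the OOS template format.

```python
from typing import Callable, Dict, List

def run_runbook(runbook: dict, actions: Dict[str, Callable[[dict], bool]]) -> List[str]:
    """Execute steps in order, recording each result and halting on the first failure."""
    log = []
    for step in runbook["steps"]:
        succeeded = actions[step["action"]](step.get("params", {}))
        log.append(f"{step['name']}: {'ok' if succeeded else 'failed'}")
        if not succeeded:
            break
    return log

# Hypothetical runbook for restarting a service behind a load balancer.
runbook = {
    "name": "restart-service",
    "steps": [
        {"name": "drain", "action": "drain_traffic"},
        {"name": "restart", "action": "restart", "params": {"service": "web"}},
        {"name": "verify", "action": "health_check"},
    ],
}

# Stub actions; real ones would call cloud or host APIs.
actions = {
    "drain_traffic": lambda params: True,
    "restart": lambda params: params.get("service") == "web",
    "health_check": lambda params: True,
}
log = run_runbook(runbook, actions)
```

Because the runbook is plain data, it can be versioned and reviewed in the same way as a deployment template.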

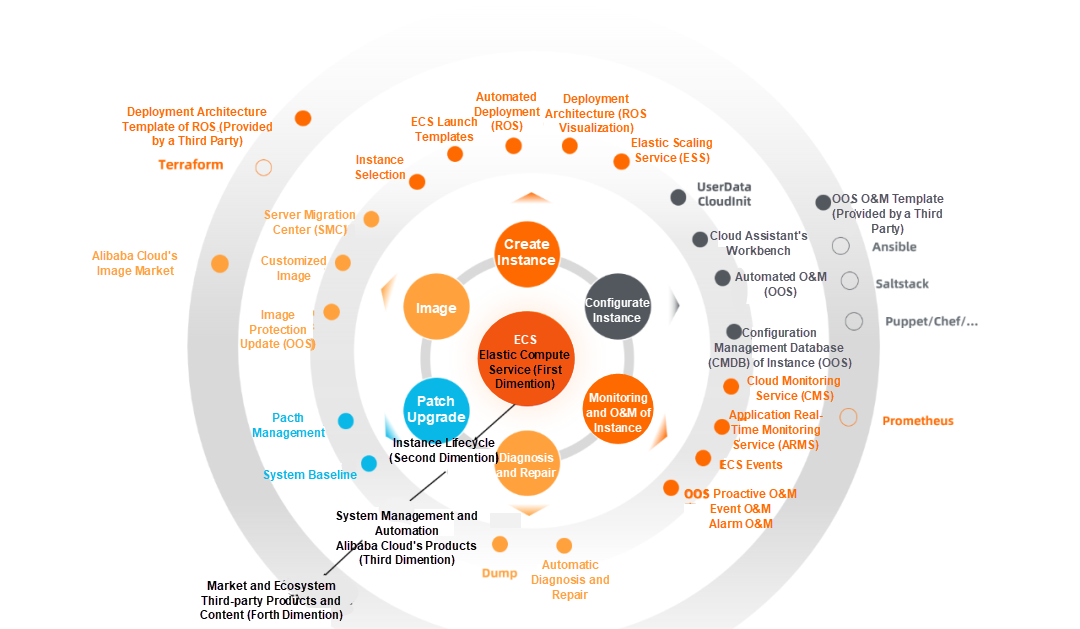

As a producer of cloud computing products, I have also worked out a closed loop of DevOps that covers the full lifecycle of a cloud resource, as shown in Figure 6. I elaborated on this figure at the 2020 Apsara Conference. Friends who are familiar with Beijing like to call it the Four-Ring Diagram.

Figure 6: Four-Ring Diagram of the Lifecycle of Cloud Resources

The first dimension covers the core cloud resources, including server resources, virtual servers, bare metal instances, and container instances.

The second dimension includes the six phases of an ECS instance lifecycle: instance creation, images, patch upgrades, diagnosis and repair, instance monitoring and O&M, and instance configuration.

The third dimension covers the tools that improve efficiency in the six phases of the second dimension. For example, in addition to the monitoring products provided by cloud vendors, many third-party monitoring products are available for instance monitoring and O&M. For O&M, it is recommended to use automated O&M products, such as OOS, to turn common O&M tasks into standardized templates. This reduces errors caused by manual operations and keeps O&M personnel from taking the blame for them.

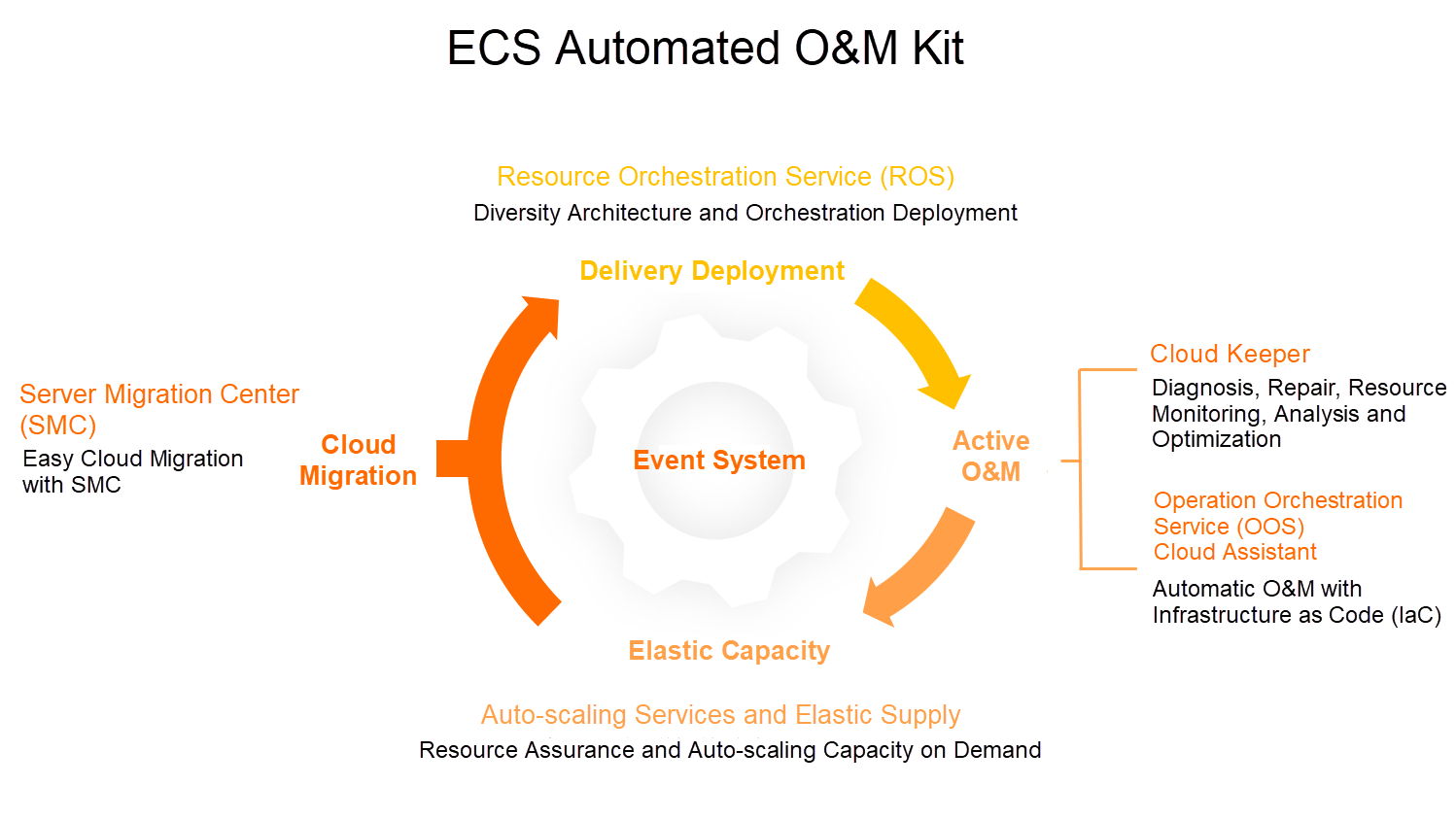

Recently, this complete set of ECS automated O&M kits was officially released to help enterprises implement automated O&M across the entire lifecycle, from planning, migration, and deployment to elastic scaling and routine management. With these tools, each enterprise on the cloud can build its own automated O&M system at a low cost.

Figure 7: Different Phases of the ECS Automated O&M Kit

The fourth dimension is that, in addition to cloud platform tools, third-party tools such as Terraform and Ansible can be used as well. Many of these tools use similar template formats, such as YAML, JSON, and HashiCorp's own HCL. An ecosystem can be built around templates, which makes it easier for external participants to contribute. To some extent, these tools enrich DevOps and ultimately lead to higher efficiency.

Figure 6 shows not only the Alibaba Cloud DevOps tools but also the popular O&M tools in the industry. Their design ideas are similar, so the tools can be adopted individually according to actual needs. Generally, when all cloud resources come from a single cloud platform, that platform's own products offer the most consistent experience, the highest level of integration, and a relatively low cost.

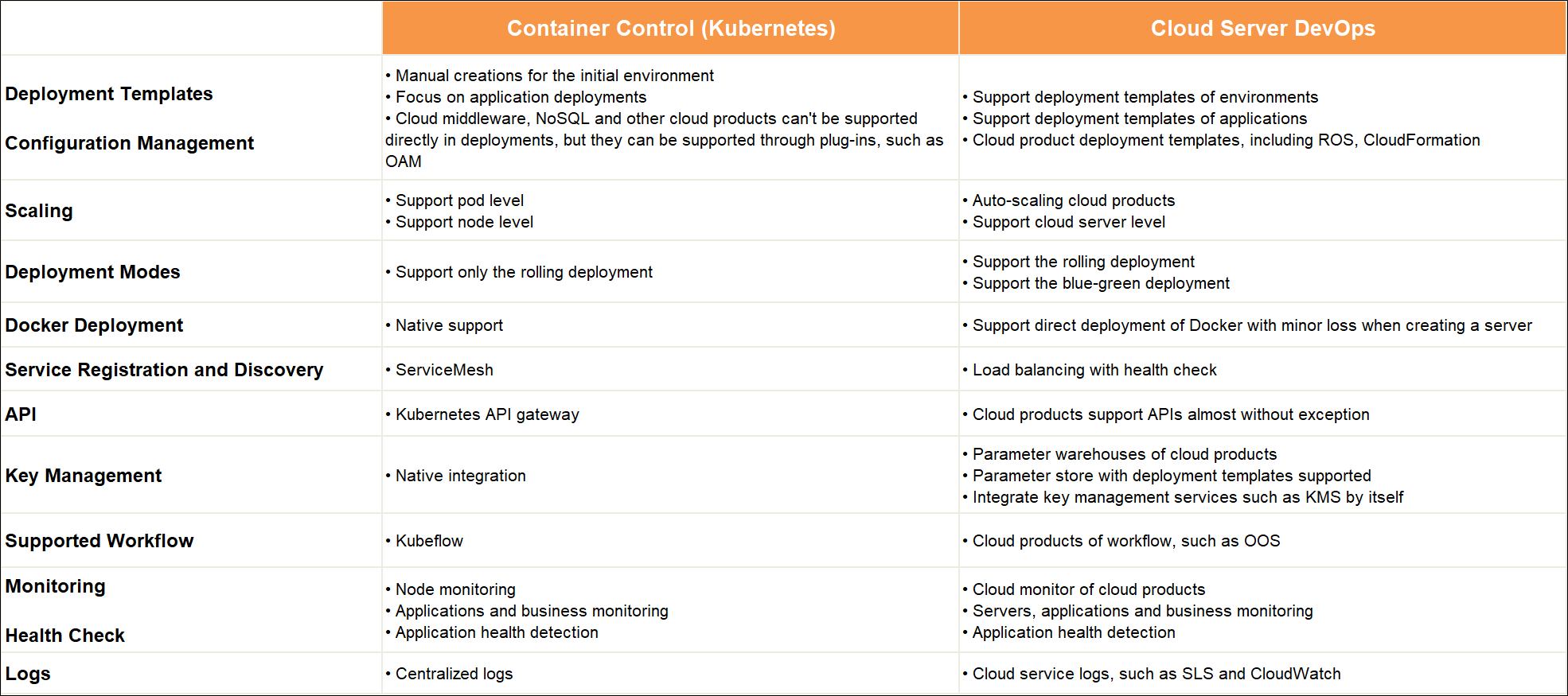

Here I would also like to mention the differences between Kubernetes and cloud server DevOps. Kubernetes has become the standard for container orchestration and control. Almost all cloud service providers offer some form of managed Kubernetes, including Serverless Kubernetes.

Many cloud server users are eager to adopt Kubernetes, but they need to continue using cloud servers for various reasons. I once commented on Kubernetes in this way: "Kubernetes itself is not a major technical innovation, but rather productization of many best practices in DevOps." Some container experts agree with this view.

Table 2: Comparison between Container Control (Kubernetes) and Cloud Server DevOps

In fact, DevOps is not a new concept, yet few enterprises have fully implemented it. There are many reasons for this. In my experience, the two biggest issues are finance and the habits of O&M developers.

The typical planned model requires an estimate before procurement. The biggest challenge is that no one can accurately predict what will happen tomorrow, so the result is either an overestimate or an underestimate. If the estimate was too high, the next one is tightened. If it was too low, the next one is relaxed. Then the cycle repeats itself.

Financial restrictions are sometimes fatal to the development of DevOps. The planned model directly affects how resources are created and released on the cloud. The planned allocation that financial experts prefer appears cost-effective, while DevOps O&M developers prefer on-demand allocation and auto scaling.

Therefore, I want to appeal to financial experts to allow the technical architecture a certain degree of budget flexibility, which can effectively support and accelerate the development of the business.

Wu Junyin is a senior technical expert on intelligent Elastic Compute Service (ECS) at Alibaba Cloud. He is responsible for the architectures of some new ECS products and trusted computing instances, and for the technology development and O&M architecture of intelligent OnECS and OnAliyun of Alibaba Cloud. He has rich experience in cloud computing and is committed to creating a unified management and O&M experience based on ECS-centric automation and DevOps.
