By Liu Yu, Tian Chudong, Lu Mengkai, and Wang Renda

UC Berkeley researchers argue that the emergence of Serverless architecture resembles the shift from assembly language to high-level programming languages more than 40 years ago, and they predict that the use of Serverless architecture will soar. Server-based cloud computing may not disappear, but it will evolve to promote the development of BaaS and better support the Serverless architecture.

Developing applications on a Serverless architecture is simpler than on a traditional architecture: developers only need to write code, build artifacts according to the specification, and deploy them online.

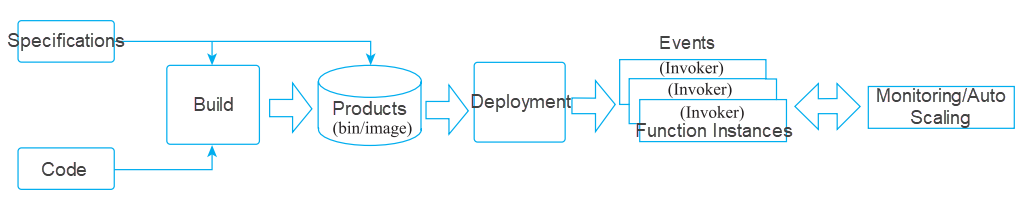

As shown in Figure-1, the CNCF Serverless Whitepaper v1.0 states that the life cycle of a function begins with writing code and providing specification metadata. A Builder entity takes the code and the specification, then compiles and converts them into artifacts. The artifacts are then deployed on a cluster with a Controller entity, which is responsible for scaling the number of function instances based on event traffic and/or the load on the instances.

Figure-1: Function Deployment Process Diagram

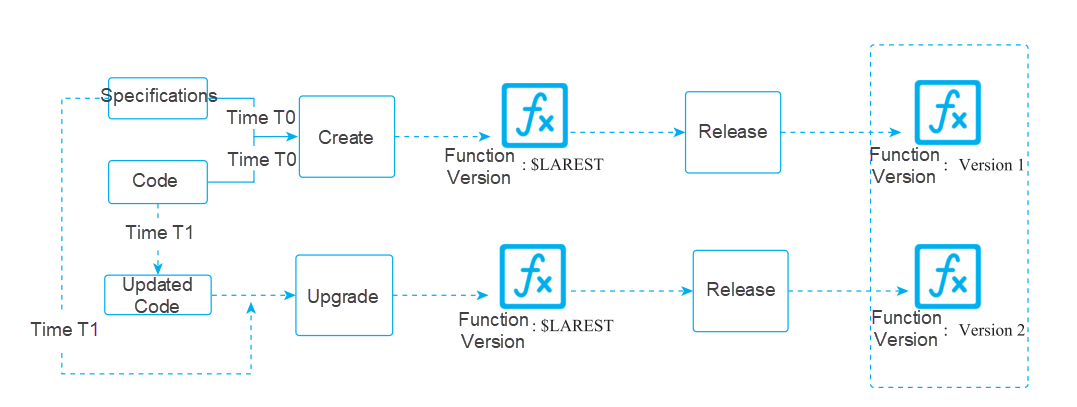

As shown in Figure-2, the complete function creation and update process is outlined below:

Figure-2: Function Creation/Update Process Diagram

When a function is created, its metadata is provided as part of the creation, and the function is compiled so that it can be published. The function can then be started or disabled. Function deployment must support the following use cases:

1) Users can publish a function, which creates a new version (a copy of the latest version); the published version can be tagged or given an alias.

2) Users may want to execute/invoke functions directly (bypassing event sources or the API Gateway) for development and debugging. Users can specify invocation parameters, such as the desired version, synchronous or asynchronous execution, and the log verbosity level.

3) Users may want to obtain function statistics, such as the number of invocations, average execution time, average latency, number of failures, and number of retries.

4) Users may want to retrieve log data filtered by severity level, time range, and content. Log data is kept at the function level and includes events (such as function creation/deletion and warning or debug messages) and, optionally, the function's Stdout or Stderr output. A method that produces one log entry per invocation, or that associates log entries with specific invocations, is preferred because it makes the function's execution flow easier to trace.
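The use cases above (publishing immutable versions with aliases, direct invocation with a chosen version, statistics, and per-invocation logs) can be made concrete with a small in-memory model. This is a hypothetical sketch: `Registry`, `FunctionRecord`, and every method name are illustrative assumptions, not the API of any real FaaS platform, and a real platform would persist this state and run code in isolated instances.

```python
import itertools
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional, Union

Code = Callable[[Any], Any]

@dataclass
class FunctionRecord:
    """In-memory state of one function (hypothetical model, not a real platform API)."""
    latest: Code                                   # mutable "latest" code
    versions: Dict[int, Code] = field(default_factory=dict)
    aliases: Dict[str, int] = field(default_factory=dict)
    enabled: bool = True
    invocations: int = 0
    failures: int = 0
    total_ms: float = 0.0
    logs: List[dict] = field(default_factory=list)

class Registry:
    def __init__(self) -> None:
        self.functions: Dict[str, FunctionRecord] = {}
        self._call_ids = itertools.count(1)

    def create(self, name: str, code: Code) -> None:
        self.functions[name] = FunctionRecord(latest=code)

    def update(self, name: str, code: Code) -> None:
        self.functions[name].latest = code

    def publish(self, name: str, alias: Optional[str] = None) -> int:
        """Publish: snapshot the latest code as a new version, optionally aliased."""
        fn = self.functions[name]
        version = len(fn.versions) + 1
        fn.versions[version] = fn.latest
        if alias is not None:
            fn.aliases[alias] = version
        return version

    def invoke(self, name: str, payload: Any, version: Union[str, int] = "latest") -> Any:
        """Direct invocation (no event source); the caller picks a version or alias."""
        fn = self.functions[name]
        if not fn.enabled:
            raise RuntimeError(f"function {name!r} is disabled")
        if version == "latest":
            code = fn.latest
        elif version in fn.aliases:
            code = fn.versions[fn.aliases[version]]
        else:
            code = fn.versions[int(version)]
        call_id = next(self._call_ids)  # ties every log entry to exactly one call
        start = time.perf_counter()
        try:
            result = code(payload)
            fn.logs.append({"call_id": call_id, "level": "INFO", "msg": "ok"})
            return result
        except Exception as exc:
            fn.failures += 1
            fn.logs.append({"call_id": call_id, "level": "ERROR", "msg": str(exc)})
            raise
        finally:
            fn.invocations += 1
            fn.total_ms += (time.perf_counter() - start) * 1000

    def statistics(self, name: str) -> Dict[str, float]:
        """Invocation count, failures, and average execution time in ms."""
        fn = self.functions[name]
        avg = fn.total_ms / fn.invocations if fn.invocations else 0.0
        return {"invocations": fn.invocations, "failures": fn.failures, "avg_ms": avg}

    def get_logs(self, name: str, level: Optional[str] = None) -> List[dict]:
        """Retrieve logs, optionally filtered by severity."""
        logs = self.functions[name].logs
        return [e for e in logs if level is None or e["level"] == level]

if __name__ == "__main__":
    reg = Registry()
    reg.create("greet", lambda p: f"v1 {p}")
    reg.publish("greet", alias="prod")
    reg.update("greet", lambda p: f"v2 {p}")
    print(reg.invoke("greet", "hi", version="prod"))  # v1 hi
    print(reg.invoke("greet", "hi"))                   # v2 hi
    print(reg.statistics("greet")["invocations"])      # 2
```

Because `publish` stores a reference to the code that was current at that moment, later `update` calls do not affect published versions, which is the behavior a version/alias scheme needs.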

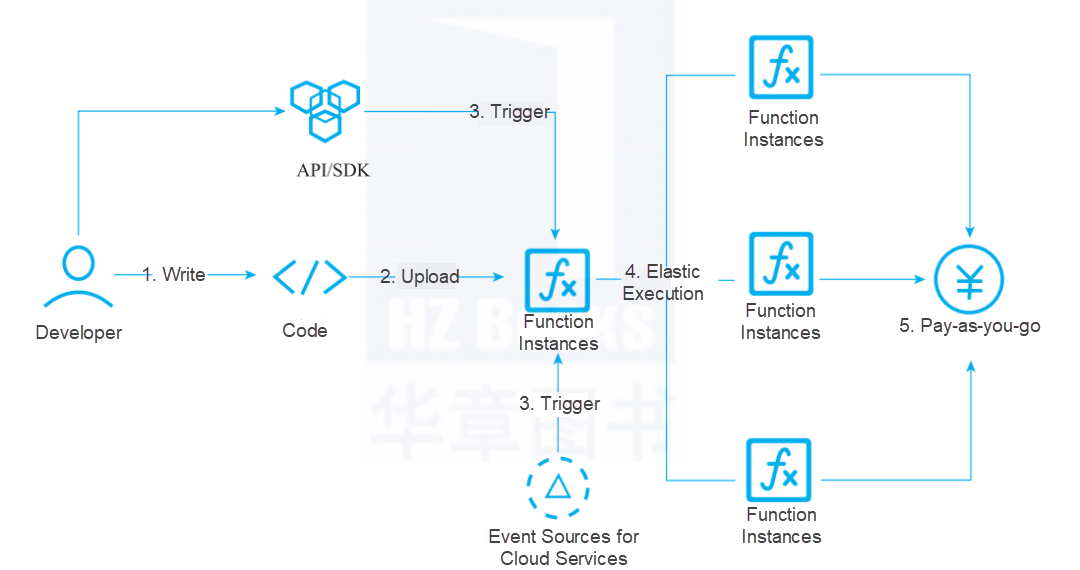

Figure-3: The Process of Developing Serverless Applications

As shown in Figure-3, using Alibaba Cloud Serverless products as an example, the process of developing Serverless applications for a production environment is as follows:

Step 1: Select a familiar programming language from the runtimes provided by the FaaS platform, then develop and test the project.

Step 2: Upload the code to the FaaS platform.

Step 3: After the upload is complete, trigger the uploaded functions through APIs, SDKs, or cloud event sources.

Step 4: The FaaS platform automatically executes the corresponding functions, scaling according to the concurrency of the triggers.

Step 5: Users pay for their actual resource usage on a pay-as-you-go basis.
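Step 1 (develop and test locally) can be illustrated with a minimal event handler. The sketch assumes the `handler(event, context)` entry-point convention of the Function Compute Python runtime, with `event` delivered as raw bytes; the `name` field in the payload is hypothetical, so check the runtime documentation for the exact contract before uploading.

```python
import json

def handler(event, context):
    """Entry point in the assumed handler(event, context) style."""
    # The event payload is assumed to arrive as raw bytes; json.loads accepts bytes.
    payload = json.loads(event) if event else {}
    name = payload.get("name", "world")
    return json.dumps({"message": f"hello, {name}"})

if __name__ == "__main__":
    # Local test before upload; None stands in for the platform's context object.
    print(handler(b'{"name": "serverless"}', None))
```

Keeping the handler a plain function of its inputs is what makes this local test possible: the same code runs unchanged once the platform supplies real events.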

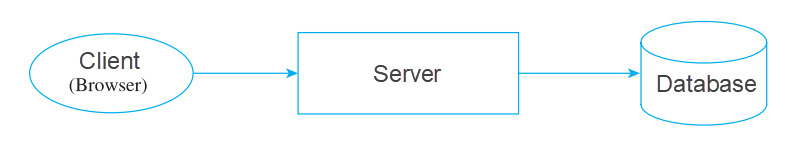

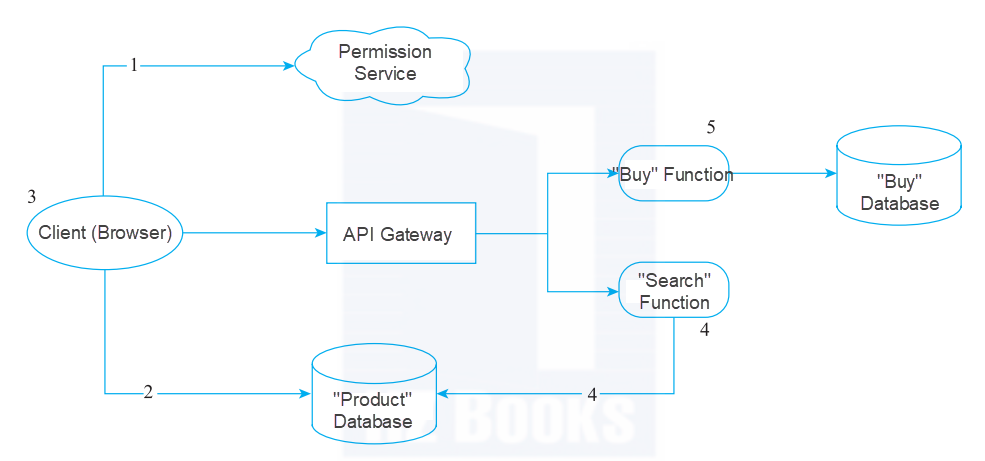

The following production example compares application development under the traditional architecture with application development under the Serverless architecture. Take the Web application shown in Figure-4 as an example:

Figure-4: Architecture Diagram of an E-Commerce Website under the Traditional Three-Layer C/S Architecture

Most Web applications follow the traditional three-layer C/S architecture. For example, a typical e-commerce application uses Java on the server and HTML/JavaScript on the client. In this architecture, the server is simply a cloud server that carries most of the business functions and logic: authentication, page navigation, search, and transactions are all implemented on the server.

When the three-layer C/S architecture is transformed into the Serverless architecture, the result is shown in Figure-5.

Figure-5: Architecture Diagram of an E-Commerce Website under the Serverless Architecture

Step 1: In the Serverless version of the application, remove the authentication logic from the original application and replace it with a third-party BaaS service.

Step 2: Allow the client to access part of the data directly. This data is fully hosted by a third party, and security configurations manage the client's permissions to access it.

Step 3: The first two steps imply a very important third point: some server-side logic moves to the client (such as maintaining the user session, understanding the UX structure of the application, fetching data, and rendering the user interface). The client gradually evolves into a single-page application.

Step 4: Some tasks must stay on the server, such as computation-heavy tasks or operations that access large amounts of data. Take search as an example: the search function can be split out of the continuously running server and implemented as a FaaS function. It receives requests from the API Gateway (explained in detail later) and returns responses. This server-side function can read product data from the same database as the client, and it can be implemented by slightly modifying the original search code.

Step 5: The purchase function can likewise be turned into a separate FaaS function. For security reasons, it must be implemented on the server rather than on the client, and the API Gateway exposes it for external use.
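Steps 4 and 5 can be sketched as two small functions behind the API Gateway. Everything here is hypothetical: the in-memory `PRODUCTS` table stands in for the shared database, and the request shape (a JSON document with `queryParameters` and a string `body`) is a simplified assumption about what the gateway forwards, so check your gateway's actual event format. The point of `buy_handler` is the security rule from Step 5: the server recomputes the total from its own prices instead of trusting the client.

```python
import json

# Hypothetical stand-in for the product database that the client also reads.
PRODUCTS = {
    "p1": {"name": "red mug", "price_cents": 1200},
    "p2": {"name": "blue mug", "price_cents": 1500},
    "p3": {"name": "teapot", "price_cents": 4200},
}

def search_handler(event, context):
    """Search split out of the monolith (Step 4): read-only, driven by a query parameter."""
    request = json.loads(event)
    query = request.get("queryParameters", {}).get("q", "").lower()
    hits = [{"id": pid, **item} for pid, item in PRODUCTS.items() if query in item["name"]]
    return json.dumps({"statusCode": 200, "body": hits})

def buy_handler(event, context):
    """Purchase function (Step 5): stays server-side so prices cannot be tampered with."""
    request = json.loads(event)
    order = json.loads(request.get("body") or "{}")
    items = order.get("items", {})  # {product_id: quantity}
    valid = bool(items) and all(
        pid in PRODUCTS and isinstance(qty, int) and qty > 0 for pid, qty in items.items()
    )
    if not valid:
        return json.dumps({"statusCode": 400, "body": {"error": "invalid order"}})
    # Any total sent by the client is ignored; the total is recomputed from trusted prices.
    total = sum(PRODUCTS[pid]["price_cents"] * qty for pid, qty in items.items())
    return json.dumps({"statusCode": 200, "body": {"total_cents": total}})
```

Each function is stateless and owns one responsibility, so the platform can scale search and purchase independently.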

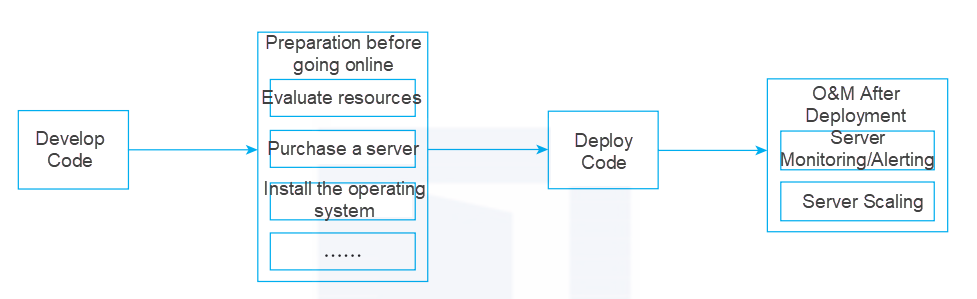

The development and launch of applications under the traditional Cloud Server architecture are shown in Figure-6:

Figure-6: Diagram of Application Development and Launch Process under the Traditional Cloud Server Architecture

After developers finish writing code, they must prepare for going online, which includes (but is not limited to) evaluating resources, purchasing servers, installing operating systems, and installing server software. Only then can they deploy the code. Professional personnel or teams are then required to continuously monitor, operate, and maintain resources such as servers. For example, servers must be scaled out smoothly when traffic surges and scaled in smoothly when traffic drops.

Under the Serverless architecture, however, the development model changes significantly.

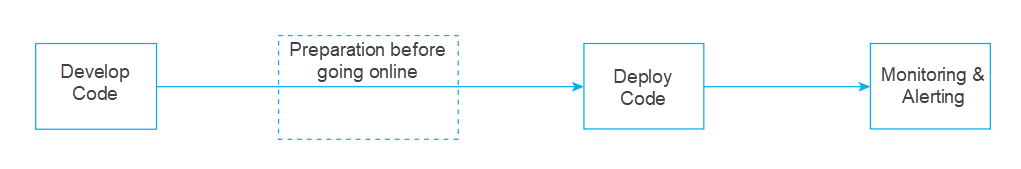

Figure-7: Diagram of Application Development and Launch Process under the Serverless Architecture

In the development and launch process shown in Figure-7, developers using the Serverless architecture only care about the business logic in their functions. Products and services that previously ran on the server side (such as the authentication logic, API Gateway, and databases) are provided by cloud vendors.

Users do not need to handle server-level maintenance, reserve O&M resources for traffic peaks and troughs, or pay for idle resources. Under the Serverless architecture, pay-as-you-go billing, auto scaling, and low-O&M or O&M-free operation reduce resource and labor costs for users, significantly improve overall R&D efficiency, and help ensure the performance, security, and stability of the project.
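To make the "no payment for idle resources" point concrete, the sketch below compares a simplified pay-as-you-go model (execution time in GB-seconds plus a per-request fee) with an always-on server billed per hour. The prices are hypothetical placeholders, and the model ignores free tiers, network traffic, and other fees; real pricing varies by vendor and region.

```python
HOURS_PER_MONTH = 730  # common approximation: 365 * 24 / 12

def faas_monthly_cost(requests: int, avg_duration_ms: float, memory_mb: float,
                      price_per_gb_s: float, price_per_million_requests: float) -> float:
    """Pay-as-you-go: pay only for execution time (GB-seconds) plus a per-request fee."""
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    return gb_seconds * price_per_gb_s + requests / 1_000_000 * price_per_million_requests

def server_monthly_cost(hourly_price: float, servers: int = 1) -> float:
    """Always-on servers: billed every hour whether or not they handle traffic."""
    return hourly_price * servers * HOURS_PER_MONTH

def break_even_requests(avg_duration_ms: float, memory_mb: float, price_per_gb_s: float,
                        price_per_million_requests: float, server_cost: float) -> float:
    """Monthly request volume at which pay-as-you-go costs as much as the servers."""
    per_request = faas_monthly_cost(1, avg_duration_ms, memory_mb,
                                    price_per_gb_s, price_per_million_requests)
    return server_cost / per_request

if __name__ == "__main__":
    # Hypothetical prices for illustration only.
    GB_S, PER_MILLION, HOURLY = 0.000016, 0.2, 0.05
    faas = faas_monthly_cost(1_000_000, 200, 512, GB_S, PER_MILLION)
    server = server_monthly_cost(HOURLY)
    print(f"FaaS: {faas:.2f}, server: {server:.2f}")
    print(f"break-even: {break_even_requests(200, 512, GB_S, PER_MILLION, server):,.0f} requests/month")
```

With these placeholder numbers, one million 200 ms requests at 512 MB cost about 1.80 per month against 36.50 for one always-on server, and the two only meet at roughly 20 million requests per month. For steady, high traffic the comparison can flip, so the model is worth rerunning with real prices.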

In summary, the Serverless architecture differs from the traditional architecture in that it lets developers focus on their business logic. It emphasizes the concept of "Noserver" and leaves specialized work to specialists, which helps promote business innovation, improve efficiency, and shorten launch and iteration cycles.

The Serverless architecture is developing rapidly and is regarded as cloud computing in its true form; at the 2020 Apsara Conference, it was reaffirmed that Serverless "will lead the next decade of cloud computing." Even so, it still faces many challenges.

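The 2019 UC Berkeley paper "Cloud Programming Simplified: A Berkeley View on Serverless Computing" groups the challenges facing Serverless architecture into five categories: abstraction (resource requirements and data dependencies), systems (ephemeral storage, durable storage, coordination, and startup time), networking, security, and computer architecture.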

Resource Requirements: Serverless platforms let developers specify the memory size and execution time of a cloud function, but no other resource requirements. This hinders developers who want finer control over specific resources, such as CPUs, GPUs, or other types of accelerators.

Data Dependency: Today's cloud function platforms do not understand the data dependencies between cloud functions, let alone the amount of data that these functions may exchange. This may lead to sub-optimal placement, causing inefficient communication patterns.

Ephemeral Storage: One way to provide ephemeral storage for Serverless applications is to build a distributed in-memory service on an optimized network stack that guarantees microsecond-level latency.

Durable Storage: Like other applications, Serverless database applications are limited by the latency and IOPS of the storage system, and they require long-term data storage and the mutable-state semantics of a file system.

Coordination Service: Sharing state between functions usually follows the producer-consumer design pattern, which requires consumers to know as soon as producers make the data available.

Minimize Startup Time: Startup time consists of three parts: the time to schedule and start the resources that run the cloud function; the time to download the software environment (such as the operating system and libraries) needed to run the function code; and the time to perform application-specific startup tasks (such as loading and initializing data structures and libraries). Resource scheduling and initialization may incur significant latency and overhead because they involve creating an isolated execution environment and configuring the customer's VPC and IAM policies.

Networking: Cloud functions may incur significant overhead when using popular communication primitives such as broadcast, aggregation, and shuffle.

Security: The Serverless architecture reassigns security responsibilities, shifting many of them from cloud users to cloud providers without fundamentally changing them. However, the Serverless architecture must also deal with the risks inherent in application decomposition and multi-tenant resource sharing.

Computer Architecture: The performance of the x86 microprocessors that dominate the cloud is improving only slowly.

These challenges are relatively abstract. They remain widespread across the industry, and many cloud vendors are working on them. From the perspective of Serverless developers, combining these challenges with the issues developers care about most, the current challenges facing the Serverless architecture include (but are not limited to) the cold start problem, vendor lock-in, and imperfect supporting resources.

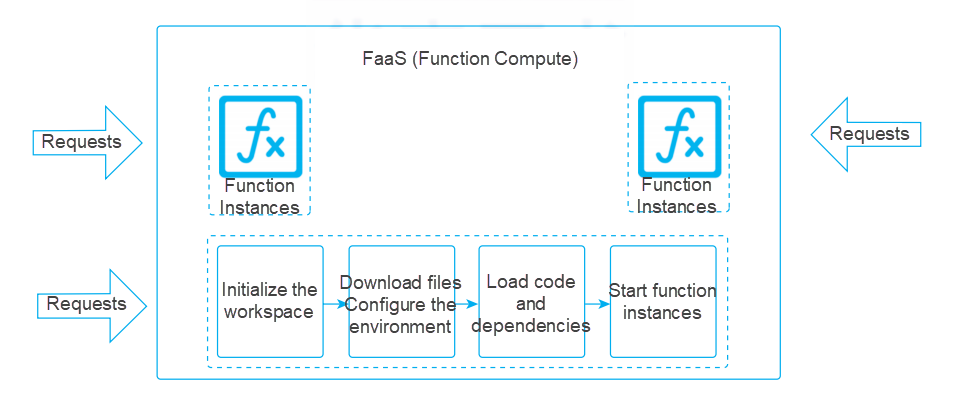

The cold start problem arises because, during auto scaling, the Serverless architecture may trigger the complete instance startup process: preparing the environment (initializing the workspace), downloading files, configuring the environment, loading code and configurations, and starting function instances. As a result, responses that normally take milliseconds or tens of milliseconds can take hundreds of milliseconds or even seconds, slowing down business processing.

Every technology has two sides. Auto scaling is an advantage of the Serverless architecture, but compared with the serverful architecture it introduces a new problem: cold starts. Under the Serverless architecture, after developers submit code, the platform generally only persists it and does not prepare an execution environment for it. Therefore, when a function is triggered for the first time, the platform goes through a relatively long environment-preparation process, which includes setting up the network and preparing the required files, code, and other resources.

The process from environment preparation to function execution is called the function's cold start. Because the Serverless architecture scales automatically, vendors add or remove instances as traffic fluctuates, so the platform may frequently prepare new environments, download function code, and start instances to handle incoming requests.

Figure-8: Schematic Diagram of Auto Scaling by Function Compute on Function Instances Based on Traffic

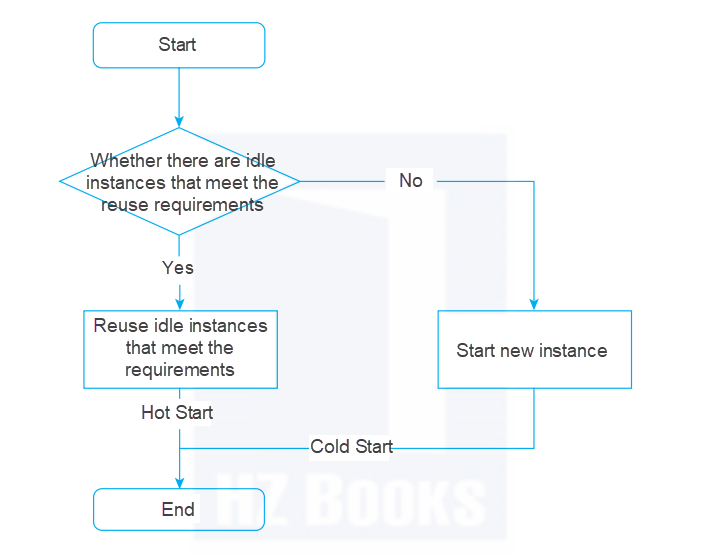

As shown in Figure-8, when a function is triggered on the FaaS platform of the Serverless architecture, the platform either reuses an existing instance or starts a new one, depending on the situation.

Figure-9: Schematic Diagram of Instance Startup Process on FaaS Platform

As shown in Figure-9, the FaaS platform gives priority to idle, reusable instances when they are available; this is called a hot start. Otherwise, the platform starts a new instance to respond to the request, which is a cold start.

This automatic, management-free horizontal scaling continues until there are enough instances to handle the entire workload.

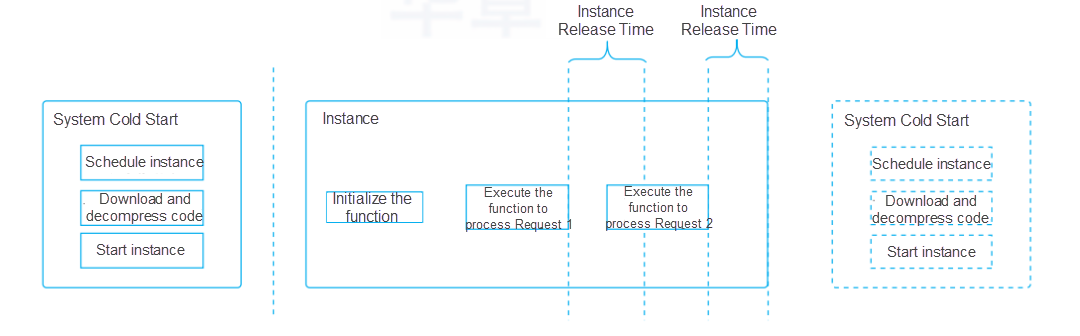

Starting a new instance involves several steps, such as initializing the workspace, downloading files, configuring the environment, loading code and dependencies, and starting the function instance. A hot start completes within a few milliseconds or tens of milliseconds, whereas these extra cold start steps may take hundreds of milliseconds or even seconds. When such new-instance startups occur in production and slow down business responses, developers experience the cold start impact they are usually concerned about, as shown in Figure-10.

Figure-10: Schematic Diagram of Function Cold Start

From this analysis, the common cold start scenarios can be summarized as follows:

First Startup of a Function: When a function is started for the first time after deployment, there is usually no existing instance, so a cold start is very likely.

Request Concurrency: When a new request arrives before the current one has finished, the FaaS platform starts a new instance to respond to it, which causes a cold start.

Long Interval between Two Triggers: If the interval between two triggers of a function exceeds the instance release threshold, a cold start may also occur.

The cold start challenge facing the Serverless architecture is currently severe, but it is not fatal: cloud vendors are working hard to solve it through approaches that include (but are not limited to) instance warm-up, instance reservation, resource pooling, and having a single instance process multiple requests.
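The three cold start scenarios, plus instance reservation as a mitigation, can be reproduced with a toy model of the instance pool. It is a sketch under simplifying assumptions, not how any specific platform schedules instances: each instance handles one request at a time, every cold start costs a fixed penalty, and idle non-reserved instances are released after a fixed keep-alive threshold. `simulate`, `keep_alive_ms`, and `cold_start_ms` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class Instance:
    busy_until: float      # time (ms) at which the instance finishes its current request
    reserved: bool = False  # reserved (pre-warmed) instances are never released

def simulate(requests: List[Tuple[float, float]], keep_alive_ms: float,
             cold_start_ms: float, reserved: int = 0) -> List[str]:
    """Return 'hot' or 'cold' for each (arrival_ms, duration_ms) request, in arrival order."""
    pool = [Instance(busy_until=0.0, reserved=True) for _ in range(reserved)]
    outcome = []
    for arrival, duration in sorted(requests):
        # Release non-reserved instances that have been idle longer than the threshold.
        pool = [i for i in pool if i.reserved or arrival - i.busy_until <= keep_alive_ms]
        idle = next((i for i in pool if i.busy_until <= arrival), None)
        if idle is not None:
            idle.busy_until = arrival + duration  # hot start: reuse an idle instance
            outcome.append("hot")
        else:
            # Cold start: pay the startup penalty before the request can run.
            pool.append(Instance(busy_until=arrival + cold_start_ms + duration))
            outcome.append("cold")
    return outcome

if __name__ == "__main__":
    KEEP, COLD = 60_000, 500  # hypothetical: 60 s keep-alive, 500 ms cold start penalty
    print(simulate([(0, 50)], KEEP, COLD))                 # ['cold']  first startup
    print(simulate([(0, 50), (1_000, 50)], KEEP, COLD))    # ['cold', 'hot']  reuse
    print(simulate([(0, 100), (10, 100)], KEEP, COLD))     # ['cold', 'cold']  concurrency
    print(simulate([(0, 50), (120_000, 50)], KEEP, COLD))  # ['cold', 'cold']  long interval
    print(simulate([(0, 50)], KEEP, COLD, reserved=1))     # ['hot']  reserved instance
```

The last line shows why instance reservation helps: a pre-warmed instance absorbs the first request that would otherwise pay the cold start penalty. Letting one instance serve several concurrent requests would similarly turn the concurrency case from cold into hot, but that policy is not modeled here.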

Vendor lock-in means that Serverless architectures from different vendors differ in product form, functional scope, and event data structures. Once you adopt one vendor's Serverless architecture, you usually must use that vendor's FaaS platform and supporting backend infrastructure, which makes multi-cloud deployment and cross-vendor project migration difficult and extremely costly.

Functions are triggered by events, so the event data structure agreed upon between the FaaS platform and its supporting infrastructure services often determines a function's processing logic. If each vendor uses a different event structure for the same type of trigger, multi-cloud deployment and cross-vendor project migration incur huge costs.
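One common way to contain this cost, shown here as a sketch rather than a standard, is a thin adapter layer: each vendor's event is converted into an internal, vendor-neutral type, and the business logic depends only on that type. The event shapes below are simplified assumptions (the S3 notification and the OSS trigger event carry many more fields, and a real runtime may hand the handler raw bytes that must be parsed first); `ObjectCreated`, `ADAPTERS`, and `make_handler` are hypothetical names.

```python
from dataclasses import dataclass
from urllib.parse import unquote_plus

@dataclass(frozen=True)
class ObjectCreated:
    """Vendor-neutral 'object uploaded' event (hypothetical internal type)."""
    bucket: str
    key: str

def from_aws_s3(event: dict) -> ObjectCreated:
    # Simplified: {"Records": [{"s3": {"bucket": {"name": ...}, "object": {"key": ...}}}]}
    s3 = event["Records"][0]["s3"]
    # S3 URL-encodes object keys in notifications, so decode them here.
    return ObjectCreated(s3["bucket"]["name"], unquote_plus(s3["object"]["key"]))

def from_aliyun_oss(event: dict) -> ObjectCreated:
    # Simplified (assumed): {"events": [{"oss": {"bucket": {"name": ...}, "object": {"key": ...}}}]}
    oss = event["events"][0]["oss"]
    return ObjectCreated(oss["bucket"]["name"], oss["object"]["key"])

ADAPTERS = {"aws": from_aws_s3, "aliyun": from_aliyun_oss}

def process_upload(evt: ObjectCreated) -> str:
    """Business logic: depends only on ObjectCreated, never on a vendor's event shape."""
    return f"processing {evt.bucket}/{evt.key}"

def make_handler(vendor: str):
    """Build a thin vendor-specific entry point around the shared business logic."""
    adapt = ADAPTERS[vendor]

    def handler(event: dict, context=None) -> str:
        return process_upload(adapt(event))

    return handler
```

Migrating to another vendor then means writing one more adapter instead of rewriting `process_upload`. This only isolates event formats; differences in backend services, permissions, and O&M tooling still remain.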

When a developer implements the same feature on Serverless architectures from different cloud vendors, not only do the code logic and product capabilities differ, but even the business logic and O&M tools can be completely different.

Therefore, if enterprises want to perform business migration and multi-cloud deployment across vendors, they will face extremely high compatibility costs, business logic transformation costs, multi-product learning costs, and data migration risks.

There is currently no complete, unified standard followed by all cloud vendors, and each vendor's Serverless architecture is tightly bound to its own products and business logic. As a result, cross-cloud disaster recovery and cross-cloud migration are very difficult for developers, and vendor lock-in is one of the issues developers complain about and worry about most.

CNCF and other organizations and teams are working to address this through more standardized approaches.

The problem of imperfect supporting resources stems from one of the core ideas of the Serverless architecture: leaving specialized work to cloud vendors. In practice, however, cloud vendors may not deliver everything a Serverless architecture should provide, for reasons such as how they prioritize requirements and their own business considerations. This creates difficulties and complaints for developers who build, operate, and maintain applications on Serverless architectures.

With the rapid development of Serverless architectures, vendors are also working hard to improve their supporting resources and facilities. However, many supporting resources are still immature, which prevents developers from developing Serverless applications smoothly and operating them easily. The main problems are listed below:

On the one hand, the lack of developer toolchains on the market makes development and deployment difficult and increases costs. On the other hand, it holds back improvements to the Serverless developer experience. The lack of high-quality toolchains also makes it harder for developers who worry about vendor lock-in to decouple themselves from vendors.

In 2020, the first China Cloud Native User Survey Report released by the China Academy of Information and Communications Technology (CAICT) stated that, before adopting the Serverless architecture, 49% of users considered deployment costs, 26% considered vendor lock-in, and 24% considered the maturity of the relevant toolsets.

These figures reflect strong developer demand for better toolchains. Currently, there is no unified, consistent Serverless developer tool: each vendor has its own, with different usage and behavior, which creates severe challenges for developers in research before development, debugging during development, and O&M after deployment.

In addition, most Serverless developer tools are really resource orchestration and deployment tools rather than development or O&M tools. For debugging, they do not support consistent online and offline environments. For O&M, most of them cannot quickly debug the business or simplify troubleshooting. For locating problems, most of them lack a unified and complete solution. All of this makes the learning and usage costs of the Serverless architecture very high for developers.

Learning resources for Serverless architectures are also relatively scarce. Whether in the form of text, video, and hands-on labs, or cases, tutorials, and best practices provided by vendors, complete learning resources and reference cases are lacking. As a result, developers struggle to find suitable material while learning and often encounter unknown errors during development, which seriously undermines their confidence in the Serverless architecture.

These are only some of the gaps in Serverless supporting resources and facilities. Other questions remain open: How can we integrate the Serverless architecture more closely with the traditional architecture? How can we migrate traditional businesses to the Serverless architecture more easily? How should monitoring and alerting work under the Serverless architecture? How can we manage Serverless applications and Serverless resources? What are the best practices for releasing and operating Serverless applications? All of these issues need further study and exploration.

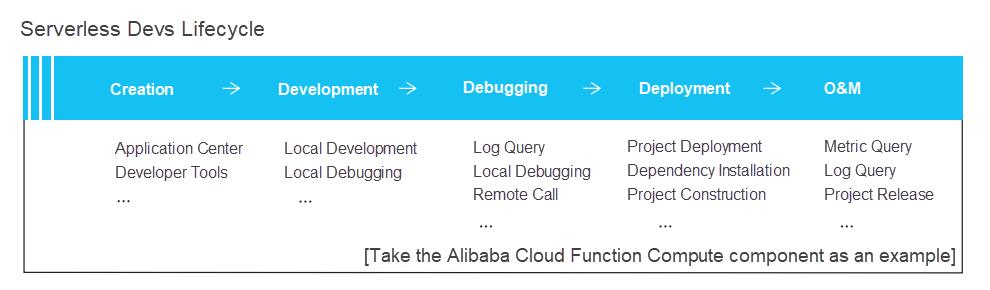

Despite these challenges, the industry is working to help users deploy business code to the Serverless architecture more simply and quickly, with a better experience. For example, Serverless Devs, open-sourced by the Alibaba Cloud Serverless team, is a full-lifecycle management tool for Serverless applications with no vendor lock-in.

As shown in Figure-11, Serverless Devs can be used throughout project creation, development, debugging, deployment, and O&M. The Alibaba Cloud Function Compute component is used as an example:

Figure-11: Schematic Diagram of Full-Lifecycle Management of Serverless Applications by Serverless Devs

Serverless architecture is very popular now, and vendors are investing more in improving their Serverless products, building Serverless ecosystems, and cultivating a Serverless mindset among developers. Objectively speaking, however, the challenges facing Serverless architecture go beyond those described earlier.

Leaving specialized work to specialists gives the Serverless architecture stronger security protection. However, its extreme elasticity raises a new worry for developers: if someone maliciously attacks my business, could extreme elasticity combined with pay-as-you-go billing quickly cause me huge losses? Under such an attack, a traditional Cloud Server typically becomes unable to serve requests, whereas a Serverless application keeps scaling out and keeps accruing charges, which worries developers even more.

Although many vendors now address this issue with API Gateway whitelist and blacklist functions, upper limits on Function Compute instance resource configurations, and other related features, many developers still have concerns.
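The protections mentioned above are configured in vendor products such as API Gateway and Function Compute; the sketch below only illustrates their logic in plain Python so the cost-control idea is concrete. `Guard`, `TokenBucket`, and all thresholds are hypothetical, and a per-client token bucket is just one common rate-limiting technique, not necessarily what any vendor uses internally.

```python
import time
from typing import Callable, Dict, Iterable, Optional, Set

class TokenBucket:
    """Allows bursts of up to `burst` requests, refilled at `rate_per_s` tokens per second."""
    def __init__(self, rate_per_s: float, burst: float, clock: Callable[[], float]):
        self.rate, self.capacity, self.clock = rate_per_s, burst, clock
        self.tokens, self.last = burst, clock()

    def allow(self) -> bool:
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

class Guard:
    """Hypothetical front door: blacklist, global instance cap, per-client rate limit."""
    def __init__(self, rate_per_s: float, burst: float, max_instances: int,
                 blacklist: Iterable[str] = (), clock: Callable[[], float] = time.monotonic):
        self.rate, self.burst, self.clock = rate_per_s, burst, clock
        self.max_instances = max_instances
        self.blacklist: Set[str] = set(blacklist)
        self.buckets: Dict[str, TokenBucket] = {}
        self.active = 0

    def admit(self, client_ip: str) -> Optional[str]:
        """Return a rejection reason, or None if the request may start."""
        if client_ip in self.blacklist:
            return "blacklisted"
        if self.active >= self.max_instances:  # hard cap bounds the worst-case bill
            return "capacity"
        if client_ip not in self.buckets:
            self.buckets[client_ip] = TokenBucket(self.rate, self.burst, self.clock)
        if not self.buckets[client_ip].allow():
            return "rate_limited"
        self.active += 1
        return None

    def release(self) -> None:
        """Call when an admitted request finishes."""
        self.active -= 1
```

The instance cap is what turns an unbounded bill into a bounded one: under attack, excess requests are rejected instead of triggering more scale-out, at the price of degraded service for legitimate users during the attack.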

Compared with the traditional Cloud Server architecture, the Serverless architecture behaves more like a black box. Therefore, applications developed under the Serverless architecture are prone to errors that go undetected.

For example, inexperienced Serverless application developers may create serious trigger loops when using object storage triggers. Specifically, a client uploads an image to object storage, object storage triggers a function that compresses the image, and the function writes the result back to object storage. If the trigger condition is not set precisely, the write-back triggers the function again, producing a loop of compression and write-back operations.

Besides errors that are hard to detect, the Serverless architecture also makes errors hard to troubleshoot. In a common scenario, users develop and debug the business logic locally, and occasional errors appear only after the code is deployed online. Troubleshooting and tracing the root cause then become difficult because users cannot log in to the machine to debug, and the instance may already have been released after the trigger.
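The trigger loop described above can be reproduced, and avoided, with an in-memory stand-in for object storage. `FakeObjectStore`, the `uploads/` and `compressed/` prefixes, and the byte-truncating "compression" are all hypothetical. Object storage triggers commonly let you configure prefix or suffix filters, which is the first line of defense; the prefix check inside the handler below is a second one.

```python
from typing import Callable, Dict, List

INPUT_PREFIX, OUTPUT_PREFIX = "uploads/", "compressed/"
MAX_INVOCATIONS = 100  # safety net for this demo only

class FakeObjectStore:
    """Hypothetical in-memory object store that fires every trigger on every put."""
    def __init__(self) -> None:
        self.objects: Dict[str, bytes] = {}
        self.triggers: List[Callable[[str], None]] = []
        self.invocations = 0

    def put(self, key: str, data: bytes) -> None:
        self.objects[key] = data
        for fn in self.triggers:
            self.invocations += 1
            if self.invocations > MAX_INVOCATIONS:
                raise RuntimeError("trigger loop detected")
            fn(key)

def make_naive_handler(store: FakeObjectStore):
    """Buggy version: reacts to every key, including its own output, so it loops."""
    def handler(key: str) -> None:
        data = store.objects[key]
        store.put(OUTPUT_PREFIX + key, data[: max(1, len(data) // 2)])
    return handler

def make_compress_handler(store: FakeObjectStore):
    """Guarded version: only reacts to original uploads, never to its own output."""
    def handler(key: str) -> None:
        if not key.startswith(INPUT_PREFIX):
            return
        data = store.objects[key]
        compressed = data[: max(1, len(data) // 2)]  # stand-in for real image compression
        store.put(OUTPUT_PREFIX + key[len(INPUT_PREFIX):], compressed)
    return handler
```

With the naive handler, each write-back fires the trigger again until the demo's safety net raises `RuntimeError`; on a real platform there is no such net, and the loop keeps consuming (and billing) invocations. With the guarded handler, one upload causes exactly two invocations, the second of which returns immediately.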

In summary, the Serverless architecture faces many challenges, as described above, but good solutions already exist for some of them, and this series of articles will introduce them in detail. Despite these challenges, the Serverless architecture will bring new opportunities to more organizations and teams!
