The Serverless architecture will become an important architecture in the cloud computing field and will be adopted by more businesses in the future. This raises a practical question: in which scenarios does the Serverless architecture perform well, in which does it perform poorly, and which scenarios suit it best?

The application scenarios of the Serverless architecture are usually determined by its characteristics, while the supported triggers determine the specific scenarios. Figure 1-1 shows the user scenarios that the CNCF Serverless Whitepaper v1.0 describes as suitable for the Serverless architecture:

Figure 1-1: User Scenarios for Serverless Architecture Described in the CNCF Serverless Whitepaper v1.0

In addition to the four applicable user scenarios derived from the features of the Serverless architecture, CNCF provides detailed examples with common triggers:

Based on the features of the Serverless architecture, the CNCF Serverless Whitepaper v1.0 describes in theory which scenarios and businesses suit it, whereas cloud service vendors describe its typical application scenarios from the perspective of their own businesses.

When object storage is used as a trigger for Serverless products, typical application scenarios include video processing and data ETL. API Gateway exposes functions to users through external endpoints and related features. When API Gateway is used as a trigger, typical application scenarios are backend services, such as app backends, website backends, and WeChat mini programs.

Some smart speakers also expose interfaces that can trigger cloud functions through API Gateway to obtain the corresponding services. Besides Object Storage Service triggers and API Gateway triggers, common triggers include Message Queue triggers, Kafka triggers, and log triggers.

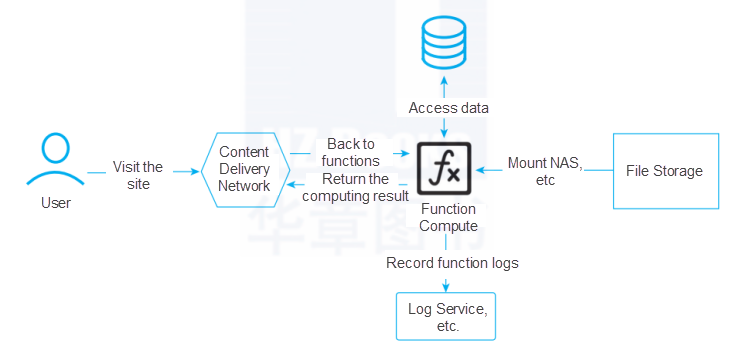
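To make the trigger-to-scenario mapping more concrete, here is a minimal sketch of one Python entry point that tells an object storage event apart from an API Gateway request. The `handler(event, context)` signature follows the common convention for Python functions, but the event shapes (`events` with `bucket`/`key`, and `httpMethod`/`path`) are simplified assumptions, not the exact payloads of any vendor's triggers, and the two `handle_*` helpers are hypothetical.

```python
import json
from typing import Any


def parse_event(event: Any) -> dict:
    """Accept a dict, or a JSON payload as str/bytes, and return a dict."""
    if isinstance(event, (bytes, bytearray)):
        event = event.decode("utf-8")
    if isinstance(event, str):
        event = json.loads(event)
    return event


def handle_object_created(record: dict) -> str:
    # Hypothetical: an upload would start video processing or an ETL job.
    return f"process object {record['bucket']}/{record['key']}"


def handle_http(request: dict) -> dict:
    # Hypothetical: an API Gateway request served as an app or website backend.
    return {"statusCode": 200, "body": json.dumps({"path": request["path"]})}


def handler(event, context=None):
    data = parse_event(event)
    # Assumed shape: object storage events carry a list under "events".
    if "events" in data:
        return [handle_object_created(r) for r in data["events"]]
    # Assumed shape: HTTP requests carry "httpMethod" and "path".
    if "httpMethod" in data:
        return handle_http(data)
    raise ValueError("unrecognized trigger event")
```

In practice each trigger type would more often get its own function; a single dispatcher is used here only to show both event kinds side by side.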

By combining the Serverless architecture with other cloud products from the same vendor, developers can build scalable mobile or Web applications and easily create rich serverless backends that run across multiple data centers. Figure 1-2 shows an example of Web application backend processing.

Figure 1-2: Web Application Backend Processing Example

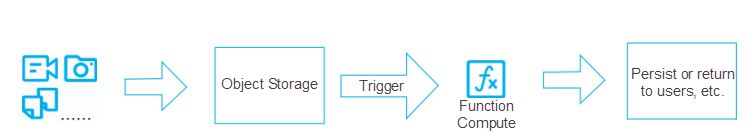
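The backend pattern in Figure 1-2 (API Gateway in front, a function in the middle, a database behind) can be sketched as a small request router. This is a hypothetical example: the request fields are a simplified API Gateway-style shape, and the module-level `DB` dict only stands in for a cloud database. A real function should not keep state in memory, because instances are created and discarded on demand.

```python
import json

# In-memory dict standing in for a cloud database (illustration only).
DB: dict[str, dict] = {}


def handler(event: dict, context=None) -> dict:
    """Route a simplified API Gateway-style request to create or read a user."""
    method, path = event["httpMethod"], event["path"]
    if method == "POST" and path == "/users":
        user = json.loads(event["body"])
        DB[user["id"]] = user
        return {"statusCode": 201, "body": json.dumps(user)}
    if method == "GET" and path.startswith("/users/"):
        user_id = path[len("/users/"):]
        if user_id in DB:
            return {"statusCode": 200, "body": json.dumps(DB[user_id])}
        return {"statusCode": 404, "body": json.dumps({"error": "not found"})}
    return {"statusCode": 405, "body": json.dumps({"error": "unsupported route"})}
```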

In video and social applications, users upload images, audio, and video in large volumes and at high frequency, so the processing system needs strong real-time and concurrency capabilities. In this case, we can use multiple functions to process the uploaded images (for example, image compression and format conversion) to meet the needs of different scenarios. Figure 1-3 shows an example of real-time file processing.

Figure 1-3: Real-Time File Processing Example

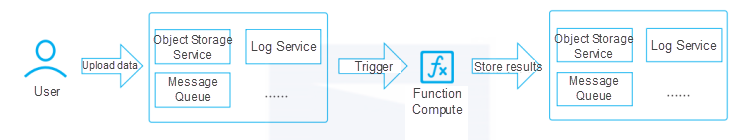
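One way to "use multiple functions" for a single upload is to fan the upload event out into independent tasks, one per output variant, so that each can run in its own function concurrently. The sketch below only plans those tasks; the function names `compress-image` and `convert-format` and the output prefixes are hypothetical placeholders, and the actual image work would happen inside the invoked functions.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath


@dataclass(frozen=True)
class Task:
    function: str  # name of the (hypothetical) processing function to invoke
    source: str    # uploaded object key
    target: str    # output object key


# Hypothetical variants; each would be handled by its own function.
VARIANTS = [
    ("compress-image", "thumbnails", ".jpg"),
    ("convert-format", "webp", ".webp"),
]


def plan_tasks(uploaded_key: str) -> list[Task]:
    """Fan one upload event out into independent processing tasks."""
    stem = PurePosixPath(uploaded_key).stem
    return [
        Task(fn, uploaded_key, f"{prefix}/{stem}{ext}")
        for fn, prefix, ext in VARIANTS
    ]
```

For example, `plan_tasks("uploads/2024/cat.png")` yields one task writing `thumbnails/cat.jpg` and another writing `webp/cat.webp`. Keeping each variant in a separate function lets the platform scale them independently.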

Using the wide range of event sources and triggering mechanisms that the Serverless architecture supports, we can process data in real time with a small amount of code and simple configuration. For example, we can decompress packages uploaded to Object Storage Service, clean logs or data in databases, and customize the consumption of MNS messages. Figure 1-4 shows an example of real-time data processing.

Figure 1-4: Real-Time Data Processing Example

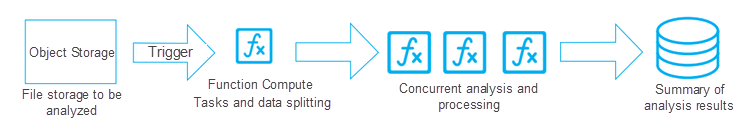
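Log cleaning, one of the examples above, typically means turning raw lines into structured records and discarding the ones that do not parse. The following is a minimal sketch that a log-triggered function might run on each batch; the line format (`timestamp LEVEL message`) is an assumption for illustration.

```python
import re
from typing import Iterable, Iterator

# Assumed raw log format: "2024-01-01T12:00:00 LEVEL message text"
LINE = re.compile(r"^(\S+)\s+(DEBUG|INFO|WARN|ERROR)\s+(.*\S)\s*$", re.IGNORECASE)


def clean_logs(lines: Iterable[str]) -> Iterator[dict]:
    """Yield structured records, dropping blank or malformed lines."""
    for raw in lines:
        match = LINE.match(raw.strip())
        if match is None:
            continue  # skip lines that do not fit the assumed format
        ts, level, message = match.groups()
        yield {"time": ts, "level": level.upper(), "message": message}
```

The cleaned records could then be written to a database or passed on to the next function in the pipeline.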

Traditionally, processing big data requires building a big data framework (such as Hadoop or Spark) and maintaining a cluster to run it. With Serverless technology, we only need to keep storing the incoming data in Object Storage Service. An Object Storage Service trigger then invokes a data-splitting function, which splits the data or tasks and calls the related processing functions, and the processing results are stored in a cloud database.

For example, a securities company computes statistics on its transactions every 12 hours and lists the top 5 transactions in each period. Similarly, a Seckill (flash-sale) website processes its transaction flow logs once a day to find errors caused by sold-out items, which helps it analyze the popularity and trends of commodities. The elastic, virtually unlimited capacity of Function Compute makes it easy for users to process large amounts of data.
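Both examples reduce to simple aggregations that a scheduled function could run over each batch. The sketch below assumes transactions arrive as `(timestamp, tx_id, amount)` tuples and log records as dicts with `item` and `error` fields, with `"SOLD_OUT"` as the error code; all of these shapes are hypothetical.

```python
import heapq
from collections import Counter
from datetime import datetime


def window_start(ts: datetime, hours: int = 12) -> datetime:
    """Align a timestamp to the start of its window (00:00 or 12:00 by default)."""
    return ts.replace(hour=ts.hour - ts.hour % hours, minute=0, second=0, microsecond=0)


def top_transactions(transactions, k: int = 5):
    """Group (timestamp, tx_id, amount) tuples by 12-hour window; keep the k largest."""
    windows: dict[datetime, list] = {}
    for ts, tx_id, amount in transactions:
        windows.setdefault(window_start(ts), []).append((amount, tx_id))
    return {start: heapq.nlargest(k, items) for start, items in windows.items()}


def sold_out_by_item(log_records):
    """Count sold-out errors per item as a rough popularity signal."""
    return Counter(r["item"] for r in log_records if r.get("error") == "SOLD_OUT")
```

`heapq.nlargest` avoids sorting a whole window when only the top few entries are needed.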

The Serverless architecture lets users run mapper and reducer functions concurrently on the source data and finish the work in a short time. Compared with traditional approaches, it avoids paying for idle resources and thus saves costs. Figure 1-5 shows a simplified data ETL processing flow.

Figure 1-5: Data ETL Processing Example

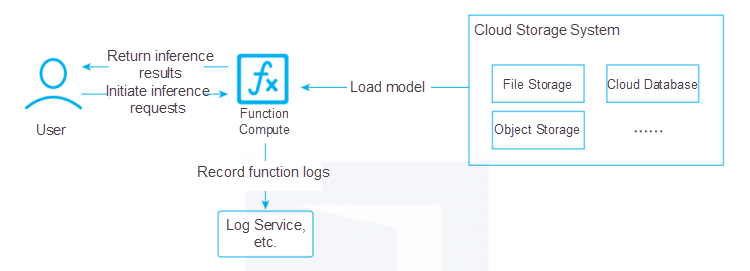
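The split, map, and reduce steps described above can be simulated locally to show how the work divides. In this sketch a word count stands in for the real processing, and a `ThreadPoolExecutor` stands in for concurrent function invocations. On a Serverless platform each `mapper` call would be a separate function instance, and the results would go to storage rather than stay in memory.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split(lines: list[str], chunk_size: int) -> list[list[str]]:
    """Data-splitting step: cut the input into independent chunks."""
    return [lines[i:i + chunk_size] for i in range(0, len(lines), chunk_size)]


def mapper(chunk: list[str]) -> Counter:
    """Map step: count words in one chunk (one invocation per chunk)."""
    return Counter(word for line in chunk for word in line.split())


def reducer(partials) -> Counter:
    """Reduce step: merge the partial counts into a single result."""
    total = Counter()
    for part in partials:
        total.update(part)
    return total


def run_job(lines: list[str], chunk_size: int = 2, workers: int = 4) -> Counter:
    chunks = split(lines, chunk_size)
    # Threads stand in for concurrent function invocations in this local sketch.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(mapper, chunks))
    return reducer(partials)
```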

Once an AI model is trained and its inference service is exposed externally, the model is wrapped in a function based on the Serverless architecture, and the code runs only when actual user requests arrive. Compared with traditional inference and prediction deployments, this approach lets function modules, backend GPU servers, and other related Machine Learning services scale automatically under pay-as-you-go billing, which maintains both service stability and performance. Figure 1-6 shows an example of Machine Learning (AI inference and prediction) processing.

Figure 1-6: Machine Learning (AI Inference and Prediction) Processing Example

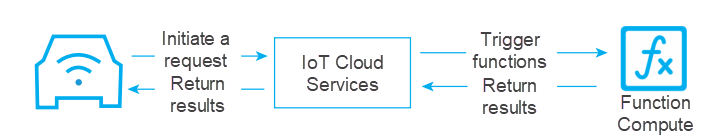

Many vendors now offer smart speaker products. Users say a specific wake word to activate the speaker, which then sends the user's words to backend services over the Internet, receives the results, and returns them to the user. With the Serverless architecture, vendors can combine API Gateway, cloud functions, and database products to replace traditional servers or virtual machines.

On the one hand, the Serverless architecture uses pay-as-you-go billing, so users are billed only when the function runs. On the other hand, as the number of users grows, a smart speaker backend built on the Serverless architecture scales automatically to keep the user-facing service stable. In addition, maintaining one feature amounts to maintaining a single function, so changes do not introduce additional risk to the main process, which makes the system more secure and stable. Figure 1-7 shows an example of IoT backend processing.

Figure 1-7: IoT Backend Processing Example

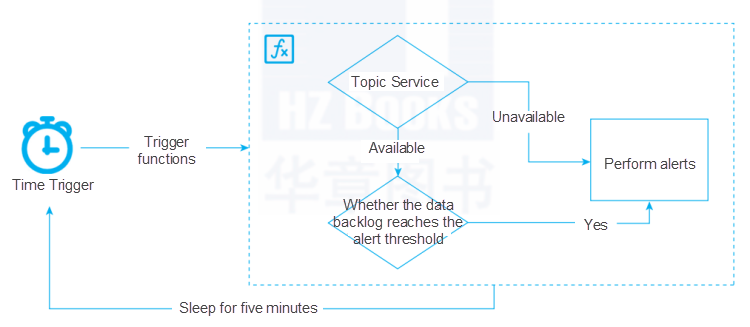
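The "one feature, one function" idea can be illustrated with a small intent router. This hypothetical sketch assumes the speech has already been recognized upstream and that the request arrives through API Gateway as an `intent` plus a `slot` value. Each skill is a separate callable here, but it could equally be deployed and maintained as its own function.

```python
from typing import Callable


# Hypothetical skills; each could be deployed and maintained as its own function.
def weather_skill(city: str) -> str:
    return f"Looking up the weather in {city}."


def timer_skill(minutes: str) -> str:
    return f"Timer set for {minutes} minutes."


SKILLS: dict[str, Callable[[str], str]] = {
    "weather": weather_skill,
    "timer": timer_skill,
}


def handler(event: dict, context=None) -> dict:
    """Route a recognized intent (assumed to arrive via API Gateway) to its skill."""
    skill = SKILLS.get(event.get("intent", ""))
    if skill is None:
        return {"speech": "Sorry, I can't help with that yet."}
    return {"speech": skill(event.get("slot", ""))}
```

Adding or fixing a skill touches only its own entry, which mirrors the isolation argument above.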

In production, we often need monitoring scripts that check whether a website or API service is healthy, that is, whether it is available and whether its response time is normal. The traditional method is to monitor and alert through website monitoring platforms (such as DNSPod monitoring, website service monitoring, and Alibaba Cloud CloudMonitor).

These platforms work as follows: users specify the websites to monitor and the expected time thresholds, and servers that the platform deploys in various regions periodically send requests to determine whether the websites or services are available. Although these platforms are general-purpose, they do not fit every need. For example, suppose you need to monitor a website's status code and its latency from different regions, set a latency threshold, and receive email alerts when the status is abnormal or the latency is too high.

Currently, most monitoring platforms cannot meet such customized requirements directly, so developing your own website status monitoring tool is particularly valuable. In addition, production O&M requires monitoring cloud services and alerting users to abnormal situations. For example, when you use Hadoop and Spark, you must monitor node health; when you use Kubernetes, you must monitor the metrics of the API Server and etcd; and when you use Kafka, you must monitor metrics such as data backlog, topics, and consumers.

The health of these services cannot be judged from a simple URL and status code. In traditional O&M, we usually set up a scheduled task on an additional machine to perform bypass monitoring of the service. O&M monitoring and alerting is therefore an important application scenario of the Serverless architecture: functions combined with timer triggers periodically check the health of specific resources. Figure 1-8 shows an example of website monitoring and alerting.

Figure 1-8: Website Monitoring and Alerting Example
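The customized check described above (status code plus a latency threshold, with an alert when either fails) fits in one timer-triggered function. The sketch below uses only the Python standard library. The URL `https://example.com`, the 2-second threshold, and the `notify` callback (which stands in for sending an email) are hypothetical. Deploying the same function in several regions would give the per-region latency the example asks for. The `fetcher` parameter lets the check be tested without network access.

```python
import time
import urllib.error
import urllib.request
from typing import Callable, Optional


def fetch(url: str, timeout: float = 10.0) -> tuple[int, float]:
    """Request the URL and return (status code, latency in seconds)."""
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            status = resp.status
    except urllib.error.HTTPError as err:
        status = err.code  # non-2xx responses still carry a status code
    return status, time.monotonic() - start


def check_site(url: str, max_latency: float,
               fetcher: Callable[[str], tuple[int, float]] = fetch) -> Optional[str]:
    """Return an alert message if the site is unhealthy, else None."""
    try:
        status, latency = fetcher(url)
    except OSError as err:  # DNS failures, refused connections, timeouts
        return f"{url} unreachable: {err}"
    if status != 200:
        return f"{url} returned status {status}"
    if latency > max_latency:
        return f"{url} latency {latency:.2f}s exceeds {max_latency:.2f}s"
    return None


def handler(event=None, context=None, notify: Callable[[str], None] = print):
    """Entry point for a timer trigger; `notify` stands in for an email alert."""
    alert = check_site("https://example.com", max_latency=2.0)
    if alert:
        notify(alert)
    return alert
```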
